Preprocessing Criteo Dataset for Prediction of Click Through Rate on Ads

In this post, I will take you through the steps I followed to preprocess the Criteo dataset.

Some Aspects to Consider when Preprocessing the Data

The Criteo dataset is an online advertising dataset released by Criteo Labs. It contains feature values and click feedback for millions of display ads and serves as a benchmark for click-through rate (CTR) prediction. Each ad is described by a set of features. The dataset has 40 attributes. The first attribute is the label, where 1 means the ad was clicked and 0 means it was not. The remaining 39 attributes consist of 13 integer columns and 26 categorical columns.

I used PySpark in Databricks notebooks to preprocess the data and train ML models.

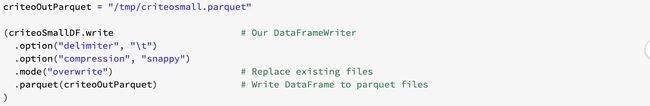

I started by reading the Criteo data into a DataFrame. The raw data is a gzip-compressed (.gz), tab-separated (TSV) file. I saved the dataset as a Parquet file, which stores the data in a columnar format that can be processed much faster than CSV.
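
To make the raw layout concrete, here is a plain-Python sketch (not the PySpark code used in the post) of how one line of the gzipped TSV splits into the label, 13 integer fields and 26 categorical fields. The column names I1..I13 and C1..C26 are hypothetical, and the parser assumes missing values appear as empty fields.

```python
import gzip

# Hypothetical column names: the raw file has no header row, so I1..I13 and
# C1..C26 are labels chosen here for illustration only.
INT_COLS = [f"I{i}" for i in range(1, 14)]    # 13 integer features
CAT_COLS = [f"C{i}" for i in range(1, 27)]    # 26 categorical features
COLUMNS = ["label"] + INT_COLS + CAT_COLS     # 40 attributes in total
_INT_LIKE = {"label", *INT_COLS}


def parse_line(line):
    """Split one tab-separated Criteo record into a dict.

    Assumes missing values appear as empty fields; they become None.
    """
    fields = line.rstrip("\n").split("\t")
    if len(fields) != len(COLUMNS):
        raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
    record = {}
    for name, raw in zip(COLUMNS, fields):
        if raw == "":
            record[name] = None            # missing value
        elif name in _INT_LIKE:
            record[name] = int(raw)        # label and integer features
        else:
            record[name] = raw             # anonymized categorical string
    return record


def read_gzipped_tsv(path):
    """Stream parsed records from a gzip-compressed TSV file."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            yield parse_line(line)
```

In PySpark, the same file can be loaded with `spark.read.csv(path, sep="\t")`, which decompresses `.gz` input automatically, and saved with `df.write.parquet(...)`.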

One side note about Parquet files: I sometimes noticed that overwriting an existing Parquet file could corrupt the data, so I worked around this by writing a new Parquet file rather than overwriting the old one.

I began by exploring the data to check whether it is balanced. The dataset contains 45,840,617 records in total, and I counted the records whose label equals 1 and those whose label equals 0.

I found that clicked ads make up only about 26% of the data, while the remaining 74% are unclicked ads.
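
The percentages follow directly from the two counts in the post. This short Python calculation derives the clicked count and the fraction of unclicked rows that would need to be kept to balance the classes; in PySpark the counts themselves would typically come from `df.groupBy("label").count()`.

```python
# Counts reported in the post.
TOTAL = 45_840_617        # all records
UNCLICKED = 34_095_179    # label == 0

clicked = TOTAL - UNCLICKED           # label == 1
clicked_share = clicked / TOTAL
unclicked_share = UNCLICKED / TOTAL
# Fraction of unclicked rows to keep so both classes end up roughly equal.
keep_fraction = clicked / UNCLICKED

print(f"clicked:   {clicked:,} ({clicked_share:.1%})")     # 11,745,438 (25.6%)
print(f"unclicked: {UNCLICKED:,} ({unclicked_share:.1%})")  # 34,095,179 (74.4%)
print(f"keep fraction: {keep_fraction:.4f}")               # 0.3445
```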

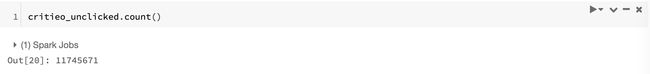

The count of clicked ads (1) versus unclicked ads (0)

The conclusion is that the data is imbalanced. I balanced it by creating a new dataset that contains all of the clicked ad records plus a random sample of the unclicked ad records, where the sample size approximately equals the number of clicked ad records.

So, 11,745,671 records were selected from the 34,095,179 unclicked ad records, close to the 11,745,438 clicked records (45,840,617 - 34,095,179).
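
One way to build such a balanced set is sketched below in plain Python, assuming per-record (Bernoulli) sampling: every clicked record is kept, and each unclicked record is kept with probability n_clicked / n_unclicked. The post does not say which sampling call was used; Spark's `DataFrame.sample(fraction=...)` samples this way and does not guarantee an exact count, which is consistent with 11,745,671 landing close to, but not exactly on, the clicked total. The function name and seed are illustrative.

```python
import random


def undersample(records, label_key="label", seed=42):
    """Keep every clicked (1) record plus a random sample of unclicked (0) ones.

    Each unclicked record is kept independently with probability
    n_clicked / n_unclicked, so the sample size is only approximately
    equal to the number of clicked records.
    """
    clicked = [r for r in records if r[label_key] == 1]
    unclicked = [r for r in records if r[label_key] == 0]
    if not unclicked:
        return clicked
    fraction = min(1.0, len(clicked) / len(unclicked))
    rng = random.Random(seed)  # fixed seed for reproducibility
    sampled = [r for r in unclicked if rng.random() < fraction]
    return clicked + sampled
```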

The size of the selected sample of unclicked ads is almost equal to the number of clicked ads

Next, I checked whether the columns contain null values by calculating the percentage of nulls in each column. Of the 39 feature columns, 15 had no null values and 24 had null values in varying percentages.
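
A minimal sketch of the null-percentage check over a list of dict records (plain Python, illustrative names). In PySpark the per-column count is commonly written as `F.count(F.when(F.col(c).isNull(), c))`.

```python
def null_percentages(records, columns):
    """Percentage of missing (None) values in each column of dict records."""
    n = len(records)
    return {
        col: 100.0 * sum(1 for r in records if r.get(col) is None) / n
        for col in columns
    }


rows = [
    {"a": 1, "b": None},
    {"a": None, "b": None},
    {"a": 3, "b": None},
    {"a": 4, "b": "x"},
]
print(null_percentages(rows, ["a", "b"]))  # {'a': 25.0, 'b': 75.0}
```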

Of the 24 columns with null values, 2 (one integer column and one categorical column) had more than 75% nulls, which is a high percentage, so I dropped them. The dataset then had the label column plus 37 other columns: 12 integer columns and 25 categorical columns. Five other attributes (2 integer columns and 3 categorical columns) had between 44% and 45% null values. I converted these columns to boolean True/False values, treating null/zero values as False and everything else as True.
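
The thresholds above can be read as a simple decision rule. This hypothetical helper maps each column's null percentage to an action and shows the null/zero-to-False conversion; the column names in the test are made up, and treating an empty string as null is an assumption.

```python
DROP_ABOVE = 75.0     # more than 75% nulls -> drop the column (from the post)
BOOLEAN_ABOVE = 40.0  # 40-75% nulls -> convert to True/False (from the post)


def plan_columns(null_pct):
    """Decide what to do with each column given its percentage of nulls."""
    plan = {}
    for col, pct in null_pct.items():
        if pct > DROP_ABOVE:
            plan[col] = "drop"
        elif pct > BOOLEAN_ABOVE:
            plan[col] = "boolean"
        elif pct > 0:
            plan[col] = "impute"   # class-conditional mean/mode fill
        else:
            plan[col] = "keep"     # no missing values
    return plan


def to_boolean(value):
    """Null or zero -> False; any other value -> True.

    Treating an empty string as null is an assumption for string columns.
    """
    return value not in (None, 0, "")
```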

For the columns with less than 40% null (missing) values, I did the following. For the integer columns, I calculated the mean separately over the clicked and the unclicked ad records, and replaced each null value with the mean for the class (clicked or unclicked) of the record it appears in. For the categorical columns, I did the same using the mode instead of the mean.
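
A plain-Python sketch of the class-conditional fill, assuming records are dicts with a `label` key; the function name is hypothetical. Means or modes are computed separately for label 0 and label 1 and are used only for nulls in records of the same class.

```python
from collections import Counter
from statistics import mean


def class_conditional_fill(records, column, kind, label_key="label"):
    """Fill None values in `column` using a per-class statistic.

    kind="mean" uses the mean and kind="mode" the most frequent value, each
    computed separately over the label-0 and label-1 records.
    """
    if kind not in ("mean", "mode"):
        raise ValueError("kind must be 'mean' or 'mode'")
    fill = {}
    for label in (0, 1):
        observed = [r[column] for r in records
                    if r[label_key] == label and r[column] is not None]
        if not observed:
            continue  # nothing observed for this class; its nulls stay None
        if kind == "mean":
            fill[label] = mean(observed)
        else:
            # most_common keeps first-seen order among equal counts
            fill[label] = Counter(observed).most_common(1)[0][0]
    filled = []
    for r in records:
        r = dict(r)  # copy so the caller's records are not modified
        if r[column] is None and r[label_key] in fill:
            r[column] = fill[r[label_key]]
        filled.append(r)
    return filled
```

Because the fill value is chosen by label, this step needs each record's label to be known.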

I also looked for outliers in the integer columns and replaced them with the mean value. I calculated the interquartile range (IQR = Q3 - Q1) for each column and treated any value greater than Q3 + 1.5 × IQR or less than Q1 - 1.5 × IQR as an outlier.
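
A plain-Python sketch of the IQR rule on a single column. It uses `statistics.quantiles` for Q1 and Q3 and assumes the replacement mean is computed over the inlier values only, which the post does not specify. On Spark, `DataFrame.approxQuantile` would give approximate quartiles, so the bounds could differ slightly.

```python
from statistics import mean, quantiles


def replace_iqr_outliers(values, k=1.5):
    """Replace values outside [Q1 - k*IQR, Q3 + k*IQR] with the inlier mean.

    None entries are passed through unchanged. Using the mean of the inliers
    (rather than of all values) is an assumption made for this sketch.
    """
    observed = [v for v in values if v is not None]
    q1, _, q3 = quantiles(observed, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    fill = mean(v for v in observed if low <= v <= high)
    return [v if v is None or low <= v <= high else fill for v in values]
```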

Since the integer columns contain continuous values with different ranges, I z-scored them (subtracting each column's mean and dividing by its standard deviation) and then bucketized them.
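
The two steps are sketched below in plain Python: a z-score using the population standard deviation, and a bucket lookup that follows the documented `Bucketizer` convention (a bucket defined by splits x, y holds [x, y), and the last bucket also includes its upper bound). The split points in the test are hypothetical, since the post does not list the ones used.

```python
from bisect import bisect_right
from statistics import mean, pstdev


def z_scores(values):
    """Standardize to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant column: no spread to scale
    return [(v - mu) / sigma for v in values]


def bucketize(value, splits):
    """Bucket index for `value`, Bucketizer-style.

    The bucket for consecutive splits x, y holds [x, y), except the last
    bucket, which also includes its upper bound.
    """
    if not splits[0] <= value <= splits[-1]:
        raise ValueError("value outside the range covered by splits")
    if value == splits[-1]:
        return len(splits) - 2
    return bisect_right(splits, value) - 1
```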

The categorical columns in the dataset are anonymized strings, so I transformed their values into numeric indexes using the StringIndexer() transformer. Then I counted the distinct values in each categorical column.
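
For illustration, a plain-Python version of frequency-ordered string indexing that mirrors `StringIndexer`'s default ordering (the most frequent value gets index 0); the length of the resulting mapping is the distinct-value count. This is a sketch, not Spark's implementation.

```python
from collections import Counter


def fit_string_index(values):
    """Map each distinct string to an index, most frequent first.

    Ties are broken alphabetically, matching StringIndexer's default
    'frequencyDesc' ordering in recent Spark versions. None values are
    skipped (assumed to have been imputed earlier).
    """
    counts = Counter(v for v in values if v is not None)
    ordered = sorted(counts, key=lambda s: (-counts[s], s))
    return {s: i for i, s in enumerate(ordered)}


column = ["b", "a", "b", "c", "a", "b"]
index = fit_string_index(column)
print(index)        # {'b': 0, 'a': 1, 'c': 2}
print(len(index))   # 3 distinct values
```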

Column name and distinct value count

I found that most of them have high cardinality (e.g. 5,736,858 distinct values), while some have low cardinality (e.g. 27 distinct values). I applied one-hot encoding to the low-cardinality categorical columns only. I then combined the preprocessed features into one sparse vector using VectorAssembler().
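
A sketch, under stated assumptions, of how low-cardinality columns could be selected, one-hot encoded and concatenated into a single sparse vector. The cut-off of 100 distinct values is hypothetical (the post only cites 27 as low and 5,736,858 as high), and sparse vectors are modeled as `{position: value}` dicts rather than Spark's `SparseVector`.

```python
LOW_CARDINALITY_MAX = 100  # hypothetical cut-off; the post gives none


def low_cardinality(distinct_counts, max_distinct=LOW_CARDINALITY_MAX):
    """Names of columns whose distinct-value count is small enough to one-hot."""
    return sorted(c for c, n in distinct_counts.items() if n <= max_distinct)


def one_hot(index, size, drop_last=True):
    """Sparse one-hot piece as ({position: 1.0}, length).

    With drop_last=True (OneHotEncoder's default in Spark) the vector has
    size - 1 slots and the last category maps to all zeros.
    """
    length = size - 1 if drop_last else size
    return ({index: 1.0} if index < length else {}), length


def assemble(pieces):
    """Concatenate (sparse_dict, length) pieces into (total_length, entries),
    shifting each piece's positions, in the spirit of VectorAssembler."""
    entries, offset = {}, 0
    for values, length in pieces:
        for pos, val in values.items():
            if val != 0:
                entries[offset + pos] = val
        offset += length
    return offset, entries
```

A scalar feature (such as a bucket index or a high-cardinality string index) is passed as a one-slot piece, e.g. `({0: 2.0}, 1)`.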

For the steps described above, I needed to develop seven custom transformers. I developed transformers to perform the boolean transformation, to replace the null values in both the integer and categorical columns, to handle the outliers in the integer columns, and to scale the integer columns.

From pyspark.ml.feature, I used the built-in Bucketizer() to bucketize the integer columns, StringIndexer() to index the categorical columns of both low and high cardinality, OneHotEncoder() to one-hot encode the low-cardinality categorical columns, and VectorAssembler() to combine the relevant columns into a single column that I called “features”.

I added the transformers for all the columns as the stages of a pipeline, then fitted the pipeline on the dataset and used it to transform the dataset.
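
To illustrate how a pipeline chains stages, here is a small stdlib mock of the estimator/transformer contract: an estimator's `fit()` returns a transformer, and the pipeline feeds each stage's output to the next. All class names are hypothetical; in PySpark, custom transformers are typically written by subclassing `pyspark.ml.Transformer` and overriding `_transform`.

```python
class ToyMeanImputer:
    """Estimator: fit() learns the mean of `column` and returns a transformer."""

    def __init__(self, column):
        self.column = column

    def fit(self, rows):
        observed = [r[self.column] for r in rows if r[self.column] is not None]
        return ToyFill(self.column, sum(observed) / len(observed))


class ToyFill:
    """Transformer: replaces None in `column` with a fixed value."""

    def __init__(self, column, value):
        self.column, self.value = column, value

    def transform(self, rows):
        return [
            {**r, self.column: self.value if r[self.column] is None else r[self.column]}
            for r in rows
        ]


class ToyToBoolean:
    """Transformer with nothing to learn: null/zero -> False, else True."""

    def __init__(self, column):
        self.column = column

    def transform(self, rows):
        return [{**r, self.column: r[self.column] not in (None, 0)} for r in rows]


class ToyPipeline:
    """Runs stages in order: estimators are fitted on the output of the
    previous stages, and each fitted stage then transforms the data."""

    def __init__(self, stages):
        self.stages = stages

    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            if hasattr(stage, "fit"):       # estimator -> learn parameters
                stage = stage.fit(rows)
            rows = stage.transform(rows)    # feed output to the next stage
            fitted.append(stage)
        return ToyPipelineModel(fitted)


class ToyPipelineModel:
    """Fitted pipeline: applies every fitted stage in order."""

    def __init__(self, stages):
        self.stages = stages

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows
```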

I arranged the data for training and testing the ML models into a dataset with two columns: the label column and the features column. I split the data into a training set (75%) and a testing set (25%).
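
A plain-Python sketch of a 75/25 split in which each row is assigned independently, in the spirit of `DataFrame.randomSplit([0.75, 0.25], seed)`; as with Spark, the resulting sizes are approximate. The function name and seed are illustrative.

```python
import random


def random_split(rows, weights=(0.75, 0.25), seed=7):
    """Assign each row independently to a split with the given (normalized)
    probabilities; split sizes are therefore approximate."""
    total = sum(weights)
    bounds, acc = [], 0.0
    for w in weights:
        acc += w / total
        bounds.append(acc)          # cumulative upper bound for each split
    rng = random.Random(seed)
    splits = [[] for _ in weights]
    for row in rows:
        u = rng.random()
        for i, b in enumerate(bounds):
            if u < b:
                splits[i].append(row)
                break
        else:
            splits[-1].append(row)  # guard against floating-point round-off
    return splits
```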

I trained three ML models on the training set using their default parameter values: logistic regression, random forest, and a linear SVM. I then transformed the testing set with the three models to get label predictions and calculated the accuracy of each model: approximately 70% (linear SVM), 72% (logistic regression), and 74% (random forest).

Tuning the parameter values increased the accuracy. For instance, setting numTrees=50 and maxDepth=20 on the random forest model raised its accuracy to 78%. As with grid search in scikit-learn, in Spark you can build a grid of candidate values for several parameters with ParamGridBuilder() and evaluate it with CrossValidator or TrainValidationSplit to find the model with the highest accuracy. Just make sure you experiment with valid parameter values: for example, maxDepth cannot exceed 30, since depths above 30 are not supported yet.

You also need to make sure your cluster is configured properly. I had enabled “auto scaling” in my cluster's autopilot options, and while training my random forest model I got errors indicating that some jobs had failed. The errors suggested that the jobs kept losing workers as the cluster scaled. Disabling the “auto scaling” option solved the problem.

You should also make sure the machines in your cluster have enough RAM. For instance, if you train with maxDepth greater than 20, you definitely need machines with more than 32 GB. Finally, expect training time to grow with the size of the data: training a random forest model with numTrees=50 and maxDepth=20 took me more than 2 hours on a cluster of 16 m5.2xlarge machines (32 GB, 8 cores each).
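
A stdlib sketch of two ideas from this paragraph: accuracy as the share of correct predictions, and the Cartesian product of parameter values that `ParamGridBuilder` builds before a tuner evaluates each combination. The 30-level `maxDepth` guard mirrors the limit mentioned above; the grid values in the test are hypothetical. In Spark, accuracy can be computed with `MulticlassClassificationEvaluator(metricName="accuracy")`.

```python
from itertools import product

MAX_TREE_DEPTH = 30  # Spark ML tree models reject maxDepth above 30


def accuracy(labels, predictions):
    """Fraction of predictions that match the true labels."""
    if len(labels) != len(predictions) or not labels:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(y == p for y, p in zip(labels, predictions)) / len(labels)


def param_grid(**axes):
    """All combinations of parameter values, like ParamGridBuilder().build()."""
    if any(d > MAX_TREE_DEPTH for d in axes.get("maxDepth", ())):
        raise ValueError(f"maxDepth must be <= {MAX_TREE_DEPTH}")
    names = list(axes)
    return [dict(zip(names, combo)) for combo in product(*(axes[n] for n in names))]
```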

You can find my code here. I hope you enjoyed my post and found it useful. In my next post, I will take you through the steps I followed to use the trained models to make predictions on streaming data. You can find the next post here.

Source: https://medium.com/@amany.m.abdelhalim/preprocessing-criteo-dataset-for-prediction-of-click-through-rate-on-ads-7dee096a2dd9