Study Notes (1): Building a Python-Version CIFAR10 Dataset

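1. Building your own dataset

This dataset was built by collecting images with a web crawler and from other vehicle datasets. It is a vehicle dataset with the same structure as the cifar10 dataset. All images fall into 10 classes: train, bus, minibus, fireengin, motorcycle, ambulance, sedan, jeep, bike, and truck. There are 60,000 images in total, each of size 32×32×3. Of these, 50,000 images form the training set and the remaining 10,000 form the test set.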
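The split described above can be sketched as follows. This is only an illustration: the notes do not say which label index each class received or how the images were shuffled, so the label order (taken from the order of the list above), the `seed`, and the helper name `split_indices` are all assumptions.

```python
import numpy as np

# Class names as listed above; using the list position as the label is an assumption.
CLASS_NAMES = ["train", "bus", "minibus", "fireengin", "motorcycle",
               "ambulance", "sedan", "jeep", "bike", "truck"]
LABEL_OF = {name: idx for idx, name in enumerate(CLASS_NAMES)}


def split_indices(num_images=60000, num_train=50000, batch_size=10000, seed=0):
    """Shuffle image indices, then cut them into training batches and one test batch."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(num_images)
    train, test = order[:num_train], order[num_train:]
    train_batches = [train[i:i + batch_size]
                     for i in range(0, num_train, batch_size)]
    return train_batches, test


train_batches, test_batch = split_indices()
print(len(train_batches), len(test_batch))  # 5 10000
```

Each entry of `train_batches` holds the indices of the images that would go into one of the five training batch files.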

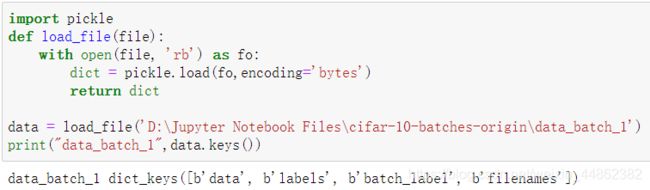

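In the finished dataset, the keys of each batch dictionary appear in this order: [data, labels, batch_label, filenames]

Reference article: "Making a Python-version cifar10 dataset from your own data"

"Converting your own dataset to the cifar dataset format"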

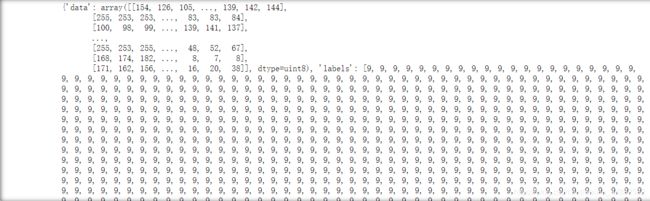

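- data: a uint8 numpy array holding the RGB values.

- labels: the class index of each image, here 0-9 for the 10 classes. labels is a utf-8 encoded list.

- batch_label: which batch this is. batch_label is a utf-8 encoded string. There are 5 batches in total, and the one inspected here is the first.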

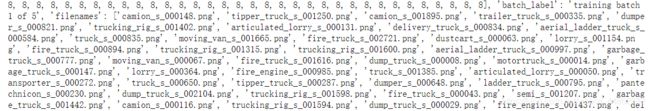

- filenames: the image file names. Each filename is a utf-8 encoded string. The names here include:

camion, tipper_truck, trailer_truck, dumper, trucking_rig, articulated_lorry, delivery_truck, aerial_ladder_truck, truck, moving_van, file_truck, dust_cart, lorry, garbage_truck, dump_truck, motortruck, fire_engine, transporter, ladder_truck, pantechnicon, semi, wrecker, tipper_lorry, tipper, laundry_truck, tandem_trailer, tow_truck, panel_truck, car_transporter, bookmobile, tractor_trailer, and so on.
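The four fields above can be packed into one batch file roughly like this. It is a minimal sketch, not the script used for these notes: the helper names (`make_batch`, `save_batch`, `load_batch`), the demo file names, and the use of plain string keys are assumptions. The row layout (all red values, then green, then blue, 3072 values per image) follows the published CIFAR-10 format.

```python
import pickle
import numpy as np


def make_batch(images, labels, filenames, batch_label):
    """Pack (N, 32, 32, 3) uint8 images into a CIFAR-10 style batch dictionary."""
    images = np.asarray(images, dtype=np.uint8)
    # One row per image: 1024 red values, then 1024 green, then 1024 blue.
    data = images.transpose(0, 3, 1, 2).reshape(len(images), -1)
    return {
        "data": data,
        "labels": [int(label) for label in labels],
        "batch_label": batch_label,
        "filenames": list(filenames),
    }


def save_batch(batch, path):
    """Write the batch dictionary to disk with pickle."""
    with open(path, "wb") as f:
        pickle.dump(batch, f)


def load_batch(path):
    """Read a batch dictionary back from disk."""
    with open(path, "rb") as f:
        return pickle.load(f)


# Tiny demo: random pixels stand in for real photos.
rng = np.random.default_rng(0)
demo_images = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
demo = make_batch(demo_images, [9, 9, 1, 0],
                  ["truck_0.png", "truck_1.png", "bus_0.png", "train_0.png"],
                  "training batch 1 of 5")
print(list(demo.keys()), demo["data"].shape, demo["data"].dtype)
```

Reshaping `data` to `(N, 3, 32, 32)` and transposing back to `(N, 32, 32, 3)` recovers the original images, which is a quick way to check a batch before training on it.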

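2. Generating a dataset with a GAN

- Definition of a GAN

Put simply, a GAN consists of two parts: a generator network and a discriminator network. As one part keeps improving, it forces its opponent to improve as well, so the two evolve together.

Note that the discriminator should give every real input image a high score. If it is trained only on real images, it cannot learn to judge correctly and will give every input a high score. It therefore needs some bad images to train on, and these should not be images it can tell apart trivially, such as real images with a little noise added. The training procedure is: besides the real images, first give it some randomly generated bad examples; then solve argmax_x D(x) on the discriminator to generate images that it considers good; then replace the original, very bad images with these and train again. Repeating this, the discriminator keeps producing better images, which are fed back to it as negative examples, and this is how it is trained.

- GAN implementation

- Importing the Keras library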

import tensorflow.compat.v2 as tf

from tensorflow import keras

from tensorflow.keras import layers

import numpy as np

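- The generator network

It turns a vector (from the latent space, sampled randomly during training) into a candidate image.

The generator never sees the training images directly. Everything it knows about the data comes from the discriminator.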

latent_dim = 32

height = 32

width = 32

channels = 3

generator_input = tf.keras.Input(shape=(latent_dim,))

# Transform the input into a 16*16 feature map with 128 channels

x = layers.Dense(128*16*16)(generator_input)

x = layers.LeakyReLU()(x)

x = layers.Reshape((16,16,128))(x)

x = layers.Conv2D(256,5,padding='same')(x)

x = layers.LeakyReLU()(x)

# Upsample to 32*32

x = layers.Conv2DTranspose(256,4,strides=2,padding='same')(x)

x = layers.LeakyReLU()(x)

x = layers.Conv2D(256,5,padding='same')(x)

x = layers.LeakyReLU()(x)

x = layers.Conv2D(256,5,padding='same')(x)

x = layers.LeakyReLU()(x)

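# Produce a 32*32 feature map with 3 channels (the shape of a CIFAR10 image)

x = layers.Conv2D(channels,7,activation='tanh',padding='same')(x)

# Instantiate the generator model, which maps an input of shape (latent_dim,) to an image of shape (32,32,3)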

generator = tf.keras.models.Model(generator_input,x)

generator.summary()
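The spatial sizes that `generator.summary()` reports can be checked by hand. The sketch below assumes the usual Keras rules for `padding='same'`: a convolution gives `ceil(size / stride)` and a transposed convolution gives `size * stride`. The helper names are made up for this check.

```python
import math


def conv_same(size, stride=1):
    """Output size of a convolution with padding='same'."""
    return math.ceil(size / stride)


def conv_transpose_same(size, stride=1):
    """Output size of a transposed convolution with padding='same'."""
    return size * stride


dense_units = 128 * 16 * 16                 # units in the first Dense layer
size = 16                                   # after Reshape((16,16,128))
size = conv_same(size)                      # Conv2D(256,5,padding='same')
size = conv_transpose_same(size, stride=2)  # Conv2DTranspose(256,4,strides=2)
size = conv_same(size)                      # Conv2D(256,5,padding='same')
size = conv_same(size)                      # Conv2D(256,5,padding='same')
size = conv_same(size)                      # Conv2D(channels,7,padding='same')
print(dense_units, size)  # 32768 32
```

Only the transposed convolution changes the spatial size, which is why a single upsampling step is enough to go from 16*16 to 32*32.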

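- The discriminator network

It takes a candidate image (real or synthetic) as input and assigns it to one of two classes: "generated image" or "real image from the training set".

To set up the GAN, the generator and the discriminator are chained together. When trained, this combined model moves the generator in a direction that improves its ability to fool the discriminator. The model maps points in the latent space to a classification decision ("real" or "fake"), and it is trained with labels that are always "real image". Training gan therefore updates the weights of generator so that discriminator becomes more likely to predict "real" when it looks at fake images.

# GAN discriminator network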
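A small numeric example may make the "always real" labels more concrete. It assumes the label convention of the training loop later in these notes, where generated images are labeled 1 and real images 0, and it uses a hand-written binary cross-entropy rather than the Keras loss object.

```python
import numpy as np


def binary_crossentropy(target, prob, eps=1e-7):
    """Binary cross-entropy for one prediction (prob = discriminator output)."""
    prob = np.clip(prob, eps, 1 - eps)
    return float(-(target * np.log(prob) + (1 - target) * np.log(1 - prob)))


REAL, GENERATED = 0.0, 1.0  # convention assumed from the training loop

# The generator is trained through gan with the target REAL for its own images.
for d_out in (0.9, 0.5, 0.1):
    print(d_out, round(binary_crossentropy(REAL, d_out), 3))
# 0.9 2.303
# 0.5 0.693
# 0.1 0.105
```

The loss falls as the discriminator's output for a generated image moves toward the "real" label, so minimizing it pushes the generator toward images the discriminator accepts as real.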

discriminator_input = layers.Input(shape=(height,width,channels))

x = layers.Conv2D(128,3)(discriminator_input)

x = layers.LeakyReLU()(x)

x = layers.Conv2D(128,4,strides=2)(x)

x = layers.LeakyReLU()(x)

x = layers.Conv2D(128,4,strides=2)(x)

x = layers.LeakyReLU()(x)

x = layers.Conv2D(128,4,strides=2)(x)

x = layers.LeakyReLU()(x)

x = layers.Flatten()(x)

# A dropout layer

x = layers.Dropout(0.4)(x)

# Classification layer

x = layers.Dense(1,activation='sigmoid')(x)

# Instantiate the discriminator model, which turns an input of shape (32,32,3) into a binary classification decision (real/fake)

discriminator = tf.keras.models.Model(discriminator_input,x)

discriminator.summary()
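The discriminator's convolutions use the Keras default `padding='valid'`, so every layer shrinks the feature map. A quick hand check of what `discriminator.summary()` should show, assuming the standard formula `(size - kernel) // stride + 1` (the helper name is made up):

```python
def conv_valid(size, kernel, stride=1):
    """Output size of a convolution with padding='valid'."""
    return (size - kernel) // stride + 1


size = 32                              # input image is 32*32
size = conv_valid(size, 3)             # Conv2D(128,3)           -> 30
size = conv_valid(size, 4, stride=2)   # Conv2D(128,4,strides=2) -> 14
size = conv_valid(size, 4, stride=2)   # Conv2D(128,4,strides=2) -> 6
size = conv_valid(size, 4, stride=2)   # Conv2D(128,4,strides=2) -> 2
flattened = size * size * 128          # length of the Flatten output
print(size, flattened)  # 2 512
```

The final Dense layer therefore sees a vector of 512 values per image.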

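discriminator_optimizer = tf.keras.optimizers.RMSprop(

    learning_rate=0.0008,

    clipvalue=1.0,  # clip gradients in the optimizer (limits the range of gradient values)

    decay=1e-8,  # learning-rate decay to stabilize training; newer Keras optimizers may reject this argument

)

discriminator.compile(optimizer=discriminator_optimizer,

                      loss='binary_crossentropy')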
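The two stabilizing options in the optimizer can be illustrated with plain numpy. This is a sketch of the idea, not the optimizer's actual code: `clipvalue` limits each gradient component to `[-clipvalue, clipvalue]`, and the decay formula `lr / (1 + decay * iteration)` is assumed here to match the time-based decay of the older Keras optimizers.

```python
import numpy as np


def clip_by_value(gradient, clipvalue):
    """Limit every gradient component to the range [-clipvalue, clipvalue]."""
    return np.clip(gradient, -clipvalue, clipvalue)


def decayed_learning_rate(initial_lr, decay, iteration):
    """Time-based decay, assumed to be lr / (1 + decay * iteration)."""
    return initial_lr / (1.0 + decay * iteration)


gradient = np.array([-3.0, -0.5, 0.2, 7.0])
print(clip_by_value(gradient, 1.0).tolist())  # [-1.0, -0.5, 0.2, 1.0]
print(decayed_learning_rate(0.0008, 1e-8, 1000))
```

With `decay=1e-8` the learning rate barely changes over 1000 iterations, so in a run this short the clipping has far more influence than the decay.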

- The adversarial network

discriminator.trainable = False  # make the discriminator weights non-trainable (applies only to the gan model)

gan_input = tf.keras.Input(shape=(latent_dim,))

gan_output = discriminator(generator(gan_input))

gan = tf.keras.models.Model(gan_input,gan_output)

gan_optimizer = tf.keras.optimizers.RMSprop(learning_rate=0.0004,clipvalue=1.0,decay=1e-8)

gan.compile(optimizer=gan_optimizer,loss='binary_crossentropy')

- Training the GAN

import os

from tensorflow.keras.preprocessing import image

(x_train,y_train),(_,_) = keras.datasets.cifar10.load_data()  # cifar dataset

x_train = x_train[y_train.flatten() == 6]  # select the frog images

x_train = x_train.reshape((x_train.shape[0],) + (height,width,channels)).astype('float32')/255.

iterations = 1000

batch_size = 2

save_dir = 'frog_dir'

os.makedirs(save_dir, exist_ok=True)  # make sure the output directory exists

start = 0

for step in range(iterations):

    # Sample random points in the latent space
    random_latent_vectors = np.random.normal(size=(batch_size,latent_dim))

    generated_images = generator.predict(random_latent_vectors)  # points --> fake images

    stop = start + batch_size

    # Combine the fake images with real images
    real_images = x_train[start:stop]

    combined_images = np.concatenate([generated_images, real_images])

    # Labels: 1 for generated images, 0 for real images
    labels = np.concatenate([np.ones((batch_size,1)),
                             np.zeros((batch_size,1))])

    labels += 0.05 * np.random.random(labels.shape)  # add noise to the labels

    # Train the discriminator
    d_loss = discriminator.train_on_batch(combined_images,labels)

    # Sample random points in the latent space
    random_latent_vectors = np.random.normal(size=(batch_size,latent_dim))

    # Assemble labels that all say "real image" (this is a lie)
    misleading_targets = np.zeros((batch_size,1))

    # Train the generator through the gan model (the discriminator is frozen here)
    a_loss = gan.train_on_batch(random_latent_vectors,misleading_targets)

    start += batch_size

    if start > len(x_train) - batch_size:

        start = 0

    # Every 2 steps, save the weights and one generated and one real image
    if step % 2 == 0:

        gan.save_weights('gan.h5')

        print('discriminator loss:',d_loss)

        print('adversarial loss:',a_loss)

        img = image.array_to_img(generated_images[0] * 255.,scale=False)

        img.save(os.path.join(save_dir,'generated_frog'+str(step)+'.png'))

        img = image.array_to_img(real_images[0]*255.,scale=False)

        img.save(os.path.join(save_dir,'real_frog'+str(step)+'.png'))
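The bookkeeping in the loop above (noisy labels and the sliding `start`/`stop` window over the real images) can be tried without any network. The sketch below reuses the same logic; the dataset size of 5 and the helper name `batch_windows` are made up for the demonstration.

```python
import numpy as np

batch_size = 2
rng = np.random.default_rng(0)

# Labels as in the loop: 1 for generated images, 0 for real images, plus noise.
labels = np.concatenate([np.ones((batch_size, 1)), np.zeros((batch_size, 1))])
labels += 0.05 * rng.random(labels.shape)


def batch_windows(num_items, batch_size, steps):
    """Return the (start, stop) slices the loop would take over the real images."""
    start = 0
    windows = []
    for _ in range(steps):
        windows.append((start, start + batch_size))
        start += batch_size
        if start > num_items - batch_size:
            start = 0  # wrap around once a full batch no longer fits
    return windows


print(labels.ravel())
print(batch_windows(5, batch_size, 4))  # [(0, 2), (2, 4), (0, 2), (2, 4)]
```

With 5 items and a batch size of 2, the last item is never used, because the window resets as soon as a full batch no longer fits. The noise keeps generated labels in [1, 1.05) and real labels in [0, 0.05).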

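Problems encountered

- The source images for generating GAN images are the dataset built in the previous step, so the dataset-loading line in the code was changed to:

# cifar dataset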

(x_train,y_train),(_,_) = tf.keras.datasets.cifar10.load_data()

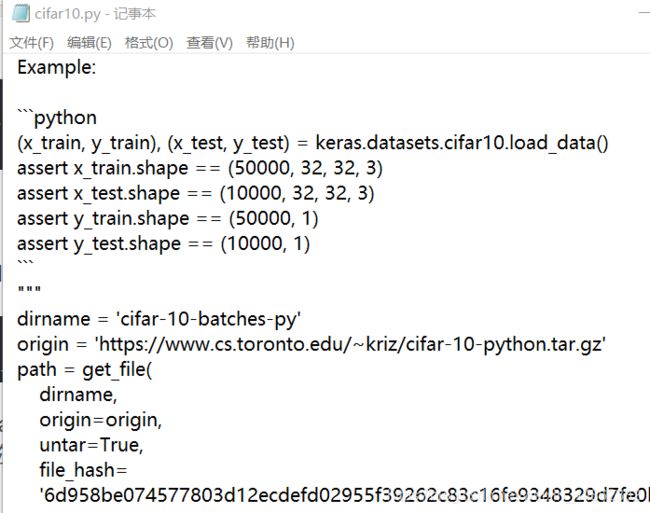

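The source image set was also placed under the path D:\anaconda3\envs\tensorflow_gpu\Lib\site-packages\keras\datasets and renamed to cifar-10-batches-py. (As shown in the figure, this is because the cifar10 source code looks the files up under the name cifar-10-batches-py.)

Reference article: "Keras implementation --> Generative deep learning: generating images with a GAN"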
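An alternative that avoids touching the Keras installation is to read the batch files directly. The sketch below is only one possible approach: it assumes the custom dataset uses the same file names as CIFAR-10 (`data_batch_1` to `data_batch_5`) and the same row layout, and the function names are hypothetical.

```python
import os
import pickle
import numpy as np


def load_cifar_style_batch(path):
    """Load one CIFAR-10 style batch file as (images, labels)."""
    with open(path, "rb") as f:
        batch = pickle.load(f, encoding="bytes")
    # Keys may be bytes or str depending on how the file was written.
    batch = {(k.decode("utf8") if isinstance(k, bytes) else k): v
             for k, v in batch.items()}
    data = np.asarray(batch["data"], dtype=np.uint8)
    # Rows are stored channel-first; convert to (N, 32, 32, 3).
    images = data.reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1)
    labels = np.asarray(batch["labels"], dtype=np.int64).reshape(-1, 1)
    return images, labels


def load_cifar_style_train(directory, num_batches=5):
    """Concatenate data_batch_1 .. data_batch_N from a dataset directory."""
    xs, ys = [], []
    for i in range(1, num_batches + 1):
        x, y = load_cifar_style_batch(os.path.join(directory, "data_batch_%d" % i))
        xs.append(x)
        ys.append(y)
    return np.concatenate(xs), np.concatenate(ys)
```

The returned arrays have the same shapes as the training pair from `load_data()`, so a call such as `x_train, y_train = load_cifar_style_train('path/to/dataset')` (path hypothetical) could stand in for it.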