NNDL Experiment 6: Convolutional Neural Networks (1) Convolution; Edge Detection with Traditional Operators and a PyTorch-based Canny Detector

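Table of Contents

- Convolutional Neural Network (CNN)
- 5.1 Convolution
  - 5.1.1 2D convolution operation
  - 5.1.2 2D convolution operator
  - 5.1.3 Number of parameters and amount of computation of 2D convolution
  - 5.1.4 Receptive field
  - 5.1.5 Variants of convolution
    - 5.1.5.1 Stride
    - 5.1.5.2 Zero padding
  - 5.1.6 2D convolution operator with stride and zero padding
  - 5.1.7 Image edge detection with convolution
- Optional exercises
  - Edge detection series 1: traditional edge detection operators - PaddlePaddle AI Studio
    - Roberts operator
    - Prewitt operator
    - Sobel operator
    - Scharr operator
    - Kirsch operator
    - Robinson operator
    - Laplacian operator
  - Edge detection series 2: a simple Canny edge detector - PaddlePaddle AI Studio
    - 1. Smoothing the image with a Gaussian filter
    - 2. Computing the gradient magnitude and direction
    - 3. Non-maximum suppression
    - 4. Double-threshold detection
    - 5. Suppressing isolated weak-edge points
    - Full code
  - Edge detection series 3: [HED] Holistically-Nested Edge Detection - PaddlePaddle AI Studio
  - Edge detection series 4: [RCF] edge detection with richer convolutional features - PaddlePaddle AI Studio (baidu.com)
  - Edge detection series 5: [CED] an HED model with an added backward refinement path - PaddlePaddle AI Studio (baidu.com)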

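Convolutional Neural Network (CNN)

The CNN was inspired by the receptive-field mechanism in biology.

It is a feedforward neural network, usually built by alternately stacking convolutional layers, pooling layers and fully connected layers.

It has three structural properties: local connectivity, weight sharing and pooling.

These give it some degree of invariance to translation, scaling and rotation.

A CNN has far fewer parameters than a fully connected feedforward network.

CNNs are mainly used for image and video analysis. On these tasks their accuracy has generally been far higher than that of other neural network models.

In recent years CNNs have also been widely applied to natural language processing, recommender systems and other fields.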

5.1 Convolution

5.1.1 2D convolution operation

Only image convolution in the usual sense is covered here.
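The sketch below implements the 2D convolution operation in NumPy. It assumes the deep-learning convention, in which the kernel is not flipped. Strictly speaking this is cross-correlation, and it is also what `torch.nn.Conv2d` computes. The sketch also assumes no padding and stride 1. `conv2d_naive` is a hypothetical helper name, and the toy input is made up for illustration.

```python
import numpy as np


def conv2d_naive(x, w):
    """'Valid' 2-D cross-correlation: output shape (M-U+1, N-V+1), kernel not flipped."""
    M, N = x.shape
    U, V = w.shape
    out = np.zeros((M - U + 1, N - V + 1), dtype=np.result_type(x, w))
    for i in range(M - U + 1):
        for j in range(N - V + 1):
            # element-wise product of the current window with the kernel, then sum
            out[i, j] = np.sum(x[i:i + U, j:j + V] * w)
    return out


x = np.array([[1, 2, 3, 1],
              [4, 5, 6, 1],
              [7, 8, 9, 0]])
w = np.array([[1, 0],
              [0, -1]])
print(conv2d_naive(x, w))  # expected values: [[-4, -4, 2], [-4, -4, 6]]
```

Each output element is the top-left pixel of its 2×2 window minus the bottom-right one. For example, 1 - 5 = -4.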

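5.1.2 2D convolution operator

In the book's later implementations, every operator inherits from paddle.nn.Layer and is built from PaddlePaddle APIs that support backpropagation. This way there is no need to hand-write the backward() code.

[PyTorch implementation]

Every existing layer in torch.nn inherits from the torch.nn.Module class and therefore has all of Module's functionality.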

torch.nn.Conv2d(in_channels=1,out_channels=1,kernel_size=kernel.shape)
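`torch.nn.Conv2d` creates a learnable, randomly initialised bias by default. A fixed filter such as an edge detector is therefore best loaded with `bias=False`. The small sketch below loads a hand-written kernel into the layer's weight, which has shape `(out_channels, in_channels, kH, kW)`. The 3×4 input is a made-up example.

```python
import numpy as np
import torch

kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]], dtype=np.float32)
x = torch.tensor([[1., 2., 3., 1.],
                  [4., 5., 6., 1.],
                  [7., 8., 9., 0.]]).reshape(1, 1, 3, 4)  # (batch, channels, H, W)

# bias=False: a fixed filter should not get the random bias Conv2d adds by default
conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
with torch.no_grad():
    # weight shape is (out_channels, in_channels, kH, kW)
    conv2d.weight.copy_(torch.from_numpy(kernel).reshape(1, 1, *kernel.shape))
    y = conv2d(x)

print(y.squeeze())  # values: [[-4, -4, 2], [-4, -4, 6]]
```

Doing the copy under `torch.no_grad()` avoids touching `.data` directly. It also means `y` carries no autograd graph.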

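5.1.3 Number of parameters and amount of computation of 2D convolution

When a fully connected feedforward network processes images, every neuron is connected to every input pixel. As the hidden layers get wider and deeper,

the number of parameters therefore grows very quickly.

With convolution, each output depends only on a small local window, and the same kernel weights are shared across all positions. A convolutional layer therefore needs far fewer parameters than a fully connected one.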
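The comparison is easier to see with concrete numbers. This sketch assumes a hypothetical 32×32 grayscale input. A fully connected layer with 1024 outputs is compared with a single 3×3 convolution kernel, and the amount of computation is counted as one multiply per kernel element per output pixel (stride 1, no padding).

```python
import torch

H, W = 32, 32  # hypothetical input size
fc = torch.nn.Linear(H * W, H * W)           # every input connected to every output
conv = torch.nn.Conv2d(1, 1, kernel_size=3)  # one 3x3 kernel shared across all positions


def n_params(m):
    return sum(p.numel() for p in m.parameters())


print(n_params(fc))    # 1024*1024 weights + 1024 biases = 1049600
print(n_params(conv))  # 3*3 weights + 1 bias = 10

# multiplications of the conv layer: one U*V dot product per output pixel
U = V = 3
mults = (H - U + 1) * (W - V + 1) * U * V
print(mults)  # 30*30*9 = 8100
```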

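5.1.4 Receptive field

Single convolutional layer:

The receptive field is simply the size of the kernel.

Multiple convolutional layers:

The receptive field depends on the size of every kernel. Take two layers as an example. Call the inner kernel, of size (a1,b1), kernel 1, and the outer kernel, of size (a2,b2), kernel 2. Kernel 1 looks at an (a1,b1) window of the original image to produce one feature value, so its receptive field is (a1,b1). The feature values output by kernel 1 are in turn what kernel 2 looks at.

To produce one output, kernel 2 needs an (a2,b2) block of kernel 1's outputs. Kernel 1 must therefore be applied at a2×b2 adjacent positions, each shifted by one pixel, which enlarges the region of the original image that the whole convolution sees. Each additional layer enlarges it again in the same way. The receptive field is the region of the original image that a single feature value of the outermost layer ultimately depends on.

Quantitative analysis:

Take the same two layers, with inner kernel 1 of size (a1,b1) and outer kernel 2 of size (a2,b2). Ignore stride and padding, i.e. assume stride 1 and no padding.

The receptive field of the two layers is then:

$$\big(a_1+(a_2-1),\ b_1+(b_2-1)\big)$$

Likewise, for three layers:

$$\big((a_1+(a_2-1))+(a_3-1),\ (b_1+(b_2-1))+(b_3-1)\big)$$

For n layers, the receptive field is therefore:

$$\big(a_1+a_2+\cdots+a_n-(n-1),\ b_1+b_2+\cdots+b_n-(n-1)\big)$$

which can be written as:

$$\Big(\sum_{i=1}^{n}a_i-(n-1),\ \sum_{i=1}^{n}b_i-(n-1)\Big)$$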
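The formula can be checked empirically. Stack stride-1, unpadded layers with all-ones weights, back-propagate from the centre output pixel, and count how many input rows receive a nonzero gradient. `receptive_field` and `empirical_receptive_field` are hypothetical names. The check assumes square kernels, so only one axis is counted.

```python
import torch


def receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1, unpadded conv layers: sum(k_i) - (n - 1)."""
    return sum(kernel_sizes) - (len(kernel_sizes) - 1)


def empirical_receptive_field(kernel_sizes, size=32):
    """Number of input rows that influence the centre output pixel, measured with autograd."""
    x = torch.zeros(1, 1, size, size, requires_grad=True)
    y = x
    for k in kernel_sizes:
        conv = torch.nn.Conv2d(1, 1, k, bias=False)
        torch.nn.init.constant_(conv.weight, 1.0)  # positive weights: no accidental cancellation
        y = conv(y)
    c = y.shape[-1] // 2
    y[0, 0, c, c].backward()
    return int((x.grad[0, 0].abs().sum(dim=1) > 0).sum().item())


print(receptive_field([3, 3]))               # 5
print(receptive_field([3, 3, 3]))            # 7
print(empirical_receptive_field([3, 3, 3]))  # 7
```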

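5.1.5 Variants of convolution

5.1.5.1 Stride

The stride is how far the kernel moves at each step. The larger the stride, the farther the kernel moves and the smaller the resulting feature map.

5.1.5.2 Zero padding

Zero padding surrounds the input with zeros so that the kernel can also be centred on border pixels. This makes better use of the information at the image edges and reduces information loss. Details are omitted here.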
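Zero padding is equivalent to surrounding the input with a zero border and then running an ordinary unpadded convolution. The sketch below checks this with `torch.nn.functional.pad` on a made-up 5×5 input and an all-ones 3×3 kernel.

```python
import torch
import torch.nn.functional as F

x = torch.arange(1., 26.).reshape(1, 1, 5, 5)
w = torch.ones(1, 1, 3, 3)

y_builtin = F.conv2d(x, w, padding=1)           # padding handled inside the convolution
y_manual = F.conv2d(F.pad(x, (1, 1, 1, 1)), w)  # explicit zero border (left, right, top, bottom)

print(y_builtin.shape)                     # torch.Size([1, 1, 5, 5]): same size as the input
print(torch.allclose(y_builtin, y_manual))  # True
print(y_builtin[0, 0, 0, 0].item())         # corner sees only 4 real pixels: 1 + 2 + 6 + 7 = 16.0
```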

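5.1.6 2D convolution operator with stride and zero padding

The output below shows that with a 3×3 kernel

and padding 1:

when stride=1, the output feature map has the same size as the input feature map;

when stride=2, the width and height of the output feature map are both halved.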
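Both cases follow from the usual output-size formula for input length $M$, kernel size $K$, padding $P$ and stride $S$: $M'=\lfloor (M+2P-K)/S\rfloor+1$. The sketch below assumes the 530×640 image size printed further down. It compares the formula with the shape PyTorch actually produces. `conv_output_size` is a hypothetical name.

```python
import torch


def conv_output_size(n, k, padding=0, stride=1):
    """Output length along one axis: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - k) // stride + 1


for stride in (1, 2):
    h = conv_output_size(530, 3, padding=1, stride=stride)
    w = conv_output_size(640, 3, padding=1, stride=stride)
    conv = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1, stride=stride)
    out = conv(torch.zeros(1, 1, 530, 640))
    print(stride, (h, w), tuple(out.shape[-2:]))
# 1 (530, 640) (530, 640)
# 2 (265, 320) (265, 320)
```

For odd input sizes, stride 2 rounds up rather than halving exactly. For example, 531 → (531 + 2 - 3) // 2 + 1 = 266.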

[PyTorch implementation]

Set the kernel to the edge-detection kernel

$$\begin{pmatrix} -1&-1&-1\\ -1&8&-1\\ -1&-1&-1 \end{pmatrix}$$

Use padding 1, run the convolution once with stride 1 and once with stride 2, and print the resulting shapes.

#coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

outline_kernel = np.array([[-1, -1, -1],  # edge detection
                           [-1, 8, -1],
                           [-1, -1, -1]])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    print(img.shape)
    for stride in [(1, 1), (2, 2)]:
        for j, kernel in enumerate([outline_kernel]):
            in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
            # bias=False: only the fixed kernel is applied, no random bias term
            conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape,
                                     padding=1, stride=stride, bias=False)
            kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
            conv2d.weight.data = kernel
            out_img = conv2d(in_img)
            print(tuple(out_img.shape[-2:]))  # (height, width) of the output feature map

(530, 640)

(530, 640)

(265, 320)


The results agree exactly with the analysis above.

5.1.7 Image edge detection with convolution

[PyTorch implementation]

#coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

outline_kernel = np.array([[-1, -1, -1],  # edge detection
                           [-1, 8, -1],
                           [-1, -1, -1]])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    print(img.shape)
    labels = ['outline_kernel']
    for stride in [(1, 1), (2, 2)]:
        for j, kernel in enumerate([outline_kernel]):
            plt.figure()
            # left: original image
            plt.subplot(1, 2, 1)
            plt.imshow(img, cmap='gray')
            plt.title('Original')
            in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
            # bias=False, otherwise a randomly initialised bias is added to every output pixel
            conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape,
                                     padding=1, stride=stride, bias=False)
            kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
            conv2d.weight.data = kernel
            out_img = conv2d(in_img)
            # right: convolved image
            out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
            out_img = adjust(out_img)  # clamp overflowing values
            print(out_img.shape)
            plt.subplot(1, 2, 2)
            plt.imshow(out_img, cmap='gray')
            plt.title('{} stride={}'.format(labels[j], stride))
            plt.show()

Stride 1:

Stride 2:

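Optional exercises

Implement 1 and 2 in PyTorch; read 3, 4 and 5 and write up your takeaways.

Edge detection series 1: traditional edge detection operators - PaddlePaddle AI Studio

Implement several traditional edge detection operators: Roberts, Prewitt, Sobel, Scharr, Kirsch, Robinson and Laplacian.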
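Every operator below follows the same pipeline: grayscale image → fixed-kernel convolution → clip to [0, 255] → display. The compact helper below uses `torch.nn.functional.conv2d`, which takes the kernel tensor directly, so no bias is involved. `apply_kernel` is a hypothetical name, and the toy step image is made up for illustration.

```python
import numpy as np
import torch
import torch.nn.functional as F


def apply_kernel(gray, kernel):
    """Cross-correlate a 2-D grayscale image with a fixed kernel (no bias, no padding), clip to [0, 255]."""
    x = torch.from_numpy(np.asarray(gray, dtype=np.float32))[None, None]    # (1, 1, H, W)
    w = torch.from_numpy(np.asarray(kernel, dtype=np.float32))[None, None]  # (1, 1, kH, kW)
    with torch.no_grad():
        y = F.conv2d(x, w)
    return np.clip(y[0, 0].numpy(), 0, 255)


# toy image: dark left half, bright right half -> one vertical edge
img = np.zeros((5, 6), dtype=np.float32)
img[:, 3:] = 100
right_sobel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
out = apply_kernel(img, right_sobel)
print(out)  # every row: [0, 255, 255, 0] (raw response 400 is clipped to 255)
```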

Roberts operator:

4 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

roberts_kernels = np.array([
    [[0, 1],  # top-right Roberts
     [-1, 0]],
    [[-1, 0],  # bottom-right Roberts
     [0, 1]],
    [[1, 0],  # top-left Roberts
     [0, -1]],
    [[0, -1],  # bottom-left Roberts
     [1, 0]],
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['top_right_roberts_kernel', 'bottom_right_roberts_kernel', 'top_left_roberts_kernel',
              'bottom_left_roberts_kernel']
    for j, kernel in enumerate(roberts_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

Bottom-left Roberts:

Top-left Roberts:

Bottom-right Roberts:

Top-right Roberts:

Prewitt operator:

8 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

prewitt_kernels = np.array([
    [[1, 1, 1],  # top Prewitt
     [0, 0, 0],
     [-1, -1, -1]],
    [[-1, -1, -1],  # bottom Prewitt
     [0, 0, 0],
     [1, 1, 1]],
    [[1, 0, -1],  # left Prewitt
     [1, 0, -1],
     [1, 0, -1]],
    [[-1, 0, 1],  # right Prewitt
     [-1, 0, 1],
     [-1, 0, 1]],
    [[0, 1, 1],  # top-right Prewitt
     [-1, 0, 1],
     [-1, -1, 0]],
    [[-1, -1, 0],  # bottom-right Prewitt
     [-1, 0, 1],
     [0, 1, 1]],
    [[1, 1, 0],  # top-left Prewitt
     [1, 0, -1],
     [0, -1, -1]],
    [[0, -1, -1],  # bottom-left Prewitt
     [1, 0, -1],
     [1, 1, 0]]
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['top_prewitt_kernel', 'bottom_prewitt_kernel', 'left_prewitt_kernel', 'right_prewitt_kernel',
              'top_right_prewitt_kernel', 'bottom_right_prewitt_kernel', 'top_left_prewitt_kernel',
              'bottom_left_prewitt_kernel']
    for j, kernel in enumerate(prewitt_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

Bottom-left Prewitt:

Top-left Prewitt:

Bottom-right Prewitt:

Top-right Prewitt:

Left Prewitt:

Right Prewitt:

Bottom Prewitt:

Top Prewitt:

Sobel operator:

8 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

sobel_kernels = np.array([
    [[1, 2, 1],  # top Sobel
     [0, 0, 0],
     [-1, -2, -1]],
    [[-1, -2, -1],  # bottom Sobel
     [0, 0, 0],
     [1, 2, 1]],
    [[1, 0, -1],  # left Sobel
     [2, 0, -2],
     [1, 0, -1]],
    [[-1, 0, 1],  # right Sobel
     [-2, 0, 2],
     [-1, 0, 1]],
    [[0, 1, 2],  # top-right Sobel
     [-1, 0, 1],
     [-2, -1, 0]],
    [[-2, -1, 0],  # bottom-right Sobel
     [-1, 0, 1],
     [0, 1, 2]],
    [[2, 1, 0],  # top-left Sobel
     [1, 0, -1],
     [0, -1, -2]],
    [[0, -1, -2],  # bottom-left Sobel
     [1, 0, -1],
     [2, 1, 0]]
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['top_sobel_kernel', 'bottom_sobel_kernel', 'left_sobel_kernel', 'right_sobel_kernel',
              'top_right_sobel_kernel', 'bottom_right_sobel_kernel', 'top_left_sobel_kernel',
              'bottom_left_sobel_kernel']
    for j, kernel in enumerate(sobel_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

Top-left Sobel:

Bottom-left Sobel:

Bottom-right Sobel:

Top-right Sobel:

Right Sobel:

Left Sobel:

Bottom Sobel:

Top Sobel:
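The horizontal and vertical Sobel kernels are separable. Each is the outer product of a smoothing vector [1, 2, 1] and a central difference [-1, 0, 1]. Prewitt uses [1, 1, 1] as the smoothing vector and Scharr uses [3, 10, 3]. Separability means the 3×3 filter could also be applied as two 1-D passes. The quick NumPy check below reproduces the right/bottom kernels listed in this section.

```python
import numpy as np

diff = np.array([-1, 0, 1])  # central difference across the edge
sobel_smooth = np.array([1, 2, 1])
prewitt_smooth = np.array([1, 1, 1])
scharr_smooth = np.array([3, 10, 3])

right_sobel = np.outer(sobel_smooth, diff)  # rows: smoothing, columns: difference
bottom_sobel = np.outer(diff, sobel_smooth)
right_prewitt = np.outer(prewitt_smooth, diff)
right_scharr = np.outer(scharr_smooth, diff)
print(right_sobel)
print(bottom_sobel)
```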

Scharr operator:

8 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

Scharr_kernels = np.array([
    [[3, 10, 3],  # top Scharr
     [0, 0, 0],
     [-3, -10, -3]],
    [[-3, -10, -3],  # bottom Scharr
     [0, 0, 0],
     [3, 10, 3]],
    [[3, 0, -3],  # left Scharr
     [10, 0, -10],
     [3, 0, -3]],
    [[-3, 0, 3],  # right Scharr
     [-10, 0, 10],
     [-3, 0, 3]],
    [[0, 3, 10],  # top-right Scharr
     [-3, 0, 3],
     [-10, -3, 0]],
    [[-10, -3, 0],  # bottom-right Scharr
     [-3, 0, 3],
     [0, 3, 10]],
    [[10, 3, 0],  # top-left Scharr
     [3, 0, -3],
     [0, -3, -10]],
    [[0, -3, -10],  # bottom-left Scharr
     [3, 0, -3],
     [10, 3, 0]]
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['top_Scharr_kernel', 'bottom_Scharr_kernel', 'left_Scharr_kernel', 'right_Scharr_kernel',
              'top_right_Scharr_kernel', 'bottom_right_Scharr_kernel', 'top_left_Scharr_kernel',
              'bottom_left_Scharr_kernel']
    for j, kernel in enumerate(Scharr_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

Top-left Scharr:

Bottom-left Scharr:

Bottom-right Scharr:

Left Scharr:

Top-right Scharr:

Right Scharr:

Bottom Scharr:

Top Scharr:

Kirsch operator:

8 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

Kirsch_kernels = np.array([
    [[5, 5, 5],  # top Kirsch
     [-3, 0, -3],
     [-3, -3, -3]],
    [[-3, -3, -3],  # bottom Kirsch
     [-3, 0, -3],
     [5, 5, 5]],
    [[5, -3, -3],  # left Kirsch
     [5, 0, -3],
     [5, -3, -3]],
    [[-3, -3, 5],  # right Kirsch
     [-3, 0, 5],
     [-3, -3, 5]],
    [[-3, 5, 5],  # top-right Kirsch
     [-3, 0, 5],
     [-3, -3, -3]],
    [[-3, -3, -3],  # bottom-right Kirsch
     [-3, 0, 5],
     [-3, 5, 5]],
    [[5, 5, -3],  # top-left Kirsch
     [5, 0, -3],
     [-3, -3, -3]],
    [[-3, -3, -3],  # bottom-left Kirsch
     [5, 0, -3],
     [5, 5, -3]]
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['top_Kirsch_kernel', 'bottom_Kirsch_kernel', 'left_Kirsch_kernel', 'right_Kirsch_kernel',
              'top_right_Kirsch_kernel', 'bottom_right_Kirsch_kernel', 'top_left_Kirsch_kernel',
              'bottom_left_Kirsch_kernel']
    for j, kernel in enumerate(Kirsch_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

Bottom-left Kirsch:

Top-left Kirsch:

Bottom-right Kirsch:

Top-right Kirsch:

Right Kirsch:

Left Kirsch:

Bottom Kirsch:

Top Kirsch:
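The eight Kirsch masks (and likewise the eight Robinson masks) are 45° rotations of one another. Rotating a 3×3 compass mask by 45° clockwise shifts its eight border cells one step around the ring and leaves the centre untouched. In a compass operator, the edge strength at a pixel is usually taken as the maximum response over all eight masks. The sketch below only generates the masks. `RING`, `rotate45` and `compass_masks` are hypothetical names.

```python
import numpy as np

# the 8 border cells, listed clockwise starting from the top-left corner
RING = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def rotate45(kernel):
    """Rotate a 3x3 compass mask by 45 degrees clockwise by shifting its border ring one step."""
    k = np.asarray(kernel)
    out = k.copy()
    values = [k[r, c] for r, c in RING]
    for idx, (r, c) in enumerate(RING):
        out[r, c] = values[idx - 1]  # each cell takes the value of its counter-clockwise neighbour
    return out


def compass_masks(base):
    """All 8 orientations generated from one base mask, in clockwise order."""
    masks = [np.asarray(base)]
    for _ in range(7):
        masks.append(rotate45(masks[-1]))
    return masks


kirsch_top = [[5, 5, 5], [-3, 0, -3], [-3, -3, -3]]
print(rotate45(kirsch_top))  # [[-3, 5, 5], [-3, 0, 5], [-3, -3, -3]]: the top-right Kirsch mask
```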

Robinson operator:

8 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

Robinson_kernels = np.array([
    [[1, 1, 1],  # top Robinson
     [1, -2, 1],
     [-1, -1, -1]],
    [[-1, -1, -1],  # bottom Robinson
     [1, -2, 1],
     [1, 1, 1]],
    [[1, 1, -1],  # left Robinson
     [1, -2, -1],
     [1, 1, -1]],
    [[-1, 1, 1],  # right Robinson
     [-1, -2, 1],
     [-1, 1, 1]],
    [[1, 1, 1],  # top-right Robinson
     [-1, -2, 1],
     [-1, -1, 1]],
    [[-1, -1, 1],  # bottom-right Robinson
     [-1, -2, 1],
     [1, 1, 1]],
    [[1, 1, 1],  # top-left Robinson
     [1, -2, -1],
     [1, -1, -1]],
    [[1, -1, -1],  # bottom-left Robinson
     [1, -2, -1],
     [1, 1, 1]]
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['top_Robinson_kernel', 'bottom_Robinson_kernel', 'left_Robinson_kernel', 'right_Robinson_kernel',
              'top_right_Robinson_kernel', 'bottom_right_Robinson_kernel', 'top_left_Robinson_kernel',
              'bottom_left_Robinson_kernel']
    for j, kernel in enumerate(Robinson_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

Bottom-left Robinson:

Top-left Robinson:

Bottom-right Robinson:

Top-right Robinson:

Right Robinson:

Left Robinson:

Bottom Robinson:

Top Robinson:

Laplacian operator:

2 variants in total.

# coding:utf-8
import numpy as np
import torch
from matplotlib import pyplot as plt

Laplacian_kernels = np.array([
    [[0, 1, 0],  # 4-neighbour Laplacian kernel
     [1, -4, 1],
     [0, 1, 0]],
    [[1, 1, 1],  # 8-neighbour Laplacian kernel
     [1, -8, 1],
     [1, 1, 1]],
])


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['4_neighbor_Laplacian_kernel', '8_neighbor_Laplacian_kernel']
    for j, kernel in enumerate(Laplacian_kernels):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: filtered image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

8-neighbour Laplacian:

4-neighbour Laplacian:

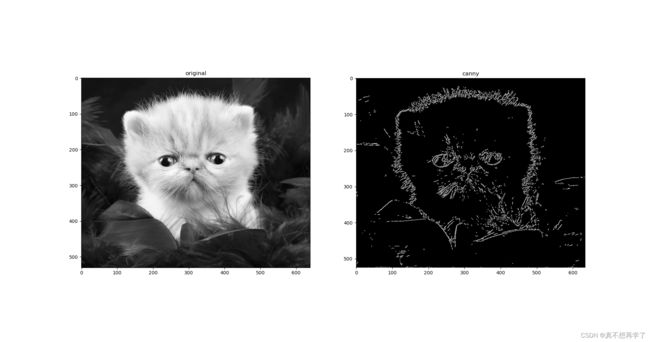
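Both Laplacian kernels sum to zero, so they respond with 0 on flat regions and on linear intensity ramps. At a step edge they produce a positive/negative pair, i.e. a zero crossing. Clipping to [0, 255] for display throws away the negative half of that pair. The small synthetic images in this sketch are made up for illustration.

```python
import torch
import torch.nn.functional as F

lap4 = torch.tensor([[0., 1., 0.], [1., -4., 1.], [0., 1., 0.]])[None, None]
lap8 = torch.tensor([[1., 1., 1.], [1., -8., 1.], [1., 1., 1.]])[None, None]

flat = torch.full((1, 1, 5, 5), 80.0)                 # constant region
ramp = torch.arange(5.).repeat(5, 1)[None, None] * 10  # linear ramp, left -> right
step = torch.zeros(1, 1, 5, 6)
step[..., 3:] = 100                                    # vertical step edge

print(F.conv2d(flat, lap4).abs().max().item())  # 0.0: coefficients sum to zero
print(F.conv2d(ramp, lap8).abs().max().item())  # 0.0: second derivative of a ramp is zero
print(F.conv2d(step, lap4)[0, 0, 1])            # values 0, 100, -100, 0: zero crossing at the edge
```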

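Edge detection series 2: a simple Canny edge detector - PaddlePaddle AI Studio

This part implements a simple version of the Canny edge detection algorithm.

Canny edge detection was first proposed by John Canny in 1986, in the paper "A Computational Approach to Edge Detection". See the relevant literature for details.

The Canny edge detection algorithm can be divided into the following 5 steps:

- Apply a Gaussian filter to smooth the image and remove noise.

- Compute the gradient magnitude and direction of every pixel.

- Apply non-maximum suppression to remove spurious edge responses.

- Apply double-threshold detection to determine true and potential edges.

- Finish the edge detection by suppressing isolated weak edges.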

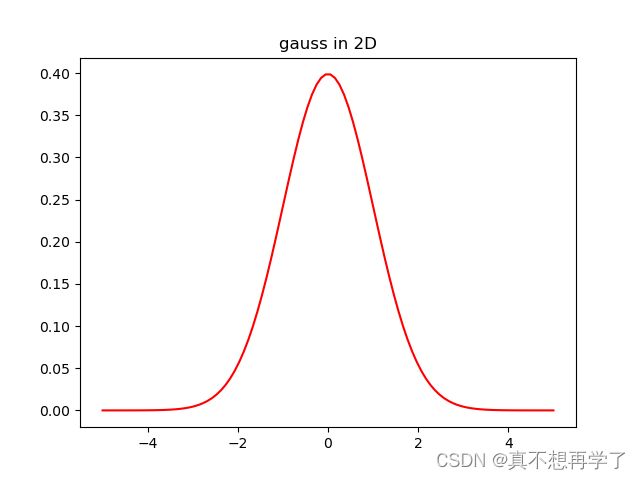

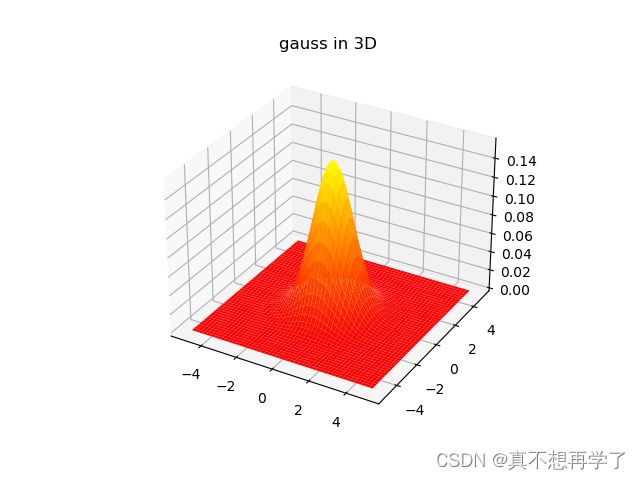

1. Smoothing the image with a Gaussian filter

First, recall the Gaussian function:

1-D Gaussian:

$$y=\frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{x^2}{2\sigma^2}}$$

2-D Gaussian:

$$h=\frac{1}{2\pi\sigma^2}e^{-\frac{x^2+y^2}{2\sigma^2}}$$

The following code plots both Gaussian functions, to make the link between the Gaussian function and the Gaussian kernel easier to see:

import math
import matplotlib.pyplot as plt
import numpy as np


def show_gauss2D(x=np.linspace(-5, 5, 100), sigma=1):
    '''Plot the 1-D Gaussian as a 2-D curve'''
    y = 1 / ((2 * math.pi) ** (1 / 2) * sigma) * np.exp(-x ** 2 / (2 * sigma ** 2))
    plt.figure()
    plt.plot(x, y, c='r')
    plt.title('gauss in 2D')
    plt.show()


def show_gauss3D(x=np.linspace(-5, 5, 50), y=np.linspace(-5, 5, 50), sigma=1):
    '''Plot the 2-D Gaussian as a 3-D surface'''
    x, y = np.meshgrid(x, y)
    z = 1 / (2 * math.pi * sigma ** 2) * np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    fg = plt.figure()
    ax3d = fg.add_subplot(projection='3d')
    ax3d.plot_surface(x, y, z, cmap='autumn')
    plt.title('gauss in 3D')
    plt.show()


if __name__ == '__main__':
    show_gauss2D()
    show_gauss3D()

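The Gaussian kernel used in image convolution is obtained by sampling the 2-D Gaussian function. Depending on the task, we can adjust σ to change the shape of the Gaussian and adjust the sampling interval to change how densely it is sampled. Finally, the samples are normalized so that they sum to 1.

In practice, choosing the entries of a Gaussian kernel amounts to discretizing the Gaussian function. For a kernel of size $(2k+1)\times(2k+1)$, the entries are chosen as:

$$H_{ij}=\frac{1}{2\pi\sigma^2}e^{-\frac{(i-(k+1))^2+(j-(k+1))^2}{2\sigma^2}},\qquad i,j=1,\dots,2k+1$$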

Code implementation:

import math
import numpy as np


def get_gauss_kernel(kernel_shape=(3, 3), sigma=1):
    k = (kernel_shape[0] - 1) / 2
    kernel = []
    for i in range(1, kernel_shape[0] + 1):
        for j in range(1, kernel_shape[1] + 1):
            # exponent is -d^2 / (2 * sigma^2); the parentheses around 2 * sigma ** 2 are required
            kernel.append(1 / (2 * math.pi * sigma ** 2)
                          * math.exp(-((i - (k + 1)) ** 2 + (j - (k + 1)) ** 2) / (2 * sigma ** 2)))
    return np.array(kernel).reshape(kernel_shape) / sum(kernel)


if __name__ == '__main__':
    gauss_kernel1 = get_gauss_kernel()
    gauss_kernel2 = get_gauss_kernel(kernel_shape=(5, 5), sigma=1.2)
    print(gauss_kernel1)
    print(gauss_kernel2)

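Output. These numbers were produced with the exponent written as `-(...)/2*sigma**2`, which multiplies by σ² instead of dividing by 2σ². For σ=1 the two forms agree, so the 3×3 kernel matches the formula above. The 5×5 kernel for σ=1.2, however, is narrower than the formula gives: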

[[0.07511361 0.1238414 0.07511361]

[0.1238414 0.20417996 0.1238414 ]

[0.07511361 0.1238414 0.07511361]]

[[0.00072432 0.00628066 0.0129032 0.00628066 0.00072432]

[0.00628066 0.05446048 0.11188541 0.05446048 0.00628066]

[0.0129032 0.11188541 0.2298611 0.11188541 0.0129032 ]

[0.00628066 0.05446048 0.11188541 0.05446048 0.00628066]

[0.00072432 0.00628066 0.0129032 0.00628066 0.00072432]]
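The nested loops can also be replaced by `np.meshgrid`. The offsets i-(k+1) for i = 1, …, 2k+1 are exactly -k, …, k. In the sketch below, `gauss_kernel` is a hypothetical name that takes the half-width k rather than a shape. For k = 1 and σ = 1 it reproduces the 3×3 kernel printed above.

```python
import numpy as np


def gauss_kernel(k, sigma):
    """(2k+1)x(2k+1) Gaussian kernel sampled at integer offsets from the centre, then normalised."""
    offsets = np.arange(-k, k + 1)
    xx, yy = np.meshgrid(offsets, offsets)
    h = np.exp(-(xx ** 2 + yy ** 2) / (2 * sigma ** 2)) / (2 * np.pi * sigma ** 2)
    return h / h.sum()  # after normalising, the 1/(2*pi*sigma^2) factor no longer matters


g = gauss_kernel(1, 1.0)
print(np.round(g, 8))  # centre 0.20417996, edges 0.1238414, corners 0.07511361
```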


Blurring effect:

#coding:utf-8
import math
import matplotlib.pyplot as plt
import numpy as np
import torch


def get_gauss_kernel(kernel_shape=(3, 3), sigma=1):
    k = (kernel_shape[0] - 1) / 2
    kernel = []
    for i in range(1, kernel_shape[0] + 1):
        for j in range(1, kernel_shape[1] + 1):
            # exponent is -d^2 / (2 * sigma^2)
            kernel.append(1 / (2 * math.pi * sigma ** 2)
                          * math.exp(-((i - (k + 1)) ** 2 + (j - (k + 1)) ** 2) / (2 * sigma ** 2)))
    return np.array(kernel).reshape(kernel_shape) / sum(kernel)


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


if __name__ == '__main__':
    # gauss_kernel = get_gauss_kernel()
    gauss_kernel = get_gauss_kernel(kernel_shape=(5, 5), sigma=1.2)
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    img = rgb_to_gray(img)
    labels = ['gauss_kernel of size{}'.format(gauss_kernel.shape)]
    for j, kernel in enumerate([gauss_kernel]):
        plt.figure()
        # left: original image
        plt.subplot(1, 2, 1)
        plt.imshow(img, cmap='gray')
        plt.title('Original')
        in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
        # bias=False, otherwise a randomly initialised bias is added to every output pixel
        conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
        kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
        conv2d.weight.data = kernel
        out_img = conv2d(in_img)
        # right: blurred image
        out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
        out_img = adjust(out_img)  # clamp overflowing values
        plt.subplot(1, 2, 2)
        plt.imshow(out_img, cmap='gray')
        plt.title('{}'.format(labels[j]))
        plt.show()

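2. Computing the gradient magnitude and direction

Use the right Sobel and bottom Sobel kernels to compute the gradients gx and gy of every pixel in the x and y directions. Then compute the gradient magnitude and direction of every pixel in the image from these two components.

Gradient magnitude:

$$S=\sqrt{g_x^2+g_y^2}$$

The gradient direction is given by the arctangent:

$$\theta=\arctan\frac{g_y}{g_x}$$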
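Computing θ from the ratio gy/gx alone cannot tell θ from θ+π (both give the same ratio), and it breaks down when gx = 0. NumPy's `np.hypot` and `np.arctan2` avoid both problems. The sketch below uses them and adds a hypothetical `direction_sector` helper. The helper rounds θ (mod 180°) to one of the four orientations 0°, 45°, 90° and 135°, which non-maximum suppression often uses. The sample gradients are made up.

```python
import numpy as np


def gradient_info(gx, gy):
    """Gradient magnitude and direction in radians, in (-pi, pi]; well defined when gx == 0."""
    magnitude = np.hypot(gx, gy)  # sqrt(gx**2 + gy**2)
    theta = np.arctan2(gy, gx)
    return magnitude, theta


def direction_sector(theta):
    """0: 0 deg, 1: 45 deg, 2: 90 deg, 3: 135 deg; theta and theta + pi fall in the same sector."""
    angle = np.degrees(theta) % 180
    return np.round(angle / 45).astype(int) % 4


gx = np.array([3.0, 0.0, -1.0])
gy = np.array([4.0, 2.0, -1.0])
mag, theta = gradient_info(gx, gy)
print(mag)                      # magnitudes 5, 2 and sqrt(2)
print(np.degrees(theta))        # about 53.13, 90 and -135 degrees
print(direction_sector(theta))  # sectors 1, 2, 1
```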

#coding:utf-8
import math
import matplotlib.pyplot as plt
import numpy as np
import torch

sobel_kernels = [
    [[-1, 0, 1],  # right Sobel
     [-2, 0, 2],
     [-1, 0, 1]],
    [[-1, -2, -1],  # bottom Sobel
     [0, 0, 0],
     [1, 2, 1]]
]


def get_gauss_kernel(kernel_shape=(3, 3), sigma=1.0):
    k = (kernel_shape[0] - 1) / 2
    kernel = []
    for i in range(1, kernel_shape[0] + 1):
        for j in range(1, kernel_shape[1] + 1):
            # exponent is -d^2 / (2 * sigma^2)
            kernel.append(1 / (2 * math.pi * sigma ** 2)
                          * math.exp(-((i - (k + 1)) ** 2 + (j - (k + 1)) ** 2) / (2 * sigma ** 2)))
    return np.array(kernel).reshape(kernel_shape) / sum(kernel)


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


def conv(img, kernel):
    in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
    # bias=False, otherwise a randomly initialised bias is added to every output pixel
    conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
    kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
    conv2d.weight.data = kernel
    out_img = conv2d(in_img)
    out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
    return out_img


def show_img(img):
    out_img = adjust(img)  # clamp overflowing values (in place)
    plt.figure()
    plt.imshow(out_img, cmap='gray')
    plt.show()


def get_Grad_info(grad_x, grad_y):
    Grad_strength = np.sqrt(grad_x ** 2 + grad_y ** 2)
    # Grad_theta stores tan(theta) = gy / gx; where gx == 0 it is set to inf (vertical gradient)
    Grad_theta = np.divide(grad_y, grad_x, out=np.full_like(grad_y, np.inf), where=grad_x != 0)
    return Grad_strength, Grad_theta


if __name__ == '__main__':
    # load the image
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)
    # convert to grayscale
    img = rgb_to_gray(img)
    # Gaussian smoothing
    # gauss_kernel = get_gauss_kernel()
    gauss_kernel = get_gauss_kernel(kernel_shape=(5, 5), sigma=1.2)
    img = conv(img, gauss_kernel)
    # show_img(img)
    # horizontal and vertical gradients
    grad_x = conv(img, np.array(sobel_kernels[0]))
    grad_y = conv(img, np.array(sobel_kernels[1]))
    # gradient magnitude and direction of every pixel
    G_strength, G_theta = get_Grad_info(grad_x, grad_y)
    show_img(G_strength)

3. Non-maximum suppression

To get a better feel for the angles, the following code plots arctan(x) for x from $-2\pi$ to $2\pi$:

import math
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(-2 * math.pi, 2 * math.pi, 400)
y = np.arctan(x)
plt.figure()
plt.plot(x, y, c='r')
plt.title('arctan(x) in (-2pi,2pi)')
plt.show()

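The plot shows that arctan is monotonically increasing. Each value of $\tan\theta = g_y/g_x$ therefore corresponds to exactly one angle in $(-\pi/2, \pi/2)$, and comparing tan θ against thresholds is equivalent to comparing θ itself. Within a neighbourhood, the same ratio also describes the opposite direction θ+π, since vertically opposite angles are equal. A single ratio thus identifies the line through the pixel along which the gradient lies, so it is enough to analyse angles in $(-\pi/2, \pi/2)$.

Each θ therefore stands for two gradient directions, one forward and one backward, and the gradient magnitude at the centre is compared with the magnitudes along these two directions. If the centre is the maximum, it is kept; otherwise it is suppressed and set to 0. In effect, this "thins" the edges.

Another problem is that the gradient direction does not necessarily point exactly at a neighbouring pixel. Comparing only with the horizontal, vertical and diagonal neighbours works, but not as well as it could. For better suppression we compare along the actual direction. The magnitude at a point on the gradient line that falls between two pixels is estimated by linear interpolation from those two neighbours, weighted by distance. Splitting by tan θ gives four ranges: $(-\infty,-1)$, $(-1,0)$, $(0,1)$ and $(1,+\infty)$. In the ranges with $|\tan\theta|>1$ the interpolation weight is $|g_x/g_y|$; in the ranges with $|\tan\theta|<1$ it is $|g_y/g_x|$.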

The function:

def non_maximum_suppression(G_strength, G_theta):
    # G_theta holds tan(theta) = gy / gx. Rows grow downwards, so gy > 0 points to row i + 1.
    # Neighbours are read from a snapshot G, so pixels zeroed earlier in the loop
    # do not distort later comparisons.
    G = G_strength.copy()
    for i in range(1, G_strength.shape[0] - 1):
        for j in range(1, G_strength.shape[1] - 1):
            t = G_theta[i][j]
            if t < -1:
                # mostly vertical, along up-right / down-left: up, upr, down, downl
                up, upr = G[i - 1][j], G[i - 1][j + 1]
                down, downl = G[i + 1][j], G[i + 1][j - 1]
                w = abs(1 / t)  # |gx / gy| < 1
                pre_up = w * upr + (1 - w) * up
                pre_down = w * downl + (1 - w) * down
            elif t < 0:
                # mostly horizontal, along up-right / down-left: left, leftdown, right, rightup
                left, leftdown = G[i][j - 1], G[i + 1][j - 1]
                right, rightup = G[i][j + 1], G[i - 1][j + 1]
                w = abs(t)  # |gy / gx| <= 1
                pre_up = w * rightup + (1 - w) * right
                pre_down = w * leftdown + (1 - w) * left
            elif t <= 1:
                # mostly horizontal, along down-right / up-left: right, rightdown, left, leftup
                right, rightdown = G[i][j + 1], G[i + 1][j + 1]
                left, leftup = G[i][j - 1], G[i - 1][j - 1]
                w = abs(t)  # |gy / gx| <= 1
                pre_down = w * rightdown + (1 - w) * right
                pre_up = w * leftup + (1 - w) * left
            else:
                # mostly vertical (including gx == 0, t = inf): down, downright, up, upleft
                down, downright = G[i + 1][j], G[i + 1][j + 1]
                up, upleft = G[i - 1][j], G[i - 1][j - 1]
                w = abs(1 / t)  # |gx / gy| < 1
                pre_down = w * downright + (1 - w) * down
                pre_up = w * upleft + (1 - w) * up
            # keep the pixel only if it is larger than both interpolated neighbours
            if not (G_strength[i][j] > pre_up and G_strength[i][j] > pre_down):
                G_strength[i][j] = 0
    return G_strength
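For comparison, a common simplification skips the interpolation. It rounds the gradient direction to the nearest of four orientations (0°, 45°, 90°, 135°) and compares the pixel only with its two neighbours in that direction. The sketch below assumes the angle comes from `np.arctan2(gy, gx)` in image coordinates, with rows growing downwards. `OFFSETS` and `nms_quantised` are hypothetical names, and the small ridge is a made-up test input.

```python
import numpy as np

# (drow, dcol) of the neighbour along the gradient for each quantised direction;
# rows grow downwards, so a positive gy points to row + 1
OFFSETS = {0: (0, 1), 1: (1, 1), 2: (1, 0), 3: (1, -1)}


def nms_quantised(magnitude, theta):
    """Non-maximum suppression with directions rounded to the nearest 45 degrees (no interpolation)."""
    sector = np.round((np.degrees(theta) % 180) / 45).astype(int) % 4
    out = np.zeros_like(magnitude)  # separate output: earlier suppressions never affect later checks
    H, W = magnitude.shape
    for i in range(1, H - 1):
        for j in range(1, W - 1):
            di, dj = OFFSETS[sector[i, j]]
            m = magnitude[i, j]
            # keep the pixel if it is not smaller than either neighbour along the gradient
            if m >= magnitude[i + di, j + dj] and m >= magnitude[i - di, j - dj]:
                out[i, j] = m
    return out


# a blurred vertical edge: magnitude peaks in column 2, gradient along +x (theta = 0)
mag = np.tile(np.array([0., 1., 3., 1., 0.]), (3, 1))
theta = np.zeros_like(mag)
print(nms_quantised(mag, theta))  # only the centre pixel (row 1, column 2) survives with value 3
```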

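4. Double-threshold detection

Set two thresholds and classify every pixel. A pixel at or below the low threshold is discarded. A pixel between the low and high thresholds is marked as a weak edge. A pixel above the high threshold is marked as a strong edge.

The corresponding function:

For display purposes, strong edge pixels are also set to 255 and weak edge pixels to 150.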

def double_threshold_detection(img, low_threshold, high_threshold):
    '''Double-threshold detection: returns labels (0 = none, 1 = weak, 2 = strong) and a display image'''
    target = []
    for i in range(0, img.shape[0]):
        for j in range(0, img.shape[1]):
            if img[i][j] <= low_threshold:
                target.append(0)
                img[i][j] = 0
            elif (img[i][j] > low_threshold) & (img[i][j] <= high_threshold):
                target.append(1)
                img[i][j] = 150
            else:
                target.append(2)
                img[i][j] = 255
    return np.array(target).reshape(img.shape), img
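The same classification can be written with boolean masks instead of a per-pixel Python loop, which is typically much faster on a full image. The sketch below keeps the same boundary conventions: ≤ low → 0, low < m ≤ high → weak, > high → strong. `double_threshold` is a hypothetical name. Unlike the function above, it does not modify its input and does not build the 150/255 display image.

```python
import numpy as np

WEAK, STRONG = 1, 2


def double_threshold(magnitude, low, high):
    """Label map: 0 = suppressed, 1 = weak (low < m <= high), 2 = strong (m > high)."""
    labels = np.zeros(magnitude.shape, dtype=np.uint8)
    labels[(magnitude > low) & (magnitude <= high)] = WEAK
    labels[magnitude > high] = STRONG
    return labels


m = np.array([[10., 40., 90.],
              [55., 20., 120.]])
print(double_threshold(m, low=30, high=80))  # rows: [0, 1, 2] and [1, 0, 2]
```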

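5. Suppressing isolated weak-edge points

Strong edges are accepted as true edges. A weak edge, however, may also be caused by blur or colour variation, so weak edges need a further selection step. A weak pixel is usually accepted as a true edge pixel if at least one of its 8 neighbours is a strong edge pixel.

The corresponding function: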

def Sup_isolate_low_threshold_points(G_strength, target):
    '''Keep strong pixels and weak pixels with at least one strong 8-neighbour; zero everything else'''
    for i in range(1, target.shape[0] - 1):
        for j in range(1, target.shape[1] - 1):
            if target[i][j] == 2:
                continue
            if target[i][j] == 1 and (target[i - 1:i + 2, j - 1:j + 2] == 2).any():
                continue
            G_strength[i][j] = 0
    return G_strength
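The function above keeps a weak pixel only if one of its direct 8-neighbours is strong. A weak pixel at the end of a chain, two steps from any strong pixel, is therefore dropped. Canny's hysteresis step instead follows chains: a weak pixel is kept if it connects to a strong pixel through other weak pixels. The breadth-first sketch below implements that variant. It assumes labels in the same 0/1/2 encoding as `double_threshold_detection`. `hysteresis` is a hypothetical name, and the label map is made up.

```python
from collections import deque

import numpy as np


def hysteresis(labels):
    """Keep strong pixels (2) and every weak pixel (1) 8-connected to them, possibly via other weak pixels."""
    H, W = labels.shape
    edges = labels == 2
    queue = deque(zip(*np.nonzero(edges)))
    while queue:
        i, j = queue.popleft()
        for di in (-1, 0, 1):
            for dj in (-1, 0, 1):
                r, c = i + di, j + dj
                if 0 <= r < H and 0 <= c < W and labels[r, c] == 1 and not edges[r, c]:
                    edges[r, c] = True  # promote the weak pixel and keep growing from it
                    queue.append((r, c))
    return edges


labels = np.array([[2, 1, 1, 0, 1],
                   [0, 0, 0, 0, 0]])
print(hysteresis(labels).astype(int))  # first row: [1, 1, 1, 0, 0]; the isolated weak pixel is dropped
```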

Full code

# coding:utf-8
import math
import matplotlib.pyplot as plt
import numpy as np
import torch

sobel_kernels = [
    [[-1, 0, 1],  # right Sobel
     [-2, 0, 2],
     [-1, 0, 1]],
    [[-1, -2, -1],  # bottom Sobel
     [0, 0, 0],
     [1, 2, 1]]
]


def get_gauss_kernel(kernel_shape=(3, 3), sigma=1.0):
    k = (kernel_shape[0] - 1) / 2
    kernel = []
    for i in range(1, kernel_shape[0] + 1):
        for j in range(1, kernel_shape[1] + 1):
            # exponent is -d^2 / (2 * sigma^2)
            kernel.append(1 / (2 * math.pi * sigma ** 2) * math.exp(
                -((i - (k + 1)) ** 2 + (j - (k + 1)) ** 2) / (2 * sigma ** 2)))
    return np.array(kernel).reshape(kernel_shape) / sum(kernel)


def rgb_to_gray(rgb):
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
    return gray


def adjust(img):
    '''Clamp in place: values above 255 become 255, values below 0 become 0'''
    np.clip(img, 0, 255, out=img)
    return img


def conv(img, kernel):
    in_img = torch.from_numpy(img.astype(np.float32)).reshape((1, 1, img.shape[0], img.shape[1]))
    # bias=False, otherwise a randomly initialised bias is added to every output pixel
    conv2d = torch.nn.Conv2d(in_channels=1, out_channels=1, kernel_size=kernel.shape, bias=False)
    kernel = torch.from_numpy(kernel.astype(np.float32)).reshape((1, 1, kernel.shape[0], kernel.shape[1]))
    conv2d.weight.data = kernel
    out_img = conv2d(in_img)
    out_img = np.squeeze(out_img.detach().numpy())  # detach() first: .numpy() fails on a tensor that requires grad
    return out_img


def show_img(img):
    out_img = adjust(img)  # clamp overflowing values (in place)
    plt.figure()
    plt.imshow(out_img, cmap='gray')
    plt.show()


def get_Grad_info(grad_x, grad_y):
    Grad_strength = np.sqrt(grad_x ** 2 + grad_y ** 2)
    # Grad_theta stores tan(theta) = gy / gx; where gx == 0 it is set to inf (vertical gradient)
    Grad_theta = np.divide(grad_y, grad_x, out=np.full_like(grad_y, np.inf), where=grad_x != 0)
    return Grad_strength, Grad_theta


def non_maximum_suppression(G_strength, G_theta):
    # G_theta holds tan(theta) = gy / gx; rows grow downwards, so gy > 0 points to row i + 1.
    # Neighbours are read from a snapshot G so that pixels zeroed earlier in the loop
    # do not distort later comparisons.
    G = G_strength.copy()
    for i in range(1, G_strength.shape[0] - 1):
        for j in range(1, G_strength.shape[1] - 1):
            if G_theta[i][j] < -1:
                # mostly vertical, along up-right / down-left: up, upr, down, downl
                up = G[i - 1][j]
                upr = G[i - 1][j + 1]
                down = G[i + 1][j]
                downl = G[i + 1][j - 1]
                w = abs(1 / G_theta[i][j])  # |gx / gy| < 1
                pre_up = w * upr + (1 - w) * up
                pre_down = w * downl + (1 - w) * down
                if (G_strength[i][j] > pre_up) & (G_strength[i][j] > pre_down):
                    continue
                else:
                    G_strength[i][j] = 0
            elif G_theta[i][j] < 0:
                # mostly horizontal, along up-right / down-left: left, leftdown, right, rightup
                left = G[i][j - 1]
                leftdown = G[i + 1][j - 1]
                right = G[i][j + 1]
                rightup = G[i - 1][j + 1]
                w = abs(G_theta[i][j])  # |gy / gx| <= 1
                pre_up = w * rightup + (1 - w) * right
                pre_down = w * leftdown + (1 - w) * left
                if (G_strength[i][j] > pre_up) & (G_strength[i][j] > pre_down):
                    continue
                else:
                    G_strength[i][j] = 0
            elif G_theta[i][j] <= 1:
                # mostly horizontal, along down-right / up-left: right, rightdown, left, leftup
                right = G[i][j + 1]
                rightdown = G[i + 1][j + 1]
                left = G[i][j - 1]
                leftup = G[i - 1][j - 1]
                w = abs(G_theta[i][j])  # |gy / gx| <= 1
                pre_down = w * rightdown + (1 - w) * right
                pre_up = w * leftup + (1 - w) * left
                if (G_strength[i][j] > pre_up) & (G_strength[i][j] > pre_down):
                    continue
                else:
                    G_strength[i][j] = 0
            else:
                # mostly vertical (including gx == 0, tan = inf): down, downright, up, upleft
                down = G[i + 1][j]
                downright = G[i + 1][j + 1]
                up = G[i - 1][j]
                upleft = G[i - 1][j - 1]
                w = abs(1 / G_theta[i][j])  # |gx / gy| < 1
                pre_down = w * downright + (1 - w) * down
                pre_up = w * upleft + (1 - w) * up

if (G_strength[i][j] > pre_up) & (G_strength[i][j] > pre_down):

continue

else:

G_strength[i][j] = 0

return G_strength

def double_threshold_detection(img, low_threshold, high_threshold):
    '''Double-threshold detection.
    Labels: 0 = suppressed (<= low), 1 = weak edge (between the thresholds), 2 = strong edge (> high).
    img is also modified in place for display: 0, 150 (weak) or 255 (strong).'''
    target = []
    for i in range(0, img.shape[0]):
        for j in range(0, img.shape[1]):
            if img[i][j] <= low_threshold:
                target.append(0)
                img[i][j] = 0
            elif img[i][j] <= high_threshold:
                target.append(1)
                img[i][j] = 150
            else:
                target.append(2)
                img[i][j] = 255
    return np.array(target).reshape(img.shape), img

def Sup_isolate_low_threshold_points(G_strength, target):
    '''Suppress isolated weak points: a weak pixel (label 1) is kept only if one of its
    8 neighbours is strong (label 2); strong pixels are kept; everything else is set to 0.
    Border pixels are left unchanged.'''
    for i in range(1, target.shape[0] - 1):
        for j in range(1, target.shape[1] - 1):
            if target[i][j] == 2:
                continue
            # 3x3 neighbourhood centred on (i, j); the centre itself is not strong here
            if target[i][j] == 1 and (target[i - 1:i + 2, j - 1:j + 2] == 2).any():
                continue
            G_strength[i][j] = 0
    return G_strength

if __name__ == '__main__':
    # Load the image (plt.imread returns uint8 values in 0-255 for a JPEG;
    # the thresholds below assume that range)
    img_path = 'cat.jpg'
    img = plt.imread(img_path)
    img = np.array(img)

    # Convert to grayscale
    img = rgb_to_gray(img)
    plt.figure()
    plt.subplot(1, 2, 1)
    plt.imshow(img, cmap='gray')
    plt.title('original')

    # Gaussian smoothing
    # gauss_kernel = get_gauss_kernel()
    gauss_kernel = get_gauss_kernel(kernel_shape=(5, 5), sigma=1.2)
    img = conv(img, gauss_kernel)
    # show_img(img)

    # Gradients in the horizontal and vertical directions
    grad_x = conv(img, np.array(sobel_kernels[0]))
    grad_y = conv(img, np.array(sobel_kernels[1]))

    # Gradient magnitude and direction of every pixel
    G_strength, G_theta = get_Grad_info(grad_x, grad_y)
    # show_img(G_strength)

    # Non-maximum suppression
    G_strength = non_maximum_suppression(G_strength, G_theta)
    # show_img(G_strength)

    # Double-threshold detection
    target, G_strength = double_threshold_detection(G_strength, 25, 80)
    # show_img(G_strength)

    # Suppress isolated weak points
    G_strength = Sup_isolate_low_threshold_points(G_strength, target)
    # show_img(G_strength)

    plt.subplot(1, 2, 2)
    plt.imshow(G_strength, cmap='gray')
    plt.title('canny')
    plt.show()
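In the Gaussian smoothing step of the script, each kernel weight samples G(x, y) = exp(-(x² + y²) / (2σ²)) / (2πσ²) on a grid centred at the kernel's middle element, and the weights are then normalized to sum to 1, so the 1/(2πσ²) factor cancels. The following is a compact NumPy sketch of the same construction that can be used to sanity-check a hand-written kernel such as get_gauss_kernel: the ratio between a direct neighbour and the centre should equal exp(-1/(2σ²)). The name gaussian_kernel is hypothetical, and an odd, square kernel size is assumed.

```python
import numpy as np

def gaussian_kernel(size=5, sigma=1.2):
    """Return a normalized size x size Gaussian kernel (size assumed odd)."""
    half = (size - 1) / 2
    coords = np.arange(size) - half          # e.g. [-2, -1, 0, 1, 2] for size=5
    xx, yy = np.meshgrid(coords, coords)     # xx varies along columns, yy along rows
    # exp(-(x^2 + y^2) / (2 * sigma^2)); the parentheses around 2 * sigma^2 matter
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2 * sigma ** 2))
    # Dividing by the sum makes the weights add up to 1, so the
    # 1 / (2 * pi * sigma^2) prefactor can be omitted
    return kernel / kernel.sum()

k = gaussian_kernel(size=5, sigma=1.2)  # same size and sigma as in the script
# The neighbour-to-centre ratio should equal exp(-1 / (2 * sigma^2))
ratio_ok = np.isclose(k[2, 3] / k[2, 2], np.exp(-1 / (2 * 1.2 ** 2)))
```

Comparing the output of get_gauss_kernel(kernel_shape=(5, 5), sigma=1.2) against k with np.allclose is a quick way to check the hand-written loop.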

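Although Canny is described as five steps, only two kinds of convolution are involved: one Gaussian blur, and one Sobel (or other operator) gradient computation. The gradient is computed in two directions, which takes two convolutions, so there are three convolutions in total. The last three steps post-process the gradient magnitude produced by those convolutions. Non-maximum suppression and the suppression of isolated weak points work like a sliding window: each pixel is judged from the 3x3 neighbourhood centred on it (its receptive field) and produces one output value, so they resemble convolution, although they use comparisons rather than a weighted sum and are therefore not linear convolutions. Double-threshold detection is applied to each pixel on its own.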
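The count above includes two separate passes for the horizontal and vertical gradients. As an illustration, the two Sobel kernels can also be stacked as two output channels so that a single call computes both. This is a minimal sketch assuming torch.nn.functional.conv2d, the functional counterpart of the Conv2d layer used in the code, here called without a bias; sobel_gradients is a hypothetical helper name. Like Conv2d, conv2d computes cross-correlation, so the kernels are applied without flipping.

```python
import numpy as np
import torch
import torch.nn.functional as F

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # horizontal gradient
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]    # vertical gradient

def sobel_gradients(img):
    """Compute both Sobel gradients of a 2-D array with one conv2d call.

    The two 3x3 kernels are stacked as two output channels, so the weight
    tensor has shape (out_channels=2, in_channels=1, 3, 3). No padding is
    used, so each output is 2 pixels smaller than the input in each dimension.
    """
    x = torch.as_tensor(img, dtype=torch.float32)[None, None]               # (1, 1, H, W)
    weight = torch.tensor([SOBEL_X, SOBEL_Y], dtype=torch.float32)[:, None]  # (2, 1, 3, 3)
    with torch.no_grad():
        out = F.conv2d(x, weight)                                            # (1, 2, H-2, W-2)
    return out[0, 0].numpy(), out[0, 1].numpy()

ramp = np.tile(np.arange(6), (5, 1))  # brightness increases to the right
gx, gy = sobel_gradients(ramp)
# gx == 8 everywhere and gy == 0 everywhere, both of shape (3, 4)
```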

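Also, although the title says the detector is PyTorch-based, only its Conv2d layer is actually used; everything else is done with plain Python loops over the pixels, which makes it run slowly. It is meant only as a reference implementation.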
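As one possible way around those per-pixel loops, non-maximum suppression can be vectorized with NumPy. Note that this sketch swaps in a common simplification: the gradient direction is quantized to four bins (0°, 45°, 90°, 135°) and each pixel is compared with its two neighbours in that bin, instead of the interpolated neighbours used in non_maximum_suppression, so the output can differ slightly from the loop version. nms_quantized is a hypothetical name; mag, gx and gy stand for the gradient magnitude and the two Sobel outputs.

```python
import numpy as np

def nms_quantized(mag, gx, gy):
    """Vectorized non-maximum suppression with the direction quantized to 4 bins.

    mag, gx, gy: 2-D float arrays of equal shape (magnitude and gradient components).
    The row index grows downward, so gy > 0 points down. Border pixels are set to 0.
    """
    # Gradient angle in degrees, folded into [0, 180) because opposite
    # directions share the same pair of neighbours
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180
    # Bin 0: ~0 deg (left/right), 1: ~45 deg, 2: ~90 deg (up/down), 3: ~135 deg
    bins = np.round(angle / 45).astype(int) % 4
    # (row, col) offset of one neighbour per bin; the other neighbour is the negation
    offsets = [(0, 1), (1, 1), (1, 0), (1, -1)]

    h, w = mag.shape
    padded = np.pad(mag, 1, mode='constant')
    centre = padded[1:h + 1, 1:w + 1]
    out = np.zeros_like(mag)
    for b, (di, dj) in enumerate(offsets):
        fwd = padded[1 + di:1 + di + h, 1 + dj:1 + dj + w]  # mag[i + di, j + dj]
        bwd = padded[1 - di:1 - di + h, 1 - dj:1 - dj + w]  # mag[i - di, j - dj]
        # Keep local maxima along the bin's direction (ties are kept)
        keep = (bins == b) & (centre >= fwd) & (centre >= bwd)
        out[keep] = centre[keep]
    out[0, :] = out[-1, :] = 0
    out[:, 0] = out[:, -1] = 0
    return out

# A vertical ridge in column 2, with the gradient assumed horizontal everywhere
mag = np.tile([0.0, 1.0, 3.0, 1.0, 0.0], (5, 1))
thin = nms_quantized(mag, gx=np.ones_like(mag), gy=np.zeros_like(mag))
```

Only the ridge column survives in the interior rows. Quantizing trades a little angular accuracy for whole-array operations, which is usually much faster than visiting each pixel in Python.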

Edge detection series 3: [HED] Holistically-Nested Edge Detection - PaddlePaddle AI Studio

ref:https://aistudio.baidu.com/aistudio/projectdetail/2596381

Reproduces the paper Holistically-Nested Edge Detection, published at ICCV 2015.

An end-to-end edge detection model based on deep learning.

Edge detection series 4: [RCF] Edge detection based on richer convolutional features - PaddlePaddle AI Studio (baidu.com)

ref:https://aistudio.baidu.com/aistudio/projectdetail/2512625

http://mmcheng.net/rcfedge/

Reproduces the paper Richer Convolutional Features for Edge Detection, published at CVPR 2017.

An edge detection model built on richer convolutional features (RCF).

Edge detection series 5: [CED] An HED model with an added backward refinement path - PaddlePaddle AI Studio (baidu.com)

ref:https://aistudio.baidu.com/aistudio/projectdetail/3151386

The Crisp Edge Detection (CED) model is another improvement on the HED model introduced earlier.

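Summary:

1. Learned the common kernels of traditional edge detection and implemented them.

2. Learned the Canny edge detection method, including its steps and principles, and implemented it.

3. Gained a basic understanding of the classic HED, RCF and CED edge detection models.

By comparison, the Canny result clearly looks less polished, even rather crude. HED is more detailed than Canny, but its lines are thicker. RCF is more detailed still and looks cleaner. CED improves on HED by further refining HED's feature maps, which makes the edges crisper.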

ref:

Traditional edge detection operators:

https://blog.csdn.net/qq_44116998/article/details/124659472

Canny edge detection:

https://blog.csdn.net/m0_43609475/article/details/112775377

https://blog.csdn.net/weixin_40647819/article/details/91411424

https://www.cnblogs.com/techyan1990/p/7291771.html

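The images used in this experiment come from the Internet and are used for academic exchange only; they will be removed upon request in case of infringement.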