Colab平台利用gensim包实现Word2Vec和FastText(CBOW, Skip Gram两种实现)

重复造轮子不可取,要合理学会调(tou)包(lan)!

Gensim是一个可以用来进行无监督学习和自然语言处理的开源库,编写语言为Python和Cython,更多细节可以上官网查询。

首先导入基本的包:

import pprint

import re

# For parsing our XML data

from lxml import etree

# For data processing

import nltk

nltk.download('punkt')

from nltk.tokenize import word_tokenize, sent_tokenize

from gensim.models import Word2Vec

from gensim.models import FastText

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

接下来创建模型:

wv_cbow_model = Word2Vec(sentences=sentences, size=100, window=5, min_count=5, workers=2, sg=0)

wv_sg_model = Word2Vec(sentences=sentences, size=100, window=5, min_count=5, workers=2, sg=1)

很简单吧,sg=0就是cbow模型,sg=1就是skip gram模型。其他几个参数的意义:第一个sentences是参数名,第二个sentences就是你要使用的数据,具体数据会在接下来介绍, size是word vector的维度,这里设定成100,window窗口大小,min_count 设定一个最小值,出现频率低于最小值的词会被模型忽略掉,workers 线程数。

接下来是数据, 这里会用到colab登陆(需要科学上网)以获取数据:

!pip install -U -q PyDrive

from pydrive.auth import GoogleAuth

from pydrive.drive import GoogleDrive

from google.colab import auth

from oauth2client.client import GoogleCredentials

# Authenticate and create the PyDrive client.

auth.authenticate_user()

gauth = GoogleAuth()

gauth.credentials = GoogleCredentials.get_application_default()

drive = GoogleDrive(gauth)

我们首先使用TED数据:

id = '1B47OiEiG2Lo1jUY6hy_zMmHBxfKQuJ8-'

downloaded = drive.CreateFile({'id':id})

downloaded.GetContentFile('ted_en-20160408.xml')

数据预处理:

targetXML=open('ted_en-20160408.xml', 'r', encoding='UTF8')

# Getting contents of tag from the xml file

target_text = etree.parse(targetXML)

parse_text = '\n'.join(target_text.xpath('//content/text()'))

# Removing "Sound-effect labels" using regular expression (regex) (i.e. (Audio), (Laughter))

content_text = re.sub(r'\([^)]*\)', '', parse_text)

# Tokenising the sentence to process it by using NLTK library

sent_text=sent_tokenize(content_text)

# Removing punctuation and changing all characters to lower case

normalized_text = []

for string in sent_text:

tokens = re.sub(r"[^a-z0-9]+", " ", string.lower())

normalized_text.append(tokens)

# Tokenising each sentence to process individual word

sentences=[]

sentences=[word_tokenize(sentence) for sentence in normalized_text]

# Prints only 10 (tokenised) sentences

print(sentences[:10])

这里面包含一些NLP基本操作,比如用正则表达式移除一些拟声词,比如haha等,然后将句子tokenize,并且移除标点符号。

训练的词向量word vectors会被存在模型的wv中, 现在查看第一个模型中最接近‘man’这个词的10个向量,使用most_similar()即可

similar_words=wv_cbow_model.wv.most_similar("man") # topn=10 by default

pprint.pprint(similar_words)

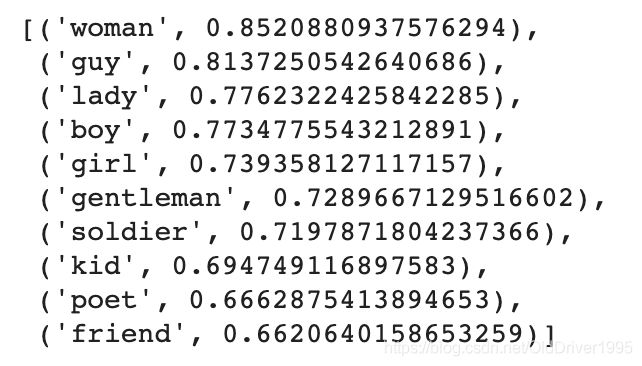

most_similar()默认显示10个,于是可以看到结果如下:

前十个最相似词以及他们的向量。

接下来对第二个模型(skip gram)使用相同的方式, 结果不再展示:

similar_words=wv_sg_model.wv.most_similar("man")

pprint.pprint(similar_words)

对于WordVector而言,most_similar()函数只能查找存在于训练的vocabulary中的词,比如你输入一个不存在的词,他是不能返回结果并且会报错的。

接下来对比FastText,跟Word2Vec相似,他也有CBOW和Skip Gram两种版本。区别在于,FastText可以查询vocabulary以外的词

ft_sg_model = FastText(sentences, size=100, window=5, min_count=5, workers=2, sg=1)

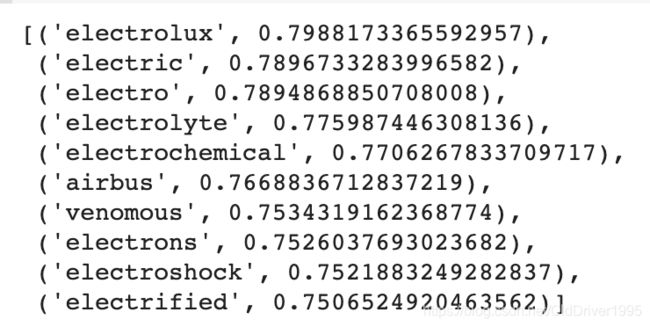

result=ft_sg_model.wv.most_similar("electrofishing")

pprint.pprint(result)

结果如下,electrofishing这个词是不存在于Vocabulary中的,不能用Word2Vec

同样的,也可以实现CBOW版本, 操作和之前类似:

ft_cbow_model = FastText(sentences, size=100, window=5, min_count=5, workers=2, sg=0)

现在我们希望通过词向量进行预测(NLP中经常会预测!)King-Man+Woman=? 从人类的直觉而言,这个答案可能会是Queen,当然不同的人可能会有不同的答案。

通过Word2Vec CBOW预测:

result = wv_cbow_model.wv.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

print(result)

结果是Luther??

[(‘luther’, 0.7577797174453735)]

利用Word2VecSkip Gram,FastText预测

result = wv_sg_model.wv.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

print(result)

result = ft_cbow_model.wv.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

print(result)

result = ft_sg_model.wv.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

print(result)

结果如下:

[(‘luther’, 0.6635635495185852)]

[(‘kidding’, 0.9021628499031067)]

[(‘jarring’, 0.7094123363494873)]

也可以设置topn查看更多结果。

接下来使用在Google News上进行过预训练的词向量, 注意这个数据有3.39G,可能要下载很久。然后进行解压

id2 = '0B7XkCwpI5KDYNlNUTTlSS21pQmM'

downloaded = drive.CreateFile({'id':id2})

downloaded.GetContentFile('GoogleNews-vectors-negative300.bin.gz')

#uncompress

!gzip -d /content/GoogleNews-vectors-negative300.bin.gz

然后利用另一个模型预测, 并查看结果:

from gensim.models import KeyedVectors

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

# Load the pretrained vectors with KeyedVectors instance - might be long waiting!

filename = 'GoogleNews-vectors-negative300.bin'

gn_wv_model = KeyedVectors.load_word2vec_format('GoogleNews-vectors-negative300.bin', binary=True)

result = gn_wv_model.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

print(result)

可以得到:

[(‘queen’, 0.7118192911148071)]

这个结果就比较符合人类的直觉。