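Big Data Hands-On Project: Offline Data Warehouse

1. Data Warehouse Planning

1.1 Cluster Planning

- Technology selection

| Layer | Frameworks |
|---|---|
| Data collection and transport | Flume, Kafka, Sqoop, Logstash, DataX |
| Data storage | MySQL, HDFS, HBase, Redis, MongoDB |
| Data computation | Hive, Tez, Spark, Flink |
| Data query | Presto, Druid, Impala, Kylin |
| Data visualization | Echarts, Superset, Tableau, QuickBI, DataV |
| Task scheduling | Azkaban, Oozie |
| Cluster monitoring | Zabbix |
| Metadata management | Atlas |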

- Framework versions

| Framework | Version (older version) |
|---|---|
| Hadoop | 3.1.3 (2.7.2) |
| Flume | 1.9.0 (1.7.0) |
| Kafka | 2.4.1 (0.11.0.2) |
| Hive | 3.1 (2.3) |
| Sqoop | 1.4.6 |
| MySQL | 5.6.24 |
| Azkaban | 2.5.0 |
| Java | 1.8 |
| Zookeeper | 3.5.7 (3.4.10) |
| Presto | 0.189 |

- Cluster resource plan

| Service | Sub-service | simwor01 (6G) | simwor02 (4G) | simwor03 (4G) |
|---|---|---|---|---|
| HDFS | NameNode | √ | | |
| | DataNode | √ | √ | √ |
| | SecondaryNameNode | | | √ |
| Yarn | NodeManager | √ | √ | √ |
| | ResourceManager | | √ | |
| Zookeeper | Quorum | √ | √ | √ |
| Flume (log collection) | Flume | √ | √ | |
| Kafka | Broker | √ | √ | √ |
| Flume (Kafka consumer) | Flume | | | √ |
| Hive | Hive | √ | | |
| MySQL | MySQL | √ | | |
| Sqoop | Sqoop | √ | | |
| Presto | Coordinator | √ | | |
| | Worker | | √ | √ |
| Azkaban | WebServer | √ | | |
| | ExecutorServer | √ | | |
| Druid | Druid | √ | √ | √ |
| Kylin | Kylin | √ | | |
| HBase | HMaster | √ | | |
| | HRegionServer | √ | √ | √ |
| Superset | Superset | √ | | |
| Atlas | Atlas | √ | | |
| Solr | Jar | √ | | |
| Total services | | 19 | 9 | 9 |

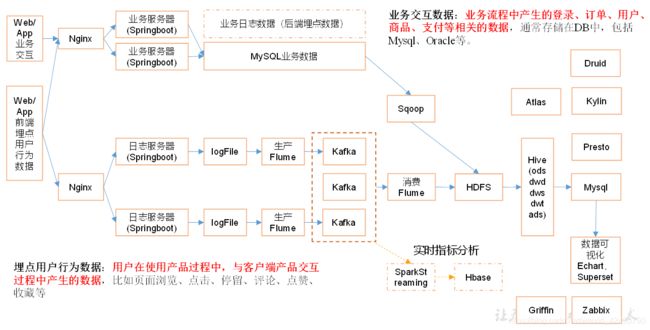

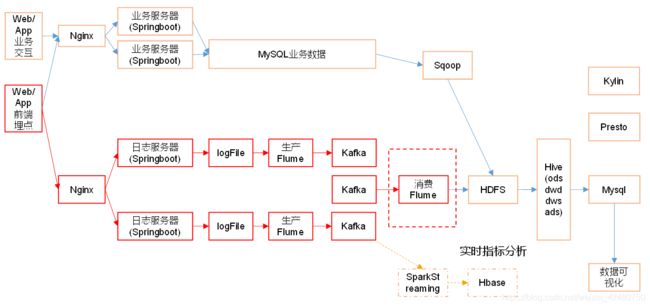

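- System data flow

1.2 Data Simulation

1.2.1 User Behavior Logs

- Startup log format

| Field | Meaning |
|---|---|
| entry | Entry point: push=1, widget=2, icon=3, notification=4, lockscreen_widget=5 |
| open_ad_type | Splash ad type: native splash ad=1, interstitial splash ad=2 |
| action | Status: success=1, failure=2 |
| loading_time | Loading time: elapsed time from the start of the pull-down until the API returns data (0 is reported when loading starts; the actual time is reported only on success or failure) |
| detail | Failure code (empty if none) |
| extend1 | Failure message (empty if none) |
| en | Log type: start |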

{

"action": "1",

"ar": "MX",

"ba": "Huawei",

"detail": "542",

"en": "start",

"entry": "4",

"extend1": "",

"g": "[email protected]",

"hw": "640*960",

"l": "pt",

"la": "-16.6",

"ln": "-99.3",

"loading_time": "3",

"md": "Huawei-11",

"mid": "17",

"nw": "WIFI",

"open_ad_type": "2",

"os": "8.1.2",

"sr": "U",

"sv": "V2.7.5",

"t": "1621094955645",

"uid": "17",

"vc": "8",

"vn": "1.1.8"

}

- User behavior log format

# Sample

{
  "ap": "xxxxx", // data source of the project: app or pc
  "cm": { // common fields
    "mid": "", // (String) unique device identifier
    "uid": "", // (String) user identifier
    "vc": "1", // (String) versionCode, the application version number
    "vn": "1.0", // (String) versionName, the application version name
    "l": "zh", // (String) language, the system language
    "sr": "", // (String) channel code: the channel the app was installed from
    "os": "7.1.1", // (String) Android OS version
    "ar": "CN", // (String) area
    "md": "BBB100-1", // (String) model, the phone model
    "ba": "blackberry", // (String) brand, the phone brand
    "sv": "V2.2.1", // (String) sdkVersion
    "g": "", // (String) gmail
    "hw": "1620x1080", // (String) heightXwidth, the screen height and width
    "t": "1506047606608", // (String) time the log was produced on the client
    "nw": "WIFI", // (String) network mode
    "ln": 0, // (double) lng, longitude
    "la": 0 // (double) lat, latitude
  },
  "et": [ // events
    {
      "ett": "1506047605364", // time the event occurred on the client
      "en": "display", // event name
      "kv": { // event payload, free-form key-value pairs
        "goodsid": "236",
        "action": "1",
        "extend1": "1",
        "place": "2",
        "category": "75"
      }
    }
  ]
}

# Example

{

"cm": {

"ln": "-47.2",

"sv": "V2.4.9",

"os": "8.1.8",

"g": "[email protected]",

"mid": "999",

"nw": "4G",

"l": "en",

"vc": "0",

"hw": "640*960",

"ar": "MX",

"uid": "999",

"t": "1620912298272",

"la": "-36.8",

"md": "sumsung-10",

"vn": "1.3.9",

"ba": "Sumsung",

"sr": "T"

},

"ap": "app",

"et": [{

"ett": "1620836353194",

"en": "display",

"kv": {

"goodsid": "248",

"action": "1",

"extend1": "2",

"place": "2",

"category": "30"

}

}, {

"ett": "1620852165154",

"en": "newsdetail",

"kv": {

"entry": "3",

"goodsid": "249",

"news_staytime": "10",

"loading_time": "0",

"action": "4",

"showtype": "5",

"category": "51",

"type1": "201"

}

}, {

"ett": "1620850884761",

"en": "loading",

"kv": {

"extend2": "",

"loading_time": "0",

"action": "2",

"extend1": "",

"type": "1",

"type1": "",

"loading_way": "1"

}

}, {

"ett": "1620880365609",

"en": "notification",

"kv": {

"ap_time": "1620848443801",

"action": "2",

"type": "1",

"content": ""

}

}, {

"ett": "1620873678198",

"en": "error",

"kv": {

"errorDetail": "java.lang.NullPointerException\\n at cn.lift.appIn.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)\\n at cn.lift.dfdf.web.AbstractBaseController.validInbound",

"errorBrief": "at cn.lift.dfdf.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)"

}

}]

}
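To illustrate how the common fields in "cm" relate to the event array "et", the sketch below flattens one behavior record into one row per event. The function name flatten_events and the shortened sample record are illustrative assumptions, not part of the project code.

```python
import json


def flatten_events(raw):
    """Return one flat dict per entry in "et", each carrying the common fields."""
    record = json.loads(raw)
    common = record.get("cm", {})
    rows = []
    for event in record.get("et", []):
        row = dict(common)                # copy the shared device/user fields
        row["ap"] = record.get("ap")      # data source, e.g. "app"
        row["en"] = event["en"]           # event name, e.g. "display"
        row["ett"] = event["ett"]         # client-side event time in milliseconds
        row["kv"] = event.get("kv", {})   # event-specific payload stays nested
        rows.append(row)
    return rows


# Shortened version of the example record above (hypothetical subset of fields).
sample = json.dumps({
    "cm": {"mid": "999", "uid": "999", "t": "1620912298272"},
    "ap": "app",
    "et": [
        {"ett": "1620836353194", "en": "display", "kv": {"goodsid": "248"}},
        {"ett": "1620850884761", "en": "loading", "kv": {"loading_time": "0"}},
    ],
})

rows = flatten_events(sample)
print([row["en"] for row in rows])
```

Each resulting row repeats the device and user fields, which is roughly the shape a per-event table in the warehouse would need.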

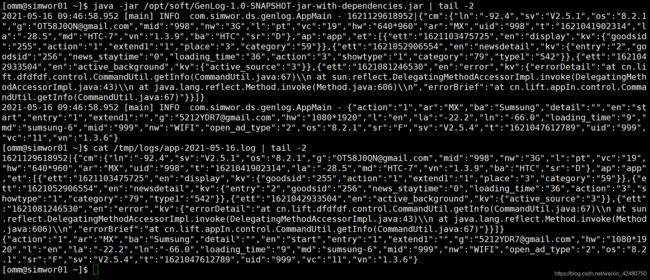

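- Generating mock logs with code

Startup logs and user behavior logs are generated at random and written to '/tmp/logs/app-YYYY-MM-dd.log' by default.

Argument 1: delay between consecutive log records, default 0

Argument 2: number of loop iterations (that is, the number of log records to generate), default 1000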

[omm@simwor01 ~]$ cat bin/genlog

#! /bin/bash

for i in simwor01 simwor02

do

ssh $i "java -jar /opt/soft/GenLog-1.0-SNAPSHOT-jar-with-dependencies.jar $1 $2 &>/dev/null &"

done

[omm@simwor01 ~]$

1.2.2 Business Transaction Data

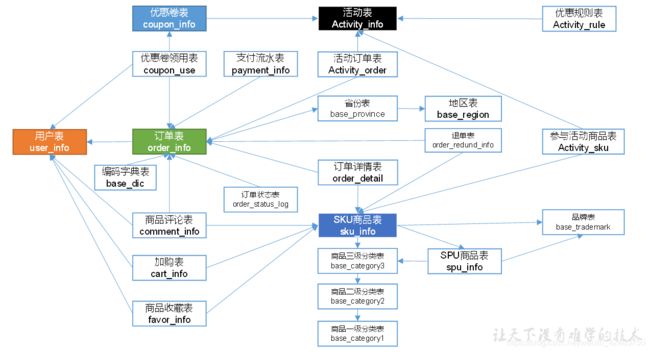

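- E-commerce business flow

- E-commerce basics

- SKU = Stock Keeping Unit. The term is now used more broadly as shorthand for a unified product number: every product variant has its own unique SKU.

- SPU (Standard Product Unit): the smallest unit for aggregating product information, a standardized, reusable, and easily searchable set of attributes.

- For example, the iPhone X is an SPU, while a silver iPhone X with 128 GB of storage that supports the China Unicom network is an SKU.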
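The SPU/SKU relationship can be sketched as a one-to-many structure. The class and field names below (Spu, Sku, storage_gb, carrier) are made up for illustration and do not come from the project's table schema.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Sku:
    """One concrete, sellable variant of a product."""
    sku_id: int
    spu_id: int
    color: str
    storage_gb: int
    carrier: str


@dataclass
class Spu:
    """The product as a whole; it groups all of its variants."""
    spu_id: int
    name: str
    skus: list = field(default_factory=list)

    def add_sku(self, sku_id, color, storage_gb, carrier):
        sku = Sku(sku_id, self.spu_id, color, storage_gb, carrier)
        self.skus.append(sku)
        return sku


iphone_x = Spu(spu_id=1, name="iPhone X")
silver = iphone_x.add_sku(101, "silver", 128, "China Unicom")
gray = iphone_x.add_sku(102, "space gray", 256, "China Mobile")
print(len(iphone_x.skus), silver.spu_id == gray.spu_id)
```

Both variants share one spu_id but have distinct sku_id values, which is the distinction the bullets above draw.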

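- E-commerce table schema

- Generating business data with code

mock.date: the date for which data is generated

mock.clear: whether to clear historical data (0 = no, 1 = yes)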

[omm@simwor01 mysql-data-generator]$ pwd

/opt/module/mysql-data-generator

[omm@simwor01 mysql-data-generator]$ vim application.properties

[omm@simwor01 mysql-data-generator]$ grep mock. application.properties

mock.date=2021-03-11

mock.clear=0

mock.user.count=50

mock.user.male-rate=20

mock.favor.cancel-rate=10

mock.favor.count=100

mock.cart.count=10

mock.cart.sku-maxcount-per-cart=3

mock.order.user-rate=80

mock.order.sku-rate=70

mock.order.join-activity=1

mock.order.use-coupon=1

mock.coupon.user-count=10

mock.payment.rate=70

mock.payment.payment-type=30:60:10

mock.comment.appraise-rate=30:10:10:50

mock.refund.reason-rate=30:10:20:5:15:5:5

[omm@simwor01 mysql-data-generator]$ java -jar gmall-mock-db-2020-03-16-SNAPSHOT.jar

*************************** 16. row ***************************

id: 3225

consignee: 祁波宁

consignee_tel: 13559397760

final_total_amount: 8384.00

order_status: 1004

user_id: 6

delivery_address: 第8大街第27号楼2单元925门

order_comment: 描述927727

out_trade_no: 518433858745953

trade_body: 联想(Lenovo)Y9000X 2019新款 15.6英寸高性能标压轻薄本笔记本电脑(i5-9300H 16G 512GSSD FHD)深空灰等2件商品

create_time: 2021-03-11 00:00:00

operate_time: 2021-03-11 00:00:00

expire_time: 2021-03-11 00:15:00

tracking_no: NULL

parent_order_id: NULL

img_url: http://img.gmall.com/362367.jpg

province_id: 20

benefit_reduce_amount: 6227.00

original_total_amount: 14598.00

feight_fee: 13.00

16 rows in set (0.00 sec)

mysql> select * from order_info\G

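1.3 Log Collection

1.3.1 file -> flume -> kafka

- Source

- TailDir Source: supports resumable reads and multiple directories. In Flume 1.6 and earlier you had to write a custom Source that recorded the read position of each file in order to resume.

- Exec Source: collects data in real time, but data is lost if Flume is not running or the shell command fails.

- Spooling Directory Source: monitors a directory but does not support resumable reads.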
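The idea behind resumable reads is simply "remember the byte offset and seek back to it after a restart". The sketch below shows that idea only; read_new_lines and the one-entry JSON position file are illustrative assumptions, and the real TailDir Source keeps more state than this.

```python
import json
import os
import tempfile


def read_new_lines(log_path, position_path):
    """Read lines appended since the last call, then persist the new offset."""
    offset = 0
    if os.path.exists(position_path):
        with open(position_path) as f:
            offset = json.load(f).get(log_path, 0)
    with open(log_path, "rb") as f:
        f.seek(offset)              # resume where the previous run stopped
        data = f.read()
        new_offset = f.tell()
    with open(position_path, "w") as f:
        json.dump({log_path: new_offset}, f)
    return data.decode("utf-8").splitlines()


workdir = tempfile.mkdtemp()
log_file = os.path.join(workdir, "app-2021-05-16.log")
pos_file = os.path.join(workdir, "position.json")

with open(log_file, "a") as f:
    f.write("line 1\nline 2\n")
first = read_new_lines(log_file, pos_file)

# Simulated restart: only the position file survives between the two calls.
with open(log_file, "a") as f:
    f.write("line 3\n")
second = read_new_lines(log_file, pos_file)
print(first, second)
```

The second call returns only the newly appended line, because the stored offset skips what was already delivered.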

- Log collection configuration file

[omm@simwor01 ~]$ cd /opt/module/flume/jobs/

[omm@simwor01 jobs]$ cat file-flume-kafka.conf

a1.sources=r1

a1.channels=c1 c2

# configure source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /opt/module/flume/jobs/file-flume-kafka.json

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /tmp/logs/app.+

a1.sources.r1.fileHeader = true

a1.sources.r1.channels = c1 c2

# interceptor

a1.sources.r1.interceptors = i1 i2

a1.sources.r1.interceptors.i1.type = com.simwor.flume.interceptor.LogETLInterceptor$Builder

a1.sources.r1.interceptors.i2.type = com.simwor.flume.interceptor.LogTypeInterceptor$Builder

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = topic

a1.sources.r1.selector.mapping.topic_start = c1

a1.sources.r1.selector.mapping.topic_event = c2

# configure channel

a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c1.kafka.bootstrap.servers = simwor01:9092,simwor02:9092,simwor03:9092

a1.channels.c1.kafka.topic = topic_start

a1.channels.c1.parseAsFlumeEvent = false

a1.channels.c1.kafka.consumer.group.id = flume-consumer

a1.channels.c2.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c2.kafka.bootstrap.servers = simwor01:9092,simwor02:9092,simwor03:9092

a1.channels.c2.kafka.topic = topic_event

a1.channels.c2.parseAsFlumeEvent = false

a1.channels.c2.kafka.consumer.group.id = flume-consumer

[omm@simwor01 jobs]$

- Log-cleaning (ETL) interceptor

package com.simwor.flume.interceptor;

import com.simwor.flume.utils.LogUtils;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;

import java.util.ArrayList;

import java.util.List;

public class LogETLInterceptor implements Interceptor {

public static class Builder implements Interceptor.Builder {

@Override

public Interceptor build() {

return new LogETLInterceptor();

}

@Override public void configure(Context context) { }

}

@Override public void initialize() { }

@Override

public Event intercept(Event event) {

byte[] body = event.getBody();

String log = new String(body, StandardCharsets.UTF_8);

if(log.contains("start") && LogUtils.validateStart(log))

return event;

else if(LogUtils.validateEvent(log))

return event;

return null;

}

@Override

public List<Event> intercept(List<Event> events) {

ArrayList<Event> interceptors = new ArrayList<>();

Event eventIntercepted;

for (Event event : events) {

eventIntercepted = intercept(event);

if (eventIntercepted != null){

interceptors.add(eventIntercepted);

}

}

return interceptors;

}

@Override public void close() { }

}
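LogUtils is not shown in these notes, so the following is only a guess at the kind of check it might perform, written against the log formats documented in 1.2.1. The rules (a start log is a JSON object with "en" equal to "start"; an event log is a JSON object with "cm" and an "et" list) are assumptions, as are all function names.

```python
import json


def _parse_object(log):
    """Return the parsed JSON object, or None if the line is not a JSON object."""
    try:
        value = json.loads(log)
    except ValueError:
        return None
    return value if isinstance(value, dict) else None


def validate_start(log):
    obj = _parse_object(log)
    return obj is not None and obj.get("en") == "start"


def validate_event(log):
    obj = _parse_object(log)
    return obj is not None and "cm" in obj and isinstance(obj.get("et"), list)


def intercept(log):
    """Mirror of the single-event method: return the log, or None to drop it."""
    if "start" in log and validate_start(log):
        return log
    if validate_event(log):
        return log
    return None


def intercept_batch(logs):
    """Mirror of the list method: keep only the logs that pass validation."""
    return [log for log in logs if intercept(log) is not None]


batch = [
    '{"en": "start", "mid": "17"}',
    '{"cm": {"mid": "999"}, "ap": "app", "et": []}',
    '{"en": "start", "mid": ',      # truncated line
    'not json at all',
]
kept = intercept_batch(batch)
print(len(kept))
```

As in the Java class, returning nothing for a single record is what removes it from the batch.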

- Log-type routing interceptor

package com.simwor.flume.interceptor;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

public class LogTypeInterceptor implements Interceptor {

public static class Builder implements Interceptor.Builder{

@Override

public Interceptor build() {

return new LogTypeInterceptor();

}

@Override public void configure(Context context) { }

}

@Override public void initialize() { }

@Override

public Event intercept(Event event) {

String log = new String(event.getBody(), StandardCharsets.UTF_8);

Map<String, String> headers = event.getHeaders();

if (log.contains("start")) {

headers.put("topic","topic_start");

}else {

headers.put("topic","topic_event");

}

return event;

}

@Override

public List<Event> intercept(List<Event> events) {

ArrayList<Event> interceptors = new ArrayList<>();

for (Event event : events)

interceptors.add(intercept(event));

return interceptors;

}

@Override public void close() { }

}
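Together with the multiplexing selector in the configuration file, this interceptor decides which channel an event ends up in. A minimal model of that routing is sketched below; the function names are illustrative, and the third sample is a made-up record included to show that a plain substring test also matches event logs that merely contain the word "start".

```python
# Same mapping as selector.mapping.topic_start / topic_event in the config.
SELECTOR_MAPPING = {"topic_start": "c1", "topic_event": "c2"}


def tag_topic(body, headers):
    """Set the "topic" header the same way the interceptor does."""
    headers["topic"] = "topic_start" if "start" in body else "topic_event"
    return headers


def select_channel(headers):
    """Pick the channel the multiplexing selector would use for this header."""
    return SELECTOR_MAPPING[headers["topic"]]


start_log = '{"en": "start", "entry": "4"}'
event_log = '{"cm": {"mid": "999"}, "et": [{"en": "display"}]}'
tricky_log = '{"cm": {}, "et": [{"en": "error", "kv": {"errorBrief": "failed to start"}}]}'

for log in (start_log, event_log, tricky_log):
    print(select_channel(tag_topic(log, {})))
```

If mis-routing of such records matters, checking the parsed "en" field instead of the raw text would be one way to tighten the rule.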

- Verification

- Log collection script

[omm@simwor01 ~]$ cat bin/file-flume-kafka

#! /bin/bash

case $1 in

"start"){

for i in simwor01 simwor02

do

echo " --------启动 $i 采集flume-------"

ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/jobs/file-flume-kafka.conf --name a1 -Dflume.root.logger=INFO,LOGFILE &>/opt/module/flume/jobs/file-flume-kafka.log &"

done

};;

"stop"){

for i in simwor01 simwor02

do

echo " --------停止 $i 采集flume-------"

ssh $i "ps -ef | grep file-flume-kafka | grep -v grep |awk '{print \$2}' | xargs -n1 kill -9 "

done

};;

esac

[omm@simwor01 ~]$

- Demo

[omm@simwor01 ~]$ kafka-console-consumer.sh --topic topic_start --bootstrap-server simwor01:9092 --from-beginning | tail

^CProcessed a total of 3509 messages

[omm@simwor01 ~]$ file-flume-kafka start

--------启动 simwor01 采集flume-------

--------启动 simwor02 采集flume-------

[omm@simwor01 ~]$ genlog

[omm@simwor01 ~]$ kafka-console-consumer.sh --topic topic_start --bootstrap-server simwor01:9092 --from-beginning | tail

^CProcessed a total of 4477 messages

[omm@simwor01 ~]$

1.3.2 kafka -> flume -> hdfs

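- FileChannel vs. MemoryChannel

- MemoryChannel is faster, but because events are held in the JVM heap they are lost if the Agent process dies. It suits workloads that can tolerate some data loss.

- FileChannel is slower than MemoryChannel but safer: events can be recovered after the Agent process dies.

- FileChannel tuning

- Point dataDirs at several paths, each on a different disk, to increase Flume throughput.

- Put checkpointDir and backupCheckpointDir on different disks as well, so that if the checkpoint is corrupted the channel can recover quickly from backupCheckpointDir.

- HDFS Sink

- hdfs.rollInterval = 3600: roll to a new file once the current file has been open for 3600 seconds

- hdfs.rollSize = 134217728: or roll once the file reaches 128 MB

- hdfs.rollCount = 0: do not roll based on the number of events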
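How the three roll settings combine can be modelled as "roll when any enabled threshold is reached, where 0 disables a threshold". The function below is a sketch of that rule, not Flume's implementation; in particular the exact boundary comparison is an assumption.

```python
def should_roll(age_seconds, size_bytes, event_count,
                roll_interval=3600, roll_size=134217728, roll_count=0):
    """Return True when any enabled threshold is reached; 0 disables a threshold."""
    if roll_interval > 0 and age_seconds >= roll_interval:
        return True
    if roll_size > 0 and size_bytes >= roll_size:
        return True
    if roll_count > 0 and event_count >= roll_count:
        return True
    return False


# Young, small file with many events: rollCount = 0 means events never trigger a roll.
print(should_roll(age_seconds=10, size_bytes=1024, event_count=1000000))
# File open for an hour: the time threshold triggers.
print(should_roll(age_seconds=3600, size_bytes=1024, event_count=5))
# File at 128 MB: the size threshold triggers.
print(should_roll(age_seconds=10, size_bytes=128 * 1024 * 1024, event_count=5))
```

With these settings a file is closed after one hour or at 128 MB, whichever comes first, which keeps the number of small files on HDFS down.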


- Configuration file

[omm@simwor03 jobs]$ pwd

/opt/module/flume/jobs

[omm@simwor03 jobs]$ cat kafka-flume-hdfs.conf

## components

a1.sources=r1 r2

a1.channels=c1 c2

a1.sinks=k1 k2

## source1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = simwor01:9092,simwor02:9092,simwor03:9092

a1.sources.r1.kafka.topics=topic_start

## source2

a1.sources.r2.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r2.batchSize = 5000

a1.sources.r2.batchDurationMillis = 2000

a1.sources.r2.kafka.bootstrap.servers = simwor01:9092,simwor02:9092,simwor03:9092

a1.sources.r2.kafka.topics=topic_event

## channel1

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume/jobs/kafka-flume-hdfs/checkpoint/topic-start

a1.channels.c1.dataDirs = /opt/module/flume/jobs/kafka-flume-hdfs/data/topic-start

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1000000

a1.channels.c1.keep-alive = 6

## channel2

a1.channels.c2.type = file

a1.channels.c2.checkpointDir = /opt/module/flume/jobs/kafka-flume-hdfs/checkpoint/topic-event

a1.channels.c2.dataDirs = /opt/module/flume/jobs/kafka-flume-hdfs/data/topic-event

a1.channels.c2.maxFileSize = 2146435071

a1.channels.c2.capacity = 1000000

a1.channels.c2.keep-alive = 6

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /origin_data/gmall/log/topic_start/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = logstart-

##sink2

a1.sinks.k2.type = hdfs

a1.sinks.k2.hdfs.path = /origin_data/gmall/log/topic_event/%Y-%m-%d

a1.sinks.k2.hdfs.filePrefix = logevent-

## avoid producing many small files

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k2.hdfs.rollInterval = 10

a1.sinks.k2.hdfs.rollSize = 134217728

a1.sinks.k2.hdfs.rollCount = 0

## write output files as a compressed stream, using the lzop codec

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k2.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = lzop

a1.sinks.k2.hdfs.codeC = lzop

## wiring

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

a1.sources.r2.channels = c2

a1.sinks.k2.channel= c2

[omm@simwor03 jobs]$

# Start the kafka -> flume -> hdfs collection job

[omm@simwor03 ~]$ /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/jobs/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE

# Generate new logs

[omm@simwor01 ~]$ genlog

[omm@simwor01 ~]$ ps -ef | grep file-flume-kafka

...

org.apache.flume.node.Application --conf-file /opt/module/flume/jobs/file-flume-kafka.conf --name a1flume-kafka

...

[omm@simwor01 ~]$

# Verify on HDFS

[omm@simwor03 ~]$ hdfs dfs -ls /origin_data/gmall/log

Found 2 items

drwxr-xr-x - omm supergroup 0 2021-05-16 15:26 /origin_data/gmall/log/topic_event

drwxr-xr-x - omm supergroup 0 2021-05-16 15:26 /origin_data/gmall/log/topic_start

[omm@simwor03 ~]$ hdfs dfs -ls /origin_data/gmall/log/topic_event/2021-05-16

Found 1 items

-rw-r--r-- 1 omm supergroup 310973 2021-05-16 15:27 /origin_data/gmall/log/topic_event/2021-05-16/logevent-.1621150013089.lzo

[omm@simwor03 ~]$

- Start/stop script

[omm@simwor03 ~]$ cat bin/kafka-flume-hdfs

#! /bin/bash

case $1 in

"start"){

for i in simwor03

do

echo " --------启动 $i 消费flume-------"

ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/jobs/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE &>/opt/module/flume/jobs/kafka-flume-hdfs.log &"

done

};;

"stop"){

for i in simwor03

do

echo " --------停止 $i 消费flume-------"

ssh $i "ps -ef | grep kafka-flume-hdfs | grep -v grep |awk '{print \$2}' | xargs -n1 kill"

done

};;

esac

[omm@simwor03 ~]$

1.4 Data Import

1.4.1 Installing Sqoop

- Prepare the package

[omm@simwor01 soft]$ ll | grep sqoop

-rw-r--r--. 1 omm omm 16870735 May 11 21:00 sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

[omm@simwor01 soft]$ tar -zxf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz -C /opt/module/

[omm@simwor01 soft]$ ln -s /opt/module/sqoop-1.4.6.bin__hadoop-2.0.4-alpha /opt/module/sqoop

[omm@simwor01 soft]$ cd /opt/module/sqoop

[omm@simwor01 sqoop]$

- Edit the configuration file

[omm@simwor01 sqoop]$ cd conf/

[omm@simwor01 conf]$ cp sqoop-env-template.sh sqoop-env.sh

[omm@simwor01 conf]$ vi sqoop-env.sh

[omm@simwor01 conf]$ cat sqoop-env.sh

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/opt/module/hadoop

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/opt/module/hadoop

#set the path to where bin/hbase is available

#export HBASE_HOME=

#Set the path to where bin/hive is available

export HIVE_HOME=/opt/module/hive

#Set the path for where zookeper config dir is

export ZOOKEEPER_HOME=/opt/module/zookeeper

export ZOOCFGDIR=/opt/module/zookeeper/conf

[omm@simwor01 conf]$

- Add the MySQL JDBC driver jar

[omm@simwor01 ~]$ cd /opt/soft/

[omm@simwor01 soft]$ cp mysql-connector-java-5.1.48.jar /opt/module/sqoop/lib/

[omm@simwor01 soft]$

- Add environment variables and verify

[omm@simwor01 ~]$ sudo vim /etc/profile.d/omm_env.sh

[omm@simwor01 ~]$ source /etc/profile.d/omm_env.sh

[omm@simwor01 ~]$ sqoop help

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

[omm@simwor01 ~]$

- First Sqoop command

[omm@simwor01 ~]$ sqoop list-databases --connect jdbc:mysql://simwor01:3306/ --username root --password abcd1234..

information_schema

mysql

performance_schema

sys

[omm@simwor01 ~]$

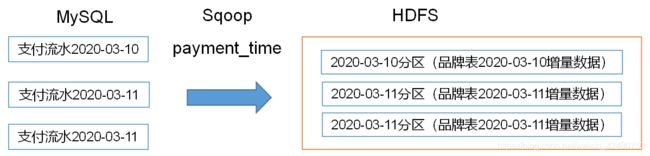

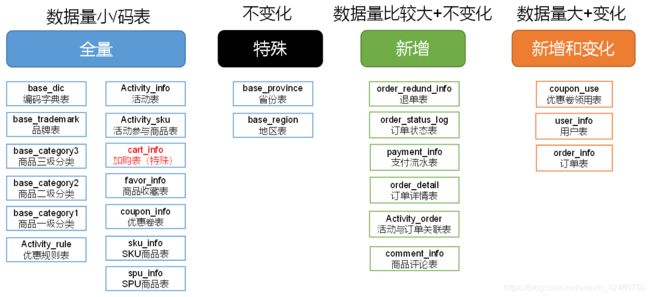

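1.4.2 Data Synchronization Strategies

- Types

- Full table: stores the complete data set.

- Incremental table: stores only newly added rows.

- New-and-changed table: stores newly added rows and changed rows.

- Special table: only needs to be stored once.

- Full synchronization

A daily full load stores one complete copy of the data each day as a partition.

It suits tables that are small and that see both new rows inserted and existing rows modified every day.

- Incremental synchronization

A daily incremental load stores only that day's new rows as a partition.

It suits large tables that only ever receive new rows.

- New-and-changed synchronization

- A daily new-and-changed load stores the rows whose creation time or operation time falls on the current day.

- It suits large tables that receive both inserts and updates.

- Special strategy

- Real-world dimensions: dimensions that never change (such as gender, region, ethnicity, political affiliation, shoe size) can be stored once as fixed values.

- Date dimension: one year or several years of dates can be loaded in a single pass.

- Region dimension: the province table and the region table.

- Synchronization strategy per table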
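One way to make the per-table choice explicit is a small lookup that turns a strategy into the filter used for the daily import. The sketch below lists only four representative tables; their grouping is inferred from the WHERE clauses in the import script of 1.4.3, and the names SYNC_PLAN and where_clause are illustrative.

```python
from datetime import date

# Table -> (strategy, date columns used to select the day's rows).
SYNC_PLAN = {
    "sku_info": ("full", []),
    "order_status_log": ("incremental", ["operate_time"]),
    "order_info": ("new_and_changed", ["create_time", "operate_time"]),
    "base_province": ("special", []),
}


def where_clause(table, do_date):
    """Build the WHERE clause that selects the rows to import for do_date."""
    strategy, columns = SYNC_PLAN[table]
    if strategy in ("full", "special"):
        return "where 1=1"          # take every row
    day = do_date.isoformat()
    tests = ["date_format({}, '%Y-%m-%d')='{}'".format(col, day) for col in columns]
    return "where (" + " or ".join(tests) + ")"


for table in SYNC_PLAN:
    print(table, "->", where_clause(table, date(2021, 3, 10)))
```

Full and special tables differ only in how often they are imported: special tables are loaded once, full tables every day.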

1.4.3 mysql -> sqoop -> hdfs

- Data import script

#! /bin/bash

sqoop=/opt/module/sqoop/bin/sqoop

do_date=`date -d '-1 day' +%F`

# Use the import date passed as the second argument if given; otherwise import yesterday's data.
if [[ -n "$2" ]]; then
    do_date=$2
fi

# Hive stores NULL as "\N" in its underlying files, while MySQL stores NULL as NULL.
# To keep the two sides consistent:
#   - on export, use --input-null-string and --input-null-non-string;
#   - on import, use --null-string and --null-non-string.
# (Comments must stay outside the backslash-continued command below.)
import_data(){
$sqoop import \
--connect jdbc:mysql://simwor01:3306/gmall \
--username root \
--password abcd1234.. \
--target-dir /origin_data/gmall/db/$1/$do_date \
--delete-target-dir \
--query "$2 and \$CONDITIONS" \
--num-mappers 1 \
--fields-terminated-by '\t' \
--null-string '\\N' \
--null-non-string '\\N'
}

import_order_info(){

import_data order_info "select

id,

final_total_amount,

order_status,

user_id,

out_trade_no,

create_time,

operate_time,

province_id,

benefit_reduce_amount,

original_total_amount,

feight_fee

from order_info

where (date_format(create_time,'%Y-%m-%d')='$do_date'

or date_format(operate_time,'%Y-%m-%d')='$do_date')"

}

import_coupon_use(){

import_data coupon_use "select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time

from coupon_use

where (date_format(get_time,'%Y-%m-%d')='$do_date'

or date_format(using_time,'%Y-%m-%d')='$do_date'

or date_format(used_time,'%Y-%m-%d')='$do_date')"

}

import_order_status_log(){

import_data order_status_log "select

id,

order_id,

order_status,

operate_time

from order_status_log

where date_format(operate_time,'%Y-%m-%d')='$do_date'"

}

import_activity_order(){

import_data activity_order "select

id,

activity_id,

order_id,

create_time

from activity_order

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_user_info(){

import_data "user_info" "select

id,

name,

birthday,

gender,

email,

user_level,

create_time,

operate_time

from user_info

where (DATE_FORMAT(create_time,'%Y-%m-%d')='$do_date'

or DATE_FORMAT(operate_time,'%Y-%m-%d')='$do_date')"

}

import_order_detail(){

import_data order_detail "select

od.id,

order_id,

user_id,

sku_id,

sku_name,

order_price,

sku_num,

od.create_time

from order_detail od

join order_info oi

on od.order_id=oi.id

where DATE_FORMAT(od.create_time,'%Y-%m-%d')='$do_date'"

}

import_payment_info(){

import_data "payment_info" "select

id,

out_trade_no,

order_id,

user_id,

alipay_trade_no,

total_amount,

subject,

payment_type,

payment_time

from payment_info

where DATE_FORMAT(payment_time,'%Y-%m-%d')='$do_date'"

}

import_comment_info(){

import_data comment_info "select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

comment_txt,

create_time

from comment_info

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_order_refund_info(){

import_data order_refund_info "select

id,

user_id,

order_id,

sku_id,

refund_type,

refund_num,

refund_amount,

refund_reason_type,

create_time

from order_refund_info

where date_format(create_time,'%Y-%m-%d')='$do_date'"

}

import_sku_info(){

import_data sku_info "select

id,

spu_id,

price,

sku_name,

sku_desc,

weight,

tm_id,

category3_id,

create_time

from sku_info where 1=1"

}

import_base_category1(){

import_data "base_category1" "select

id,

name

from base_category1 where 1=1"

}

import_base_category2(){

import_data "base_category2" "select

id,

name,

category1_id

from base_category2 where 1=1"

}

import_base_category3(){

import_data "base_category3" "select

id,

name,

category2_id

from base_category3 where 1=1"

}

import_base_province(){

import_data base_province "select

id,

name,

region_id,

area_code,

iso_code

from base_province

where 1=1"

}

import_base_region(){

import_data base_region "select

id,

region_name

from base_region

where 1=1"

}

import_base_trademark(){

import_data base_trademark "select

tm_id,

tm_name

from base_trademark

where 1=1"

}

import_spu_info(){

import_data spu_info "select

id,

spu_name,

category3_id,

tm_id

from spu_info

where 1=1"

}

import_favor_info(){

import_data favor_info "select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from favor_info

where 1=1"

}

import_cart_info(){

import_data cart_info "select

id,

user_id,

sku_id,

cart_price,

sku_num,

sku_name,

create_time,

operate_time,

is_ordered,

order_time

from cart_info

where 1=1"

}

import_coupon_info(){

import_data coupon_info "select

id,

coupon_name,

coupon_type,

condition_amount,

condition_num,

activity_id,

benefit_amount,

benefit_discount,

create_time,

range_type,

spu_id,

tm_id,

category3_id,

limit_num,

operate_time,

expire_time

from coupon_info

where 1=1"

}

import_activity_info(){

import_data activity_info "select

id,

activity_name,

activity_type,

start_time,

end_time,

create_time

from activity_info

where 1=1"

}

import_activity_rule(){

import_data activity_rule "select

id,

activity_id,

condition_amount,

condition_num,

benefit_amount,

benefit_discount,

benefit_level

from activity_rule

where 1=1"

}

import_base_dic(){

import_data base_dic "select

dic_code,

dic_name,

parent_code,

create_time,

operate_time

from base_dic

where 1=1"

}

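# The first argument selects which tables to import:

# 1. first : full import of all 23 tables (initial load)

# 2. all : every table except the unchanging ones (base_province, base_region)

# 3. any other value : import only the named table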

case $1 in

"order_info")

import_order_info

;;

"base_category1")

import_base_category1

;;

"base_category2")

import_base_category2

;;

"base_category3")

import_base_category3

;;

"order_detail")

import_order_detail

;;

"sku_info")

import_sku_info

;;

"user_info")

import_user_info

;;

"payment_info")

import_payment_info

;;

"base_province")

import_base_province

;;

"base_region")

import_base_region

;;

"base_trademark")

import_base_trademark

;;

"activity_info")

import_activity_info

;;

"activity_order")

import_activity_order

;;

"cart_info")

import_cart_info

;;

"comment_info")

import_comment_info

;;

"coupon_info")

import_coupon_info

;;

"coupon_use")

import_coupon_use

;;

"favor_info")

import_favor_info

;;

"order_refund_info")

import_order_refund_info

;;

"order_status_log")

import_order_status_log

;;

"spu_info")

import_spu_info

;;

"activity_rule")

import_activity_rule

;;

"base_dic")

import_base_dic

;;

"first")

import_base_category1

import_base_category2

import_base_category3

import_order_info

import_order_detail

import_sku_info

import_user_info

import_payment_info

import_base_province

import_base_region

import_base_trademark

import_activity_info

import_activity_order

import_cart_info

import_comment_info

import_coupon_use

import_coupon_info

import_favor_info

import_order_refund_info

import_order_status_log

import_spu_info

import_activity_rule

import_base_dic

;;

"all")

import_base_category1

import_base_category2

import_base_category3

import_order_info

import_order_detail

import_sku_info

import_user_info

import_payment_info

import_base_trademark

import_activity_info

import_activity_order

import_cart_info

import_comment_info

import_coupon_use

import_coupon_info

import_favor_info

import_order_refund_info

import_order_status_log

import_spu_info

import_activity_rule

import_base_dic

;;

esac

- Run

nohup mysql-sqoop-hdfs first 2021-03-10 &> /tmp/mysql-sqoop-hdfs.log &

...

2021-05-17 11:02:09,772 INFO mapreduce.Job: Running job: job_1621220373303_0002

2021-05-17 11:02:15,930 INFO mapreduce.Job: Job job_1621220373303_0002 running in uber mode : false

2021-05-17 11:02:15,935 INFO mapreduce.Job: map 0% reduce 0%

2021-05-17 11:02:26,229 INFO mapreduce.Job: map 100% reduce 0%

2021-05-17 11:02:27,247 INFO mapreduce.Job: Job job_1621220373303_0002 completed successfully

...

- Verification

1.5 Cluster Scripts

- Script

[omm@simwor01 ~]$ cat bin/cluster

#! /bin/bash

case $1 in
"start"){
    echo " -------- 启动 集群 -------"
    echo " -------- 启动 hadoop集群 -------"
    ssh simwor01 "/opt/module/hadoop/sbin/start-dfs.sh"
    ssh simwor02 "/opt/module/hadoop/sbin/start-yarn.sh"

    # start the Zookeeper cluster
    /home/omm/bin/zk start
    sleep 4s;

    # start the Kafka cluster
    /home/omm/bin/kafka start
    sleep 10s;

    # start the Flume collection agents
    /home/omm/bin/file-flume-kafka start

    # start the Flume consumer agent
    /home/omm/bin/kafka-flume-hdfs start
};;
"stop"){
    echo " -------- 停止 集群 -------"

    # stop the Flume consumer agent
    /home/omm/bin/kafka-flume-hdfs stop

    # stop the Flume collection agents
    /home/omm/bin/file-flume-kafka stop

    # stop the Kafka cluster
    /home/omm/bin/kafka stop
    sleep 10s;

    # stop the Zookeeper cluster
    /home/omm/bin/zk stop

    echo " -------- 停止 hadoop集群 -------"
    ssh simwor02 "/opt/module/hadoop/sbin/stop-yarn.sh"
    ssh simwor01 "/opt/module/hadoop/sbin/stop-dfs.sh"
};;
esac

[omm@simwor01 ~]$

- Start the whole cluster

[omm@simwor01 ~]$ cluster start

-------- 启动 集群 -------

-------- 启动 hadoop集群 -------

Starting namenodes on [simwor01]

Starting datanodes

Starting secondary namenodes [simwor03]

Starting resourcemanager

Starting nodemanagers

------------- simwor01 -------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

------------- simwor02 -------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

------------- simwor03 -------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

--------启动 simwor01 Kafka-------

--------启动 simwor02 Kafka-------

--------启动 simwor03 Kafka-------

--------启动 simwor01 采集flume-------

--------启动 simwor02 采集flume-------

--------启动 simwor03 消费flume-------

[omm@simwor01 ~]$

[omm@simwor01 ~]$ xcall jps

--------- simwor01 ----------

13361 Kafka

12548 DataNode

12391 NameNode

12827 NodeManager

13453 Application

12974 QuorumPeerMain

13566 Jps

--------- simwor02 ----------

6929 Jps

5666 DataNode

6820 Application

6742 Kafka

5847 ResourceManager

6348 QuorumPeerMain

4990 Application

6159 NodeManager

--------- simwor03 ----------

4742 DataNode

4854 SecondaryNameNode

4940 NodeManager

5468 Kafka

5085 QuorumPeerMain

5551 Application

5663 Jps

[omm@simwor01 ~]$

- Verification

# Before generating logs

[omm@simwor01 ~]$ hdfs dfs -ls /origin_data/gmall/log/topic_event/2021-05-16

Found 1 items

-rw-r--r-- 1 omm supergroup 321161 2021-05-16 16:17 /origin_data/gmall/log/topic_event/2021-05-16/logevent-.1621153059068.lzo

# Generate logs

[omm@simwor01 ~]$ genlog

# After generating logs

[omm@simwor01 ~]$ hdfs dfs -ls /origin_data/gmall/log/topic_event/2021-05-16

Found 2 items

-rw-r--r-- 1 omm supergroup 321161 2021-05-16 16:17 /origin_data/gmall/log/topic_event/2021-05-16/logevent-.1621153059068.lzo

-rw-r--r-- 1 omm supergroup 438807 2021-05-16 16:20 /origin_data/gmall/log/topic_event/2021-05-16/logevent-.1621153218732.lzo

[omm@simwor01 ~]$

- Stop the whole cluster

[omm@simwor01 ~]$ cluster stop

-------- 停止 集群 -------

--------停止 simwor03 消费flume-------

--------停止 simwor02 采集flume-------

--------停止 simwor01 采集flume-------

/home/omm/bin/cluster: line 3: 18056 Killed /home/omm/bin/file-flume-kafka stop

--------停止 simwor01 Kafka-------

--------停止 simwor02 Kafka-------

--------停止 simwor03 Kafka-------

------------- simwor01 -------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

------------- simwor02 -------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

------------- simwor03 -------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

-------- 停止 hadoop集群 -------

Stopping nodemanagers

Stopping resourcemanager

Stopping namenodes on [simwor01]

Stopping datanodes

Stopping secondary namenodes [simwor03]

[omm@simwor01 ~]$

[omm@simwor01 ~]$ xcall jps

--------- simwor01 ----------

18634 Jps

--------- simwor02 ----------

11106 Jps

--------- simwor03 ----------

8546 Jps

[omm@simwor01 ~]$

1.6 Read/Write Benchmarks

1.6.1 HDFS

[omm@simwor02 ~]$ hadoop jar /opt/module/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.3-tests.jar TestDFSIO -write -nrFiles 10 -fileSize 128MB

...

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: ----- TestDFSIO ----- : write

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: Date & time: Thu May 13 21:15:31 CST 2021

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: Number of files: 10

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: Total MBytes processed: 1280

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: Throughput mb/sec: 37.64

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: Average IO rate mb/sec: 72.93

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: IO rate std deviation: 51.95

2021-05-13 21:15:31,325 INFO fs.TestDFSIO: Test exec time sec: 39.9

2021-05-13 21:15:31,325 INFO fs.TestDFSIO:

[omm@simwor02 ~]$

[omm@simwor02 ~]$ hadoop jar /opt/module/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.3-tests.jar TestDFSIO -read -nrFiles 10 -fileSize 128MB

...

2021-05-13 21:18:36,111 INFO fs.TestDFSIO: ----- TestDFSIO ----- : read

2021-05-13 21:18:36,112 INFO fs.TestDFSIO: Date & time: Thu May 13 21:18:36 CST 2021

2021-05-13 21:18:36,112 INFO fs.TestDFSIO: Number of files: 10

2021-05-13 21:18:36,112 INFO fs.TestDFSIO: Total MBytes processed: 1280

2021-05-13 21:18:36,112 INFO fs.TestDFSIO: Throughput mb/sec: 76.6

2021-05-13 21:18:36,113 INFO fs.TestDFSIO: Average IO rate mb/sec: 97.63

2021-05-13 21:18:36,113 INFO fs.TestDFSIO: IO rate std deviation: 55.87

2021-05-13 21:18:36,113 INFO fs.TestDFSIO: Test exec time sec: 29.07

2021-05-13 21:18:36,113 INFO fs.TestDFSIO:

[omm@simwor02 ~]$

[omm@simwor02 ~]$ hadoop jar /opt/module/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.3-tests.jar TestDFSIO -clean

2021-05-13 21:19:25,350 INFO fs.TestDFSIO: TestDFSIO.1.8

2021-05-13 21:19:25,352 INFO fs.TestDFSIO: nrFiles = 1

2021-05-13 21:19:25,352 INFO fs.TestDFSIO: nrBytes (MB) = 1.0

2021-05-13 21:19:25,352 INFO fs.TestDFSIO: bufferSize = 1000000

2021-05-13 21:19:25,352 INFO fs.TestDFSIO: baseDir = /benchmarks/TestDFSIO

2021-05-13 21:19:25,872 INFO fs.TestDFSIO: Cleaning up test files

[omm@simwor02 ~]$
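As a rough cross-check on the runs above, the aggregate wall-clock rate can be derived from "Total MBytes processed" and "Test exec time". The sketch below only does that arithmetic; `wall_clock_mb_per_sec` is a hypothetical helper name. This ratio is not the same statistic as the "Throughput mb/sec" line that TestDFSIO prints, which, as far as I know, is based on per-task I/O time rather than total job time.

```shell
#!/bin/bash
# Hypothetical helper: total MB divided by job wall-clock seconds.
wall_clock_mb_per_sec() {
    awk -v mb="$1" -v sec="$2" 'BEGIN { printf "%.2f\n", mb / sec }'
}

# Figures copied from the write and read runs above.
echo "write: $(wall_clock_mb_per_sec 1280 39.9) MB/s"
echo "read:  $(wall_clock_mb_per_sec 1280 29.07) MB/s"
```

On these figures the write run works out to about 32 MB/s and the read run to about 44 MB/s across the whole job, including job startup overhead.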

1.6.2 Kafka

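- Write stress test

- record-size: the size of each message, in bytes.

- num-records: the total number of messages to send.

- throughput: the number of messages per second; -1 disables throttling, which measures the producer's maximum throughput.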

[omm@simwor01 logs]$ kafka-producer-perf-test.sh --topic test --record-size 100 --num-records 100000 --throughput -1 --producer-props bootstrap.servers=simwor01:9092,simwor02:9092,simwor03:9092

100000 records sent, 105263.157895 records/sec (10.04 MB/sec), 243.79 ms avg latency, 327.00 ms max latency, 270 ms 50th, 305 ms 95th, 323 ms 99th, 326 ms 99.9th.

[omm@simwor01 logs]$
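The MB/sec figure in the result line follows from the other two numbers: records per second multiplied by the record size, converted to mebibytes. A small sketch of that arithmetic (`producer_mb_per_sec` is a hypothetical helper, not part of Kafka):

```shell
#!/bin/bash
# Hypothetical helper: records/sec * bytes per record -> MB/sec (1 MB = 1024 * 1024 bytes).
producer_mb_per_sec() {
    awk -v rps="$1" -v size="$2" 'BEGIN { printf "%.2f\n", rps * size / 1024 / 1024 }'
}

# Values taken from the producer run above: 105263.157895 records/sec, 100-byte records.
producer_mb_per_sec 105263.157895 100   # prints 10.04
```

With 100-byte records the byte rate is small even at over 100,000 records/sec, so a larger record-size is needed to approach the network or disk limits.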

- Read stress test

- --fetch-size: the amount of data requested in each fetch.

- --messages: the total number of messages to consume.

[omm@simwor01 logs]$ kafka-consumer-perf-test.sh --broker-list simwor01:9092,simwor02:9092,simwor03:9092 --topic test --fetch-size 10000 --messages 100000 --threads 1

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2021-05-16 13:33:16:184, 2021-05-16 13:33:17:248, 9.5399, 8.9661, 100033, 94015.9774, 1621143196431, -1621143195367, -0.0000, -0.0001

[omm@simwor01 logs]$
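The useful columns in this output are MB.sec and nMsg.sec, and both can be reproduced from the start and end timestamps. The sketch below redoes that arithmetic with a hypothetical helper `rate_per_sec`. It also shows that the fetch.time.ms value equals the elapsed time minus rebalance.time.ms; in this run rebalance.time.ms looks like an epoch timestamp rather than a duration, which would explain why the fetch.* columns are negative and are best ignored.

```shell
#!/bin/bash
# Hypothetical helper: total / elapsed seconds, four decimals like the tool's output.
rate_per_sec() {
    awk -v total="$1" -v ms="$2" 'BEGIN { printf "%.4f\n", total / (ms / 1000) }'
}

elapsed_ms=1064                       # 13:33:17:248 minus 13:33:16:184
rate_per_sec 9.5399 "$elapsed_ms"     # MB.sec   -> 8.9661
rate_per_sec 100033 "$elapsed_ms"     # nMsg.sec -> 94015.9774

# fetch.time.ms as reported: elapsed time minus rebalance.time.ms
echo $(( elapsed_ms - 1621143196431 ))   # -> -1621143195367
```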

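II. Data Warehouse Overview

2.1 Normal Forms

The normal forms in common use are: first normal form (1NF), second normal form (2NF), third normal form (3NF), Boyce-Codd normal form (BCNF), fourth normal form (4NF), and fifth normal form (5NF).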

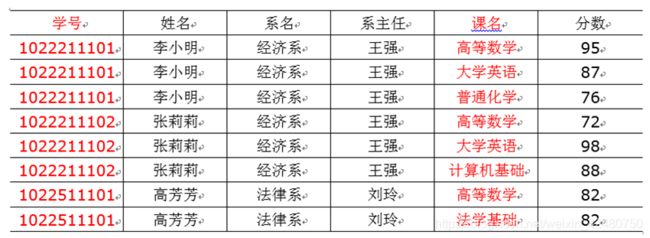

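- Full functional dependency

- Let X and Y be attribute sets of relation R such that X→Y. If no proper subset X' of X satisfies X'→Y, then Y is fully functionally dependent on X.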

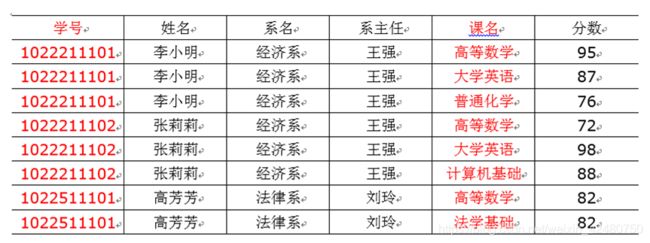

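- Example: (student ID, course) determines score, but student ID alone does not, so score is fully dependent on (student ID, course).

- In short: A and B together determine C, but neither A nor B alone does, so C is fully dependent on AB.

- Partial functional dependency

- If Y is functionally dependent on X but not fully functionally dependent on X, then Y is partially functionally dependent on X.

- Example: (student ID, course) determines name, but student ID alone already determines name, so name is partially dependent on (student ID, course).

- In short: AB determines C, and A alone (or B alone) also determines C, so C is partially dependent on AB.

- Transitive functional dependency

- Let X, Y, and Z be distinct attribute sets of relation R. If X→Y, Y does not determine X, and Y→Z, then Z is transitively dependent on X.

- Example: student ID determines department name and department name determines department head, but department name does not determine student ID; the department head depends directly on the department name. So department head is transitively dependent on student ID.

- In short: A determines B and B determines C, but B does not determine A, so C is transitively dependent on A.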
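The dependency definitions above can be checked mechanically on sample data: X→Y holds if no two rows agree on X but differ on Y. The following is a minimal sketch, assuming tab-separated rows on standard input; `fd_holds`, the column layout (student ID, course, name, score), and the sample rows are all made up for illustration.

```shell
#!/bin/bash
# fd_holds <determinant columns, comma-separated> <dependent column>
# Reads tab-separated rows from stdin; exits 0 if the dependency holds, 1 otherwise.
fd_holds() {
    awk -F'\t' -v keys="$1" -v dep="$2" '
        BEGIN { n = split(keys, k, ","); bad = 0 }
        {
            key = ""
            for (i = 1; i <= n; i++) key = key SUBSEP $(k[i])
            # Same determinant seen before with a different dependent value: violated.
            if ((key in seen) && seen[key] != $dep) bad = 1
            seen[key] = $dep
        }
        END { exit bad }
    '
}

# Hypothetical rows: student ID, course, name, score
rows=$(printf '%s\t%s\t%s\t%s\n' 1 math Ann 90 1 art Ann 85 2 math Bob 90)

report() {
    if printf '%s\n' "$rows" | fd_holds "$1" "$2"; then
        echo "columns ($1) -> column $2: holds"
    else
        echo "columns ($1) -> column $2: violated"
    fi
}

report 1,2 4   # (student ID, course) -> score
report 1 4     # student ID -> score
report 1 3     # student ID -> name
```

In this sample, score depends on the full key (student ID, course) but not on student ID alone, which is a full dependency; name is already determined by student ID, so it is only partially dependent on the composite key.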

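The higher the normal form, the lower the data redundancy, but the more complex the relationships between tables (retrieving data requires joins to assemble the final result).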

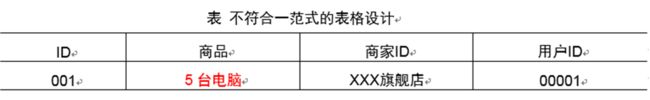

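- First normal form

The core rule of 1NF: attributes are atomic and cannot be split further.

- Second normal form

The core rule of 2NF: no partial functional dependencies.

The table above clearly contains a partial dependency. Its primary key is (student ID, course name); score is fully dependent on (student ID, course name), but name is not, because student ID alone determines it.

- Third normal form

The core rule of 3NF: no transitive functional dependencies.

The table above contains a transitive functional dependency: student ID → department name → department head, while the department head does not determine the student ID.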

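2.2 Modeling

| Aspect | OLTP | OLAP |
|---|---|---|
| Reads | Each query returns a small number of records | Aggregates over large numbers of records |
| Writes | Random, low-latency writes of user input | Bulk imports |
| Typical users | End users, Java EE applications | Internal analysts, decision support |
| Data represents | The latest state | The history of state over time |
| Data volume | GB | TB to PB |

- Data processing today falls broadly into two categories: online transaction processing (OLTP) and online analytical processing (OLAP).

- OLTP is the main use of traditional relational databases: basic, day-to-day transaction processing such as bank transactions.

- OLAP is the main use of data warehouse systems: it supports complex analytical operations, focuses on decision support, and returns results that are easy to interpret.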

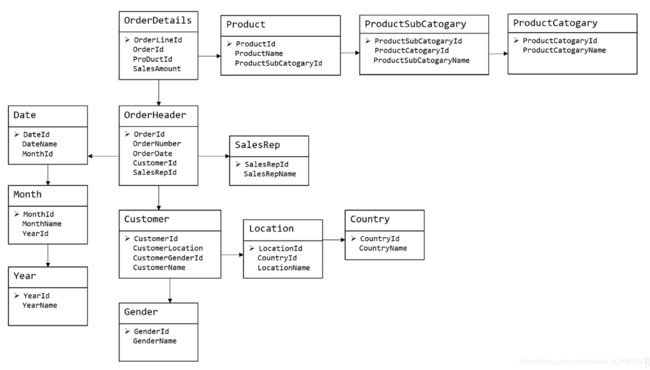

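- Relational modeling

- The relational model, shown in the figure, strictly follows third normal form (3NF).

- As the figure shows, it is loosely coupled and fragmented: there are many physical tables, but little data redundancy.

- Because the data is spread across many tables, it can be combined flexibly, which makes the model functionally versatile.

- The relational model is used mainly in OLTP systems. To guarantee consistency and avoid redundancy, most business-system tables follow third normal form.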

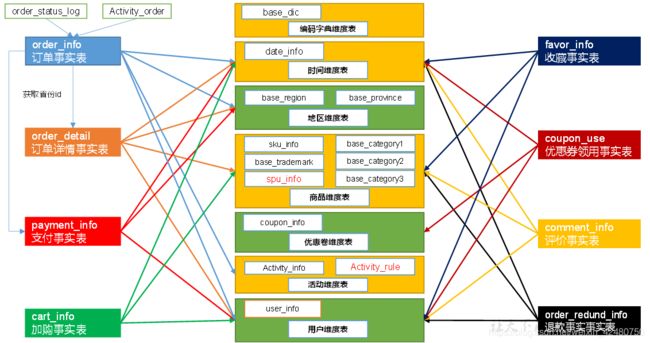

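- Dimensional modeling

- The dimensional model, shown in the figure, is used mainly in OLAP systems.

- Tables are usually organized around a central fact table and are business-oriented. Some data redundancy is accepted in exchange for easy access to the data.

- The relational model has little redundancy, but analytical queries across large volumes of data require joining many tables, which greatly reduces execution efficiency.

- For this reason data warehouse projects usually adopt dimensional modeling and organize all tables into two kinds: fact tables and dimension tables.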

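2.3 Table Design

- Dimension tables

A dimension table generally holds descriptive information about facts. Each dimension table corresponds to an object or concept in the real world, for example user, product, date, or region.

- Dimension tables are wide (many attributes, many columns).

- Compared with fact tables they have relatively few rows, typically fewer than 100,000.

- Their content is relatively static, for example code tables.

- Fact tables

Each row of a fact table represents one business event (placing an order, payment, refund, review, and so on). The term "fact" refers to the measures of the business event (values that can be counted or summed, such as occurrences, quantities, or amounts).

- Each fact-table row contains additive numeric measures and foreign keys to dimension tables. There are usually two or more foreign keys, and together they express a many-to-many relationship among the dimension tables.

- Characteristics of fact tables: very large; relatively narrow (few columns); frequently changing, with many new rows added every day.

- Transaction fact table: one row per transaction or event, for example one sales order record or one payment record. Once the transaction is committed and the row is inserted, the data is never changed; the table is updated incrementally.

- Periodic snapshot fact table: does not keep every record, only the data at fixed intervals, for example daily or monthly sales, or monthly account balances.

- Accumulating snapshot fact table: tracks how a business fact changes over time. For example, the warehouse may accumulate the timestamps of each stage of an order, from placement through packing, shipping, and receipt, to track the order's progress through its lifecycle. The fact-table row is updated as the process advances.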

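2.4 Layering

- ODS layer

- Keep the raw data unmodified so that it also serves as a backup.

- Compress the data to save disk space (for example, 100 GB of raw data can compress to roughly 10 GB).

- Create partitioned tables to avoid full-table scans later.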

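- DWD layer

The DWD layer builds the dimensional model in four steps: select the business process → declare the grain → identify the dimensions → identify the facts.

- Select the business process: from the business system, pick the business lines of interest, such as ordering, payment, refunds, and logistics. Each business line corresponds to one fact table.

- Declare the grain

- Grain is the level of detail (or aggregation) at which a warehouse table stores its data.

- Declaring the grain means defining precisely what one row of the fact table represents. Choose the finest grain possible so that the table can serve as many kinds of requirements as possible.

- A typical grain declaration: each line item in an order is one row of the order fact table.

- Identify the dimensions: dimensions describe the business facts, mainly the "who, where, and when".

- Identify the facts

- Here **"fact" means a business measure**, such as order amount or number of orders.

- In the DWD layer, modeling is driven by business processes: for each one, build a detail-level fact table at the finest grain, based on that process's characteristics. Fact tables may be moderately widened (denormalized).

This completes the dimensional modeling of the warehouse; the DWS, DWT, and ADS layers that follow are not part of dimensional modeling.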

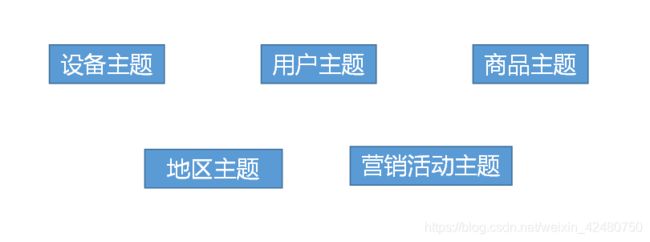

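Both DWS and DWT build wide tables, and the wide tables are organized by subject. A subject is an angle from which to view the business and corresponds to a dimension table.

| | Time | User | Region | Product | Coupon | Activity | Code | Measure |
|---|---|---|---|---|---|---|---|---|
| Order | √ | √ | √ | | | √ | | Quantity / Amount |
| Order detail | √ | √ | | √ | | | | Quantity / Amount |
| Payment | √ | √ | | | | | | Amount |
| Add to cart | √ | √ | | √ | | | | Quantity / Amount |
| Favorite | √ | √ | | √ | | | | Count |
| Review | √ | √ | | √ | | | | Count |
| Refund | √ | √ | | √ | | | | Quantity / Amount |
| Coupon claim | √ | √ | | | √ | | | Count |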

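- DWS layer

Aggregates each subject's activity for the current day; it feeds the subject wide tables in the DWT layer.

- DWT layer

Driven by the subjects being analyzed and by the metric requirements of upstream applications and products, builds a full (cumulative) wide table for each subject.

- ADS layer

Analyzes the metrics for each major subject of the e-commerce system.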

III. Building the Data Warehouse

3.1 ODS Layer

- User behavior data

- Load user event logs into the raw event table

hive (default)> create database gmall;

hive (default)> use gmall;

hive (gmall)> drop table if exists ods_event_log;

hive (gmall)> CREATE EXTERNAL TABLE ods_event_log(`line` string)

> PARTITIONED BY (`dt` string)

> STORED AS

> INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

> OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

> LOCATION '/warehouse/gmall/ods/ods_event_log';

hive (gmall)> load data inpath '/origin_data/gmall/log/topic_event/2021-05-16' into table gmall.ods_event_log partition(dt='2021-05-16');

hive (gmall)> select * from ods_event_log limit 2;

ods_event_log.line ods_event_log.dt

1621150009268|{"cm":{"ln":"-50.6","sv":"V2.6.1","os":"8.0.8","g":"[email protected]","mid":"0","nw":"WIFI","l":"pt","vc":"0","hw":"640*960","ar":"MX","uid":"0","t":"1621136246955","la":"24.8","md":"HTC-11","vn":"1.1.8","ba":"HTC","sr":"H"},"ap":"app","et":[{"ett":"1621138028818","en":"display","kv":{"goodsid":"0","action":"2","extend1":"1","place":"2","category":"73"}},{"ett":"1621077773013","en":"loading","kv":{"extend2":"","loading_time":"21","action":"1","extend1":"","type":"3","type1":"","loading_way":"1"}},{"ett":"1621118651067","en":"notification","kv":{"ap_time":"1621112223280","action":"2","type":"4","content":""}},{"ett":"1621058356736","en":"error","kv":{"errorDetail":"java.lang.NullPointerException\\n at cn.lift.appIn.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)\\n at cn.lift.dfdf.web.AbstractBaseController.validInbound","errorBrief":"at cn.lift.dfdf.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)"}},{"ett":"1621098733764","en":"favorites","kv":{"course_id":9,"id":0,"add_time":"1621147636292","userid":1}},{"ett":"1621096613172","en":"praise","kv":{"target_id":7,"id":4,"type":3,"add_time":"1621113330503","userid":1}}]} 2021-05-16

1621150009275|{"cm":{"ln":"-72.1","sv":"V2.4.2","os":"8.0.5","g":"[email protected]","mid":"1","nw":"3G","l":"es","vc":"19","hw":"1080*1920","ar":"MX","uid":"1","t":"1621067543403","la":"14.1","md":"sumsung-15","vn":"1.3.9","ba":"Sumsung","sr":"Y"},"ap":"app","et":[{"ett":"1621084852590","en":"loading","kv":{"extend2":"","loading_time":"25","action":"3","extend1":"","type":"2","type1":"","loading_way":"1"}},{"ett":"1621134380392","en":"active_background","kv":{"active_source":"1"}},{"ett":"1621113098715","en":"error","kv":{"errorDetail":"java.lang.NullPointerException\\n at cn.lift.appIn.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)\\n at cn.lift.dfdf.web.AbstractBaseController.validInbound","errorBrief":"at cn.lift.dfdf.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)"}},{"ett":"1621065584231","en":"favorites","kv":{"course_id":0,"id":0,"add_time":"1621068899977","userid":1}},{"ett":"1621075696863","en":"praise","kv":{"target_id":8,"id":6,"type":2,"add_time":"1621123273271","userid":3}}]} 2021-05-16

Time taken: 1.2 seconds, Fetched: 2 row(s)

hive (gmall)>

Build the LZO index

[omm@simwor01 bin]$ hadoop jar \

> /opt/module/hadoop/share/hadoop/common/hadoop-lzo-0.4.20.jar \

> com.hadoop.compression.lzo.DistributedLzoIndexer \

> /warehouse/gmall/ods/ods_event_log/dt=2021-05-16

- Load user start logs into the raw start table

drop table if exists ods_start_log;

CREATE EXTERNAL TABLE ods_start_log (`line` string)

PARTITIONED BY (`dt` string)

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_start_log';

load data inpath '/origin_data/gmall/log/topic_start/2021-05-16' into table gmall.ods_start_log partition(dt='2021-05-16');

hadoop jar /opt/module/hadoop/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /warehouse/gmall/ods/ods_start_log/dt=2021-05-16

- Data loading script

Usage: hdfs-log-to-ods 2021-05-16

#!/bin/bash

db=gmall

hive=/opt/module/hive/bin/hive

do_date=`date -d '-1 day' +%F`

if [[ -n "$1" ]]; then

do_date=$1

fi

sql="

load data inpath '/origin_data/gmall/log/topic_start/$do_date' into table ${db}.ods_start_log partition(dt='$do_date');

load data inpath '/origin_data/gmall/log/topic_event/$do_date' into table ${db}.ods_event_log partition(dt='$do_date');

"

$hive -e "$sql"

hadoop jar /opt/module/hadoop/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /warehouse/gmall/ods/ods_start_log/dt=$do_date

hadoop jar /opt/module/hadoop/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /warehouse/gmall/ods/ods_event_log/dt=$do_date
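The script above accepts any string as its date argument, so a typo silently creates a wrong partition. A minimal sketch of a stricter date resolver follows; `resolve_do_date` is a hypothetical helper, and the default branch assumes GNU date, as the script itself does.

```shell
#!/bin/bash
# Hypothetical helper: echo the given YYYY-MM-DD date, or yesterday when no argument is passed.
# Returns 1 (and prints to stderr) when the argument is not in YYYY-MM-DD form.
resolve_do_date() {
    case "$1" in
        "")
            date -d '-1 day' +%F ;;          # GNU date, same call as in the script above
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9])
            printf '%s\n' "$1" ;;
        *)
            echo "invalid date: $1 (expected YYYY-MM-DD)" >&2
            return 1 ;;
    esac
}

resolve_do_date 2021-05-16
```

Inside a loading script this could be used as `do_date=$(resolve_do_date "$1") || exit 1`, so that a malformed argument stops the run before any data is moved. The check covers only the shape of the string, not whether the date actually exists.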

- Business data

- Create tables

-- 3.1.1 Order table (incremental and updated)

drop table if exists ods_order_info;

create external table ods_order_info (

`id` string COMMENT 'order ID',

`final_total_amount` decimal(10,2) COMMENT 'order amount',

`order_status` string COMMENT 'order status',

`user_id` string COMMENT 'user ID',

`out_trade_no` string COMMENT 'payment transaction number',

`create_time` string COMMENT 'creation time',

`operate_time` string COMMENT 'operation time',

`province_id` string COMMENT 'province ID',

`benefit_reduce_amount` decimal(10,2) COMMENT 'discount amount',

`original_total_amount` decimal(10,2) COMMENT 'original amount',

`feight_fee` decimal(10,2) COMMENT 'freight fee'

) COMMENT 'order table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_order_info/';

-- 3.1.2 Order detail table (incremental)

drop table if exists ods_order_detail;

create external table ods_order_detail(

`id` string COMMENT 'order detail ID',

`order_id` string COMMENT 'order ID',

`user_id` string COMMENT 'user ID',

`sku_id` string COMMENT 'product (SKU) ID',

`sku_name` string COMMENT 'product name',

`order_price` decimal(10,2) COMMENT 'product price',

`sku_num` bigint COMMENT 'product quantity',

`create_time` string COMMENT 'creation time'

) COMMENT 'order detail table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_order_detail/';

-- 3.1.3 SKU product table (full)

drop table if exists ods_sku_info;

create external table ods_sku_info(

`id` string COMMENT 'SKU ID',

`spu_id` string COMMENT 'SPU ID',

`price` decimal(10,2) COMMENT 'price',

`sku_name` string COMMENT 'product name',

`sku_desc` string COMMENT 'product description',

`weight` string COMMENT 'weight',

`tm_id` string COMMENT 'brand ID',

`category3_id` string COMMENT 'category ID',

`create_time` string COMMENT 'creation time'

) COMMENT 'SKU product table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_sku_info/';

-- 3.1.4 User table (incremental and updated)

drop table if exists ods_user_info;

create external table ods_user_info(

`id` string COMMENT 'user ID',

`name` string COMMENT 'name',

`birthday` string COMMENT 'birthday',

`gender` string COMMENT 'gender',

`email` string COMMENT 'email',

`user_level` string COMMENT 'user level',

`create_time` string COMMENT 'creation time',

`operate_time` string COMMENT 'operation time'

) COMMENT 'user table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_user_info/';

-- 3.1.5 Level-1 product category table (full)

drop table if exists ods_base_category1;

create external table ods_base_category1(

`id` string COMMENT 'id',

`name` string COMMENT 'name'

) COMMENT 'level-1 product category table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_category1/';

-- 3.1.6 Level-2 product category table (full)

drop table if exists ods_base_category2;

create external table ods_base_category2(

`id` string COMMENT 'id',

`name` string COMMENT 'name',

category1_id string COMMENT 'level-1 category ID'

) COMMENT 'level-2 product category table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_category2/';

-- 3.1.7 Level-3 product category table (full)

drop table if exists ods_base_category3;

create external table ods_base_category3(

`id` string COMMENT 'id',

`name` string COMMENT 'name',

category2_id string COMMENT 'level-2 category ID'

) COMMENT 'level-3 product category table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_category3/';

-- 3.1.8 Payment transaction table (incremental)

drop table if exists ods_payment_info;

create external table ods_payment_info(

`id` bigint COMMENT 'ID',

`out_trade_no` string COMMENT 'external trade number',

`order_id` string COMMENT 'order ID',

`user_id` string COMMENT 'user ID',

`alipay_trade_no` string COMMENT 'Alipay transaction number',

`total_amount` decimal(16,2) COMMENT 'payment amount',

`subject` string COMMENT 'transaction subject',

`payment_type` string COMMENT 'payment type',

`payment_time` string COMMENT 'payment time'

) COMMENT 'payment transaction table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_payment_info/';

-- 3.1.9 Province table (special)

drop table if exists ods_base_province;

create external table ods_base_province (

`id` bigint COMMENT 'ID',

`name` string COMMENT 'province name',

`region_id` string COMMENT 'region ID',

`area_code` string COMMENT 'area code',

`iso_code` string COMMENT 'ISO code'

) COMMENT 'province table'

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_province/';

-- 3.1.10 Region table (special)

drop table if exists ods_base_region;

create external table ods_base_region (

`id` bigint COMMENT 'ID',

`region_name` string COMMENT 'region name'

) COMMENT 'region table'

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_region/';

-- 3.1.11 Brand table (full)

drop table if exists ods_base_trademark;

create external table ods_base_trademark (

`tm_id` bigint COMMENT 'ID',

`tm_name` string COMMENT 'brand name'

) COMMENT 'brand table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_trademark/';

-- 3.1.12 Order status table (incremental)

drop table if exists ods_order_status_log;

create external table ods_order_status_log (

`id` bigint COMMENT 'ID',

`order_id` string COMMENT 'order ID',

`order_status` string COMMENT 'order status',

`operate_time` string COMMENT 'modification time'

) COMMENT 'order status table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_order_status_log/';

-- 3.1.13 SPU product table (full)

drop table if exists ods_spu_info;

create external table ods_spu_info(

`id` string COMMENT 'SPU ID',

`spu_name` string COMMENT 'SPU name',

`category3_id` string COMMENT 'category ID',

`tm_id` string COMMENT 'brand ID'

) COMMENT 'SPU product table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_spu_info/';

-- 3.1.14 Product review table (incremental)

drop table if exists ods_comment_info;

create external table ods_comment_info(

`id` string COMMENT 'ID',

`user_id` string COMMENT 'user ID',

`sku_id` string COMMENT 'product SKU',

`spu_id` string COMMENT 'product SPU',

`order_id` string COMMENT 'order ID',

`appraise` string COMMENT 'rating',

`create_time` string COMMENT 'review time'

) COMMENT 'product review table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_comment_info/';

-- 3.1.15 Order refund table (incremental)

drop table if exists ods_order_refund_info;

create external table ods_order_refund_info(

`id` string COMMENT 'ID',

`user_id` string COMMENT 'user ID',

`order_id` string COMMENT 'order ID',

`sku_id` string COMMENT 'product ID',

`refund_type` string COMMENT 'refund type',

`refund_num` bigint COMMENT 'number of items refunded',

`refund_amount` decimal(16,2) COMMENT 'refund amount',

`refund_reason_type` string COMMENT 'refund reason type',

`create_time` string COMMENT 'refund time'

) COMMENT 'order refund table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_order_refund_info/';

-- 3.1.16 Cart table (full)

drop table if exists ods_cart_info;

create external table ods_cart_info(

`id` string COMMENT 'ID',

`user_id` string COMMENT 'user ID',

`sku_id` string COMMENT 'SKU ID',

`cart_price` string COMMENT 'price when added to the cart',

`sku_num` string COMMENT 'quantity',

`sku_name` string COMMENT 'SKU name (redundant)',

`create_time` string COMMENT 'creation time',

`operate_time` string COMMENT 'modification time',

`is_ordered` string COMMENT 'whether an order has been placed',

`order_time` string COMMENT 'order time'

) COMMENT 'cart table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_cart_info/';

-- 3.1.17 Product favorites table (full)

drop table if exists ods_favor_info;

create external table ods_favor_info(

`id` string COMMENT 'ID',

`user_id` string COMMENT 'user ID',

`sku_id` string COMMENT 'SKU ID',

`spu_id` string COMMENT 'SPU ID',

`is_cancel` string COMMENT 'whether cancelled',

`create_time` string COMMENT 'time favorited',

`cancel_time` string COMMENT 'time cancelled'

) COMMENT 'product favorites table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_favor_info/';

-- 3.1.18 Coupon usage table (new and changed)

drop table if exists ods_coupon_use;

create external table ods_coupon_use(

`id` string COMMENT 'ID',

`coupon_id` string COMMENT 'coupon ID',

`user_id` string COMMENT 'user ID',

`order_id` string COMMENT 'order ID',

`coupon_status` string COMMENT 'coupon status',

`get_time` string COMMENT 'time claimed',

`using_time` string COMMENT 'time used (order placed)',

`used_time` string COMMENT 'time used (payment)'

) COMMENT 'coupon usage table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_coupon_use/';

-- 3.1.19 Coupon table (full)

drop table if exists ods_coupon_info;

create external table ods_coupon_info(

`id` string COMMENT 'coupon ID',

`coupon_name` string COMMENT 'coupon name',

`coupon_type` string COMMENT 'coupon type: 1 cash coupon, 2 discount coupon, 3 spend-threshold reduction, 4 quantity-threshold discount',

`condition_amount` string COMMENT 'threshold amount',

`condition_num` string COMMENT 'threshold item count',

`activity_id` string COMMENT 'activity ID',

`benefit_amount` string COMMENT 'reduction amount',

`benefit_discount` string COMMENT 'discount rate',

`create_time` string COMMENT 'creation time',

`range_type` string COMMENT 'scope type: 1 product, 2 category, 3 brand',

`spu_id` string COMMENT 'product ID',

`tm_id` string COMMENT 'brand ID',

`category3_id` string COMMENT 'category ID',

`limit_num` string COMMENT 'maximum number of claims',

`operate_time` string COMMENT 'modification time',

`expire_time` string COMMENT 'expiry time'

) COMMENT 'coupon table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_coupon_info/';

-- 3.1.20 Activity table (full)

drop table if exists ods_activity_info;

create external table ods_activity_info(

`id` string COMMENT 'ID',

`activity_name` string COMMENT 'activity name',

`activity_type` string COMMENT 'activity type',

`start_time` string COMMENT 'start time',

`end_time` string COMMENT 'end time',

`create_time` string COMMENT 'creation time'

) COMMENT 'activity table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_activity_info/';

-- 3.1.21 Activity-order association table (incremental)

drop table if exists ods_activity_order;

create external table ods_activity_order(

`id` string COMMENT 'ID',

`activity_id` string COMMENT 'activity ID',

`order_id` string COMMENT 'order ID',

`create_time` string COMMENT 'creation time'

) COMMENT 'activity-order association table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_activity_order/';

-- 3.1.22 Activity rule table (full)

drop table if exists ods_activity_rule;

create external table ods_activity_rule(

`id` string COMMENT 'ID',

`activity_id` string COMMENT 'activity ID',

`condition_amount` string COMMENT 'threshold amount',

`condition_num` string COMMENT 'threshold item count',

`benefit_amount` string COMMENT 'discount amount',

`benefit_discount` string COMMENT 'discount rate',

`benefit_level` string COMMENT 'benefit level'

) COMMENT 'activity rule table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_activity_rule/';

-- 3.1.23 Code dictionary table (full)

drop table if exists ods_base_dic;

create external table ods_base_dic(

`dic_code` string COMMENT 'code',

`dic_name` string COMMENT 'code name',

`parent_code` string COMMENT 'parent code',

`create_time` string COMMENT 'creation date',

`operate_time` string COMMENT 'operation date'

) COMMENT 'code dictionary table'

PARTITIONED BY (`dt` string)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ods/ods_base_dic/';

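- Partition data import script

First import (also loads the unpartitioned province and region tables): hdfs-db-to-ods first 2021-03-10

Subsequent imports: hdfs-db-to-ods all 2021-03-12

#!/bin/bash

APP=gmall

hive=/opt/module/hive/bin/hive

# Use the date passed as the second argument; default to yesterday if none is given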

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

sql1="

load data inpath '/origin_data/$APP/db/order_info/$do_date' OVERWRITE into table ${APP}.ods_order_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/order_detail/$do_date' OVERWRITE into table ${APP}.ods_order_detail partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/sku_info/$do_date' OVERWRITE into table ${APP}.ods_sku_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/user_info/$do_date' OVERWRITE into table ${APP}.ods_user_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/payment_info/$do_date' OVERWRITE into table ${APP}.ods_payment_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_category1/$do_date' OVERWRITE into table ${APP}.ods_base_category1 partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_category2/$do_date' OVERWRITE into table ${APP}.ods_base_category2 partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_category3/$do_date' OVERWRITE into table ${APP}.ods_base_category3 partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_trademark/$do_date' OVERWRITE into table ${APP}.ods_base_trademark partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/activity_info/$do_date' OVERWRITE into table ${APP}.ods_activity_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/activity_order/$do_date' OVERWRITE into table ${APP}.ods_activity_order partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/cart_info/$do_date' OVERWRITE into table ${APP}.ods_cart_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/comment_info/$do_date' OVERWRITE into table ${APP}.ods_comment_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/coupon_info/$do_date' OVERWRITE into table ${APP}.ods_coupon_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/coupon_use/$do_date' OVERWRITE into table ${APP}.ods_coupon_use partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/favor_info/$do_date' OVERWRITE into table ${APP}.ods_favor_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/order_refund_info/$do_date' OVERWRITE into table ${APP}.ods_order_refund_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/order_status_log/$do_date' OVERWRITE into table ${APP}.ods_order_status_log partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/spu_info/$do_date' OVERWRITE into table ${APP}.ods_spu_info partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/activity_rule/$do_date' OVERWRITE into table ${APP}.ods_activity_rule partition(dt='$do_date');

load data inpath '/origin_data/$APP/db/base_dic/$do_date' OVERWRITE into table ${APP}.ods_base_dic partition(dt='$do_date');

"

sql2="

load data inpath '/origin_data/$APP/db/base_province/$do_date' OVERWRITE into table ${APP}.ods_base_province;

load data inpath '/origin_data/$APP/db/base_region/$do_date' OVERWRITE into table ${APP}.ods_base_region;

"

case $1 in

"first"){

$hive -e "$sql1$sql2"

};;

"all"){

$hive -e "$sql1"

};;

esac
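The 21 partitioned load statements in sql1 differ only in the table name, so they could also be generated from a list. A minimal sketch under that assumption; `gen_load_sql` is a hypothetical helper, and only a few table names are shown.

```shell
#!/bin/bash
# gen_load_sql <app> <date> <table>...
# Prints one LOAD DATA statement per table, in the same form as the script above.
gen_load_sql() {
    local app=$1 do_date=$2 t
    shift 2
    for t in "$@"; do
        printf "load data inpath '/origin_data/%s/db/%s/%s' OVERWRITE into table %s.ods_%s partition(dt='%s');\n" \
            "$app" "$t" "$do_date" "$app" "$t" "$do_date"
    done
}

# Example: statements for three of the partitioned tables.
gen_load_sql gmall 2021-03-10 order_info order_detail sku_info
```

The output could be captured with `sql1=$(gen_load_sql "$APP" "$do_date" ...)` and passed to `$hive -e` as before. The unpartitioned province and region tables have no partition clause, so they would still need their own statements.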

- Data verification

hive (gmall)> select count(*) from ods_favor_info;

_c0

700

hive (gmall)> select * from ods_favor_info limit 3;

ods_favor_info.id ods_favor_info.user_id ods_favor_info.sku_id ods_favor_info.spu_id ods_favor_info.is_cancel ods_favor_info.create_time ods_favor_info.cancel_time ods_favor_info.dt

1394110075715887105 26 11 null 0 2021-03-10 00:00:00.0 null 2021-03-10

1394110075715887106 20 10 null 0 2021-03-10 00:00:00.0 null 2021-03-10

1394110075715887107 32 10 null 0 2021-03-10 00:00:00.0 null 2021-03-10

3.2 DWD Layer

3.2.1 User Start Logs

- Create the table

drop table if exists dwd_start_log;

CREATE EXTERNAL TABLE dwd_start_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`entry` string,

`open_ad_type` string,

`action` string,

`loading_time` string,

`detail` string,

`extend1` string

)

PARTITIONED BY (dt string)

stored as parquet

location '/warehouse/gmall/dwd/dwd_start_log/'

TBLPROPERTIES('parquet.compression'='lzo');

- ods -> dwd

get_json_object extracts a field from a standard JSON string, given a path such as '$.mid'.

insert overwrite table dwd_start_log

PARTITION (dt='2021-05-16')

select

get_json_object(line,'$.mid') mid_id,

get_json_object(line,'$.uid') user_id,

get_json_object(line,'$.vc') version_code,

get_json_object(line,'$.vn') version_name,

get_json_object(line,'$.l') lang,

get_json_object(line,'$.sr') source,

get_json_object(line,'$.os') os,

get_json_object(line,'$.ar') area,

get_json_object(line,'$.md') model,

get_json_object(line,'$.ba') brand,

get_json_object(line,'$.sv') sdk_version,

get_json_object(line,'$.g') gmail,

get_json_object(line,'$.hw') height_width,

get_json_object(line,'$.t') app_time,

get_json_object(line,'$.nw') network,

get_json_object(line,'$.ln') lng,

get_json_object(line,'$.la') lat,

get_json_object(line,'$.entry') entry,

get_json_object(line,'$.open_ad_type') open_ad_type,

get_json_object(line,'$.action') action,

get_json_object(line,'$.loading_time') loading_time,

get_json_object(line,'$.detail') detail,

get_json_object(line,'$.extend1') extend1

from ods_start_log

where dt='2021-05-16';

- Table conversion and import script

#!/bin/bash

# ods_to_dwd_start_log.sh

# Variables defined up front for easy modification

APP=gmall

hive=/opt/module/hive/bin/hive

# Use the date passed as the first argument; default to yesterday if none is given

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

# Set hive.input.format to HiveInputFormat; otherwise the LZO index files are read as data.

sql="

SET hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_start_log

PARTITION (dt='$do_date')

select

get_json_object(line,'$.mid') mid_id,

get_json_object(line,'$.uid') user_id,

get_json_object(line,'$.vc') version_code,

get_json_object(line,'$.vn') version_name,

get_json_object(line,'$.l') lang,

get_json_object(line,'$.sr') source,

get_json_object(line,'$.os') os,

get_json_object(line,'$.ar') area,

get_json_object(line,'$.md') model,

get_json_object(line,'$.ba') brand,

get_json_object(line,'$.sv') sdk_version,

get_json_object(line,'$.g') gmail,

get_json_object(line,'$.hw') height_width,

get_json_object(line,'$.t') app_time,

get_json_object(line,'$.nw') network,

get_json_object(line,'$.ln') lng,

get_json_object(line,'$.la') lat,

get_json_object(line,'$.entry') entry,

get_json_object(line,'$.open_ad_type') open_ad_type,

get_json_object(line,'$.action') action,

get_json_object(line,'$.loading_time') loading_time,

get_json_object(line,'$.detail') detail,

get_json_object(line,'$.extend1') extend1

from ${APP}.ods_start_log

where dt='$do_date';

"

$hive -e "$sql"
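One detail of the script above is easy to miss: the SQL sits inside a double-quoted shell string, where `$do_date` is expanded but JSON paths such as `'$.mid'` are passed through unchanged, because `$.` is not a valid variable reference. The single quotes are ordinary characters inside the double-quoted string and do not affect expansion. A short sketch that shows this behavior with a shortened, illustrative query:

```shell
#!/bin/bash
do_date=2021-05-16

# $do_date is expanded by the shell; $.mid is left as-is for Hive to interpret.
sql="select get_json_object(line,'$.mid') mid_id from ods_start_log where dt='$do_date';"

printf '%s\n' "$sql"
```

Writing the path as `\$.mid` would make the intent explicit and is equivalent here.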

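3.2.2 User Behavior Logs

- Approach

The fields of the user behavior (event) log are fairly complex, so custom functions are used to parse them.

- UDF function

Extracts a single field from the raw log line by key: st returns the server timestamp, et returns the event array as a JSON string, and any other key is looked up in the common-fields object cm.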

package com.simwor.ds.hive;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.hive.ql.exec.UDF;

import org.json.JSONObject;

public class LogUDF extends UDF {

public String evaluate(String line, String key) {

if(StringUtils.isBlank(line) || StringUtils.isBlank(key))

return "";

String[] split = line.split("\\|");

if(split.length != 2)

return "";

String serverTime = split[0].trim();

JSONObject jsonObject = new JSONObject(split[1].trim());

if(key.equals("st"))

return serverTime;

else if(key.equals("et")) {

if(jsonObject.has("et"))

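// "et" is a JSON array: serialize it explicitly, since getString rejects non-string values in recent org.json releases

return jsonObject.getJSONArray("et").toString();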

} else {

JSONObject cm = jsonObject.getJSONObject("cm");

if(cm.has(key))

return cm.getString(key);

}

return "";

}

}
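The UDF splits each line with `line.split("\\|")` and rejects it unless exactly two parts result, so a log line whose JSON body itself contains a `|` character would be dropped. The sketch below shows the alternative of splitting on the first pipe only, using shell parameter expansion; `log_server_time` and `log_payload` are hypothetical helpers, and the sample line is made up. In Java the equivalent would be `line.split("\\|", 2)`.

```shell
#!/bin/bash
# Everything before the first '|' (the server timestamp); empty if there is no '|'.
log_server_time() {
    case "$1" in
        *'|'*) printf '%s\n' "${1%%|*}" ;;
        *)     printf '\n' ;;
    esac
}

# Everything after the first '|' (the JSON payload); empty if there is no '|'.
log_payload() {
    case "$1" in
        *'|'*) printf '%s\n' "${1#*|}" ;;
        *)     printf '\n' ;;
    esac
}

# Made-up line whose JSON contains a pipe inside a string value.
line='1621150009268|{"ap":"app","note":"a|b"}'
log_server_time "$line"
log_payload "$line"
```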

- UDTF function

A single user behavior log line contains several user events. This UDTF splits the event array into one row per event.

package com.simwor.ds.hive;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.*;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import org.json.JSONArray;

import java.util.ArrayList;

import java.util.List;

public class LogUDTF extends GenericUDTF {

@Override

public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {

// Validate the input arguments

List<? extends StructField> allStructFieldRefs = argOIs.getAllStructFieldRefs();

if(allStructFieldRefs.size() != 1)

throw new UDFArgumentException("expected exactly 1 argument");

if(!"string".equals(allStructFieldRefs.get(0).getFieldObjectInspector().getTypeName()))

throw new UDFArgumentException("argument must be of type string");

// Declare the output columns

ArrayList<String> fieldNames = new ArrayList<>();

ArrayList<ObjectInspector> fieldOIs = new ArrayList<>();

fieldNames.add("event_name");

fieldNames.add("event_json");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);

}

@Override

public void process(Object[] args) throws HiveException {

String eventArray = args[0].toString();

JSONArray jsonArray = new JSONArray(eventArray);

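// Iterate over the events in the array and read each event's type;

// emit one output row per event

for(int i=0; i<jsonArray.length(); i++) {

String[] result = new String[2];

result[0] = jsonArray.getJSONObject(i).getString("en");

// Each element is a JSON object, so serialize it explicitly instead of calling getString

result[1] = jsonArray.getJSONObject(i).toString();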

forward(result);

}

}

@Override

public void close() throws HiveException {

}

}

- Create permanent user-defined functions

[omm@simwor01 ~]$ hdfs dfs -mkdir -p /user/hive/jars

[omm@simwor01 ~]$ hdfs dfs -put Hive-1.0-SNAPSHOT.jar /user/hive/jars/

[omm@simwor01 ~]$ hdfs dfs -ls /user/hive/jars/

Found 1 items

-rw-r--r-- 1 omm supergroup 4643 2021-05-23 14:32 /user/hive/jars/Hive-1.0-SNAPSHOT.jar

[omm@simwor01 ~]$

hive (gmall)> create function base_analizer as 'com.simwor.ds.hive.LogUDF' using jar 'hdfs://simwor01:8020/user/hive/jars/Hive-1.0-SNAPSHOT.jar';

hive (gmall)> create function flat_analizer as 'com.simwor.ds.hive.LogUDTF' using jar 'hdfs://simwor01:8020/user/hive/jars/Hive-1.0-SNAPSHOT.jar';

- Create the table

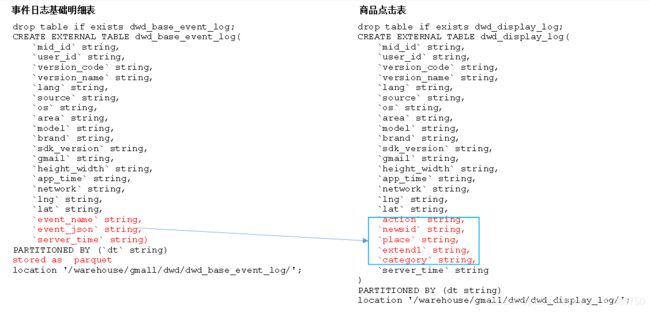

drop table if exists dwd_base_event_log;

CREATE EXTERNAL TABLE dwd_base_event_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`event_name` string,

`event_json` string,

`server_time` string)

PARTITIONED BY (`dt` string)

stored as parquet

location '/warehouse/gmall/dwd/dwd_base_event_log/'

TBLPROPERTIES('parquet.compression'='lzo');

- Insert data

insert overwrite table dwd_base_event_log partition(dt='2021-05-16')

select

base_analizer(line,'mid') as mid_id,

base_analizer(line,'uid') as user_id,

base_analizer(line,'vc') as version_code,

base_analizer(line,'vn') as version_name,

base_analizer(line,'l') as lang,

base_analizer(line,'sr') as source,

base_analizer(line,'os') as os,

base_analizer(line,'ar') as area,

base_analizer(line,'md') as model,

base_analizer(line,'ba') as brand,

base_analizer(line,'sv') as sdk_version,

base_analizer(line,'g') as gmail,

base_analizer(line,'hw') as height_width,

base_analizer(line,'t') as app_time,

base_analizer(line,'nw') as network,

base_analizer(line,'ln') as lng,

base_analizer(line,'la') as lat,

event_name,

event_json,

base_analizer(line,'st') as server_time

from ods_event_log lateral view flat_analizer(base_analizer(line,'et')) tmp_flat as event_name,event_json

where dt='2021-05-16' and base_analizer(line,'et')<>'';

- Data insertion script

#!/bin/bash

# ods_to_dwd_base_event_log.sh

# Define variables up front so they are easy to change

APP=gmall

hive=/opt/module/hive/bin/hive

# Use the date passed as the first argument if there is one; otherwise default to yesterday

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

SET hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_base_event_log partition(dt='$do_date')

select

${APP}.base_analizer(line,'mid') as mid_id,

${APP}.base_analizer(line,'uid') as user_id,

${APP}.base_analizer(line,'vc') as version_code,

${APP}.base_analizer(line,'vn') as version_name,

${APP}.base_analizer(line,'l') as lang,

${APP}.base_analizer(line,'sr') as source,

${APP}.base_analizer(line,'os') as os,

${APP}.base_analizer(line,'ar') as area,

${APP}.base_analizer(line,'md') as model,

${APP}.base_analizer(line,'ba') as brand,

${APP}.base_analizer(line,'sv') as sdk_version,

${APP}.base_analizer(line,'g') as gmail,

${APP}.base_analizer(line,'hw') as height_width,

${APP}.base_analizer(line,'t') as app_time,

${APP}.base_analizer(line,'nw') as network,

${APP}.base_analizer(line,'ln') as lng,

${APP}.base_analizer(line,'la') as lat,

event_name,

event_json,

${APP}.base_analizer(line,'st') as server_time

from ${APP}.ods_event_log lateral view ${APP}.flat_analizer(${APP}.base_analizer(line,'et')) tmp_flat as event_name,event_json

where dt='$do_date' and ${APP}.base_analizer(line,'et')<>'';

"

$hive -e "$sql";
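The script above loads one day per invocation. When several days need to be reloaded, a small wrapper can walk a date range and call the script once per day. The following is only a minimal sketch: it assumes GNU `date` (the same `date -d` the script already relies on), and the function name `date_range` is hypothetical and not part of the original project.

```shell
#!/bin/bash

# date_range START END
# Print every date from START to END (inclusive), one per line, as YYYY-MM-DD.
# Prints nothing when START is later than END.
# Assumption: GNU date is available ("date -d" is not portable to BSD/macOS).
date_range() {
    local current end_ts
    # Normalize the start date to YYYY-MM-DD.
    current=$(date -d "$1" +%F)
    # Compare as epoch seconds so the loop condition is a plain integer test.
    end_ts=$(date -d "$2" +%s)
    while [ "$(date -d "$current" +%s)" -le "$end_ts" ]; do
        echo "$current"
        current=$(date -d "$current +1 day" +%F)
    done
}

# Usage (left commented out so that sourcing this file has no side effects):
# for d in $(date_range 2021-05-16 2021-05-18); do
#     ods_to_dwd_base_event_log.sh "$d"
# done
```

Because the script uses `insert overwrite` on a single `dt` partition, re-running it for a day that was already loaded should simply replace that partition.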

- Split each user behavior into its own table: product display (exposure) table

drop table if exists dwd_display_log;

CREATE EXTERNAL TABLE dwd_display_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`action` string,

`goodsid` string,

`place` string,

`extend1` string,

`category` string,

`server_time` string

)

PARTITIONED BY (dt string)

stored as parquet

location '/warehouse/gmall/dwd/dwd_display_log/'

TBLPROPERTIES('parquet.compression'='lzo');

insert overwrite table dwd_display_log

PARTITION (dt='2021-05-16')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.action') action,

get_json_object(event_json,'$.kv.goodsid') goodsid,

get_json_object(event_json,'$.kv.place') place,

get_json_object(event_json,'$.kv.extend1') extend1,

get_json_object(event_json,'$.kv.category') category,

server_time

from dwd_base_event_log

where dt='2021-05-16' and event_name='display';
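The insert statement above follows a fixed pattern: copy the common columns from `dwd_base_event_log`, pull the event-specific fields out of `event_json` under `$.kv`, and filter on `event_name`. As a sketch of how that pattern could be generated rather than written by hand for each behavior table, the helper below prints the statement for a given table, event name, date, and field list. The function `build_event_insert_sql` and the variable `COMMON_COLS` are hypothetical names introduced for illustration; only the display table's field list is taken from the statement above.

```shell
#!/bin/bash

# Columns shared by the per-event DWD tables, in the order used by dwd_display_log.
COMMON_COLS="mid_id user_id version_code version_name lang source os area model brand sdk_version gmail height_width app_time network lng lat"

# build_event_insert_sql TABLE EVENT_NAME DO_DATE KV_FIELD...
# Print an INSERT OVERWRITE statement that fills TABLE from dwd_base_event_log,
# extracting each KV_FIELD from event_json with get_json_object.
build_event_insert_sql() {
    local table="$1" event="$2" do_date="$3"
    shift 3
    local col field

    echo "insert overwrite table ${table} partition(dt='${do_date}')"
    echo "select"
    for col in $COMMON_COLS; do
        echo "    ${col},"
    done
    for field in "$@"; do
        # The backslash keeps the dollar sign literal inside the double quotes,
        # so Hive receives the JSON path '$.kv.<field>'.
        echo "    get_json_object(event_json,'\$.kv.${field}') ${field},"
    done
    echo "    server_time"
    echo "from dwd_base_event_log"
    echo "where dt='${do_date}' and event_name='${event}';"
}

# Usage (commented out; prints the statement for the display table):
# build_event_insert_sql dwd_display_log display 2021-05-16 action goodsid place extend1 category
```

The printed text could then be passed to `hive -e` in the same way as the script for `dwd_base_event_log`; the helper itself only builds a string and never contacts Hive.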

- Batch-create the tables for all 10 user behaviors

drop table if exists dwd_display_log;

CREATE EXTERNAL TABLE dwd_display_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`action` string,

`goodsid` string,

`place` string,

`extend1` string,

`category` string,

`server_time` string

)

PARTITIONED BY (dt string)

stored as parquet

location '/warehouse/gmall/dwd/dwd_display_log/'

TBLPROPERTIES('parquet.compression'='lzo');

drop table if exists dwd_newsdetail_log;

CREATE EXTERNAL TABLE dwd_newsdetail_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,