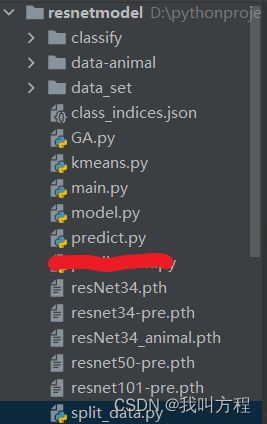

智能优化算法课设-遗传算法搜索kmeans图像聚类最优聚类数k

文章目录

- 前言

- 一、项目简介

- 二、训练模型

-

- 1.分割数据集工具

- 2.训练resnet

- 2.demo文件

- 二、遗传算法搜索kmeans最优聚类数k

-

- 1.kmeans测试文件

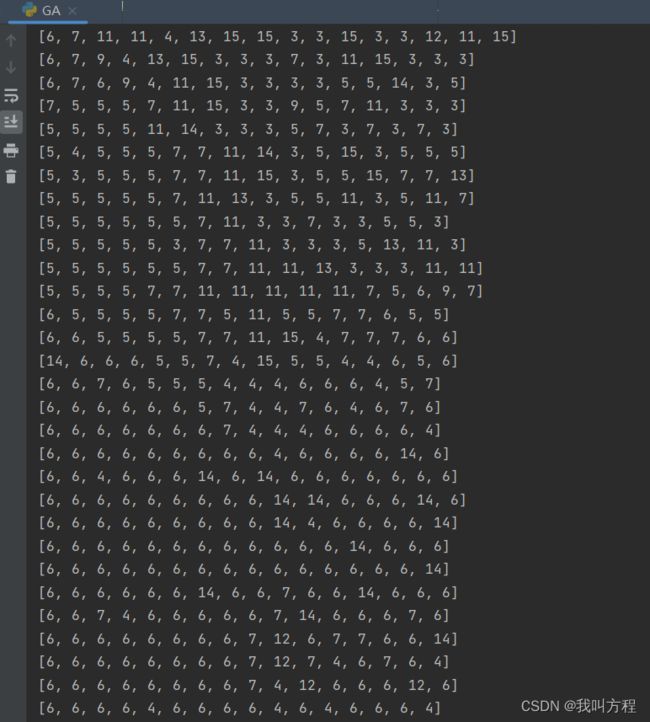

- 2.遗传算法搜索k

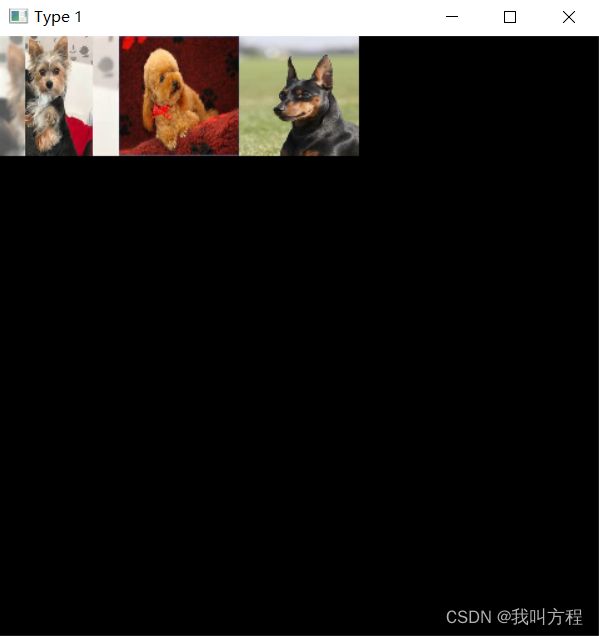

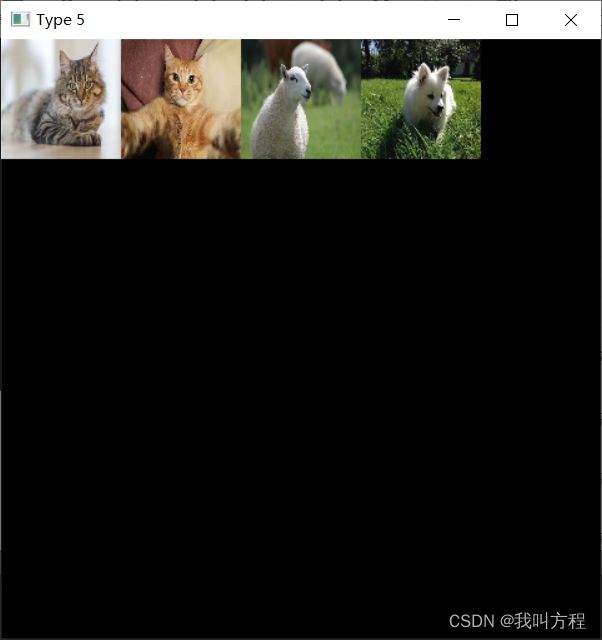

- 三、实验结果

前言

用resnet提取图像数据集的特征,利用kmeans进行图像聚类,其中利用遗传算法对kmeans进行最优k搜索,自动完成聚类。

其实我我对下面这些东西都不是很懂,只是当工具在用,理解大概率是错的,做这个是因为上智能优化算法被分到要完成遗传算法实现图像聚类这个课设。

下面基本之说怎么做,还有我认为可以改可以从文献里面复现的内容,这个遗传算法花了我很多时间,网上没有什么资源,一点一点摸出来的,实际上理解后也就那么回事,很多代码参考了其他博主的内容进行了移植,但是还是花了不少功夫。

一、项目简介

一个给动物图片自动聚类的项目。

动物数据集一共有十个分类(数据集链接)。对resnet预训练模型进行迁移学习,去掉模型的全连接层,用kmeans进行聚类,kmeans聚类的聚类数k利用遗传算法进行最优解搜索,然后自动完成聚类。

其实我感觉有点为了用kmeans而用kmeans,为了完成作业,下面直接上代码。

二、训练模型

1.分割数据集工具

把数据集分割成训练集和验证集

#split_data.py

import torch

from model import resnet34

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

import json

data_transform = transforms.Compose(

[transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# load image

img = Image.open("猫.jpg")

plt.imshow(img)

# [N, C, H, W]

img = data_transform(img)

# expand batch dimension

img = torch.unsqueeze(img, dim=0)

# read class_indict

try:

json_file = open('./class_indices.json', 'r')

class_indict = json.load(json_file)

except Exception as e:

print(e)

exit(-1)

# create model

model = resnet34(num_classes=10)

# load model weights

model_weight_path = "./resNet34_animal.pth"

model.load_state_dict(torch.load(model_weight_path))

model.eval()

with torch.no_grad():

# predict class

output = torch.squeeze(model(img))

predict = torch.softmax(output, dim=0)

predict_cla = torch.argmax(predict).numpy()

print(class_indict[str(predict_cla)], predict[predict_cla].numpy())

plt.show()

2.训练resnet

pytorch版本1.11

#model.py

import torch.nn as nn

import torch

class BasicBlock(nn.Module):

expansion = 1

def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):

super(BasicBlock, self).__init__()

self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,

kernel_size=3, stride=stride, padding=1, bias=False)

self.bn1 = nn.BatchNorm2d(out_channel)

self.relu = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,

kernel_size=3, stride=1, padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(out_channel)

self.downsample = downsample

def forward(self, x):

identity = x

if self.downsample is not None:

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out += identity

out = self.relu(out)

return out

class Bottleneck(nn.Module):

"""

注意:原论文中,在虚线残差结构的主分支上,第一个1x1卷积层的步距是2,第二个3x3卷积层步距是1。

但在pytorch官方实现过程中是第一个1x1卷积层的步距是1,第二个3x3卷积层步距是2,

这么做的好处是能够在top1上提升大概0.5%的准确率。

可参考Resnet v1.5 https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch

"""

expansion = 4

def __init__(self, in_channel, out_channel, stride=1, downsample=None,

groups=1, width_per_group=64):

super(Bottleneck, self).__init__()

width = int(out_channel * (width_per_group / 64.)) * groups

self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=width,

kernel_size=1, stride=1, bias=False) # squeeze channels

self.bn1 = nn.BatchNorm2d(width)

# -----------------------------------------

self.conv2 = nn.Conv2d(in_channels=width, out_channels=width, groups=groups,

kernel_size=3, stride=stride, bias=False, padding=1)

self.bn2 = nn.BatchNorm2d(width)

# -----------------------------------------

self.conv3 = nn.Conv2d(in_channels=width, out_channels=out_channel*self.expansion,

kernel_size=1, stride=1, bias=False) # unsqueeze channels

self.bn3 = nn.BatchNorm2d(out_channel*self.expansion)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

def forward(self, x):

identity = x

if self.downsample is not None:

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

out += identity

out = self.relu(out)

return out

class ResNet(nn.Module):

def __init__(self,

block,

blocks_num,

num_classes=1000,

include_top=True,

groups=1,

width_per_group=64):

super(ResNet, self).__init__()

self.include_top = include_top

self.in_channel = 64

self.groups = groups

self.width_per_group = width_per_group

self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,

padding=3, bias=False)

self.bn1 = nn.BatchNorm2d(self.in_channel)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(block, 64, blocks_num[0])

self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)

self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)

self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)

if self.include_top:

self.avgpool = nn.AdaptiveAvgPool2d((1, 1)) # output size = (1, 1)

self.fc = nn.Linear(512 * block.expansion, num_classes)

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

def _make_layer(self, block, channel, block_num, stride=1):

downsample = None

if stride != 1 or self.in_channel != channel * block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(channel * block.expansion))

layers = []

layers.append(block(self.in_channel,

channel,

downsample=downsample,

stride=stride,

groups=self.groups,

width_per_group=self.width_per_group))

self.in_channel = channel * block.expansion

for _ in range(1, block_num):

layers.append(block(self.in_channel,

channel,

groups=self.groups,

width_per_group=self.width_per_group))

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

if self.include_top:

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

def resnet34(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnet34-333f7ec4.pth

return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet50(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnet50-19c8e357.pth

return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet101(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnet101-5d3b4d8f.pth

return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)

def resnext50_32x4d(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth

groups = 32

width_per_group = 4

return ResNet(Bottleneck, [3, 4, 6, 3],

num_classes=num_classes,

include_top=include_top,

groups=groups,

width_per_group=width_per_group)

def resnext101_32x8d(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth

groups = 32

width_per_group = 8

return ResNet(Bottleneck, [3, 4, 23, 3],

num_classes=num_classes,

include_top=include_top,

groups=groups,

width_per_group=width_per_group)

#train.py

import torch

import torch.nn as nn

from torchvision import transforms, datasets

import json

import matplotlib.pyplot as plt

import os

import torch.optim as optim

from model import resnet34, resnet101

import torchvision.models.resnet

model_urls = {

'resnet18': 'https://download.pytorch.org/models/resnet18-5c106cde.pth',

'resnet34': 'https://download.pytorch.org/models/resnet34-333f7ec4.pth',

'resnet50': 'https://download.pytorch.org/models/resnet50-19c8e357.pth',

'resnet101': 'https://download.pytorch.org/models/resnet101-5d3b4d8f.pth',

'resnet152': 'https://download.pytorch.org/models/resnet152-b121ed2d.pth',

'resnext50_32x4d': 'https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth',

'resnext101_32x8d': 'https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth',

'wide_resnet50_2': 'https://download.pytorch.org/models/wide_resnet50_2-95faca4d.pth',

'wide_resnet101_2': 'https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth',

}

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device)

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

data_root = os.path.abspath(os.path.join(os.getcwd())) # get data root path

image_path = data_root + "/data-animal/" # flower data set path

train_dataset = datasets.ImageFolder(root=image_path+"train",

transform=data_transform["train"])

train_num = len(train_dataset)

flower_list = train_dataset.class_to_idx

cla_dict = dict((val, key) for key, val in flower_list.items())

# write dict into json file

json_str = json.dumps(cla_dict, indent=10)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

batch_size = 32

train_loader = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size, shuffle=True,

num_workers=0)

validate_dataset = datasets.ImageFolder(root=image_path + "val",

transform=data_transform["val"])

val_num = len(validate_dataset)

validate_loader = torch.utils.data.DataLoader(validate_dataset,

batch_size=batch_size, shuffle=False,

num_workers=0)

net = resnet34()

# load pretrain weights

model_weight_path = "./resnet34-pre.pth"

missing_keys, unexpected_keys = net.load_state_dict(torch.load(model_weight_path), strict=False)

# for param in net.parameters():

# param.requires_grad = False

# change fc layer structure

inchannel = net.fc.in_features

net.fc = nn.Linear(inchannel, 10)#输入通道数,输出通道数

net.to(device)

loss_function = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=0.0001)#adam优化器

best_acc = 0.0

save_path = './resNet34_animal.pth'

for epoch in range(10):

# train

net.train()

running_loss = 0.0

for step, data in enumerate(train_loader, start=0):

images, labels = data

optimizer.zero_grad()

logits = net(images.to(device))

loss = loss_function(logits, labels.to(device))

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.item()

# print train process

rate = (step+1)/len(train_loader)

a = "*" * int(rate * 50)

b = "." * int((1 - rate) * 50)

print("\rtrain loss: {:^3.0f}%[{}->{}]{:.4f}".format(int(rate*100), a, b, loss), end="")

print()

# validate

net.eval()

acc = 0.0 # accumulate accurate number / epoch

with torch.no_grad():

for val_data in validate_loader:

val_images, val_labels = val_data

outputs = net(val_images.to(device)) # eval model only have last output layer

# loss = loss_function(outputs, test_labels)

predict_y = torch.max(outputs, dim=1)[1]

acc += (predict_y == val_labels.to(device)).sum().item()

val_accurate = acc / val_num

if val_accurate > best_acc:

best_acc = val_accurate

torch.save(net.state_dict(), save_path)

print('[epoch %d] train_loss: %.3f test_accuracy: %.3f' %

(epoch + 1, running_loss / step, val_accurate))

print('Finished Training')

2.demo文件

用这个文件改进可以用在其他的项目

#predict.py

import torch

from model import resnet34

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

import json

data_transform = transforms.Compose(

[transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# load image

img = Image.open("猫.jpg")

plt.imshow(img)

# [N, C, H, W]

img = data_transform(img)

# expand batch dimension

img = torch.unsqueeze(img, dim=0)

# read class_indict

try:

json_file = open('./class_indices.json', 'r')

class_indict = json.load(json_file)

except Exception as e:

print(e)

exit(-1)

# create model

model = resnet34(num_classes=10)

# load model weights

model_weight_path = "./resNet34_animal.pth"

model.load_state_dict(torch.load(model_weight_path))

model.eval()

with torch.no_grad():

# predict class

output = torch.squeeze(model(img))

predict = torch.softmax(output, dim=0)

predict_cla = torch.argmax(predict).numpy()

print(class_indict[str(predict_cla)], predict[predict_cla].numpy())

plt.show()

二、遗传算法搜索kmeans最优聚类数k

1.kmeans测试文件

这个文件是这样的,是用来测试的,没有遗传算法的成分,但是可以更好看出kmeans是怎么用的,怎么与人工神经网络结合,怎么手动去除fc层。

#kmeans.py

import os

import numpy as np

import sklearn

from sklearn.cluster import KMeans

import cv2

from imutils import build_montages

import torch.nn as nn

import torchvision.models as models

from PIL import Image

from torchvision import transforms

import torch

from sklearn.metrics import calinski_harabasz_score

from model import resnet34

#手动不加载fc层

model = models.resnet34(pretrained=False)

pretrained_dict = torch.load('resnet34_.pth')

model_dict = model.state_dict()

pretrained_dict = {k: v for k, v in pretrained_dict.items() if (k in model_dict and 'fc' not in k)} # 将'fc'这一层的权重选择不加载即可。

model_dict.update(pretrained_dict) # 更新权重

model.load_state_dict(model_dict)

model.eval()

image_path = []

all_images = []

images = os.listdir('./data-animal/classify/')

for image_name in images:

image_path.append('./data-animal/classify/' + image_name)

for path in image_path:

image = Image.open(path).convert('RGB')

image = transforms.Resize([224, 244])(image)

image = transforms.ToTensor()(image)

image = image.unsqueeze(0)

image = model(image)

image = image.reshape(-1, )

all_images.append(image.detach().numpy())

i=7

clt = KMeans(n_clusters=i)

clt.fit(all_images)

score = sklearn.metrics.calinski_harabasz_score(all_images,clt.labels_)

print('数据聚%d类calinski_harabaz指数为:%f'%(i,score))

labelIDs = np.unique(clt.labels_)

for labelID in labelIDs:

idxs = np.where(clt.labels_ == labelID)[0]

idxs = np.random.choice(idxs, size=min(25, len(idxs)),

replace=False)

show_box = []

for i in idxs:

image = cv2.imread(image_path[i])

image = cv2.resize(image, (96, 96))

show_box.append(image)

montage = build_montages(show_box, (96, 96), (5, 5))[0]

title = "Type {}".format(labelID)

cv2.imshow(title, montage)

cv2.waitKey(0)

2.遗传算法搜索k

遗传算法从我使用的角度来说,我需要做的就是寻找适应函数,给怎么样是好的结果一个函数,我这里使用的calinski_harabasz_score,可以对聚类结果进行打分,但是使用这个也会有一定问题,比如在聚类数比较少的时候,较低的聚类数会得分比较高,并且遗传算法每次结果也不一样,不少每一次都是全局最优解,但是这不是遗传算法考虑的问题。

注意一般二分类得分比较高,我排除了二分类,kmeans最低要求两类,我这个编码方式和后面繁殖变异就不算太公平,不过影响不大。用在其他函数搜索参数的时候可以根据需要调节参数。

GA.py

import os

import numpy as np

import sklearn

from sklearn.cluster import KMeans

import cv2

from imutils import build_montages

import torch.nn as nn

import torchvision.models as models

from PIL import Image

from torchvision import transforms

import torch

from sklearn.metrics import calinski_harabasz_score

from model import resnet34

class GA: # 定义遗传算法类

def __init__(self, all_images, M): # 构造函数,进行初始化以及编码

self.data = all_images

self.M = M # 初始化种群的个体数

self.length = 4 # 每条染色体基因长度为4(后面染色体的参数范围0-15)

self.species = np.random.randint(3, 16, self.M) # 给种群随机编码,我搜索的范围就是3到15

self.select_rate = 0.5 # 选择的概率(用于适应性没那么强的染色体),小于该概率则被选,否则被丢弃

self.strong_rate = 0.3 # 选择适应性强染色体的比率

self.bianyi_rate = 0.05 # 变异的概率

def Adaptation(self, data, ranseti): # 进行染色体适应度的评估

clt = KMeans(n_clusters=ranseti)

clt.fit(data)

fit = sklearn.metrics.calinski_harabasz_score(data, clt.labels_)

return fit

def selection(self): # 进行个体的选择,前百分之30的个体直接留下,后百分之70按概率选择

fitness = []

for ranseti in self.species: # 循环遍历种群的每一条染色体,计算保存该条染色体的适应度

fitness.append((self.Adaptation(self.data,ranseti), ranseti))

fitness1 = sorted(fitness, reverse=True) # 逆序排序,适应度高的染色体排前面

for i, j in zip(fitness1, range(self.M)):

fitness[j] = i[1]

parents = fitness[:int(len(fitness) *

self.strong_rate)] # 适应性特别强的直接留下来

for ranseti in fitness[int(len(fitness) *

self.strong_rate):]: # 挑选适应性没那么强的染色体

if np.random.random() < self.select_rate: # 随机比率,小于则留下

parents.append(ranseti)

return parents

def crossover(self, parents): # 进行个体的交叉

children = []

child_count = len(self.species) - len(parents) # 需要产生新个体的数量

while len(children) < child_count:

fu = np.random.randint(0, len(parents)) # 随机选择父亲

mu = np.random.randint(0, len(parents)) # 随机选择母亲

if fu != mu:

position = np.random.randint(0,self.length) # 随机选取交叉的基因位置(从右向左)

mask = 0

for i in range(position): # 位运算

mask = mask | (1 << i) # mask的二进制串最终为position个1

fu = parents[fu]

mu = parents[mu]

child = (fu & mask) | (mu & ~mask) # 孩子获得父亲在交叉点右边的基因、母亲在交叉点左边(包括交叉点)的基因,不是得到两个新孩子

if child <= 2:

child = 3#kmeans算法不允许小于两类

children.append(child)

self.species = parents + children # 产生的新的种群

def bianyi(self): # 进行个体的变异

for i in range(len(self.species)):

if np.random.random() < self.bianyi_rate: # 小于该概率则进行变异,否则保持原状

j = np.random.randint(0, self.length) # 随机选取变异基因位置点

self.species[i] = self.species[i] ^ (1 << j) # 在j位置取反

if self.species[i] <= 2:

self.species[i] = 3#kmeans算法不允许小于两类

def evolution(self): # 进行个体的进化

parents = self.selection()

self.crossover(parents)

self.bianyi()

def k(self): # 返回适应度最高的这条染色体,为最佳阈值

fitness = []

for ranseti in self.species: # 循环遍历种群的每一条染色体,计算保存该条染色体的适应度

fitness.append((self.Adaptation(self.data,ranseti), ranseti))

fitness1 = sorted(fitness, reverse=True) # 逆序排序,适应度高的染色体排前面

for i, j in zip(fitness1, range(self.M)):

fitness[j] = i[1]

return fitness[0]

def main():

# 手动不加载fc层

model = models.resnet34(pretrained=False)

pretrained_dict = torch.load('resNet34_animal.pth')

model_dict = model.state_dict()

pretrained_dict = {k: v for k, v in pretrained_dict.items() if

(k in model_dict and 'fc' not in k)} # 将'fc'这一层的权重选择不加载即可。

model_dict.update(pretrained_dict) # 更新权重

model.load_state_dict(model_dict)

model.eval()

image_path = []

all_images = []

images = os.listdir('./data-animal/classify')

for image_name in images:

image_path.append('./data-animal/classify/' + image_name)

for path in image_path:

image = Image.open(path).convert('RGB')

image = transforms.Resize([224, 244])(image)

image = transforms.ToTensor()(image)

image = image.unsqueeze(0)

image = model(image)

image = image.reshape(-1, )

all_images.append(image.detach().numpy())

ga = GA(all_images, 16)

for x in range(30): # 假设迭代次数为100

ga.evolution()

print(ga.species)

max = ga.k()

clt = KMeans(n_clusters=max)

clt.fit(all_images)

labelIDs = np.unique(clt.labels_)

for labelID in labelIDs:

idxs = np.where(clt.labels_ == labelID)[0]

idxs = np.random.choice(idxs, size=min(25, len(idxs)),

replace=False)

show_box = []

for i in idxs:

image = cv2.imread(image_path[i])

image = cv2.resize(image, (96, 96))

show_box.append(image)

montage = build_montages(show_box, (96, 96), (5, 5))[0]

title = "Type {}".format(labelID)

cv2.imshow(title, montage)

cv2.waitKey(0)

main()