Building MNN on Ubuntu 20.04 and Deploying MNIST

Building MNN on Ubuntu 20.04

Preparing the build environment

- cmake (version 3.10 or later recommended)

- protobuf (version 3.0 or later)

- gcc (version 4.9 or later)
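Before installing anything, it can help to check which of these tools are already present and whether they meet the minimum versions listed above. The following is a minimal sketch, not an official script: `version_ge` and `report` are hypothetical helper names, and the comparison relies on `sort -V` from GNU coreutils, which ships with Ubuntu.

```shell
#!/usr/bin/env bash
# Minimal sketch: compare installed tool versions against the minimums listed above.
# version_ge and report are hypothetical helpers, not part of cmake, protobuf, or gcc.

# Succeed when version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Print a one-line status for a tool.
# $1 = tool name, $2 = detected version (may be empty), $3 = minimum version
report() {
    if [ -z "$2" ]; then
        echo "$1: NOT FOUND (need >= $3)"
    elif version_ge "$2" "$3"; then
        echo "$1 $2: OK (need >= $3)"
    else
        echo "$1 $2: TOO OLD (need >= $3)"
    fi
}

cmake_ver=""; protoc_ver=""; gcc_ver=""
command -v cmake  >/dev/null 2>&1 && cmake_ver=$(cmake --version | head -n1 | awk '{print $3}')
command -v protoc >/dev/null 2>&1 && protoc_ver=$(protoc --version | awk '{print $2}')
command -v gcc    >/dev/null 2>&1 && gcc_ver=$(gcc -dumpversion)

report cmake    "$cmake_ver"  3.10
report protobuf "$protoc_ver" 3.0
report gcc      "$gcc_ver"    4.9
```

Any tool reported as NOT FOUND or TOO OLD can then be installed with the corresponding step below.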

1. Install gcc

sudo apt update

sudo apt install build-essential

2. Install cmake

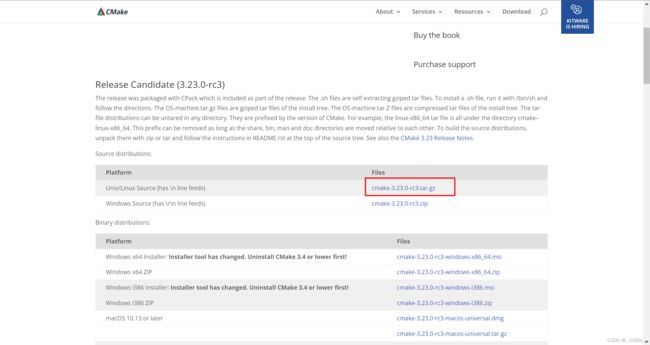

tar -zxv -f cmake-3.23.0-rc3.tar.gz

- Enter the extracted directory and run

./bootstrap

If ./bootstrap stops with an error (typically a missing OpenSSL development package), install libssl-dev and then run ./bootstrap again

sudo apt-get install libssl-dev

- Build cmake

make

- Install cmake

sudo make install

- After the installation finishes, run the following to verify it

cmake --version

3. Install protobuf

- Install the dependencies

sudo apt-get install autoconf automake libtool curl make g++ unzip libffi-dev -y

- Download the protobuf source

Download link: protobuf

- Extract the archive

tar -zxv -f protobuf-cpp-3.20.0-rc-1.tar.gz

- Enter the extracted directory and generate the configure script

cd protobuf-3.20.0-rc-1/

./autogen.sh

- Configure the build

./configure

- Compile the source

make

- Install

sudo make install

- Refresh the shared library cache

sudo ldconfig

- Run the following to check that the installation succeeded

protoc --version

Building MNN

Official documentation

- Download the MNN source

Download link: MNN

- Extract the archive

unzip MNN-master.zip

- Enter the extracted directory (adjust the name below if the archive unpacks to MNN-master) and run

cd MNN

./schema/generate.sh

- Build locally; when the build finishes, the MNN shared library appears in the build directory

mkdir build && cd build && cmake .. && make -j8
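To confirm that the build actually produced a library before moving on, a small check like the one below can be used. This is only a sketch: `find_mnn_lib` is a hypothetical helper, and the file names libMNN.so and libMNN.a are assumptions based on the `-lMNN` link flag used later in this article.

```shell
#!/usr/bin/env bash
# find_mnn_lib is a hypothetical helper: print the MNN library found in a build
# directory (assumed to be libMNN.so for a shared build or libMNN.a for a static one).
# $1 = build directory (defaults to ./build)
find_mnn_lib() {
    local build_dir=${1:-build}
    local f
    for f in "$build_dir/libMNN.so" "$build_dir/libMNN.a"; do
        if [ -f "$f" ]; then
            echo "$f"
            return 0
        fi
    done
    echo "no MNN library found in $build_dir" >&2
    return 1
}
```

For example, `find_mnn_lib /home/chen/MNN/build` prints the library path on success and returns a non-zero status otherwise, so it can gate later steps in a script.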

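Building for Android

- Download and install the NDK from https://developer.android.com/ndk/downloads/; the latest stable release is recommended

- Set the NDK environment variable in .bashrc or .bash_profile, for example: export ANDROID_NDK=/Users/username/path/to/android-ndk-r14b

- cd /path/to/MNN

- ./schema/generate.sh

- ./tools/script/get_model.sh (optional; the models are only needed by the demo project). Note that get_model.sh requires the model conversion tool to be built beforehand; see here.

- cd project/android

- Build the armv7 shared library: mkdir build_32 && cd build_32 && ../build_32.sh

- Build the armv8 shared library: mkdir build_64 && cd build_64 && ../build_64.sh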
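The Android build scripts depend on the ANDROID_NDK variable from the steps above, so a quick sanity check before running them can save a failed build. The sketch below assumes nothing beyond that variable; `check_ndk` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_ndk is a hypothetical helper for illustration only.
# It succeeds when ANDROID_NDK is set and names an existing directory.
check_ndk() {
    if [ -z "${ANDROID_NDK:-}" ]; then
        echo "ANDROID_NDK is not set" >&2
        return 1
    fi
    if [ ! -d "$ANDROID_NDK" ]; then
        echo "ANDROID_NDK does not point to a directory: $ANDROID_NDK" >&2
        return 1
    fi
    echo "Using NDK at $ANDROID_NDK"
}
```

Calling `check_ndk` from project/android before the armv7 or armv8 build step reports a missing or mistyped NDK path early.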

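Deploying MNIST

Installing the OpenCV library

Deploying MNIST also requires the OpenCV library, so install it first

You can follow this article to install OpenCV by cloning its source code

Blog post

After installing OpenCV as described in the blog post, write a simple OpenCV program to verify that it works

Create a main.cpp file, then compile it with g++ and run it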

g++ main.cpp -o output `pkg-config --cflags --libs opencv4`

./output

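#include <opencv2/opencv.hpp>
#include <iostream>

int main() {
    // Placeholder values: set the image path and the pixel indices for your own image.
    cv::Mat image = cv::imread("test.jpg");  // any existing 3-channel image
    if (image.empty()) { std::cerr << "failed to load image" << std::endl; return 1; }
    int y = 0, x = 0, c = 0;  // row, column, channel

    std::cout << "pixel value at y, x, c: " << static_cast<unsigned>(image.at<cv::Vec3b>(y, x)[c]) << std::endl;

    return 0;

}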

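If ./output fails because the OpenCV shared libraries cannot be found at run time, create opencv.conf under /etc/ld.so.conf.d/

sudo vim /etc/ld.so.conf.d/opencv.conf

Write two lines into it:

/usr/local/lib

/home/<user>/opencv_build/opencv/build/lib (the lib directory of your own OpenCV build; use an absolute path, because entries in this file do not expand ~)

Save and exit, then run sudo ldconfig to resolve the problem

Run ./output again; if it prints the correct information, OpenCV is installed successfully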
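The same configuration can be generated from the shell instead of an editor. The following is a sketch under the assumptions of this article (an OpenCV build under opencv_build in the home directory); `write_ld_conf` is a hypothetical helper that only accepts absolute paths, and the final install commands are left commented out because they need root privileges.

```shell
#!/usr/bin/env bash
# write_ld_conf is a hypothetical helper: it writes one library directory per
# line to the given file and skips anything that is not an absolute path,
# since a literal ~ or a relative path is not expanded by the loader config.
# $1 = output file, remaining arguments = library directories
write_ld_conf() {
    local target=$1
    shift
    : > "$target"
    local dir
    for dir in "$@"; do
        case $dir in
            /*) echo "$dir" >> "$target" ;;
            *)  echo "skipping non-absolute path: $dir" >&2 ;;
        esac
    done
}

# Generate the file in a temporary location and inspect it first.
# The OpenCV build path follows this article; adjust it to your own setup.
conf=$(mktemp)
write_ld_conf "$conf" /usr/local/lib "$HOME/opencv_build/opencv/build/lib"
cat "$conf"

# Then install it and refresh the cache (requires root):
# sudo cp "$conf" /etc/ld.so.conf.d/opencv.conf && sudo ldconfig
# ldconfig -p | grep opencv    # list the OpenCV libraries the loader now knows about
```

Writing to a temporary file first keeps the sketch safe to run without sudo; `$HOME` is expanded by the shell before the path is written, so the resulting file contains an absolute path.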

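Deploying MNIST

Create a C++ file and write the program following the inference workflow in the official MNN documentation. MNN model download link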

#include "Backend.hpp"

#include "Interpreter.hpp"

#include "MNNDefine.h"

#include "Tensor.hpp"

Write CMakeLists.txt, making sure to replace the MNN path in it with the path of your own MNN build

cmake_minimum_required(VERSION 3.10)

project(mnist)

set(CMAKE_CXX_STANDARD 11)

find_package(OpenCV REQUIRED)

set(MNN_DIR /home/chen/MNN)

include_directories(${MNN_DIR}/include)

include_directories(${MNN_DIR}/include/MNN)

include_directories(${MNN_DIR}/tools)

include_directories(${MNN_DIR}/tools/cpp)

include_directories(${MNN_DIR}/source)

include_directories(${MNN_DIR}/source/backend)

include_directories(${MNN_DIR}/source/core)

LINK_DIRECTORIES(${MNN_DIR}/build)

add_executable(mnist main.cpp)

target_link_libraries(mnist -lMNN ${OpenCV_LIBS})

Build

cmake .

make

After the build completes, the mnist executable appears. Run ./mnist and you can see that it correctly predicts the handwritten digit