Computing mAP for the images produced by YOLOv5's detect.py


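Table of contents

- Preface

- I. Use YOLOv5's detect.py to generate the prediction images, predicted classes, predicted box coordinates, and confidences

- 1. Run detect.py

- II. Convert the test images' annotation XML files to txt files in YOLO coordinate format

- III. Convert the YOLOv5 and ground-truth YOLO coordinates to VOC coordinates

- 1. Convert the ground truth to VOC coordinates

- 2. Convert the YOLO results to VOC coordinates

- IV. For images where YOLOv5 detected nothing and therefore wrote no txt file, remove the matching ground-truth files, then compute mAP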

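Preface

This article applies if:

- You want to run object detection on your own images directly with the COCO-pretrained weights that YOLOv5 provides, rather than training a model yourself.

- You want to compute mAP and related metrics for the images you tested.

This article draws on many other posts and copies parts of their code. If this infringes on anything, please contact me promptly and I will edit or remove it.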


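I. Use YOLOv5's detect.py to generate the prediction images, predicted classes, predicted box coordinates, and confidences

Downloading the YOLOv5 package, setting up the environment, and placing the downloaded weights file in the weights folder are not covered here; look them up if you are unsure.

1. Run detect.py

YOLOv5 has three main scripts: train.py, val.py, and detect.py. In the normal workflow you train on your own dataset and then evaluate, which uses train.py and val.py. Our case is not the normal one: we only use the pretrained model to test our own images. So first make sure that what you want to detect is something the pretrained model can detect. The classes covered by coco128.yaml are as follows:

If your classes are in the list, you can move on to the next step.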

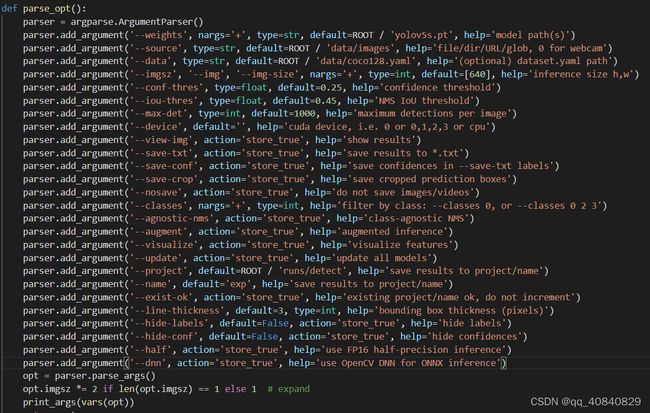

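There are many options, but you only need to change a few. The first, weights, is the path to the weights file. source is the path to your original images. classes selects which of the classes listed above to detect. For example, suppose your images contain people and cars but your annotations only cover people. If you run detect.py as-is, it outputs predictions for both cars and people; if you only want people, pass --classes 0 to detect people only. In my case, the classes to be tested in my test set are: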

![]()

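In coco.yaml these correspond to 0 2 3 5 7 9. The numbers do not have to be in ascending order; they are only labels.

Next, --save-txt stores the predicted class and box coordinates in a txt file. The YOLO coordinate format is: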

<class_id> <x_center> <y_center> <width> <height>

--save-conf appends the confidence, so each line becomes:

<class_id> <x_center> <y_center> <width> <height> <confidence>

So the command I finally ran in the terminal was:

python detect.py --source /home/test/Mytest --weights weights/yolov5l.pt --classes 0 2 3 5 7 9 --save-txt --save-conf

The images and txt files you need are then generated in runs/detect/exp.
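To make the two label layouts concrete, here is a minimal sketch of a reader for one line of such a txt file. `YoloDetection` and `parse_yolo_line` are hypothetical helper names, not part of YOLOv5, and the sample line is made up for illustration.

```python
from typing import NamedTuple, Optional


class YoloDetection(NamedTuple):
    class_id: int
    x_center: float  # normalized to [0, 1] by image width
    y_center: float  # normalized to [0, 1] by image height
    width: float
    height: float
    confidence: Optional[float]  # None when the line has no confidence column


def parse_yolo_line(line: str) -> YoloDetection:
    """Parse '<class_id> <x_center> <y_center> <width> <height> [<confidence>]'."""
    parts = line.split()
    if len(parts) not in (5, 6):
        raise ValueError("expected 5 or 6 fields, got %d" % len(parts))
    class_id = int(float(parts[0]))  # tolerate '5' as well as '5.0'
    x_center, y_center, width, height = (float(p) for p in parts[1:5])
    confidence = float(parts[5]) if len(parts) == 6 else None
    return YoloDetection(class_id, x_center, y_center, width, height, confidence)


det = parse_yolo_line("5 0.5 0.25 0.2 0.1 0.87")
print(det.class_id, det.confidence)
```

A line written without --save-conf parses the same way, with `confidence` set to `None`.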

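That is the basic detection run, but we still need to modify the code. As you saw, coco.yaml records the classes and their labels, i.e. person: 0, car: 2, bus: 5. The classes in our test set, however, are people: 0, bus: 1, car: 2. What do we do?

Straight to the code: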

# Write results
for *xyxy, conf, cls in reversed(det):
    # print(cls)
    # Remap COCO class ids to the test set's ids before writing the txt file.
    # Ids 0, 2 and 3 stay the same. Comparing int(cls) also works without CUDA.
    coco_id = int(cls)
    if coco_id == 5: cls = torch.tensor(1., device=cls.device)  # bus
    elif coco_id == 9: cls = torch.tensor(4., device=cls.device)  # traffic light
    elif coco_id == 7: cls = torch.tensor(5., device=cls.device)  # truck
    if save_txt:  # Write to file
        xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist()  # normalized xywh
        line = (cls, *xywh, conf) if save_conf else (cls, *xywh)  # label format
        with open(txt_path + '.txt', 'a') as f:
            f.write(('%g ' * len(line)).rstrip() % line + '\n')
    if save_img or save_crop or view_img:  # Add bbox to image
        # Map back to the COCO ids so that names[c] gives the right label.
        # elif matters here: with independent ifs, 1 -> 5 would then hit 5 -> 7.
        custom_id = int(cls)
        if custom_id == 1: cls = torch.tensor(5., device=cls.device)
        elif custom_id == 4: cls = torch.tensor(9., device=cls.device)
        elif custom_id == 5: cls = torch.tensor(7., device=cls.device)
        c = int(cls)  # integer class
        label = None if hide_labels else (names[c] if hide_conf else f'{names[c]} {conf:.2f}')
        annotator.box_label(xyxy, label, color=colors(c, True))
        if save_crop:
            save_one_box(xyxy, imc, file=save_dir / 'crops' / names[c] / f'{p.stem}.jpg', BGR=True)

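Find the code segment above in detect.py and add the remapping statements. For example, when the model detects a bus it assigns label 5, so we change 5 to 1 before it is stored in the txt file. There are two remapping blocks in total: the first changes the ids for the txt file, and the second changes them back so that the wrong class name is not drawn on the image.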
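The same two remappings can also be written as lookup tables. The sketch below is an illustration rather than a drop-in patch for detect.py; the names `COCO_TO_CUSTOM`, `CUSTOM_TO_COCO`, `to_custom` and `to_coco` are hypothetical. Because each id is looked up exactly once, a value that has already been remapped cannot be remapped a second time.

```python
# COCO id -> test-set id, following the mapping used in this article:
# person 0 -> 0, bus 5 -> 1, car 2 -> 2, motorcycle 3 -> 3,
# traffic light 9 -> 4, truck 7 -> 5
COCO_TO_CUSTOM = {0: 0, 5: 1, 2: 2, 3: 3, 9: 4, 7: 5}

# Inverse table, used when drawing so the COCO class name is looked up correctly
CUSTOM_TO_COCO = {custom: coco for coco, custom in COCO_TO_CUSTOM.items()}


def to_custom(coco_id: int) -> int:
    """Id to write into the txt file."""
    return COCO_TO_CUSTOM[coco_id]


def to_coco(custom_id: int) -> int:
    """Id to use when indexing the model's class names."""
    return CUSTOM_TO_COCO[custom_id]


# Round trip: every selected COCO class comes back unchanged
round_trip_ok = all(to_coco(to_custom(c)) == c for c in COCO_TO_CUSTOM)
print(to_custom(5), to_coco(1), round_trip_ok)
```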

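This gives us YOLOv5's predictions.

If you look closely at the generated txt files, you will find that some images have none. When the model detects no objects in an image, it does not write a txt file for it. Each txt file has the same name as its image.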
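A quick way to see which images produced no txt file is to compare file stems. This is a minimal sketch: `images_without_labels` is a hypothetical helper, and the folders in the demo are temporary directories created only for illustration.

```python
import tempfile
from pathlib import Path


def images_without_labels(image_dir, label_dir, suffixes=(".png", ".jpg", ".jpeg")):
    """Return the names of images that have no same-named .txt in label_dir."""
    label_stems = {p.stem for p in Path(label_dir).glob("*.txt")}
    return sorted(
        p.name
        for p in Path(image_dir).iterdir()
        if p.suffix.lower() in suffixes and p.stem not in label_stems
    )


# Self-contained demo: two images, only one of them has a prediction file
with tempfile.TemporaryDirectory() as tmp:
    images = Path(tmp) / "images"
    labels = Path(tmp) / "labels"
    images.mkdir()
    labels.mkdir()
    for name in ("a.png", "b.png"):
        (images / name).touch()
    (labels / "a.txt").write_text("0 0.5 0.5 0.2 0.2 0.9\n")
    missing = images_without_labels(images, labels)

print(missing)  # ['b.png']
```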

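II. Convert the test images' annotation XML files to txt files in YOLO coordinate format

This step converts the ground-truth labels of the test images from XML to txt. Each XML file must have the same name as its image.

Straight to the code: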

voc_label.py

# -*- coding: utf-8 -*-
# XML parsing package
import xml.etree.ElementTree as ET
import os
from os import getcwd
import shutil

sets = ['test_m3df']
classes = ['People', 'Bus', 'Car', 'Motorcycle', 'Lamp', 'Truck']
style = '.png'


# Normalize a box to YOLO format
def convert(size, box):  # size: (image w, image h), box: (xmin, xmax, ymin, ymax)
    dw = 1. / size[0]  # 1/w
    dh = 1. / size[1]  # 1/h
    x = (box[0] + box[1]) / 2.0  # x of the box center, in pixels
    y = (box[2] + box[3]) / 2.0  # y of the box center, in pixels
    w = box[1] - box[0]  # box width in pixels
    h = box[3] - box[2]  # box height in pixels
    x = x * dw  # center x as a fraction of the image width
    w = w * dw  # box width as a fraction of the image width
    y = y * dh  # center y as a fraction of the image height
    h = h * dh  # box height as a fraction of the image height
    return (x, y, w, h)  # center x, center y, width, height, each in [0, 1]


def convert_annotation(image_id):
    '''
    Convert the XML file named image_id into a label file. The XML file holds
    the bounding boxes and the image width and height. Each image has one XML
    file; it is parsed, normalized, and saved to exactly one label file.
    Label file format: class x y w h. An image can contain several objects,
    so the label file can contain several lines.
    '''
    in_path = './data/Annotations/%s.xml' % (image_id)
    out_path = './data/labels/%s.txt' % (image_id)
    with open(in_path, encoding='utf-8') as in_file, open(out_path, 'w', encoding='utf-8') as out_file:
        # Parse the XML file and get its root element
        tree = ET.parse(in_file)
        root = tree.getroot()
        # Image size; skip the file if the <size> tag is missing
        size = root.find('size')
        if size is not None:
            w = int(size.find('width').text)
            h = int(size.find('height').text)
            # Iterate over the annotated objects
            for obj in root.iter('object'):
                # Treat a missing <difficult> tag as "not difficult"
                difficult_node = obj.find('difficult')
                difficult = difficult_node.text if difficult_node is not None else '0'
                # Class name (string)
                cls = obj.find('name').text
                # Skip classes outside our list and objects marked difficult
                if cls not in classes or int(difficult) == 1:
                    continue
                # Look up the id by class name
                cls_id = classes.index(cls)
                xmlbox = obj.find('bndbox')
                # Box as (xmin, xmax, ymin, ymax)
                b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                     float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
                print(image_id, cls, b)
                # Normalize: bb is (x, y, w, h)
                bb = convert((w, h), b)
                # Write "class x y w h" to the label file
                out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


# Current working directory
wd = getcwd()
print(wd)

# Recreate the labels folder from scratch
labels = './data/labels'
if os.path.exists(labels):
    shutil.rmtree(labels)  # delete output folder
os.makedirs(labels)  # make new output folder

for image_set in sets:
    '''
    Iterate over every image in the set and do two things:
    1. Write each image's path to a txt file so it is easy to locate.
    2. Parse each image's annotation and write its bounding boxes and classes
       to a label file, from which the labels can later be read directly.
    '''
    # Alternative: read the image ids from a list file in ImageSets
    # image_ids = open('./data/ImageSets/%s.txt' % (image_set)).read().strip().split()
    image_ids = os.listdir('path/to/images')  # replace with your image folder
    image_id_s = []
    for i in image_ids:
        file_name = os.path.splitext(i)[0]  # file name without extension
        image_id_s.append(file_name)
    # Open (and overwrite) the list file for this set
    txt_name = './data/%s.txt' % (image_set)
    list_file = open(txt_name, 'w')
    # Write each image path on its own line, then convert its annotation
    for image_id in image_id_s:
        list_file.write('data/images/%s%s\n' % (image_id, style))
        convert_annotation(image_id)
    # Close the file
    list_file.close()

Reference: https://blog.csdn.net/weixin_42182534/article/details/123608276

This gives us the ground-truth labels as txt files in YOLO coordinate format.
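As a worked example of the normalization performed by the script, the sketch below converts one VOC box (pixel corners) to YOLO format. `voc_to_yolo` is a hypothetical standalone function and the image size and box are made-up numbers.

```python
def voc_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Pixel corner box -> normalized (x_center, y_center, width, height)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


# An 800x600 image with a box from (200, 150) to (600, 450):
# center = (400, 300), size = 400 x 300, so every normalized value is 0.5
yolo_box = voc_to_yolo(800, 600, 200, 150, 600, 450)
print(yolo_box)  # (0.5, 0.5, 0.5, 0.5)
```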

III. Convert the YOLOv5 and ground-truth YOLO coordinates to VOC coordinates

1. Convert the ground truth to VOC coordinates:

Straight to the code:

get_GT.py

import numpy as np
import cv2
import torch
import os

label_path = './test_label'
image_path = './test'


# Coordinate conversion: the labels are stored in YOLOv5 format
# Convert nx4 boxes from [x, y, w, h] normalized to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
def xywhn2xyxy(x, w=800, h=800, padw=0, padh=0):
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = w * (x[:, 0] - x[:, 2] / 2) + padw  # top left x
    y[:, 1] = h * (x[:, 1] - x[:, 3] / 2) + padh  # top left y
    y[:, 2] = w * (x[:, 0] + x[:, 2] / 2) + padw  # bottom right x
    y[:, 3] = h * (x[:, 1] + x[:, 3] / 2) + padh  # bottom right y
    return y


folder = os.path.exists('GT')
if not folder:
    os.makedirs('GT')

folderlist = os.listdir(label_path)
for i in folderlist:
    label_path_new = os.path.join(label_path, i)
    with open(label_path_new, 'r') as f:
        lb = np.array([x.split() for x in f.read().strip().splitlines()], dtype=np.float32)  # ground-truth labels
    if lb.size == 0:  # label file with no objects: nothing to convert
        continue
    read_label = label_path_new.replace(".txt", ".png")
    read_label_path = read_label.replace('test_label', 'test')
    print(read_label_path)
    img = cv2.imread(str(read_label_path))
    h, w = img.shape[:2]
    lb[:, 1:] = xywhn2xyxy(lb[:, 1:], w, h, 0, 0)  # de-normalize
    # Open once in 'w' mode so that re-running the script does not duplicate lines
    with open('GT/' + i, 'w') as fw:
        for x in lb:
            class_label = int(x[0])  # class
            # cv2.rectangle needs integer pixel coordinates
            cv2.rectangle(img, (int(round(x[1])), int(round(x[2]))), (int(round(x[3])), int(round(x[4]))), (0, 255, 0))
            fw.write(str(x[0]) + ' ' + str(x[1]) + ' ' + str(x[2]) + ' ' + str(x[3]) + ' ' + str(
                x[4]) + '\n')
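The de-normalization step can be checked by hand on a single box. The sketch below is a scalar version of the same formulas; `yolo_to_voc` is a hypothetical name and the numbers are made up.

```python
def yolo_to_voc(img_w, img_h, x_center, y_center, width, height):
    """Normalized (x_center, y_center, width, height) -> pixel corners."""
    xmin = img_w * (x_center - width / 2)   # top left x
    ymin = img_h * (y_center - height / 2)  # top left y
    xmax = img_w * (x_center + width / 2)   # bottom right x
    ymax = img_h * (y_center + height / 2)  # bottom right y
    return xmin, ymin, xmax, ymax


# A centered box covering half of an 800x600 image in each direction
voc_box = yolo_to_voc(800, 600, 0.5, 0.5, 0.5, 0.5)
print(voc_box)  # (200.0, 150.0, 600.0, 450.0)
```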

2. Convert the YOLO results to VOC coordinates:

get_DR.py

import numpy as np
import cv2
import torch
import os

label_path = './predict_label'
image_path = './test'


# Coordinate conversion: the predictions are stored in YOLOv5 format
# Convert nx4 boxes from [x, y, w, h] normalized to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
def xywhn2xyxy(x, w=800, h=800, padw=0, padh=0):
    y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
    y[:, 0] = w * (x[:, 0] - x[:, 2] / 2) + padw  # top left x
    y[:, 1] = h * (x[:, 1] - x[:, 3] / 2) + padh  # top left y
    y[:, 2] = w * (x[:, 0] + x[:, 2] / 2) + padw  # bottom right x
    y[:, 3] = h * (x[:, 1] + x[:, 3] / 2) + padh  # bottom right y
    return y


folder = os.path.exists('DR')
if not folder:
    os.makedirs('DR')

folderlist = os.listdir(label_path)
for i in folderlist:
    label_path_new = os.path.join(label_path, i)
    with open(label_path_new, 'r') as f:
        lb = np.array([x.split() for x in f.read().strip().splitlines()], dtype=np.float32)  # predicted labels
    # print(lb)
    if lb.size == 0:  # empty prediction file: nothing to convert
        continue
    read_label = label_path_new.replace(".txt", ".png")
    read_label_path = read_label.replace('predict_label', 'test')
    img = cv2.imread(str(read_label_path))
    h, w = img.shape[:2]
    lb[:, 1:] = xywhn2xyxy(lb[:, 1:], w, h, 0, 0)  # de-normalize (the confidence column is left as-is)
    # Open once in 'w' mode so that re-running the script does not duplicate lines
    with open('DR/' + i, 'w') as fw:
        for x in lb:
            class_label = int(x[0])  # class
            # Draw the box and class id; cv2 needs integer pixel coordinates
            cv2.rectangle(img, (int(round(x[1])), int(round(x[2]))), (int(round(x[3])), int(round(x[4]))), (0, 255, 0))
            cv2.putText(img, str(class_label), (int(x[1]), int(x[2] - 2)), fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1,
                        color=(0, 0, 255), thickness=2)
            # The confidence has to go in second position
            fw.write(str(x[0]) + ' ' + str(x[5]) + ' ' + str(x[1]) + ' ' + str(x[2]) + ' ' + str(x[3]) + ' ' + str(
                x[4]) + '\n')
    # cv2.imshow('show', img)
    # cv2.waitKey(0)  # press a key to close
    # cv2.destroyAllWindows()

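Reference for this part: https://blog.csdn.net/qq_38412266/article/details/119559719

Note, however, that in the second-to-last line of both of that article's get_* scripts the hard-coded '0' is wrong and should be str(x[0]), and cv2.rectangle needs integer inputs. You can simply use the versions above.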
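With both the ground truth and the detections in corner format, boxes can be compared by intersection over union (IoU). The sketch below follows the arithmetic used in get_map.py, which adds 1 to each width and height (the inclusive-pixel convention); `iou_voc` is a hypothetical name and the boxes are made up.

```python
def iou_voc(bb, bbgt):
    """IoU of two (left, top, right, bottom) boxes, inclusive-pixel convention."""
    # Corners of the intersection rectangle
    left = max(bb[0], bbgt[0])
    top = max(bb[1], bbgt[1])
    right = min(bb[2], bbgt[2])
    bottom = min(bb[3], bbgt[3])
    iw = right - left + 1
    ih = bottom - top + 1
    if iw <= 0 or ih <= 0:
        return 0.0  # the boxes do not overlap
    area_bb = (bb[2] - bb[0] + 1) * (bb[3] - bb[1] + 1)
    area_gt = (bbgt[2] - bbgt[0] + 1) * (bbgt[3] - bbgt[1] + 1)
    union = area_bb + area_gt - iw * ih
    return iw * ih / union


# Two 10x10 boxes overlapping in a 5x5 corner: 25 / (100 + 100 - 25) = 1/7
overlap = iou_voc((0, 0, 9, 9), (5, 5, 14, 14))
print(round(overlap, 4))
```

A detection only counts as a match when this value reaches the threshold set in the evaluation script.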

IV. For images where YOLOv5 detected nothing and therefore wrote no txt file, remove the matching ground-truth files, then compute mAP
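The removal step can be sketched as follows. This is an assumption about how one might do it, not code from the referenced articles: `drop_unmatched_gt` is a hypothetical helper, it moves unmatched ground-truth files to a backup folder instead of deleting them, and the demo runs on temporary directories.

```python
import shutil
import tempfile
from pathlib import Path


def drop_unmatched_gt(gt_dir, dr_dir, backup_dir):
    """Move every GT .txt without a same-named DR .txt into backup_dir."""
    gt_dir, dr_dir, backup_dir = Path(gt_dir), Path(dr_dir), Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    dr_stems = {p.stem for p in dr_dir.glob("*.txt")}
    moved = []
    for gt_file in sorted(gt_dir.glob("*.txt")):
        if gt_file.stem not in dr_stems:
            shutil.move(str(gt_file), str(backup_dir / gt_file.name))
            moved.append(gt_file.name)
    return moved


# Self-contained demo: img2 has ground truth but no detection file
with tempfile.TemporaryDirectory() as tmp:
    gt, dr, backup = Path(tmp) / "GT", Path(tmp) / "DR", Path(tmp) / "GT_unmatched"
    gt.mkdir()
    dr.mkdir()
    (gt / "img1.txt").write_text("0.0 10 10 50 50\n")
    (gt / "img2.txt").write_text("1.0 20 20 60 60\n")
    (dr / "img1.txt").write_text("0.0 0.9 11 9 49 52\n")
    moved = drop_unmatched_gt(gt, dr, backup)
    remaining = sorted(p.name for p in gt.glob("*.txt"))

print(moved, remaining)
```

Keep in mind that dropping these images also drops their ground-truth objects from the recall denominator, so the objects the model missed entirely are no longer counted as misses.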

Here we simply use:

get_map.py

import glob
import json
import os
import shutil
import operator
import sys
import argparse
import math

import numpy as np

MINOVERLAP = 0.4

parser = argparse.ArgumentParser()
parser.add_argument('-na', '--no-animation', help="no animation is shown.", action="store_true")
parser.add_argument('-np', '--no-plot', help="no plot is shown.", action="store_true")
parser.add_argument('-q', '--quiet', help="minimalistic console output.", action="store_true")
parser.add_argument('-i', '--ignore', nargs='+', type=str, help="ignore a list of classes.")
parser.add_argument('--set-class-iou', nargs='+', type=str, help="set IoU for a specific class.")
args = parser.parse_args()

'''
    0,0 ------> x (width)
     |
     |  (Left,Top)
     |      *_________
     |      |         |
            |         |
     y      |_________|
  (height)            *
                (Right,Bottom)
'''

if args.ignore is None:
    args.ignore = []

specific_iou_flagged = False
if args.set_class_iou is not None:
    specific_iou_flagged = True

os.chdir(os.path.dirname(os.path.abspath(__file__)))

GT_PATH = './GT'
DR_PATH = './DR'
IMG_PATH = './test'

if os.path.exists(IMG_PATH):
    for dirpath, dirnames, files in os.walk(IMG_PATH):
        if not files:
            args.no_animation = True
else:
    args.no_animation = True

show_animation = False
if not args.no_animation:
    try:
        import cv2
        show_animation = True
    except ImportError:
        print("\"opencv-python\" not found, please install to visualize the results.")
        args.no_animation = True

draw_plot = False
if not args.no_plot:
    try:
        import matplotlib.pyplot as plt
        draw_plot = True
    except ImportError:
        print("\"matplotlib\" not found, please install it to get the resulting plots.")
        args.no_plot = True

def log_average_miss_rate(precision, fp_cumsum, num_images):
    """
    log-average miss rate:
        Calculated by averaging miss rates at 9 evenly spaced FPPI points
        between 1e-2 and 1e0, in log-space.

    Note: the caller passes the recall array as the first argument, so
    mr = 1 - precision below is 1 - recall, i.e. the miss rate.

    output:
        lamr | log-average miss rate
        mr | miss rate
        fppi | false positives per image

    references:
        [1] Dollar, Piotr, et al. "Pedestrian Detection: An Evaluation of the
            State of the Art." Pattern Analysis and Machine Intelligence, IEEE
            Transactions on 34.4 (2012): 743 - 761.
    """
    if precision.size == 0:
        lamr = 0
        mr = 1
        fppi = 0
        return lamr, mr, fppi

    fppi = fp_cumsum / float(num_images)
    mr = (1 - precision)

    fppi_tmp = np.insert(fppi, 0, -1.0)
    mr_tmp = np.insert(mr, 0, 1.0)

    ref = np.logspace(-2.0, 0.0, num=9)
    for i, ref_i in enumerate(ref):
        j = np.where(fppi_tmp <= ref_i)[-1][-1]
        ref[i] = mr_tmp[j]

    lamr = math.exp(np.mean(np.log(np.maximum(1e-10, ref))))

    return lamr, mr, fppi


"""
 throw error and exit
"""
def error(msg):
    print(msg)
    sys.exit(0)


"""
 check if the number is a float between 0.0 and 1.0
"""
def is_float_between_0_and_1(value):
    try:
        val = float(value)
        if val > 0.0 and val < 1.0:
            return True
        else:
            return False
    except ValueError:
        return False

"""

Calculate the AP given the recall and precision array

1st) We compute a version of the measured precision/recall curve with

precision monotonically decreasing

2nd) We compute the AP as the area under this curve by numerical integration.

"""

def voc_ap(rec, prec):
    """
    --- Official matlab code VOC2012---
    mrec=[0 ; rec ; 1];
    mpre=[0 ; prec ; 0];
    for i=numel(mpre)-1:-1:1
            mpre(i)=max(mpre(i),mpre(i+1));
    end
    i=find(mrec(2:end)~=mrec(1:end-1))+1;
    ap=sum((mrec(i)-mrec(i-1)).*mpre(i));
    """
    rec.insert(0, 0.0)  # insert 0.0 at beginning of list
    rec.append(1.0)  # insert 1.0 at end of list
    mrec = rec[:]
    prec.insert(0, 0.0)  # insert 0.0 at beginning of list
    prec.append(0.0)  # insert 0.0 at end of list
    mpre = prec[:]
    """
     This part makes the precision monotonically decreasing
        (goes from the end to the beginning)
        matlab: for i=numel(mpre)-1:-1:1
                    mpre(i)=max(mpre(i),mpre(i+1));
    """
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    """
     This part creates a list of indexes where the recall changes
        matlab: i=find(mrec(2:end)~=mrec(1:end-1))+1;
    """
    i_list = []
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            i_list.append(i)  # if it was matlab would be i + 1
    """
     The Average Precision (AP) is the area under the curve
        (numerical integration)
        matlab: ap=sum((mrec(i)-mrec(i-1)).*mpre(i));
    """
    ap = 0.0
    for i in i_list:
        ap += ((mrec[i] - mrec[i - 1]) * mpre[i])
    return ap, mrec, mpre

"""

Convert the lines of a file to a list

"""

def file_lines_to_list(path):
    # open txt file lines to a list
    with open(path) as f:
        content = f.readlines()
    # remove whitespace characters like `\n` at the end of each line
    content = [x.strip() for x in content]
    return content


"""
 Draws text in image
"""
def draw_text_in_image(img, text, pos, color, line_width):
    font = cv2.FONT_HERSHEY_PLAIN
    fontScale = 1
    lineType = 1
    bottomLeftCornerOfText = pos
    cv2.putText(img, text,
                bottomLeftCornerOfText,
                font,
                fontScale,
                color,
                lineType)
    text_width, _ = cv2.getTextSize(text, font, fontScale, lineType)[0]
    return img, (line_width + text_width)


"""
 Plot - adjust axes
"""
def adjust_axes(r, t, fig, axes):
    # get text width for re-scaling
    bb = t.get_window_extent(renderer=r)
    text_width_inches = bb.width / fig.dpi
    # get axis width in inches
    current_fig_width = fig.get_figwidth()
    new_fig_width = current_fig_width + text_width_inches
    propotion = new_fig_width / current_fig_width
    # get axis limit
    x_lim = axes.get_xlim()
    axes.set_xlim([x_lim[0], x_lim[1] * propotion])

"""

Draw plot using Matplotlib

"""

def draw_plot_func(dictionary, n_classes, window_title, plot_title, x_label, output_path, to_show, plot_color,
                   true_p_bar):
    # sort the dictionary by increasing value, into a list of tuples
    sorted_dic_by_value = sorted(dictionary.items(), key=operator.itemgetter(1))
    # unpacking the list of tuples into two lists
    sorted_keys, sorted_values = zip(*sorted_dic_by_value)
    #
    if true_p_bar != "":
        """
         Special case to draw in:
            - green -> TP: True Positives (object detected and matches ground-truth)
            - red -> FP: False Positives (object detected but does not match ground-truth)
            - orange -> FN: False Negatives (object not detected but present in the ground-truth)
        """
        fp_sorted = []
        tp_sorted = []
        for key in sorted_keys:
            fp_sorted.append(dictionary[key] - true_p_bar[key])
            tp_sorted.append(true_p_bar[key])
        plt.barh(range(n_classes), fp_sorted, align='center', color='crimson', label='False Positive')
        plt.barh(range(n_classes), tp_sorted, align='center', color='forestgreen', label='True Positive',
                 left=fp_sorted)
        # add legend
        plt.legend(loc='lower right')
        """
         Write number on side of bar
        """
        fig = plt.gcf()  # gcf - get current figure
        axes = plt.gca()
        r = fig.canvas.get_renderer()
        for i, val in enumerate(sorted_values):
            fp_val = fp_sorted[i]
            tp_val = tp_sorted[i]
            fp_str_val = " " + str(fp_val)
            tp_str_val = fp_str_val + " " + str(tp_val)
            # trick to paint multicolor with offset:
            # first paint everything and then repaint the first number
            t = plt.text(val, i, tp_str_val, color='forestgreen', va='center', fontweight='bold')
            plt.text(val, i, fp_str_val, color='crimson', va='center', fontweight='bold')
            if i == (len(sorted_values) - 1):  # largest bar
                adjust_axes(r, t, fig, axes)
    else:
        plt.barh(range(n_classes), sorted_values, color=plot_color)
        """
         Write number on side of bar
        """
        fig = plt.gcf()  # gcf - get current figure
        axes = plt.gca()
        r = fig.canvas.get_renderer()
        for i, val in enumerate(sorted_values):
            str_val = " " + str(val)  # add a space before
            if val < 1.0:
                str_val = " {0:.2f}".format(val)
            t = plt.text(val, i, str_val, color=plot_color, va='center', fontweight='bold')
            # re-set axes to show number inside the figure
            if i == (len(sorted_values) - 1):  # largest bar
                adjust_axes(r, t, fig, axes)
    # set window title (canvas.set_window_title was removed in Matplotlib 3.6)
    fig.canvas.manager.set_window_title(window_title)
    # write classes in y axis
    tick_font_size = 12
    plt.yticks(range(n_classes), sorted_keys, fontsize=tick_font_size)
    """
     Re-scale height accordingly
    """
    init_height = fig.get_figheight()
    # compute the matrix height in points and inches
    dpi = fig.dpi
    height_pt = n_classes * (tick_font_size * 1.4)  # 1.4 (some spacing)
    height_in = height_pt / dpi
    # compute the required figure height
    top_margin = 0.15  # in percentage of the figure height
    bottom_margin = 0.05  # in percentage of the figure height
    figure_height = height_in / (1 - top_margin - bottom_margin)
    # set new height
    if figure_height > init_height:
        fig.set_figheight(figure_height)
    # set plot title
    plt.title(plot_title, fontsize=14)
    # set axis titles
    # plt.xlabel('classes')
    plt.xlabel(x_label, fontsize='large')
    # adjust size of window
    fig.tight_layout()
    # save the plot
    fig.savefig(output_path)
    # show image
    if to_show:
        plt.show()
    # close the plot
    plt.close()

"""

Create a ".temp_files/" and "results/" directory

"""

TEMP_FILES_PATH = ".temp_files"
if not os.path.exists(TEMP_FILES_PATH):  # if it doesn't exist already
    os.makedirs(TEMP_FILES_PATH)

results_files_path = "results"
if os.path.exists(results_files_path):  # if it exist already
    # reset the results directory
    shutil.rmtree(results_files_path)

os.makedirs(results_files_path)
if draw_plot:
    os.makedirs(os.path.join(results_files_path, "AP"))
    os.makedirs(os.path.join(results_files_path, "F1"))
    os.makedirs(os.path.join(results_files_path, "Recall"))
    os.makedirs(os.path.join(results_files_path, "Precision"))
if show_animation:
    os.makedirs(os.path.join(results_files_path, "images", "detections_one_by_one"))

"""

ground-truth

Load each of the ground-truth files into a temporary ".json" file.

Create a list of all the class names present in the ground-truth (gt_classes).

"""

# get a list with the ground-truth files
ground_truth_files_list = glob.glob(GT_PATH + '/*.txt')
if len(ground_truth_files_list) == 0:
    error("Error: No ground-truth files found!")
ground_truth_files_list.sort()
# dictionary with counter per class
gt_counter_per_class = {}
counter_images_per_class = {}

for txt_file in ground_truth_files_list:
    # print(txt_file)
    file_id = txt_file.split(".txt", 1)[0]
    file_id = os.path.basename(os.path.normpath(file_id))
    # check if there is a correspondent detection-results file
    temp_path = os.path.join(DR_PATH, (file_id + ".txt"))
    if not os.path.exists(temp_path):
        error_msg = "Error. File not found: {}\n".format(temp_path)
        error_msg += "(You can avoid this error message by running extra/intersect-gt-and-dr.py)"
        error(error_msg)
    lines_list = file_lines_to_list(txt_file)
    # create ground-truth dictionary
    bounding_boxes = []
    is_difficult = False
    already_seen_classes = []
    for line in lines_list:
        try:
            if "difficult" in line:
                class_name, left, top, right, bottom, _difficult = line.split()
                is_difficult = True
            else:
                class_name, left, top, right, bottom = line.split()
        except:
            if "difficult" in line:
                line_split = line.split()
                _difficult = line_split[-1]
                bottom = line_split[-2]
                right = line_split[-3]
                top = line_split[-4]
                left = line_split[-5]
                class_name = ""
                for name in line_split[:-5]:
                    class_name += name + " "
                class_name = class_name[:-1]
                is_difficult = True
            else:
                line_split = line.split()
                bottom = line_split[-1]
                right = line_split[-2]
                top = line_split[-3]
                left = line_split[-4]
                class_name = ""
                for name in line_split[:-4]:
                    class_name += name + " "
                class_name = class_name[:-1]

        if class_name in args.ignore:
            continue

        bbox = left + " " + top + " " + right + " " + bottom
        if is_difficult:
            bounding_boxes.append({"class_name": class_name, "bbox": bbox, "used": False, "difficult": True})
            is_difficult = False
        else:
            bounding_boxes.append({"class_name": class_name, "bbox": bbox, "used": False})
            if class_name in gt_counter_per_class:
                gt_counter_per_class[class_name] += 1
            else:
                gt_counter_per_class[class_name] = 1

            if class_name not in already_seen_classes:
                if class_name in counter_images_per_class:
                    counter_images_per_class[class_name] += 1
                else:
                    counter_images_per_class[class_name] = 1
                already_seen_classes.append(class_name)

    with open(TEMP_FILES_PATH + "/" + file_id + "_ground_truth.json", 'w') as outfile:
        json.dump(bounding_boxes, outfile)

gt_classes = list(gt_counter_per_class.keys())
gt_classes = sorted(gt_classes)
n_classes = len(gt_classes)

"""

Check format of the flag --set-class-iou (if used)

e.g. check if class exists

"""

if specific_iou_flagged:
    n_args = len(args.set_class_iou)
    error_msg = \
        '\n --set-class-iou [class_1] [IoU_1] [class_2] [IoU_2] [...]'
    if n_args % 2 != 0:
        error('Error, missing arguments. Flag usage:' + error_msg)
    # [class_1] [IoU_1] [class_2] [IoU_2]
    # specific_iou_classes = ['class_1', 'class_2']
    specific_iou_classes = args.set_class_iou[::2]  # even
    # iou_list = ['IoU_1', 'IoU_2']
    iou_list = args.set_class_iou[1::2]  # odd
    if len(specific_iou_classes) != len(iou_list):
        error('Error, missing arguments. Flag usage:' + error_msg)
    for tmp_class in specific_iou_classes:
        if tmp_class not in gt_classes:
            error('Error, unknown class \"' + tmp_class + '\". Flag usage:' + error_msg)
    for num in iou_list:
        if not is_float_between_0_and_1(num):
            error('Error, IoU must be between 0.0 and 1.0. Flag usage:' + error_msg)

"""

detection-results

Load each of the detection-results files into a temporary ".json" file.

"""

dr_files_list = glob.glob(DR_PATH + '/*.txt')
dr_files_list.sort()

for class_index, class_name in enumerate(gt_classes):
    bounding_boxes = []
    for txt_file in dr_files_list:
        file_id = txt_file.split(".txt", 1)[0]
        file_id = os.path.basename(os.path.normpath(file_id))
        temp_path = os.path.join(GT_PATH, (file_id + ".txt"))
        if class_index == 0:
            if not os.path.exists(temp_path):
                error_msg = "Error. File not found: {}\n".format(temp_path)
                error_msg += "(You can avoid this error message by running extra/intersect-gt-and-dr.py)"
                error(error_msg)
        lines = file_lines_to_list(txt_file)
        for line in lines:
            try:
                tmp_class_name, confidence, left, top, right, bottom = line.split()
            except:
                line_split = line.split()
                bottom = line_split[-1]
                right = line_split[-2]
                top = line_split[-3]
                left = line_split[-4]
                confidence = line_split[-5]
                tmp_class_name = ""
                for name in line_split[:-5]:
                    tmp_class_name += name + " "
                tmp_class_name = tmp_class_name[:-1]

            if tmp_class_name == class_name:
                bbox = left + " " + top + " " + right + " " + bottom
                bounding_boxes.append({"confidence": confidence, "file_id": file_id, "bbox": bbox})

    bounding_boxes.sort(key=lambda x: float(x['confidence']), reverse=True)
    with open(TEMP_FILES_PATH + "/" + class_name + "_dr.json", 'w') as outfile:
        json.dump(bounding_boxes, outfile)

"""

Calculate the AP for each class

"""

sum_AP = 0.0

ap_dictionary = {}

lamr_dictionary = {}

with open(results_files_path + "/results.txt", 'w') as results_file:

results_file.write("# AP and precision/recall per class\n")

count_true_positives = {}

for class_index, class_name in enumerate(gt_classes):

count_true_positives[class_name] = 0

"""

Load detection-results of that class

"""

dr_file = TEMP_FILES_PATH + "/" + class_name + "_dr.json"

dr_data = json.load(open(dr_file))

"""

Assign detection-results to ground-truth objects

"""

nd = len(dr_data)

tp = [0] * nd

fp = [0] * nd

score = [0] * nd

score05_idx = 0

for idx, detection in enumerate(dr_data):

file_id = detection["file_id"]

score[idx] = float(detection["confidence"])

if score[idx] > 0.5:

score05_idx = idx

if show_animation:

ground_truth_img = glob.glob1(IMG_PATH, file_id + ".*")

if len(ground_truth_img) == 0:

error("Error. Image not found with id: " + file_id)

elif len(ground_truth_img) > 1:

error("Error. Multiple image with id: " + file_id)

else:

img = cv2.imread(IMG_PATH + "/" + ground_truth_img[0])

img_cumulative_path = results_files_path + "/images/" + ground_truth_img[0]

if os.path.isfile(img_cumulative_path):

img_cumulative = cv2.imread(img_cumulative_path)

else:

img_cumulative = img.copy()

bottom_border = 60

BLACK = [0, 0, 0]

img = cv2.copyMakeBorder(img, 0, bottom_border, 0, 0, cv2.BORDER_CONSTANT, value=BLACK)

gt_file = TEMP_FILES_PATH + "/" + file_id + "_ground_truth.json"

ground_truth_data = json.load(open(gt_file))

ovmax = -1

gt_match = -1

bb = [float(x) for x in detection["bbox"].split()]

for obj in ground_truth_data:

if obj["class_name"] == class_name:

bbgt = [float(x) for x in obj["bbox"].split()]

bi = [max(bb[0], bbgt[0]), max(bb[1], bbgt[1]), min(bb[2], bbgt[2]), min(bb[3], bbgt[3])]

iw = bi[2] - bi[0] + 1

ih = bi[3] - bi[1] + 1

if iw > 0 and ih > 0:

# compute overlap (IoU) = area of intersection / area of union

ua = (bb[2] - bb[0] + 1) * (bb[3] - bb[1] + 1) + (bbgt[2] - bbgt[0]

+ 1) * (bbgt[3] - bbgt[1] + 1) - iw * ih

ov = iw * ih / ua

if ov > ovmax:

ovmax = ov

gt_match = obj

if show_animation:

status = "NO MATCH FOUND!"

min_overlap = MINOVERLAP

if specific_iou_flagged:

if class_name in specific_iou_classes:

index = specific_iou_classes.index(class_name)

min_overlap = float(iou_list[index])

if ovmax >= min_overlap:

if "difficult" not in gt_match:

if not bool(gt_match["used"]):

tp[idx] = 1

gt_match["used"] = True

count_true_positives[class_name] += 1

with open(gt_file, 'w') as f:

f.write(json.dumps(ground_truth_data))

if show_animation:

status = "MATCH!"

else:

fp[idx] = 1

if show_animation:

status = "REPEATED MATCH!"

else:

fp[idx] = 1

if ovmax > 0:

status = "INSUFFICIENT OVERLAP"

"""

Draw image to show animation

"""

if show_animation:

height, widht = img.shape[:2]

# colors (OpenCV works with BGR)

white = (255, 255, 255)

light_blue = (255, 200, 100)

green = (0, 255, 0)

light_red = (30, 30, 255)

# 1st line

margin = 10

v_pos = int(height - margin - (bottom_border / 2.0))

text = "Image: " + ground_truth_img[0] + " "

img, line_width = draw_text_in_image(img, text, (margin, v_pos), white, 0)

text = "Class [" + str(class_index) + "/" + str(n_classes) + "]: " + class_name + " "

img, line_width = draw_text_in_image(img, text, (margin + line_width, v_pos), light_blue, line_width)

if ovmax != -1:

color = light_red

if status == "INSUFFICIENT OVERLAP":

text = "IoU: {0:.2f}% ".format(ovmax * 100) + "< {0:.2f}% ".format(min_overlap * 100)

else:

text = "IoU: {0:.2f}% ".format(ovmax * 100) + ">= {0:.2f}% ".format(min_overlap * 100)

color = green

img, _ = draw_text_in_image(img, text, (margin + line_width, v_pos), color, line_width)

# 2nd line

v_pos += int(bottom_border / 2.0)

rank_pos = str(idx + 1) # rank position (idx starts at 0)

text = "Detection #rank: " + rank_pos + " confidence: {0:.2f}% ".format(

float(detection["confidence"]) * 100)

img, line_width = draw_text_in_image(img, text, (margin, v_pos), white, 0)

color = light_red

if status == "MATCH!":

color = green

text = "Result: " + status + " "

img, line_width = draw_text_in_image(img, text, (margin + line_width, v_pos), color, line_width)

font = cv2.FONT_HERSHEY_SIMPLEX

if ovmax > 0: # if there is intersections between the bounding-boxes

bbgt = [int(round(float(x))) for x in gt_match["bbox"].split()]

cv2.rectangle(img, (bbgt[0], bbgt[1]), (bbgt[2], bbgt[3]), light_blue, 2)

cv2.rectangle(img_cumulative, (bbgt[0], bbgt[1]), (bbgt[2], bbgt[3]), light_blue, 2)

cv2.putText(img_cumulative, class_name, (bbgt[0], bbgt[1] - 5), font, 0.6, light_blue, 1,

cv2.LINE_AA)

bb = [int(i) for i in bb]

cv2.rectangle(img, (bb[0], bb[1]), (bb[2], bb[3]), color, 2)

cv2.rectangle(img_cumulative, (bb[0], bb[1]), (bb[2], bb[3]), color, 2)

cv2.putText(img_cumulative, class_name, (bb[0], bb[1] - 5), font, 0.6, color, 1, cv2.LINE_AA)

# show image

cv2.imshow("Animation", img)

cv2.waitKey(20) # show for 20 ms

# save image to results

output_img_path = results_files_path + "/images/detections_one_by_one/" + class_name + "_detection" + str(

idx) + ".jpg"

cv2.imwrite(output_img_path, img)

# save the image with all the objects drawn to it

cv2.imwrite(img_cumulative_path, img_cumulative)

cumsum = 0

for idx, val in enumerate(fp):

fp[idx] += cumsum

cumsum += val

cumsum = 0

for idx, val in enumerate(tp):

tp[idx] += cumsum

cumsum += val

rec = tp[:]

for idx, val in enumerate(tp):

rec[idx] = float(tp[idx]) / np.maximum(gt_counter_per_class[class_name], 1)

prec = tp[:]

for idx, val in enumerate(tp):

prec[idx] = float(tp[idx]) / np.maximum((fp[idx] + tp[idx]), 1)

ap, mrec, mprec = voc_ap(rec[:], prec[:])

F1 = np.array(rec) * np.array(prec) * 2 / np.where((np.array(prec) + np.array(rec)) == 0, 1,

(np.array(prec) + np.array(rec)))

sum_AP += ap

text = "{0:.2f}%".format(ap * 100) + " = " + class_name + " AP " # class_name + " AP = {0:.2f}%".format(ap*100)

if len(prec) > 0:

F1_text = "{0:.2f}".format(F1[score05_idx]) + " = " + class_name + " F1 "

Recall_text = "{0:.2f}%".format(rec[score05_idx] * 100) + " = " + class_name + " Recall "

Precision_text = "{0:.2f}%".format(prec[score05_idx] * 100) + " = " + class_name + " Precision "

else:

F1_text = "0.00" + " = " + class_name + " F1 "

Recall_text = "0.00%" + " = " + class_name + " Recall "

Precision_text = "0.00%" + " = " + class_name + " Precision "

rounded_prec = ['%.2f' % elem for elem in prec]

rounded_rec = ['%.2f' % elem for elem in rec]

results_file.write(text + "\n Precision: " + str(rounded_prec) + "\n Recall :" + str(rounded_rec) + "\n\n")

if not args.quiet:

if len(prec) > 0:

print(text + "\t||\tscore_threhold=0.5 : " + "F1=" + "{0:.2f}".format(F1[score05_idx]) \

+ " ; Recall=" + "{0:.2f}%".format(rec[score05_idx] * 100) + " ; Precision=" + "{0:.2f}%".format(

prec[score05_idx] * 100))

else:

print(text + "\t||\tscore_threhold=0.5 : F1=0.00% ; Recall=0.00% ; Precision=0.00%")

ap_dictionary[class_name] = ap

n_images = counter_images_per_class[class_name]

lamr, mr, fppi = log_average_miss_rate(np.array(rec), np.array(fp), n_images)

lamr_dictionary[class_name] = lamr

"""

Draw plot

"""

if draw_plot:

plt.plot(rec, prec, '-o')

area_under_curve_x = mrec[:-1] + [mrec[-2]] + [mrec[-1]]

area_under_curve_y = mprec[:-1] + [0.0] + [mprec[-1]]

plt.fill_between(area_under_curve_x, 0, area_under_curve_y, alpha=0.2, edgecolor='r')

fig = plt.gcf()

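# FigureCanvas.set_window_title was removed in Matplotlib 3.6; the manager method is the replacement
fig.canvas.manager.set_window_title('AP ' + class_name)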

plt.title('class: ' + text)

plt.xlabel('Recall')

plt.ylabel('Precision')

axes = plt.gca()

axes.set_xlim([0.0, 1.0])

axes.set_ylim([0.0, 1.05])

fig.savefig(results_files_path + "/AP/" + class_name + ".png")

plt.cla()

plt.plot(score, F1, "-", color='orangered')

plt.title('class: ' + F1_text + "\nscore_threshold=0.5")

plt.xlabel('Score_Threshold')

plt.ylabel('F1')

axes = plt.gca()

axes.set_xlim([0.0, 1.0])

axes.set_ylim([0.0, 1.05])

fig.savefig(results_files_path + "/F1/" + class_name + ".png")

plt.cla()

plt.plot(score, rec, "-H", color='gold')

plt.title('class: ' + Recall_text + "\nscore_threshold=0.5")

plt.xlabel('Score_Threshold')

plt.ylabel('Recall')

axes = plt.gca()

axes.set_xlim([0.0, 1.0])

axes.set_ylim([0.0, 1.05])

fig.savefig(results_files_path + "/Recall/" + class_name + ".png")

plt.cla()

plt.plot(score, prec, "-s", color='palevioletred')

plt.title('class: ' + Precision_text + "\nscore_threshold=0.5")

plt.xlabel('Score_Threshold')

plt.ylabel('Precision')

axes = plt.gca()

axes.set_xlim([0.0, 1.0])

axes.set_ylim([0.0, 1.05])

fig.savefig(results_files_path + "/Precision/" + class_name + ".png")

plt.cla()

if show_animation:

cv2.destroyAllWindows()

results_file.write("\n# mAP of all classes\n")

mAP = sum_AP / n_classes

text = "mAP = {0:.2f}%".format(mAP * 100)

results_file.write(text + "\n")

print(text)

# remove the temp_files directory

shutil.rmtree(TEMP_FILES_PATH)

"""

Count total of detection-results

"""

# iterate through all the files

det_counter_per_class = {}

for txt_file in dr_files_list:

# get lines to list

lines_list = file_lines_to_list(txt_file)

for line in lines_list:

class_name = line.split()[0]

# check if class is in the ignore list, if yes skip

if class_name in args.ignore:

continue

# count that object

if class_name in det_counter_per_class:

det_counter_per_class[class_name] += 1

else:

# if class didn't exist yet

det_counter_per_class[class_name] = 1

# print(det_counter_per_class)

dr_classes = list(det_counter_per_class.keys())

"""

Plot the total number of occurrences of each class in the ground-truth

"""

if draw_plot:

window_title = "ground-truth-info"

plot_title = "ground-truth\n"

plot_title += "(" + str(len(ground_truth_files_list)) + " files and " + str(n_classes) + " classes)"

x_label = "Number of objects per class"

output_path = results_files_path + "/ground-truth-info.png"

to_show = False

plot_color = 'forestgreen'

draw_plot_func(

gt_counter_per_class,

n_classes,

window_title,

plot_title,

x_label,

output_path,

to_show,

plot_color,

'',

)

"""

Write number of ground-truth objects per class to results.txt

"""

with open(results_files_path + "/results.txt", 'a') as results_file:

results_file.write("\n# Number of ground-truth objects per class\n")

for class_name in sorted(gt_counter_per_class):

results_file.write(class_name + ": " + str(gt_counter_per_class[class_name]) + "\n")

"""

Finish counting true positives

"""

for class_name in dr_classes:

# if class exists in detection-result but not in ground-truth then there are no true positives in that class

if class_name not in gt_classes:

count_true_positives[class_name] = 0

# print(count_true_positives)

"""

Plot the total number of occurrences of each class in the "detection-results" folder

"""

if draw_plot:

window_title = "detection-results-info"

# Plot title

plot_title = "detection-results\n"

plot_title += "(" + str(len(dr_files_list)) + " files and "

count_non_zero_values_in_dictionary = sum(int(x) > 0 for x in list(det_counter_per_class.values()))

plot_title += str(count_non_zero_values_in_dictionary) + " detected classes)"

# end Plot title

x_label = "Number of objects per class"

output_path = results_files_path + "/detection-results-info.png"

to_show = False

plot_color = 'forestgreen'

true_p_bar = count_true_positives

draw_plot_func(

det_counter_per_class,

len(det_counter_per_class),

window_title,

plot_title,

x_label,

output_path,

to_show,

plot_color,

true_p_bar

)

"""

Write number of detected objects per class to results.txt

"""

with open(results_files_path + "/results.txt", 'a') as results_file:

results_file.write("\n# Number of detected objects per class\n")

for class_name in sorted(dr_classes):

n_det = det_counter_per_class[class_name]

text = class_name + ": " + str(n_det)

text += " (tp:" + str(count_true_positives[class_name]) + ""

text += ", fp:" + str(n_det - count_true_positives[class_name]) + ")\n"

results_file.write(text)

"""

Draw log-average miss rate plot (Show lamr of all classes in decreasing order)

"""

if draw_plot:

window_title = "lamr"

plot_title = "log-average miss rate"

x_label = "log-average miss rate"

output_path = results_files_path + "/lamr.png"

to_show = False

plot_color = 'royalblue'

draw_plot_func(

lamr_dictionary,

n_classes,

window_title,

plot_title,

x_label,

output_path,

to_show,

plot_color,

""

)

"""

Draw mAP plot (Show AP's of all classes in decreasing order)

"""

if draw_plot:

window_title = "mAP"

plot_title = "mAP = {0:.2f}%".format(mAP * 100)

x_label = "Average Precision"

output_path = results_files_path + "/mAP.png"

to_show = True

plot_color = 'royalblue'

draw_plot_func(

ap_dictionary,

n_classes,

window_title,

plot_title,

x_label,

output_path,

to_show,

plot_color,

""

)
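The core of the listing above is the step that turns per-detection TP/FP flags into cumulative counts, then into precision, recall and AP. Below is a minimal, self-contained sketch of that step with NumPy. The names `precision_recall_ap`, `tp_flags` and `n_gt` are made up for illustration and are not part of get_map.py. The sketch also assumes that AP is the area under the all-point interpolated precision-recall curve (the usual VOC 2010+ definition); the `voc_ap` function itself is not shown in this part of the article, so treat that as an assumption.

```python
import numpy as np


def precision_recall_ap(tp_flags, n_gt):
    """Illustrative helper (hypothetical name, not from get_map.py).

    tp_flags: 1 for a true positive, 0 for a false positive, with the
              detections of one class already sorted by descending confidence.
    n_gt:     number of ground-truth boxes of that class.
    """
    tp_flags = np.asarray(tp_flags, dtype=float)
    fp_flags = 1.0 - tp_flags

    # Cumulative counts, equivalent to the two "cumsum" loops in the listing.
    tp_cum = np.cumsum(tp_flags)
    fp_cum = np.cumsum(fp_flags)

    # Guard against division by zero in the same way as np.maximum(..., 1).
    recall = tp_cum / max(n_gt, 1)
    precision = tp_cum / np.maximum(tp_cum + fp_cum, 1)

    # Assumption: all-point interpolation. Pad the curve with sentinel
    # values, then make precision non-increasing as recall grows.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]

    # Sum the rectangle areas at every point where recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    ap = float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
    return precision, recall, ap


if __name__ == "__main__":
    # Three detections (TP, FP, TP) against two ground-truth boxes.
    prec, rec, ap = precision_recall_ap([1, 0, 1], n_gt=2)
    print("precision:", prec)
    print("recall:   ", rec)
    print("AP:        {:.4f}".format(ap))
```

mAP is then the mean of the per-class AP values, which is what `sum_AP / n_classes` computes in the listing.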

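The code may fail with an error saying that intersect_gt_and_dr.py is missing. As mentioned above, detect.py writes no txt file for an image in which nothing is detected, so the ground-truth files and the detection-result files no longer match one-to-one.

Use the script below (this is intersect_gt_and_dr.py). After it has finished, run get_map.py above again.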

import sys

import os

import glob

## This script ensures same number of files in ground-truth and detection-results folder.

## When you encounter file not found error, it's usually because you have

## mismatched numbers of ground-truth and detection-results files.

## You can use this script to move ground-truth and detection-results files that are

## not in the intersection into a backup folder (backup_no_matches_found).

## This will retain only files that have the same name in both folders.

# make sure that the cwd() in the beginning is the location of the python script (so that every path makes sense)

# raw strings, so the backslashes in Windows paths are not treated as escape sequences
GT_PATH = r'F:\shiyanshi\yolov5-master\GT'

DR_PATH = r'F:\shiyanshi\yolov5-master\DR'

backup_folder = 'backup_no_matches_found' # must end without slash

os.chdir(GT_PATH)

gt_files = glob.glob('*.txt')

if len(gt_files) == 0:

    print("Error: no .txt files found in", GT_PATH)

    sys.exit()

os.chdir(DR_PATH)

dr_files = glob.glob('*.txt')

if len(dr_files) == 0:

    print("Error: no .txt files found in", DR_PATH)

    sys.exit()

gt_files = set(gt_files)

dr_files = set(dr_files)

print('total ground-truth files:', len(gt_files))

print('total detection-results files:', len(dr_files))

print()

gt_backup = gt_files - dr_files

dr_backup = dr_files - gt_files

def backup(src_folder, backup_files, backup_folder):

    # non-intersection files (txt format) will be moved to a backup folder

    if not backup_files:

        print('No backup required for', src_folder)

        return

    os.chdir(src_folder)

    ## create the backup dir if it doesn't exist already

    if not os.path.exists(backup_folder):

        os.makedirs(backup_folder)

    for file in backup_files:

        os.rename(file, backup_folder + '/' + file)

backup(GT_PATH, gt_backup, backup_folder)

backup(DR_PATH, dr_backup, backup_folder)

if gt_backup:

    print('total ground-truth backup files:', len(gt_backup))

if dr_backup:

    print('total detection-results backup files:', len(dr_backup))

intersection = gt_files & dr_files

print('total intersected files:', len(intersection))

print("Intersection completed!")

The code above is adapted from: https://blog.csdn.net/qq_38412266/article/details/119559719
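Because intersect_gt_and_dr.py moves files around, it can be useful to first check which files would be affected. The following is a small dry-run sketch of the same set-difference idea that only reports the unmatched file names and moves nothing. The function name `unmatched_label_files` and the `GT` / `DR` folder names in the usage part are placeholders for illustration; replace them with your own paths.

```python
from pathlib import Path


def unmatched_label_files(gt_dir, dr_dir):
    """Return (gt_only, dr_only): the .txt names present in one folder only.

    Hypothetical helper for illustration; it reads directory listings and
    does not move or delete anything.
    """
    gt_names = {p.name for p in Path(gt_dir).glob("*.txt")}
    dr_names = {p.name for p in Path(dr_dir).glob("*.txt")}
    return sorted(gt_names - dr_names), sorted(dr_names - gt_names)


if __name__ == "__main__":
    # Placeholder folder names; point these at your own GT and DR folders.
    gt_only, dr_only = unmatched_label_files("GT", "DR")
    print("ground-truth files without a detection file:", gt_only)
    print("detection files without a ground-truth file:", dr_only)
```

Files listed in the first result are typically images where YOLOv5 detected nothing. Note that moving them to the backup folder excludes their ground-truth boxes from the evaluation, so the reported recall and mAP cover only the images that produced at least one detection.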