Leveling Up with TensorFlow: MCNN (a crowd density estimation algorithm)

- Algorithm overview

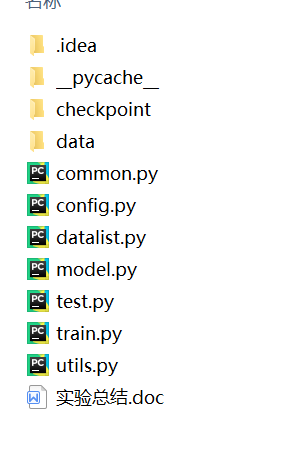

- Project layout

- common

- config

- datalist

- model

- utils

- train

- test

- Dataset

- Model_result

Algorithm overview

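This algorithm was my graduation project during my master's studies. The project was inspired by the MCNN algorithm of the time, and admittedly it was nothing special. Back at school I followed a PyTorch version on GitHub. Now that I have thoroughly reviewed TensorFlow 1.x, I decided to implement it once more in TensorFlow and see whether I could reproduce the same results.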

Project layout

common

import tensorflow as tf


def convolutional(input_data, filters_shape, trainable, name, downsample=False, activate=True, bn=True):
    with tf.variable_scope(name):
        if downsample:
            # Pad explicitly, then use a stride-2 VALID convolution to halve height and width.
            pad_h, pad_w = (filters_shape[0] - 2) // 2 + 1, (filters_shape[1] - 2) // 2 + 1
            paddings = tf.constant([[0, 0], [pad_h, pad_h], [pad_w, pad_w], [0, 0]])
            input_data = tf.pad(input_data, paddings, "CONSTANT")
            strides = (1, 2, 2, 1)
            padding = "VALID"
        else:
            strides = (1, 1, 1, 1)
            padding = "SAME"
        weight = tf.get_variable(name="weight", dtype=tf.float32, trainable=True, shape=filters_shape,
                                 initializer=tf.random_normal_initializer(stddev=0.01))
        conv = tf.nn.conv2d(input=input_data, filter=weight, strides=strides, padding=padding)
        if bn:
            conv = tf.layers.batch_normalization(conv, beta_initializer=tf.zeros_initializer(),
                                                 gamma_initializer=tf.ones_initializer(),
                                                 moving_mean_initializer=tf.zeros_initializer(),
                                                 moving_variance_initializer=tf.ones_initializer(),
                                                 trainable=trainable)
        else:
            bias = tf.get_variable(name="bias", shape=filters_shape[-1], trainable=True, dtype=tf.float32,
                                   initializer=tf.constant_initializer(0.0))
            conv = tf.nn.bias_add(conv, bias)
        if activate:
            conv = tf.nn.leaky_relu(conv, alpha=0.1)
    # The original listing never returned the result.
    return conv


def variables_weights(shape, name):
    # shape is [kernel_h, kernel_w, in_channels, out_channels].
    in_num = shape[0] * shape[1] * shape[2]
    out_num = shape[3]
    with tf.variable_scope(name):
        stddev = (2 / (in_num + out_num)) ** 0.5
        init = tf.random_normal_initializer(stddev=stddev)
        weights = tf.get_variable('weights', initializer=init, shape=shape)
    return weights


def variables_biases(shape, name):
    with tf.variable_scope(name):
        init = tf.constant_initializer(0.1)
        biases = tf.get_variable('biases', initializer=init, shape=shape)
    return biases


def conv_layer(bottom, shape, name):
    # Stride-1 SAME convolution + bias + ReLU.
    with tf.name_scope(name):
        kernel = variables_weights(shape, name)
        conv = tf.nn.conv2d(bottom, kernel, strides=[1, 1, 1, 1], padding='SAME')
        bias = variables_biases(shape[3], name)
        biases = tf.nn.bias_add(conv, bias)
        conv_out = tf.nn.relu(biases)
    return conv_out


def max_pool_2x2(bottom, name):
    with tf.name_scope(name):
        pool = tf.nn.max_pool(bottom, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    return pool


def fc_layer(bottom, out_num, name, relu=False):
    bottom_shape = bottom.get_shape().as_list()
    if name == 'fc3':
        # Flatten the feature map before the first fully connected layer.
        in_num = bottom_shape[1] * bottom_shape[2] * bottom_shape[3]
        bottom = tf.reshape(bottom, shape=(-1, in_num))
    else:
        in_num = bottom_shape[1]
    with tf.name_scope(name):
        weights = fc_weights(in_num, out_num, name)
        biases = variables_biases(out_num, name)
        output = tf.matmul(bottom, weights) + biases
        if relu:
            output = tf.nn.relu(output)
    return output


def fc_weights(in_num, out_num, name):
    # The stray `self` parameter is removed: this is a module-level function.
    with tf.variable_scope(name):
        stddev = (2 / (in_num + out_num)) ** 0.5
        init = tf.random_normal_initializer(stddev=stddev)
        return tf.get_variable('weights', initializer=init, shape=[in_num, out_num])
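Two bits of arithmetic in `common` are easy to check by hand: the initializer standard deviation in `variables_weights`, and the explicit padding in the `downsample` branch of `convolutional`. The sketch below reproduces both in plain Python. The helper names `xavier_stddev` and `downsample_output_size` are made up for illustration and are not part of the project.

```python
import math


def xavier_stddev(shape):
    """Stddev formula from variables_weights for a [kh, kw, c_in, c_out] kernel."""
    kh, kw, c_in, c_out = shape
    in_num = kh * kw * c_in
    return math.sqrt(2.0 / (in_num + c_out))


def downsample_output_size(size, kernel):
    """Output length along one axis for the downsample branch of convolutional.

    The input is padded by `pad` on both sides, then a stride-2 VALID
    convolution with the given kernel size is applied.
    """
    pad = (kernel - 2) // 2 + 1
    padded = size + 2 * pad
    return (padded - kernel) // 2 + 1


# First layer of the large column: a 9x9 kernel, 1 input channel, 16 outputs.
print(xavier_stddev([9, 9, 1, 16]))
# A 3x3 downsampling convolution halves a 64-pixel axis.
print(downsample_output_size(64, 3))
```

For odd kernel sizes the padding works out to `(kernel - 1) / 2` per side, so the output length is the input length divided by two, rounded up.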

config

from easydict import EasyDict

__C = EasyDict()
cfg = __C

__C.train_epoch = 100
__C.train_batch_size = 1
__C.train_learning_rate = 0.00001
__C.train_momentum = 0.9
__C.train_data_path = "./data/shanghaitech_part_A/train/img"
__C.train_data_gt_path = "./data/shanghaitech_part_A/train/den"

__C.test_data_path = "./data/shanghaitech_part_A/test/img"
__C.test_data_gt_path = "./data/shanghaitech_part_A/test/den"
__C.test_batch_size = 1
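The config is just attribute-style access to a flat set of `train_*` and `test_*` values, which `Dataset` later picks from depending on the split. Here is a small sketch of that selection logic. It uses `types.SimpleNamespace` from the standard library as a stand-in for `EasyDict` so it runs without extra packages, and `select_split` is a hypothetical helper, not something in the project.

```python
from types import SimpleNamespace

# Stand-in for the EasyDict config; only the fields Dataset reads are included.
cfg = SimpleNamespace(
    train_batch_size=1,
    train_data_path="./data/shanghaitech_part_A/train/img",
    train_data_gt_path="./data/shanghaitech_part_A/train/den",
    test_batch_size=1,
    test_data_path="./data/shanghaitech_part_A/test/img",
    test_data_gt_path="./data/shanghaitech_part_A/test/den",
)


def select_split(config, data_type):
    """Return the settings for one split; anything other than 'train' means test."""
    prefix = "train" if data_type == "train" else "test"
    return {
        "batch_size": getattr(config, prefix + "_batch_size"),
        "data_path": getattr(config, prefix + "_data_path"),
        "gt_path": getattr(config, prefix + "_data_gt_path"),
    }


print(select_split(cfg, "test"))
```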

datalist

import numpy as np
import pandas as pd
import os
import cv2
import random
from config import cfg
import tensorflow as tf
from utils import image_preprocess, label_process


def normalization(data):
    # Min-max scale the image to [0, 1].
    _range = np.max(data) - np.min(data)
    return (data - np.min(data)) / _range


class Dataset(object):
    def __init__(self, data_type, shuffle=True):
        self.data_type = data_type
        self.batch_size = cfg.train_batch_size if data_type == 'train' else cfg.test_batch_size
        self.data_path = cfg.train_data_path if data_type == "train" else cfg.test_data_path
        self.gt_path = cfg.train_data_gt_path if data_type == "train" else cfg.test_data_gt_path
        self.data_files = [filename for filename in os.listdir(self.data_path) if
                           os.path.isfile(os.path.join(self.data_path, filename))]
        self.data_files.sort()  # sort by filename
        self.down_sampling = False
        if shuffle:
            # Only the seed is set here; the sample order itself is never shuffled.
            random.seed(2468)
        self.num_samples = len(self.data_files)
        self.num_batches = int(np.ceil(self.num_samples / self.batch_size))
        self.batch_count = 0
        self.id_list = range(0, self.num_samples)
        self.blob_list = self.preload_data()

    def preload_data(self):
        print(self.data_type, "data preload will cost a long time, please be patient....")
        blob_list = {}
        idx = 0
        for fname in self.data_files:
            # Read as grayscale and round height/width down to a multiple of 4.
            img = cv2.imread(os.path.join(self.data_path, fname), 0)
            img = img.astype(np.float32, copy=False)
            ht = img.shape[0]
            wd = img.shape[1]
            ht_1 = (ht // 4) * 4
            wd_1 = (wd // 4) * 4
            img = cv2.resize(img, (wd_1, ht_1))
            img = img.reshape((1, img.shape[0], img.shape[1], 1))
            den = pd.read_csv(os.path.join(self.gt_path, os.path.splitext(fname)[0] + '.csv'), sep=',',
                              header=None).values
            den = den.astype(np.float32, copy=False)
            if self.down_sampling:
                wd_1 = wd_1 // 4
                ht_1 = ht_1 // 4
                den = cv2.resize(den, (wd_1, ht_1))
                # Rescale by the area ratio so the density map still sums to the head count.
                den = den * ((wd * ht) / (wd_1 * ht_1))
            else:
                den = cv2.resize(den, (wd_1, ht_1))
                den = den * ((wd * ht) / (wd_1 * ht_1))
            den = den.reshape((1, den.shape[0], den.shape[1], 1))
            blob = {}
            blob['data'] = img
            blob['gt_density'] = den
            blob['fname'] = fname
            blob_list[idx] = blob
            idx = idx + 1
        print(self.data_type, "Complete load data")
        return blob_list

    def __iter__(self):
        return self

    def __next__(self):
        with tf.device('/gpu:1'):
            num = 0
            if self.batch_count < self.num_batches:
                while num < self.batch_size:
                    index = self.batch_count * self.batch_size + num
                    if index >= self.num_samples:
                        index -= self.num_samples
                    label = self.blob_list[index]['gt_density']
                    image = normalization(self.blob_list[index]['data'])
                    num += 1
                self.batch_count += 1
                return image, label
            else:
                self.batch_count = 0
                raise StopIteration

    def __len__(self):
        return self.num_batches


if __name__ == "__main__":
    Dataset('test')
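The part of `preload_data` worth spelling out is why the density map is multiplied by `(wd * ht) / (wd_1 * ht_1)` after resizing: a density map sums to the head count, and resizing changes the number of pixels, so the values have to be rescaled by the area ratio to keep the count. Below is a toy sketch in plain Python. It uses a simple block mean as a stand-in for `cv2.resize` (which interpolates differently), and both helper names are made up for illustration.

```python
def round_down_to_multiple(n, m=4):
    """Same rounding as (ht // 4) * 4 in preload_data."""
    return (n // m) * m


def downsample_density(den, factor):
    """Shrink a 2-D density map by `factor`, keeping its sum (the head count).

    Each output pixel is the mean of a factor x factor block (the stand-in
    for cv2.resize), multiplied by the area ratio factor * factor.
    """
    h, w = len(den), len(den[0])
    area_ratio = factor * factor
    out = []
    for i in range(0, h, factor):
        row = []
        for j in range(0, w, factor):
            block = [den[y][x] for y in range(i, i + factor) for x in range(j, j + factor)]
            row.append(sum(block) / len(block) * area_ratio)
        out.append(row)
    return out


density = [
    [0.0, 0.5, 0.0, 0.0],
    [0.5, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.25, 0.25],
    [0.0, 0.0, 0.25, 0.25],
]
small = downsample_density(density, 2)
print(round_down_to_multiple(1023))       # height or width rounded for the network
print(sum(map(sum, density)), sum(map(sum, small)))  # both sums are the head count
```

Without the area-ratio factor the small map would sum to a quarter of the original count.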

model

import tensorflow as tf
from common import *


class MCNN(object):
    def __init__(self, input_data, input_label):
        self.prediction = self.build_model(input_data)
        self.MSEloss = self.compute_loss(input_label)

    def build_model(self, input_data):
        # Three parallel columns with large, medium and small kernels.
        with tf.variable_scope("large"):
            large_conv1 = conv_layer(input_data, [9, 9, 1, 16], name='conv1')
            large_conv2 = conv_layer(large_conv1, [7, 7, 16, 32], name='conv2')
            large_conv2_maxpool = max_pool_2x2(large_conv2, name='pool1')
            large_conv3 = conv_layer(large_conv2_maxpool, [7, 7, 32, 16], name='conv3')
            large_conv3_maxpool = max_pool_2x2(large_conv3, name='pool2')
            large_conv4 = conv_layer(large_conv3_maxpool, [7, 7, 16, 8], name='conv4')
        with tf.variable_scope("middle"):
            middle_conv1 = conv_layer(input_data, [7, 7, 1, 20], name='conv1')
            middle_conv2 = conv_layer(middle_conv1, [5, 5, 20, 40], name='conv2')
            middle_conv2_maxpool = max_pool_2x2(middle_conv2, name='pool1')
            middle_conv3 = conv_layer(middle_conv2_maxpool, [5, 5, 40, 20], name='conv3')
            middle_conv3_maxpool = max_pool_2x2(middle_conv3, name='pool2')
            middle_conv4 = conv_layer(middle_conv3_maxpool, [5, 5, 20, 10], name='conv4')
        with tf.variable_scope("small"):
            small_conv1 = conv_layer(input_data, [5, 5, 1, 24], name='conv1')
            small_conv2 = conv_layer(small_conv1, [3, 3, 24, 48], name='conv2')
            small_conv2_maxpool = max_pool_2x2(small_conv2, name='pool1')
            small_conv3 = conv_layer(small_conv2_maxpool, [3, 3, 48, 24], name='conv3')
            small_conv3_maxpool = max_pool_2x2(small_conv3, name='pool2')
            small_conv4 = conv_layer(small_conv3_maxpool, [3, 3, 24, 12], name='conv4')
        # Concatenate along channels (8 + 10 + 12 = 30) and fuse with a 1x1 convolution.
        net = tf.concat([large_conv4, middle_conv4, small_conv4], axis=3)
        result = conv_layer(net, [1, 1, 30, 1], name='result')
        return result

    def compute_loss(self, input_label):
        # Compares the summed densities (the head counts), not the maps pixel by pixel.
        loss = tf.sqrt(tf.reduce_mean(tf.square(tf.reduce_sum(input_label) - tf.reduce_sum(self.prediction))))
        return loss
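It helps to read `compute_loss` carefully: both `tf.reduce_sum` calls collapse their inputs to a single number, so `tf.reduce_mean` over that scalar changes nothing, and the square root of a square is the absolute value. For one image, the value named `MSEloss` is therefore the absolute difference between the ground-truth count and the predicted count. A plain-Python sketch of the same arithmetic follows (`count_loss` is a made-up name):

```python
import math


def count_loss(gt_density, pred_density):
    """Plain-Python equivalent of compute_loss for a single image.

    Both arguments are 2-D lists; they do not need to have the same size,
    because only their sums (the head counts) are compared.
    """
    gt_count = sum(sum(row) for row in gt_density)
    pred_count = sum(sum(row) for row in pred_density)
    return math.sqrt((gt_count - pred_count) ** 2)


gt = [[0.5, 0.5], [1.0, 0.0]]   # sums to 2.0 people
pred = [[1.25]]                 # a coarser map that sums to 1.25 people
print(count_loss(gt, pred))
```

This is also why the full-resolution ground truth can be fed in next to a prediction at a quarter of the resolution. It is not a pixel-wise density-map loss.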

class MCNNTest(object):
    def __init__(self, input_data):
        self.prediction = self.build_model(input_data)

    def build_model(self, input_data):
        with tf.variable_scope("large"):
            large_conv1 = conv_layer(input_data, [9, 9, 1, 16], name='conv1')
            large_conv2 = conv_layer(large_conv1, [7, 7, 16, 32], name='conv2')
            large_conv2_maxpool = max_pool_2x2(large_conv2, name='pool1')
            large_conv3 = conv_layer(large_conv2_maxpool, [7, 7, 32, 16], name='conv3')
            large_conv3_maxpool = max_pool_2x2(large_conv3, name='pool2')
            large_conv4 = conv_layer(large_conv3_maxpool, [7, 7, 16, 8], name='conv4')
        with tf.variable_scope("middle"):
            middle_conv1 = conv_layer(input_data, [7, 7, 1, 20], name='conv1')
            middle_conv2 = conv_layer(middle_conv1, [5, 5, 20, 40], name='conv2')
            middle_conv2_maxpool = max_pool_2x2(middle_conv2, name='pool1')
            middle_conv3 = conv_layer(middle_conv2_maxpool, [5, 5, 40, 20], name='conv3')
            middle_conv3_maxpool = max_pool_2x2(middle_conv3, name='pool2')
            middle_conv4 = conv_layer(middle_conv3_maxpool, [5, 5, 20, 10], name='conv4')
        with tf.variable_scope("small"):
            small_conv1 = conv_layer(input_data, [5, 5, 1, 24], name='conv1')
            small_conv2 = conv_layer(small_conv1, [3, 3, 24, 48], name='conv2')
            small_conv2_maxpool = max_pool_2x2(small_conv2, name='pool1')
            small_conv3 = conv_layer(small_conv2_maxpool, [3, 3, 48, 24], name='conv3')
            small_conv3_maxpool = max_pool_2x2(small_conv3, name='pool2')
            small_conv4 = conv_layer(small_conv3_maxpool, [3, 3, 24, 12], name='conv4')
        net = tf.concat([large_conv4, middle_conv4, small_conv4], axis=3)
        result = conv_layer(net, [1, 1, 30, 1], name='result')
        # At test time only the predicted head count is returned.
        return tf.reduce_sum(result)
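The hard-coded `30` in the final 1x1 convolution and the size of the output map both follow from the layer list, so here is a quick sanity check in plain Python. The `COLUMNS` table is transcribed from `build_model` above. The helper names are made up, and the size rule assumes what the code uses: stride-1 `SAME` convolutions (size unchanged) and two 2x2 `SAME` max pools (size halved, rounded up).

```python
# (kernel size, output channels) per conv layer, transcribed from build_model.
COLUMNS = {
    "large": [(9, 16), (7, 32), (7, 16), (7, 8)],
    "middle": [(7, 20), (5, 40), (5, 20), (5, 10)],
    "small": [(5, 24), (3, 48), (3, 24), (3, 12)],
}


def fused_channels(columns):
    """Channels after concatenating the last layer of every column."""
    return sum(layers[-1][1] for layers in columns.values())


def output_size(height, width, num_pools=2):
    """Spatial size of the density map after the stride-2 SAME poolings."""
    for _ in range(num_pools):
        height = -(-height // 2)  # ceiling division
        width = -(-width // 2)
    return height, width


print(fused_channels(COLUMNS))
print(output_size(768, 1024))
```

Since the loader rounds image height and width down to multiples of 4, the predicted map is exactly a quarter of the input size along each axis.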

utils

import cv2
import numpy as np


def image_preprocess(image, target_size):
    # Letterbox: resize with the aspect ratio preserved, then zero-pad to target_size.
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB).astype(np.float32)
    ih, iw = target_size
    h, w, _ = image.shape
    scale = min(iw / w, ih / h)
    nw, nh = int(scale * w), int(scale * h)
    image_resized = cv2.resize(image, (nw, nh))
    # Use a float fill value; an integer 0 creates an integer array and truncates the pixels.
    image_paded = np.full(shape=[ih, iw, 3], fill_value=0.0)
    dw, dh = (iw - nw) // 2, (ih - nh) // 2
    image_paded[dh:nh + dh, dw:nw + dw, :] = image_resized
    image_paded = image_paded / 255
    return image_paded


def label_process(label, target_size):
    label = np.asarray(label).astype(np.float32)
    dst_w, dst_h = target_size
    label = np.squeeze(label, 0)
    _, src_h, src_w = label.shape
    dst = cv2.resize(label, (dst_w, dst_h), interpolation=cv2.INTER_AREA)
    # Rescale by the area ratio so the density map keeps the same total count.
    dst = dst * (src_w / dst_w) * (src_h / dst_h)
    dst = np.expand_dims(dst, axis=-1)
    assert np.abs(np.sum(dst) - np.sum(label)) <= 1, 'ERROR: value distortion'
    return dst
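The geometry in `image_preprocess` is a standard letterbox: scale by the smaller of the two ratios so the whole image fits, then center it on the padded canvas. Here it is as a plain-Python sketch with the same formulas. `letterbox_geometry` is a hypothetical helper that only computes the numbers and does not touch any pixels.

```python
def letterbox_geometry(target_size, image_size):
    """Return (nw, nh, dw, dh) as computed in image_preprocess.

    target_size is (ih, iw) and image_size is (h, w). nw, nh are the
    resized width and height; dw, dh are the left and top offsets.
    """
    ih, iw = target_size
    h, w = image_size
    scale = min(iw / w, ih / h)
    nw, nh = int(scale * w), int(scale * h)
    dw, dh = (iw - nw) // 2, (ih - nh) // 2
    return nw, nh, dw, dh


# A 512x1024 (h x w) image placed on a 256x256 canvas.
print(letterbox_geometry((256, 256), (512, 1024)))
```

Here the width is the limiting side, so the image is scaled by 0.25 and padded with 64 rows above and below.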

train

from config import cfg
import tensorflow as tf
import os
import shutil
from tqdm import tqdm
from datalist import Dataset
from model import MCNN
import numpy as np


class MCNNTrain(object):
    def __init__(self):
        self.train_epoch = cfg.train_epoch
        self.train_ckpt_dir = "./checkpoint/"
        self.batch_size = cfg.train_batch_size
        self.trainset = Dataset('train')
        self.testset = Dataset('test')
        self.inital_weight = r"C:\Users\lth\Desktop\pytorch_test\CrowdCounting\checkpoint\test_loss=424639.3750.ckpt"
        self.sess = tf.Session(config=tf.ConfigProto(allow_soft_placement=True))

        with tf.name_scope("define_input"):
            self.input_image = tf.placeholder(dtype=tf.float32, name="input_image")
            self.input_label = tf.placeholder(dtype=tf.float32, name="input_label")

        with tf.name_scope("define_loss"):
            self.model = MCNN(self.input_image, self.input_label)
            self.net_var = tf.global_variables()
            self.MSEloss = self.model.MSEloss

        with tf.name_scope("learn_rate"):
            self.global_step = tf.Variable(1.0, dtype=tf.float64, trainable=False, name="global_step")
            self.learning_rate = cfg.train_learning_rate
            global_step_update = tf.assign_add(self.global_step, 1.0)

        with tf.name_scope("define_train"):
            trainable_var_list = tf.trainable_variables()
            optimizer = tf.train.AdamOptimizer(self.learning_rate).minimize(self.MSEloss, var_list=trainable_var_list)
            # One op that runs the optimizer step and increments the global step.
            with tf.control_dependencies(tf.get_collection(tf.GraphKeys.UPDATE_OPS)):
                with tf.control_dependencies([optimizer, global_step_update]):
                    self.train_op_with_all_variables = tf.no_op()

        with tf.name_scope("loader_and_saver"):
            self.loader = tf.train.Saver(self.net_var)
            self.saver = tf.train.Saver(tf.global_variables(), max_to_keep=10)

        with tf.name_scope("summary"):
            tf.summary.scalar("learn_rate", self.learning_rate)
            tf.summary.scalar("MSEloss", self.MSEloss)
            logdir = "./data/log"
            if os.path.exists(logdir):
                shutil.rmtree(logdir)
            os.makedirs(logdir)
            self.write_op = tf.summary.merge_all()
            self.summary_writer = tf.summary.FileWriter(logdir, graph=self.sess.graph)

    def train(self):
        self.sess.run(tf.global_variables_initializer())
        model_file = None
        try:
            model_file = tf.train.latest_checkpoint(r"C:\Users\lth\Desktop\pytorch_test\CrowdCounting\checkpoint")
            # model_file=r"C:\Users\lth\Desktop\pytorch_test\CrowdCounting\checkpoint\test_loss=424639.3750.ckpt-100"
            print("=> Restoring weights from : %s ..." % model_file)
            self.loader.restore(self.sess, model_file)
            print("loading model finish")
        except Exception:
            # No usable checkpoint: fall back to training from scratch.
            print('=> %s does not exist !!!' % model_file)
            print('=> Now it starts to train MCNN from scratch')

        for epoch in range(1, 1 + self.train_epoch):
            train_op = self.train_op_with_all_variables
            pbar = tqdm(self.trainset)
            train_epoch_loss, test_epoch_loss = [], []

            for train_data in pbar:
                _, summary, train_step_loss, globale_step_val = self.sess.run(
                    [train_op, self.write_op, self.MSEloss, self.global_step],
                    feed_dict={
                        self.input_image: train_data[0],
                        self.input_label: train_data[1],
                    }
                )
                train_epoch_loss.append(train_step_loss)
                self.summary_writer.add_summary(summary, globale_step_val)
                pbar.set_description("train MSEloss: %.2f" % train_step_loss)

            # Evaluate on the test set after every epoch.
            for test_data in self.testset:
                test_step_loss = self.sess.run(
                    self.MSEloss,
                    feed_dict={
                        self.input_image: test_data[0],
                        self.input_label: test_data[1]
                    }
                )
                test_epoch_loss.append(test_step_loss)

            train_epoch_loss, test_epoch_loss = np.mean(train_epoch_loss), np.mean(test_epoch_loss)
            ckpt_file = "./checkpoint/test_loss=%.4f.ckpt" % test_epoch_loss
            print('=>Epoch: %2d Train loss: %.2f Test MSEloss: %.2f Saving %s .....'
                  % (epoch, train_epoch_loss, test_epoch_loss, ckpt_file))
            self.saver.save(self.sess, ckpt_file, global_step=epoch)


if __name__ == '__main__':
    MCNNTrain().train()
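One thing that is easy to miss in the training loop is how the checkpoint files end up named: the mean test loss of the epoch is formatted into the file name, and `saver.save(..., global_step=epoch)` appends `-<epoch>` to it. That is where a name like `test_loss=424639.3750.ckpt-100` in the commented-out path comes from. A small sketch of the naming in plain Python, with made-up helper names:

```python
def mean_loss(step_losses):
    """Average of the per-step losses collected during one epoch."""
    return sum(step_losses) / len(step_losses)


def checkpoint_prefix(test_loss, epoch, ckpt_dir="./checkpoint"):
    """File prefix produced by the save call at the end of an epoch."""
    base = "%s/test_loss=%.4f.ckpt" % (ckpt_dir, test_loss)
    # tf.train.Saver.save appends "-<global_step>" when global_step is given.
    return "%s-%d" % (base, epoch)


print(mean_loss([1.0, 2.0, 3.0]))
print(checkpoint_prefix(424639.375, 100))
```

Because the loss is part of the name, every epoch writes a differently named checkpoint, and `max_to_keep=10` limits how many of them are kept.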

test

The test script is the same as the training script above: after every epoch, the `train` loop evaluates `MSEloss` on the test set.

Dataset

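The data is ShanghaiTech Part A and Part B, released by ShanghaiTech University. Building a dataset like this is tedious and expensive, but the data itself is easy to find online.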

Model_result

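I trained for roughly three days on ShanghaiTech Part A. The best result matches the numbers reported in the paper, but training was a real slog. During testing I also found that the error is large when there are few people in the image. That is because I did not train on Part B: of the two datasets, one is dense and the other is sparse.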

The PyTorch version on GitHub, which this code is based on.

Also, experts are welcome to tear this apart.