Building the Kubeflow Machine Learning Platform from Scratch

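1 Introduction to Kubeflow

1.1 What is Kubeflow

From the official website: The Kubeflow project is dedicated to making deployments of machine learning (ML) workflows on Kubernetes simple, portable, and scalable. Kubeflow's goal is not to recreate other services, but to provide a straightforward way to deploy best-of-breed open-source systems for ML to diverse infrastructures. Anywhere you are running Kubernetes, you should be able to run Kubeflow.

As this introduction shows, Kubeflow and Kubernetes are inseparable. In short, Kubeflow is an ML workflow platform built on Kubernetes and open-sourced by Google. It integrates a large number of machine learning tools, such as JupyterLab for interactive experiments, Katib for hyperparameter tuning, and Argo Workflows for pipeline orchestration. As a large "toolbox", Kubeflow offers ML developers many optional tools and also provides practical tooling for bringing ML into production.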

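1.2 Kubeflow background

Kubernetes was originally a container platform for managing stateless applications, but in the last couple of years more and more companies have used it to run all kinds of workloads, machine learning training in particular. AI companies and the AI departments of internet companies try to run distributed training jobs on Kubernetes with TensorFlow, Caffe, MXNet, and similar frameworks, which brings new challenges to Kubernetes.

First, distributed ML jobs usually involve two kinds of roles: parameter servers (PS below) and worker nodes (workers below). Training tasks in different domains have different requirements for PS and workers, which shows up in Kubernetes as configuration difficulty. Take TensorFlow as an example: a distributed TensorFlow training job usually starts multiple PS and multiple workers, and in TensorFlow's recommended practice each worker and PS must be passed different command-line arguments.

Second, the default Kubernetes scheduler is not friendly to ML jobs. If the previous problem is merely an inconvenience at deployment time, the low resource utilization or degraded training efficiency caused by scheduling deserves particular attention. ML jobs place relatively high demands on compute and network. Generally all workers train on GPUs, and placing the PS and workers of the same job on the same machine, or on nearby machines with good network connectivity, reduces training time.

To address these problems, the Kubeflow project was born. It took TensorFlow as its first supported framework and defined a new resource type on Kubernetes: TFJob, short for TensorFlow Job. With this resource type, engineers who train with TensorFlow no longer need to write complicated configuration. Based on their understanding of the workload, they only need to decide the number of PS and workers and the inputs and outputs for data and logs, and they can run a training job.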
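To make the TFJob idea concrete, here is a minimal sketch that writes a manifest declaring one PS and two workers. The job name, the image, and the output file name are hypothetical placeholders, and the field layout follows the kubeflow.org/v1 TFJob API as I understand it, so check it against the training operator version you actually install.

```shell
#!/usr/bin/env bash
# Sketch: generate a TFJob manifest with 1 PS and 2 workers.
# ASSUMPTIONS: job name, image and output file are hypothetical placeholders.
set -euo pipefail

TFJOB_FILE="${TFJOB_FILE:-tfjob-example.yaml}"

cat > "$TFJOB_FILE" << 'EOF'
apiVersion: kubeflow.org/v1
kind: TFJob
metadata:
  name: example-tfjob            # hypothetical name
  namespace: kubeflow
spec:
  tfReplicaSpecs:
    PS:
      replicas: 1
      restartPolicy: OnFailure
      template:
        spec:
          containers:
            - name: tensorflow   # container name the operator looks for
              image: example.com/my-training-image:latest   # placeholder
    Worker:
      replicas: 2
      restartPolicy: OnFailure
      template:
        spec:
          containers:
            - name: tensorflow
              image: example.com/my-training-image:latest   # placeholder
EOF

echo "wrote $TFJOB_FILE"
# Submit it once Kubeflow is installed:
#   kubectl apply -f tfjob-example.yaml
```

The point of the sketch is that the PS and worker counts are plain fields in one resource, rather than separate hand-written Deployments with different command-line arguments.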

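In one sentence: Kubeflow is a composable, portable, scalable machine learning stack built for Kubernetes.

The above comes from the article "kubeflow–简介" (https://www.jianshu.com/p/192f22a0b857). It explains Kubeflow's past and present well and deepened my understanding of Kubeflow, which is exactly what I needed as a newcomer.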

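1.3 Kubeflow and machine learning

Kubeflow is a platform for data scientists who want to build and run ML tasks. Kubeflow is also for ML engineers and operations teams who want to deploy ML systems to various environments for development, testing, and production-level serving.

Kubeflow is the ML toolkit for Kubernetes.

The following figure shows Kubeflow as a platform for arranging the components of an ML system on top of Kubernetes:

Kubeflow is a glue project. It combines many kinds of ML support, such as model training, hyperparameter tuning, and model deployment, and deploys them as containers. It provides high availability for each system in the workflow and makes scaling straightforward. Once Kubeflow is deployed, users can run different ML tasks on it.

The next figure shows the ML workflow in sequence. The arrow at the end of the workflow points back into the flow to indicate that ML work is an iterative process: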

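In the experimental phase, you develop your model based on initial assumptions, then test and update the model iteratively to produce the results you are looking for:

- Identify the problem you want the ML system to solve;

- Collect and analyze the data you need to train your ML model;

- Choose an ML framework and algorithm, and code the initial version of your model;

- Experiment with the data and train your model;

- Tune the model hyperparameters to ensure the most efficient processing and the most accurate results.

In the production phase, you deploy a system that performs the following processes:

- Transform the data into the format that your training system needs;

- To ensure that the model behaves consistently during training and prediction, keep the transformation process the same in the experimental and production phases;

- Train the ML model;

- Serve the model for online prediction or for running in batch mode;

- Monitor the model's performance, and feed the results into your processes for tuning or retraining the model.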

1.4 Core components

The core components that make up Kubeflow are described in detail on the official site at https://www.kubeflow.org/docs/components/. Below is a mind map I drew:

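2 Kubeflow installation guide

2.1 Useful links

- Official customized installation guide repository: https://github.com/kubeflow/manifests

- Official Kubeflow repositories: https://github.com/kubeflow/

- Kubernetes official site: https://kubernetes.io/zh-cn/

- GitHub proxy for faster downloads: https://ghproxy.com/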

2.2 Installation environment

Installation environment:

- OS version

cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

- Memory

free -h

total used free shared buff/cache available

Mem: 110G 3.4G 105G 3.8M 891M 105G

Swap: 4.0G 0B 4.0G

- cpu

cat /proc/cpuinfo | grep name | sort | uniq

model name : Intel(R) Xeon(R) Platinum 8163 CPU @ 2.50GHz

cat /proc/cpuinfo | grep "physical id" | sort | uniq | wc -l

42

- gpu

nvidia-smi

Sat Dec 24 13:01:37 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 460.32.03 Driver Version: 460.32.03 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:06.0 Off | 0 |

| N/A 38C P0 25W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Tesla T4 Off | 00000000:00:07.0 Off | 0 |

| N/A 34C P0 26W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

2.3 Prerequisites

Installing Kubeflow requires the following tools:

- Kubernetes: up to 1.21

- kustomize: 3.2.0

- kubectl

https://github.com/kubeflow/manifests#prerequisites
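The version ceiling above is easy to get wrong when packages default to the newest release. As a rough sketch (the function name k8s_minor_ok is my own, not part of any tool), a version string can be checked against the "up to 1.21" limit like this:

```shell
#!/usr/bin/env bash
# Sketch: check a Kubernetes version string against a maximum minor version.
# k8s_minor_ok is a hypothetical helper name used only for this illustration.
set -euo pipefail

# Returns 0 if version (e.g. "v1.21.5") is 1.x with x <= max_minor, else 1.
k8s_minor_ok() {
  local version="${1#v}"        # strip a leading "v" if present
  local max_minor="$2"
  local major minor
  major="${version%%.*}"        # text before the first dot
  minor="${version#*.}"         # text after the first dot
  minor="${minor%%.*}"          # keep only the minor component
  [ "$major" -eq 1 ] && [ "$minor" -le "$max_minor" ]
}

if k8s_minor_ok "v1.21.5" 21; then
  echo "v1.21.5 is within the supported range"
fi
if ! k8s_minor_ok "v1.22.0" 21; then
  echo "v1.22.0 is too new for this guide"
fi
```

On a machine where kubeadm is already installed, the same check could be fed with the output of kubeadm version -o short.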

3 Installing Kubernetes

A k8s cluster consists of Master nodes and Node (Worker) nodes. Here we install Kubernetes on a single machine.

3.1 Check the IP address

(base) [root@server-szry1agd ~]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1450 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:44:6c:3c brd ff:ff:ff:ff:ff:ff

inet 192.168.3.130/22 brd 192.168.3.255 scope global noprefixroute dynamic eth0

valid_lft 80254sec preferred_lft 80254sec

inet6 fe80::f816:3eff:fe44:6c3c/64 scope link

valid_lft forever preferred_lft forever

3.2 Change the hostname

This step is optional. I have seen articles mention that the hostname must not contain underscores.

(base) [root@server-szry1agd ~]# hostnamectl set-hostname kubuflow && bash

3.3 Add a hosts entry

Change this to your own IP and hostname.

(base) [root@kubuflow ~]# cat >> /etc/hosts << EOF

> 192.168.3.130 kubuflow

> EOF

Check hosts

(base) [root@kubuflow ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

0.0.0.0 server-szry1agd.novalocal

192.168.3.130 kubuflow
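Re-running the cat >> command above appends a duplicate line each time. The sketch below shows one way to make the step repeatable; add_host_entry is a hypothetical helper, and the demo writes to a scratch file rather than the real /etc/hosts.

```shell
#!/usr/bin/env bash
# Sketch: append "IP hostname" to a hosts file only if it is not already there.
# add_host_entry is a hypothetical helper name used only for this illustration.
set -euo pipefail

add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # awk exits 0 when some line starts with the IP and lists the hostname.
  if awk -v ip="$ip" -v name="$name" \
       '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Demo against a scratch file instead of the real /etc/hosts.
demo_hosts="$(mktemp)"
add_host_entry "$demo_hosts" 192.168.3.130 kubuflow
add_host_entry "$demo_hosts" 192.168.3.130 kubuflow   # no-op: entry exists
cat "$demo_hosts"
```

On the real host the first argument would be /etc/hosts, with your own IP and hostname.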

3.4 Disable the firewall and SELinux

(base) [root@kubuflow ~]# systemctl stop firewalld

(base) [root@kubuflow ~]# systemctl disable firewalld

(base) [root@kubuflow ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent

(base) [root@kubuflow ~]# setenforce 0 # temporary

setenforce: SELinux is disabled

3.5 Disable swap

(base) [root@kubuflow ~]# swapoff -a

(base) [root@kubuflow ~]# sed -i 's/.*swap.*/#&/' /etc/fstab
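The sed expression above prefixes every line that mentions swap, including lines that are already commented, so running it twice stacks up # characters. A narrower variant is sketched below; it assumes GNU sed (for -i without a suffix), and comment_swap_entries is a hypothetical helper demonstrated on a scratch copy rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Sketch: comment out swap entries in an fstab-style file, idempotently.
# ASSUMPTION: GNU sed. comment_swap_entries is a hypothetical helper name.
set -euo pipefail

comment_swap_entries() {
  # Skip lines that are already comments; prefix "#" to lines that contain
  # a whitespace-delimited "swap" field.
  sed -E -i '/^[[:space:]]*#/!s/^([^#]*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Demo on a scratch file with made-up device names.
demo_fstab="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"

comment_swap_entries "$demo_fstab"
comment_swap_entries "$demo_fstab"   # second run changes nothing
cat "$demo_fstab"
```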

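3.6 Forward IPv4 and let iptables see bridged traffic

Verify that the br_netfilter module is loaded by running lsmod | grep br_netfilter. To load it explicitly, run sudo modprobe br_netfilter. For iptables on a Linux node to see bridged traffic correctly, make sure net.bridge.bridge-nf-call-iptables is set to 1 in your sysctl configuration.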
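The paragraph above names the module and the sysctl key, so here is a sketch of how those settings are commonly persisted. The file names and the extra keys (overlay, bridge-nf-call-ip6tables, ip_forward) follow the upstream Kubernetes container runtime guide as I recall it and are assumptions with respect to this post; write_k8s_net_config is a hypothetical helper that takes a root prefix so it can be tried without touching the real /etc.

```shell
#!/usr/bin/env bash
# Sketch: persist the kernel module list and sysctl keys for Kubernetes networking.
# ASSUMPTIONS: file names and the keys beyond bridge-nf-call-iptables are taken
# from the upstream Kubernetes guide, not from this post.
set -euo pipefail

write_k8s_net_config() {
  local root="${1:-}"   # empty string means the real filesystem
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  cat > "$root/etc/modules-load.d/k8s.conf" << 'EOF'
overlay
br_netfilter
EOF
  cat > "$root/etc/sysctl.d/k8s.conf" << 'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
}

# Demo under a scratch directory.
demo_root="$(mktemp -d)"
write_k8s_net_config "$demo_root"
cat "$demo_root/etc/sysctl.d/k8s.conf"

# On a real node, as root:
#   write_k8s_net_config ""
#   modprobe overlay && modprobe br_netfilter
#   sysctl --system
```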

cat <

3.7 Time synchronization

(base) [root@kubuflow ~]# yum install ntpdate -y

(base) [root@kubuflow ~]# ntpdate time.windows.com

24 Dec 14:21:55 ntpdate[18177]: adjust time server 52.231.114.183 offset 0.003717 sec

3.8 Install Docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum -y install docker-ce

systemctl enable docker && systemctl start docker && systemctl status docker

Installation succeeded

(base) [root@kubuflow ~]# docker --version

Docker version 20.10.22, build 3a2c30b

(base) [root@kubuflow ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

(base) [root@kubuflow ~]#

3.9 Add registry mirrors in China to Docker

(base) [root@kubuflow ~]# cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": [

> "http://hub-mirror.c.163.com",

> "https://docker.mirrors.ustc.edu.cn",

> "https://registry.docker-cn.com"

> ]

> }

> EOF

(base) [root@kubuflow ~]# # apply the configuration

(base) [root@kubuflow ~]# systemctl daemon-reload

(base) [root@kubuflow ~]#

(base) [root@kubuflow ~]# # restart Docker

(base) [root@kubuflow ~]# systemctl restart docker

3.10 Add the Kubernetes yum repository

(base) [root@kubuflow ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

> https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

3.11 Install kubeadm, kubelet, and kubectl

(base) [root@kubuflow ~]# yum -y install kubelet-1.21.5-0 kubeadm-1.21.5-0 kubectl-1.21.5-0

(base) [root@kubuflow ~]# systemctl enable kubelet

3.12 Deploy the Kubernetes Master

(base) [root@kubuflow ~]# kubeadm init --apiserver-advertise-address=192.168.3.130 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.5 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=all

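Parameter notes:

- --apiserver-advertise-address=192.168.3.130 The IP address of the master host. My master's IP is 192.168.3.130, the address we saw in section 3.1.

- --image-repository registry.aliyuncs.com/google_containers The image registry. The upstream registry is not reachable from here, so the Aliyun mirror registry.aliyuncs.com/google_containers is used.

- --kubernetes-version=v1.21.5 The Kubernetes version to install.

- --service-cidr=10.96.0.0/12 The IP range for Services. Use 10.96.0.0/12 as is, reuse it in later installs, and do not change it.

- --pod-network-cidr=10.244.0.0/16 The IP range available to pods inside the cluster. It must not be the same as service-cidr. If you are unsure what to use, start with 10.244.0.0/16.

- --ignore-preflight-errors=all Ignores errors reported by the preflight checks.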
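The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. The sketch below maps the flags above onto the kubeadm.k8s.io/v1beta2 config API (the version I believe kubeadm 1.21 accepts); the file name kubeadm-config.yaml is my own choice, and the preflight flag is deliberately left out.

```shell
#!/usr/bin/env bash
# Sketch: write a kubeadm config file equivalent to the flags listed above.
# ASSUMPTIONS: the v1beta2 API version and the file name are not from this post.
set -euo pipefail

KUBEADM_CONFIG="${KUBEADM_CONFIG:-kubeadm-config.yaml}"

cat > "$KUBEADM_CONFIG" << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.3.130      # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.5             # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.96.0.0/12          # --service-cidr
  podSubnet: 10.244.0.0/16             # --pod-network-cidr
EOF

echo "wrote $KUBEADM_CONFIG"
# On the master, as root:
#   kubeadm init --config kubeadm-config.yaml
```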

After running the command, output like the following means the installation succeeded.

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.3.130:6443 --token nupk90.vnoqbfgexf8d2lhp \

--discovery-token-ca-cert-hash sha256:715fac4463bd6b5b4de53e9356002eed12652fa8c6def12789ccb5d6f73fefaa

(base) [root@kubuflow ~]#

3.13 Create the kube config file

(base) [root@kubuflow ~]# mkdir -p $HOME/.kube

(base) [root@kubuflow ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

(base) [root@kubuflow ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

(base) [root@kubuflow ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubuflow NotReady control-plane,master 5m45s v1.21.5

3.14 Install a Pod network add-on (CNI)

cat > calico.yaml << EOF

---

# Source: calico/templates/calico-config.yaml

# This ConfigMap is used to configure a self-hosted Calico installation.

kind: ConfigMap

apiVersion: v1

metadata:

name: calico-config

namespace: kube-system

data:

# Typha is disabled.

typha_service_name: "none"

# Configure the backend to use.

calico_backend: "bird"

# Configure the MTU to use

veth_mtu: "1440"

# The CNI network configuration to install on each node. The special

# values in this config will be automatically populated.

cni_network_config: |-

{

"name": "k8s-pod-network",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "calico",

"log_level": "info",

"datastore_type": "kubernetes",

"nodename": "__KUBERNETES_NODE_NAME__",

"mtu": __CNI_MTU__,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "__KUBECONFIG_FILEPATH__"

}

},

{

"type": "portmap",

"snat": true,

"capabilities": {"portMappings": true}

}

]

}

---

# Source: calico/templates/kdd-crds.yaml

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: felixconfigurations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: FelixConfiguration

plural: felixconfigurations

singular: felixconfiguration

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ipamblocks.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPAMBlock

plural: ipamblocks

singular: ipamblock

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: blockaffinities.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BlockAffinity

plural: blockaffinities

singular: blockaffinity

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ipamhandles.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPAMHandle

plural: ipamhandles

singular: ipamhandle

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ipamconfigs.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPAMConfig

plural: ipamconfigs

singular: ipamconfig

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: bgppeers.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BGPPeer

plural: bgppeers

singular: bgppeer

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: bgpconfigurations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: BGPConfiguration

plural: bgpconfigurations

singular: bgpconfiguration

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ippools.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: IPPool

plural: ippools

singular: ippool

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: hostendpoints.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: HostEndpoint

plural: hostendpoints

singular: hostendpoint

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: clusterinformations.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: ClusterInformation

plural: clusterinformations

singular: clusterinformation

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: globalnetworkpolicies.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: GlobalNetworkPolicy

plural: globalnetworkpolicies

singular: globalnetworkpolicy

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: globalnetworksets.crd.projectcalico.org

spec:

scope: Cluster

group: crd.projectcalico.org

version: v1

names:

kind: GlobalNetworkSet

plural: globalnetworksets

singular: globalnetworkset

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: networkpolicies.crd.projectcalico.org

spec:

scope: Namespaced

group: crd.projectcalico.org

version: v1

names:

kind: NetworkPolicy

plural: networkpolicies

singular: networkpolicy

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: networksets.crd.projectcalico.org

spec:

scope: Namespaced

group: crd.projectcalico.org

version: v1

names:

kind: NetworkSet

plural: networksets

singular: networkset

---

# Source: calico/templates/rbac.yaml

# Include a clusterrole for the kube-controllers component,

# and bind it to the calico-kube-controllers serviceaccount.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-kube-controllers

rules:

# Nodes are watched to monitor for deletions.

- apiGroups: [""]

resources:

- nodes

verbs:

- watch

- list

- get

# Pods are queried to check for existence.

- apiGroups: [""]

resources:

- pods

verbs:

- get

# IPAM resources are manipulated when nodes are deleted.

- apiGroups: ["crd.projectcalico.org"]

resources:

- ippools

verbs:

- list

- apiGroups: ["crd.projectcalico.org"]

resources:

- blockaffinities

- ipamblocks

- ipamhandles

verbs:

- get

- list

- create

- update

- delete

# Needs access to update clusterinformations.

- apiGroups: ["crd.projectcalico.org"]

resources:

- clusterinformations

verbs:

- get

- create

- update

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-kube-controllers

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-kube-controllers

subjects:

- kind: ServiceAccount

name: calico-kube-controllers

namespace: kube-system

---

# Include a clusterrole for the calico-node DaemonSet,

# and bind it to the calico-node serviceaccount.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: calico-node

rules:

# The CNI plugin needs to get pods, nodes, and namespaces.

- apiGroups: [""]

resources:

- pods

- nodes

- namespaces

verbs:

- get

- apiGroups: [""]

resources:

- endpoints

- services

verbs:

# Used to discover service IPs for advertisement.

- watch

- list

# Used to discover Typhas.

- get

- apiGroups: [""]

resources:

- nodes/status

verbs:

# Needed for clearing NodeNetworkUnavailable flag.

- patch

# Calico stores some configuration information in node annotations.

- update

# Watch for changes to Kubernetes NetworkPolicies.

- apiGroups: ["networking.k8s.io"]

resources:

- networkpolicies

verbs:

- watch

- list

# Used by Calico for policy information.

- apiGroups: [""]

resources:

- pods

- namespaces

- serviceaccounts

verbs:

- list

- watch

# The CNI plugin patches pods/status.

- apiGroups: [""]

resources:

- pods/status

verbs:

- patch

# Calico monitors various CRDs for config.

- apiGroups: ["crd.projectcalico.org"]

resources:

- globalfelixconfigs

- felixconfigurations

- bgppeers

- globalbgpconfigs

- bgpconfigurations

- ippools

- ipamblocks

- globalnetworkpolicies

- globalnetworksets

- networkpolicies

- networksets

- clusterinformations

- hostendpoints

- blockaffinities

verbs:

- get

- list

- watch

# Calico must create and update some CRDs on startup.

- apiGroups: ["crd.projectcalico.org"]

resources:

- ippools

- felixconfigurations

- clusterinformations

verbs:

- create

- update

# Calico stores some configuration information on the node.

- apiGroups: [""]

resources:

- nodes

verbs:

- get

- list

- watch

# These permissions are only requried for upgrade from v2.6, and can

# be removed after upgrade or on fresh installations.

- apiGroups: ["crd.projectcalico.org"]

resources:

- bgpconfigurations

- bgppeers

verbs:

- create

- update

# These permissions are required for Calico CNI to perform IPAM allocations.

- apiGroups: ["crd.projectcalico.org"]

resources:

- blockaffinities

- ipamblocks

- ipamhandles

verbs:

- get

- list

- create

- update

- delete

- apiGroups: ["crd.projectcalico.org"]

resources:

- ipamconfigs

verbs:

- get

# Block affinities must also be watchable by confd for route aggregation.

- apiGroups: ["crd.projectcalico.org"]

resources:

- blockaffinities

verbs:

- watch

# The Calico IPAM migration needs to get daemonsets. These permissions can be

# removed if not upgrading from an installation using host-local IPAM.

- apiGroups: ["apps"]

resources:

- daemonsets

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: calico-node

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: calico-node

subjects:

- kind: ServiceAccount

name: calico-node

namespace: kube-system

---

# Source: calico/templates/calico-node.yaml

# This manifest installs the calico-node container, as well

# as the CNI plugins and network config on

# each master and worker node in a Kubernetes cluster.

kind: DaemonSet

apiVersion: apps/v1

metadata:

name: calico-node

namespace: kube-system

labels:

k8s-app: calico-node

spec:

selector:

matchLabels:

k8s-app: calico-node

updateStrategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

template:

metadata:

labels:

k8s-app: calico-node

annotations:

# This, along with the CriticalAddonsOnly toleration below,

# marks the pod as a critical add-on, ensuring it gets

# priority scheduling and that its resources are reserved

# if it ever gets evicted.

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

nodeSelector:

beta.kubernetes.io/os: linux

hostNetwork: true

tolerations:

# Make sure calico-node gets scheduled on all nodes.

- effect: NoSchedule

operator: Exists

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

- effect: NoExecute

operator: Exists

serviceAccountName: calico-node

# Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force

# deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.

terminationGracePeriodSeconds: 0

priorityClassName: system-node-critical

initContainers:

# This container performs upgrade from host-local IPAM to calico-ipam.

# It can be deleted if this is a fresh installation, or if you have already

# upgraded to use calico-ipam.

- name: upgrade-ipam

image: calico/cni:v3.11.3

command: ["/opt/cni/bin/calico-ipam", "-upgrade"]

env:

- name: KUBERNETES_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

- name: CALICO_NETWORKING_BACKEND

valueFrom:

configMapKeyRef:

name: calico-config

key: calico_backend

volumeMounts:

- mountPath: /var/lib/cni/networks

name: host-local-net-dir

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

securityContext:

privileged: true

# This container installs the CNI binaries

# and CNI network config file on each node.

- name: install-cni

image: calico/cni:v3.11.3

command: ["/install-cni.sh"]

env:

# Name of the CNI config file to create.

- name: CNI_CONF_NAME

value: "10-calico.conflist"

# The CNI network config to install on each node.

- name: CNI_NETWORK_CONFIG

valueFrom:

configMapKeyRef:

name: calico-config

key: cni_network_config

# Set the hostname based on the k8s node name.

- name: KUBERNETES_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# CNI MTU Config variable

- name: CNI_MTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# Prevents the container from sleeping forever.

- name: SLEEP

value: "false"

volumeMounts:

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

- mountPath: /host/etc/cni/net.d

name: cni-net-dir

securityContext:

privileged: true

# Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes

# to communicate with Felix over the Policy Sync API.

- name: flexvol-driver

image: calico/pod2daemon-flexvol:v3.11.3

volumeMounts:

- name: flexvol-driver-host

mountPath: /host/driver

securityContext:

privileged: true

containers:

# Runs calico-node container on each Kubernetes node. This

# container programs network policy and routes on each

# host.

- name: calico-node

image: calico/node:v3.11.3

env:

# Use Kubernetes API as the backing datastore.

- name: DATASTORE_TYPE

value: "kubernetes"

# Wait for the datastore.

- name: WAIT_FOR_DATASTORE

value: "true"

# Set based on the k8s node name.

- name: NODENAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

# Choose the backend to use.

- name: CALICO_NETWORKING_BACKEND

valueFrom:

configMapKeyRef:

name: calico-config

key: calico_backend

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

# Auto-detect the BGP IP address.

- name: IP

value: "autodetect"

# Enable IPIP

- name: CALICO_IPV4POOL_IPIP

value: "Always"

# Set MTU for tunnel device used if ipip is enabled

- name: FELIX_IPINIPMTU

valueFrom:

configMapKeyRef:

name: calico-config

key: veth_mtu

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

# Disable file logging so `kubectl logs` works.

- name: CALICO_DISABLE_FILE_LOGGING

value: "true"

# Set Felix endpoint to host default action to ACCEPT.

- name: FELIX_DEFAULTENDPOINTTOHOSTACTION

value: "ACCEPT"

# Disable IPv6 on Kubernetes.

- name: FELIX_IPV6SUPPORT

value: "false"

# Set Felix logging to "info"

- name: FELIX_LOGSEVERITYSCREEN

value: "info"

- name: FELIX_HEALTHENABLED

value: "true"

securityContext:

privileged: true

resources:

requests:

cpu: 250m

livenessProbe:

exec:

command:

- /bin/calico-node

- -felix-live

- -bird-live

periodSeconds: 10

initialDelaySeconds: 10

failureThreshold: 6

readinessProbe:

exec:

command:

- /bin/calico-node

- -felix-ready

- -bird-ready

periodSeconds: 10

volumeMounts:

- mountPath: /lib/modules

name: lib-modules

readOnly: true

- mountPath: /run/xtables.lock

name: xtables-lock

readOnly: false

- mountPath: /var/run/calico

name: var-run-calico

readOnly: false

- mountPath: /var/lib/calico

name: var-lib-calico

readOnly: false

- name: policysync

mountPath: /var/run/nodeagent

volumes:

# Used by calico-node.

- name: lib-modules

hostPath:

path: /lib/modules

- name: var-run-calico

hostPath:

path: /var/run/calico

- name: var-lib-calico

hostPath:

path: /var/lib/calico

- name: xtables-lock

hostPath:

path: /run/xtables.lock

type: FileOrCreate

# Used to install CNI.

- name: cni-bin-dir

hostPath:

path: /opt/cni/bin

- name: cni-net-dir

hostPath:

path: /etc/cni/net.d

# Mount in the directory for host-local IPAM allocations. This is

# used when upgrading from host-local to calico-ipam, and can be removed

# if not using the upgrade-ipam init container.

- name: host-local-net-dir

hostPath:

path: /var/lib/cni/networks

# Used to create per-pod Unix Domain Sockets

- name: policysync

hostPath:

type: DirectoryOrCreate

path: /var/run/nodeagent

# Used to install Flex Volume Driver

- name: flexvol-driver-host

hostPath:

type: DirectoryOrCreate

path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: calico-node

namespace: kube-system

---

# Source: calico/templates/calico-kube-controllers.yaml

# See https://github.com/projectcalico/kube-controllers

apiVersion: apps/v1

kind: Deployment

metadata:

name: calico-kube-controllers

namespace: kube-system

labels:

k8s-app: calico-kube-controllers

spec:

# The controllers can only have a single active instance.

replicas: 1

selector:

matchLabels:

k8s-app: calico-kube-controllers

strategy:

type: Recreate

template:

metadata:

name: calico-kube-controllers

namespace: kube-system

labels:

k8s-app: calico-kube-controllers

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

nodeSelector:

beta.kubernetes.io/os: linux

tolerations:

# Mark the pod as a critical add-on for rescheduling.

- key: CriticalAddonsOnly

operator: Exists

- key: node-role.kubernetes.io/master

effect: NoSchedule

serviceAccountName: calico-kube-controllers

priorityClassName: system-cluster-critical

containers:

- name: calico-kube-controllers

image: calico/kube-controllers:v3.11.3

env:

# Choose which controllers to run.

- name: ENABLED_CONTROLLERS

value: node

- name: DATASTORE_TYPE

value: kubernetes

readinessProbe:

exec:

command:

- /usr/bin/check-status

- -r

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: calico-kube-controllers

namespace: kube-system

---

# Source: calico/templates/calico-etcd-secrets.yaml

---

# Source: calico/templates/calico-typha.yaml

---

# Source: calico/templates/configure-canal.yaml

EOF

(base) [root@kubuflow ~]# kubectl apply -f calico.yaml

configmap/calico-config created

Warning: apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

3.15 Verify the network

(base) [root@kubuflow ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubuflow Ready control-plane,master 13m v1.21.5

(base) [root@kubuflow ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5bcd7db644-ncdh5 1/1 Running 0 114s

calico-node-9qjv8 1/1 Running 0 114s

coredns-59d64cd4d4-574b4 1/1 Running 0 13m

coredns-59d64cd4d4-5mr9x 1/1 Running 0 13m

etcd-kubuflow 1/1 Running 0 13m

kube-apiserver-kubuflow 1/1 Running 0 13m

kube-controller-manager-kubuflow 1/1 Running 0 13m

kube-proxy-xcfcd 1/1 Running 0 13m

kube-scheduler-kubuflow 1/1 Running 0 13m
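The node moves from NotReady to Ready only after the CNI pods are running, so it helps to check that mechanically rather than by eye. A small sketch follows; not_ready_nodes is a hypothetical helper that reads kubectl get nodes output on stdin, and the sample rows are the two outputs shown in this post.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is anything other than "Ready".
# not_ready_nodes is a hypothetical helper name used only for this illustration.
set -euo pipefail

not_ready_nodes() {
  # Reads `kubectl get nodes` output on stdin and skips the header row.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample rows copied from the two `kubectl get nodes` outputs in this post.
before_cni='NAME STATUS ROLES AGE VERSION
kubuflow NotReady control-plane,master 5m45s v1.21.5'
after_cni='NAME STATUS ROLES AGE VERSION
kubuflow Ready control-plane,master 13m v1.21.5'

echo "before CNI: $(printf '%s\n' "$before_cni" | not_ready_nodes)"
echo "after CNI:  $(printf '%s\n' "$after_cni" | not_ready_nodes)"

# Live usage on the cluster:
#   kubectl get nodes | not_ready_nodes
```

Note that a cordoned node reports a combined status such as Ready,SchedulingDisabled, which this simple comparison would also list.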

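3.16 Remove the taint

After a single-node k8s install, workloads cannot be deployed.

By default the master carries a taint and does not schedule pods, so you need to either remove the taint or add another node. Here we remove the taint.

# If the command prints output, a taint is present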

(base) [root@kubuflow ~]# kubectl get node -o yaml | grep taint -A 5

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

status:

addresses:

- address: 192.168.3.130

Remove the taint

(base) [root@kubuflow ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

node/kubuflow untainted

3.17 Install the command completion package

(base) [root@kubuflow ~]# yum -y install bash-completion # install the command completion package

(base) [root@kubuflow ~]# kubectl completion bash

(base) [root@kubuflow ~]# source /usr/share/bash-completion/bash_completion

(base) [root@kubuflow ~]# kubectl completion bash >/etc/profile.d/kubectl.sh

(base) [root@kubuflow ~]# source /etc/profile.d/kubectl.sh

(base) [root@kubuflow ~]# cat >> /root/.bashrc <

3.18 Deploy and access the Kubernetes Dashboard

The Dashboard is not deployed by default. Deploy it with the following command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.1/aio/deploy/recommended.yaml

Check that it is running

(base) [root@kubuflow ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7c857855d9-snpfs 1/1 Running 0 16m

kubernetes-dashboard-6b79449649-4kgsx 1/1 Running 0 16m

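Change the Service type from ClusterIP to NodePort so the Service can be reached from outside the cluster at <NodeIP>:<NodePort>

(base) [root@kubuflow ~]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Change type: ClusterIP to type: NodePort. After saving, run kubectl get svc -n kubernetes-dashboard to see the automatically assigned port: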

(base) [root@kubuflow ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.98.238.142 <none> 8000/TCP 25m

kubernetes-dashboard NodePort 10.105.207.158 <none> 443:30988/TCP 25m

As shown above, the Dashboard is now exposed on port 30988 and can be reached from outside at https://<node-ip>:30988/.
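The interactive kubectl edit step can also be done non-interactively, which is handy when the setup is scripted. In the sketch below, kubectl patch and the jsonpath query are standard kubectl features, while expose_dashboard and dashboard_url are hypothetical helper names; the cluster call is left commented out so the snippet can be read and run without a cluster.

```shell
#!/usr/bin/env bash
# Sketch: switch the Dashboard Service to NodePort without opening an editor.
# expose_dashboard and dashboard_url are hypothetical helper names.
set -euo pipefail

NS=kubernetes-dashboard
SVC=kubernetes-dashboard

expose_dashboard() {
  # Patch the Service type, then print the node port that was assigned.
  kubectl -n "$NS" patch svc "$SVC" -p '{"spec":{"type":"NodePort"}}'
  kubectl -n "$NS" get svc "$SVC" -o jsonpath='{.spec.ports[0].nodePort}'
}

dashboard_url() {
  # Build the external URL from a node IP ($1) and a node port ($2).
  printf 'https://%s:%s/\n' "$1" "$2"
}

# Needs a running cluster, so it is left commented out here:
#   port="$(expose_dashboard | tail -n 1)"
#   dashboard_url 192.168.3.130 "$port"

# With the values from this post:
dashboard_url 192.168.3.130 30988
```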

Create an access account

cat > dash.yaml << EOF

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

EOF

(base) [root@kubuflow ~]# kubectl apply -f dash.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

View the token

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1Nxxx.....xxxxxxxxx..........pTDfnNmg
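The go-template in the command above decodes the base64-encoded `data.token` field of the ServiceAccount's Secret. The sketch below shows the same step with `jsonpath` plus `base64 -d`. It assumes a cluster that still auto-creates token Secrets for ServiceAccounts (Kubernetes before 1.24); from 1.24 on, `kubectl create token` issues a time-limited token instead. The function names are hypothetical.

```bash
# Decode a base64 string read from stdin; this is what `base64decode`
# does inside the go-template.
decode_b64() {
  base64 -d
}

# Legacy path: read the token from the auto-created Secret.
dashboard_token_legacy() {
  secret="$(kubectl -n kubernetes-dashboard get sa/admin-user \
    -o jsonpath='{.secrets[0].name}')"
  kubectl -n kubernetes-dashboard get secret "$secret" \
    -o jsonpath='{.data.token}' | decode_b64
}

# Kubernetes 1.24 and later: request a time-limited token instead.
dashboard_token_new() {
  kubectl -n kubernetes-dashboard create token admin-user
}
```

Either function prints a token that can be pasted into the Dashboard login page.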

Because my host is reached through a remote port mapping, the address shown here differs from the host IP.

The actual address is https://192.168.3.130:30988

4 Installing Kubeflow

4.1 Download the official manifests repository

Install version 1.6.0

(base) [root@kubuflow softwares]# wget https://github.com/kubeflow/manifests/archive/refs/tags/v1.6.0.zip

(base) [root@kubuflow softwares]# unzip v1.6.0.zip

(base) [root@kubuflow softwares]# mv manifests-1.6.0/ manifests

4.2 Download and install kustomize

https://github.com/kubernetes-sigs/kustomize

curl -s "https://raw.githubusercontent.com/kubernetes-sigs/kustomize/master/hack/install_kustomize.sh" | bash

If the download is slow, a proxy can be used to speed up access to GitHub

(base) [root@kubuflow softwares]# curl -s "https://ghproxy.com/https://raw.githubusercontent.com/kubernetes-sigs/kustomize/master/hack/install_kustomize.sh" | bash

Copy it to /bin

cp kustomize /bin/

kustomize version

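4.3 Syncing images to Docker Hub

Some Kubeflow component images are hosted abroad, so the problem of pulling images from Google's registry has to be solved first. For details, see this write-up:

Latest way to install Kubeflow in a mainland China network environment https://zhuanlan.zhihu.com/p/546677250

### List the gcr.io images. On my network only gcr.io is unreachable while quay.io works; adjust as needed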

kustomize build example |grep 'image: gcr.io'|awk '$2 != "" { print $2}' |sort -u

### Sync to a personal Docker Hub repository with GitHub CI

https://github.com/kenwoodjw/sync_gcr

Edit https://github.com/kenwoodjw/sync_gcr/blob/master/images.txt; committing the change triggers CI to sync the images to Docker Hub

Adjust https://github.com/kenwoodjw/sync_gcr/blob/master/sync_image.py as needed

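4.4 Prepare the StorageClass, PVs and PVCs

Kubeflow's components need storage, so the PVs must be prepared in advance. This experiment uses local disk storage. The steps are as follows.

Be careful here: names and paths must be correct. Follow the steps below, or adjust them carefully to match the paths you created.

- Prepare the local directories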

mkdir -p /data/k8s/istio-authservice /data/k8s/katib-mysql /data/k8s/minio /data/k8s/mysql-pv-claim

Change the permissions on the authservice path

sudo chmod -R 777 /data/k8s/istio-authservice/

- Write kubeflow-storage.yaml

Change each hostPath: path: value (for example "/data/k8s/istio-authservice") to the corresponding directory created above

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: authservice
  namespace: istio-system
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/k8s/istio-authservice"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  namespace: kubeflow
  name: katib-mysql
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/k8s/katib-mysql"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio
  namespace: kubeflow
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/k8s/minio"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-claim
  namespace: kubeflow
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/k8s/mysql-pv-claim"

Apply it

kubectl apply -f kubeflow-storage.yaml
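The four PersistentVolumes in kubeflow-storage.yaml differ only in name, capacity and host path, so they can also be generated rather than typed by hand. The following is a rough sketch, not the author's method: the function names are hypothetical, the StorageClass is not rendered and would still need to be applied separately, and the `namespace` field is left out because PersistentVolumes are cluster-scoped.

```bash
# Print one hostPath PersistentVolume manifest.
# $1 = PV name, $2 = capacity, $3 = host directory
render_pv() {
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: $2
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "$3"
EOF
}

# Render the four volumes used in this section.
render_all_pvs() {
  render_pv authservice    10Gi /data/k8s/istio-authservice
  render_pv katib-mysql    10Gi /data/k8s/katib-mysql
  render_pv minio          20Gi /data/k8s/minio
  render_pv mysql-pv-claim 20Gi /data/k8s/mysql-pv-claim
}

# Usage (requires a cluster): render_all_pvs | kubectl apply -f -
```

Keeping names, sizes and paths in one place makes it harder for a directory created with mkdir to drift from the path written into the manifest.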

4.5 Modify the install manifests to pull the mirrored images

(base) [root@kubuflow example]# cat kustomization.yaml

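Edit manifests/example/kustomization.yaml so that it reads as follows. The change is the images section appended at the end, which redirects the listed gcr.io images to the copies synced to Docker Hub: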

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

# Cert-Manager

- ../common/cert-manager/cert-manager/base

- ../common/cert-manager/kubeflow-issuer/base

# Istio

- ../common/istio-1-16/istio-crds/base

- ../common/istio-1-16/istio-namespace/base

- ../common/istio-1-16/istio-install/base

# OIDC Authservice

- ../common/oidc-authservice/base

# Dex

- ../common/dex/overlays/istio

# KNative

- ../common/knative/knative-serving/overlays/gateways

- ../common/knative/knative-eventing/base

- ../common/istio-1-16/cluster-local-gateway/base

# Kubeflow namespace

- ../common/kubeflow-namespace/base

# Kubeflow Roles

- ../common/kubeflow-roles/base

# Kubeflow Istio Resources

- ../common/istio-1-16/kubeflow-istio-resources/base

# Kubeflow Pipelines

- ../apps/pipeline/upstream/env/cert-manager/platform-agnostic-multi-user

# Katib

- ../apps/katib/upstream/installs/katib-with-kubeflow

# Central Dashboard

- ../apps/centraldashboard/upstream/overlays/kserve

# Admission Webhook

- ../apps/admission-webhook/upstream/overlays/cert-manager

# Jupyter Web App

- ../apps/jupyter/jupyter-web-app/upstream/overlays/istio

# Notebook Controller

- ../apps/jupyter/notebook-controller/upstream/overlays/kubeflow

# Profiles + KFAM

# - ../apps/profiles/upstream/overlays/kubeflow

# Volumes Web App

- ../apps/volumes-web-app/upstream/overlays/istio

# Tensorboards Controller

- ../apps/tensorboard/tensorboard-controller/upstream/overlays/kubeflow

# Tensorboard Web App

- ../apps/tensorboard/tensorboards-web-app/upstream/overlays/istio

# Training Operator

- ../apps/training-operator/upstream/overlays/kubeflow

# User namespace

- ../common/user-namespace/base

# KServe

- ../contrib/kserve/kserve

- ../contrib/kserve/models-web-app/overlays/kubeflow

images:
- name: gcr.io/arrikto/istio/pilot:1.14.1-1-g19df463bb
  newName: kenwood/pilot
  newTag: "1.14.1-1-g19df463bb"
- name: gcr.io/arrikto/kubeflow/oidc-authservice:28c59ef
  newName: kenwood/oidc-authservice
  newTag: "28c59ef"
- name: gcr.io/knative-releases/knative.dev/eventing/cmd/controller@sha256:dc0ac2d8f235edb04ec1290721f389d2bc719ab8b6222ee86f17af8d7d2a160f
  newName: kenwood/controller
  newTag: "dc0ac2"
- name: gcr.io/knative-releases/knative.dev/eventing/cmd/mtping@sha256:632d9d710d070efed2563f6125a87993e825e8e36562ec3da0366e2a897406c0
  newName: kenwood/cmd/mtping
  newTag: "632d9d"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/domain-mapping-webhook@sha256:847bb97e38440c71cb4bcc3e430743e18b328ad1e168b6fca35b10353b9a2c22
  newName: kenwood/domain-mapping-webhook
  newTag: "847bb9"
- name: gcr.io/knative-releases/knative.dev/eventing/cmd/webhook@sha256:b7faf7d253bd256dbe08f1cac084469128989cf39abbe256ecb4e1d4eb085a31
  newName: kenwood/webhook
  newTag: "b7faf7"
- name: gcr.io/knative-releases/knative.dev/net-istio/cmd/controller@sha256:f253b82941c2220181cee80d7488fe1cefce9d49ab30bdb54bcb8c76515f7a26
  newName: kenwood/controller
  newTag: "f253b8"
- name: gcr.io/knative-releases/knative.dev/net-istio/cmd/webhook@sha256:a705c1ea8e9e556f860314fe055082fbe3cde6a924c29291955f98d979f8185e
  newName: kenwood/webhook
  newTag: "a705c1"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/activator@sha256:93ff6e69357785ff97806945b284cbd1d37e50402b876a320645be8877c0d7b7
  newName: kenwood/activator
  newTag: "93ff6e"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler@sha256:007820fdb75b60e6fd5a25e65fd6ad9744082a6bf195d72795561c91b425d016
  newName: kenwood/autoscaler
  newTag: "007820"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/controller@sha256:75cfdcfa050af9522e798e820ba5483b9093de1ce520207a3fedf112d73a4686
  newName: kenwood/controller
  newTag: "75cfdc"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/domain-mapping-webhook@sha256:847bb97e38440c71cb4bcc3e430743e18b328ad1e168b6fca35b10353b9a2c22
  newName: kenwood/domain-mapping-webhook
  newTag: "847bb9"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/domain-mapping@sha256:23baa19322320f25a462568eded1276601ef67194883db9211e1ea24f21a0beb
  newName: kenwood/domain-mapping
  newTag: "23baa1"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/queue@sha256:14415b204ea8d0567235143a6c3377f49cbd35f18dc84dfa4baa7695c2a9b53d
  newName: kenwood/queue
  newTag: "14415b"
- name: gcr.io/knative-releases/knative.dev/serving/cmd/webhook@sha256:9084ea8498eae3c6c4364a397d66516a25e48488f4a9871ef765fa554ba483f0
  newName: kenwood/webhook
  newTag: "9084ea"
- name: gcr.io/ml-pipeline/visualization-server:2.0.0-alpha.3
  newName: kenwood/visualization-server
  newTag: "2.0.0-alpha.3"
- name: gcr.io/ml-pipeline/cache-server:2.0.0-alpha.3
  newName: kenwood/cache-server
  newTag: "2.0.0-alpha.3"
- name: gcr.io/ml-pipeline/metadata-envoy:2.0.0-alpha.3
  newName: kenwood/metadata-envoy
  newTag: "2.0.0-alpha.3"
- name: gcr.io/ml-pipeline/viewer-crd-controller:2.0.0-alpha.3
  newName: kenwood/viewer-crd-controller
  newTag: "2.0.0-alpha.3"
- name: gcr.io/arrikto/kubeflow/oidc-authservice:28c59ef
  newName: kenwood/oidc-authservice
  newTag: "28c59ef"
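Most entries in the images section follow one pattern: the new name is the mirror account plus the last path component of the original image, and the new tag is either the original tag or the first six hex characters of the sha256 digest. The sketch below generates entries under exactly that assumption. The function name is hypothetical, and the rule is inferred from the list above rather than taken from the sync tool; it does not reproduce exceptions such as the mtping entry, which maps to kenwood/cmd/mtping.

```bash
# Print a kustomize `images:` entry for one image reference.
# $1 = original reference (tag form or sha256 digest form)
# $2 = target Docker Hub account holding the mirrored images
to_kustomize_image() (
  ref="$1"; repo="$2"
  case "$ref" in
    *@sha256:*)
      path="${ref%%@*}"                           # strip "@sha256:<digest>"
      tag="$(printf '%.6s' "${ref##*@sha256:}")"  # first 6 hex chars of the digest
      ;;
    *)
      path="${ref%:*}"                            # strip ":<tag>"
      tag="${ref##*:}"
      ;;
  esac
  base="${path##*/}"                              # last path component
  printf '%s name: %s\n' '-' "$ref"
  printf '  newName: %s/%s\n' "$repo" "$base"
  printf '  newTag: "%s"\n' "$tag"
)

# Usage, fed by the listing command from section 4.3 (run in the manifests directory):
#   kustomize build example | grep 'image: gcr.io' | awk '$2 != "" { print $2 }' | sort -u |
#     while read -r img; do to_kustomize_image "$img" kenwood; done
```

The generated entries are only correct if the mirror really uses these names and tags, so they should be checked against the Docker Hub repository before being pasted into kustomization.yaml.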

Edit the YAML: add storageClassName: local-storage to each of the following files

apps/katib/upstream/components/mysql/pvc.yaml

apps/pipeline/upstream/third-party/minio/base/minio-pvc.yaml

apps/pipeline/upstream/third-party/mysql/base/mysql-pv-claim.yaml

common/oidc-authservice/base/pvc.yaml
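Adding the same line to four files by hand is easy to get wrong, so the edit can be scripted. The sketch below is one possible approach, assuming each file holds a single PersistentVolumeClaim whose `spec:` key starts at column 0; the function name is hypothetical and the upstream files should be inspected before running it.

```bash
# Insert "storageClassName: <class>" right after the top-level "spec:" line
# of a single-document PVC manifest. Does nothing if the key already exists.
# $1 = manifest path, $2 = storage class (default: local-storage)
add_storage_class() (
  file="$1"; class="${2:-local-storage}"
  if grep -q 'storageClassName:' "$file"; then
    exit 0   # already set; leave the file untouched
  fi
  awk -v cls="$class" '
    { print }
    /^spec:/ { print "  storageClassName: " cls }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
)

# Usage, run from the manifests directory:
#   for f in apps/katib/upstream/components/mysql/pvc.yaml \
#            apps/pipeline/upstream/third-party/minio/base/minio-pvc.yaml \
#            apps/pipeline/upstream/third-party/mysql/base/mysql-pv-claim.yaml \
#            common/oidc-authservice/base/pvc.yaml; do
#     add_storage_class "$f"
#   done
```

Because the function skips files that already contain the key, it can be run again safely after a partial edit.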

4.6 One-command install

https://github.com/kubeflow/manifests#install-with-a-single-command

(base) [root@kubuflow manifests]# pwd

/root/softwares/manifests

(base) [root@kubuflow manifests]# while ! kustomize build example | kubectl apply -f -; do echo "Retrying to apply resources"; sleep 10; done

2022/12/24 16:23:51 well-defined vars that were never replaced: kfp-app-name,kfp-app-version

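The run ends with error: resource mapping not found for name: "kubeflow-user-example-com" namespace: "" from "STDIN": no matches for kind "Profile" in version "kubeflow.org/v1beta1". This can be ignored for now. It comes from the example user namespace shipped with the official manifests, and the Profile kind is most likely unknown because the Profiles + KFAM entry is commented out in the kustomization.yaml above. See also the step-by-step install instructions: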

https://github.com/kubeflow/manifests#user-namespace

kustomize build common/user-namespace/base | kubectl apply -f -

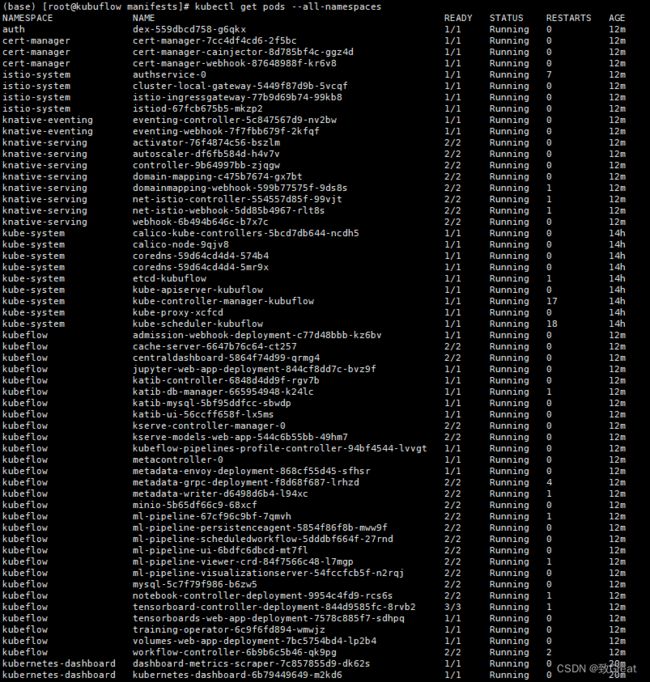
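The `while ! kustomize build example | kubectl apply -f -` loop in this section retries forever, which is awkward when an error such as the missing Profile kind never clears. A bounded variant is sketched below; the `retry` and `apply_example` names and the attempt count are assumptions made for illustration, not part of the official instructions.

```bash
# Run a command until it succeeds, at most $1 times, sleeping $2 seconds
# between attempts. Returns 0 on success, 1 if every attempt failed.
retry() (
  max="$1"; delay="$2"; shift 2
  attempt=1
  while ! "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "Giving up after $attempt attempts" >&2
      exit 1
    fi
    echo "Retrying to apply resources ($attempt/$max)" >&2
    attempt=$((attempt + 1))
    sleep "$delay"
  done
)

# One attempt at the full install; run from the manifests directory.
apply_example() {
  kustomize build example | kubectl apply -f -
}

# Usage (requires a cluster): retry 30 10 apply_example
```

Retrying is still needed because some resources can only be applied once the definitions they depend on have been registered by an earlier pass.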

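Wait a while (you could play a game in the meantime; pulling each pod's image and creating the containers is slow, so be patient), then check the pod status. When every pod is Running, everything went smoothly and the Kubeflow dashboard can be accessed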

(base) [root@kubuflow ~]# kubectl get pods --all-namespaces
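Scanning a long pod list by eye is error-prone, so the check can be reduced to a single number. The sketch below counts pods whose STATUS column is neither Running nor Completed; the function name is hypothetical, and it assumes the default column order of `kubectl get pods --all-namespaces --no-headers` (NAMESPACE NAME READY STATUS RESTARTS AGE).

```bash
# Read `kubectl get pods --all-namespaces --no-headers` output on stdin and
# print how many pods are neither Running nor Completed.
count_unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { n++ } END { print n + 0 }'
}

# Usage (requires a cluster):
#   kubectl get pods --all-namespaces --no-headers | count_unhealthy_pods
```

A result of 0 corresponds to the "everything is Running" state described above. Note that a pod can be Running without all of its containers being ready, so the READY column is still worth a glance.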

The Kubernetes Dashboard also shows that all pods are running normally

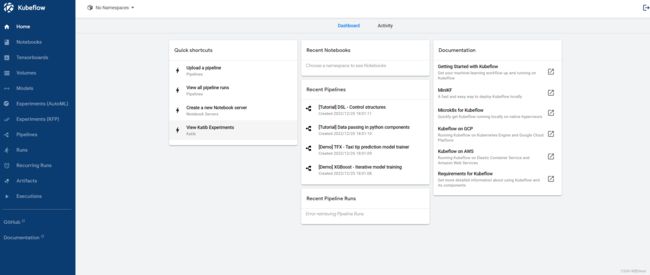

4.7 Access the Kubeflow Dashboard

kubectl port-forward --address 0.0.0.0 svc/istio-ingressgateway -n istio-system 8080:80

--address 0.0.0.0 allows access from other hosts; without it, the port-forward listens on localhost only

Default username and password:

user@example.com

12341234

5 References

- Building the Kubeflow machine learning platform

- Installing the latest Kubernetes, single-node edition v1.21.5