深度学习 第3章前馈神经网络 实验五 pytorch实现

神经网络是由神经元按照一定的连接结构组合而成的网络。神经网络可以看作一个函数,通过简单非线性函数的多次复合,实现输入空间到输出空间的复杂映射 。

前馈神经网络是最早发明的简单人工神经网络。整个网络中的信息单向传播,可以用一个有向无环路图表示,这种网络结构简单,易于实现

目录:

- 4.1 神经元

-

- 4.1.1 净活性值

- 4.1.2 激活函数

-

- 4.1.2.1 Sigmoid 型函数

- 4.1.2.2 ReLU型函数

- 4.2 基于前馈神经网络的二分类任务

-

- 4.2.1 数据集构建

- 4.2.2 模型构建

-

- 4.2.2.1 线性层算子

- 4.2.2.2 Logistic算子

- 4.2.2.3 层的串行组合

- 4.2.3 损失函数二分类交叉熵

- 4.2.4 模型优化

-

- 4.2.4.1 反向传播算法

- 4.2.4.2 损失函数

- 4.2.4.4 线性层

- 4.2.4.5 整个网络

- 4.2.4.6 优化器

- 4.2.5 完善Runner类:RunnerV2_1

- 4.2.6 模型训练

- 4.2.7 性能评价

- 题:

-

- 1. 【思考题】加权求和与仿射变换之间有什么区别和联系?

- 2. 【思考题】对比 3.1 基于Logistic回归的二分类任务 4.2 基于前馈神经网络的二分类任务

- 其他

- 所有代码

- 心得

4.1 神经元

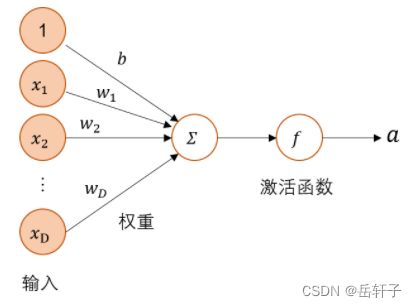

神经网络的基本组成单元为带有非线性激活函数的神经元。

在大脑中,神经网络由称为神经元的神经细胞组成,神经元的主要结构有细胞体、树突(用来接收信号)和轴突(用来传输信号)。一个神经元的轴突末梢和其他神经元的树突相接触,形成突触。神经元通过轴突和突触把产生的信号送到其他的神经元。信号就从树突上的突触进入本细胞,神经元利用一种未知的方法,把所有从树突突触上进来的信号进行相加,如果全部信号的总和超过某个阀值,就会激发神经元进入兴奋状态,产生神经冲动并传递给其他神经元。如果信号总和没有达到阀值,神经元就不会兴奋。图1展示的是一个生物神经元。

人工神经元模拟但简化了生物神经元,是神经网络的基本信息处理单位,其基本要素包括突触、求和单元和激活函数,结构见图2

4.1.1 净活性值

假设一个神经元接收的输入为 x ∈ R D x\in \mathbb{R}^{D} x∈RD,其权重向量为 w ∈ R D w\in \mathbb{R}^{D} w∈RD,神经元所获得的输入信号,即净活性值z的计算方法为:

z = W T x + b z=W^{T}x+b z=WTx+b其中b为偏置。

为了提高预测样本的效率,我们通常会将 N N N个样本归为一组进行成批地预测:

z = X w + b z=Xw+b z=Xw+b其中 X ∈ R N × D X\in \mathbb{R}^{N\times D} X∈RN×D为N个样本的特征矩阵, z ∈ R N z\in \mathbb{R}^{N} z∈RN 为 N N N个预测值组成的列向量。

使用pytorch计算一组输入的净活性值z。代码实现如下:

import torch

X = torch.rand([2, 5]) # 2个特征数为5的样本

w = torch.rand([5, 1]) # 含有5个参数的权重向量

b = torch.rand([1, 1]) # 偏置项

z = torch.matmul(X, w) + b # 使用'torch.matmul'实现矩阵相乘

print("input X:\n", X)

print("weight w:\n", w, "\nbias b:", b)

print("output z:\n", z)

运行结果:

input X: tensor([[0.3794, 0.8122, 0.9398, 0.6996, 0.2361],

[0.9678, 0.4115, 0.0258, 0.9784, 0.2318]])

weight w: tensor([[0.6294],

[0.3592],

[0.5059],

[0.8552],

[0.8733]])

bias b: tensor([[0.1178]])

output z: tensor([[1.9282],

[1.9269]])

净活性值z再经过一个非线性函数 f ( ⋅ ) f(⋅) f(⋅)后,得到神经元的活性值 a a a。

a = f ( z ) a=f(z) a=f(z)注: 在pytorch中,可以使用torch.nn.Linear(features_in, features_out, bias=False)完成输入张量的上述变换。

4.1.2 激活函数

激活函数通常为非线性函数,可以增强神经网络的表示能力和学习能力。常用的激活函数有S型函数和ReLU函数。

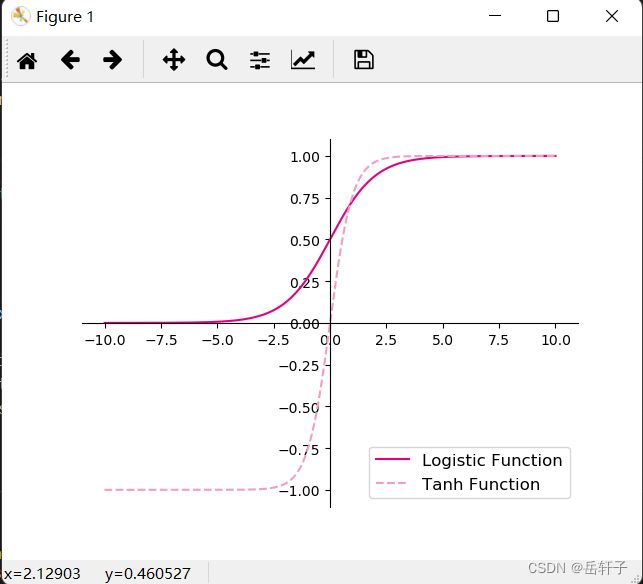

4.1.2.1 Sigmoid 型函数

Sigmoid 型函数是指一类S型曲线函数,为两端饱和函数。常用的 Sigmoid 型函数有 Logistic 函数和 Tanh 函数,其数学表达式为

Logistic 函数:

σ ( z ) = 1 1 + e x p ( − z ) ( 4.4 ) σ(z)= {1 \over 1+exp(−z) }(4.4) σ(z)=1+exp(−z)1(4.4)Tanh 函数:

t a n h ( z ) = e x p ( z ) + e x p ( − z ) e x p ( z ) − e x p ( − z ) tanh(z)= { exp(z)+exp(−z) \over exp(z)−exp(−z)} tanh(z)=exp(z)−exp(−z)exp(z)+exp(−z)

Logistic函数和Tanh函数的代码实现和可视化如下:

import matplotlib.pyplot as plt

# Logistic函数

def logistic(z):

return 1.0 / (1.0 + torch.exp(-z))

# Tanh函数

def tanh(z):

return (torch.exp(z) - torch.exp(-z)) / (torch.exp(z) + torch.exp(-z))

# 在[-10,10]的范围内生成10000个输入值,用于绘制函数曲线

z = torch.linspace(-10, 10, 10000)

plt.figure()

plt.plot(z.tolist(), logistic(z).tolist(), color='#e4007f', label="Logistic Function")

plt.plot(z.tolist(), tanh(z).tolist(), color='#f19ec2', linestyle='--', label="Tanh Function")

ax = plt.gca() # 获取轴,默认有4个

# 隐藏两个轴,通过把颜色设置成none

ax.spines['top'].set_color('none')

ax.spines['right'].set_color('none')

# 调整坐标轴位置

ax.spines['left'].set_position(('data',0))

ax.spines['bottom'].set_position(('data',0))

plt.legend(loc='lower right', fontsize='large')

plt.savefig('fw-logistic-tanh.pdf')

plt.show()

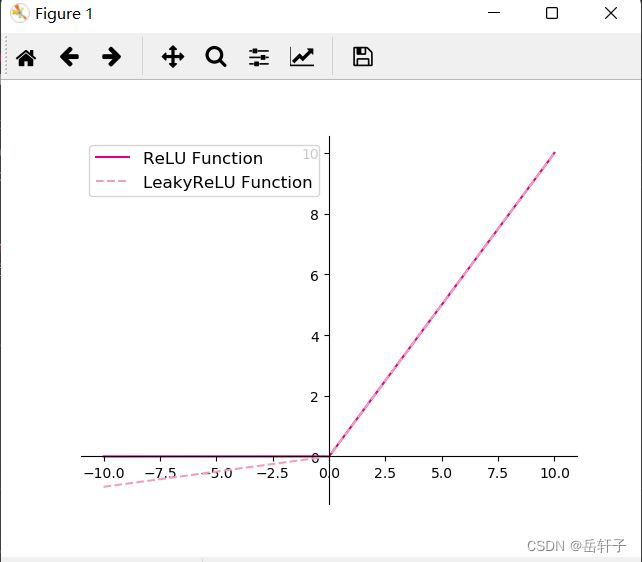

4.1.2.2 ReLU型函数

常见的 R e L U ReLU ReLU函数有 R e L U ReLU ReLU和带泄露的 R e L U ( L e a k y R e L U ) ReLU(Leaky ReLU) ReLU(LeakyReLU),数学表达式分别为:

R e L U ( z ) = m a x ( 0 , z ) ( 4.6 ) L e a k y R e L U ( z ) = m a x ( 0 , z ) + λ m i n ( 0 , z ) ReLU(z)=max(0,z)(4.6)\\ LeakyReLU(z)=max(0,z)+λmin(0,z) ReLU(z)=max(0,z)(4.6)LeakyReLU(z)=max(0,z)+λmin(0,z)其中λ为超参数。

可视化ReLU和带泄露的ReLU的函数的代码实现和可视化如下:

# ReLU

def relu(z):

return torch.maximum(z, torch.tensor(0.))

# 带泄露的ReLU

def leaky_relu(z, negative_slope=0.1):

a1 = (torch.tensor((z > 0), dtype=torch.float32) * z)

a2 = (torch.tensor((z <= 0), dtype=torch.float32) * (negative_slope * z))

return a1 + a2

# 在[-10,10]的范围内生成一系列的输入值,用于绘制relu、leaky_relu的函数曲线

z = torch.linspace(-10, 10, 10000)

plt.figure()

plt.plot(z.tolist(), relu(z).tolist(), color="#e4007f", label="ReLU Function")

plt.plot(z.tolist(), leaky_relu(z).tolist(), color="#f19ec2", linestyle="--", label="LeakyReLU Function")

ax = plt.gca()

ax.spines['top'].set_color('none')

ax.spines['right'].set_color('none')

ax.spines['left'].set_position(('data', 0))

ax.spines['bottom'].set_position(('data', 0))

plt.legend(loc='upper left', fontsize='large')

plt.savefig('fw-relu-leakyrelu.pdf')

plt.show()

4.2 基于前馈神经网络的二分类任务

前馈神经网络的网络结构如图3所示。每一层获取前一层神经元的活性值,并重复上述计算得到该层的活性值,传入到下一层。整个网络中无反馈,信号从输入层向输出层逐层的单向传播,得到网络最后的输出 a ( L ) a^{(L)} a(L)。

4.2.1 数据集构建

使用第3.1.1节中构建的二分类数据集:Moon1000数据集,其中训练集640条、验证集160条、测试集200条。该数据集的数据是从两个带噪音的弯月形状数据分布中采样得到,每个样本包含2个特征。

from nndl.dataset import make_moons

# 采样1000个样本

n_samples = 1000

X, y = make_moons(n_samples=n_samples, shuffle=True, noise=0.5)

num_train = 640

num_dev = 160

num_test = 200

X_train, y_train = X[:num_train], y[:num_train]

X_dev, y_dev = X[num_train:num_train + num_dev], y[num_train:num_train + num_dev]

X_test, y_test = X[num_train + num_dev:], y[num_train + num_dev:]

y_train = y_train.reshape([-1, 1])

y_dev = y_dev.reshape([-1, 1])

y_test = y_test.reshape([-1, 1])

运行结果:

outer_circ_x.shape: torch.Size([500]) outer_circ_y.shape: torch.Size([500])

outer_circ_x.shape: torch.Size([500]) inner_circ_y.shape: torch.Size([500])

after concat shape: torch.Size([1000])

X shape: torch.Size([1000, 2])

y shape: torch.Size([1000])

4.2.2 模型构建

为了更高效的构建前馈神经网络,我们先定义每一层的算子,然后再通过算子组合构建整个前馈神经网络。

假设网络的第l ll层的输入为第 l − 1 l−1 l−1层的神经元活性值 a ( l − 1 ) a(l−1) a(l−1),经过一个仿射变换,得到该层神经元的净活性值 z z z,再输入到激活函数得到该层神经元的活性值 a a a。

在实践中,为了提高模型的处理效率,通常将 N N N个样本归为一组进行成批地计算。假设网络第l层的输入为 A ( l − 1 ) ∈ R N × M l − 1 A ( l − 1 ) ∈ R N × M l − 1 A(l−1)∈RN×Ml−1,其中每一行为一个样本,则前馈网络中第 l l l层的计算公式为

Z ( l ) = A ( l − 1 ) W ( l ) + b ( l ) ∈ R N × M l , ( 4.8 ) A ( l ) = f l ( Z ( l ) ) ∈ R N × M l , ( 4.9 ) Z^{(l)}=A^{(l−1)}W^{(l)}+b^{(l)}∈R^{N×M_l},(4.8)\\ A ^{( l )} = f_l ( Z ^{( l )} ) ∈ R ^{N × M_l} , ( 4.9 ) Z(l)=A(l−1)W(l)+b(l)∈RN×Ml,(4.8)A(l)=fl(Z(l))∈RN×Ml,(4.9)

其中 Z ( l ) Z(l) Z(l)为N个样本第l层神经元的净活性值, A ( l ) A ^{( l )} A(l)为 N N N个样本第 l l l层神经元的活性值, W ( l ) ∈ R M l − 1 × M l W^{( l )} ∈ R ^{M _{l − 1} × M _l} W(l)∈RMl−1×Ml为第 l l l层的权重矩阵, b ( l ) ∈ R 1 × M l b^{( l )} ∈ R ^{1 × M _l} b(l)∈R1×Ml为第 l l l层的偏置。

4.2.2.1 线性层算子

公式(4.8)对应一个线性层算子,权重参数采用默认的随机初始化,偏置采用默认的零初始化。代码实现如下:

from nndl.op import Op

# 实现线性层算子

class Linear(Op):

def __init__(self, input_size, output_size, name, weight_init=torch.randn, bias_init=torch.zeros):

self.params = {}

# 初始化权重

self.params['W'] = weight_init([input_size, output_size])

# 初始化偏置

self.params['b'] = bias_init([1, output_size])

self.inputs = None

self.name = name

def forward(self, inputs):

self.inputs = inputs

outputs = torch.matmul(self.inputs, self.params['W']) + self.params['b']

return outputs

4.2.2.2 Logistic算子

本节我们采用Logistic函数来作为公式(4.9)中的激活函数。这里也将Logistic函数实现一个算子,代码实现如下:

class Logistic(Op):

def __init__(self):

self.inputs = None

self.outputs = None

def forward(self, inputs):

outputs = 1.0 / (1.0 + torch.exp(-inputs))

self.outputs = outputs

return outputs

4.2.2.3 层的串行组合

在定义了神经层的线性层算子和激活函数算子之后,我们可以不断交叉重复使用它们来构建一个多层的神经网络。

下面我们实现一个两层的用于二分类任务的前馈神经网络,选用Logistic作为激活函数,可以利用上面实现的线性层和激活函数算子来组装。代码实现如下:

# 实现一个两层前馈神经网络

class Model_MLP_L2(Op):

def __init__(self, input_size, hidden_size, output_size):

self.fc1 = Linear(input_size, hidden_size, name="fc1")

self.act_fn1 = Logistic()

self.fc2 = Linear(hidden_size, output_size, name="fc2")

self.act_fn2 = Logistic()

def __call__(self, X):

return self.forward(X)

def forward(self, X):

z1 = self.fc1(X)

a1 = self.act_fn1(z1)

z2 = self.fc2(a1)

a2 = self.act_fn2(z2)

return a2

测试一下

现在,我们实例化一个两层的前馈网络,令其输入层维度为5,隐藏层维度为10,输出层维度为1。并随机生成一条长度为5的数据输入两层神经网络,观察输出结果。

# 实例化模型

model = Model_MLP_L2(input_size=5, hidden_size=10, output_size=1)

# 随机生成1条长度为5的数据

X = torch.rand([1, 5])

result = model(X)

print("result: ", result)

运行结果:

result: tensor([[0.1737]])

4.2.3 损失函数二分类交叉熵

损失函数详情见上一章内容中的3.2.3节:

# 实现交叉熵损失函数

class BinaryCrossEntropyLoss(op.Op):

def __init__(self):

self.predicts = None

self.labels = None

self.num = None

def __call__(self, predicts, labels):

return self.forward(predicts, labels)

def forward(self, predicts, labels):

self.predicts = predicts

self.labels = labels

self.num = self.predicts.shape[0]

loss = -1. / self.num * (torch.matmul(self.labels.t(), torch.log(self.predicts)) + torch.matmul((1-self.labels.t()), torch.log(1-self.predicts)))

loss = torch.squeeze(loss, axis=1)

return loss

4.2.4 模型优化

神经网络的参数主要是通过梯度下降法进行优化的,因此需要计算最终损失对每个参数的梯度。

由于神经网络的层数通常比较深,其梯度计算和上一章中的线性分类模型的不同的点在于:线性模型通常比较简单可以直接计算梯度,而神经网络相当于一个复合函数,需要利用链式法则进行反向传播来计算梯度。

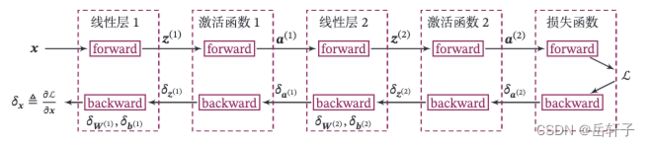

4.2.4.1 反向传播算法

前馈神经网络的参数梯度通常使用误差反向传播算法来计算。使用误差反向传播算法的前馈神经网络训练过程可以分为以下三步:

- 前馈计算每一层的净活性值 Z l Z ^l Zl和激活值 A l A ^l Al,直到最后一层;

- 反向传播计算每一层的误差项 δ l = δ R δ z l \delta^{l} =\frac{\delta R}{\delta z^{l}} δl=δzlδR

- 计算每一层参数的梯度,并更新参数。

在上面实现算子的基础上,来实现误差反向传播算法。在上面的三个步骤中,

第1步是前向计算,可以利用算子的forward()方法来实现;

第2步是反向计算梯度,可以利用算子的backward()方法来实现;

第3步中的计算参数梯度也放到backward()中实现,更新参数放到另外的优化器中专门进行。

这样,在模型训练过程中,我们首先执行模型的forward(),再执行模型的backward(),就得到了所有参数的梯度,之后再利用优化器迭代更新参数。

以这我们这节中构建的两层全连接前馈神经网络Model_MLP_L2为例,下图给出了其前向和反向计算过程:

下面我们按照反向的梯度传播顺序,为每个算子添加backward()方法,并在其中实现每一层参数的梯度的计算。

4.2.4.2 损失函数

二分类交叉熵损失函数对神经网络的输出\hat{y}的偏导数为:

∂ R ∂ y ^ = − 1 N ( d i a l o g ( 1 y ^ ) y − d i a l o g ( 1 1 − y ^ ) ( 1 − y ) ) = − 1 N ( 1 y ^ ⊙ y − 1 1 − y ^ ⊙ ( 1 − y ) ) \frac{\partial R}{\partial \hat{y} }=-\frac{1}{N} (dialog(\frac{1}{\hat{y} } )y-dialog(\frac{1}{1-\hat{y} })(1-y)) \\ =-\frac{1}{N}(\frac{1}{\hat{y} } \odot y-\frac{1}{1-\hat{y} }\odot (1-y)) ∂y^∂R=−N1(dialog(y^1)y−dialog(1−y^1)(1−y))=−N1(y^1⊙y−1−y^1⊙(1−y))其中dialog(x)表示以向量x为对角元素的对角阵, 1 x = 1 x 1 , . . . , 1 x N \frac{1}{x} =\frac{1}{x1} ,...,\frac{1}{xN} x1=x11,...,xN1表示逐元素除, ⊙ \odot ⊙表示逐元素积。

实现损失函数的backward(),代码实现如下:

# 实现交叉熵损失函数

class BinaryCrossEntropyLoss(Op):

def __init__(self, model):

self.predicts = None

self.labels = None

self.num = None

self.model = model

def __call__(self, predicts, labels):

return self.forward(predicts, labels)

def forward(self, predicts, labels):

self.predicts = predicts

self.labels = labels

self.num = self.predicts.shape[0]

loss = -1. / self.num * (torch.matmul(self.labels.t(), torch.log(self.predicts))

+ torch.matmul((1 - self.labels.t()), torch.log(1 - self.predicts)))

loss = torch.squeeze(loss, dim=1)

return loss

def backward(self):

# 计算损失函数对模型预测的导数

loss_grad_predicts = -1.0 * (self.labels / self.predicts -

(1 - self.labels) / (1 - self.predicts)) / self.num

# 梯度反向传播

self.model.backward(loss_grad_predicts)

4.2.4.3 Logistic算子

在本节中,我们使用Logistic激活函数,所以这里为Logistic算子增加的反向函数。

Logistic算子的前向过程表示为 A = σ ( Z ) \boldsymbol{A}=\sigma(\boldsymbol{Z}) A=σ(Z),其中 σ \sigma σ为Logistic函数, Z ∈ R N × D \boldsymbol{Z} \in R^{N \times D} Z∈RN×D和 A ∈ R N × D \boldsymbol{A} \in R^{N \times D} A∈RN×D的每一行表示一个样本。

为了简便起见,我们分别用向量 a ∈ R D \boldsymbol{a} \in R^D a∈RD和 z ∈ R D \boldsymbol{z} \in R^D z∈RD表示同一个样本在激活函数前后的表示,则a对z的偏导数为:

∂ z ∂ a = d i a g ( a ⊙ ( 1 − a ) ) ∈ R D × D , ( 4.12 ) {∂z\over∂a}=diag(a⊙(1−a))∈R^{D×D} ,(4.12) ∂a∂z=diag(a⊙(1−a))∈RD×D,(4.12)按照反向传播算法,令 δ a = ∂ R ∂ a ∈ R D δa={∂R \over ∂a}∈R^D δa=∂a∂R∈RD表示最终损失R对Logistic算子的单个输出a的梯度,则

δ z ≜ ∂ R ∂ z = ∂ a ∂ z δ a ( 4.13 ) = d i a g ( a ⊙ ( 1 − a ) ) δ ( a ) , ( 4.14 ) = a ⊙ ( 1 − a ) ⊙ δ ( a ) 。 ( 4.15 ) δz≜∂R∂z=∂a∂zδa(4.13)\\ =diag(a⊙(1−a))δ(a),(4.14)\\=a⊙(1−a)⊙δ(a)。(4.15) δz≜∂R∂z=∂a∂zδa(4.13)=diag(a⊙(1−a))δ(a),(4.14)=a⊙(1−a)⊙δ(a)。(4.15)将上面公式利用批量数据表示的方式重写,令 δ A = ∂ R ∂ A ∈ R N × D δA={∂R\over ∂A}∈R^{N×D} δA=∂A∂R∈RN×D表示最终损失 R R R对Logistic算子输出 A A A 的梯度,损失函数对Logistic函数输入 Z Z Z的导数为

δ Z = A ⊙ ( 1 − A ) ⊙ δ A ∈ R N × D , ( 4.16 ) δZ=A⊙(1−A)⊙δA∈RN×D,(4.16) δZ=A⊙(1−A)⊙δA∈RN×D,(4.16) δ Z δZ δZ为Logistic算子反向传播的输出。

由于Logistic函数中没有参数,这里不需要在backward()方法中计算该算子参数的梯度。

class Logistic(Op):

def __init__(self):

self.inputs = None

self.outputs = None

self.params = None

def forward(self, inputs):

outputs = 1.0 / (1.0 + torch.exp(-inputs))

self.outputs = outputs

return outputs

def backward(self, grads):

# 计算Logistic激活函数对输入的导数

outputs_grad_inputs = torch.multiply(self.outputs, (1.0 - self.outputs))

return torch.multiply(grads, outputs_grad_inputs)

4.2.4.4 线性层

线性层算子Linear的前向过程表示为 Y = X W + b \boldsymbol{Y}=\boldsymbol{X}\boldsymbol{W}+\boldsymbol{b} Y=XW+b,其中输入为 X ∈ R N × M \boldsymbol{X} \in R^{N \times M} X∈RN×M,输出为 Y ∈ R N × D \boldsymbol{Y} \in R^{N \times D} Y∈RN×D,参数为权重矩阵 W ∈ R M × D \boldsymbol{W} \in R^{M \times D} W∈RM×D和偏置 b ∈ R 1 × D \boldsymbol{b} \in R^{1 \times D} b∈R1×D。 X X X 和 Y Y Y中的每一行表示一个样本。

为了简便起见,我们用向量 y ∈ R D \boldsymbol{y}\in R^D y∈RD和 x ∈ R M \boldsymbol{x}\in R^M x∈RM表示同一个样本在线性层算子中的输入和输出,则 y = W T x + b T \boldsymbol{y}=\boldsymbol{W}^T\boldsymbol{x}+\boldsymbol{b}^T y=WTx+bT。 y y y对输入 x x x 的偏导数为

∂ y ∂ x = W ∈ R D × M 。 ( 4.17 ) {∂y\over∂x}=W∈R^{D×M}。(4.17) ∂x∂y=W∈RD×M。(4.17)线性层输入的梯度 按照反向传播算法,令 δ y = ∂ R ∂ y ∈ R D δy={∂R\over∂y}∈R^D δy=∂y∂R∈RD表示最终损失对线性层算子的单个输出 y y y 的梯度,则

δ x ≜ ∂ R ∂ x = W δ y 。 ( 4.18 ) δx≜{∂R \over ∂x}=Wδy。(4.18) δx≜∂x∂R=Wδy。(4.18)将上面公式利用批量数据表示的方式重写,令 δ Y = ∂ R ∂ Y ∈ R N × D δY={∂R\over∂Y}∈R^{N×D} δY=∂Y∂R∈RN×D表示最终损失对线性层算子输出的梯度,公式可以重写为

δ X = δ Y W T , ( 4.19 ) δX=δYW^T,(4.19) δX=δYWT,(4.19)其中 δ X δX δX为线性层算子反向函数的输出。

计算线性层参数的梯度 由于线性层算子中包含有可学习的参数 W W W和 b b b,因此backward()除了实现梯度反传外,还需要计算算子内部的参数的梯度。

令 δ y = ∂ R ∂ y ∈ R D δy={∂R\over∂y}∈R^D δy=∂y∂R∈RD表示最终损失 R R R对线性层算子的单个输出 y y y 的梯度,则

δ W ≜ ∂ R ∂ W = x δ y T , ( 4.20 ) δ b ≜ ∂ R ∂ b = δ y T 。 ( 4.21 ) δW≜{∂R\over ∂W}=xδ^T_y,(4.20)\\ δb≜{∂R\over∂b}=δ^T_y。(4.21) δW≜∂W∂R=xδyT,(4.20)δb≜∂b∂R=δyT。(4.21)将上面公式利用批量数据表示的方式重写,令 δ Y = ≜ ∂ R ∂ Y ∈ R N × D δY=≜{∂R\over∂Y}∈R^{N×D} δY=≜∂Y∂R∈RN×D表示最终损失对线性层算子输出的梯度,则公式可以重写为

δ W = X T δ Y , ( 4.22 ) δ b = 1 T δ Y 。 ( 4.23 ) δW=X^Tδ_Y,(4.22) \\δb=1^Tδ_Y。(4.23) δW=XTδY,(4.22)δb=1TδY。(4.23)具体实现代码如下:

class Linear(Op):

def __init__(self, input_size, output_size, name, weight_init=torch.randn, bias_init=torch.zeros):

self.params = {}

self.params['W'] = weight_init([input_size, output_size])

self.params['b'] = bias_init([1, output_size])

self.inputs = None

self.grads = {}

self.name = name

def forward(self, inputs):

self.inputs = inputs

outputs = torch.matmul(self.inputs, self.params['W']) + self.params['b']

return outputs

def backward(self, grads):

self.grads['W'] = torch.matmul(self.inputs.T, grads)

self.grads['b'] = torch.sum(grads, dim=0)

# 线性层输入的梯度

return torch.matmul(grads, self.params['W'].T)

4.2.4.5 整个网络

实现完整的两层神经网络的前向和反向计算。代码实现如下:

class Model_MLP_L2(Op):

def __init__(self, input_size, hidden_size, output_size):

# 线性层

self.fc1 = Linear(input_size, hidden_size, name="fc1")

# Logistic激活函数层

self.act_fn1 = Logistic()

self.fc2 = Linear(hidden_size, output_size, name="fc2")

self.act_fn2 = Logistic()

self.layers = [self.fc1, self.act_fn1, self.fc2, self.act_fn2]

def __call__(self, X):

return self.forward(X)

# 前向计算

def forward(self, X):

z1 = self.fc1(X)

a1 = self.act_fn1(z1)

z2 = self.fc2(a1)

a2 = self.act_fn2(z2)

return a2

# 反向计算

def backward(self, loss_grad_a2):

loss_grad_z2 = self.act_fn2.backward(loss_grad_a2)

loss_grad_a1 = self.fc2.backward(loss_grad_z2)

loss_grad_z1 = self.act_fn1.backward(loss_grad_a1)

loss_grad_inputs = self.fc1.backward(loss_grad_z1)

4.2.4.6 优化器

在计算好神经网络参数的梯度之后,我们将梯度下降法中参数的更新过程实现在优化器中。

与第3章中实现的梯度下降优化器SimpleBatchGD不同的是,此处的优化器需要遍历每层,对每层的参数分别做更新。

from nndl.opitimizer import Optimizer

class BatchGD(Optimizer):

def __init__(self, init_lr, model):

super(BatchGD, self).__init__(init_lr=init_lr, model=model)

def step(self):

# 参数更新

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

for key in layer.params.keys():

layer.params[key] = layer.params[key] - self.init_lr * layer.grads[key]

4.2.5 完善Runner类:RunnerV2_1

基于3.1.6实现的 RunnerV2 类主要针对比较简单的模型。而在本章中,模型由多个算子组合而成,通常比较复杂,因此本节继续完善并实现一个改进版: RunnerV2_1类,其主要加入的功能有:

- 支持自定义算子的梯度计算,在训练过程中调用self.loss_fn.backward()从损失函数开始反向计算梯度;

- 每层的模型保存和加载,将每一层的参数分别进行保存和加载。

import os

class RunnerV2_1(object):

def __init__(self, model, optimizer, metric, loss_fn, **kwargs):

self.model = model

self.optimizer = optimizer

self.loss_fn = loss_fn

self.metric = metric

# 记录训练过程中的评估指标变化情况

self.train_scores = []

self.dev_scores = []

# 记录训练过程中的评价指标变化情况

self.train_loss = []

self.dev_loss = []

def train(self, train_set, dev_set, **kwargs):

# 传入训练轮数,如果没有传入值则默认为0

num_epochs = kwargs.get("num_epochs", 0)

# 传入log打印频率,如果没有传入值则默认为100

log_epochs = kwargs.get("log_epochs", 100)

# 传入模型保存路径

save_dir = kwargs.get("save_dir", None)

# 记录全局最优指标

best_score = 0

# 进行num_epochs轮训练

for epoch in range(num_epochs):

X, y = train_set

# 获取模型预测

logits = self.model(X)

# 计算交叉熵损失

trn_loss = self.loss_fn(logits, y) # return a tensor

self.train_loss.append(trn_loss.item())

# 计算评估指标

trn_score = self.metric(logits, y).item()

self.train_scores.append(trn_score)

self.loss_fn.backward()

# 参数更新

self.optimizer.step()

dev_score, dev_loss = self.evaluate(dev_set)

# 如果当前指标为最优指标,保存该模型

if dev_score > best_score:

print(f"[Evaluate] best accuracy performence has been updated: {best_score:.5f} --> {dev_score:.5f}")

best_score = dev_score

if save_dir:

self.save_model(save_dir)

if log_epochs and epoch % log_epochs == 0:

print(f"[Train] epoch: {epoch}/{num_epochs}, loss: {trn_loss.item()}")

def evaluate(self, data_set):

X, y = data_set

# 计算模型输出

logits = self.model(X)

# 计算损失函数

loss = self.loss_fn(logits, y).item()

self.dev_loss.append(loss)

# 计算评估指标

score = self.metric(logits, y).item()

self.dev_scores.append(score)

return score, loss

def predict(self, X):

return self.model(X)

def save_model(self, save_dir):

# 对模型每层参数分别进行保存,保存文件名称与该层名称相同

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

torch.save(layer.params, os.path.join(save_dir, layer.name+".pdparams"))

def load_model(self, model_dir):

# 获取所有层参数名称和保存路径之间的对应关系

model_file_names = os.listdir(model_dir)

name_file_dict = {}

for file_name in model_file_names:

name = file_name.replace(".pdparams", "")

name_file_dict[name] = os.path.join(model_dir, file_name)

# 加载每层参数

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

name = layer.name

file_path = name_file_dict[name]

layer.params = torch.load(file_path)

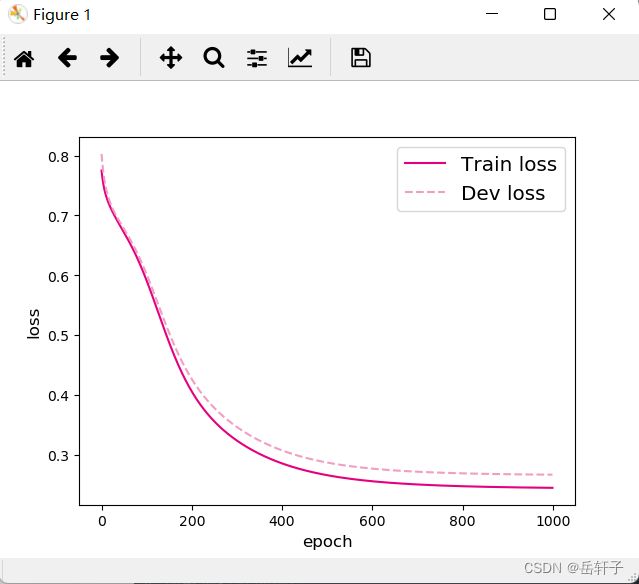

4.2.6 模型训练

基于RunnerV2_1,使用训练集和验证集进行模型训练,共训练2000个epoch。评价指标为第章介绍的accuracy。代码实现如下:

from nndl.metric import accuracy

torch.manual_seed(123)

epoch_num = 1000

model_saved_dir = "model"

# 输入层维度为2

input_size = 2

# 隐藏层维度为5

hidden_size = 5

# 输出层维度为1

output_size = 1

# 定义网络

model = Model_MLP_L2(input_size=input_size, hidden_size=hidden_size, output_size=output_size)

# 损失函数

loss_fn = BinaryCrossEntropyLoss(model)

# 优化器

learning_rate = 0.2

optimizer = BatchGD(learning_rate, model)

# 评价方法

metric = accuracy

# 实例化RunnerV2_1类,并传入训练配置

runner = RunnerV2_1(model, optimizer, metric, loss_fn)

runner.train([X_train, y_train], [X_dev, y_dev], num_epochs=epoch_num, log_epochs=50, save_dir=model_saved_dir)

运行结果:

[Evaluate] best accuracy performence has been updated: 0.00000 --> 0.50000

[Train] epoch: 0/1000, loss: 0.7837428450584412

[Evaluate] best accuracy performence has been updated: 0.50000 --> 0.55625

[Evaluate] best accuracy performence has been updated: 0.55625 --> 0.66250

[Evaluate] best accuracy performence has been updated: 0.66250 --> 0.69375

[Evaluate] best accuracy performence has been updated: 0.69375 --> 0.70000

[Evaluate] best accuracy performence has been updated: 0.70000 --> 0.73750

[Evaluate] best accuracy performence has been updated: 0.73750 --> 0.74375

[Evaluate] best accuracy performence has been updated: 0.74375 --> 0.75625

[Evaluate] best accuracy performence has been updated: 0.75625 --> 0.76250

[Evaluate] best accuracy performence has been updated: 0.76250 --> 0.76875

[Evaluate] best accuracy performence has been updated: 0.76875 --> 0.77500

[Evaluate] best accuracy performence has been updated: 0.77500 --> 0.78750

[Evaluate] best accuracy performence has been updated: 0.78750 --> 0.79375

[Evaluate] best accuracy performence has been updated: 0.79375 --> 0.80000

[Train] epoch: 50/1000, loss: 0.6765806674957275

[Evaluate] best accuracy performence has been updated: 0.80000 --> 0.80625

[Train] epoch: 100/1000, loss: 0.6159222722053528

[Train] epoch: 150/1000, loss: 0.5496628880500793

[Train] epoch: 200/1000, loss: 0.5038267374038696

[Train] epoch: 250/1000, loss: 0.48039698600769043

[Train] epoch: 300/1000, loss: 0.4689186215400696

[Train] epoch: 350/1000, loss: 0.46293506026268005

[Train] epoch: 400/1000, loss: 0.45961514115333557

[Train] epoch: 450/1000, loss: 0.45769912004470825

[Train] epoch: 500/1000, loss: 0.45656299591064453

[Evaluate] best accuracy performence has been updated: 0.80625 --> 0.81250

[Train] epoch: 550/1000, loss: 0.4558698832988739

[Evaluate] best accuracy performence has been updated: 0.81250 --> 0.81875

[Train] epoch: 600/1000, loss: 0.4554292857646942

[Train] epoch: 650/1000, loss: 0.45513463020324707

[Train] epoch: 700/1000, loss: 0.4549262225627899

[Train] epoch: 750/1000, loss: 0.45476943254470825

[Train] epoch: 800/1000, loss: 0.4546455442905426

[Train] epoch: 850/1000, loss: 0.45454326272010803

[Train] epoch: 900/1000, loss: 0.45445701479911804

[Train] epoch: 950/1000, loss: 0.4543817639350891

可视化观察训练集与验证集的损失函数变化情况。

# 打印训练集和验证集的损失

plt.figure()

plt.plot(range(epoch_num), runner.train_loss, color="#e4007f", label="Train loss")

plt.plot(range(epoch_num), runner.dev_loss, color="#f19ec2", linestyle='--', label="Dev loss")

plt.xlabel("epoch", fontsize='large')

plt.ylabel("loss", fontsize='large')

plt.legend(fontsize='x-large')

plt.savefig('fw-loss2.pdf')

plt.show()

#加载训练好的模型

runner.load_model(model_saved_dir)

# 在测试集上对模型进行评价

score, loss = runner.evaluate([X_test, y_test])

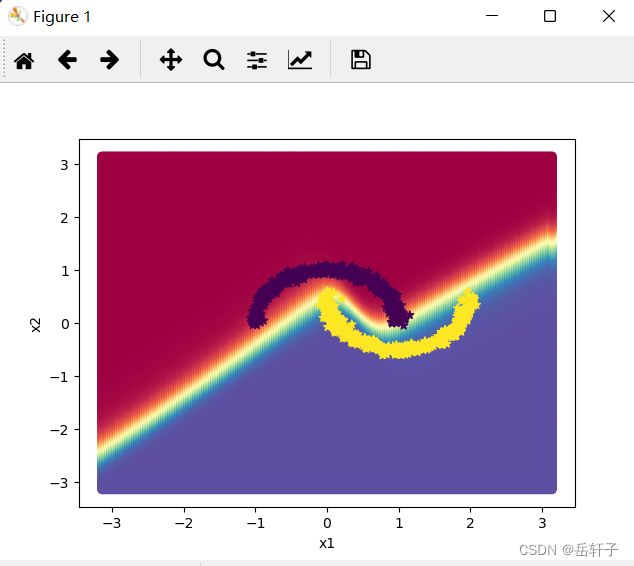

4.2.7 性能评价

使用测试集对训练中的最优模型进行评价,观察模型的评价指标。代码实现如下:

# 加载训练好的模型

runner.load_model(model_saved_dir)

# 在测试集上对模型进行评价

score, loss = runner.evaluate([X_test, y_test])

print("[Test] score/loss: {:.4f}/{:.4f}".format(score, loss))

运行结果:

[Test] score/loss: 0.8950/0.2498

从结果来看,模型在测试集上取得了较高的准确率。

下面对结果进行可视化:

import math

x1, x2 = torch.meshgrid(torch.linspace(-math.pi, math.pi, 200), torch.linspace(-math.pi, math.pi, 200), indexing='ij')

x = torch.stack([torch.flatten(x1), torch.flatten(x2)], dim=1)

# 预测对应类别

y = runner.predict(x)

y = torch.squeeze((y >= 0.5).to(torch.float32), dim=-1)

# 绘制类别区域

plt.ylabel('x2')

plt.xlabel('x1')

plt.scatter(x[:, 0].tolist(), x[:, 1].tolist(), c=y.tolist(), cmap=plt.cm.Spectral)

plt.scatter(X_train[:, 0].tolist(), X_train[:, 1].tolist(), marker='*', c=torch.squeeze(y_train, dim=-1).tolist())

plt.scatter(X_dev[:, 0].tolist(), X_dev[:, 1].tolist(), marker='*', c=torch.squeeze(y_dev, dim=-1).tolist())

plt.scatter(X_test[:, 0].tolist(), X_test[:, 1].tolist(), marker='*', c=torch.squeeze(y_test, dim=-1).tolist())

plt.show()

题:

1. 【思考题】加权求和与仿射变换之间有什么区别和联系?

答:

- 加权和:相当于降维的一种,即将多维数据根据其重要性进行求和降成一维数据。

- 仿射变换:又称仿射映射,是指在几何中,一个向量空间进行一次线性变换并接上一个平移,变换为另一个向量空间。

- 加权求和可简单看成对输入的信息的线性变换 ,从几何上来看,在变换前后原点是不发生改变的。仿射变换在图形学中也叫仿射映射,是指一个向量空间经过一次线性变换,再经过一次平移,变换为另一个向量空间,因此仿射变换在几何上没有原点保持不变这一特点。只有当仿射变换的平移项时,仿射变换才变为线性变换。

2. 【思考题】对比 3.1 基于Logistic回归的二分类任务 4.2 基于前馈神经网络的二分类任务

谈谈自己的看法

基于Logistic回归的二分类任务为线性模型,通常可直接计算出梯度,而神经网络在计算梯度时则相对比较复杂,需要使用反向传播来计算梯度。相较于基于Logistic回归的二分类任务,在大样本的情况下基于前馈神经网络的二分类任务的优势更大。基于Logistic回归的二分类任务的正确率受随机误差大小和变量个数的影响大,基于前馈神经网络的二分类任务的正确率受样本数量的影响大。

其他

看一个有趣的,这里我们把噪音调小(数据密集),学习率调大(容易过拟合),然后进行训练

代码:

import os

import torch

from abc import abstractmethod

import torch

import math

import numpy as np

# 新增make_moons函数

def make_moons(n_samples=1000, shuffle=True, noise=None):

n_samples_out = n_samples // 2

n_samples_in = n_samples - n_samples_out

outer_circ_x = torch.cos(torch.linspace(0, math.pi, n_samples_out))

outer_circ_y = torch.sin(torch.linspace(0, math.pi, n_samples_out))

inner_circ_x = 1 - torch.cos(torch.linspace(0, math.pi, n_samples_in))

inner_circ_y = 0.5 - torch.sin(torch.linspace(0, math.pi, n_samples_in))

X = torch.stack(

[torch.cat([outer_circ_x, inner_circ_x]),

torch.cat([outer_circ_y, inner_circ_y])],

axis=1

)

y = torch.cat(

[torch.zeros([n_samples_out]), torch.ones([n_samples_in])]

)

if shuffle:

idx = torch.randperm(X.shape[0])

X = X[idx]

y = y[idx]

if noise is not None:

X += np.random.normal(0.0, noise, X.shape)

return X, y

n_samples = 1000

X, y = make_moons(n_samples=n_samples, shuffle=True, noise=0.05)

num_train = 640

num_dev = 160

num_test = 200

X_train, y_train = X[:num_train], y[:num_train]

X_dev, y_dev = X[num_train:num_train + num_dev], y[num_train:num_train + num_dev]

X_test, y_test = X[num_train + num_dev:], y[num_train + num_dev:]

y_train = y_train.reshape([-1,1])

y_dev = y_dev.reshape([-1,1])

y_test = y_test.reshape([-1,1])

class Op(object):

def __init__(self):

pass

def __call__(self, inputs):

return self.forward(inputs)

def forward(self, inputs):

raise NotImplementedError

def backward(self, inputs):

raise NotImplementedError

Op=Op

# 实现交叉熵损失函数

class BinaryCrossEntropyLoss(Op):

def __init__(self, model):

self.predicts = None

self.labels = None

self.num = None

self.model = model

def __call__(self, predicts, labels):

return self.forward(predicts, labels)

def forward(self, predicts, labels):

self.predicts = predicts

self.labels = labels

self.num = self.predicts.shape[0]

loss = -1. / self.num * (torch.matmul(self.labels.t().to(torch.float), torch.log(self.predicts).to(torch.float32))

+ torch.matmul((1 - self.labels.t().to(torch.float)), torch.log(1 - self.predicts).to(torch.float32)))

loss = torch.squeeze(loss, axis=1)

return loss

def backward(self):

# 计算损失函数对模型预测的导数

loss_grad_predicts = -1.0 * (self.labels / self.predicts -

(1 - self.labels) / (1 - self.predicts)) / self.num

# 梯度反向传播

self.model.backward(loss_grad_predicts)

class Logistic(Op):

def __init__(self):

self.inputs = None

self.outputs = None

self.params = None

def forward(self, inputs):

outputs = 1.0 / (1.0 + torch.exp(-inputs))

self.outputs = outputs

return outputs

def backward(self, grads):

# 计算Logistic激活函数对输入的导数

outputs_grad_inputs = torch.multiply(self.outputs, (1.0 - self.outputs))

return torch.multiply(grads,outputs_grad_inputs)

class Linear(Op):

def __init__(self, input_size, output_size, name, weight_init=np.random.standard_normal, bias_init=torch.zeros):

self.params = {}

self.params['W'] = weight_init([input_size, output_size])

self.params['W'] = torch.as_tensor(self.params['W'],dtype=torch.float32)

self.params['b'] = bias_init([1, output_size])

self.inputs = None

self.grads = {}

self.name = name

def forward(self, inputs):

self.inputs = inputs

outputs = torch.matmul(self.inputs.to(torch.float32), self.params['W']) + self.params['b']

return outputs

def backward(self, grads):

self.grads['W'] = torch.matmul(self.inputs.T.to(torch.float32), grads)

self.grads['b'] = torch.sum(grads, dim=0)

return torch.matmul(grads.to(torch.float32), self.params['W'].T)

class Model_MLP_L2(Op):

def __init__(self, input_size, hidden_size, output_size):

self.fc1 = Linear(input_size, hidden_size, name="fc1")

self.act_fn1 = Logistic()

self.fc2 = Linear(hidden_size, output_size, name="fc2")

self.act_fn2 = Logistic()

self.layers = [self.fc1, self.act_fn1, self.fc2, self.act_fn2]

def __call__(self, X):

return self.forward(X)

def forward(self, X):

z1 = self.fc1(X)

a1 = self.act_fn1(z1)

z2 = self.fc2(a1)

a2 = self.act_fn2(z2)

return a2

# 反向计算

def backward(self, loss_grad_a2):

loss_grad_z2 = self.act_fn2.backward(loss_grad_a2)

loss_grad_a1 = self.fc2.backward(loss_grad_z2)

loss_grad_z1 = self.act_fn1.backward(loss_grad_a1)

loss_grad_inputs = self.fc1.backward(loss_grad_z1)

#新增优化器基类

class Optimizer(object):

def __init__(self, init_lr, model):

#初始化学习率,用于参数更新的计算

self.init_lr = init_lr

#指定优化器需要优化的模型

self.model = model

@abstractmethod

def step(self):

pass

class BatchGD(Optimizer):

def __init__(self, init_lr, model):

super(BatchGD, self).__init__(init_lr=init_lr, model=model)

def step(self):

# 参数更新

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

for key in layer.params.keys():

layer.params[key] = layer.params[key] - self.init_lr * layer.grads[key]

class RunnerV2_1(object):

def __init__(self, model, optimizer, metric, loss_fn, **kwargs):

self.model = model

self.optimizer = optimizer

self.loss_fn = loss_fn

self.metric = metric

# 记录训练过程中的评估指标变化情况

self.train_scores = []

self.dev_scores = []

# 记录训练过程中的评价指标变化情况

self.train_loss = []

self.dev_loss = []

def train(self, train_set, dev_set, **kwargs):

# 传入训练轮数,如果没有传入值则默认为0

num_epochs = kwargs.get("num_epochs", 0)

# 传入log打印频率,如果没有传入值则默认为100

log_epochs = kwargs.get("log_epochs", 100)

# 传入模型保存路径

save_dir = kwargs.get("save_dir", None)

# 记录全局最优指标

best_score = 0

# 进行num_epochs轮训练

for epoch in range(num_epochs):

X, y = train_set

# 获取模型预测

logits = self.model(X)

# 计算交叉熵损失

trn_loss = self.loss_fn(logits, y) # return a tensor

self.train_loss.append(trn_loss.item())

# 计算评估指标

trn_score = self.metric(logits, y).item()

self.train_scores.append(trn_score)

self.loss_fn.backward()

# 参数更新

self.optimizer.step()

dev_score, dev_loss = self.evaluate(dev_set)

# 如果当前指标为最优指标,保存该模型

if dev_score > best_score:

print(f"[Evaluate] best accuracy performence has been updated: {best_score:.5f} --> {dev_score:.5f}")

best_score = dev_score

if save_dir:

self.save_model(save_dir)

if log_epochs and epoch % log_epochs == 0:

print(f"[Train] epoch: {epoch}/{num_epochs}, loss: {trn_loss.item()}")

def evaluate(self, data_set):

X, y = data_set

# 计算模型输出

logits = self.model(X)

# 计算损失函数

loss = self.loss_fn(logits, y).item()

self.dev_loss.append(loss)

# 计算评估指标

score = self.metric(logits, y).item()

self.dev_scores.append(score)

return score, loss

def predict(self, X):

return self.model(X)

def save_model(self, save_dir):

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

torch.save(layer.params, os.path.join(save_dir, layer.name + ".pdparams"))

def load_model(self, model_dir):

# 获取所有层参数名称和保存路径之间的对应关系

model_file_names = os.listdir(model_dir)

name_file_dict = {}

for file_name in model_file_names:

name = file_name.replace(".pdparams", "")

name_file_dict[name] = os.path.join(model_dir, file_name)

# 加载每层参数

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

name = layer.name

file_path = name_file_dict[name]

layer.params = torch.load(file_path)

def accuracy(preds, labels):

# 判断是二分类任务还是多分类任务,preds.shape[1]=1时为二分类任务,preds.shape[1]>1时为多分类任务

if preds.shape[1] == 1:

preds=(preds>=0.5).to(torch.float32)

else:

preds = torch.argmax(preds,dim=1).int()

return torch.mean((preds == labels).float())

epoch_num = 1000

model_saved_dir = 'model'

# 输入层维度为2

input_size = 2

# 隐藏层维度为5

hidden_size = 5

# 输出层维度为1

output_size = 1

# 定义网络

model = Model_MLP_L2(input_size=input_size, hidden_size=hidden_size, output_size=output_size)

# 损失函数

loss_fn = BinaryCrossEntropyLoss(model)

# 优化器

learning_rate = 3

optimizer = BatchGD(learning_rate, model)

# 评价方法

metric = accuracy

# # 实例化RunnerV2_1类,并传入训练配置

runner = RunnerV2_1(model, optimizer, metric, loss_fn)

#

runner.train([X_train, y_train], [X_dev, y_dev], num_epochs=epoch_num, log_epochs=50, save_dir=model_saved_dir)

#加载训练好的模型

runner.load_model(model_saved_dir)

# 在测试集上对模型进行评价

score, loss = runner.evaluate([X_test, y_test])

print("[Test] score/loss: {:.4f}/{:.4f}".format(score, loss))

import matplotlib.pyplot as plt

# # 打印训练集和验证集的损失

# plt.figure()

# plt.plot(range(epoch_num), runner.train_loss, color="#e4007f", label="Train loss")

# plt.plot(range(epoch_num), runner.dev_loss, color="#f19ec2", linestyle='--', label="Dev loss")

# plt.xlabel("epoch", fontsize='large')

# plt.ylabel("loss", fontsize='large')

# plt.legend(fontsize='x-large')

# plt.show()

import math

# 均匀生成40000个数据点

x1, x2 = torch.meshgrid(torch.linspace(-math.pi, math.pi, 200), torch.linspace(-math.pi, math.pi, 200),indexing='ij')

x = torch.stack([torch.flatten(x1), torch.flatten(x2)], axis=1)

# 预测对应类别

y = runner.predict(x)

y = y.reshape([-1])

# 绘制类别区域

plt.ylabel('x2')

plt.xlabel('x1')

plt.scatter(x[:,0].tolist(), x[:,1].tolist(), c=y.tolist(), cmap=plt.cm.Spectral)

plt.scatter(X_train[:, 0].tolist(), X_train[:, 1].tolist(), marker='*', c=torch.squeeze(y_train,dim=-1).tolist())

plt.scatter(X_dev[:, 0].tolist(), X_dev[:, 1].tolist(), marker='*', c=torch.squeeze(y_dev,dim=-1).tolist())

plt.scatter(X_test[:, 0].tolist(), X_test[:, 1].tolist(), marker='*', c=torch.squeeze(y_test,dim=-1).tolist())

plt.show()

所有代码

# 4.1.1 净活性值

import torch

# 2个特征数为5的样本

X = torch.rand(size=[2, 5])

# 含有5个参数的权重向量

w = torch.rand(size=[5, 1])

# 偏置项

b = torch.rand(size=[1, 1])

# 使用'torch.matmul'实现矩阵相乘

z = torch.matmul(X, w) + b

print("input X:", X)

print("weight w:", w, "\nbias b:", b)

print("output z:", z)

# 4.1.2 激活函数

import matplotlib.pyplot as plt

# Logistic函数

def logistic(z):

return 1.0 / (1.0 + torch.exp(-z))

# Tanh函数

def tanh(z):

return (torch.exp(z) - torch.exp(-z)) / (torch.exp(z) + torch.exp(-z))

# 在[-10,10]的范围内生成10000个输入值,用于绘制函数曲线

z = torch.linspace(-10, 10, 10000)

plt.figure()

plt.plot(z.tolist(), logistic(z).tolist(), color='#e4007f', label="Logistic Function")

plt.plot(z.tolist(), tanh(z).tolist(), color='#f19ec2', linestyle='--', label="Tanh Function")

ax = plt.gca() # 获取轴,默认有4个

# 隐藏两个轴,通过把颜色设置成none

ax.spines['top'].set_color('none')

ax.spines['right'].set_color('none')

# 调整坐标轴位置

ax.spines['left'].set_position(('data', 0))

ax.spines['bottom'].set_position(('data', 0))

plt.legend(loc='lower right', fontsize='large')

plt.savefig('fw-logistic-tanh.pdf')

plt.show()

# 4.1.2.2 ReLU型函数

# ReLU

def relu(z):

return torch.maximum(z, torch.tensor(0.))

# 带泄露的ReLU

def leaky_relu(z, negative_slope=0.1):

a1 = ((z > 0).to(torch.float32) * z)

a2 = (z <= 0).to(torch.float32) * (negative_slope * z)

return a1 + a2

# 在[-10,10]的范围内生成一系列的输入值,用于绘制relu、leaky_relu的函数曲线

z = torch.linspace(-10, 10, 10000)

plt.figure()

plt.plot(z.tolist(), relu(z).tolist(), color="#e4007f", label="ReLU Function")

plt.plot(z.tolist(), leaky_relu(z).tolist(), color="#f19ec2", linestyle="--", label="LeakyReLU Function")

ax = plt.gca()

ax.spines['top'].set_color('none')

ax.spines['right'].set_color('none')

ax.spines['left'].set_position(('data', 0))

ax.spines['bottom'].set_position(('data', 0))

plt.legend(loc='upper left', fontsize='large')

plt.savefig('fw-relu-leakyrelu.pdf')

plt.show()

# 4.2.1 数据集构建

from dataset import make_moons

# 采样1000个样本

n_samples = 1000

X, y = make_moons(n_samples=n_samples, shuffle=True, noise=0.5)

plt.figure(figsize=(5, 5))

plt.scatter(x=X[:, 0].tolist(), y=X[:, 1].tolist(), marker='*', c=y.tolist(),label="我是数据集")

plt.xlim(-3, 4)

plt.ylim(-3, 4)

plt.show()

num_train = 640

num_dev = 160

num_test = 200

X_train, y_train = X[:num_train], y[:num_train]

X_dev, y_dev = X[num_train:num_train + num_dev], y[num_train:num_train + num_dev]

X_test, y_test = X[num_train + num_dev:], y[num_train + num_dev:]

y_train = y_train.reshape([-1,1])

y_dev = y_dev.reshape([-1,1])

y_test = y_test.reshape([-1,1])

from op import Op

# 实现线性层算子

class Linear(Op):

def __init__(self, input_size, output_size, name, weight_init=torch.randn, bias_init=torch.zeros):

"""

输入:

- input_size:输入数据维度

- output_size:输出数据维度

- name:算子名称

- weight_init:权重初始化方式,默认使用'paddle.standard_normal'进行标准正态分布初始化

- bias_init:偏置初始化方式,默认使用全0初始化

"""

self.params = {}

# 初始化权重

self.params['W'] = weight_init(size=[input_size, output_size])

# 初始化偏置

self.params['b'] = bias_init(size=[1, output_size])

self.inputs = None

self.name = name

def forward(self, inputs):

"""

输入:

- inputs:shape=[N,input_size], N是样本数量

输出:

- outputs:预测值,shape=[N,output_size]

"""

self.inputs = inputs

outputs = torch.matmul(self.inputs, self.params['W']) + self.params['b']

return outputs

class Logistic(Op):

def __init__(self):

self.inputs = None

self.outputs = None

def forward(self, inputs):

"""

输入:

- inputs: shape=[N,D]

输出:

- outputs:shape=[N,D]

"""

outputs = 1.0 / (1.0 + torch.exp(-inputs))

self.outputs = outputs

return outputs

# 4.2.2.3 层的串行组合

# 实现一个两层前馈神经网络

class Model_MLP_L2(Op):

def __init__(self, input_size, hidden_size, output_size):

"""

输入:

- input_size:输入维度

- hidden_size:隐藏层神经元数量

- output_size:输出维度

"""

self.fc1 = Linear(input_size, hidden_size, name="fc1")

self.act_fn1 = Logistic()

self.fc2 = Linear(hidden_size, output_size, name="fc2")

self.act_fn2 = Logistic()

def __call__(self, X):

return self.forward(X)

def forward(self, X):

"""

输入:

- X:shape=[N,input_size], N是样本数量

输出:

- a2:预测值,shape=[N,output_size]

"""

z1 = self.fc1(X)

a1 = self.act_fn1(z1)

z2 = self.fc2(a1)

a2 = self.act_fn2(z2)

return a2

# 实例化模型

model = Model_MLP_L2(input_size=5, hidden_size=10, output_size=1)

# 随机生成1条长度为5的数据

X = torch.rand(size=[1, 5])

result = model(X)

print ("result: ", result)

# 4.2.4.1 反向传播算法

# 实现交叉熵损失函数

class BinaryCrossEntropyLoss(Op):

def __init__(self, model):

self.predicts = None

self.labels = None

self.num = None

self.model = model

def __call__(self, predicts, labels):

return self.forward(predicts, labels)

def forward(self, predicts, labels):

"""

输入:

- predicts:预测值,shape=[N, 1],N为样本数量

- labels:真实标签,shape=[N, 1]

输出:

- 损失值:shape=[1]

"""

self.predicts = predicts

self.labels = labels

self.num = self.predicts.shape[0]

loss = -1. / self.num * (torch.matmul(self.labels.t(), torch.log(self.predicts))

+ torch.matmul((1 - self.labels.t()), torch.log(1 - self.predicts)))

loss = torch.squeeze(loss, axis=1)

return loss

def backward(self):

# 计算损失函数对模型预测的导数

loss_grad_predicts = -1.0 * (self.labels / self.predicts -

(1 - self.labels) / (1 - self.predicts)) / self.num

# 梯度反向传播

self.model.backward(loss_grad_predicts)

# 4.2.4.3 Logistic算子

class Logistic(Op):

def __init__(self):

self.inputs = None

self.outputs = None

self.params = None

def forward(self, inputs):

outputs = 1.0 / (1.0 + torch.exp(-inputs))

self.outputs = outputs

return outputs

def backward(self, grads):

# 计算Logistic激活函数对输入的导数

outputs_grad_inputs = torch.multiply(self.outputs, (1.0 - self.outputs))

return torch.multiply(grads,outputs_grad_inputs)

class Linear(Op):

def __init__(self, input_size, output_size, name, weight_init=torch.randn, bias_init=torch.zeros):

self.params = {}

self.params['W'] = weight_init(size=[input_size, output_size])

self.params['b'] = bias_init(size=[1, output_size])

self.inputs = None

self.grads = {}

self.name = name

def forward(self, inputs):

self.inputs = inputs

outputs = torch.matmul(self.inputs, self.params['W']) + self.params['b']

return outputs

def backward(self, grads):

"""

输入:

- grads:损失函数对当前层输出的导数

输出:

- 损失函数对当前层输入的导数

"""

self.grads['W'] = torch.matmul(self.inputs.T, grads)

self.grads['b'] = torch.sum(grads, axis=0)

# 线性层输入的梯度

return torch.matmul(grads, self.params['W'].T)

# 4.2.4.5 整个网络

class Model_MLP_L2(Op):

def __init__(self, input_size, hidden_size, output_size):

# 线性层

self.fc1 = Linear(input_size, hidden_size, name="fc1")

# Logistic激活函数层

self.act_fn1 = Logistic()

self.fc2 = Linear(hidden_size, output_size, name="fc2")

self.act_fn2 = Logistic()

self.layers = [self.fc1, self.act_fn1, self.fc2, self.act_fn2]

def __call__(self, X):

return self.forward(X)

# 前向计算

def forward(self, X):

z1 = self.fc1(X)

a1 = self.act_fn1(z1)

z2 = self.fc2(a1)

a2 = self.act_fn2(z2)

return a2

# 反向计算

def backward(self, loss_grad_a2):

loss_grad_z2 = self.act_fn2.backward(loss_grad_a2)

loss_grad_a1 = self.fc2.backward(loss_grad_z2)

loss_grad_z1 = self.act_fn1.backward(loss_grad_a1)

loss_grad_inputs = self.fc1.backward(loss_grad_z1)

# 4.2.4.6 优化器

from opitimizer import Optimizer

class BatchGD(Optimizer):

def __init__(self, init_lr, model):

super(BatchGD, self).__init__(init_lr=init_lr, model=model)

def step(self):

# 参数更新

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

for key in layer.params.keys():

layer.params[key] = layer.params[key] - self.init_lr * layer.grads[key]

# 4.2.5 完善Runner类:RunnerV2_1

import os

class RunnerV2_1(object):

def __init__(self, model, optimizer, metric, loss_fn, **kwargs):

self.model = model

self.optimizer = optimizer

self.loss_fn = loss_fn

self.metric = metric

# 记录训练过程中的评估指标变化情况

self.train_scores = []

self.dev_scores = []

# 记录训练过程中的评价指标变化情况

self.train_loss = []

self.dev_loss = []

def train(self, train_set, dev_set, **kwargs):

# 传入训练轮数,如果没有传入值则默认为0

num_epochs = kwargs.get("num_epochs", 0)

# 传入log打印频率,如果没有传入值则默认为100

log_epochs = kwargs.get("log_epochs", 100)

# 传入模型保存路径

save_dir = kwargs.get("save_dir", None)

# 记录全局最优指标

best_score = 0

# 进行num_epochs轮训练

for epoch in range(num_epochs):

X, y = train_set

# 获取模型预测

logits = self.model(X)

# 计算交叉熵损失

trn_loss = self.loss_fn(logits, y) # return a tensor

self.train_loss.append(trn_loss.item())

# 计算评估指标

trn_score = self.metric(logits, y).item()

self.train_scores.append(trn_score)

self.loss_fn.backward()

# 参数更新

self.optimizer.step()

dev_score, dev_loss = self.evaluate(dev_set)

# 如果当前指标为最优指标,保存该模型

if dev_score > best_score:

print(f"[Evaluate] best accuracy performence has been updated: {best_score:.5f} --> {dev_score:.5f}")

best_score = dev_score

if save_dir:

self.save_model(save_dir)

if log_epochs and epoch % log_epochs == 0:

print(f"[Train] epoch: {epoch}/{num_epochs}, loss: {trn_loss.item()}")

def evaluate(self, data_set):

X, y = data_set

# 计算模型输出

logits = self.model(X)

# 计算损失函数

loss = self.loss_fn(logits, y).item()

self.dev_loss.append(loss)

# 计算评估指标

score = self.metric(logits, y).item()

self.dev_scores.append(score)

return score, loss

def predict(self, X):

return self.model(X)

def save_model(self, save_dir):

# 对模型每层参数分别进行保存,保存文件名称与该层名称相同

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

torch.save(layer.params, os.path.join(save_dir, layer.name+".pdparams"))

def load_model(self, model_dir):

# 获取所有层参数名称和保存路径之间的对应关系

model_file_names = os.listdir(model_dir)

name_file_dict = {}

for file_name in model_file_names:

name = file_name.replace(".pdparams", "")

name_file_dict[name] = os.path.join(model_dir, file_name)

# 加载每层参数

for layer in self.model.layers: # 遍历所有层

if isinstance(layer.params, dict):

name = layer.name

file_path = name_file_dict[name]

layer.params = torch.load(file_path)

from metric import accuracy

torch.manual_seed(123) #设置随机种子

epoch_num = 1000

model_saved_dir = "model"

# 输入层维度为2

input_size = 2

# 隐藏层维度为5

hidden_size = 5

# 输出层维度为1

output_size = 1

# 定义网络

model = Model_MLP_L2(input_size=input_size, hidden_size=hidden_size, output_size=output_size)

# 损失函数

loss_fn = BinaryCrossEntropyLoss(model)

# 优化器

learning_rate = 0.2

optimizer = BatchGD(learning_rate, model)

# 评价方法

metric = accuracy

# 实例化RunnerV2_1类,并传入训练配置

runner = RunnerV2_1(model, optimizer, metric, loss_fn)

runner.train([X_train, y_train], [X_dev, y_dev], num_epochs=epoch_num, log_epochs=50, save_dir=model_saved_dir)

import matplotlib.pyplot as plt

# 打印训练集和验证集的损失

plt.figure()

plt.plot(range(epoch_num), runner.train_loss, color="#e4007f", label="Train loss")

plt.plot(range(epoch_num), runner.dev_loss, color="#f19ec2", linestyle='--', label="Dev loss")

plt.xlabel("epoch", fontsize='large')

plt.ylabel("loss", fontsize='large')

plt.legend(fontsize='x-large')

plt.show()

#加载训练好的模型

runner.load_model(model_saved_dir)

# 在测试集上对模型进行评价

score, loss = runner.evaluate([X_test, y_test])

# 加载训练好的模型

runner.load_model(model_saved_dir)

# 在测试集上对模型进行评价

score, loss = runner.evaluate([X_test, y_test])

print("[Test] score/loss: {:.4f}/{:.4f}".format(score, loss))

import math

# 均匀生成40000个数据点

x1, x2 = torch.meshgrid(torch.linspace(-math.pi, math.pi, 200), torch.linspace(-math.pi, math.pi, 200),indexing='ij')

x = torch.stack([torch.flatten(x1), torch.flatten(x2)], dim=1)

# 预测对应类别

y = runner.predict(x)

y = torch.squeeze((y>=0.5).to(torch.float32),dim=-1)

# y = y.reshape([-1])

# 绘制类别区域

plt.ylabel('x2')

plt.xlabel('x1')

plt.scatter(x[:,0].tolist(), x[:,1].tolist(), c=y.tolist(), cmap=plt.cm.Spectral)

plt.scatter(X_train[:, 0].tolist(), X_train[:, 1].tolist(), marker='*', c=torch.squeeze(y_train,axis=-1).tolist())

plt.scatter(X_dev[:, 0].tolist(), X_dev[:, 1].tolist(), marker='*', c=torch.squeeze(y_dev,axis=-1).tolist())

plt.scatter(X_test[:, 0].tolist(), X_test[:, 1].tolist(), marker='*', c=torch.squeeze(y_test,axis=-1).tolist())

plt.show()

心得

相较于线性分类和线性回归,在我看来,神经网络应用更加多一点。对于神经网络了解更多了一些。对于模型有了更深的掌握。

创作不易,如果对你有帮助,求求你给我个赞!!!

点赞 + 收藏 + 关注!!!

如有错误与建议,望告知!!!(将于下篇文章更正)

请多多关注我!!!谢谢!!!