创新实训(12)-生成式文本摘要之T5

创新实训(12)-生成式文本摘要之T5

1.简介

T5:Text-To-Text-Transfer-Transformer的简称,是Google在2019年提出的一个新的NLP模型。它的基本思想就是Text-to-Text,即NLP的任务基本上都可以归为从文本到文本的处理过程。

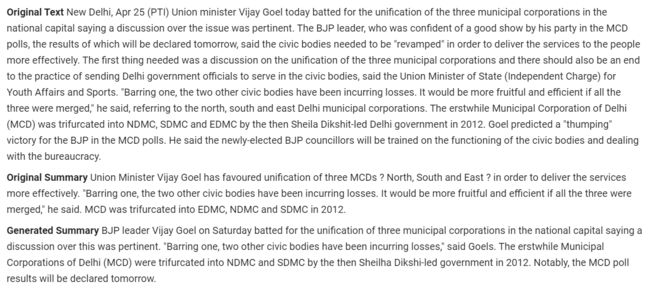

上图就是论文中的一个图,形象的展示了“Text-To-Text”的过程。

2. 模型

在论文中,作者做了大量的实验,最终发现还是Encoder-Decoder的模型表现最好,最终就选择了它,所以T5是一个基于Transformer的Encoder-Decoder模型。

对于预训练的方式,论文作者也进行了许多实验,最终发现类似Bert的将一部分破坏掉的方式效果最好,而破坏的策略则是Replace spans方法最好,破坏的比例是15%最好,破坏的长度发现是3最好。如论文中下图所示:

3.数据集

作者从Common Crawl(一个公开的网页存档数据集)中清洗出了750GB的训练数据,取名为Colossal Clean Crawled Corpus,简称C4,不得不说这作者真会取名字。

然后作者基于此数据集进行了大量的实验,当数据量达到一定的规模之后,继续增大数据量,效果并没有明显的提高,但是大模型是必须的。

4. 复现

依然是使用colab

4.1 导入模块

4.1 定义DataSet

DataSet 是PyTorch的一个用于数据集加载的类,我们需要继承它,重写数据处理方法。

class CustomDataset(Dataset):

def __init__(self, dataframe, tokenizer, source_len, summ_len):

self.tokenizer = tokenizer

self.data = dataframe

self.source_len = source_len

self.summ_len = summ_len

self.text = self.data.text

self.ctext = self.data.ctext

def __len__(self):

return len(self.text)

def __getitem__(self, index):

ctext = str(self.ctext[index])

ctext = ' '.join(ctext.split())

text = str(self.text[index])

text = ' '.join(text.split())

source = self.tokenizer.batch_encode_plus([ctext], max_length= self.source_len, pad_to_max_length=True,return_tensors='pt')

target = self.tokenizer.batch_encode_plus([text], max_length= self.summ_len, pad_to_max_length=True,return_tensors='pt')

source_ids = source['input_ids'].squeeze()

source_mask = source['attention_mask'].squeeze()

target_ids = target['input_ids'].squeeze()

target_mask = target['attention_mask'].squeeze()

return {

'source_ids': source_ids.to(dtype=torch.long),

'source_mask': source_mask.to(dtype=torch.long),

'target_ids': target_ids.to(dtype=torch.long),

'target_ids_y': target_ids.to(dtype=torch.long)

}

4.2 定义训练方法

def train(epoch, tokenizer, model, device, loader, optimizer):

model.train()

for _,data in enumerate(loader, 0):

y = data['target_ids'].to(device, dtype = torch.long)

y_ids = y[:, :-1].contiguous()

lm_labels = y[:, 1:].clone().detach()

lm_labels[y[:, 1:] == tokenizer.pad_token_id] = -100

ids = data['source_ids'].to(device, dtype = torch.long)

mask = data['source_mask'].to(device, dtype = torch.long)

outputs = model(input_ids = ids, attention_mask = mask, decoder_input_ids=y_ids, lm_labels=lm_labels)

loss = outputs[0]

if _%10 == 0:

wandb.log({"Training Loss": loss.item()})

if _%500==0:

print(f'Epoch: {epoch}, Loss: {loss.item()}')

optimizer.zero_grad()

loss.backward()

optimizer.step()

# xm.optimizer_step(optimizer)

# xm.mark_step()

4.3 定义验证方法

def validate(epoch, tokenizer, model, device, loader):

model.eval()

predictions = []

actuals = []

with torch.no_grad():

for _, data in enumerate(loader, 0):

y = data['target_ids'].to(device, dtype = torch.long)

ids = data['source_ids'].to(device, dtype = torch.long)

mask = data['source_mask'].to(device, dtype = torch.long)

generated_ids = model.generate(

input_ids = ids,

attention_mask = mask,

max_length=150,

num_beams=2,

repetition_penalty=2.5,

length_penalty=1.0,

early_stopping=True

)

preds = [tokenizer.decode(g, skip_special_tokens=True, clean_up_tokenization_spaces=True) for g in generated_ids]

target = [tokenizer.decode(t, skip_special_tokens=True, clean_up_tokenization_spaces=True)for t in y]

if _%100==0:

print(f'Completed {_}')

predictions.extend(preds)

actuals.extend(target)

return predictions, actuals

4.4 定义main方法

def main():

# WandB – Initialize a new run

wandb.init(project="transformers_tutorials_summarization")

# WandB – Config is a variable that holds and saves hyperparameters and inputs

# Defining some key variables that will be used later on in the training

config = wandb.config # Initialize config

config.TRAIN_BATCH_SIZE = 2 # input batch size for training (default: 64)

config.VALID_BATCH_SIZE = 2 # input batch size for testing (default: 1000)

config.TRAIN_EPOCHS = 2 # number of epochs to train (default: 10)

config.VAL_EPOCHS = 1

config.LEARNING_RATE = 1e-4 # learning rate (default: 0.01)

config.SEED = 42 # random seed (default: 42)

config.MAX_LEN = 512

config.SUMMARY_LEN = 150

# Set random seeds and deterministic pytorch for reproducibility

torch.manual_seed(config.SEED) # pytorch random seed

np.random.seed(config.SEED) # numpy random seed

torch.backends.cudnn.deterministic = True

# tokenzier for encoding the text

# 定义tokenizer,Tokenizer是Transformers库中对文本进行编码的类,

tokenizer = T5Tokenizer.from_pretrained("t5-base")

# Importing and Pre-Processing the domain data

# Selecting the needed columns only.

# Adding the summarzie text in front of the text. This is to format the dataset similar to how T5 model was trained for summarization task.

df = pd.read_csv('./data/news_summary.csv',encoding='latin-1')

df = df[['text','ctext']]

# T5 模型要求对于进行摘要的文本必须以“summarize: ”作为开头。

df.ctext = 'summarize: ' + df.ctext

print(df.head())

# Creation of Dataset and Dataloader

# Defining the train size. So 80% of the data will be used for training and the rest will be used for validation.

train_size = 0.8

train_dataset=df.sample(frac=train_size, random_state = config.SEED).reset_index(drop=True)

val_dataset=df.drop(train_dataset.index).reset_index(drop=True)

print("FULL Dataset: {}".format(df.shape))

print("TRAIN Dataset: {}".format(train_dataset.shape))

print("TEST Dataset: {}".format(val_dataset.shape))

# Creating the Training and Validation dataset for further creation of Dataloader

# 初始化数据集

training_set = CustomDataset(train_dataset, tokenizer, config.MAX_LEN, config.SUMMARY_LEN)

val_set = CustomDataset(val_dataset, tokenizer, config.MAX_LEN, config.SUMMARY_LEN)

# Defining the parameters for creation of dataloaders

train_params = {

'batch_size': config.TRAIN_BATCH_SIZE,

'shuffle': True,

'num_workers': 0

}

val_params = {

'batch_size': config.VALID_BATCH_SIZE,

'shuffle': False,

'num_workers': 0

}

# Creation of Dataloaders for testing and validation. This will be used down for training and validation stage for the model.

# 初始化 DataLoader ,DataLoader是 Transformers 库中从数据集中加载数据到模型中的类,方便以batch大小加载数据。

training_loader = DataLoader(training_set, **train_params)

val_loader = DataLoader(val_set, **val_params)

# Defining the model. We are using t5-base model and added a Language model layer on top for generation of Summary.

# Further this model is sent to device (GPU/TPU) for using the hardware.

# 定义模型。这里直接使用了针对文本生成调优之后的预训练模型

model = T5ForConditionalGeneration.from_pretrained("t5-base")

model = model.to(device)

# Defining the optimizer that will be used to tune the weights of the network in the training session.

# 定义优化器

optimizer = torch.optim.Adam(params = model.parameters(), lr=config.LEARNING_RATE)

# Log metrics with wandb

wandb.watch(model, log="all")

# Training loop

print('Initiating Fine-Tuning for the model on our dataset')

# 开始训练

for epoch in range(config.TRAIN_EPOCHS):

train(epoch, tokenizer, model, device, training_loader, optimizer)

# Validation loop and saving the resulting file with predictions and acutals in a dataframe.

# Saving the dataframe as predictions.csv

print('Now generating summaries on our fine tuned model for the validation dataset and saving it in a dataframe')

for epoch in range(config.VAL_EPOCHS):

predictions, actuals = validate(epoch, tokenizer, model, device, val_loader)

final_df = pd.DataFrame({'Generated Text':predictions,'Actual Text':actuals})

final_df.to_csv('./models/predictions.csv')

print('Output Files generated for review')

if __name__ == '__main__':

main()

4.5 结果展示

5.尝试移植到中文数据集

失败,huggingface的Transformers项目只提供了英文版的预训练模型,如果要移植到中文数据集的话,需要使用中文的分词器和中文数据集进行训练,而原作者提供的代码是基于Tensorflow的,我不会,也没有时间去学了,遂以失败告终。