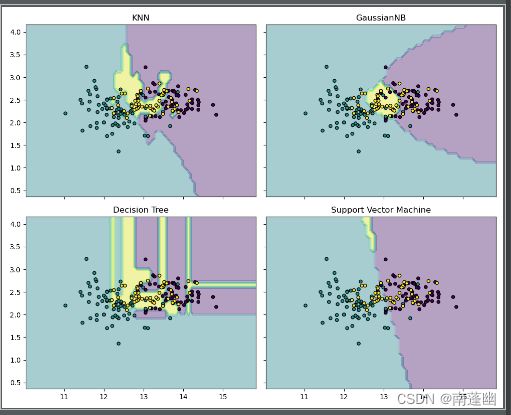

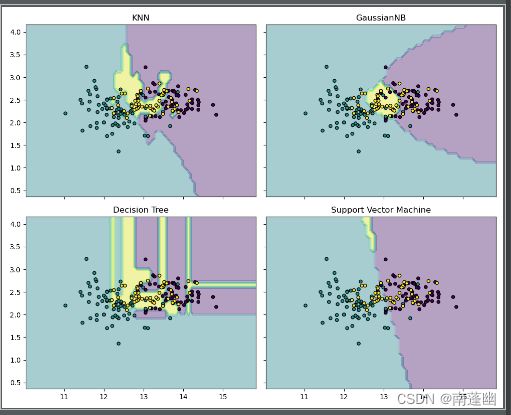

KNN algorithm

`knn = KNeighborsClassifier()`
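With its defaults, `KNeighborsClassifier()` labels a point by a majority vote among its 5 nearest training samples (Euclidean distance, uniform weights). The minimal sketch below re-implements that vote in NumPy on columns 0 and 2 of `wine.data` (alcohol and ash), the same two features the main code uses. `knn_predict` is a hypothetical helper written for illustration, not part of scikit-learn. Training points can sit at exactly equal distances from a query, so the two implementations may occasionally pick different neighbors. For that reason the script reports an agreement rate rather than claiming identical output.

```python
import numpy as np
from sklearn import datasets
from sklearn.neighbors import KNeighborsClassifier

def knn_predict(X_train, y_train, X_query, k=5):
    """Hypothetical helper: plain k-NN vote with Euclidean distance and uniform weights."""
    # Pairwise Euclidean distances, shape (n_query, n_train).
    d = np.sqrt(((X_query[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2))
    # Indices of the k closest training samples for every query point.
    nearest = np.argsort(d, axis=1)[:, :k]
    # Majority vote; bincount().argmax() breaks vote ties towards the smaller label.
    return np.array([np.bincount(y_train[row]).argmax() for row in nearest])

wine = datasets.load_wine()
X = wine.data[:, [0, 2]]  # alcohol and ash
y = wine.target

mine = knn_predict(X, y, X, k=5)
ref = KNeighborsClassifier(n_neighbors=5).fit(X, y).predict(X)
print('agreement with KNeighborsClassifier:', (mine == ref).mean())
```

Changing `k` trades smoothness for locality. A small `k` follows individual samples closely, while a large `k` gives smoother decision regions.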

Naive Bayes

`gnb = GaussianNB()`

Decision tree

`dtc = DecisionTreeClassifier()`
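By default, `DecisionTreeClassifier` uses the Gini criterion. At every node it picks the split that most reduces the sample-weighted Gini impurity 1 - sum_k p_k^2 of the two children. It keeps splitting until the leaves are pure or cannot be split further. The sketch below computes Gini impurity by hand for the root and its two children and compares the values with the impurities stored in the fitted tree (`tree_.impurity`). `gini` is a hypothetical helper, and `random_state=0` is an assumption added only to make the run repeatable.

```python
import numpy as np
from sklearn import datasets
from sklearn.tree import DecisionTreeClassifier

def gini(labels):
    """Hypothetical helper: Gini impurity 1 - sum_k p_k^2 of a set of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

wine = datasets.load_wine()
X = wine.data[:, [0, 2]]  # alcohol and ash
y = wine.target

dtc = DecisionTreeClassifier(random_state=0).fit(X, y)
t = dtc.tree_

# Root split chosen by the fitted tree: feature index and threshold.
feat, thr = t.feature[0], t.threshold[0]
left = X[:, feat] <= thr  # samples with feature <= threshold go to the left child
left_id, right_id = t.children_left[0], t.children_right[0]

print('root split:', ['alcohol', 'ash'][feat], '<=', thr)
print('root Gini :', gini(y), 'tree_:', t.impurity[0])
print('left Gini :', gini(y[left]), 'tree_:', t.impurity[left_id])
print('right Gini:', gini(y[~left]), 'tree_:', t.impurity[right_id])

# The quantity the split search minimizes: sample-weighted impurity of the children.
weighted = left.mean() * gini(y[left]) + (~left).mean() * gini(y[~left])
print('weighted child Gini:', weighted)
```

An unrestricted tree can fit the training samples very closely, which is why its decision regions in the plot can look fragmented. Setting `max_depth` or `min_samples_leaf` limits this.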

SVM algorithm

`svm = SVC()`
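`SVC()` defaults to an RBF kernel with `C=1.0` and `gamma='scale'`, which means gamma = 1 / (n_features * X.var()). For a two-class problem, the decision value of a sample x is sum_i dual_coef_[i] * exp(-gamma * ||sv_i - x||^2) + intercept_, where sv_i are the support vectors. A positive value means `classes_[1]`. The sketch restricts the data to classes 0 and 1 so there is a single decision function. With three classes, `SVC` trains one-vs-one sub-classifiers instead. It then checks the formula against `decision_function`.

```python
import numpy as np
from sklearn import datasets
from sklearn.svm import SVC

wine = datasets.load_wine()
X = wine.data[:, [0, 2]]  # alcohol and ash
y = wine.target

# Assumption for illustration: a binary sub-problem (classes 0 and 1 only).
mask = y < 2
Xb, yb = X[mask], y[mask]

# Reproduce gamma='scale' explicitly: 1 / (n_features * variance of all entries of X).
gamma = 1.0 / (Xb.shape[1] * Xb.var())
svm = SVC(kernel='rbf', C=1.0, gamma=gamma).fit(Xb, yb)

# RBF kernel between every support vector and every sample: exp(-gamma * ||sv - x||^2).
sq_dist = ((svm.support_vectors_[:, None, :] - Xb[None, :, :]) ** 2).sum(axis=2)
K = np.exp(-gamma * sq_dist)

# Decision value = sum_i dual_coef_i * K(sv_i, x) + intercept.
manual = svm.dual_coef_[0] @ K + svm.intercept_[0]
print('matches decision_function:', np.allclose(manual, svm.decision_function(Xb)))
print('number of support vectors per class:', svm.n_support_)
```

Only the support vectors enter the sum, so the model stores just those samples and their coefficients. Larger `gamma` makes each kernel more local and the boundary more wiggly.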

Code:

```python
import numpy as np
import matplotlib.pyplot as plt
from itertools import product
from sklearn import datasets
from sklearn.neighbors import KNeighborsClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier
from sklearn.svm import SVC

# Load the wine dataset and keep two features (column 0: alcohol, column 2: ash)
# so that the decision regions can be drawn in 2-D.
wine = datasets.load_wine()
x_train = wine.data[:, [0, 2]]
y_train = wine.target

# Four classifiers with their default hyperparameters.
knn = KNeighborsClassifier()
gnb = GaussianNB()
dtc = DecisionTreeClassifier()
svm = SVC()

# Fit every classifier on the same data.
knn.fit(x_train, y_train)
gnb.fit(x_train, y_train)
dtc.fit(x_train, y_train)
svm.fit(x_train, y_train)

# Mean accuracy on the training data itself (training accuracy, not a held-out estimate).
print('KNN:', knn.score(x_train, y_train))
print('GaussianNB:', gnb.score(x_train, y_train))
print('Decision Tree:', dtc.score(x_train, y_train))
print('Support Vector Machine:', svm.score(x_train, y_train))

# Grid covering the feature space, padded by 1 on each side, with step 0.1.
x_min, x_max = x_train[:, 0].min() - 1, x_train[:, 0].max() + 1
y_min, y_max = x_train[:, 1].min() - 1, x_train[:, 1].max() + 1
xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),
                     np.arange(y_min, y_max, 0.1))

# 2x2 grid of subplots, one per classifier.
f, axe = plt.subplots(2, 2, sharex='col', sharey='row', figsize=(10, 8))

for idx, clf, tt in zip(product([0, 1], [0, 1]),
                        [knn, gnb, dtc, svm],
                        ['KNN', 'GaussianNB', 'Decision Tree', 'Support Vector Machine']):
    # Predict a class for every grid point, then reshape back to the grid.
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    # Filled contours show the decision regions; the scatter shows the training samples.
    axe[idx[0], idx[1]].contourf(xx, yy, Z, alpha=0.4)
    axe[idx[0], idx[1]].scatter(x_train[:, 0], x_train[:, 1], c=y_train, s=20, edgecolor='k')
    axe[idx[0], idx[1]].set_title(tt)

plt.show()
```
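The scores printed by the code are computed on the same samples the models were fitted on. This tends to flatter flexible models such as the unrestricted decision tree. A minimal sketch of a fairer comparison holds out part of the data with `train_test_split`. The 30% test size, `stratify=y` (keep class proportions) and `random_state=0` are assumptions chosen for illustration, not values from the original code.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier
from sklearn.svm import SVC

wine = datasets.load_wine()
X = wine.data[:, [0, 2]]  # alcohol and ash, as above
y = wine.target

# Hold out 30% of the samples, stratified by class, with a fixed seed.
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=0)

models = {
    'KNN': KNeighborsClassifier(),
    'GaussianNB': GaussianNB(),
    'Decision Tree': DecisionTreeClassifier(random_state=0),
    'Support Vector Machine': SVC(),
}

scores = {}
for name, clf in models.items():
    clf.fit(X_tr, y_tr)
    # (training accuracy, held-out test accuracy)
    scores[name] = (clf.score(X_tr, y_tr), clf.score(X_te, y_te))
    print(f'{name}: train={scores[name][0]:.3f} test={scores[name][1]:.3f}')
```

A large gap between the train and test columns is a sign of overfitting. With only 178 samples, a single split is noisy, so averaging over several splits (for example with cross-validation) gives a steadier comparison.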

Result: