pytorch的ANN数据拟合以及cst扫参数据的导出处理

基于pytorch的ANN数据拟合以及cst扫参数据的导出处理

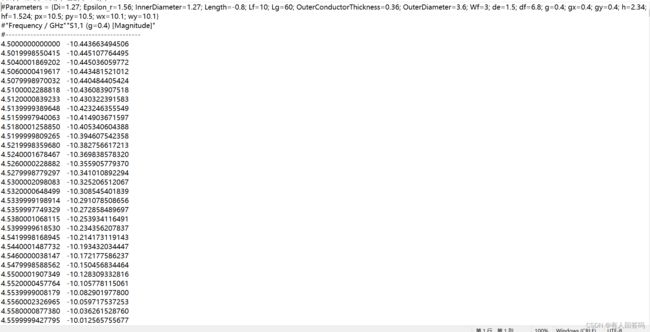

在cst中进行扫参之后导出了S11参数,不同参数对应对应的s参数数据全部存储在一个txt文件中。目的是导出在5.5GHz下的不同参数对应的回波损耗,方便使用ANN进行拟合。

一、CST扫参数据导出处理

import pandas as pd

import numpy as np

path = r'all_data.txt'

df = pd.read_table(path,index_col=False,names=['frequency','S11'])

#删掉nan数据

df.dropna(inplace=True)

f = 5.5#频率

g0 = 0.4#初始参数

num = 500

df_new = pd.DataFrame(np.zeros((num,2)), columns=['frequency','S11'])

id = -1

i = 0

while i <len(df):

if df.iloc[i][0]=='#"Frequency / GHz"':

id = id+1

j=0

while 1:

j=j+1

if float(df.iloc[i+j][0])==f:

df_new.iloc[id][0] = df.iloc[i+j][0]

df_new.iloc[id][1] = df.iloc[i+j][1]

i = i + j

break

i = i + 1

#保存路径

save_path = r'data5_5GHz.csv'

df_new.to_csv(save_path,index=False)

二、搭建ANN

具有三层隐藏层,前两个隐藏层有8

个神经元,最后一个隐藏层有1个神经元,优化器为adam。

#参考:https://blog.csdn.net/weixin_37707670/article/details/120853210

import torch

import numpy as np

import torch.nn as nn

import pandas as pd

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

#创建ANNmodel类是nn.model的子类

#nn.Module是所有神经网络模块的基本类

class ANNmodel(nn.Module):

def __init__(self,input_dim,hidden_dim,output_dim):

super(ANNmodel,self).__init__()#继承父类的初始化函数,对父类的调用必须在子类分配之前

#定义层

#输入层

self.fc1 = nn.Linear(input_dim,hidden_dim)

#默认bias为True

self.softplus1 = nn.Softplus()

#隐藏层1

self.fc2 = nn.Linear(hidden_dim,hidden_dim)

self.softplus2 = nn.Softplus()

#隐藏层2

self.fc3 = nn.Linear(hidden_dim,hidden_dim)

self.softplus3 = nn.Softplus()

#隐藏层3

self.fc4 = nn.Linear(hidden_dim,output_dim)

self.softplus4 = nn.Softplus()

#输出层

self.fc5 = nn.Linear(output_dim,output_dim)

#前向计算

def forward(self,x):

out = self.fc1(x)

out = self.softplus1(out)

out = self.fc2(out)

out = self.softplus2(out)

out = self.fc3(out)

out = self.softplus3(out)

out = self.fc4(out)

return out

if __name__ == '__main__':

input_dim = 1#输入向量size=1

output_dim = 1#输出向量size=1

hidden_dim = 8#隐藏层神经元个数

#读取数据

path = r'ANN\data5_5GHz.csv'

data = pd.read_csv(path,index_col=False,dtype=np.float32)

data_total = np.array(data)#转化为numpy类型数据

#划分数据

data_input = np.arange(0.4,4.99,0.01,dtype=np.float32).reshape(-1,1)

data_target = np.array(data_total[:,1],dtype=np.float32).reshape(-1,1)

train_input, test_input, train_target, test_target = train_test_split(data_input,data_target,test_size=0.2)

#转化为tensor

train_input = torch.from_numpy(train_input).type(torch.float32)

train_target = torch.from_numpy(train_target).type(torch.float32)

test_input = torch.from_numpy(test_input).type(torch.float32)

test_target = torch.from_numpy(test_target).type(torch.float32)

#batch size和迭代次数

batch_size = 100

iteration_num = 8000

#torch.utils.data.TensorDataset将训练集数据和目标合并

train = torch.utils.data.TensorDataset(train_input,train_target)

test = torch.utils.data.TensorDataset(test_input,test_target)

#DataLoader用于随机播放和批量处理数据,再dataset的基础上增加了batch_size buffle等操作

train_loader = torch.utils.data.DataLoader(train, batch_size=batch_size,shuffle=True)

test_loader = torch.utils.data.DataLoader(test,batch_size=batch_size,shuffle=True)

#模型训练

model = ANNmodel(input_dim, hidden_dim, output_dim)

loss_func = nn.MSELoss()#计算输入和目标之间的交叉熵损失

loss_list = []

learning_rate = 0.05

optimizer = torch.optim.Adam(model.parameters(), lr = learning_rate)#优化器

for i in range(iteration_num):

for j, (data_in,data_out) in enumerate(train_loader):

optimizer.zero_grad()#梯度清空

outputs = model(data_in)

loss = loss_func(outputs, data_out)

loss.backward()

optimizer.step()

#验证集计算

if j % 50 ==0:

correct = 0

total = 0

for test_in, test_out in test_loader:

outputs = model(test_in)

loss = loss_func(outputs,test_out)

loss_list.append(loss.data)

if i % 50 == 0:

print('Epoch:{} Loss:{} '.format(i,loss.data))

p_x = np.arange(1,8001,1)

p_y = np.array(loss_list)

plt.plot(p_x,p_y,marker='o')

plt.show()

总结

怕丢失源代码,在这里保存一下,之后有时间会更新inverse optimization的部分。