I. Tensor Creation

1. Import from NumPy

torch.from_numpy(): creates a tensor from an existing NumPy array.

import numpy as np

import torch

a = np.array([2,3.3])

print(torch.from_numpy(a))
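One detail worth checking for yourself: the tensor returned by `torch.from_numpy()` shares memory with the source array and keeps its dtype. A minimal sketch (the names `arr` and `t` are only for illustration):

```python
import numpy as np
import torch

arr = np.array([2, 3.3])      # float64 NumPy array
t = torch.from_numpy(arr)     # tensor backed by the same memory as arr

print(t.dtype)                # torch.float64, inherited from NumPy

arr[0] = 10.0                 # modify the NumPy array in place
print(t)                      # the tensor sees the new value
```

If an independent copy is needed, `torch.tensor(arr)` copies the data instead of sharing it.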

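2. Import from a list

- torch.tensor([]): takes existing data (for example a list) and builds a tensor from its values.
- torch.FloatTensor(shape): normally takes a shape; it also accepts a list, but avoid that so the two call styles are not confused.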

a = torch.tensor([2.,3.2])

print(a)

a = torch.FloatTensor([2.,3.2])

print(a)

a = torch.FloatTensor(2,2,3)

print(a)

a = torch.tensor([[2.,3.2],[1.,22.3]])

print(a)
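The difference between "data" and "shape" arguments can be sketched as follows. The variable names are illustrative, and the dtype comments assume the default settings of a fresh PyTorch session:

```python
import torch

from_data = torch.tensor([2., 3.2])     # values come from the list
from_ints = torch.tensor([2, 3])        # integer data gives an integer tensor
from_shape = torch.FloatTensor(2, 3)    # arguments are a shape; values are uninitialized

print(from_data.shape)                  # torch.Size([2])
print(from_ints.dtype)                  # torch.int64
print(from_shape.shape)                 # torch.Size([2, 3])
print(from_shape.dtype)                 # torch.float32
```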

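3. Uninitialized tensors

- torch.empty(shape): allocates a tensor without initializing its values, so the contents are arbitrary and may include extreme numbers. Always overwrite them before use.
- torch.FloatTensor(d1,d2,d3): creates an uninitialized float tensor of the given shape.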

a = torch.empty(1)

torch.FloatTensor(1,2,3)

b = torch.empty(2,3)

b = torch.Tensor(2,3)

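4. Setting the default tensor type

- torch.tensor().type(): shows the type of the tensor that was created.
- torch.set_default_tensor_type(): changes the default type used for newly created floating-point tensors.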

print(torch.tensor([1.2,3]).type())

torch.set_default_tensor_type(torch.DoubleTensor)

print(torch.tensor([1,3.]).type())
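As far as I know, recent PyTorch releases deprecate `torch.set_default_tensor_type()` in favor of `torch.set_default_dtype()`. A sketch of the same idea with the newer call, restoring the previous default afterwards so the rest of the session is unaffected:

```python
import torch

original = torch.get_default_dtype()     # usually torch.float32

torch.set_default_dtype(torch.float64)
as_double = torch.tensor([1.2, 3.0])     # float data now defaults to float64

torch.set_default_dtype(original)        # restore the previous default
as_default = torch.tensor([1.2, 3.0])

print(as_double.dtype, as_default.dtype)
```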

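II. Random Generation

1. rand functions

- torch.rand(shape): samples uniformly from [0,1).
- torch.rand_like(): takes a tensor, reads its shape, and samples uniformly with that shape.
- torch.randint(min,max,shape): random integers in the range [min,max).
- torch.randn(shape): samples from the standard normal distribution.
- torch.normal(mean=torch.full([10],0.),std=torch.arange(1,0,-0.1)): custom normal sampling; here it draws 10 values with mean 0 and standard deviations running from 1 down to 0.1.
- torch.full(): fills the whole tensor with a single value.
- torch.randperm(): a random permutation of the indices in [0,x).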

a = torch.rand(3,3)

print(a)

b = torch.rand_like(a)

print(b)

c = torch.randint(3,10,[3,3])

print(c)

torch.randn(1,3)

torch.normal(mean=torch.full([10],0.),std=torch.arange(1,0,-0.1))

torch.full([2,3],7)

torch.full([],7)

torch.full([1],7)

torch.randperm(10)
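The ranges above can be checked directly. The second half is a sketch of a common use of `torch.randperm()`: shuffling two tensors with the same permutation so that rows stay paired. The `features` and `labels` tensors are made up for the example.

```python
import torch

torch.manual_seed(0)                     # make the run reproducible

u = torch.rand(3, 3)                     # uniform on [0, 1)
r = torch.randint(3, 10, [3, 3])         # integers in [3, 10)
p = torch.randperm(10)                   # the indices 0..9 in random order

print(u.min() >= 0, u.max() < 1)
print(r.min() >= 3, r.max() < 10)
print(p.sort().values)                   # sorted back: the values 0..9 in order

# Shuffle features and labels together with one permutation.
features = torch.arange(10).float().unsqueeze(1)   # shape [10, 1]
labels = torch.arange(10)
idx = torch.randperm(10)
features, labels = features[idx], labels[idx]
print(torch.equal(features.squeeze(1).long(), labels))   # True: rows still match
```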

2. arange, linspace, and related functions

- torch.arange(start,end,step): an arithmetic sequence over [start,end).
- torch.linspace(start,end,steps): steps evenly spaced values over [start,end].
- torch.logspace(start,end,steps,base): steps values spaced evenly on a log scale, from $base^{start}$ to $base^{end}$.
- torch.ones(): a tensor of all ones.
- torch.zeros(): a tensor of all zeros.
- torch.eye(): a tensor with ones on the diagonal.
- torch.ones_like(), torch.zeros_like(): take a tensor as the argument and use its shape.

torch.arange(0,10,2)

torch.linspace(0,10,steps=4)

torch.logspace(0,-1,steps=10,base=10)
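A small sketch with round numbers makes the endpoint rules easy to see (note that `arange` excludes the end value while `linspace` and `logspace` include it):

```python
import torch

print(torch.arange(0, 10, 2))            # 0, 2, 4, 6, 8 (10 is excluded)
print(torch.linspace(0, 10, steps=5))    # 0, 2.5, 5, 7.5, 10 (10 is included)
print(torch.logspace(0, 2, steps=3))     # 1, 10, 100 with the default base of 10

eye = torch.eye(3)                       # 3x3 identity matrix
template = torch.rand(2, 3)
ones = torch.ones_like(template)         # same shape and dtype as template
print(eye.shape, ones.shape)
```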

III. Tensor Indexing and Slicing

- select by index within a tensor:

a = torch.rand(4,3,28,28)

print(a[0].shape)

print(a[0,0].shape)

print(a[0,0,2,4])

- select first/last N

start:end:step: starts at start and advances by step, stopping before end
- torch indices: 0,1,2,… counting from the front, or …,-3,-2,-1 counting from the back

print(a.shape)

print(a[:2].shape)

print(a[:2,:1,:,:].shape)

print(a[:2,1:,:,:].shape)

print(a[:2,-1:,:,:].shape)

print(a[:2,:-1].shape)

- select by steps

print(a[:,:,0:28:2,0:28:2].shape)

print(a[:,:,::2,::2].shape)
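For reference, here is a sketch of a few of these slices with the shapes they produce. It also shows one point the prints above do not: a slice such as `-1:` keeps the dimension, while a plain integer index drops it.

```python
import torch

a = torch.rand(4, 3, 28, 28)       # e.g. 4 images, 3 channels, 28x28 pixels

first_two = a[:2]                  # images 0 and 1
last_channel = a[:, -1:]           # a slice keeps the channel dimension
one_channel = a[:, -1]             # an integer index drops the dimension
downsampled = a[:, :, ::2, ::2]    # every second row and column

print(first_two.shape)             # torch.Size([2, 3, 28, 28])
print(last_channel.shape)          # torch.Size([4, 1, 28, 28])
print(one_channel.shape)           # torch.Size([4, 28, 28])
print(downsampled.shape)           # torch.Size([4, 3, 14, 14])
```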

- select by specific index

index_select(dim,torch.tensor([ ])): along the chosen dimension, picks the entries at the given indices

print(a.index_select(0,torch.tensor([0,2])).shape)

print(a.index_select(1,torch.tensor([1,2])).shape)

print(a.index_select(2,torch.arange(20)).shape)
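A quick sketch showing that `index_select` gives the same result as indexing with an index tensor along the same dimension:

```python
import torch

a = torch.rand(4, 3, 28, 28)
idx = torch.tensor([0, 2])

picked = a.index_select(0, idx)                          # images 0 and 2
print(picked.shape)                                      # torch.Size([2, 3, 28, 28])
print(torch.equal(picked, a[idx]))                       # True
print(torch.equal(a.index_select(1, idx), a[:, idx]))    # True
```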

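- `...` (Ellipsis) auto-completion

1. `...`: expands to as many `:` as the tensor needs to cover its remaining dimensions, so it stands for a run of `:,:,…`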

print(a[...].shape)

print(a[1,...].shape)

print(a[0,...,::2].shape)
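The equivalence with explicit colons can be verified directly:

```python
import torch

a = torch.rand(4, 3, 28, 28)

print(torch.equal(a[1, ...], a[1, :, :, :]))           # True
print(torch.equal(a[0, ..., ::2], a[0, :, :, ::2]))    # True
print(a[..., :2].shape)                                # torch.Size([4, 3, 28, 2])
```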

- select by mask

torch.masked_select(input,mask): picks the elements of the input tensor where mask is True
- drawback: the result is flattened to 1-D, so the original positions are lost

x = torch.randn(3,4)

mask = x.ge(0.5)

print(torch.masked_select(x,mask))

print(torch.masked_select(x,mask).shape)
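With fixed values instead of random ones, the flattening is easy to see. This sketch also shows that boolean indexing gives the same result, and that `mask.nonzero()` can recover the positions if they are needed:

```python
import torch

x = torch.tensor([[0.1, 0.7, -0.3],
                  [0.9, 0.2, 0.6]])
mask = x.ge(0.5)                         # True where x >= 0.5

selected = torch.masked_select(x, mask)
print(selected)                          # tensor([0.7000, 0.9000, 0.6000])
print(selected.shape)                    # torch.Size([3]): always 1-D
print(torch.equal(selected, x[mask]))    # True
print(mask.nonzero())                    # (row, column) of each kept value
```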

- select by flattened index

torch.take(src,torch.tensor([ ])): treats src as if it were flattened to 1-D and picks the elements at the given indices

src = torch.tensor([[4,3,5],[6,7,8]])

print(torch.take(src,torch.tensor([0,2,5])))
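In other words, `torch.take` behaves like flattening first and indexing second:

```python
import torch

src = torch.tensor([[4, 3, 5],
                    [6, 7, 8]])
idx = torch.tensor([0, 2, 5])

print(torch.take(src, idx))     # tensor([4, 5, 8])
print(src.flatten()[idx])       # tensor([4, 5, 8])
```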

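IV. Tensor Dimension Transformations

- view function

- view discards the original dimension information [b,c,h,w]; if the data is later restored with the dimensions in a different order, the values no longer mean what they did, which corrupts the data

- the total number of elements must stay the same, and the new shape must still make physical sense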

a = torch.randn(4,1,28,28)

a.view(4,1*28*28)

print(a.view(4,1*28*28).shape)

print(a.view(4*1*28,28).shape)

print(a.view(4*1,28,28).shape)
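The "data corruption" warning can be made concrete with a small sketch. `torch.arange` is used so every element is distinct, and the sizes are arbitrary small values chosen for the example. Viewing the flattened data back in the original order recovers the tensor; viewing it in a different order runs without error but does not produce a real [b,h,w,c] layout.

```python
import torch

a = torch.arange(2 * 3 * 4 * 5).view(2, 3, 4, 5)    # [b, c, h, w]
flat = a.view(2, 3 * 4 * 5)                         # [b, c*h*w]

restored = flat.view(2, 3, 4, 5)                    # same dimension order as before
wrong = flat.view(2, 4, 5, 3)                       # runs, but this is not [b, h, w, c]

print(torch.equal(restored, a))                     # True
print(wrong.shape)                                  # torch.Size([2, 4, 5, 3])
print(torch.equal(wrong, a.permute(0, 2, 3, 1)))    # False: the values are scrambled
```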

- squeeze and unsqueeze functions

1. `unsqueeze(index)` inserts a new dimension of size 1, increasing the number of dimensions

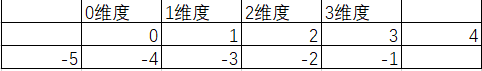

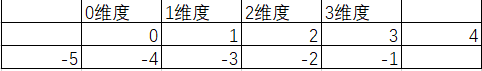

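- valid range of index

index = [-a.dim()-1, a.dim()+1)

- meaning of index: a non-negative index inserts the new dimension before the existing dimension at that position; a negative index inserts it after the corresponding dimension

- examples of index: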

print(a.shape)

print(a.unsqueeze(0).shape)

print(a.unsqueeze(-5).shape)

print(a.unsqueeze(4).shape)

print(a.unsqueeze(-1).shape)

--------------------------------------------------------------

b = torch.tensor([1,2,3])

print(b.shape)

print(b.dim())

print(b.unsqueeze(0))

print(b.unsqueeze(1))

--------------------------------------------------------------

bias = torch.rand(32)

f = torch.rand(4,32,14,14)

bias = bias.unsqueeze(1).unsqueeze(2).unsqueeze(0)

print(bias.shape)
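The reason for reshaping `bias` this way is broadcasting: once it has shape [1,32,1,1] it can be added to the feature map `f` directly. A sketch, with `bias4d` and `out` as illustrative names:

```python
import torch

f = torch.rand(4, 32, 14, 14)                           # [b, c, h, w]
bias = torch.rand(32)                                   # one value per channel

bias4d = bias.unsqueeze(1).unsqueeze(2).unsqueeze(0)    # [32] -> [1, 32, 1, 1]
out = f + bias4d                                        # broadcast over b, h, w

print(bias4d.shape)                                     # torch.Size([1, 32, 1, 1])
print(out.shape)                                        # torch.Size([4, 32, 14, 14])
print(torch.equal(bias4d, bias.view(1, 32, 1, 1)))      # True
```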

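2. `squeeze(index)` removes dimensions, reducing the number of dimensions

1. Without an argument, `squeeze()` removes every dimension of size 1. With an index, that dimension is removed only if its size is 1; if its size is greater than 1, the shape is left unchanged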

print(bias.shape)

print(bias.squeeze().shape)

print(bias.squeeze(0).shape)

print(bias.squeeze(1).shape)
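For a tensor of shape [1,32,1,1], these rules give the following shapes:

```python
import torch

bias = torch.rand(1, 32, 1, 1)

print(bias.squeeze().shape)      # torch.Size([32]): all size-1 dimensions removed
print(bias.squeeze(0).shape)     # torch.Size([32, 1, 1])
print(bias.squeeze(1).shape)     # torch.Size([1, 32, 1, 1]): size 32, left unchanged
print(bias.squeeze(-1).shape)    # torch.Size([1, 32, 1])
```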

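- expand: dimension expansion

expand: only changes how the data is interpreted; no data is copied or added
- precondition: the target shape should have the same number of dimensions as the tensor; a dimension of size 1 can be expanded to n, other sizes cannot be expanded; write -1 for a dimension that should stay unchanged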

b = torch.rand(1,32,1,1)

print(b.expand(4,32,14,14).shape)

print(b.expand(-1,-1,-1,-1).shape)

print(b.expand(-1,32,14,-1).shape)

-----------------------------

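print(b.expand(10).shape)  # RuntimeError: fewer sizes given than the tensor has dimensions

print(b.expand(1,16,1,1).shape)  # RuntimeError: dimension 1 has size 32, and only size 1 can be expanded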

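- repeat: dimension expansion by copying

repeat: grows the tensor by actually copying its data
- the arguments are the number of times to repeat along each dimension, not the target sizes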

b = torch.rand(1,32,1,1)

print(b.repeat(4,32,32,1).shape)

print(b.repeat(1,1,1,1).shape)
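To compare `expand` and `repeat` side by side, the sketch below builds the same [4,32,14,14] result both ways and then checks whether each one shares storage with `b`. Note the different arguments: target sizes for `expand`, repeat counts for `repeat`.

```python
import torch

b = torch.rand(1, 32, 1, 1)

expanded = b.expand(4, 32, 14, 14)     # target sizes; no data is copied
repeated = b.repeat(4, 1, 14, 14)      # repeat counts; data is copied

print(expanded.shape, repeated.shape)            # both torch.Size([4, 32, 14, 14])
print(torch.equal(expanded, repeated))           # True: same values
print(expanded.stride())                         # expanded dimensions have stride 0
print(expanded.data_ptr() == b.data_ptr())       # True: same storage as b
print(repeated.data_ptr() == b.data_ptr())       # False: new storage
```

Because an expanded tensor reuses the same memory for many positions, it is usually safer to treat it as read-only and call `repeat` (or `.clone()`) when the result will be modified in place.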

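- transpose function

- arguments: the two dimensions to swap

- transpose does not build a new tensor with rearranged data; PyTorch only changes some attributes of the tensor (its metadata, such as shape and stride), and the result shares storage with the original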

b = torch.rand(4,3,32,32)

b1 = b.transpose(1,3)

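2. The contiguous function, needed when undoing a transpose

1. contiguous() returns a tensor whose memory layout is contiguous; if the tensor is not already contiguous (as after transpose), this forces a copy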

b2 = b.transpose(1,3).contiguous().view(4,3*32*32).view(4,32,32,3).transpose(1,3)

print(torch.all(torch.eq(b,b2)))
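Why `contiguous()` is needed in the chain above can be seen by trying `view` without it. A sketch (the flag `view_failed` is only there to record the outcome):

```python
import torch

b = torch.rand(4, 3, 32, 32)
t = b.transpose(1, 3)                     # [4, 32, 32, 3], same storage as b

print(t.is_contiguous())                  # False
print(t.data_ptr() == b.data_ptr())       # True: only the metadata changed

view_failed = False
try:
    t.view(4, 3 * 32 * 32)                # view needs a compatible memory layout
except RuntimeError:
    view_failed = True
print(view_failed)                        # True

c = t.contiguous()                        # copies into a contiguous layout
print(c.view(4, 3 * 32 * 32).shape)       # torch.Size([4, 3072])
print(t.reshape(4, 3 * 32 * 32).shape)    # reshape copies only when it has to
```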

- permute function

- arguments: the original dimensions listed in their new order

a = torch.rand(0,1,2,3)

print(a.permute(0,2,3,1).shape)

print(a.permute(3,1,2,0).shape)
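A single `permute` can replace a chain of `transpose` calls. The sketch below converts [b,c,h,w] to [b,h,w,c] both ways; the height and width are chosen to differ so a mix-up would show in the shape.

```python
import torch

a = torch.rand(4, 3, 28, 32)                       # [b, c, h, w]

nhwc = a.permute(0, 2, 3, 1)                       # [b, h, w, c] in one call
two_steps = a.transpose(1, 2).transpose(2, 3)      # swap c with h, then c with w

print(nhwc.shape)                                  # torch.Size([4, 28, 32, 3])
print(torch.equal(nhwc, two_steps))                # True
print(nhwc[1, 5, 7, 2] == a[1, 2, 5, 7])           # tensor(True)
```

Like `transpose`, `permute` returns a non-contiguous view, so call `contiguous()` before `view` on the result.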