Web Scraping 02: Request Modules


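- 1. The urllib.request module

- Versions

- Common methods

- Response object

- 2. The urllib.parse module

- 3. The requests module

- Installation

- Common requests methods

- Methods and attributes of the response object

- requests tips

- Sending POST requests with the requests module

- COOKIE

- SESSION

- Handling untrusted SSL certificates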

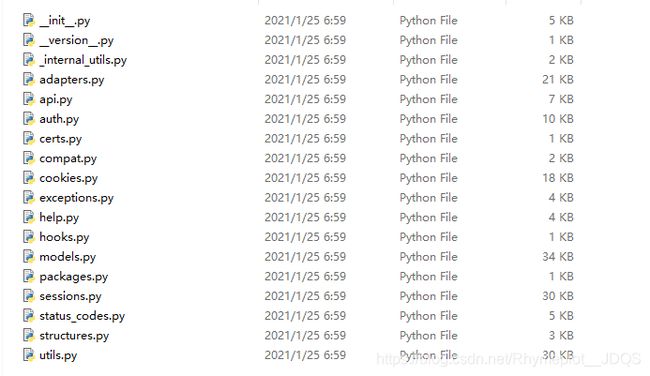

- requests source code analysis

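1. The urllib.request module

Versions

- Python 2: urllib2 and urllib

- Python 3: urllib and urllib2 were merged into a single urllib package

Common methods

- response = urllib.request.urlopen('URL'): sends a request to a site and returns the response

- response.read(): returns the response body as bytes

- response.read().decode('utf-8'): returns the response body as a string

- req = urllib.request.Request('URL', headers=dict): builds a request object that carries custom headers. Most sites expect headers such as User-Agent, and urlopen() has no headers parameter, so the headers go on a Request object that is then passed to urlopen()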

import urllib.request

# urlopen() accepts a URL (or a Request object) but has no headers parameter:
# response = urllib.request.urlopen('https://www.baidu.com/', headers={})
# TypeError: urlopen() got an unexpected keyword argument 'headers'
response = urllib.request.urlopen('https://www.baidu.com/')

# read() returns the response body as bytes; decode() turns it into a str
html = response.read().decode('utf-8')
print(type(html), html)

Output:

C:\python\python.exe D:/PycharmProjects/Python大神班/day-03/02-urllib.request.py

<class 'str'> <html>

<head>

<script>

location.replace(location.href.replace("https://","http://"));

</script>

</head>

<body>

<noscript><meta http-equiv="refresh" content="0;url=http://www.baidu.com/"></noscript>

</body>

</html>

Process finished with exit code 0

Response object

- read(): reads the content of the server's response

- getcode(): returns the HTTP status code

- geturl(): returns the URL the data actually came from (useful when the request was redirected)

import urllib.request

# Summary of the steps for sending a request with urllib
url = 'https://www.baidu.com/'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36 Edg/87.0.664.75'}

# 1. Build a request object with urllib.request.Request() so that a User-Agent can be set
req = urllib.request.Request(url, headers=headers)

# 2. Get the response object by passing the request to urllib.request.urlopen()
res = urllib.request.urlopen(req)

# 3. Read the response content: .read().decode('utf-8') converts bytes --> str
html = res.read().decode('utf-8')
# print(html)

print(res.getcode())  # status code, here 200
print(res.geturl())  # URL actually fetched, here https://www.baidu.com/

Output:

C:\python\python.exe D:/PycharmProjects/Python大神班/day-03/02-urllib.request.py

200

https://www.baidu.com/

Process finished with exit code 0
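A Request object can be inspected before anything is sent, which is a handy way to check that the headers were attached as intended. The following is a minimal sketch, not part of the original exercise; the shortened User-Agent value is a placeholder chosen for illustration.

```python
import urllib.request

# Placeholder values chosen for illustration only.
url = 'https://www.baidu.com/'
headers = {'User-Agent': 'Mozilla/5.0 (example)'}

# Building the Request does not touch the network.
req = urllib.request.Request(url, headers=headers)

print(req.full_url)                  # the URL the request targets
print(req.get_method())              # GET, because no data was attached
print(req.get_header('User-agent'))  # Request stores header names as 'User-agent'

# Sending it is the same call as in the steps above:
# res = urllib.request.urlopen(req)
```

Note that Request normalizes header names with str.capitalize(), so the lookup key is 'User-agent' rather than 'User-Agent'.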

2. The urllib.parse module

- Purpose of urllib.parse: percent-encode the Chinese (or other non-ASCII) characters in a request URL, so that each byte becomes % followed by two hexadecimal digits

import urllib.request
import urllib.parse

url1 = 'https://www.baidu.com/s?wd=%E6%B5%B7%E8%B4%BC%E7%8E%8B'
url2 = 'https://www.baidu.com/s?wd=海贼王'
# Goal: turn url2 into url1

# Option 1: urllib.parse.urlencode(dict) takes a dictionary
R = {'wd': '海贼王'}  # the value is the Chinese text to search for
# urlencode() percent-encodes the strings in the dictionary and joins them as key=value
result1 = urllib.parse.urlencode(R)
print(result1)
url3 = 'https://www.baidu.com/s?' + result1
print(url3)

# Option 2: urllib.parse.quote(str) takes a string
R = '海贼王'
result2 = urllib.parse.quote(R)
print(result2)
url4 = 'https://www.baidu.com/s?wd=' + result2
print(url4)

Output:

C:\python\python.exe D:/PycharmProjects/Python大神班/day-03/03-urllib.parse的使用.py

wd=%E6%B5%B7%E8%B4%BC%E7%8E%8B

https://www.baidu.com/s?wd=%E6%B5%B7%E8%B4%BC%E7%8E%8B

%E6%B5%B7%E8%B4%BC%E7%8E%8B

https://www.baidu.com/s?wd=%E6%B5%B7%E8%B4%BC%E7%8E%8B

Process finished with exit code 0
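The encoding can also be reversed, and urlencode() accepts more than one parameter at a time. The sketch below illustrates both with standard-library calls only; the pn parameter and its value are assumptions added for the example.

```python
from urllib.parse import parse_qs, quote, unquote, urlencode, urlsplit

encoded = quote('海贼王')
print(encoded)           # %E6%B5%B7%E8%B4%BC%E7%8E%8B
print(unquote(encoded))  # 海贼王

# urlencode() joins several parameters with '&' and converts non-string values with str().
query = urlencode({'wd': '海贼王', 'pn': 10})
print(query)             # wd=%E6%B5%B7%E8%B4%BC%E7%8E%8B&pn=10

# parse_qs() decodes a query string back into a dict of lists.
params = parse_qs(urlsplit('https://www.baidu.com/s?' + query).query)
print(params)            # {'wd': ['海贼王'], 'pn': ['10']}
```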

- Exercise 01:

import urllib.request
import urllib.parse

header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36 Edg/87.0.664.75'}

# Target URL format: https://www.sogou.com/tx?query=%E7%BE%8E%E5%A5%B3
key = input('Enter what you want to search for: ')
base_url = 'https://www.sogou.com/tx?'
# The parameter name has to match the one in the target URL (query)
wd = {'query': key}
result = urllib.parse.urlencode(wd)

# Build the full URL
url = base_url + result
# print(url)

# Build the request object
req = urllib.request.Request(url, headers=header)

# Get the response object
res = urllib.request.urlopen(req)

# Read the response data
html = res.read().decode('utf-8')

# Save the data
with open('search.html', 'w', encoding='utf-8') as file_obj:
    file_obj.write(html)

- Exercise 02:

import urllib.request
import urllib.parse

header = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36 Edg/87.0.664.75'
}

# Name of the Tieba forum
name = input('Enter the name of the Tieba forum to scrape: ')

# First and last page to scrape
begin = int(input('Enter the first page: '))
end = int(input('Enter the last page: '))

# Percent-encode the name
kw = {'kw': name}
result = urllib.parse.urlencode(kw)

# Build the target URL for each page
for i in range(begin, end + 1):
    pn = (i - 1) * 50
    # print(pn)
    base_url = 'https://tieba.baidu.com/f?'
    url = base_url + result + '&pn=' + str(pn)
    # print(url)

    # Send the request and get the response
    req = urllib.request.Request(url, headers=header)
    res = urllib.request.urlopen(req)
    html = res.read().decode('utf-8')

    # Write to a file
    filename = 'page_' + str(i) + '.html'
    with open(filename, 'w', encoding='utf-8') as f:
        f.write(html)

- Exercise 03:

import urllib.request
import urllib.parse


class BaiduSpider(object):

    def __init__(self):
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 11_2_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36'
        }
        self.base_url = 'https://tieba.baidu.com/f?'

    def readPage(self, url):
        req = urllib.request.Request(url, headers=self.headers)
        res = urllib.request.urlopen(req)
        html = res.read().decode('utf-8')
        return html

    def writePage(self, filename, html):
        with open(filename, 'w', encoding='utf-8') as f:
            f.write(html)
        print(filename)
        print('Written successfully!')

    def main(self):
        name = input('Enter the name of the Tieba forum to scrape: ')
        begin = int(input('Enter the first page: '))
        end = int(input('Enter the last page: '))
        # Pass the variable name, not the literal string 'name'
        kw = {'kw': name}
        result = urllib.parse.urlencode(kw)
        for i in range(begin, end + 1):
            pn = (i - 1) * 50
            url = self.base_url + result + '&pn=' + str(pn)
            print(url)
            # Call the helper methods
            html = self.readPage(url)
            filename = 'page_' + str(i) + '.html'
            self.writePage(filename, html)


if __name__ == '__main__':
    spider = BaiduSpider()
    spider.main()
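The URL-building step of the Tieba exercises can be separated from the network code and checked on its own. The sketch below is one possible way to do that; the helper name build_page_urls and the constant names are hypothetical, and the step of 50 per page is taken from pn = (i - 1) * 50 in the exercises.

```python
from urllib.parse import urlencode

BASE_URL = 'https://tieba.baidu.com/f?'
POSTS_PER_PAGE = 50  # step size taken from pn = (i - 1) * 50 in the exercises


def build_page_urls(name, begin, end):
    """Return the list-page URLs for pages begin..end (inclusive) of a forum."""
    urls = []
    for page in range(begin, end + 1):
        # urlencode() handles both parameters, so no manual '&pn=' concatenation is needed.
        query = urlencode({'kw': name, 'pn': (page - 1) * POSTS_PER_PAGE})
        urls.append(BASE_URL + query)
    return urls


for url in build_page_urls('python', 1, 3):
    print(url)
```

Keeping this logic in a pure function makes it possible to verify the page arithmetic without sending a single request.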

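3. The requests module

Installation

- Install with pip:

pip install requests

- Install from the terminal of your development tool, using a mirror as the package source:

pip install requests -i https://pypi.douban.com/simple

Common requests methods

- requests.get(url)

- requests.post(url)

Note 01:

1. response.content is the raw data fetched from the site with no processing at all; that is, no decoding has been applied to it.

2. response.text is the string that requests produces by decoding response.content. requests has to guess which encoding to use, so response.text can come out garbled when the guess is wrong.

Note 02:

encode: converts a string into bytes

decode: converts bytes into a string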
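The difference between the two directions, and the effect of a wrong guess, can be seen without any network access. This is a small illustration using plain str and bytes objects; the choice of latin-1 as the "wrong" codec is an assumption made for the example.

```python
text = '你好'

# encode: str -> bytes (this is the form response.content has)
raw = text.encode('utf-8')
print(raw)                  # b'\xe4\xbd\xa0\xe5\xa5\xbd'

# decode with the right codec: bytes -> the original str
print(raw.decode('utf-8'))  # 你好

# decode with a wrong guess: no error is raised, but the text is garbled
garbled = raw.decode('latin-1')
print(garbled == text)      # False
```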

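Methods and attributes of the response object

- response.text: returns the data as a Unicode string (str)

- response.content: returns the data as a byte stream (binary)

- response.content.decode('utf-8'): decodes the bytes manually

- response.url: returns the URL

- response.encoding = 'encoding name': sets the encoding that response.text uses to decode the response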

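requests tips

- 1. Setting a proxy

  - What problem does it solve?

    - Anti-scraping mechanisms that ban IP addresses

  - What to set as the proxy

    - The IP address of a proxy server

  - What a proxy IP does

    - Hides the real IP

    - Counters anti-scraping measures

  - Where to find proxy IPs

    - Free IPs (generally unusable)

    - Paid IPs

      - Kuaidaili (快代理)

  - Anonymity levels

    - Transparent: the server knows the real IP and also knows that a proxy IP is in use

    - Anonymous: the server does not know the real IP but knows that a proxy IP is in use

    - High anonymity: the server knows neither the real IP nor that a proxy IP is in use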

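Code demo:

import requests

url = 'http://httpbin.org/ip'

# Configure the proxy (the address must not contain spaces)
proxy = {
    'http': 'http://111.2.123.217:8088'
}

response = requests.get(url, proxies=proxy)
print(response.text)

Note: the code above is for reference only; the proxy IP it uses is not valid.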
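Since a stray space in the proxy address is an easy mistake to make, the mapping can be built by a small helper instead of typed by hand. The sketch below is only a suggestion: make_proxies is a hypothetical name, and routing both HTTP and HTTPS traffic through the same proxy is an assumption about what is wanted.

```python
def make_proxies(host, port, scheme='http'):
    """Build a proxies mapping that sends HTTP and HTTPS traffic through one proxy."""
    # strip() removes accidental whitespace; int() rejects a non-numeric port.
    address = f'{scheme}://{host.strip()}:{int(port)}'
    return {'http': address, 'https': address}


proxies = make_proxies(' 111.2.123.217 ', '8088')
print(proxies)
# With a working proxy, this mapping would be passed as requests.get(url, proxies=proxies).
```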

Sending POST requests with the requests module

- Code 01: Youdao Translate (urllib.request module)

import urllib.request
import urllib.parse
import json

while True:
    # Ask for the text to translate
    content = input('Enter the text to translate: ')

    # Form data
    data = {
        'i': content,
        'from': 'AUTO',
        'to': 'AUTO',
        'smartresult': 'dict',
        'client': 'fanyideskweb',
        'salt': '16121836445996',
        'sign': 'c61529daab4c5fb560922b4702e7fcc5',
        'lts': '1612183644599',
        'bv': '3a4005368b018e46296f9aa83b723587',
        'doctype': 'json',
        'version': '2.1',
        'keyfrom': 'fanyi.web',
        'action': 'FY_BY_REALTlME',
    }
    data = urllib.parse.urlencode(data)  # still a str at this point
    # data = bytes(data)  # TypeError: string argument without an encoding
    data = bytes(data, 'utf-8')

    # Target URL, with the "_o" suffix removed from the path
    # url = 'http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule'
    url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56'
    }

    # Build the request object
    req = urllib.request.Request(url, data=data, headers=headers)

    # Get the response object
    res = urllib.request.urlopen(req)

    # Read the response
    html = res.read().decode('utf-8')
    # print(html)  # {"type":"ZH_CN2EN","errorCode":0,"elapsedTime":1,"translateResult":[[{"src":"你好","tgt":"hello"}]]}
    # print(type(html))  # a JSON-formatted str

    # Convert the JSON string into a Python dict
    r_dict = json.loads(html)

    # Parse the data
    result1 = r_dict['translateResult']  # [[{"src":"你好","tgt":"hello"}]]
    result2 = result1[0][0]['tgt']  # [0] --> [{"src":"你好","tgt":"hello"}], [0] --> {"src":"你好","tgt":"hello"}, ['tgt'] --> "hello"
    print(result2)

The code above is written with the urllib.request module.
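With urllib, what turns a request into a POST is the presence of the data argument, and that argument has to be bytes. The following sketch shows both points without sending anything; the example.com endpoint and the two form fields are placeholders, not the real Youdao interface.

```python
import urllib.parse
import urllib.request

# Placeholder endpoint and form fields, used only to show the mechanics.
url = 'http://example.com/translate'
form = {'i': '你好', 'doctype': 'json'}

# urlencode() produces a str; Request needs bytes, hence the encode() step.
body = urllib.parse.urlencode(form).encode('utf-8')
print(body)  # b'i=%E4%BD%A0%E5%A5%BD&doctype=json'

get_req = urllib.request.Request(url)
post_req = urllib.request.Request(url, data=body)
print(get_req.get_method())   # GET
print(post_req.get_method())  # POST
```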

- Code 02: Youdao Translate (requests module)

import requests
import json

while True:
    content = input('Enter the text to translate: ')

    data = {
        'i': content,
        'from': 'AUTO',
        'to': 'AUTO',
        'smartresult': 'dict',
        'client': 'fanyideskweb',
        'salt': '16122515824475',
        'sign': '35b9350456719eb41b43330a35577cbc',
        'lts': '1612251582447',
        'bv': '24539cb46ca252bbf138e6a349e0da5b',
        'doctype': 'json',
        'version': '2.1',
        'keyfrom': 'fanyi.web',
        'action': 'FY_BY_REALTlME'
    }

    url = 'http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule'

    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 11_2_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36'
    }

    # Send the request
    response = requests.post(url, data=data, headers=headers)

    # Decode the response body
    response.encoding = 'utf-8'
    html = response.text
    # print(html)  # {"type":"ZH_CN2EN","errorCode":0,"elapsedTime":1,"translateResult":[[{"src":"你好","tgt":"hello"}]]}

    # Convert the JSON string into a Python dict
    r_dict = json.loads(html)

    # Parse the data
    result1 = r_dict['translateResult']  # [[{"src":"你好","tgt":"hello"}]]
    result2 = result1[0][0]['tgt']  # [0] --> [{"src":"你好","tgt":"hello"}], [0] --> {"src":"你好","tgt":"hello"}, ['tgt'] --> "hello"
    print(result2)

The code above is written with the requests module. It submits the form data with requests.post(), mimicking what the browser sends in order to get past the site's anti-scraping checks.
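Both versions index straight into the parsed JSON, which raises an exception as soon as the server answers with an error object instead of a translation. A slightly more defensive parsing step might look like the sketch below. The function name extract_translation is hypothetical, the first sample string is the response shown in the comments above, and the second one is an invented error response.

```python
import json

# Sample response copied from the comments in the examples above.
sample = '{"type":"ZH_CN2EN","errorCode":0,"elapsedTime":1,"translateResult":[[{"src":"你好","tgt":"hello"}]]}'


def extract_translation(json_text):
    """Return the translated text, or None if the response has an unexpected shape."""
    payload = json.loads(json_text)
    try:
        # Same path as result1[0][0]['tgt'] in the examples above.
        return payload['translateResult'][0][0]['tgt']
    except (KeyError, IndexError, TypeError):
        return None


print(extract_translation(sample))               # hello
print(extract_translation('{"errorCode": 50}'))  # None
```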

COOKIE

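- cookie: identifies the user through information stored on the client side

- HTTP is a stateless protocol. The interaction between client and server is limited to one request/response exchange and ends afterwards, so on the next request the server treats the client as a new one. To keep track of the user, so that the server knows a request comes from the same user as before, the client information has to be stored somewhere.

- Code demo: simulating a login with a cookie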

import requests

url = 'http://www.renren.com/975937712/profile'

headers1 = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56',

'Cookie': 'anonymid=kkpc7htt-o24is0; depovince=ZJ; _r01_=1; JSESSIONID=abc_JhTybOdmK-1vsNNDx; taihe_bi_sdk_uid=6b3d78e4b660867ea85c5a0c4620ad39; taihe_bi_sdk_session=6e05c9a0cd9e8f01ba267e6d81e4745a; ick_login=8f1b95be-aa27-4e7b-a5e0-8c904cfd6c0e; t=0b6ce5910f376c65eeb7fa778114e65d2; societyguester=0b6ce5910f376c65eeb7fa778114e65d2; id=975937712; xnsid=98614cef; jebecookies=7d2f8aba-8e14-4768-a1c3-b7dc899da159|||||; ver=7.0; loginfrom=null; wp_fold=0'

} # sent with the cookie

headers2 = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56'
} # sent without the cookie

req1 = requests.get(url, headers=headers1)
# print(req1.text)

# rr1.html: the request carries the cookie
with open('rr1.html', 'w', encoding='utf-8') as file_obj:
    file_obj.write(req1.text)

# rr2.html: the request does not carry the cookie
req2 = requests.get(url, headers=headers2)
with open('rr2.html', 'w', encoding='utf-8') as file_obj:
    file_obj.write(req2.text)

Result:

rr1.html: the previous login is recognized, and the saved page is the user's profile

rr2.html: no cookie is sent, and the saved page is the login screen
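The Cookie header in the demo is a semicolon-separated list of name=value pairs. If individual values are needed, the standard library can split the string into a dictionary, as in this sketch; the shortened header below reuses three pairs from the demo and is only an illustration.

```python
from http.cookies import SimpleCookie

# Shortened Cookie header in the same format as the one in the demo.
cookie_header = 'id=975937712; ver=7.0; wp_fold=0'

parsed = SimpleCookie()
parsed.load(cookie_header)

# Each entry is a Morsel object; .value holds the cookie's value.
cookies = {name: morsel.value for name, morsel in parsed.items()}
print(cookies)  # {'id': '975937712', 'ver': '7.0', 'wp_fold': '0'}
```

A dictionary of this shape can also be handed to requests through the cookies parameter, for example requests.get(url, cookies=cookies), instead of writing the raw header by hand.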

SESSION

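- session: identifies the user through information stored on the server side. Here "session" simply means a conversation between the client and the server

- Purpose: keeping the conversation alive across requests

Code demo: the image captcha on the 12306 login page

import requests

req = requests.session()  # a session object keeps cookies between requests


def login():
    # Fetch the captcha image
    img_response = req.get(
        'https://kyfw.12306.cn/passport/captcha/captcha-image?login_site=E&module=login&rand=sjrand')
    codeImage = img_response.content

    # 'w' means write and 'b' means binary, so 'wb' writes the file in binary mode
    # The binary data comes from response.content
    with open('code.png', 'wb') as fn:
        fn.write(codeImage)

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56'
    }

    codeStr = input('Enter the captcha coordinates: ')

    data = {
        'answer': codeStr,
        'rand': 'sjrand',
        'login_site': 'E'
    }

    # The same session object is reused, so the cookies set by the captcha request are sent along
    response = req.post('https://kyfw.12306.cn/passport/captcha/captcha-check', data=data, headers=headers)
    print(response.text)


login()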

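Handling untrusted SSL certificates

- An SSL certificate is a kind of digital certificate, comparable to an electronic copy of a driving licence, passport, or business licence. Because it is installed on the server, it is also called an SSL server certificate. It follows the SSL protocol and is issued by a trusted certificate authority (CA) after the server's identity has been verified; it provides both server authentication and encryption of the transmitted data

Code demo: passing verify=False, as in response = requests.get(url, verify=False), makes requests skip certificate verification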

import requests

url = 'https://inv-veri.chinatax.gov.cn/'

response = requests.get(url, verify=False)

print(response.text)
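For the urllib.request module from the first section, the rough counterpart of verify=False is an SSL context with verification switched off. The sketch below only builds and inspects the two contexts and sends no request; treating it as equivalent to verify=False is an approximation, not a statement about how requests works internally.

```python
import ssl

# Default context: certificates are verified and host names are checked.
strict = ssl.create_default_context()
print(strict.verify_mode == ssl.CERT_REQUIRED, strict.check_hostname)  # True True

# Relaxed context: roughly what verify=False asks for.
relaxed = ssl.create_default_context()
relaxed.check_hostname = False       # must be disabled before verify_mode is lowered
relaxed.verify_mode = ssl.CERT_NONE
print(relaxed.verify_mode == ssl.CERT_NONE)  # True

# urllib.request.urlopen(url, context=relaxed) would then skip certificate verification.
```

Skipping verification removes the protection against impersonated servers, so it is best kept to sites whose certificate problem is already understood.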