The uptick in Twitter user activity during the recent lockdown made it seem like a good place to start looking for a quarantine project to increase my competency with machine learning. Specifically, as misinformation and baffling conspiracies took hold of the U.S.’s online population, trying to come up with new ways to identify bad actors seemed like more and more of a relevant task.

在最近的锁定期间,Twitter用户活动的增加使它看起来像是一个开始寻找隔离项目以提高我的机器学习能力的好地方。 具体来说,随着误导和令人困惑的 阴谋 笼罩着美国的在线人群,试图找到新的方法来识别不良行为者似乎越来越是一项重要的任务。

In this post, I’ll be demonstrating, with the help of some useful Python network graphing and machine learning packages, how to build a model for predicting whether Twitter users are humans or bots, using only a minimum viable graph representation of each user.

在这篇文章中,我将在一些有用的Python网络图形和机器学习包的帮助下演示如何构建模型,以仅使用每个用户的最小可行图形表示来预测Twitter用户是人类还是机器人。

大纲 (Outline)

1. Preliminary Research

1.初步研究

2. Data Collection

2.数据收集

3. Data Conversion

3.数据转换

4. Training the Classification Model

4.训练分类模型

5. Closing thoughts / Room for Improvement

5.总结思想/改进空间

技术说明 (Technical Notes)

All programming, data collection, etc. was done in a Jupyter Notebook. Libraries used:

所有编程,数据收集等都在Jupyter Notebook中完成。 使用的库:

tweepy

pandas

igraph

networkx

numpy

json

csv

ast

itemgetter (from operator)

re

Graph2Vec (from karateclub)

xgboostFinally, four resources were key to this task, which I will discuss later in this writeup:

最后,四个资源是此任务的关键,我将在本文后续部分中讨论这些资源:

The Indiana University Network Science Institute’s Bot Repository,

印第安纳大学网络科学研究所的Bot资料库 ,

Jacob Moore’s tutorial on identifying Twitter influencers, using Eigenvector Centrality as a metric,

雅各布·摩尔(Jacob Moore)使用特征向量中心性(Eigenvector Centrality)作为度量标准来确定Twitter影响者的教程 ,

Karate Club, an extension of NetworkX,

空手道俱乐部 ,NetworkX的扩展,

and the XGBoost gradient boosting library.

和XGBoost梯度增强库。

Let’s get to it!

让我们开始吧!

初步研究 (Preliminary Research)

While bot detection as a goal is nothing new, to the extent that a project like this would have been impossible without drawing on the prior and vital work referenced above, there were a few topics within the problem space that I thought could be further explored.

尽管以僵尸程序检测为目标并不是什么新鲜事,但如果不依靠上述先前的重要工作就不可能进行这样的项目,那么我认为问题空间中还有一些主题可以进一步探讨。

The first was scale and reduction. I wouldn’t describe my data science expertise at any level above “hobbyist”, and as such, processing power and Twitter API access were both factors I had to keep in mind. I knew I wouldn’t be able to replicate the accuracy of models by larger, more established groups, so instead one of the things I set out to investigate was how scalable and accurate of a classification model could be made given these limitations.

首先是规模缩小。 我不会在“爱好者”以上的任何水平上描述我的数据科学专业知识,因此,处理能力和Twitter API访问都是我必须牢记的因素。 我知道我将无法通过更大,更成熟的团队来复制模型的准确性,因此,我着手研究的一件事是在存在这些限制的情况下如何使分类模型具有可伸缩性和准确性。

The second was the type of user data used in classification. I found several models that drew on a variety of different elements of users’ profiles, from the text content of tweets to the length of usernames or the profile pictures used. However, I found only a few attempts at doing the same with features based on graphs of users’ social networks. By chance, this graph-based method also ended up being the best way for me to collect just enough data on each user to use for later classification without coming up against Twitter’s API limits.

第二个是分类中使用的用户数据类型。 我发现了几种模型,这些模型借鉴了用户个人资料的各种不同元素,从推文的文本内容到用户名的长度或所使用的个人资料图片。 但是,我发现只有很少的 尝试对基于用户社交网络图的功能进行相同的操作。 偶然地,这种基于图的方法最终成为我最好的方式,我可以在每个用户上收集足够的数据以用于以后的分类,而不会遇到Twitter的API限制。

数据采集 (Data Collection)

First things first, when working with Twitter, you’ll need developer API access. If you haven’t already, you can apply for it here, and Tweepy (the Twitter API wrapper I’ll be using throughout this writeup) has more information on the authentication process in its docs.

首先,在使用Twitter时,您需要开发人员API访问权限。 如果您还没有的话,可以在这里申请,Tweepy(本文中将使用的Twitter API包装器) 在其docs中提供了有关身份验证过程的更多信息。

Once you’ve done so, you’ll need to create an instance of the API with your credentials, like so.

完成此操作后,您将需要使用您的凭据创建API的实例,就像这样。

Inputting your credentials 输入您的凭证In order to train a model, I would need a database of Twitter usernames and existing labels, as well as a way to quickly collect relevant data about each user.

为了训练模型,我需要一个Twitter用户名和现有标签的数据库,以及一种快速收集有关每个用户的相关数据的方法。

The database I ended up settling on was IUNI’s excellent Bot Repository, which contains thousands of labeled human and bot accounts in TSV and JSON format. The second part was a bit harder. At first, I tried to generate graph representations of each user’s entire timeline, but this could take more than a day per user for some more prolific users, due to API limits. The best format for a smaller graph ended up being Jacob Moore’s tutorial for identifying Twitter influencers using Eigenvector Centrality.

我最终选择的数据库是IUNI出色的Bot存储库 ,其中包含成千上万个带有TSV和JSON格式的带标签的人类和机器人帐户。 第二部分比较难。 最初,我尝试生成每个用户整个时间轴的图形表示,但是由于API的限制,对于一些多产的用户, 每个用户可能要花费超过一天的时间。 较小图的最佳格式最终是Jacob Moore的使用Eigenvector Centrality识别Twitter影响者的教程 。

I won’t repeat the full explanation of how his script works, or what Eigencentrality is, because both of those things are there in his tutorial better than I could put them, but from a high-level view, his script takes a Twitter user (or a keyword, but I didn’t end up using that functionality) as input, and outputs a CSV with an edge list of users weighted by how much “influence” they have on the given user’s interactions on Twitter. It additionally outputs an iGraph object, which we’ll be writing to a GML file that we’ll use going forwards as a unique representation of each user.

我不会重复对他的脚本如何工作或本征中心性进行完整的解释,因为在他的教程中,这两个方面都比我可以说的要好,但是从高层次来看,他的脚本需要一个Twitter用户(或关键字,但我最终没有使用该功能)作为输入,并输出一个CSV文件,其中包含用户的边缘列表,该列表由他们在Twitter上给定用户的交互的“影响力”加权。 它还会输出一个iGraph对象,我们将其写入GML文件,并将其用作每个用户的唯一表示。

You’ll need the functionality of the TweetGrabber, RetweetParser, and TweetGraph classes from his tutorial. The next step is to create a TweetGrabber instance with your API keys, and perform a search on your selected Twitter user.

您将需要他的教程中的TweetGrabber,RetweetParser和TweetGraph类的功能。 下一步是使用您的API密钥创建一个TweetGrabber实例,并对所选的Twitter用户执行搜索。

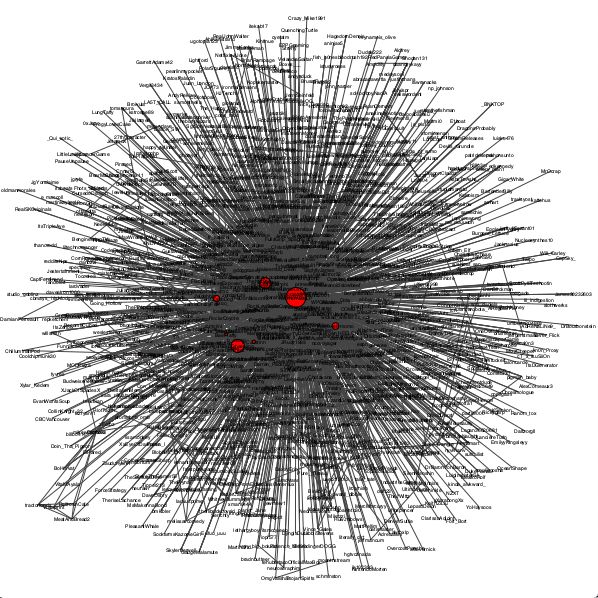

Creating a GML file of a user’s relationship with their network 创建用户与其网络之间的关系的GML文件Lines 27 and 28 of the above code create a ‘size’ attribute for each vertex that holds its Eigencentrality value, meaning that when we write the created iGraph object to a GML file, as we do in line 31, that file will contain all the information we need on the user, and the previously created CSV can be discarded. Additionally, if you’d like, you can uncomment lines 33–38 to plot and view the graph, which will likely look something like this:

上面代码的第27和28行为每个保存其特征中心值的顶点创建一个'size'属性,这意味着当我们将创建的iGraph对象写入GML文件时(如在第31行中所做的那样),该文件将包含所有我们需要的关于用户的信息,以及先前创建的CSV可以被丢弃。 另外,如果您愿意,可以取消注释第33–38行以绘制和查看图形,这看起来可能像这样:

The graph output for a Twitch streamer I follow. 我遵循的Twitch流光的图形输出。To build the database I would be training my classification model on, I added each of the usernames and labels collected from the Bot Repository into a pandas DataFrame, and iterated through it, running this script with each of the usernames as input. This part of the process was the most time-intensive, taking several hours, but the result, after dropping empty or deleted accounts from the frame, was just over 20,000 user graphs with ‘ground truth’ labels for classification. Next step: formatting this data to train the model.

为了构建数据库,我将在其上训练分类模型,我将从Bot存储库收集的每个用户名和标签添加到pandas DataFrame中,并对其进行迭代,以每个用户名作为输入运行此脚本。 该过程的这一部分最耗时,需要花费几个小时,但是从框架中删除空帐户或删除帐户后,结果是超过20,000个带有“地面真相”标签进行分类的用户图。 下一步:格式化此数据以训练模型。

资料转换 (Data Conversion)

But first, a brief refresher on what a model is (if you’re familiar, you can skip to ‘At this point in the process…’).

但是首先,简要回顾一下什么是模型(如果您熟悉模型,可以跳到“过程中的这一点……”)。

The goal of a machine learning model is to look at a bunch of information (features) about something , and then to use that information to try and predict a specific statement (or label) about that thing.

机器学习模型的目标是查看有关某物的一堆信息(特征),然后使用该信息来尝试并预测有关该物的特定声明(或标签)。

For example, this could be a model that takes a person’s daily diet and tries to predict the amount of plaque they would have on their teeth, or this could be a model that takes the kinds of stores a person shops at regularly and tries to predict their hair colour. A model like the first, where the information about the person is more strongly correlated to the characteristic being predicted (diet probably has more impact on dental health than shopping habits do on hair colour), is usually going to be more successful.

例如,这可能是一个采用一个人的日常饮食并试图预测他们牙齿上的牙菌斑数量的模型,或者可能是一个采用一个人定期在商店购物的种类并试图预测一个人的模型。他们的头发颜色。 像第一个模型一样,该模型通常会更加成功,在该模型中,有关人的信息与所预测的特征之间的相关性更高(饮食对牙齿健康的影响可能比购物习惯对染发的影响更大)。

The way a model like this is created and is “taught” to more accurately make these predictions is by being exposing it to a large number of somethings that have their features and labels already given. Through “studying” these provided examples, the model ideally “learns” what features are the most correlated with one label or another. E.g. if the database your model is “studying” contains information on a bunch of people who have the feature “eats marshmallows for breakfast”, and most of these people coincidentally tend to have higher amounts of plaque, your model is probably going to be able to predict that if an unlabeled person also eats marshmallows for breakfast, their teeth won’t be looking so hot.

这样的模型的创建和“讲授”以更准确地做出这些预测的方式是,将其暴露于已经具有其特征和标签的大量物体上。 通过“研究”这些提供的示例,模型可以理想地“学习”与一个标签或另一个标签最相关的功能。 例如,如果您的模型“正在研究”的数据库包含有关一群人的信息,这些人的特征是“吃棉花糖吃早餐”,而这些人中的大多数恰好倾向于具有更高的牙菌斑,则您的模型可能会可以预测,如果一个没有标签的人也吃棉花糖作为早餐,他们的牙齿不会看起来那么热。

For a better and more comprehensive explanation, I’d recommend this video.

为了获得更好,更全面的解释,我推荐此视频 。

At this point in the process, we have a database of somethings (Twitter users), each for which we also have information (their graph) and a yes/no statement (whether or not they’re a bot). However, this brings us to our next step, which is a crucial one in the creation of a model — how to convert these graphs to input features. Providing a model with too much irrelevant information can make it take longer to “learn” from the input, or worse, make its prediction less accurate.

在此过程的这一点上,我们有一个东西数据库(Twitter用户),每个数据库都有信息(它们的图表)和是/否语句(无论他们是不是机器人)。 但是,这将我们带入下一步,这是创建模型的关键步骤-如何将这些图形转换为输入特征。 为模型提供太多不相关的信息可能会使从输入中“学习”所需的时间更长,或更糟糕的是,使其预测的准确性降低。

What’s the most efficient way to represent each user’s graph for our model, without losing any important information?

在不丢失任何重要信息的情况下,代表我们模型的每个用户图的最有效方法是什么?

That’s where Karate Club comes in. Specifically, Graph2Vec, a whole-graph “embedding” library that takes an arbitrarily-sized graph such as the one in the above image, and embeds it as a lower-dimensional vector. For more information about graph embedding (including Graph2Vec specifically), I’d recommend this writeup, as well as this white paper. Long story short, Graph2Vec converts graphs into denser representations that preserve properties like structure and information, which is exactly the kind of thing we want for our input features.

这就是空手道俱乐部的 来历 。具体地说,是Graph2Vec ,这是一个全图“嵌入”库,它采用任意大小的图(例如上图中的一个图),并将其嵌入为低维向量。 有关图嵌入(包括Graph2Vec特别)的更多信息,我建议你这样的书面记录 ,以及本白皮书 。 长话短说,Graph2Vec将图形转换为更密集的表示形式,以保留诸如结构和信息之类的属性,而这正是我们想要的输入功能。

In order to do so, we’ll need to convert our graphs into a format that’s compatible with Graph2Vec. For me, the process looked like this:

为此,我们需要将图形转换为与Graph2Vec兼容的格式。 对我来说,过程看起来像这样:

Creating a vector embedding of a user’s graph 创建用户图的矢量嵌入The end result will look something like the following:

最终结果将类似于以下内容:

[[-0.04542452 0.228086 0.13908194 -0.05709897 0.05758724 0.4356743

0.16271514 0.09336048 0.05702725 -0.2599525 -0.44161066 0.34562927

0.3947958 0.30249864 -0.23051494 0.31273103 -0.26534733 -0.10631609

-0.44468483 -0.17555945 0.07549448 0.38697574 0.2060106 0.08094891

-0.30476692 0.08177203 0.35429433 0.2300599 -0.26465878 0.07840226

0.14166194 0.0674125 0.0869598 0.16948421 0.1830279 -0.17096592

-0.17521448 0.18930815 0.35843915 -0.19418521 0.10822983 -0.25496888

-0.1363765 -0.2970226 0.33938506 0.09292185 0.02078495 0.27141875

-0.43539774 0.23756032 -0.11258412 0.01081391 0.44175783 -0.19365656

-0.04390689 0.09775431 0.03468767 0.06897729 0.2971188 -0.35383108

0.2914173 0.45880902 0.22477058 0.12225034]]Not pretty to human eyes, but combined with our labels, exactly what we’ll need for creating the classification model. I repeated this process for each labeled user and stored the results in another pandas DataFrame, so that now I had a DataFrame of ~20,000 rows and 65 columns, 64 of which were vectors describing the user’s graph, and the 65th being the “ground truth” label of whether that user was a bot or a human. Now, on to the final step.

肉眼看上去并不漂亮,但结合我们的标签,正是创建分类模型所需要的。 我为每个有标签的用户重复了此过程,并将结果存储在另一个熊猫DataFrame中,这样我现在有了一个大约20,000行和65列的DataFrame,其中有64个是描述用户图形的向量,第65个是“基本事实”该用户是机器人还是人类的标签。 现在,进入最后一步。

训练分类模型 (Training the Classification Model)

Since our goal is classification (predicting whether each “something” should be placed in one of two categories, in this case a bot or a human), I opted to use XGBoost’s XGBClassifier model. XGBoost uses gradient boosting to optimize predictions for regression and classification problems, resulting in, in my experience, more accurate predictions than most other options out there.

由于我们的目标是分类(预测每个“事物”是否应置于两种类别之一,在这种情况下为机器人还是人类),因此我选择使用XGBoost的XGBClassifier模型。 根据我的经验,XGBoost使用梯度增强来优化针对回归和分类问题的预测,从而获得比大多数其他选项更准确的预测。

From here, there are two different options:

从这里开始,有两种不同的选择:

If your goal is to train your own model to make predictions with and modify, and you have a database of user graph vectors and labels to do so with, you’ll need to fit the classification model to your database. This was my process:

如果您的目标是训练自己的模型以进行预测并进行修改,并且您可以使用用户图形向量和标签数据库,则需要将分类模型拟合到数据库中。 这是我的过程:

Training your own classification model 训练自己的分类模型If your goal is just to try to predict the human-ness or bot-ness of a single user you’ve graphed and vectorized, that’s fine too. I’ve included a JSON file that you can load my model from in my GitHub, which is linked to in my profile. Here’s what that process would look like:

如果您的目标只是试图预测您绘制并矢量化的单个用户的人性或机器人性,那也很好。 我包含了一个JSON文件,您可以从GitHub中加载我的模型,该文件链接到我的个人资料中。 该过程如下所示:

Loading my classification model 加载我的分类模型And that’s it! You should be looking at the predicted label for the account you started with.

就是这样! 您应该查看开始使用的帐户的预测标签。

总结思想/改进空间 (Closing Thoughts / Room for Improvement)

There are a number of things that could be improved about my model, and which I hope to revisit someday.

关于我的模型,有许多方面可以改进,我希望有一天可以重新审视。

First and foremost is accuracy. While the Botometer classification model that IUNI has built from their Bot Repository demonstrates nearly 100% accuracy at classification on the testing data set, mine demonstrates 79% accuracy. This makes sense, as I’m using a lower number of features to predict each user’s labels, but I’m confident that there is a middle ground between my minimalist approach and IUNI’s, and I would be interested in trying to combine graph-based, text-based, and profile-based classification methods.

首先是准确性。 IUNI从其Bot信息库建立的Botometer分类模型在测试数据集上的分类中显示了近100%的准确性,而我的Botometer分类模型显示了79%的准确性。 这是有道理的,因为我正在使用较少的功能来预测每个用户的标签,但是我有信心在我的极简主义方法和IUNI方法之间有一个中间立场,并且我想尝试结合基于图的方法,基于文本和基于配置文件的分类方法。

Another, which ties into accuracy, is the structure of the graphs themselves. Igraph is able to compute the Eigenvector centrality for each node in a graph, but also includes a number of other node-based measurements, such as closeness, betweenness, or an optimized combination of multiple measurements.

另一个关系到准确性的是图形本身的结构。 Igraph能够为图中的每个节点计算特征向量中心度,但是Igraph还包括许多其他基于节点的度量,例如紧密度,中间度或多个度量的优化组合。

Finally, two things make it difficult to test and improve upon the accuracy of this model at scale. The first is that due to my limited understanding of vector embedding, it’s difficult for me to identify what features lead to accurate or inaccurate labeling. The second is how accurate results on the testing data set would be to Twitter’s ecosystem today. As bots are detected, the field evolves, and methods for detection will have to evolve as well. Towards that end, I’ve been skimming trending topics from Twitter throughout the quarantine for users to apply this model to, but I think that will have to wait for a later post.

最后,有两件事使得难以大规模测试和改进该模型的准确性。 首先是由于我对向量嵌入的了解有限,因此我很难确定导致准确或不正确标记的特征。 第二个是测试数据集对当今Twitter生态系统的准确性如何。 随着机器人的发现,该领域也在发展,并且检测方法也必须发展。 为此,我一直在整个隔离区中收集来自Twitter的热门话题,以供用户应用此模型,但我认为这将需要等待以后的发布。

Thank you for reading! Please let me know in the comments if you have any questions or feedback.

感谢您的阅读! 如果您有任何疑问或反馈,请在评论中让我知道。

翻译自: https://towardsdatascience.com/python-detecting-twitter-bots-with-graphs-and-machine-learning-41269205ab07