【COMP2411: Database Systems Learning Notes】

Database Systems Learning Notes

Resources from the Hong Kong Polytechnic University course COMP2411

Summary

ER model: characterize relationships among entities

Relational model: transform from ER diagram to tables

SQL: language for writing queries

Relational Algebra: logical way to represent queries

Normal Forms: how to design good tables

File Organization: provide file level structure to speed up query (Applications of Index, B+ Tree)

Query Optimization: transform queries into more efficient ones (Calculation, Optimisation graph)

Transactions and Concurrency Control: handle concurrent operations and guarantee correctness of the database

Content

- Summary

- Chapter 1: Database Systems

-

- 1. Introduction

- 2. Database Architecture (1-tier,2-tier,3-tier)

- 3. 3-level Architecture/Three schema Architecture

-

- 1. Internal

- 2. Conceptual

- 3. External

- Mapping

- Data Independence

-

- 1. Logical Data Independence

- 2. Physical Data Independence

- 4. Data Model

- 5. DBMS Language

-

- 5.1 Data Definition Language (DDL)

- 5.2 Data Manipulation Language (DML)

- 6. Data model Schema and Instance

- 7. Basic concepts and terminologies

- Chapter 2: Entity-Relationship (ER) Model

-

- 1. Components of ER diagram

-

- 1. Entity

-

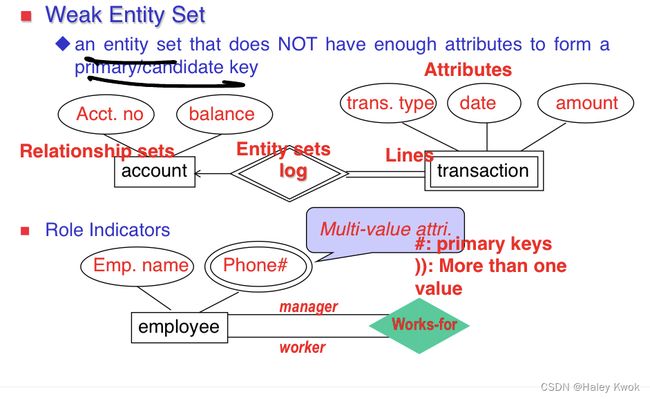

- 1.1.1 Weak Entity

- 2. Attribute

-

- a. Key Attribute

- b. Composite Attribute

- c. Multivalued Attribute

- d. Derived Attribute

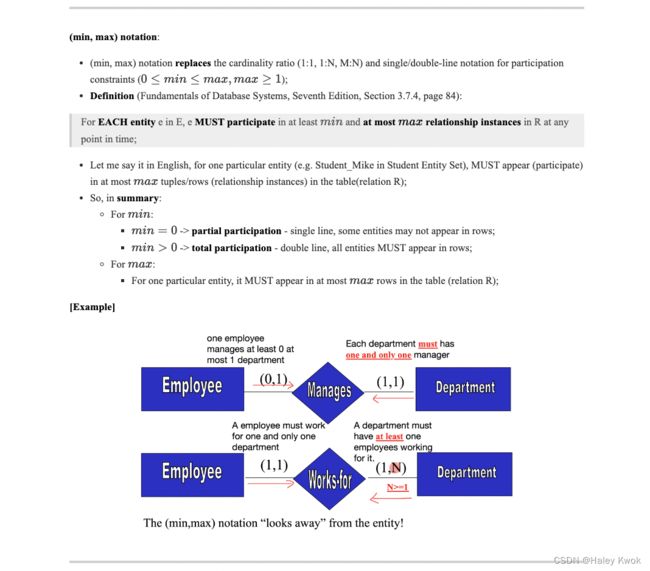

- 3. Structural Constraint of relationship: Cardinality ratio (of a binary relationship) V.S. (Min, Max)

-

-

- Relationship V.S. Relationship Sets

- Cardinality ratio

-

- a. One-to-One Relationship (1:1)

- b. One-to-many relationship (1:N)

- c. Many-to-one relationship (N:1)

- d. Many-to-many relationship (M:N)

- Participation constraint (on each participating entity set or type)

-

- Higher degree of relationship

- 2. Notation of ER diagram

- 3. ER Design Issues

-

- 1) Use of Entity Set vs Attributes

- 2) Use of Entity Set vs. Relationship Sets

- 3) Use of Binary vs n-ary Relationship Sets

- 4) Placing Relationship Attributes

- 4. Keys

-

- 1. Primary Keys

- 2. Candidate Keys

- 3. Super Keys

- 4. Foreign Keys

- 5. Generalization

- 6. Specialization

- 7. Aggregation

- 8. Reduction of ER diagram to Table/ ER diagram V.S.

-

- Relational Schema

-

- Method 1

- Method 2

- 9. Relationship of higher degree

- Chapter 3: SQL

-

- Examples

- SELECT `DATA (from bottom to top) SELECT IS THE COLUMN RESULT`

-

- 1. DISTINCT

- 2. AND/OR/NOT

- 3. ORDER BY

- 4. IS NULL/ IS NOT NULL

- 5. TOP

- 6. MIN MAX

- 7. COUNT/AVG/SUM

- 8. LIKE

- 9. IN

- 10. BETWEEN..AND/ NOT BETWEEN..AND

- 11. AS

- 12. ALL and ANY

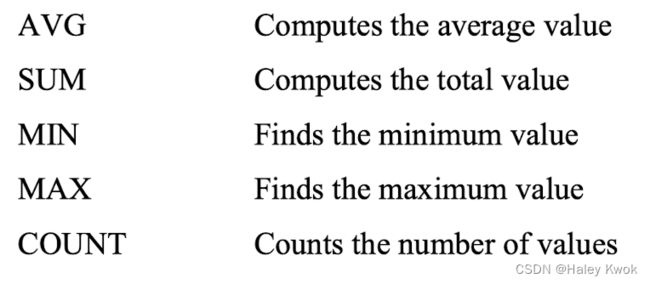

- AGGREGATE

-

- 13. GROUP BY

- 14. HAVING (with aggregate functions only)

- JOIN

- UNION

- INSERT INTO `DATA (from top to bottom)`

- Lab Java

- 2/11 Discussion

- Chapter 4: Relational Model Concept

-

- 1. Relational Algebra

-

- 1. Select Operation:

- 2. Project Operation

- 3. Union Operation (or):

- 4. Set Intersection (and):

- 5. Set Difference:

- 6. Cartesian product |><|

- 2. Join Operations:

-

- 1. Natural Join

- 2. Outer Join:

-

- a. Left outer join:

- b. Right outer join:

- c. Full outer join:

- 3. Equi join:

- Summary

- Chapter 5: Integrity Constraints (ICs) and Normal Forms

-

- 1. Domain Constraint

- 2. Entity Integrity Constraint

- 3. Referential Integrity Constraint

- 4. Key Constraint

- Functional Dependency and Normal Forms

-

- Types of Functional Dependency

-

- 1. Trivial functional dependency

- 2. Non-trivial functional dependency

- Inference Rules for FDs

-

- 1. Reflexive Rule (IR1)

- 2. Augmentation Rule (IR2)

- 3. Transitive Rule (IR3)

- 4. Union Rule (IR4)

- 5. Decomposition Rule (IR5)

- 6. Pseudo transitive Rule (IR6)

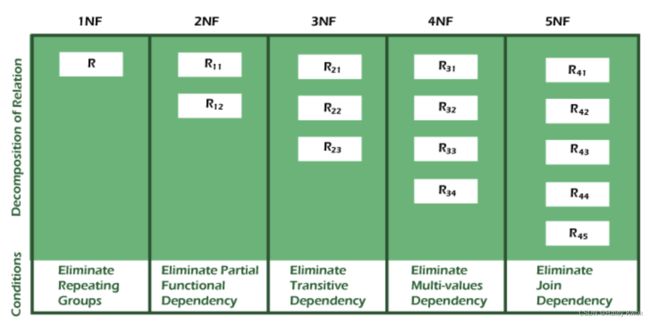

- Types of Normal Forms:

-

- 1NF

-

- Problems

- Solution

- 2NF

- 3NF (Good Design!)

- BCNF

- Chapter 6: File Organization and Indexing

-

- File Organization

- 1. Unordered File

-

- 1.1 Pile File Method:

- 2. Ordered File (Sequential File Organization)

-

- 2.1 Sorted File Method:

- 3. Heap Files (Unordered)

- 4. Hashed Files

-

- 4.1 Static External Hashing

-

- 4.1.1 Collisions problem

-

- 4.1.1.1 Opening Addressing: Linear Probing

- 4.1.1.2 Opening Addressing: Quadratic Probing

- 4.1.2 Overflow chaining/ Overflow handling

- 4.2 Dynamic And Extendible Hashing

- 5. Indexing

-

- 5.1 Ordered indices

-

- 5.1.1 Single level index/ Sparse indexing/ Primary index (Ordered)

-

- 5.1.1.1 Dense index

- 5.1.1.2 Sparse index

- 5.2.1 Clustering index (Ordered)

- 5.3.1 Secondary index (Non-ordered)

- 5.4.1 Multi-level index (primary, secondary, clustering)

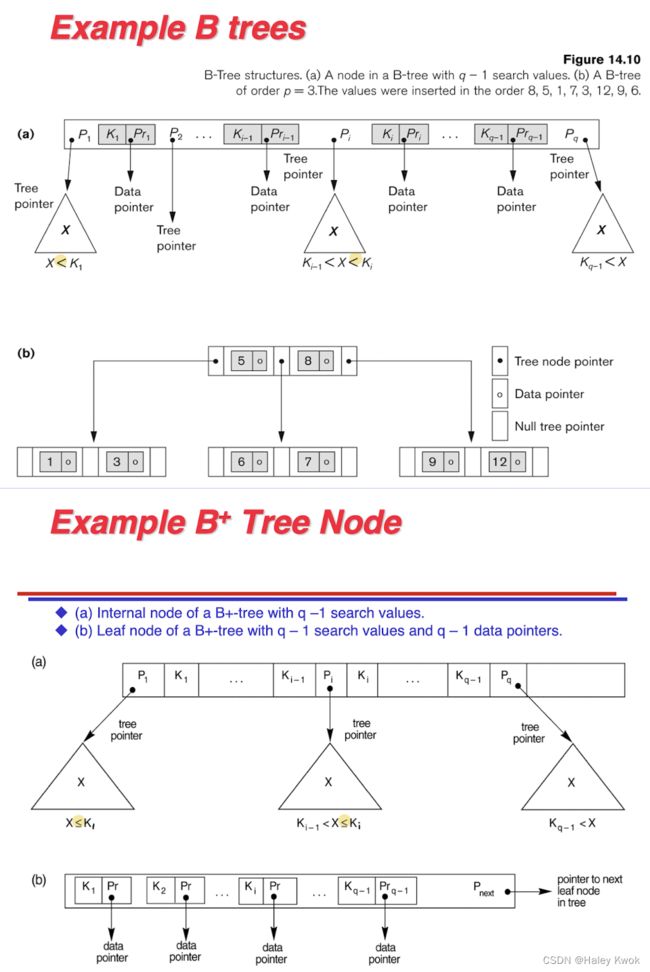

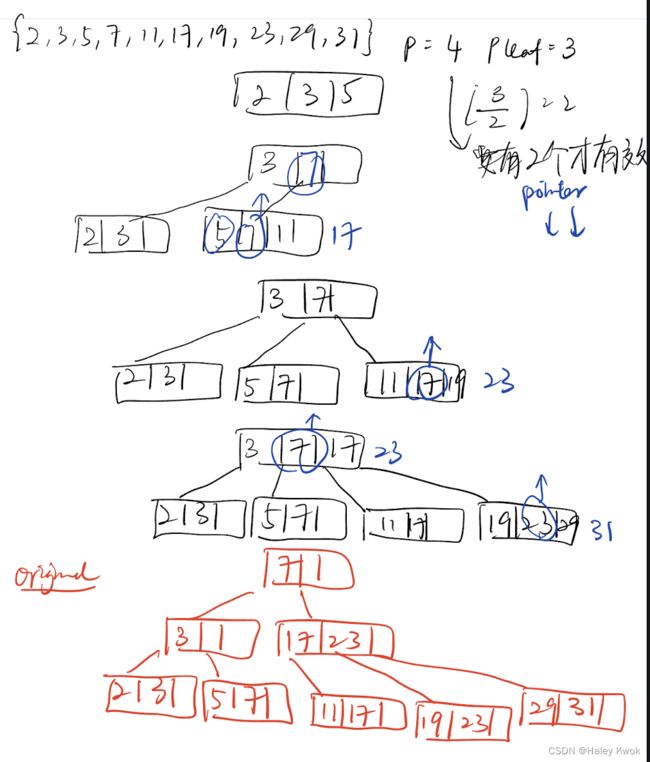

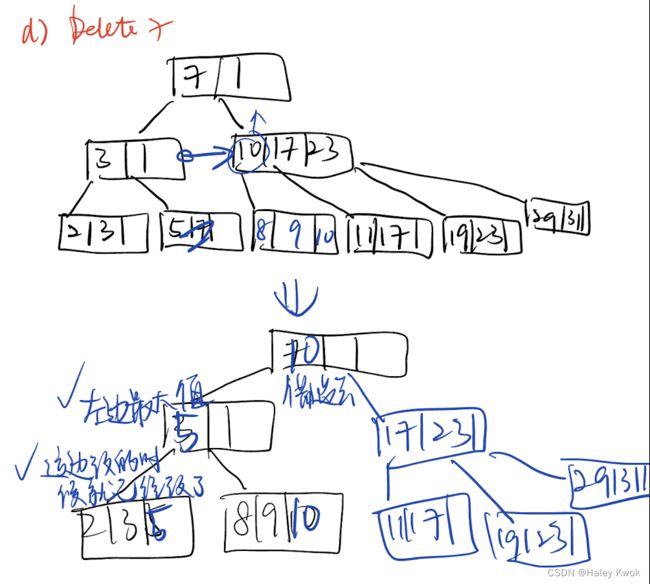

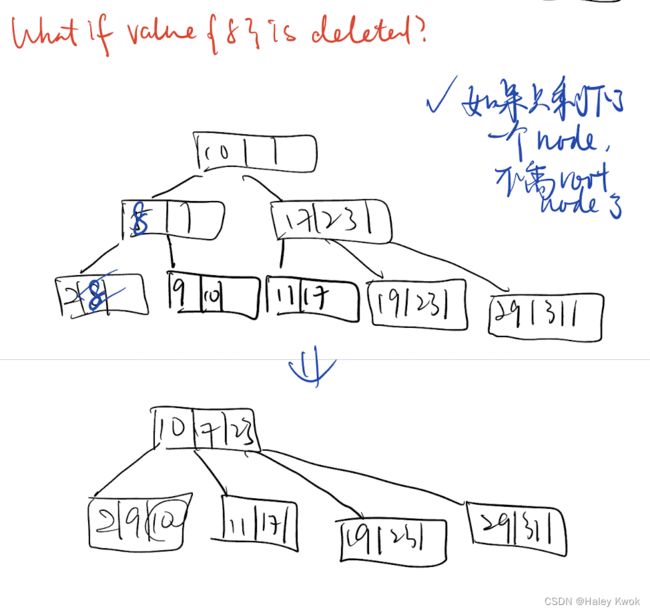

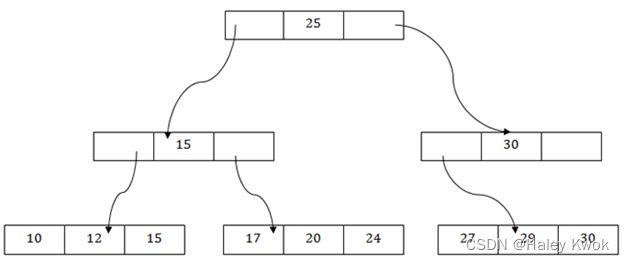

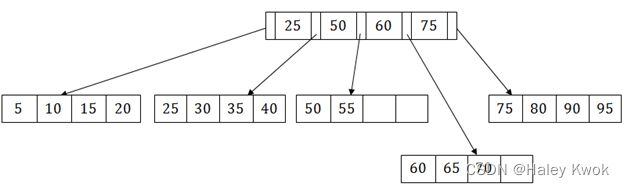

- 5.4.2 Using B Trees and B+ Trees as Dynamic Multi-level Indexes

-

- Insertion

- Delection

- Denormalization

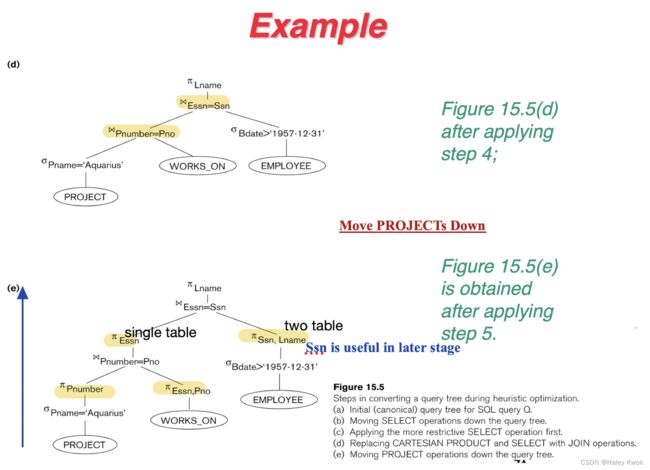

- Chapter 7: Query Processing & Query Optimization

-

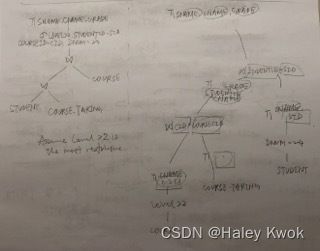

- 1. Translating SQL Queries into Relational Algebra

- 2. Search Methods for Selection

-

- Linear search (brute force)

- Binary search

- Using a primary index or hash key to retrieve a single record

- Using a primary index to retrieve multiple records

- Using a clustering index to retrieve multiple records

- 3. Calculation

-

- a. Nested-loop join

- b. Block nested-loop join

- c. Merge join

- d. Hash join

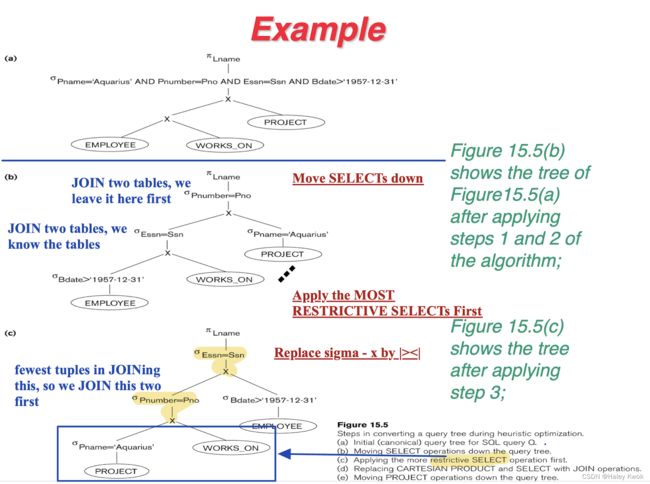

- 4. Heuristic Algebraic Optimization Algorithm

- Chapter 8: Transactions and Concurrency Control

-

- 1. Transaction

-

- 1.1 Property of Transaction

- 1.2 Transaction Status

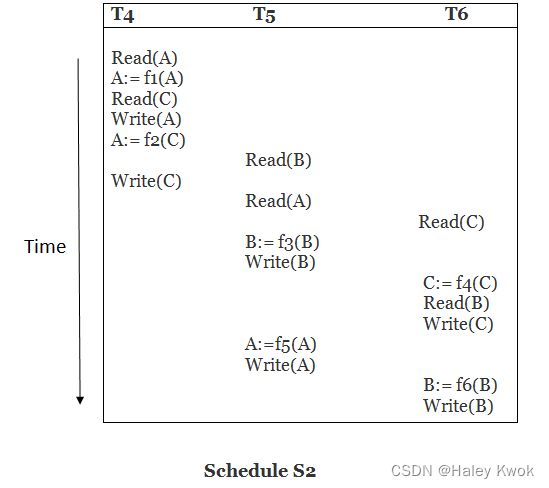

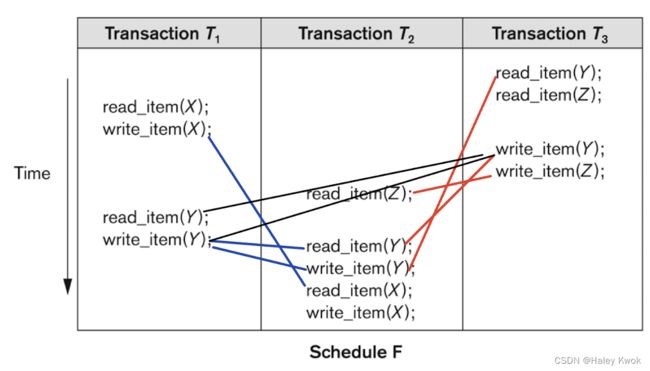

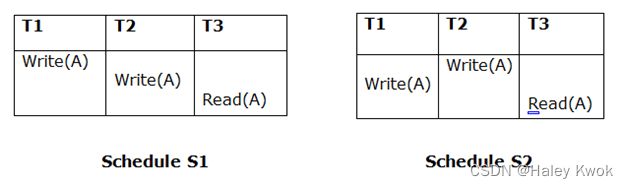

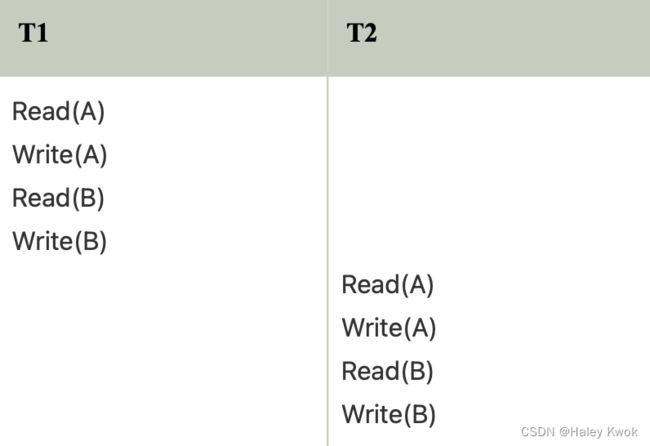

- 2. Schedule

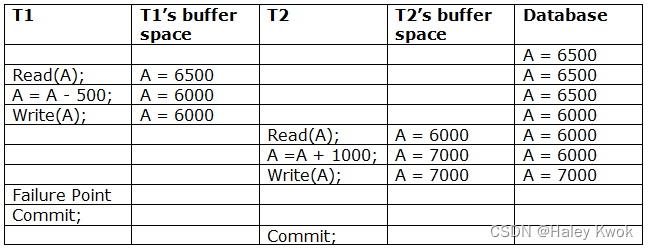

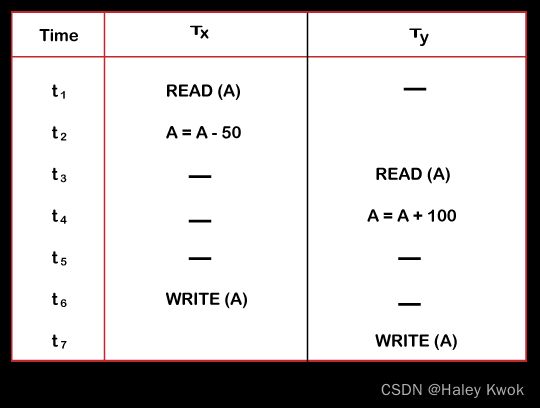

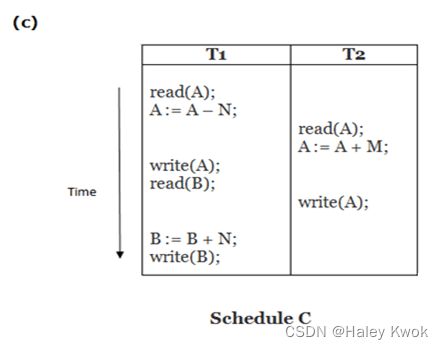

- Example

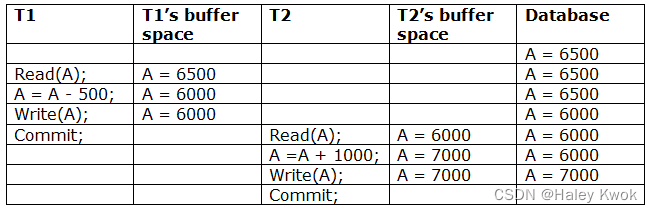

- 3. Schedules Classified on Recoverability

-

- 3.1 Non-recoverable schedule

- 3.2 Recoverable schedule with cascaded rollback

- 3.3 Cascadeless schedule

- 3.4 Strict schedules

- Example

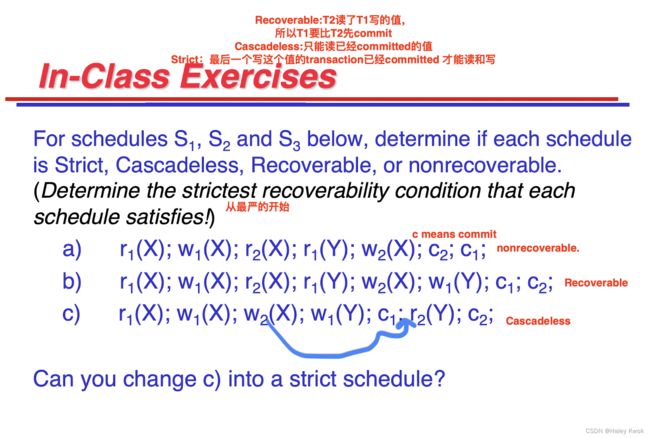

- 4. Schedules Classified on Serializability

-

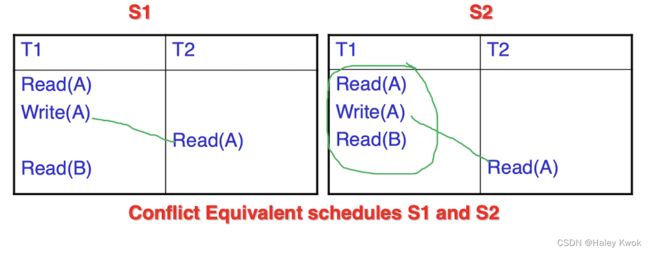

- 4.1 Conflict Serializable Schedule

- 4.2 Conflict Equivalent

- 4.3 Result equivalent

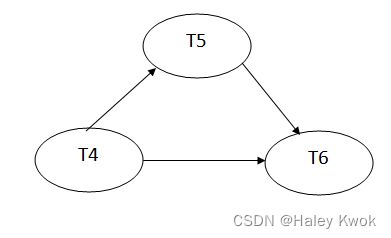

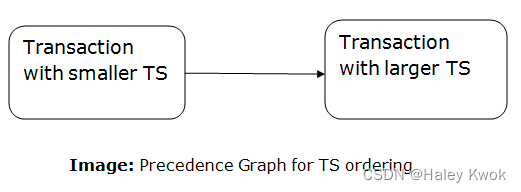

- 5. Test of Serializability

-

- 5.1 Non-serializable schedule

- 5.2 Serializable schedule

- Example

- --

- NOT YET MENTIONED IN TGE LECTURE

- View Serializability

- View Equivalent

- --

- Failure Classification

-

- 1. Transaction failure

- 2. System Crash

- 3. Disk Failure

- Log-Based Recovery

-

- Recovery using Log records

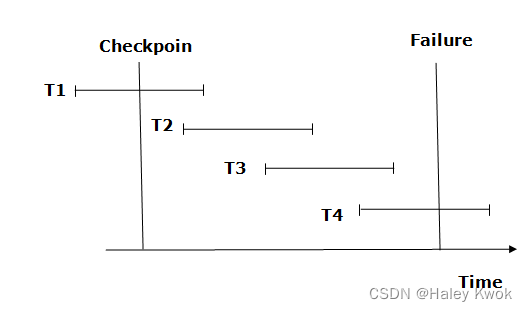

- Checkpoint

-

- Recovery using Checkpoint

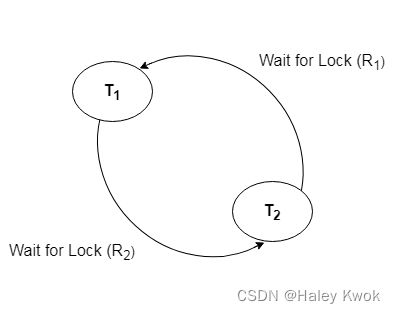

- Deadlock in DBMS

-

- 1. Deadlock Avoidance

- 2. Deadlock Detection

- 3. Wait for Graph

- Deadlock Prevention

-

- 1. Wait-Die scheme

- 2. Wound wait scheme

- Chapter 8: DBMS Concurrency Control

-

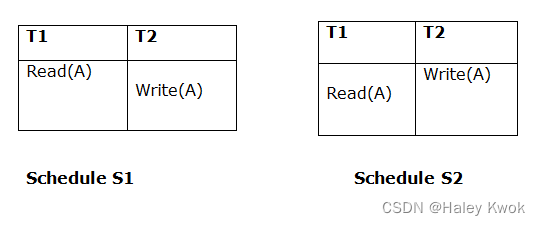

- Concurrent Execution

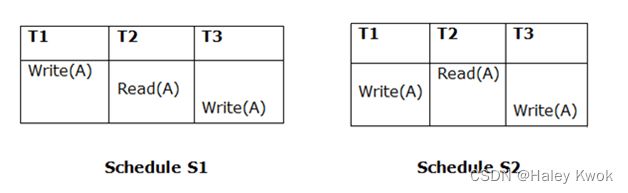

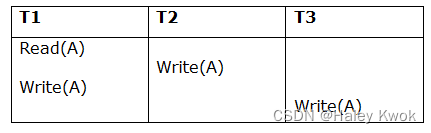

- Problems with Concurrent Execution

-

- 1. Lost Update Problems (W-W Conflict)

- 2. Dirty Read/ Temporary Update Problems (W-R Conflict)

- 3. Unrepeatable Read Problem (W-R Conflict)

- Concurrency Control Protocols

-

- 1. Lock Based Concurrency Control Protocol

-

- 1. Shared lock

- 2. Exclusive lock

-

- 1. Simplistic lock protocol

- 2. Pre-claiming Lock Protocol

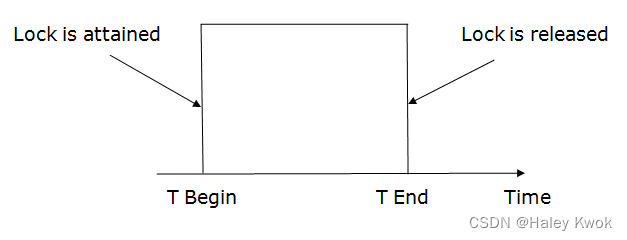

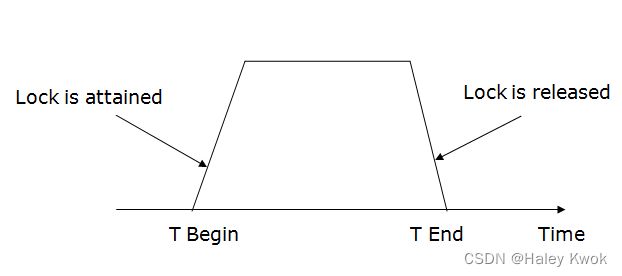

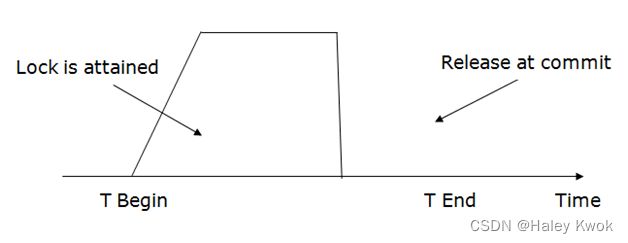

- 3. Two-phase locking (2PL)

- 4. Strict Two-phase locking (Strict-2PL)

- 2. Time Stamp Concurrency Control Protocol

- 3. Validation Based Concurrency Control Protocol

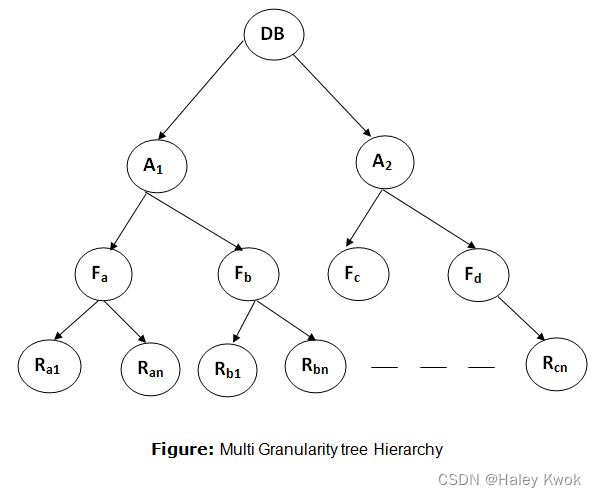

- Multiple Granularity

- Recovery with Concurrent Transaction

- Chapter 9: DDT

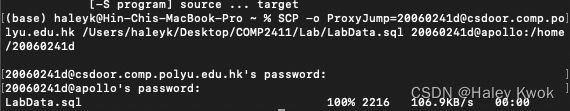

- Lab 1 Table Definition, Manipulation and Simple Queries

-

- Link to SQL

- Import .SQL

- Exercise

- 1.1 Common Data Types

- 1.2 NULL values

- 2.1 Inserting Tuples into Tables

- 2.2 Altering Tables

- 3.1 Select

- Lab 2 Queries, Arithmetic Expressions and Functions

-

- 1.1 Selecting Rows within a Certain Range

- 1.2 Selecting Rows That Match a Value in List

- 1.3 Selecting Rows That Match a Character Pattern

- 2.1 Ordering Rows

- 2.2 Ordering Rows Using Multiple Columns

- 2.3 Ordering Rows By Columns With NULL Values

- 3.1 Arithmetic Expressions

- 3.2 Arithmetic Expressions with ORDER BY

- 3.3 The NULL Function

- 3.4 Column Labels

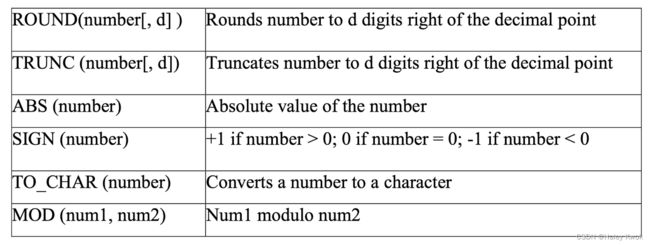

- 4.1 Arithmetic Functions (ROUND)

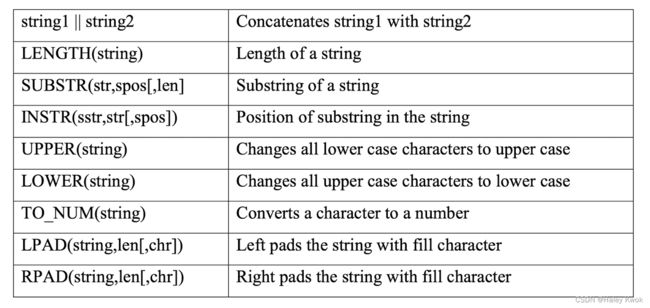

- 5.1 Character String Functions

- Lab 3 Aggregate Functions, 'Group By' and 'Having' Clauses

-

- 1.1 Selecting Summary Information from One Group

- 2.1 Sub-query

- 2.2 DISTINCT

- 3.1 SELECTING SUMMARY INFORMATION FROM GROUPS – GROUP BY

- 3.2 SPECIFYING A SEARCH-CONDITION FOR GROUPS – HAVING

- 3.3 More on Sub-query

- 4.1 4 EFFECTS OF NULL VALUES ON GROUP FUNCTIONS

- 5 FORMATING QUERY RESULTS INTO A REPORT

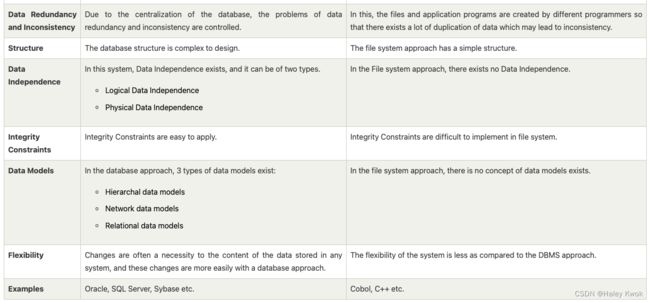

Chapter 1: Database Systems

1. Introduction

The main purpose of the database is to operate a large amount of information by storing, retrieving, and managing data.

There are many dynamic websites on the World Wide Web nowadays which are handled through databases. For example, a model that checks the availability of rooms in a hotel. It is an example of a dynamic website that uses a database.

There are many databases available like MySQL, Sybase, Oracle, MongoDB, Informix, PostgreSQL, SQL Server, etc.

Relational Database

Relational database model has two main terminologies called instance and schema.

The instance is a table with rows or columns

There are following four commonly known properties of a relational model known as ACID properties, where:

A means Atomicity: This ensures the data operation will complete either with success or with failure. It follows the ‘all or nothing’ strategy. For example, a transaction will either be committed or will abort.

C means Consistency: If we perform any operation over the data, its value before and after the operation should be preserved. For example, the account balance before and after the transaction should be correct, i.e., it should remain conserved.

I means Isolation: There can be concurrent users for accessing data at the same time from the database. Thus, isolation between the data should remain isolated. For example, when multiple transactions occur at the same time, one transaction effects should not be visible to the other transactions in the database.

D means Durability: It ensures that once it completes the operation and commits the data, data changes should remain permanent.

Cloud Database

Cloud database facilitates you to store, manage, and retrieve their structured, unstructured data via a cloud platform. This data is accessible over the Internet. Cloud databases are also called a database as service (DBaaS) because they are offered as a managed service.

e.g., DWS, Oracle, MS Server

NoSQL

Not only for database

MongoDB, CouchDB, Cloudant (Document-based)

Memcached, Redis, Coherence (key-value store)

HBase, Big Table, Accumulo (Tabular)

DBMS, Graph Database, RDBMS…

2. Database Architecture (1-tier,2-tier,3-tier)

2-tier Architecture

The 2-Tier architecture is same as basic client-server. In the two-tier architecture, applications on the client end can directly communicate with the database at the server side. For this interaction, API’s like: ODBC, JDBC are used.

The user interfaces and application programs are run on the client-side.

The server side is responsible to provide the functionalities like: query processing and transaction management.

To communicate with the DBMS, client-side application establishes a connection with the server side.

3-tier Architecture

The 3-Tier architecture contains another layer between the client and server. In this architecture, client can’t directly communicate with the server.

The application on the client-end interacts with an application server which further communicates with the database system.

End user has no idea about the existence of the database beyond the application server. The database also has no idea about any other user beyond the application.

The 3-Tier architecture is used in case of large web application.

![]()

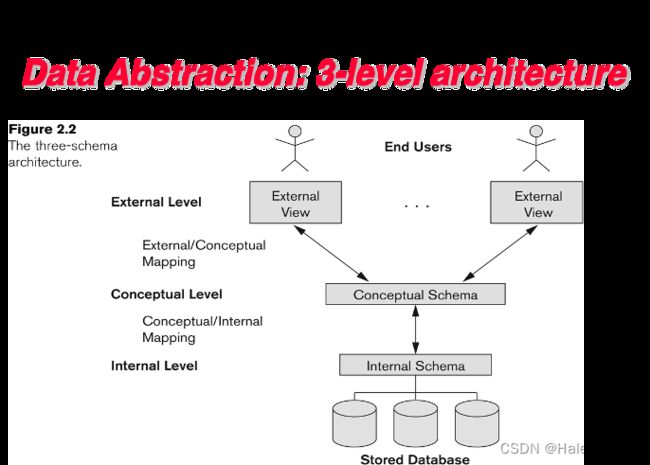

3. 3-level Architecture/Three schema Architecture

The three schema architecture is also called ANSI/SPARC architecture or three-level architecture.

The main objective of three level architecture is to enable multiple users to access the same data with a personalized view while storing the underlying data only once. Thus it separates the user’s view from the physical structure of the database.

- Abstract view of the data

simplify interaction with the system

hide details of how data is stored and manipulated - Levels of abstraction

physical/internal level: data structures; how data are actually stored

conceptual level: schema, what data are actually stored

view/external level: partial schema - the ability to manage persistent data

- primary goal of DBMS: to provide an environment that is convenient, efficient, and robust to use in retrieving & storing data

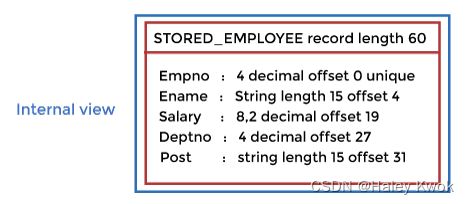

1. Internal

How data store in block

Storage space allocations.

For Example: B-Trees, Hashing etc.

Access paths.

For Example: Specification of primary and secondary keys, indexes, pointers and sequencing.

Data compression and encryption techniques.

Optimization of internal structures.

Representation of stored fields.

2. Conceptual

The conceptual schema describes the design of a database at the conceptual level. Conceptual level is also known as logical level.

The conceptual schema describes the structure of the whole database.

The conceptual level describes what data are to be stored in the database and also describes what relationship exists among those data.

In the conceptual level, internal details such as an implementation of the data structure are hidden.

Programmers and database administrators work at this level.

3. External

At the external level, a database contains several schemas that sometimes called as subschema. The subschema is used to describe the different view of the database.

An external schema is also known as view schema.

Each view schema describes the database part that a particular user group is interested and hides the remaining database from that user group.

The view schema describes the end user interaction with database systems.

![]()

Mapping

- Conceptual/ Internal Mapping

The Conceptual/ Internal Mapping lies between the conceptual level and the internal level. Its role is to define the correspondence between the records and fields of the conceptual level and files and data structures of the internal level.

- External/ Conceptual Mapping

The External/Conceptual Mapping lies between the external level and the Conceptual level. Its role is to define the correspondence between a particular external and the conceptual view.

Data Independence

Data independence can be explained using the three-schema architecture.

Data independence refers characteristic of being able to modify the schema at one level of the database system without altering the schema at the next higher level.

There are two types of data independence:

1. Logical Data Independence

Logical data independence refers characteristic of being able to change the conceptual schema without having to change the external schema.

Logical data independence is used to separate the external level from the conceptual view.

If we do any changes in the conceptual view of the data, then the user view of the data would not be affected.

Logical data independence occurs at the user interface level.

2. Physical Data Independence

Physical data independence can be defined as the capacity to change the internal schema without having to change the conceptual schema.

If we do any changes in the storage size of the database system server, then the Conceptual structure of the database will not be affected.

Physical data independence is used to separate conceptual levels from the internal levels.

Physical data independence occurs at the logical interface level.

4. Data Model

- Object-based logical models (conceptual & view levels)

the Entity-Relationship (ER) model – mid 70’s

the Semantic Data Models – early/mid 80’s

the Object-Oriented data models – late 80’s - Record-based logical models (conceptual & view levels)

the Network and Hierarchical models – 60’s

the Relational model – early 70’s

An ER model is the logical representation of data as objects and relationships among them. These objects are known as entities, and relationship is an association among these entities. This model was designed by Peter Chen and published in 1976 papers. It was widely used in database designing. A set of attributes describe the entities. For example, student_name, student_id describes the ‘student’ entity. A set of the same type of entities is known as an 'Entity set', and the set of the same type of relationships is known as 'relationship set'.

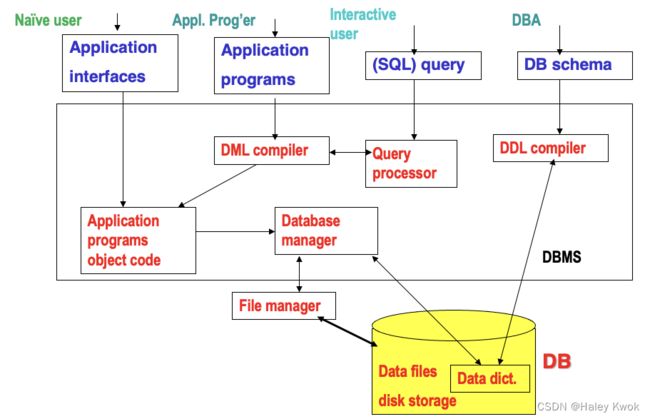

5. DBMS Language

5.1 Data Definition Language (DDL)

- a language for defining DB schema

- Data definition language is used to

store the information of metadatalike the number of tables and schemas, their names, indexes, columns in each table, constraints, etc.

Create: It is used to create objects in the database.

Alter: It is used to alter the structure of the database.

Drop: It is used to delete objects from the database.

Truncate: It is used to remove all records from a table.

Rename: It is used to rename an object.

Comment: It is used to comment on the data dictionary.

These commands are used to update the database schema that’s why they come under Data definition language.

5.2 Data Manipulation Language (DML)

DML stands for Data Manipulation Language. It is used for accessing and manipulating data in a database. It handles user requests.

- an important subset for retrieving data is called Query Language

- two types of DML: procedural (specify “what” & “how”) vs. declarative (just specify “what”)

Select: It is used to retrieve data from a database.

Insert: It is used to insert data into a table.

Update: It is used to update existing data within a table.

Delete: It is used to delete all records from a table.

Merge: It performs UPSERT operation, i.e., insert or update operations.

Call: It is used to call a structured query language or a Java subprogram.

Explain Plan: It has the parameter of explaining data.

Lock Table: It controls concurrency.

6. Data model Schema and Instance

The data which is stored in the database at a particular moment of time is called an instance of the database.

The overall design of a database is called schema.

A database schema is the skeleton structure of the database. It represents the logical view of the entire database.

A schema contains schema objects like table, foreign key, primary key, views, columns, data types, stored procedure, etc.

A database schema can be represented by using the visual diagram. That diagram shows the database objects and relationship with each other.

7. Basic concepts and terminologies

- instance

the collection of data (information) stored in the DB at a particular moment (ie, a snapshot) - scheme/schema

the overall structure (design) of the DB – relatively static

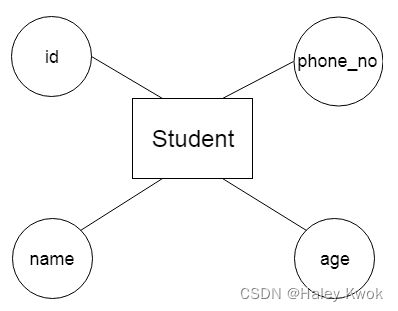

Chapter 2: Entity-Relationship (ER) Model

1. Components of ER diagram

1. Entity

An entity may be any object, class, person or place. In the ER diagram, an entity can be represented as rectangles.

Consider an organization as an example- manager, product, employee, department etc. can be taken as an entity.

1.1.1 Weak Entity

An entity that depends on another entity called a weak entity. The weak entity doesn’t contain any key attribute of its own. The weak entity is represented by a double rectangle.

There is no primary key in weak attribute (non-key attribute)

2. Attribute

The attribute is used to describe the property of an entity. Eclipse is used to represent an attribute.

For example, id, age, contact number, name, etc. can be attributes of a student.

a. Key Attribute

The key attribute is used to represent the main characteristics of an entity. It represents a primary key. The key attribute is represented by an ellipse with the text underlined.

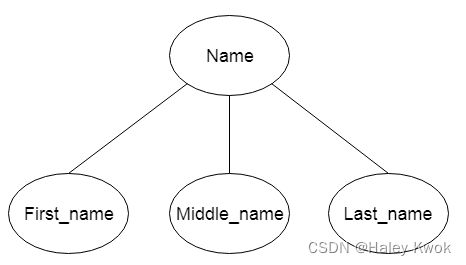

b. Composite Attribute

An attribute that composed of many other attributes is known as a composite attribute. The composite attribute is represented by an ellipse, and those ellipses are connected with an ellipse.

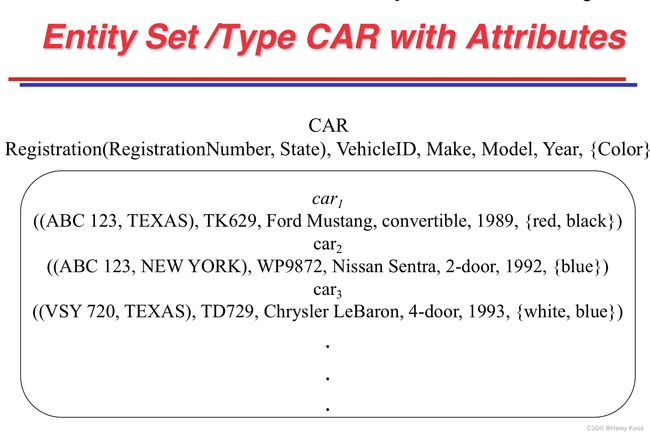

c. Multivalued Attribute

An attribute can have more than one value. These attributes are known as a multivalued attribute. The double oval is used to represent multivalued attribute. For example, a student can have more than one phone number.

SIMPLE: SSN, Sex

COMPOSITE: Address(Apt#, Street, City, State, ZipCode, Country) or Name(FirstName, MiddleName, LastName)

MULTI-VALUED: multiple values; Color of a CAR, denoted as {Color}.

COMPOSITE and MULTI-VALUED may be generally nested.

{PreviousDegrees(College, Year, Degrees, Field)}

d. Derived Attribute

An attribute that can be derived from other attribute is known as a derived attribute. It can be represented by a dashed ellipse. For example, A person’s age changes over time and can be derived from another attribute like Date of birth.

3. Structural Constraint of relationship: Cardinality ratio (of a binary relationship) V.S. (Min, Max)

Relationship V.S. Relationship Sets

- Relationship: related two or more distinct entities with a specific meaning

EMPLOYEE John Smithworks onthe PROJECT ‘solar’, or EMPLOYEE Franklin Wongmanagesthe Research DEPARTMENT. - Relationship Set: Relationships of the same type are grouped together.

theWORKS_ONrelationship type in which EMPLOYEES and PROJECTS participate; theMANAGESrelationship type in which EMPLOYEE and DEPARTMENT.

Both MANAGES and WORKS_ON are binary relationships, both of which can same participate in entity sets/ types.

Cardinality ratio

A relationship is used to describe the relation between entities. Diamond or rhombus is used to represent the relationship.

a. One-to-One Relationship (1:1)

When only one instance of an entity is associated with the relationship, then it is known as one to one relationship.

For example, A female can marry to one male, and a male can marry to one female.

![]()

b. One-to-many relationship (1:N)

When only one instance of the entity on the left, and more than one instance of an entity on the right associates with the relationship then this is known as a one-to-many relationship.

For example, Scientist can invent many inventions, but the invention is done by the only specific scientist.

![]()

c. Many-to-one relationship (N:1)

When more than one instance of the entity on the left, and only one instance of an entity on the right associates with the relationship then it is known as a many-to-one relationship.

For example, Student enrolls for only one course, but a course can have many students.

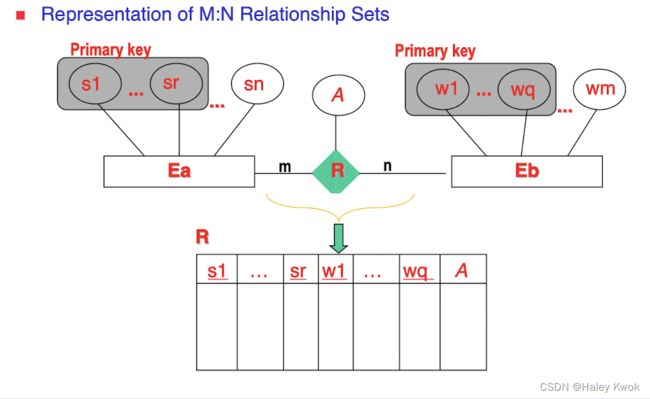

d. Many-to-many relationship (M:N)

When more than one instance of the entity on the left, and more than one instance of an entity on the right associates with the relationship then it is known as a many-to-many relationship.

For example, Employee can assign by many projects and project can have many employees.

Participation constraint (on each participating entity set or type)

Partial participation: min = 0, MAY NOT appears in the rows; by single line

Total participation: min > 0, MUST appears in the rows; by double line (every entity participate)

Minimum cardinality tells whether the participation is partial or total.

If minimum cardinality = 0, then it signifies partial participation.

If minimum cardinality = 1, then it signifies total participation.

Maximum cardinality tells the maximum number of entities that participates in a relationship set.

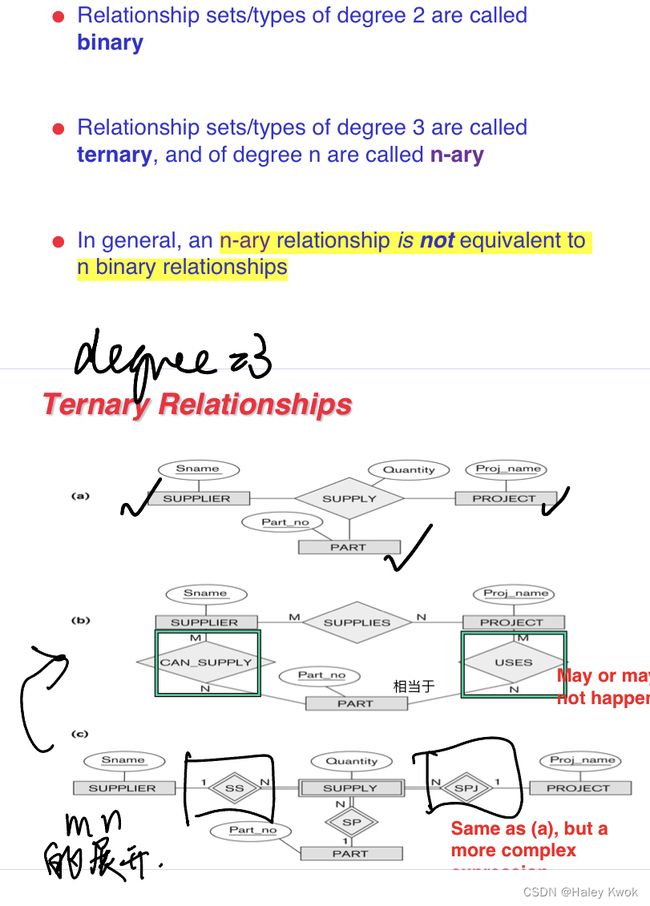

Higher degree of relationship

Directly transformation between cardinality ratio and ( m i n , m a x ) (min, max) (min,max) is not allowed.

(MIN, MAX) can be considered as how many times an entity would appear in the rows.

[Examples]

One-to-one

Many students belong to one department

Student ---- N ----- belongs to ----- 1 ---- Department

Each student belongs to at one department only; Each department has at least one and more than one students

This would be more clear, since some times when there is tertinary relationship, m:n:p is meaningless.

Student ---- ( 1,1 ) ----- belongs to -----( 1,N ) ---- Department

2. Notation of ER diagram

3. ER Design Issues

1) Use of Entity Set vs Attributes

The use of an entity set or attribute depends on the structure of the real-world enterprise that is being modelled and the semantics associated with its attributes. It leads to a mistake when the user use the primary key of an entity set as an attribute of another entity set. Instead, he should use the relationship to do so. Also, the primary key attributes are implicit in the relationship set, but we designate it in the relationship sets.

We should not link ____ directly with other entity’s attribute

2) Use of Entity Set vs. Relationship Sets

It is difficult to examine if an object can be best expressed by an entity set or relationship set. To understand and determine the right use, the user need to designate a relationship set for describing an action that occurs in-between the entities.

If there is a requirement of representing the object as a relationship set, then its better not to mix it with the entity set.

3) Use of Binary vs n-ary Relationship Sets

Generally, the relationships described in the databases are binary relationships. However, non-binary relationships can be represented by several binary relationships. For example, we can create and represent a ternary relationship ‘parent’ that may relate to a child, his father, as well as his mother. Such relationship can also be represented by two binary relationships i.e, mother and father, that may relate to their child.

It is possible to represent a non-binary relationship by a set of distinct binary relationships.

4) Placing Relationship Attributes

The cardinality ratios can become an affective measure in the placement of the relationship attributes. So, it is better to associate the attributes of one-to-one or one-to-many relationship sets with any participating entity sets, instead of any relationship set. The decision of placing the specified attribute as a relationship or entity attribute should possess the charactestics of the real world enterprise that is being modelled.

For example, if there is an entity which can be determined by the combination of participating entity sets, instead of determing it as a separate entity. Such type of attribute must be associated with the many-to-many relationship sets.

Focus on the number of determination

4. Keys

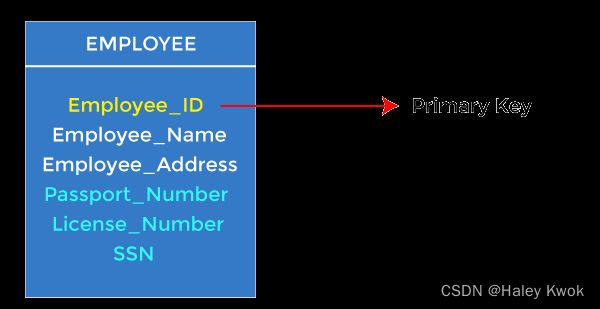

1. Primary Keys

The minimal attribute of candidate key, which is the first key used to identify one and only one instance of an entity uniquely. An entity can contain multiple keys, as we saw in the PERSON table. The key which is most suitable from those lists becomes a primary key.

2. Candidate Keys

A candidate key is an attribute or set of attributes that can uniquely identify a tuple.

Except for the primary key, the remaining attributes are considered a candidate key. The candidate keys are as strong as the primary key.

For example: In the EMPLOYEE table, id is best suited for the primary key. The rest of the attributes, like SSN, Passport_Number, License_Number, etc., are considered a candidate key.

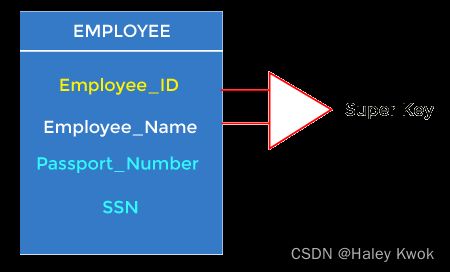

3. Super Keys

Super key is an attribute set that can uniquely identify a tuple. A super key is a superset of a candidate key.

For example: In the above EMPLOYEE table, for(EMPLOEE_ID, EMPLOYEE_NAME), the name of two employees can be the same, but their EMPLYEE_ID can’t be the same. Hence, this combination can also be a key.

The super key would be EMPLOYEE-ID (EMPLOYEE_ID, EMPLOYEE-NAME), etc.

![]()

Super key in the table above:

{EMP_ID}, {EMP_ID, EMP_NAME}, {EMP_ID, EMP_NAME, EMP_ZIP}…so on

Candidate key: {EMP_ID}

To find the candidate keys, you need to see what path leads you to all attributes using the dependencies. So you are correct about A because from A you can reach B that can reach {C, D}. AB can’t be considered a candidate key because it has never been mentioned in your dependencies. Another way to think about it is by remembering that candidate key is the minimum number of attributes that guarantees uniqueness in your rows. But since A is already a candidate key then AB is not minimal set. Since you only have one candidate key that’s A. A is called a key attribute and all other attributes are called non-key attributes. then you decide the number of super keys by 2 to the power of number of non-key attributes (B, C, D). In this scenario you should have 8 super keys. The way to find them is simply by mashing A with all possible combinations of the non-key attributes. So your superkeys would be A, AB, AC, AD, ABC, ABD, ACD, ABCD.

Find the Superkeys

4. Foreign Keys

Foreign keys are the column of the table used to point to the primary key of another table.

Every employee works in a specific department in a company, and employee and department are two different entities. So we can’t store the department’s information in the employee table. That’s why we link these two tables through the primary key of one table.

We add the primary key of the DEPARTMENT table, Department_Id, as a new attribute in the EMPLOYEE table.

In the EMPLOYEE table, Department_Id is the foreign key, and both the tables are related.

3 more keys to go…

superkey

a set of one or more attributes which, taken together, identify uniquely an entity in an entity set

Example: {student ID, Name} identify a student

candidate key

minimal set of attributes which can identify uniquely an entity in an entity set

a special case of superkey (for which no proper subset is a superkey)

Example: student ID identify a student, but Name is not a candidate key (WHY?)

more than candidate key, pick one as primary key

primary key

a candidate key chosen by the DB designer to identify an entity in an entity set

5. Generalization

Generalization is like a bottom-up approach in which two or more entities of lower level combine to form a higher level entity if they have some attributes in common.

In generalization, an entity of a higher level can also combine with the entities of the lower level to form a further higher level entity.

Generalization is more like subclass and superclass system, but the only difference is the approach. Generalization uses the bottom-up approach.

In generalization, entities are combined to form a more generalized entity, i.e., subclasses are combined to make a superclass.

For example, Faculty and Student entities can be generalized and create a higher level entity Person.

6. Specialization

Specialization is a top-down approach, and it is opposite to Generalization. In specialization, one higher level entity can be broken down into two lower level entities.

Specialization is used to identify the subset of an entity set that shares some distinguishing characteristics.

Normally, the superclass is defined first, the subclass and its related attributes are defined next, and relationship set are then added.

For example: In an Employee management system, EMPLOYEE entity can be specialized as TESTER or DEVELOPER based on what role they play in the company.

7. Aggregation

In aggregation, the relation between two entities is treated as a single entity. In aggregation, relationship with its corresponding entities is aggregated into a higher level entity.

For example: Center entity offers the Course entity act as a single entity in the relationship which is in a relationship with another entity visitor. In the real world, if a visitor visits a coaching center then he will never enquiry about the Course only or just about the Center instead he will ask the enquiry about both.

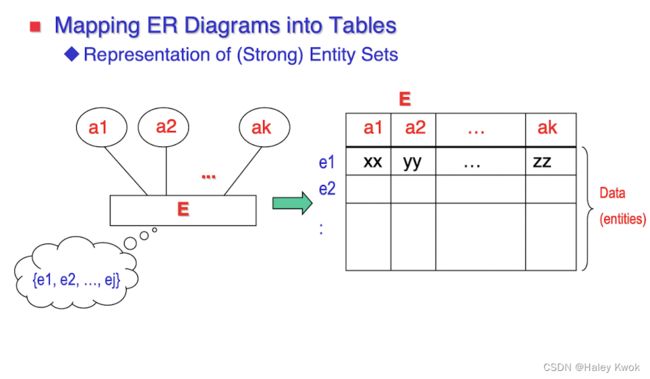

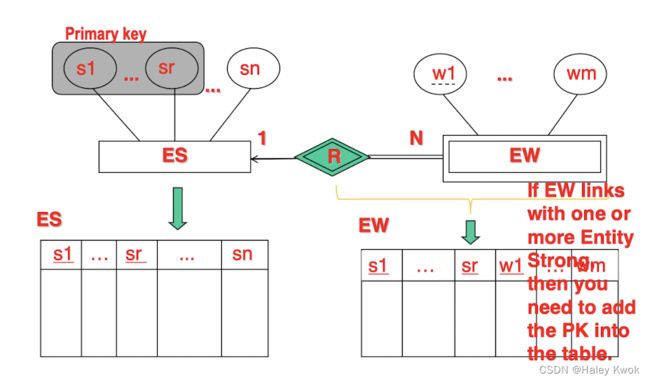

8. Reduction of ER diagram to Table/ ER diagram V.S.

The database can be represented using the notations, and these notations can be reduced to a collection of tables.

In the database, every entity set or relationship set can be represented in tabular form.

In the student table, a hobby is a multivalued attribute. So it is not possible to represent multiple values in a single column of STUDENT table.

Don't have to link the arrow, since it is not the referential constraints

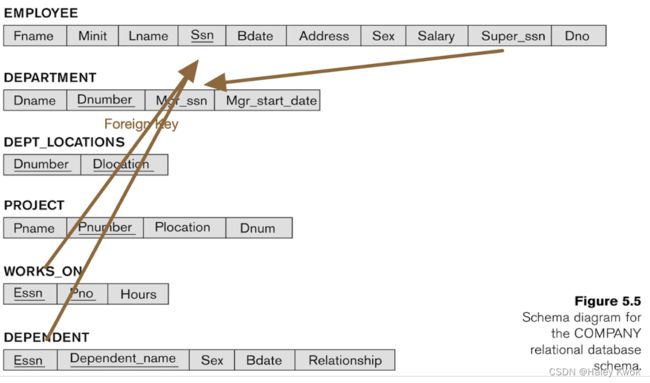

Entity: EMPLOYEE, DEPARTMENT, PROJECT, DEPENDENT

Relationship: DEPT_LOCATIONS, WORKS_ON -> primary keys, and non-key attributes

Relational Schema

Method 1

Method 2

(Primary Key#, Foreign Key*, Attribute, …, …)

9. Relationship of higher degree

The degree of relationship can be defined as the number of occurrences in one entity that is associated with the number of occurrences in another entity.

There is the three degree of relationship:

One-to-one (1:1)

One-to-many (1:M)

Many-to-many (M:N)

- One-to-one

In a one-to-one relationship, one occurrence of an entity relates to only one occurrence in another entity.

A one-to-one relationship rarely exists in practice.

For example: if an employee is allocated a company car then that car can only be driven by that employee.

Therefore, employee and company car have a one-to-one relationship.

- One-to-many

In a one-to-many relationship, one occurrence in an entity relates to many occurrences in another entity.

For example: An employee works in one department, but a department has many employees.

Therefore, department and employee have a one-to-many relationship.

- Many-to-many

In a many-to-many relationship, many occurrences in an entity relate to many occurrences in another entity.

Same as a one-to-one relationship, the many-to-many relationship rarely exists in practice.

For example: At the same time, an employee can work on several projects, and a project has a team of many employees.

Therefore, employee and project have a many-to-many relationship.

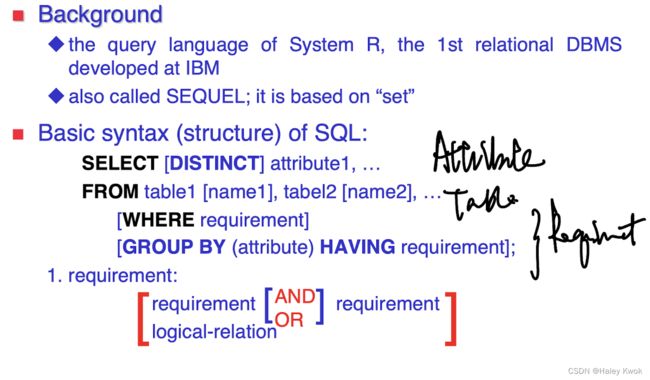

Chapter 3: SQL

Some of The Most Important SQL Commands

• SELECT - extracts data from a database

• UPDATE - updates data in a database

• DELETE - deletes data from a database

• INSERT INTO - inserts new data into a database

• CREATE DATABASE - creates a new database

• ALTER DATABASE - modifies a database

• CREATE TABLE - creates a new table

• ALTER TABLE - modifies a table

• DROP TABLE - deletes a table

• CREATE INDEX - creates an index (search key)

• DROP INDEX - deletes an index

![]()

Examples

More than one attribute has the same name, therefore, you need to specify the name with '.'

You don’t have to use UNION???

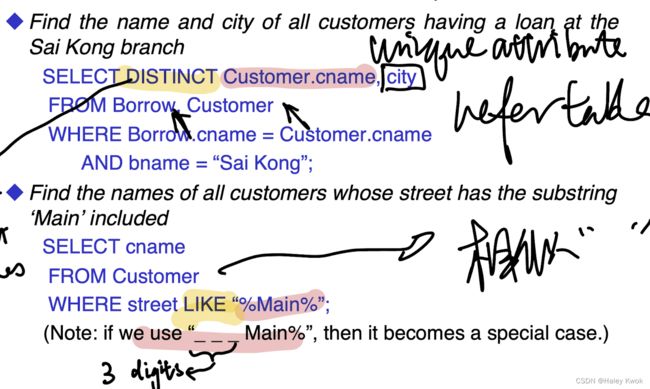

SELECT DISTINCT Customer.cname, city

FROM Customer, Borrow

WHERE bname = 'Sai Kong'

AND Borrow.cname = Customer.cname;

You can see that you don’t have to join anything when you need to select two things, since they can be linked.

SELECT DATA (from bottom to top) SELECT IS THE COLUMN RESULT

SELECT column1, column2, ... -- the columns only exist in the output

FROM table_name;

SELECT CustomerName, City

FROM Customers;

SELECT *

FROM Customers;

1. DISTINCT

In a table, there may be a chance to exist a duplicate value and sometimes we want to retrieve only unique values. In such scenarios, SQL SELECT DISTINCT statement is used.

SELECT DISTINCT column1, column2, ...

FROM table_name;

SELECT DISTINCT home_town

FROM students

2. AND/OR/NOT

SELECT column1, column2, ...

FROM table_name

WHERE condition;

SELECT column1, column2, ...

FROM table_name

WHERE condition1 AND condition2 AND condition3 ...; #CAN BE ,

SELECT * FROM Customers

WHERE Country='Germany' AND City='Berlin';

SELECT column1, column2, ...

FROM table_name

WHERE condition1 OR condition2 OR condition3 ...;

SELECT * FROM Customers

WHERE City='Berlin' OR City='München';

SELECT column1, column2, ...

FROM table_name

WHERE NOT condition;

SELECT * FROM Customers

WHERE Country='Germany' AND (City='Berlin' OR City='München');

3. ORDER BY

SELECT column1, column2, ...

FROM table_name

ORDER BY column1, column2, ... ASC|DESC;

4. IS NULL/ IS NOT NULL

SELECT column_names

FROM table_name

WHERE column_name IS NULL;

SELECT column_names

FROM table_name

WHERE column_name IS NOT NULL;

5. TOP

SELECT TOP number|percent column_name(s)

FROM table_name

WHERE condition;

SELECT TOP 3 * FROM Customers;

SELECT TOP 50 PERCENT * FROM Customers;

6. MIN MAX

SELECT MIN|MAX(column_name)

FROM table_name

WHERE condition;

SELECT MIN(Price) AS SmallestPrice

FROM Products;

SELECT MAX(Price) AS LargestPrice

FROM Products;

7. COUNT/AVG/SUM

SELECT COUNT|AVG|SUM (column_name)

FROM table_name

WHERE condition;

8. LIKE

SELECT column1, column2, ...

FROM table_name

WHERE columnN LIKE pattern;

LIKE Operator Description

WHERE CustomerName LIKE 'a%' Finds any values that start with "a"

WHERE CustomerName LIKE '%a' Finds any values that end with "a"

WHERE CustomerName LIKE '%or%' Finds any values that have "or" in any position

WHERE CustomerName LIKE '_r%' Finds any values that have "r" in the second position

WHERE CustomerName LIKE 'a_%' Finds any values that start with "a" and are at least 2 characters in length

WHERE CustomerName LIKE 'a__%' Finds any values that start with "a" and are at least 3 characters in length

WHERE ContactName LIKE 'a%o' Finds any values that start with "a" and ends with "o"

Symbol Description Example

* Represents zero or more characters bl* finds bl, black, blue, and blob

? Represents a single character h?t finds hot, hat, and hit

[] Represents any single character within the brackets h[oa]t finds hot and hat, but not hit

! Represents any character not in the brackets h[!oa]t finds hit, but not hot and hat

- Represents any single character within the specified range c[a-b]t finds cat and cbt

'#' Represents any single numeric character 2#5 finds 205, 215, 225, 235, 245, 255, 265, 275, 285, and 295

9. IN

SELECT column_name(s)

FROM table_name

WHERE column_name IN (value1, value2, ...);

#or

SELECT column_name(s)

FROM table_name

WHERE column_name IN (SELECT STATEMENT);

10. BETWEEN…AND/ NOT BETWEEN…AND

SELECT column_name(s)

FROM table_name

WHERE column_name BETWEEN value1 AND value2;

SELECT * FROM Products

WHERE Price NOT BETWEEN 10 AND 20;

SELECT * FROM Products

WHERE Price BETWEEN 10 AND 20

AND CategoryID NOT IN (1,2,3);

SELECT * FROM Products

WHERE ProductName BETWEEN 'Carnarvon Tigers' AND 'Mozzarella di Giovanni'

ORDER BY ProductName;

11. AS

Table

SELECT column_name AS alias_name

FROM table_name;

Column

SELECT column_name(s)

FROM table_name AS alias_name;

SELECT CustomerID AS ID, CustomerName AS Customer

FROM Customers;

SELECT CustomerName AS Customer, ContactName AS [Contact Person] #contain space need []

FROM Customers;

SELECT CustomerName, Address + ', ' + PostalCode + ' ' + City + ', ' + Country AS Address

FROM Customers;

12. ALL and ANY

ALL means that the condition will be true only if the operation is true for all values in the range.

is used with SELECT, WHERE and HAVING statements

SELECT column_name(s) FROM table1

UNION|ALL

SELECT column_name(s) FROM table2;

The following SQL statement lists the ProductName if ALL the records in the OrderDetails table has Quantity equal to 10. This will of course return FALSE because the Quantity column has many different values (not only the value of 10):

SELECT ProductName

FROM Products

WHERE ProductID = ALL

(SELECT ProductID

FROM OrderDetails

WHERE Quantity = 10);

ANY means that the condition will be true if the operation is true for any of the values in the range.

The following SQL statement lists the ProductName if it finds ANY records in the OrderDetails table has Quantity larger than 99 (this will return TRUE because the Quantity column has some values larger than 99):

SELECT ProductName

FROM Products

WHERE ProductID = ANY

(SELECT ProductID

FROM OrderDetails

WHERE Quantity = 10);

AGGREGATE

13. GROUP BY

The GROUP BY statement groups rows that have the same values into summary rows, like “find the number of customers in each country”.

The GROUP BY statement is often used with aggregate functions (COUNT(), MAX(), MIN(), SUM(), AVG()) to group the result-set by one or more columns.

Group by statement is used to group the rows that have the same value. Whereas Order by statement sort the result-set either in ascending or in descending order. … In select statement, it is always used before the order by keyword.

SELECT column_name(s)

FROM table_name

WHERE condition

GROUP BY column_name(s)

ORDER BY column_name(s);

SELECT COUNT(CustomerID), Country

FROM Customers

GROUP BY Country

ORDER BY COUNT(CustomerID) DESC;

-- MostRentBook: Correct

SELECT bookName, ISBN, author, bookCategory, SUM(bookRentNum)

FROM BOOK

GROUP BY bookName, ISBN, author, bookCategory

HAVING SUM(bookRentNum) >=

ALL(SELECT SUM(bookRentNum)

FROM book

GROUP BY ISBN);

SELECT bookName, ISBN, author, bookCategory, SUM(bookRentNum)

FROM book

GROUP BY bookName, ISBN, author, bookCategory, ISBN

HAVING (SUM(bookRentNum) >=

ALL(SELECT SUM(B.bookRentNum)

FROM book B

GROUP BY B.ISBN));

-- MostRentBook: Wrong

-- SELECT A.bookName, A.ISBN, A.author, A.bookCategory, SUM(A.bookRentNum)

-- FROM book A

-- GROUP BY A.ISBN

-- HAVING SUM(A.bookRentNum) >= ALL(SELECT SUM(B.bookRentNum) FROM book B GROUP BY B.ISBN)

14. HAVING (with aggregate functions only)

The HAVING clause was added to SQL because the WHERE keyword cannot be used with aggregate functions.

An aggregate function in SQL performs a calculation on multiple values and returns a single value. SQL provides many aggregate functions that include avg, count, sum, min, max, etc.

SELECT column_name(s)

FROM table_name

WHERE condition

GROUP BY column_name(s)

HAVING condition

ORDER BY column_name(s);

SELECT COUNT(CustomerID), Country

FROM Customers

GROUP BY Country

HAVING COUNT(CustomerID) > 5;

- ANY

The ANY and ALL operators allow you to perform a comparison between a single column value and a range of other values.

The ALL operator:

• returns a boolean value as a result

• returns TRUE if ALL of the subquery values meet the condition

• is used with SELECT, WHERE and HAVING statements

ALL means that the condition will be true only if the operation is true for all values in the range.

SELECT column_name(s)

FROM table_name

WHERE column_name operator ANY

(SELECT column_name

FROM table_name

WHERE condition);

The following SQL statement lists the ProductName if it finds ANY records in the OrderDetails table has Quantity equal to 10 (this will return TRUE because the Quantity column has some values of 10):

SELECT ProductName

FROM Products

WHERE ProductID = ANY

(SELECT ProductID

FROM OrderDetails

WHERE Quantity = 10);

The following SQL statement lists the ProductName if ALL the records in the OrderDetails table has Quantity equal to 10. This will of course return FALSE because the Quantity column has many different values (not only the value of 10):

SELECT ProductName

FROM Products

WHERE ProductID = ALL

(SELECT ProductID

FROM OrderDetails

WHERE Quantity = 10);

JOIN

You can use NATURAL LEFT JOIN or LEFT JOIN ON to solve this problem. The difference between them is that NATURAL LEFT JOIN will automatically match the common columns between two tables, while LEFT JOIN ON requires you to specify the common columns.

-- Correct:

SELECT Person.FirstName, Person.LastName, Address.City, Address.State

FROM Person NATURAL LEFT JOIN Address;

SELECT firstName, lastName, city, state

FROM Person p LEFT JOIN Address a

ON p.personId = a.personId;

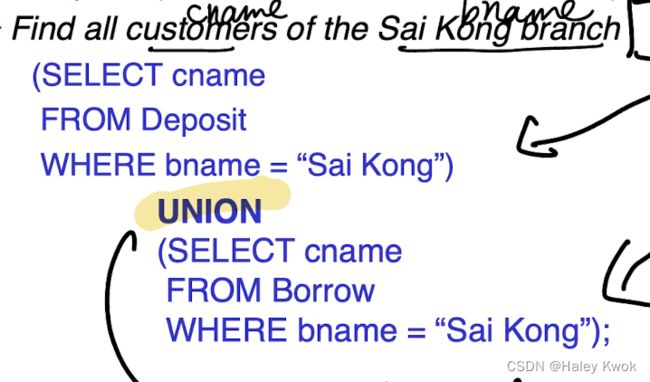

UNION

The UNION operator is used to combine the result-set of two or more SELECT statements.

Every SELECT statement within UNION must have the same number of columns

The columns must also have similar data types

The columns in every SELECT statement must also be in the same order

SELECT employee_id

FROM Employees

WHERE employee_id NOT IN (SELECT employee_id

FROM Salaries)

UNION

SELECT employee_id

FROM Salaries

WHERE employee_id NOT IN (SELECT employee_id

FROM Employees)

ORDER BY employee_id;

INSERT INTO DATA (from top to bottom)

INSERT INTO table_name (column1, column2, column3, ...)

VALUES (value1, value2, value3, ...);

UPDATE

UPDATE table_name

SET column1 = value1, column2 = value2, ...

WHERE condition;

***WARNING ALL DATA WOULD BE UPDATED AS Juan

UPDATE Customers

SET ContactName='Juan';

DELETE

1.

DELETE FROM table_name

WHERE condition;

2.

DELETE FROM table_name;

-TABLE

• (INNER) JOIN: Returns records that have matching values in both tables

• LEFT (OUTER) JOIN: Returns all records from the left table, and the matched records from the right table

• RIGHT (OUTER) JOIN: Returns all records from the right table, and the matched records from the left table

• FULL (OUTER) JOIN: Returns all records when there is a match in either left or right table

INNER\RIGHT\LEFT JOIN

SELECT column_name(s)

FROM table1

INNER JOIN table2

ON table1.column_name = table2.column_name;

SELECT Orders.OrderID, Customers.CustomerName, Orders.OrderDate

FROM Orders

INNER JOIN Customers ON Orders.CustomerID=Customers.CustomerID;

SELECT Orders.OrderID, Customers.CustomerName, Shippers.ShipperName

FROM ((Orders

INNER JOIN Customers ON Orders.CustomerID = Customers.CustomerID)

INNER JOIN Shippers ON Orders.ShipperID = Shippers.ShipperID);

FULL JOIN

SELECT column_name(s)

FROM table1

FULL OUTER JOIN table2

ON table1.column_name = table2.column_name

WHERE condition;

SELECT Customers.CustomerName, Orders.OrderID

FROM Customers

FULL OUTER JOIN Orders ON Customers.CustomerID=Orders.CustomerID

ORDER BY Customers.CustomerName;

SELECT

SELECT *

INTO newtable [IN externaldb]

FROM oldtable

WHERE condition;

INSERT INTO

INSERT INTO table2

SELECT * FROM table1

WHERE condition;

INSERT INTO Customers (CustomerName, City, Country)

SELECT SupplierName, City, Country FROM Suppliers;

CREATE

CREATE TABLE table_name (

column1 datatype,

column2 datatype,

column3 datatype,

....

);

CREATE TABLE Persons (

PersonID int,

LastName varchar(255),

FirstName varchar(255),

Address varchar(255),

City varchar(255)

);

CREATE TABLE Persons (

ID int NOT NULL,

LastName varchar(255) NOT NULL,

FirstName varchar(255) NOT NULL,

Age int

);

CREATE TABLE Persons (

ID int NOT NULL UNIQUE,

LastName varchar(255) NOT NULL,

FirstName varchar(255),

Age int

);

CREATE TABLE Persons (

ID int NOT NULL PRIMARY KEY,

LastName varchar(255) NOT NULL,

FirstName varchar(255),

Age int

);

DROP

DROP TABLE table_name;

ALTER TABLE – ADD/DROP Column

ALTER TABLE table_name

ADD column_name datatype;

ALTER TABLE Customers

ADD Email varchar(255);

ALTER TABLE table_name

DROP COLUMN column_name;

ALTER TABLE Customers

DROP COLUMN Email;

ALTER TABLE Persons

MODIFY Age int NOT NULL;

-DATABASE

CREATE DATABASE databasename;

DROP DATABASE databasename;

BACKUP DATABASE databasename

TO DISK = 'filepath';

CASE

SELECT OrderID, Quantity,

CASE

WHEN Quantity > 30 THEN 'The quantity is greater than 30'

WHEN Quantity = 30 THEN 'The quantity is 30'

ELSE 'The quantity is under 30'

END AS QuantityText

FROM OrderDetails;

COMMENTS

--SELECT * FROM Customers;

SELECT * FROM Products;

Multi-line comments start with /* and end with */.

Any text between /* and */ will be ignored.

The following example uses a multi-line comment as an explanation:

/*Select all the columns

of all the records

in the Customers table:*/

SELECT * FROM Customers;

Lab Java

javac -cp ojdbc8.jar simpleApplication.java

java -cp ojdbc8.jar:. simpleApplication

Enter your username: "20060241d"

Enter your password: iyrhpast

2/11 Discussion

Memory consumption 100, 1000

The database will process the data faster than the processing side

TO_DATE

Can’t update sql without modify

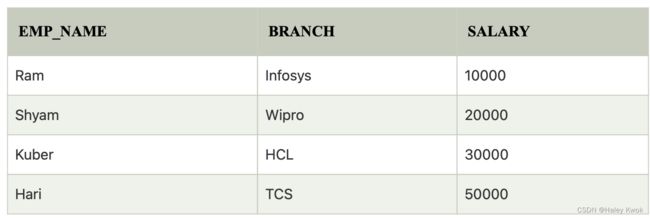

Chapter 4: Relational Model Concept

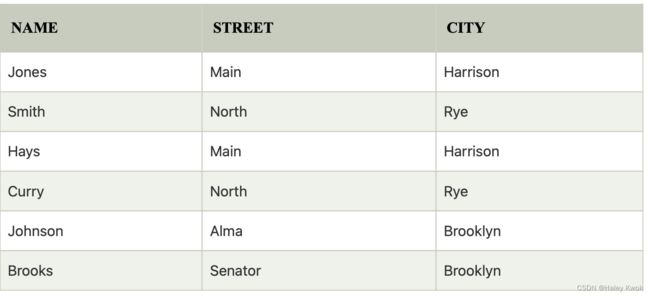

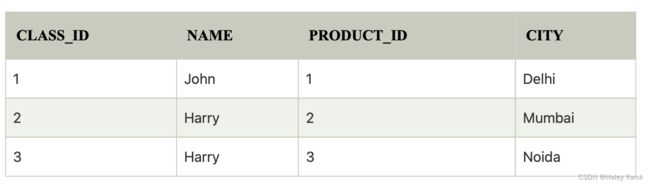

Relational model can represent as a table with columns and rows. Each row is known as a tuple. Each table of the column has a name or attribute.

Domain: It contains a set of atomic values that an attribute can take.

Attribute: It contains the name of a column in a particular table. Each attribute Ai must have a domain, dom(Ai)

Relational instance: In the relational database system, the relational instance is represented by a finite set of tuples. Relation instances do not have duplicate tuples.

Relational schema: A relational schema contains the name of the relation and name of all columns or attributes.

Relational key: In the relational key, each row has one or more attributes. It can identify the row in the relation uniquely.

In the given table, NAME, ROLL_NO, PHONE_NO, ADDRESS, and AGE are the attributes.

The instance of schema STUDENT has 5 tuples.

t3 =

Properties of Relations

Name of the relation is distinct from all other relations.

Each relation cell contains exactly one atomic (single) value

Each attribute contains a distinct name

Attribute domain has no significance

tuple has no duplicate value

Order of tuple can have a different sequence

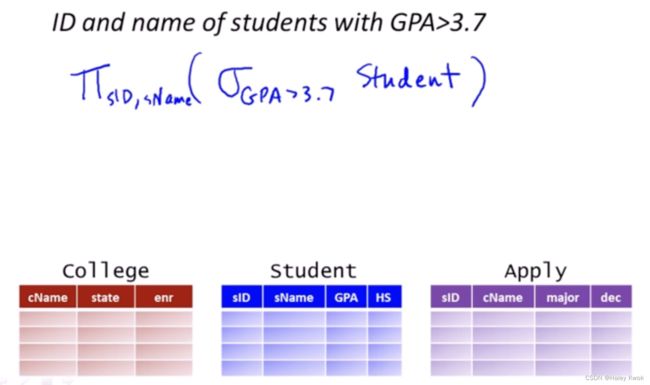

1. Relational Algebra

1. Select Operation:

The select operation selects tuples that satisfy a given predicate.

It is denoted by sigma (σ).

Notation: σ p(r )

WHERE

where: σ is used for selection prediction

r is used for relation

p is used as a propositional logic formula which may use connectors like: AND OR and NOT. These relational can use as relational operators like =, ≠, ≥, <, >, ≤.

[Example]

LOAN Relation

2. Project Operation

This operation shows the list of those attributes that we wish to appear in the result. Rest of the attributes are eliminated from the table.

It is denoted by ∏.

Notation: ∏ A1, A2, An (r )

FROM

Where A1, A2, A3 is used as an attribute name of relation r.

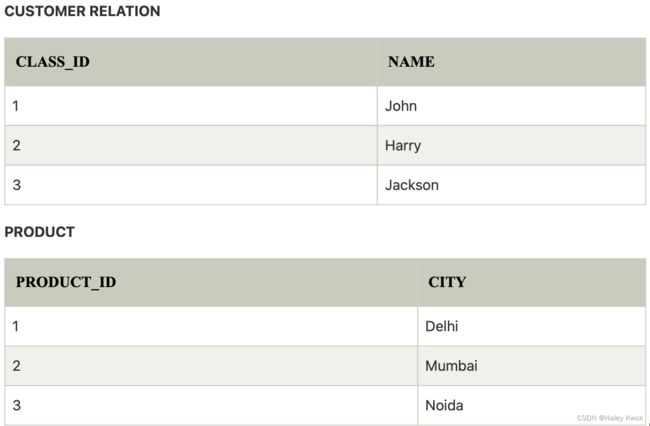

3. Union Operation (or):

Suppose there are two tuples R and S. The union operation contains all the tuples that are either in R or S or both in R & S.

It eliminates the duplicate tuples. It is denoted by ∪.

Notation: R ∪ S

A union operation must hold the following condition:

R and S must have the attribute of the same number.

Duplicate tuples are eliminated automatically.

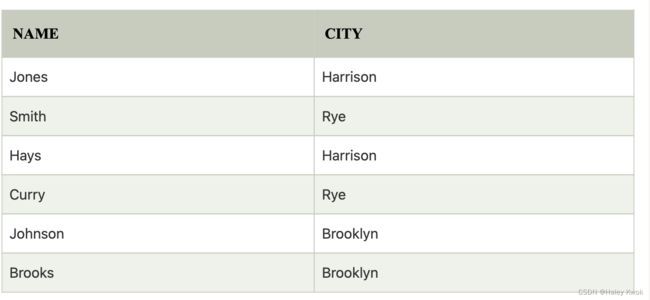

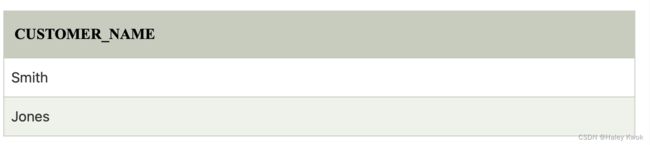

[Example]

DEPOSITOR RELATION

BORROW RELATION

Input:

∏ CUSTOMER_NAME (BORROW) ∪ ∏ CUSTOMER_NAME (DEPOSITOR)

Output:

4. Set Intersection (and):

Suppose there are two tuples R and S. The set intersection operation contains all tuples that are in both R & S.

It is denoted by intersection ∩.

Notation: R ∩ S

Input:

∏ CUSTOMER_NAME (BORROW) ∩ ∏ CUSTOMER_NAME (DEPOSITOR)

Output:

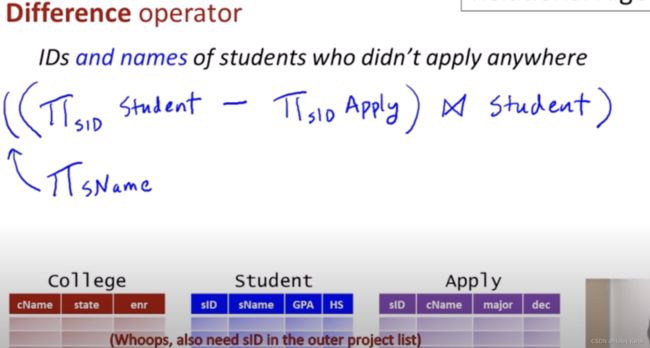

5. Set Difference:

Suppose there are two tuples R and S. The set intersection operation contains all tuples that are in R but not in S.

It is denoted by intersection minus (-).

Notation: R - S

[Example]

Using the above DEPOSITOR table and BORROW table

Input:

∏ CUSTOMER_NAME (BORROW) - ∏ CUSTOMER_NAME (DEPOSITOR)

Output:

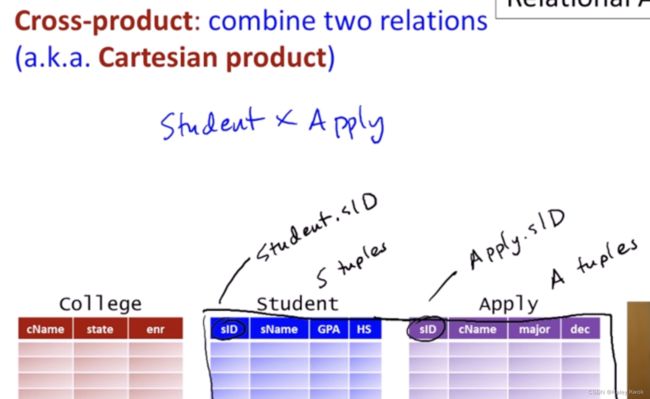

6. Cartesian product |><|

The Cartesian product is used to combine each row in one table with each row in the other table. It is also known as a cross product.

It is denoted by X.

Notation: E X D

![]()

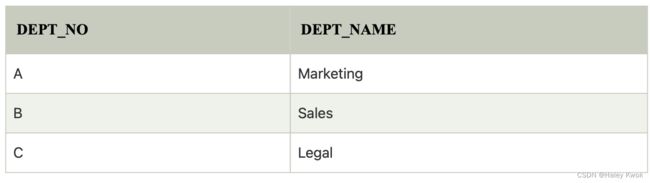

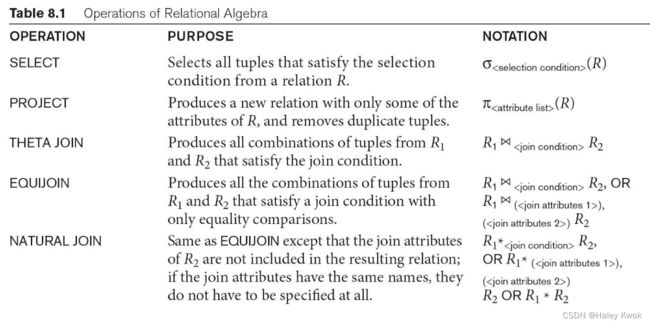

2. Join Operations:

A Join operation combines related tuples from different relations, if and only if a given join condition is satisfied. It is denoted by ⋈.

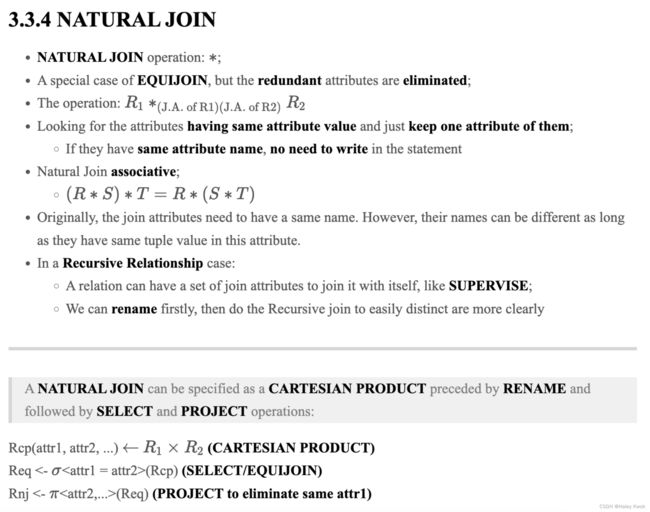

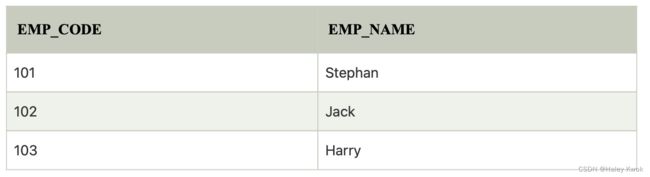

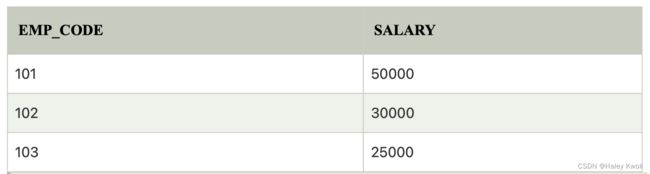

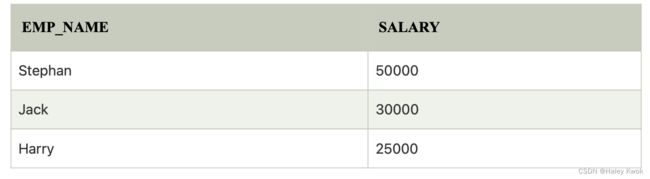

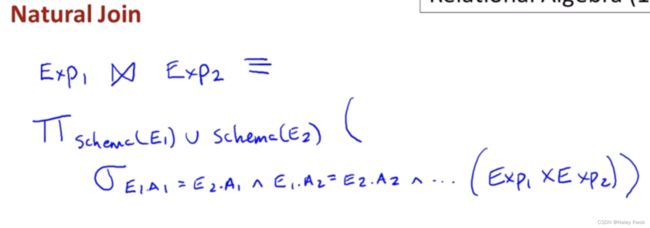

1. Natural Join

A natural join is the set of tuples of all combinations in R and S that are equal on their common attribute names.

It is denoted by ⋈.

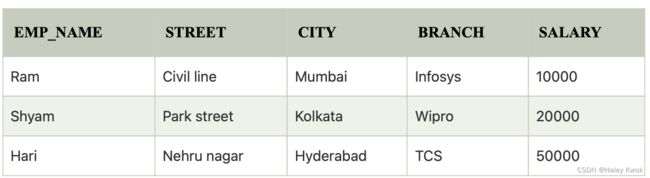

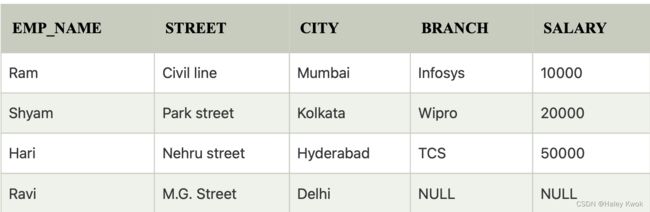

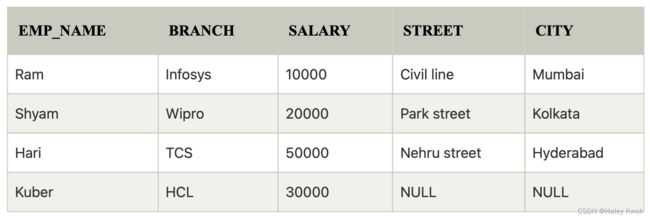

Example: Let’s use the above EMPLOYEE table and SALARY table:

2. Outer Join:

The outer join operation is an extension of the join operation. It is used to deal with missing information.

Example:

EMPLOYEE

FACT_WORKERS

An outer join is basically of three types:

Left outer join

Right outer join

Full outer join

a. Left outer join:

Left outer join contains the set of tuples of all combinations in R and S that are equal on their common attribute names.

In the left outer join, tuples in R have no matching tuples in S.

It is denoted by ⟕.

Example: Using the above EMPLOYEE table and FACT_WORKERS table

b. Right outer join:

EMPLOYEE ⟖ FACT_WORKERS

c. Full outer join:

In full outer join, tuples in R that have no matching tuples in S and tuples in S that have no matching tuples in R in their common attribute name

EMPLOYEE ⟗ FACT_WORKERS

3. Equi join:

It is also known as an inner join. It is the most common join. It is based on matched data as per the equality condition. The equi join uses the comparison operator(=).

Summary

PRODUCT: distinct

EQUIJOIN: LIVES r1, LIVES r2, MANAGES m

NATURAL JOIN: LIVES, MANAGES

THINK FROM THE CONDITION, AND FINALLY FOR THE SELECT

You don’t have to = tow tables.attribute anymore!

Chapter 5: Integrity Constraints (ICs) and Normal Forms

Integrity constraints are a set of rules. It is used to maintain the quality of information.

Integrity constraints ensure that the data insertion, updating, and other processes have to be performed in such a way that data integrity is not affected.

Thus, integrity constraint is used to guard against accidental damage to the database.

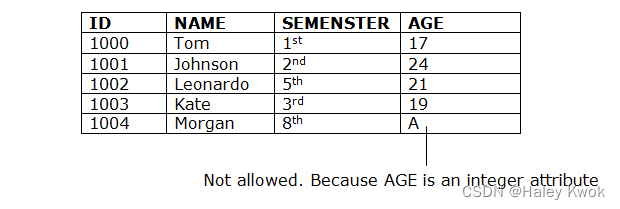

1. Domain Constraint

Domain constraints can be defined as the definition of a valid set of values for an attribute.

The data type of domain includes string, character, integer, time, date, currency, etc. The value of the attribute must be available in the corresponding domain. It may also prohibit ‘null’ values of particular attributes.

Number(6,2) -> 6 digits in total; and 2 digits after the decimal points

1234.56

2. Entity Integrity Constraint

The entity integrity constraint states that primary key value can't be null.

This is because the primary key value is used to identify individual rows in relation and if the primary key has a null value, then we can’t identify those rows.

A table can contain a null value other than the primary key field.

3. Referential Integrity Constraint

A referential integrity constraint is specified between two tables.

In the Referential integrity constraints, if a foreign key in Table 1 refers to the Primary Key of Table 2, then every value of the Foreign Key in Table 1 must be null or be available in Table 2.

4. Key Constraint

Keys are the entity set that is used to identify an entity within its entity set uniquely.

An entity set can have multiple keys, but out of which one key will be the primary key. A primary key can contain a unique and null value in the relational table.

Functional Dependency and Normal Forms

Functional Dependency (FD) is a particular kind of constraint that is on the set of “legal” relations n Formal definition:

The functional dependency is a relationship that exists between two attributes. It typically exists between the primary key and non-key attribute within a table.

Actually it implies that if you can find the non-key attribute, it may be easier for you to find out the primary key and candidate keys.

X → Y

The left side of FD is known as a determinant, the right side of the production is known as a dependent.

Assume we have an employee table with attributes: Emp_Id, Emp_Name, Emp_Address.

Here Emp_Id attribute can uniquely identify the Emp_Name attribute of employee table because if we know the Emp_Id, we can tell that employee name associated with it. We can say that Emp_Name is functionally dependent on Emp_Id.

Functional dependency can be written as:

Emp_Id → Emp_Name

Types of Functional Dependency

1. Trivial functional dependency

A → B has trivial functional dependency if B is a subset of A.

The following dependencies are also trivial like: A → A, B → B, AB -> A,…

2. Non-trivial functional dependency

A → B has a non-trivial functional dependency if B is not a subset of A.

When A intersection B is NULL, then A → B is called as complete non-trivial.

Inference Rules for FDs

1. Reflexive Rule (IR1)

In the reflexive rule, if Y is a subset of X, then X determines Y.

superkey and candidate key

If X ⊇ Y then X → Y

Example:

X = {a, b, c, d, e}

Y = {a, b, c}

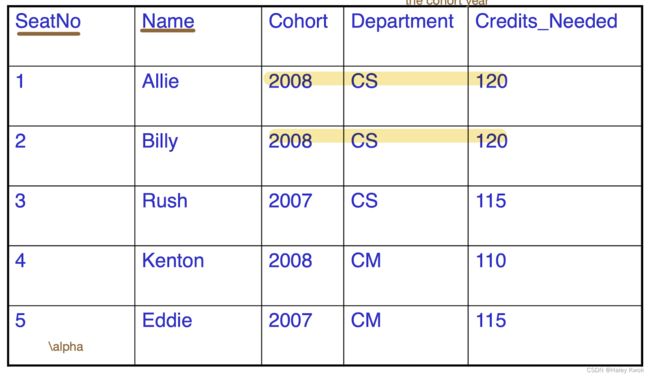

α \alpha α -> β \beta β

The Cohort AND Department -> Credits_Needed

SeatNo/ Name -> …

2. Augmentation Rule (IR2)

Commonly being ignored, but very important.

The augmentation is also called as a partial dependency. In augmentation, if X determines Y, then XZ determines YZ for any Z.

If X → Y then XZ → YZ (XZ stands for X U Z)

Example:

For R(ABCD), if A → B then AC → BC

3. Transitive Rule (IR3)

In the transitive rule, if X determines Y and Y determine Z, then X must also determine Z.

If X → Y and Y → Z then X → Z

4. Union Rule (IR4)

Union rule says, if X determines Y and X determines Z, then X must also determine Y and Z.

If X → Y and X → Z then X → YZ

Proof:

- X → Y (given)

- X → Z (given)

- X → XY (using IR2 on 1 by augmentation with X. Where XX = X)

- XY → YZ (using IR2 on 2 by augmentation with Y)

- X → YZ (using IR3 on 3 and 4)

5. Decomposition Rule (IR5)

Decomposition rule is also known as project rule. It is the reverse of union rule.

This Rule says, if X determines Y and Z, then X determines Y and X determines Z separately.

If X → YZ then X → Y and X → Z

Proof:

- X → YZ (given)

- YZ → Y (using IR1 Rule)

- X → Y (using IR3 on 1 and 2)

6. Pseudo transitive Rule (IR6)

In Pseudo transitive Rule, if X determines Y and YZ determines W, then XZ determines W.

If X → Y and YZ → W then XZ → W

Proof:

- X → Y (given)

- WY → Z (given)

- WX → WY (using IR2 on 1 by augmenting with W)

- WX → Z (using IR3 on 3 and 2)

Data modification anomalies can be categorized into three types:

Insertion Anomaly: Insertion Anomaly refers to when one cannot insert a new tuple into a relationship due to lack of data.

Deletion Anomaly: The delete anomaly refers to the situation where the deletion of data results in the unintended loss of some other important data.

Updatation Anomaly: The update anomaly is when an update of a single data value requires multiple rows of data to be updated.

Repetition, potential consistency, inability to represent, and loss of data

Types of Normal Forms:

Normalization works through a series of stages called Normal forms. The normal forms apply to individual relations. The relation is said to be in particular normal form if it satisfies constraints.

Normal Form Description

1NF

A relation is in 1NF if it contains an

atomic value. It states that an attribute of a table cannot hold multiple values. It must hold onlysingle-valued attribute.

Relation EMPLOYEE is not in 1NF because of multi-valued attribute EMP_PHONE.

S# P# -> Qty

S# -> Status, City

City -> Status

, while Status and City is the non-key attribute, which forms partial relationship with the Primary key S#

Problems

- Insert Anomalies

Inability to represent certain information:

Eg, cannot enter “Supplier and City” information until Supplier supplies at least one part - Delete Anomalies

Deleting the “only tuple” for a supplier will destroy all the information about that supplier - Update Anomalies

“S# and City” could be redundantly represented for each P#, which may cause potential inconsistency when updating a tuple

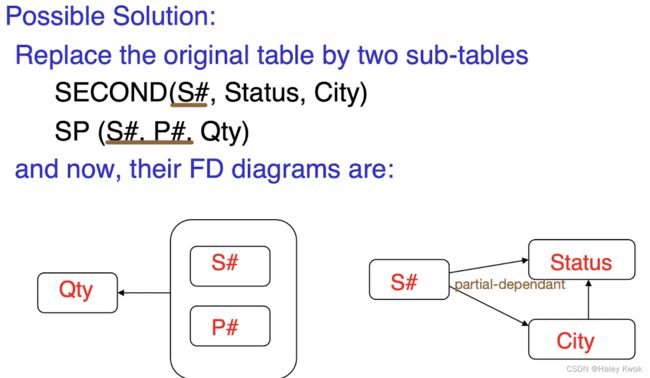

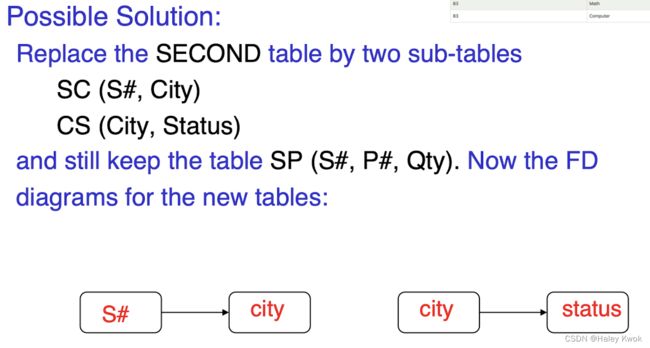

Solution

2NF

所有非关键字字段都由关键字段决定

不能部分依赖,就是说当一个表有组合主键时,其他非主键的字段必须完全依赖于主键。

No non-prime attribute is dependent on the proper subset of any candidate key of table

[Example]

for R1, F1 = {I->C, I->D, CD->N}

I is the only CK for RL, so no partial dependency in R1, hence satisfying 2NF.

but I->CD-> N is a transitive dependency, so violates 3NF, so R1 is 2NF

In the given table, non-prime attribute (Non-CK) TEACHER_AGE is dependent on TEACHER_ID which is a proper subset of a candidate key. That’s why it violates the rule for 2NF.

Update Problems: 1000 supplier live in Hong Kong and Hong Kong status. This pair information will be repeated for each of this 1000 supplier.

3NF (Good Design!)

Transitive functional dependencyof non-prime (non-CK) attribute on any super key should be removed.

[Example]

R(A,B,C,D,E)

F{A->B, BC->E, and ED->A}

CK: ABC, BCD, CDE

R is in the 3NF since all the attributes of R are ONLY key attributes.

所有非关键字字段都仅由关键字段决定

![]()

Super key in the table above:

{EMP_ID}, {EMP_ID, EMP_NAME}, {EMP_ID, EMP_NAME, EMP_ZIP}…so on

Candidate key: {EMP_ID}

Non-prime attributes: In the given table, all attributes except EMP_ID (CK) are non-prime.

Here, EMP_STATE & EMP_CITY dependent on EMP_ZIP and EMP_ZIP dependent on EMP_ID. The non-prime attributes (EMP_STATE, EMP_CITY) transitively dependent on super key(EMP_ID). It violates the rule of third normal form. 2NF ONLY

F{A,B,C,D,E}

A->C

C->DE

A->C->DE

That’s why we need to move the EMP_CITY and EMP_STATE to the new EMP_ZIP as a Primary key.

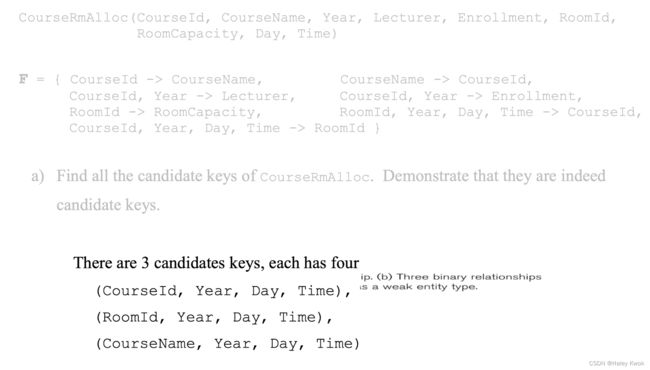

BCNF

A stronger definition of 3NF is known as Boyce Codd’s normal form. A relation R is in BCNF if and only if

every determinant (left-hand side of an FD) is a candidate key.

multiple candidate keys, and

these candidate keys are composite ones, and

they overlap on some common attribute

Chapter 6: File Organization and Indexing

File Organization

Data stored as magnetised areas on magnetic disk surfaces.

The block size B is fixed for each system. Typical block sizes range from B=512 bytes to B=4096 bytes.

Whole blocks are transferred between disk and main memory for processing.

Reading or writing a disk block is time consuming because of the seek time s and rotational delay (latency) rd

Double buffering can be used to speed up the transfer of contiguous disk blocks

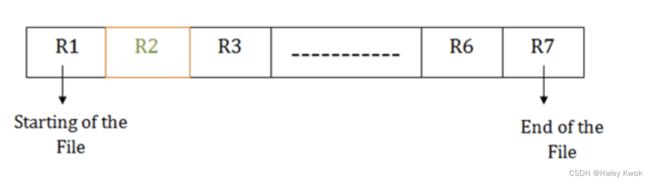

A file is a sequence of records, where each record is a collection of data values.

Records are stored in disk blocks. The blocking factor bfr for a file is the (average) number of file records stored in a disk block.

A file can have fixed-length records or variable-length records.

File records can be unspanned (no record can span two blocks) -> a file with fixed-length records

or

spanned (a record can be stored in more than one block) -> a file with variable-length records

The physical disk blocks that are allocated to hold the records of a file can be contiguous, linked, or indexed

1. Unordered File

1.1 Pile File Method:

It is a quite simple method.

- To search for a record, a

linear searchthrough the file records is necessary. This requires reading and searching half the file blocks on the average, and is hence quite expensive. - Reading the records in order of a particular field requires sorting the file records.

- In this method, we store the record in a sequence, i.e., one after another.

- In case of updating or deleting of any record, the record will be searched in the memory blocks. When it is found, then it will be marked for deleting, and the new record is inserted.

- Here, the record will be inserted in the order in which they are inserted into tables. New records are inserted at the end of the file. Record insertion is quite efficient.

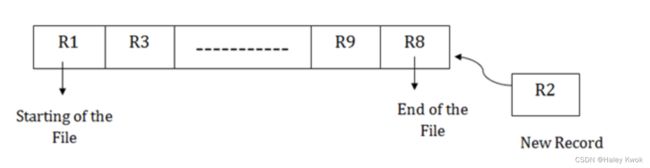

[Example]

Insertion of the new record:

Suppose we have four records R1, R3 and so on upto R9 and R8 in a sequence. Hence, records are nothing but a row in the table. Suppose we want to insert a new record R2 in the sequence, then it will be placed at the end of the file. Here, records are nothing but a row in any table.

2. Ordered File (Sequential File Organization)

This method is the easiest method for file organization. In this method, files are stored sequentially.

2.1 Sorted File Method:

In this method, the new record is always inserted at the file’s end, and then it will sort the sequence in ascending or descending order. Sorting of records is based on any primary key or any other key.

In the case of modification of any record, it will update the record and then sort the file, and lastly, the updated record is placed in the right place.

Pros of sequential file organization

It contains a fast and efficient method for the huge amount of data.

In this method, files can be easily stored in cheaper storage mechanism like magnetic tapes.

It is simple in design.

It requires no much effort to store the data.

This method is used when most of the records have to be accessed like grade calculation of a student, generating the salary slip, etc.

This method is used for report generation or statistical calculations.

Cons of sequential file organization

It will waste time as we cannot jump on a particular record that is required but we have to move sequentially which takes our time.

Sorted file method takes more time and space for sorting the records.

[Example]

Insertion of the new record:

Suppose there is a preexisting sorted sequence of four records R1, R3 and so on upto R6 and R7. Suppose a new record R2 has to be inserted in the sequence, then it will be inserted at the end of the file, and then it will sort the sequence.

Binary Tree: Each node corresponds to a disk block; if the file has n blocks, the height of the tree will be l o g 2 n log_2n log2n

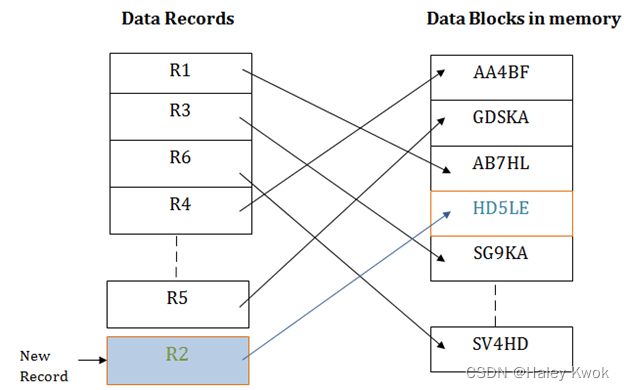

3. Heap Files (Unordered)

It is the simplest and most basic type of organization. It works with data blocks. In heap file organization, the records are inserted at the file’s end. When the records are inserted, it doesn’t require the sorting and ordering of records.

When the data block is full, the new record is stored in some other block. This new data block need not to be the very next data block, but it can select any data block in the memory to store new records. The heap file is also known as an unordered file.

Suppose we have five records R1, R3, R6, R4 and R5 in a heap and suppose we want to insert a new record R2 in a heap. If the data block 3 is full then it will be inserted in any of the database selected by the DBMS, let’s say data block 1.

Pros of Heap file organization

It is a very good method of file organization for bulk insertion. If there is a large number of data which needs to load into the database at a time, then this method is best suited.

In case of a small database, fetching and retrieving of records is faster than the sequential record.

Cons of Heap file organization

This method is inefficient for the large database because it takes time to search or modify the record. If we want to search, update or delete the data in heap file organization, then we need to traverse the data from staring of the file till we get the requested record.

This method is inefficient for large databases.

If the database is very large then searching, updating or deleting of record will be time-consuming because there is no sorting or ordering of records. In the heap file organization, we need to check all the data until we get the requested record.

4. Hashed Files

The hash function h should distribute the records uniformly among the buckets. Otherwise, search time will increase because many overflow records will exist.

4.1 Static External Hashing

Hash File Organization uses the computation of hash function on some fields of the records. The hash function’s output determines the location of disk block where the records are to be placed.

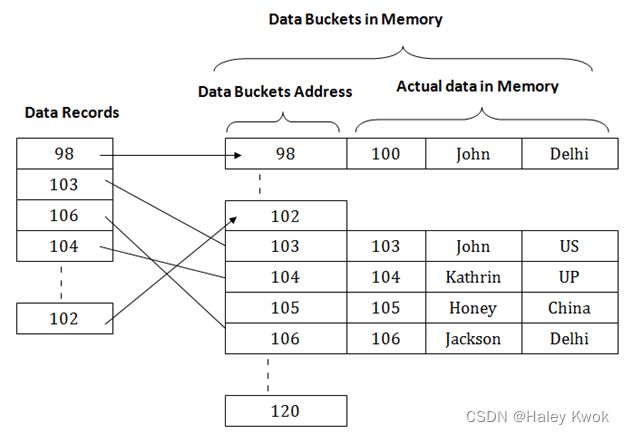

One of the file fields is designated to be the hash key of the file. // Primary key itself as the address of the data block. That means each row whose address will be the same as a primary key stored in the data block.

The record with hash key value K is stored in bucket i, where i=h(K), and h is the hashing function.

Pros

- Search is very efficient on the hash key. In a huge database structure, it is very inefficient to search all the index values and reach the desired data. Hashing technique is used to calculate the direct location of a data record on the disk without using index structure. In this technique, data is stored at the data blocks whose address is generated by using the hashing function. The memory location where these records are stored is known as data bucket or data blocks. In this, a hash function can choose any of the column value to generate the address.

- When a record has to be received using the hash key columns, then the address is generated, and the whole record is retrieved using that address. In the same way, when a new record has to be inserted, then the address is generated using the hash key and record is directly inserted. The same process is applied in the case of delete and update.

- In this method, there is no effort for searching and sorting the entire file.

- In this method, each record will be stored randomly in the memory.

Cons

Fixed number of buckets M is a problem when the number of records in the file grows or shrinks

4.1.1 Collisions problem

Collisions occur when a new record hashes to a bucket that is

already full.

An overflow file is kept for storing such records;

overflow records that hash to each bucket can be linked together.

There are numerous methods for collision resolution, including the following: Open Addressing or Chaining

4.1.1.1 Opening Addressing: Linear Probing

When a hash function generates an address at which data is already stored, then the next bucket will be allocated to it. This mechanism is called as Linear Probing.

[Example]

try Bucket_id+1, Bucket_id+2, …

suppose R3 is a new address which needs to be inserted, the hash function generates address as 112 for R3. But the generated address is already full. So the system searches next available data bucket, 113 and assigns R3 to it.

4.1.1.2 Opening Addressing: Quadratic Probing

try Bucket_id+1, Bucket_id+4,…

3 + 4 = 7

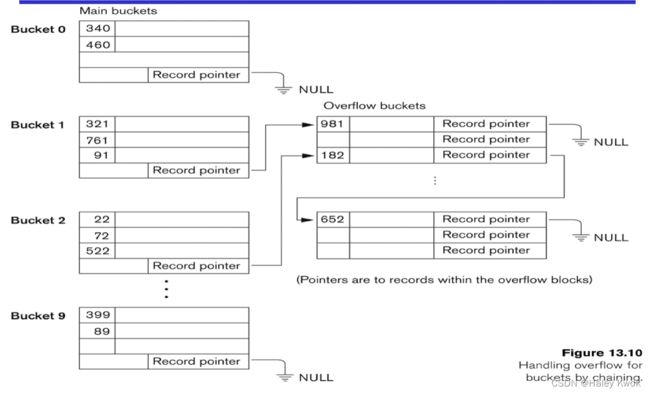

4.1.2 Overflow chaining/ Overflow handling

When buckets are full, then a new data bucket is allocated for the same hash result and is linked after the previous one. This mechanism is known as Overflow chaining.

For this method, various overflow locations are kept, usually by extending the array with a number of overflow positions.

In addition, a pointer field is added to each record location.

A collision is resolved by placing the new record in an unused overflow location and setting the pointer of the occupied hash address location to the address of that overflow location.

[Example]

Suppose R3 is a new address which needs to be inserted into the table, the hash function generates address as 110 for it. But this bucket is full to store the new data. In this case, a new bucket is inserted at the end of 110 buckets and is linked to it.

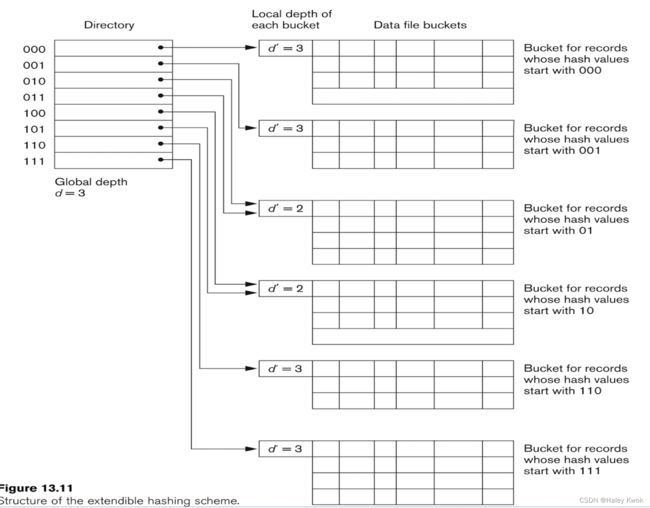

4.2 Dynamic And Extendible Hashing

The dynamic hashing method is used to overcome the problems of static hashing like bucket overflow. Dynamic and extendible hashing do not require an overflow area.

In this method, data buckets grow or shrink as the records increases or decreases. The directories can be stored on disk, and they expand or shrink dynamically. Directory entries point to the disk blocks that contain the stored records.

This method makes hashing dynamic, i.e., it allows insertion or deletion without resulting in poor performance.

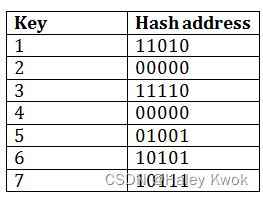

Both dynamic and extendible hashing use the binary representation of the hash value h(K) in order to access a

directory.

In dynamic hashing the directory is a binary tree. In extendible hashing the directory is an array of size 2 d 2^d 2d where is called the global depth.

[Example]

Insert key 9 with hash address 10001 into the above structure:

Since key 9 has hash address 10001, it must go into the first bucket. But bucket B1 is full, so it will get split.

The splitting will separate 5, 9 from 6 since last three bits of 5, 9 are 001, so it will go into bucket B1, and the last three bits of 6 are 101, so it will go into bucket B5.

Advantages of dynamic hashing

- In this method, the performance does not decrease as the data grows in the system. It simply increases the size of memory to accommodate the data.

- In this method, memory is well utilized as it grows and shrinks with the data. There will not be any unused memory lying. This method is good for the dynamic database where data grows and shrinks frequently.

Disadvantages of dynamic hashing

- In this method, if the data size increases then the bucket size is also increased. These addresses of data will be maintained in the bucket address table. This is because the data address will keep changing as buckets grow and shrink. If there is a huge increase in data, maintaining the bucket address table becomes tedious.

- In this case, the bucket overflow situation will also occur. But it might take little time to reach this situation than static hashing.

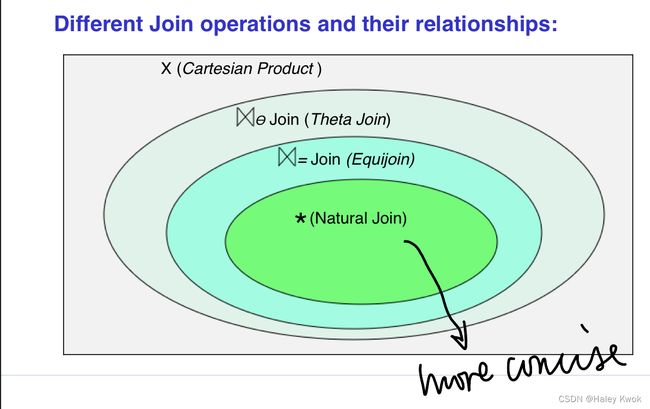

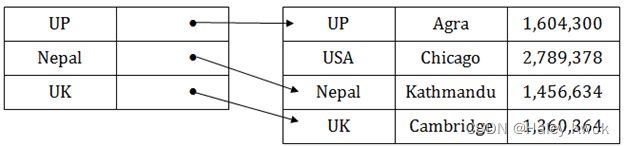

5. Indexing

![]()

5.1 Ordered indices

The indices are usually sorted to make searching faster. The indices which are sorted are known as ordered indices.

[Example]

Suppose we have an employee table with thousands of record and each of which is 10 bytes long. If their IDs start with 1, 2, 3…and so on and we have to search student with ID-543.

In the case of a database with no index, we have to search the disk block from starting till it reaches 543. The DBMS will read the record after reading 54310=5430 bytes.

In the case of an index, we will search using indexes and the DBMS will read the record after reading 5422= 1084 bytes which are very less compared to the previous case.

5.1.1 Single level index/ Sparse indexing/ Primary index (Ordered)

Applicable conditions:

Primary index: applicable to a data file which is ordered on the key field (corresponding to the primary key of the corresponding relation), upon which the index is built, and it can be either a dense or non-dense index.

Query types supported by each:

Primary index: suitable for “one-record-at-a-time” search with a precise condition (such as given an id, find the corresponding employee); it can also be used to support “ordered read” according to the indexed field (eg, primary key).

是稀疏索引 仅指向每个块的地址

defined on ordered file

ordered on key field

1 index entry for each block (in data file)

index entry has key value for first record in block, i.e., block anchor

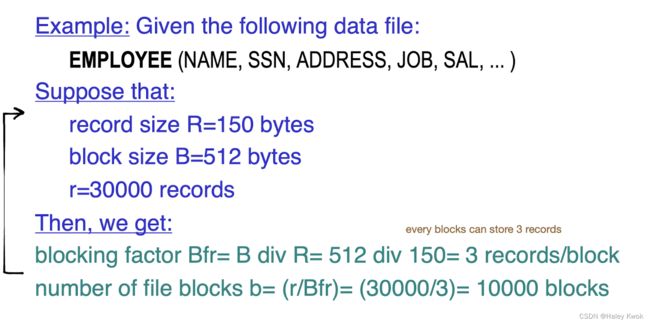

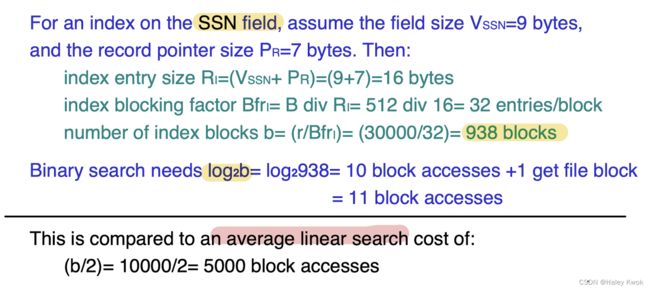

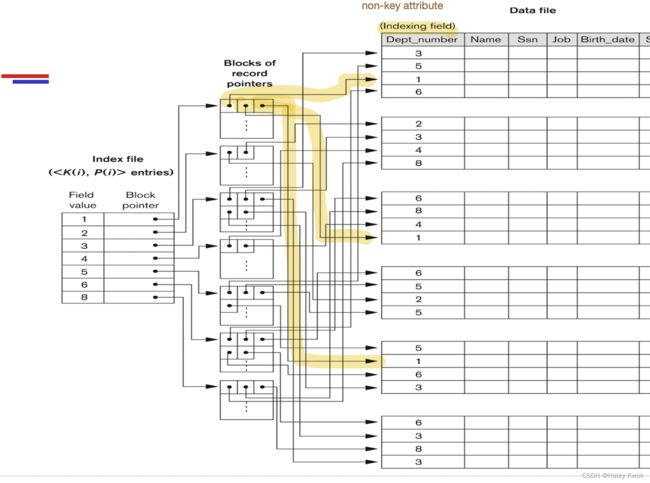

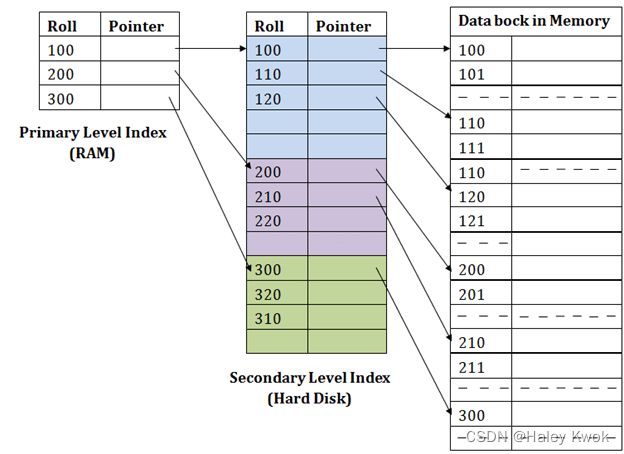

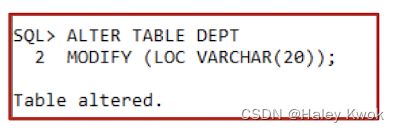

total number of entries in index is same as number of disk blocks