pytorch实现yolov1

参考代码:https://github.com/abeardear/pytorch-YOLO-v1

参考视频:https://www.bilibili.com/video/BV1DS4y1R7zd?p=4&vd_source=a4e3b0193258dd9e115221e68fda2ac1

一、数据加载

数据加载需要继承data.Dataset类,其次实现对应的__init__函数、__getitem__函数以及__len__函数。在这里还需要实现encoder函数,将输入的数据转换为网络需要的数据。

数据加载的基本流程

参考博客:https://blog.csdn.net/weixin_42295969/article/details/126333679?spm=1001.2014.3001.5502

需要完成以下几个函数:

# 自定义数据集类

class MyDataset(torch.utils.data.Dataset):

def __init__(self, *args):

super().__init__()

# 初始化数据集包含的数据和标签

pass

def __getitem__(self, index):

# 根据索引index从文件中读取一个数据

# 对数据预处理

# 返回数据和对应标签

pass

def __len__(self):

# 返回数据集的大小

return len()

encoder实现

- 引用大佬说的话:encoder过程就是把人类看得懂的数据转换为方便神经网络训练的数据。

- 实现:让这个神经网络完成(n,c,h,w)(全称:batch_size,channels,height,width) -> (n,30,7,7)的映射

实现理解

1、从box那里计算获得中心点——>2、遍历每一个box——>2-1、分别设置中心点的置信度以及类别、中心点坐标以及宽高——>3、返回对应的结果target

1、从box那里计算获得中心点

cxcy = (boxes[:,2:]+boxes[:,:2])/2 #中心点的x,y

2、遍历每一个box——>2-1、分别设置中心点的置信度以及类别、中心点坐标以及宽高

for i in range(cxcy.size()[0]):

cxcy_sample = cxcy[i]

ij = (cxcy_sample/cell_size).ceil()-1 #ceil向上取整

# 设置中心点的置信度以及类别

target[int(ij[1]),int(ij[0]),4] = 1

target[int(ij[1]),int(ij[0]),9] = 1

target[int(ij[1]),int(ij[0]),int(labels[i])+9] = 1 #设置类别那个是1

xy = ij*cell_size #匹配到的网格的左上角相对坐标

delta_xy = (cxcy_sample -xy)/cell_size

#设置中心点坐标以及宽高

target[int(ij[1]),int(ij[0]),2:4] = wh[i] #设置宽高

target[int(ij[1]),int(ij[0]),:2] = delta_xy #px,py

target[int(ij[1]),int(ij[0]),7:9] = wh[i] #设置宽高

target[int(ij[1]),int(ij[0]),5:7] = delta_xy #px ,py

3、返回对应的结果target

return target

代码实现

def encoder(self,boxes,labels):

'''

boxes (tensor) [[x1,y1,x2,y2],[]]

labels (tensor) [...]

return 7x7x30 x,y,w,h,c,x,y,w,h,c 20个分类(000...1000)

'''

grid_num = 14

target = torch.zeros((grid_num,grid_num,30))

cell_size = 1./grid_num

wh = boxes[:,2:]-boxes[:,:2]

cxcy = (boxes[:,2:]+boxes[:,:2])/2 #中心点的x,y

for i in range(cxcy.size()[0]):

cxcy_sample = cxcy[i]

ij = (cxcy_sample/cell_size).ceil()-1 #ceil向上取整

target[int(ij[1]),int(ij[0]),4] = 1

target[int(ij[1]),int(ij[0]),9] = 1

target[int(ij[1]),int(ij[0]),int(labels[i])+9] = 1 #设置类别那个是1

xy = ij*cell_size #匹配到的网格的左上角相对坐标

delta_xy = (cxcy_sample -xy)/cell_size

target[int(ij[1]),int(ij[0]),2:4] = wh[i] #设置宽高

target[int(ij[1]),int(ij[0]),:2] = delta_xy #px,py

target[int(ij[1]),int(ij[0]),7:9] = wh[i] #设置宽高

target[int(ij[1]),int(ij[0]),5:7] = delta_xy #px ,py

return target

数据增强

img, boxes = self.random_flip(img, boxes) #随机翻转

img,boxes = self.randomScale(img,boxes) #随机缩放

img = self.randomBlur(img) #高斯模糊

img = self.RandomBrightness(img) #随机亮度

img = self.RandomHue(img) #色相

img = self.RandomSaturation(img) #随机饱和度

img,boxes,labels = self.randomShift(img,boxes,labels) #平移

img,boxes,labels = self.randomCrop(img,boxes,labels) #裁剪

二、网络设计

网络结构:

输入图片大小(3,224,224)

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 112, 112] 9,408

BatchNorm2d-2 [-1, 64, 112, 112] 128

ReLU-3 [-1, 64, 112, 112] 0

MaxPool2d-4 [-1, 64, 56, 56] 0

Conv2d-5 [-1, 64, 56, 56] 4,096

BatchNorm2d-6 [-1, 64, 56, 56] 128

ReLU-7 [-1, 64, 56, 56] 0

Conv2d-8 [-1, 64, 56, 56] 36,864

BatchNorm2d-9 [-1, 64, 56, 56] 128

ReLU-10 [-1, 64, 56, 56] 0

Conv2d-11 [-1, 256, 56, 56] 16,384

BatchNorm2d-12 [-1, 256, 56, 56] 512

Conv2d-13 [-1, 256, 56, 56] 16,384

BatchNorm2d-14 [-1, 256, 56, 56] 512

ReLU-15 [-1, 256, 56, 56] 0

Bottleneck-16 [-1, 256, 56, 56] 0

Conv2d-17 [-1, 64, 56, 56] 16,384

BatchNorm2d-18 [-1, 64, 56, 56] 128

ReLU-19 [-1, 64, 56, 56] 0

Conv2d-20 [-1, 64, 56, 56] 36,864

BatchNorm2d-21 [-1, 64, 56, 56] 128

ReLU-22 [-1, 64, 56, 56] 0

Conv2d-23 [-1, 256, 56, 56] 16,384

BatchNorm2d-24 [-1, 256, 56, 56] 512

ReLU-25 [-1, 256, 56, 56] 0

Bottleneck-26 [-1, 256, 56, 56] 0

Conv2d-27 [-1, 64, 56, 56] 16,384

BatchNorm2d-28 [-1, 64, 56, 56] 128

ReLU-29 [-1, 64, 56, 56] 0

Conv2d-30 [-1, 64, 56, 56] 36,864

BatchNorm2d-31 [-1, 64, 56, 56] 128

ReLU-32 [-1, 64, 56, 56] 0

Conv2d-33 [-1, 256, 56, 56] 16,384

BatchNorm2d-34 [-1, 256, 56, 56] 512

ReLU-35 [-1, 256, 56, 56] 0

Bottleneck-36 [-1, 256, 56, 56] 0

Conv2d-37 [-1, 128, 56, 56] 32,768

BatchNorm2d-38 [-1, 128, 56, 56] 256

ReLU-39 [-1, 128, 56, 56] 0

Conv2d-40 [-1, 128, 28, 28] 147,456

BatchNorm2d-41 [-1, 128, 28, 28] 256

ReLU-42 [-1, 128, 28, 28] 0

Conv2d-43 [-1, 512, 28, 28] 65,536

BatchNorm2d-44 [-1, 512, 28, 28] 1,024

Conv2d-45 [-1, 512, 28, 28] 131,072

BatchNorm2d-46 [-1, 512, 28, 28] 1,024

ReLU-47 [-1, 512, 28, 28] 0

Bottleneck-48 [-1, 512, 28, 28] 0

Conv2d-49 [-1, 128, 28, 28] 65,536

BatchNorm2d-50 [-1, 128, 28, 28] 256

ReLU-51 [-1, 128, 28, 28] 0

Conv2d-52 [-1, 128, 28, 28] 147,456

BatchNorm2d-53 [-1, 128, 28, 28] 256

ReLU-54 [-1, 128, 28, 28] 0

Conv2d-55 [-1, 512, 28, 28] 65,536

BatchNorm2d-56 [-1, 512, 28, 28] 1,024

ReLU-57 [-1, 512, 28, 28] 0

Bottleneck-58 [-1, 512, 28, 28] 0

Conv2d-59 [-1, 128, 28, 28] 65,536

BatchNorm2d-60 [-1, 128, 28, 28] 256

ReLU-61 [-1, 128, 28, 28] 0

Conv2d-62 [-1, 128, 28, 28] 147,456

BatchNorm2d-63 [-1, 128, 28, 28] 256

ReLU-64 [-1, 128, 28, 28] 0

Conv2d-65 [-1, 512, 28, 28] 65,536

BatchNorm2d-66 [-1, 512, 28, 28] 1,024

ReLU-67 [-1, 512, 28, 28] 0

Bottleneck-68 [-1, 512, 28, 28] 0

Conv2d-69 [-1, 128, 28, 28] 65,536

BatchNorm2d-70 [-1, 128, 28, 28] 256

ReLU-71 [-1, 128, 28, 28] 0

Conv2d-72 [-1, 128, 28, 28] 147,456

BatchNorm2d-73 [-1, 128, 28, 28] 256

ReLU-74 [-1, 128, 28, 28] 0

Conv2d-75 [-1, 512, 28, 28] 65,536

BatchNorm2d-76 [-1, 512, 28, 28] 1,024

ReLU-77 [-1, 512, 28, 28] 0

Bottleneck-78 [-1, 512, 28, 28] 0

Conv2d-79 [-1, 256, 28, 28] 131,072

BatchNorm2d-80 [-1, 256, 28, 28] 512

ReLU-81 [-1, 256, 28, 28] 0

Conv2d-82 [-1, 256, 14, 14] 589,824

BatchNorm2d-83 [-1, 256, 14, 14] 512

ReLU-84 [-1, 256, 14, 14] 0

Conv2d-85 [-1, 1024, 14, 14] 262,144

BatchNorm2d-86 [-1, 1024, 14, 14] 2,048

Conv2d-87 [-1, 1024, 14, 14] 524,288

BatchNorm2d-88 [-1, 1024, 14, 14] 2,048

ReLU-89 [-1, 1024, 14, 14] 0

Bottleneck-90 [-1, 1024, 14, 14] 0

Conv2d-91 [-1, 256, 14, 14] 262,144

BatchNorm2d-92 [-1, 256, 14, 14] 512

ReLU-93 [-1, 256, 14, 14] 0

Conv2d-94 [-1, 256, 14, 14] 589,824

BatchNorm2d-95 [-1, 256, 14, 14] 512

ReLU-96 [-1, 256, 14, 14] 0

Conv2d-97 [-1, 1024, 14, 14] 262,144

BatchNorm2d-98 [-1, 1024, 14, 14] 2,048

ReLU-99 [-1, 1024, 14, 14] 0

Bottleneck-100 [-1, 1024, 14, 14] 0

Conv2d-101 [-1, 256, 14, 14] 262,144

BatchNorm2d-102 [-1, 256, 14, 14] 512

ReLU-103 [-1, 256, 14, 14] 0

Conv2d-104 [-1, 256, 14, 14] 589,824

BatchNorm2d-105 [-1, 256, 14, 14] 512

ReLU-106 [-1, 256, 14, 14] 0

Conv2d-107 [-1, 1024, 14, 14] 262,144

BatchNorm2d-108 [-1, 1024, 14, 14] 2,048

ReLU-109 [-1, 1024, 14, 14] 0

Bottleneck-110 [-1, 1024, 14, 14] 0

Conv2d-111 [-1, 256, 14, 14] 262,144

BatchNorm2d-112 [-1, 256, 14, 14] 512

ReLU-113 [-1, 256, 14, 14] 0

Conv2d-114 [-1, 256, 14, 14] 589,824

BatchNorm2d-115 [-1, 256, 14, 14] 512

ReLU-116 [-1, 256, 14, 14] 0

Conv2d-117 [-1, 1024, 14, 14] 262,144

BatchNorm2d-118 [-1, 1024, 14, 14] 2,048

ReLU-119 [-1, 1024, 14, 14] 0

Bottleneck-120 [-1, 1024, 14, 14] 0

Conv2d-121 [-1, 256, 14, 14] 262,144

BatchNorm2d-122 [-1, 256, 14, 14] 512

ReLU-123 [-1, 256, 14, 14] 0

Conv2d-124 [-1, 256, 14, 14] 589,824

BatchNorm2d-125 [-1, 256, 14, 14] 512

ReLU-126 [-1, 256, 14, 14] 0

Conv2d-127 [-1, 1024, 14, 14] 262,144

BatchNorm2d-128 [-1, 1024, 14, 14] 2,048

ReLU-129 [-1, 1024, 14, 14] 0

Bottleneck-130 [-1, 1024, 14, 14] 0

Conv2d-131 [-1, 256, 14, 14] 262,144

BatchNorm2d-132 [-1, 256, 14, 14] 512

ReLU-133 [-1, 256, 14, 14] 0

Conv2d-134 [-1, 256, 14, 14] 589,824

BatchNorm2d-135 [-1, 256, 14, 14] 512

ReLU-136 [-1, 256, 14, 14] 0

Conv2d-137 [-1, 1024, 14, 14] 262,144

BatchNorm2d-138 [-1, 1024, 14, 14] 2,048

ReLU-139 [-1, 1024, 14, 14] 0

Bottleneck-140 [-1, 1024, 14, 14] 0

Conv2d-141 [-1, 512, 14, 14] 524,288

BatchNorm2d-142 [-1, 512, 14, 14] 1,024

ReLU-143 [-1, 512, 14, 14] 0

Conv2d-144 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-145 [-1, 512, 7, 7] 1,024

ReLU-146 [-1, 512, 7, 7] 0

Conv2d-147 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-148 [-1, 2048, 7, 7] 4,096

Conv2d-149 [-1, 2048, 7, 7] 2,097,152

BatchNorm2d-150 [-1, 2048, 7, 7] 4,096

ReLU-151 [-1, 2048, 7, 7] 0

Bottleneck-152 [-1, 2048, 7, 7] 0

Conv2d-153 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-154 [-1, 512, 7, 7] 1,024

ReLU-155 [-1, 512, 7, 7] 0

Conv2d-156 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-157 [-1, 512, 7, 7] 1,024

ReLU-158 [-1, 512, 7, 7] 0

Conv2d-159 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-160 [-1, 2048, 7, 7] 4,096

ReLU-161 [-1, 2048, 7, 7] 0

Bottleneck-162 [-1, 2048, 7, 7] 0

Conv2d-163 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-164 [-1, 512, 7, 7] 1,024

ReLU-165 [-1, 512, 7, 7] 0

Conv2d-166 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-167 [-1, 512, 7, 7] 1,024

ReLU-168 [-1, 512, 7, 7] 0

Conv2d-169 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-170 [-1, 2048, 7, 7] 4,096

ReLU-171 [-1, 2048, 7, 7] 0

Bottleneck-172 [-1, 2048, 7, 7] 0

Conv2d-173 [-1, 256, 7, 7] 524,288

BatchNorm2d-174 [-1, 256, 7, 7] 512

Conv2d-175 [-1, 256, 7, 7] 589,824

BatchNorm2d-176 [-1, 256, 7, 7] 512

detnet_bottleneck-195 [-1, 256, 7, 7] 0

Conv2d-196 [-1, 30, 7, 7] 69,120

BatchNorm2d-197 [-1, 30, 7, 7] 60

================================================================

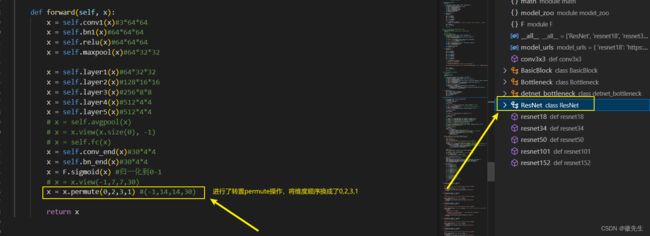

网络设计中可以使用ResNet或者vgg作为骨干网络,重点看后面怎么加分类以及回归的

如何加分类以及回归设计

最后输出设计为(-1,14,14,30)就行,其实这里需要做一个维度上的转置,也就是网络设计里的x = x.permute(0,2,3,1),上面由于sigmod激活函数和permute转置函数都不属于网络参数,所以在上面都没有输出出来。

关键要做到最后的维度要和损失函数一直,比如这里的(14,14,30)里的30就和损失函数的数据维度就很一致。

将ResNet或者vgg加载加骨干网络

resnet50——>ResNet(Bottleneck, [3, 4, 6, 3], **kwargs)__init__(self, block, layers, num_classes=1470)——>layer1-5——>_make_layer函数构建网络块

make_layer作用是将一个网络可以循环地进行连接,只需要设置是什么网络,输入以及输出,以及循环的次数

layer1=self._make_layer(block, 64, layers[0])

layer2=self._make_layer(block, 128, layers[1], stride=2)#128*16*16

layer3=self._make_layer(block, 256, layers[2], stride=2)#256*8*8

layer4=self._make_layer(block, 512, layers[3], stride=2)#256*4*4

layer5=self._make_detnet_layer(in_channels=2048)#512*4*4

...#做相关的适配

for i in range(1, blocks):

layers.append(block(self.inplanes, planes))

_make_detnet_layer也是一样将detnet循环了指定的次数,其实make_***_layer就是做一个封装,可以指定网络的循环次数,并且内部还可以做相关的适配。

设计并加载ResNet_yolo(以ResNet为骨干)的网络:

加载预训练模型进行参数赋值:

#加载预训练模型

resnet = models.resnet50(pretrained=True)

new_state_dict = resnet.state_dict()

dd = net.state_dict()

for k in new_state_dict.keys():

print(k)

if k in dd.keys() and not k.startswith('fc'):

print('yes')

dd[k] = new_state_dict[k]

net.load_state_dict(dd)

三、计算损失函数

可以通过继承nn.moudle类,因为基于这个类可以做反向传播,不用自己去写grad等。

- 首先继承

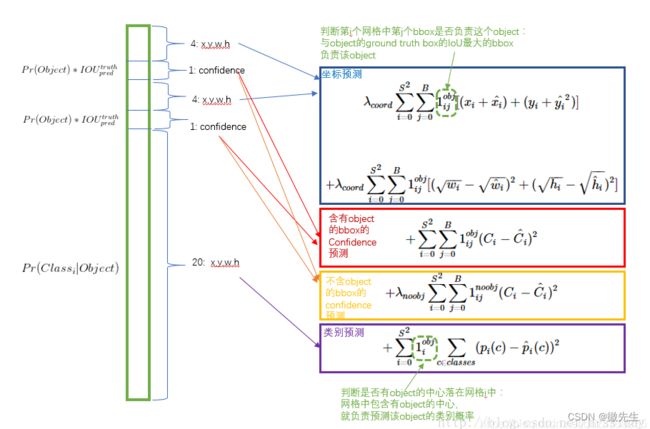

nn.moudle类,关键点去关注以及实现__init__以及forward函数; - 根据损失函数的表达式(这里补一个表达式),可以得到交并比是一个很关键的函数,所以单独实现交并比的函数

compute_iou(self, box1, box2) - 在forwad函数里面实现损失函数,分为四个部分:坐标预测损失值;含有object的bbox的置信度预测值;不含object的bbox的置信度值;以及类别预测。

几个用到的操作

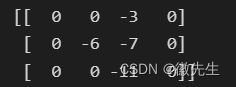

对矩阵元素进行判断操作,将大于0的元素置为0

temp=np.array([[1,2,-3,4],[5,-6,-7,8],[9,10,-11,12]])

temp[temp>0]=0

print(temp)

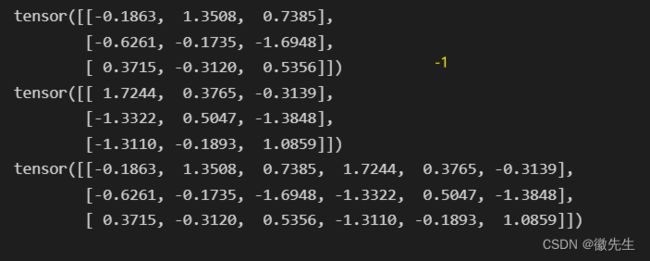

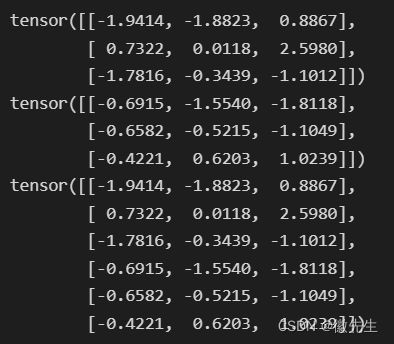

torch.min(a,b)的作用,直接出来的是值,而不是对应的索引

import torch

a=torch.randn(2,3,4)

b=torch.randn(2,3,4)

print(a)

print("*"*100)

print(b)

print("*"*100)

print(torch.min(a,b))

- 用来标记位置的变量类型只能是逻辑值True或者false,并且如果是0都不行,因为如果是具体的值,结果就是对应的元素;

# 只会输出一个一维向量,[]内的矩阵需要是true或者false类型

import numpy as np

a=torch.randn(3,2)

print(a)

a[2,0]=-1

b=a[:,:]>0

print(b)

c=a[b]

print(c)

1.继承nn.moudle类实现__init__以及forward函数

在__init__实现相关的变量定义,在forward函数里面实现对应的损失值。

2.实现损失函数——4个损失的部分

坐标损失、有目标的置信度损失、没有目标的置信度损失以及分类损失

将对应的公式拿出来理解

坐标损失值:

λ coord ∑ i = 0 S 2 ∑ j = 0 B 1 i j o b j ‾ [ ( x i + x ^ i ) + ( y i + y ^ i 2 ) ] + λ coord ∑ i = 0 S 2 ∑ j = 0 B 1 i j o b j [ ( w i − w ^ i ) 2 + ( h i − h ^ i ) 2 ] \begin{gathered} \lambda_{\text {coord }} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{\underline{o b j}}\left[\left(x_{i}+\hat{x}_{i}\right)+\left(y_{i}+\hat{y}_{i}^{2}\right)\right] \\ +\lambda_{\text {coord }} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{o b j}\left[\left(\sqrt{w_{i}}-\sqrt{\hat{w}_{i}}\right)^{2}+\left(\sqrt{h_{i}}-\sqrt{\hat{h}_{i}}\right)^{2}\right] \end{gathered} λcoord i=0∑S2j=0∑B1ijobj[(xi+x^i)+(yi+y^i2)]+λcoord i=0∑S2j=0∑B1ijobj[(wi−w^i)2+(hi−h^i)2]

含有目标的bbox的置信度损失值:

+ ∑ i = 0 S 2 ∑ j = 0 B 1 i j o b j ( C i − C ^ i ) 2 +\sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{o b j}\left(C_{i}-\hat{C}_{i}\right)^{2} +i=0∑S2j=0∑B1ijobj(Ci−C^i)2

不含目标的bbox的置信度损失值

+ λ noobj ∑ i = 0 S 2 ∑ j = 0 B 1 i j n o o b j ( C i − C ^ i ) 2 +\lambda_{\text {noobj }} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{n o o b j}\left(C_{i}-\hat{C}_{i}\right)^{2} +λnoobj i=0∑S2j=0∑B1ijnoobj(Ci−C^i)2

类别预测:

+ ∑ i = 0 S 2 1 i o b j ∑ i = c classes ( p i ( c ) − p ^ i ( c ) ) 2 +\sum_{i=0}^{S^{2}} 1_{i}^{o b j} \sum_{i=c \text { classes }}\left(p_{i}(c)-\hat{p}_{i}(c)\right)^{2} +i=0∑S21iobji=c classes ∑(pi(c)−p^i(c))2

不包含目标的置信度损失

+ λ noobj ∑ i = 0 S 2 ∑ j = 0 B 1 i j n o o b j ( C i − C ^ i ) 2 +\lambda_{\text {noobj }} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{n o o b j}\left(C_{i}-\hat{C}_{i}\right)^{2} +λnoobj i=0∑S2j=0∑B1ijnoobj(Ci−C^i)2

(1)实现思路

直接将背景的损失值分别从pred以及target中提取出来。

(2)代码理解

1、前期准备:

分别计算预测以及目标的三个值:带30个值的全部值coo_pred、前五个(x,y,w、h、c)box_pred、类别结果class_pred

- coo_pred、box_pred 、class_pred 、coo_target、box_target、class_target

#grid_cell =>[7,7]

coo_pred = pred_tensor[coo_mask].view(-1,30) #前景的预测值

# 所有目标边框单独放置

box_pred = coo_pred[:,:10].contiguous().view(-1,5) #box[x1,y1,w1,h1,c1]

# 所有的类别单独放置

class_pred = coo_pred[:,10:] #[x2,y2,w2,h2,c2]

区分一下前景与背景,并进行维度统一

- 注意这里是target_tensor,所以它两个(x,y, w,h,c)都是一样的。只需要提取第四个看是都是大于0,就能够判断前景

coo_mask = target_tensor[:,:,:,4] > 0

noo_mask = target_tensor[:,:,:,4] == 0 #batch_size*7*7

#unsqueeze(-1)表示扩展到最后一个维度,batch_size*7*7*1

coo_mask = coo_mask.unsqueeze(-1).expand_as(target_tensor) #batch_size*7*7*30

noo_mask = noo_mask.unsqueeze(-1).expand_as(target_tensor)

2、代码实现:

#计算不包含目标的置信度损失

# compute not contain obj loss

# view能够做到无论是多少个维度的数据,最后都是一个二维的数据比如view(-1,30),最后就都是N*30

noo_pred = pred_tensor[noo_mask].view(-1,30) #背景的预测值

noo_target = target_tensor[noo_mask].view(-1,30)

#计算背景的置信度损失,拿出对应的数据就行,拿对应位置4和9的数据

noo_pred_mask = torch.cuda.ByteTensor(noo_pred.size())

noo_pred_mask.zero_()

noo_pred_mask[:,4]=1;noo_pred_mask[:,9]=1

#拿到对应的置信度的值,然后计算损失

noo_pred_c = noo_pred[noo_pred_mask] #noo pred只需要计算 c 的损失 size[-1,2]

noo_target_c = noo_target[noo_pred_mask]

nooobj_loss = F.mse_loss(noo_pred_c,noo_target_c,size_average=False)

有目标的置信度损失

+ ∑ i = 0 S 2 ∑ j = 0 B 1 i j o b j ( C i − C ^ i ) 2 +\sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{o b j}\left(C_{i}-\hat{C}_{i}\right)^{2} +i=0∑S2j=0∑B1ijobj(Ci−C^i)2

首先需要实现交并比函数,因为这是会用在置信度损失的那部分,需要用 【置信度 = 概率 ∗ 交并比】 【置信度=概率*交并比】 【置信度=概率∗交并比】来表示损失值。然后就可以分别计算

(1)交并比实现

找到左下角的值以及右上角的值,然后计算一下宽高就能够得到交集;

而交集=区域1+区域2-交集

实现思路

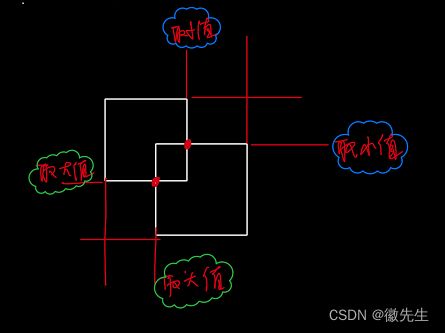

已有的数据:左下的坐标数据、右上的坐标数据;

需要得到:得到每个不同的矩阵(box1和box2两两相互之间)之间的左下坐标的最大值(x和y都是,x和y不必要是同一个节点)以及右上坐标的最小值;

解决方式:

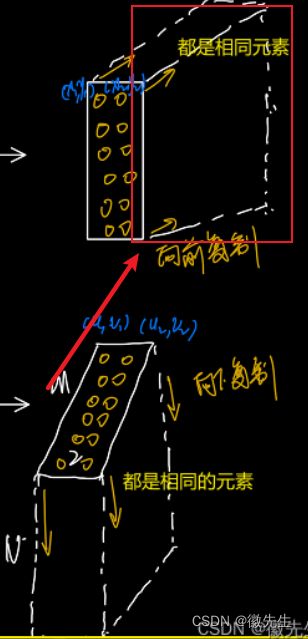

实现交并比这里采用了比较有意思的技巧,正常的实现是通过循环遍历是实现交并比,详细的思路在微信中:

- 他们的数据分别是[N,2],[M,2],所以最终将其变为[N,M,2],其实如上图所示:

第一个向前复制的第一列元素,第一个都是一个的元素(x2,y2),而第二个则是(u2[i],u3[i])上的不同元素,用上面减去下面,其实就是用第一个的那个元素对第二个那个列进行遍历相减,这样就直接用矩阵相减代替了for循环遍历;

(2)代码过程理解

1、前期准备:

分别计算预测以及目标的三个值:带30个值的全部值coo_pred、前五个(x,y,w、h、c)box_pred、类别结果class_pred

- coo_pred、box_pred 、class_pred 、coo_target、box_target、class_target

#grid_cell =>[7,7]

coo_pred = pred_tensor[coo_mask].view(-1,30) #前景的预测值

# 所有目标边框单独放置

box_pred = coo_pred[:,:10].contiguous().view(-1,5) #box[x1,y1,w1,h1,c1]

# 所有的类别单独放置

class_pred = coo_pred[:,10:] #[x2,y2,w2,h2,c2]

区分一下前景与背景,并进行维度统一

- 比如需要在第四个位置的置信度,然后再

coo_mask = target_tensor[:,:,:,4] > 0

noo_mask = target_tensor[:,:,:,4] == 0 #batch_size*7*7

#unsqueeze(-1)表示扩展到最后一个维度,batch_size*7*7*1

coo_mask = coo_mask.unsqueeze(-1).expand_as(target_tensor) #batch_size*7*7*30

noo_mask = noo_mask.unsqueeze(-1).expand_as(target_tensor)

- 用for循环遍历每个框box_target分别计算左下右上坐标,然后计算iou

#计算左下、右上的框

box1_xyxy = Variable(torch.FloatTensor(box1.size()))

box1_xyxy[:,:2] = box1[:,:2]/14. -0.5*box1[:,2:4]

box1_xyxy[:,2:4] = box1[:,:2]/14. +0.5*box1[:,2:4]

box2 = box_target[i].view(-1,5)

box2_xyxy = Variable(torch.FloatTensor(box2.size()))

box2_xyxy[:,:2] = box2[:,:2]/14. -0.5*box2[:,2:4]

box2_xyxy[:,2:4] = box2[:,:2]/14. +0.5*box2[:,2:4]

#带入坐标值计算iou值

iou = self.compute_iou(box1_xyxy[:,:4],box2_xyxy[:,:4]) # [2,1]#计算IOU值

- 对比出最大的iou值,并将索引以及相关值进行保存

(3)代码实现

#compute contain obj loss

coo_response_mask = torch.cuda.ByteTensor(box_target.size())

coo_response_mask.zero_()

coo_not_response_mask = torch.cuda.ByteTensor(box_target.size())

coo_not_response_mask.zero_()

box_target_iou = torch.zeros(box_target.size()).cuda()

#计算pred_box与target_box的iou,最大iou,用作置信度

#box_target已经是铺平了5了,所以每两个进行计算,每两个就是一个grid_cell对应的两个bbox

for i in range(0,box_target.size()[0],2): #choose the best iou box

box1 = box_pred[i:i+2]

#pred_box的两个边框

box1_xyxy = Variable(torch.FloatTensor(box1.size()))

box1_xyxy[:,:2] = box1[:,:2]/14. -0.5*box1[:,2:4]

box1_xyxy[:,2:4] = box1[:,:2]/14. +0.5*box1[:,2:4]

box2 = box_target[i].view(-1,5)

box2_xyxy = Variable(torch.FloatTensor(box2.size()))

box2_xyxy[:,:2] = box2[:,:2]/14. -0.5*box2[:,2:4]

box2_xyxy[:,2:4] = box2[:,:2]/14. +0.5*box2[:,2:4]

iou = self.compute_iou(box1_xyxy[:,:4],box2_xyxy[:,:4]) # [2,1]#计算IOU值

max_iou,max_index = iou.max(0)

max_index = max_index.data.cuda()

coo_response_mask[i+max_index]=1 #最大IOU对应的mask为1

coo_not_response_mask[i+1-max_index]=1

#####

# 我们希望置信度分数等于预测框和地面实况之间的交集(IOU)

# we want the confidence score to equal the

# intersection over union (IOU) between the predicted box

# and the ground truth

#####

box_target_iou[i+max_index,torch.LongTensor([4]).cuda()] = (max_iou).data.cuda()

box_target_iou = Variable(box_target_iou).cuda() #[batch_size,5]转换为梯度进行计算

#1.response loss(1,2,3个公式的复现 坐标+有目标的置信度损失)

# iou大的那个对应的预测值以及真实值

box_pred_response = box_pred[coo_response_mask].view(-1,5)

box_target_response_iou = box_target_iou[coo_response_mask].view(-1,5)

box_target_response = box_target[coo_response_mask].view(-1,5)

contain_loss = F.mse_loss(box_pred_response[:,4],box_target_response_iou[:,4],size_average=False)

loc_loss = F.mse_loss(box_pred_response[:,:2],box_target_response[:,:2],size_average=False) + F.mse_loss(torch.sqrt(box_pred_response[:,2:4]),torch.sqrt(box_target_response[:,2:4]),size_average=False) #sqrt开平方

坐标损失

λ coord ∑ i = 0 S 2 ∑ j = 0 B 1 i j o b j ‾ [ ( x i + x ^ i ) + ( y i + y ^ i 2 ) ] + λ coord ∑ i = 0 S 2 ∑ j = 0 B 1 i j o b j [ ( w i − w ^ i ) 2 + ( h i − h ^ i ) 2 ] \begin{gathered} \lambda_{\text {coord }} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{\underline{o b j}}\left[\left(x_{i}+\hat{x}_{i}\right)+\left(y_{i}+\hat{y}_{i}^{2}\right)\right] \\ +\lambda_{\text {coord }} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} 1_{i j}^{o b j}\left[\left(\sqrt{w_{i}}-\sqrt{\hat{w}_{i}}\right)^{2}+\left(\sqrt{h_{i}}-\sqrt{\hat{h}_{i}}\right)^{2}\right] \end{gathered} λcoord i=0∑S2j=0∑B1ijobj[(xi+x^i)+(yi+y^i2)]+λcoord i=0∑S2j=0∑B1ijobj[(wi−w^i)2+(hi−h^i)2]

直接将x,y,w,h代入进行计算就行

loc_loss = F.mse_loss(box_pred_response[:,:2],box_target_response[:,:2],size_average=False) + F.mse_loss(torch.sqrt(box_pred_response[:,2:4]),torch.sqrt(box_target_response[:,2:4]),size_average=False) #sqrt开平方

类别损失

+ ∑ i = 0 S 2 1 i o b j ∑ i = c classes ( p i ( c ) − p ^ i ( c ) ) 2 +\sum_{i=0}^{S^{2}} 1_{i}^{o b j} \sum_{i=c \text { classes }}\left(p_{i}(c)-\hat{p}_{i}(c)\right)^{2} +i=0∑S21iobji=c classes ∑(pi(c)−p^i(c))2

直接将前期准备提取的第11-30的数据class_pred、class_target就可以了。

#3.class loss

class_loss = F.mse_loss(class_pred,class_target,size_average=False)

self.l_coord*loc_loss + 2*contain_loss + not_contain_loss + self.l_noobj*nooobj_loss + class_loss)/N

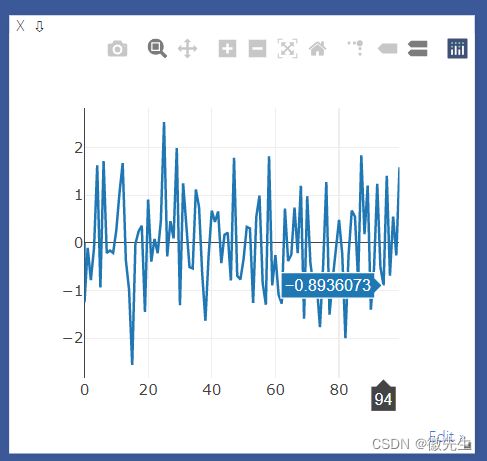

四、可视化结果

这里采用的visdom进行可视化,其实已经有了对应的数据,那么用什么做可视化都是没有问题。

visdom的一个优势就在于可以在浏览器中显示结果,而不必占用IDE的空间,方便做一个调试

1、首先要开启visdom-sever服务——>2.代码里实现画图(这里是画折线程序)

1、首先要开启visdom-sever服务

python -m visdom.server

之后就可以在浏览器访问http://localhost:8097/

2.代码里实现画图(这里是画折线程序)

vis画折线首先需要一个基准点进行构建

这里x=0的时候用于基准点的构建。

示例:

# 绘制折线图

from visdom import Visdom

import numpy as np

import time

vis=Visdom(env="loss")

# 绘制折线图,如果要使用append,那么就需要先画一个基准线,或者基准点,在这个基准上增加折线图的节点

x,y=0,0

win=vis.line(np.array([y]),np.array([x]))

for i in range(100):

x=np.array([i])

time.sleep(0.1)

y=np.random.randn(1)

vis.line(y,x,win,update="append")

绘制训练以及测试的数据代码:

#绘制train以及val损失的折线图

def plot_train_val(self, loss_train=None, loss_val=None):

'''

plot val loss and train loss in one figure

'''

#训练集以及测试集的

x = self.index.get('train_val', 0) # if none, return 0 ,如果有的话就返回对应的

#其实等于1,因为是第一次绘制,所以是绘制第一个点[0,0] [0,0]但是为了拓展性,所以变成了相对应的变量了

if x == 0: #如果索引里面没有train_val,这里应该是直接考虑了索引里面没有train_val的情况

loss = loss_train if loss_train else loss_val

#win_y和win_x

win_y = np.column_stack((loss, loss))#将两个数组按列连接起来

win_x = np.column_stack((x, x)) #[[0 0]]

self.win = self.vis.line(Y=win_y, X=win_x,

env=self.env)

# opts=dict(

# title='train_test_loss',

# ))

self.index['train_val'] = x + 1 #更新index

return

if loss_train != None:

self.vis.line(Y=np.array([loss_train]), X=np.array([x]),

win=self.win,

name='1',

update='append',

env=self.env)

self.index['train_val'] = x + 5

else: #loss_train为空,则是val损失

self.vis.line(Y=np.array([loss_val]), X=np.array([x]),

win=self.win,

name='2',

update='append',

env=self.env)

五、推理

在训练的时候需要将数据进行encoder,那么推理的时候就需要将数据进行逆向操作,将数据转向于我们能够理解的:

格式转换:转换成xy形式 convert[cx,cy,w,h] to [x1,xy1,x2,y2]

在预测过程中,将网络输出的(n,30,7,7)先reshape为(n,7,7,30)——方便理解、处理,然后将转换为(98,25)的信息矩阵。

过程:

- 首先,一样地初始化一个输出全0矩阵outputs(shape=(98,25) 对应的2个7*7 x,y,w,h,c,20个种类);

- 然后,遍历每个网格(i,j),利用encoder中(dx,dy,dw,dh)->(px,py,dw,dh)的推导公式反向推动将(px,py,dw,dh)转为(dx,dy,dw,dh)。唯一数据要变的就是这个地方;

- 按照位置进行存储 2个7*7 x,y,w,h,c,20个种类概率;

def decoder(pred):

'''

pred (tensor) 1x7x7x30

return (tensor) box[[x1,y1,x2,y2]] label[...]

'''

grid_num = 14

boxes=[]

cls_indexs=[]

probs = []

cell_size = 1./grid_num

pred = pred.data

pred = pred.squeeze(0) #7x7x30

contain1 = pred[:,:,4].unsqueeze(2)

contain2 = pred[:,:,9].unsqueeze(2)

contain = torch.cat((contain1,contain2),2)

mask1 = contain > 0.1 #大于阈值

mask2 = (contain==contain.max()) #we always select the best contain_prob what ever it>0.9

mask = (mask1+mask2).gt(0)

# min_score,min_index = torch.min(contain,2) #每个cell只选最大概率的那个预测框

for i in range(grid_num):

for j in range(grid_num):

for b in range(2):

# index = min_index[i,j]

# mask[i,j,index] = 0

if mask[i,j,b] == 1:

#print(i,j,b)

box = pred[i,j,b*5:b*5+4]

contain_prob = torch.FloatTensor([pred[i,j,b*5+4]])

xy = torch.FloatTensor([j,i])*cell_size #cell左上角 up left of cell

box[:2] = box[:2]*cell_size + xy # return cxcy relative to image

box_xy = torch.FloatTensor(box.size())#转换成xy形式 convert[cx,cy,w,h] to [x1,xy1,x2,y2]

box_xy[:2] = box[:2] - 0.5*box[2:]

box_xy[2:] = box[:2] + 0.5*box[2:]

max_prob,cls_index = torch.max(pred[i,j,10:],0)

if float((contain_prob*max_prob)[0]) > 0.1:

boxes.append(box_xy.view(1,4))

cls_indexs.append(cls_index)

probs.append(contain_prob*max_prob)

几个常用到的操作

gt(0)大于0,表示大于0对应位置就是True,小于或者等于0对应位置就是false

import torch

a=torch.randn(3,4)

a[2,3]=-1

mask=a.gt(0)

print(mask)

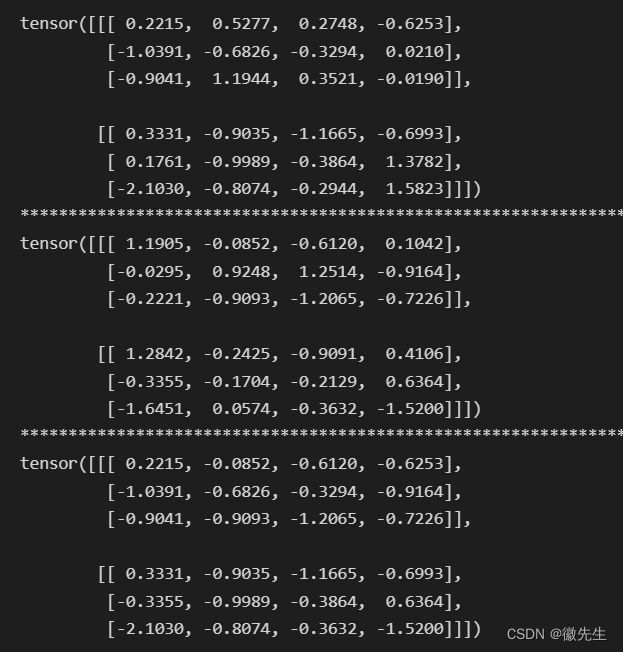

torch.cat表示对两个tensor进行相连

b1=torch.randn(3,3)

b2=torch.randn(3,3)

print(torch.cat((b1,b2),0))

cat函数里的属性设置不同的值:

只要记住这常见的三种形式就可以了,其实是按照位置来进行连接的,通过-1[0[1这样的位置进行判断

设置为-1