Data Splitting + json_to_dataset + Training a Model with UNet


I. Splitting the ICDAR2019-LSVT dataset and generating the corresponding label files

My dataset is the open-source ICDAR2019-LSVT dataset. After downloading, it consists of two folders: train_full_images_0 and train_full_images_1.

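1. After downloading, merge the images into a single folder. The labels for these images are stored in train_full_labels.json. The script reads each image's label from the JSON file and saves it to a txt file.

For training, the dataset is split into a training set and a validation set, and each set's labels go into its own txt file. The script below handles the train split. For val, run it again with the file paths changed.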
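Merging the two downloaded folders can be done with the standard library alone. The block below is a minimal sketch, not the author's code. The function name `merge_image_folders` is hypothetical, and the sketch assumes that image names (e.g. `gt_0.jpg`) do not collide between the two folders.

```python
import os
import shutil
from glob import glob


def merge_image_folders(src_dirs, dst_dir):
    """Copy every .jpg from each folder in src_dirs into dst_dir.

    Returns the number of files copied. Raises FileExistsError if a file
    with the same name is already in dst_dir, so nothing is silently
    overwritten (this also means a second run on the same dst_dir fails).
    """
    os.makedirs(dst_dir, exist_ok=True)
    copied = 0
    for src in src_dirs:
        for path in sorted(glob(os.path.join(src, "*.jpg"))):
            target = os.path.join(dst_dir, os.path.basename(path))
            if os.path.exists(target):
                raise FileExistsError(target)
            shutil.copy2(path, target)  # copy2 also keeps file timestamps
            copied += 1
    return copied
```

For example, assume the two folders were unpacked into the same directory `root` that holds train_full_labels.json. Then `merge_image_folders([os.path.join(root, "train_full_images_0"), os.path.join(root, "train_full_images_1")], os.path.join(root, "train_full_images"))` produces the folder the label script reads by default. Copying doubles disk usage, so `shutil.move` is an alternative if the original folders are no longer needed.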

```python
import os
import json
import argparse
from glob import glob

# Annotation file shipped with ICDAR2019-LSVT
jsonPath = "/Users/yi/Desktop/ICDAR2019-LSVT/icdar2019-lsvt-train/train_full_labels.json"


def get_args():
    # For the val split, change both paths to the val_full_images folder
    parser = argparse.ArgumentParser()
    parser.add_argument('--input', default='/Users/yi/Desktop/ICDAR2019-LSVT/icdar2019-lsvt-train/train_full_images/')
    parser.add_argument('--out_dir', default='/Users/yi/Desktop/ICDAR2019-LSVT/icdar2019-lsvt-train/train_full_images/')
    return parser.parse_args()


def cidar2019(args, jpg_files, task="Label"):
    count = 0
    if not os.path.isdir(args.out_dir):
        os.makedirs(args.out_dir)

    # json.load() reads a JSON file; json.loads() parses a JSON string.
    # Load the annotations once rather than once per image.
    with open(jsonPath, "r", encoding="utf-8") as f:
        json_file = json.load(f)

    # Create the label file in out_dir, e.g. Label.txt
    label_path = os.path.join(args.out_dir, "%s.txt" % task)
    with open(label_path, "w", encoding="utf-8") as label_file:
        for jpg_name in jpg_files:
            if jpg_name not in json_file:
                continue  # skip images that have no annotation
            imagePath = os.path.join(args.input, jpg_name + ".jpg")
            # Relative path built from the last three path components,
            # e.g. icdar2019-lsvt-train/train_full_images/gt_0.jpg
            absPath = os.path.join(*imagePath.split("/")[-3:])

            annot_ppocr = []
            # Iterate over the text regions annotated for this image
            for mm in json_file[jpg_name]:
                annot_ppocr.append({
                    "transcription": mm["transcription"],
                    "points": mm["points"][:4],  # first four corner points
                    "difficult": False,
                })
            # One line per image: relative path, tab, JSON list of regions
            label_file.write(absPath + "\t" + json.dumps(annot_ppocr, ensure_ascii=False) + "\n")
            count += 1
    print(count)


def main(args):
    # Collect all .jpg files in the input folder
    files = glob(os.path.join(args.input, "*.jpg"))
    # Keep only the file name without the .jpg extension, e.g. gt_0
    files = [os.path.splitext(os.path.basename(i))[0] for i in files]
    print(files)
    cidar2019(args, files, "Label")


if __name__ == '__main__':
    main(get_args())
```
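The script writes a label line for every .jpg it finds, so the train/val split itself has to happen before it runs. One way to do this is a seeded random split of the image names. This is an assumption, not the author's documented procedure. The helper `split_train_val` and its 10% default are hypothetical choices.

```python
import random


def split_train_val(names, val_ratio=0.1, seed=0):
    """Randomly split image names into (train, val) lists.

    Each name lands in exactly one list. The fixed seed makes the split
    reproducible from run to run.
    """
    names = sorted(names)  # sort first so the result does not depend on input order
    rng = random.Random(seed)
    rng.shuffle(names)
    n_val = int(len(names) * val_ratio)
    return names[n_val:], names[:n_val]
```

Each returned list can be passed to `cidar2019` with its own task name (for example "train" and "val"), so that each split gets its own txt file. Sorting before shuffling matters because `glob` does not guarantee any particular file order.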

II. Modifying labelme's built-in json_to_dataset.py to batch-convert .json files

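Some models expect binary images as input. In that case, each image has to be converted into a mask using the .json file produced when it was annotated in labelme.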

1. Converting labelme JSON files into mask files

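The open-source Pytorch-UNet on GitHub requires binary images (masks) as training labels. So the first step here is to convert each image into a mask based on its JSON file and write it out.

Below is the labelme source of json_to_dataset.py, which converts a single file. It is located in anaconda3/envs/labelme/lib/python3.7/site-packages/labelme/cli: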

```python
import argparse
import base64
import json
import os
import os.path as osp

import imgviz
import PIL.Image

from labelme.logger import logger
from labelme import utils


def main():
    logger.warning(
        "This script is aimed to demonstrate how to convert the "
        "JSON file to a single image dataset."
    )
    logger.warning(
        "It won't handle multiple JSON files to generate a "
        "real-use dataset."
    )

    parser = argparse.ArgumentParser()
    parser.add_argument("json_file")
    parser.add_argument("-o", "--out", default=None)
    args = parser.parse_args()

    json_file = args.json_file

    if args.out is None:
        out_dir = osp.basename(json_file).replace(".", "_")
        out_dir = osp.join(osp.dirname(json_file), out_dir)
    else:
        out_dir = args.out
    if not osp.exists(out_dir):
        os.mkdir(out_dir)

    data = json.load(open(json_file))
    imageData = data.get("imageData")

    if not imageData:
        imagePath = os.path.join(os.path.dirname(json_file), data["imagePath"])
        with open(imagePath, "rb") as f:
            imageData = f.read()
            imageData = base64.b64encode(imageData).decode("utf-8")
    img = utils.img_b64_to_arr(imageData)

    label_name_to_value = {"_background_": 0}
    for shape in sorted(data["shapes"], key=lambda x: x["label"]):
        label_name = shape["label"]
        if label_name in label_name_to_value:
            label_value = label_name_to_value[label_name]
        else:
            label_value = len(label_name_to_value)
            label_name_to_value[label_name] = label_value
    lbl, _ = utils.shapes_to_label(
        img.shape, data["shapes"], label_name_to_value
    )

    label_names = [None] * (max(label_name_to_value.values()) + 1)
    for name, value in label_name_to_value.items():
        label_names[value] = name

    lbl_viz = imgviz.label2rgb(
        label=lbl, img=imgviz.asgray(img), label_names=label_names, loc="rb"
    )

    PIL.Image.fromarray(img).save(osp.join(out_dir, "img.png"))
    utils.lblsave(osp.join(out_dir, "label.png"), lbl)
    PIL.Image.fromarray(lbl_viz).save(osp.join(out_dir, "label_viz.png"))

    with open(osp.join(out_dir, "label_names.txt"), "w") as f:
        for lbl_name in label_names:
            f.write(lbl_name + "\n")

    logger.info("Saved to: {}".format(out_dir))


if __name__ == "__main__":
    main()
```

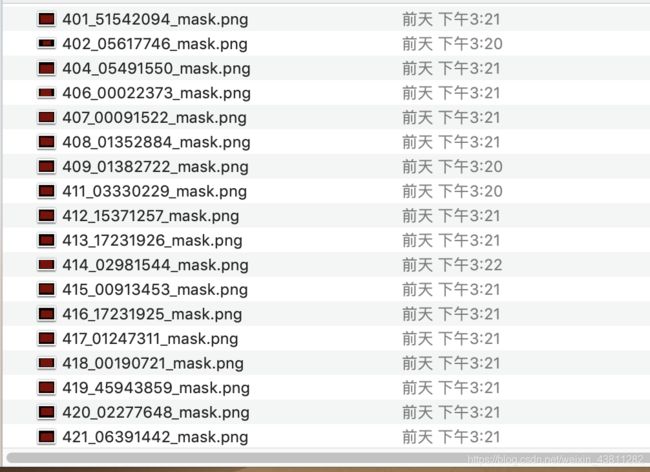
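The key step in both the original and the modified script is `utils.shapes_to_label`. It turns each annotated polygon into pixels that carry that label's integer value, with 0 left for `_background_`. The pure-Python sketch below illustrates the idea using an even-odd point-in-polygon test at each pixel centre. It is a simplified stand-in, not labelme's implementation (labelme draws the polygons with PIL), so pixels along polygon edges may come out differently. `polygon_to_mask` and `shapes_to_mask` are hypothetical names.

```python
def polygon_to_mask(height, width, polygon, value=1):
    """Rasterize one polygon into a height x width label map (list of lists).

    A pixel gets `value` when its centre lies inside the polygon (even-odd
    rule); every other pixel stays 0 (background).
    """
    mask = [[0] * width for _ in range(height)]
    n = len(polygon)
    for y in range(height):
        cy = y + 0.5
        for x in range(width):
            cx = x + 0.5
            inside = False
            j = n - 1
            for i in range(n):
                xi, yi = polygon[i]
                xj, yj = polygon[j]
                # Does a ray going right from (cx, cy) cross the edge j -> i?
                if (yi > cy) != (yj > cy):
                    x_cross = xi + (cy - yi) * (xj - xi) / (yj - yi)
                    if cx < x_cross:
                        inside = not inside
                j = i
            if inside:
                mask[y][x] = value
    return mask


def shapes_to_mask(height, width, shapes, label_name_to_value):
    """Combine labelme-style shapes; later shapes overwrite earlier ones."""
    mask = [[0] * width for _ in range(height)]
    for shape in shapes:
        value = label_name_to_value[shape["label"]]
        layer = polygon_to_mask(height, width, shape["points"], 1)
        for y in range(height):
            for x in range(width):
                if layer[y][x]:
                    mask[y][x] = value
    return mask
```

With a single label class, the resulting map only contains 0 and 1, which is the binary mask the training step needs.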

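I then modified the code. The input is a folder containing the original .jpg images and their .json annotations, and the output is a folder containing all the binary masks. The modified code is as follows: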

```python
import argparse
import base64
import json
import os
import os.path as osp
from glob import glob

from labelme.logger import logger
from labelme import utils


def get_args():
    parser = argparse.ArgumentParser()
    # Folder holding the original .jpg images and their labelme .json files
    parser.add_argument('--input', default='/Users/yi/Desktop/val_seg_dsy/')
    # Folder that receives the generated *_mask.png files
    parser.add_argument('--out', default='/Users/yi/Desktop/val_seg_dsy_mask/')
    return parser.parse_args()


def data_to_mask(args, json_files):
    for json_name in json_files:
        jsonPath = osp.join(args.input, json_name + ".json")
        print(jsonPath)
        with open(jsonPath, "r", encoding="utf-8") as f:
            json_file = json.load(f)

        # Use the image embedded in the JSON if present,
        # otherwise read the .jpg with the same name
        imageData = json_file.get("imageData")
        if not imageData:
            imagePath = osp.join(args.input, json_name + ".jpg")
            with open(imagePath, "rb") as f:
                imageData = base64.b64encode(f.read()).decode("utf-8")
        img = utils.img_b64_to_arr(imageData)

        # Map label names to integer values; background is 0.
        # Values are assigned per file, in sorted label-name order.
        label_name_to_value = {"_background_": 0}
        for shape in sorted(json_file["shapes"], key=lambda x: x["label"]):
            label_name = shape["label"]
            if label_name not in label_name_to_value:
                label_name_to_value[label_name] = len(label_name_to_value)

        # Rasterize the annotated shapes into a label map the size of the image
        lbl, _ = utils.shapes_to_label(
            img.shape, json_file["shapes"], label_name_to_value
        )

        # Save only the mask; img.png, label_viz.png and label_names.txt
        # from the original script are no longer written
        utils.lblsave(osp.join(args.out, json_name + "_mask.png"), lbl)
        logger.info("Saved to: {}".format(args.out))


def main(args):
    files = glob(osp.join(args.input, "*.json"))
    print(files)
    os.makedirs(args.out, exist_ok=True)
    # Keep only the file name without the .json extension
    files = [osp.splitext(osp.basename(i))[0] for i in files]
    data_to_mask(args, files)


if __name__ == "__main__":
    main(get_args())
```
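The modified script names each mask `<image name>_mask.png`. Before training, it is worth checking that every image actually has a mask. The sketch below assumes the images remain in the input folder as .jpg files. `pair_images_with_masks` is a hypothetical helper, and its `_mask` suffix simply mirrors the file names written by the script.

```python
import os
from glob import glob


def pair_images_with_masks(image_dir, mask_dir, mask_suffix="_mask"):
    """Match each .jpg in image_dir with <stem><mask_suffix>.png in mask_dir.

    Returns (pairs, missing): pairs is a list of (image_path, mask_path)
    tuples, and missing lists the image stems that have no mask.
    """
    pairs, missing = [], []
    for img_path in sorted(glob(os.path.join(image_dir, "*.jpg"))):
        stem = os.path.splitext(os.path.basename(img_path))[0]
        mask_path = os.path.join(mask_dir, stem + mask_suffix + ".png")
        if os.path.isfile(mask_path):
            pairs.append((img_path, mask_path))
        else:
            missing.append(stem)
    return pairs, missing
```

For example, `pairs, missing = pair_images_with_masks("/Users/yi/Desktop/val_seg_dsy/", "/Users/yi/Desktop/val_seg_dsy_mask/")`. A non-empty `missing` list points to images that have no .json annotation, because the script only produces masks for the .json files it finds.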

III. Training the model with UNet

Training is based on the open-source code on GitHub. Details of the training process will be added in a later update.