深度学习-【图像分类】学习笔记3AlexNet

文章目录

- 3.1 AlexNet网络结构详解与花分类数据集

-

- AlexNet详解

- 花分类数据集

- 3.2 使用pytorch搭建AlexNet并训练

-

- model

- train

- predict

- 代码示例

-

- model.py

- train.py

- predict.py

3.1 AlexNet网络结构详解与花分类数据集

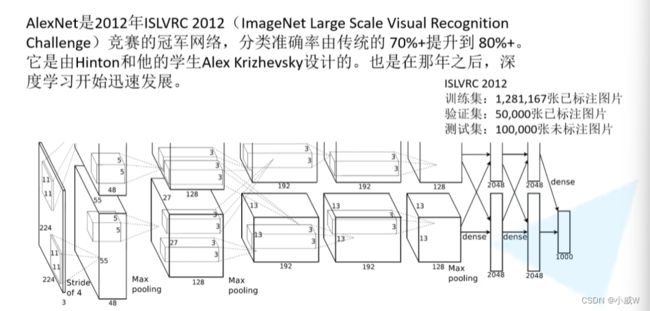

AlexNet详解

传统Sigmoid激活函数的缺点:

- 求导比较麻烦

- 深度较深时出现梯度消失

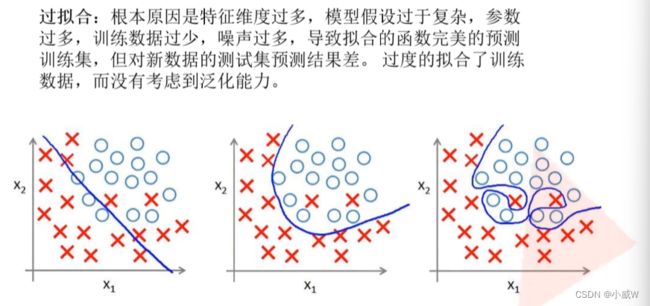

过拟合

使用Dropout的方式在网络正向传播过程中随机失活一部分神经元。

回顾:N = (W - F + 2P) / S + 1

花分类数据集

data_set文件夹中,readme.md。

https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz

3.2 使用pytorch搭建AlexNet并训练

model

nn.Sequential():

一个序列容器,用于搭建神经网络的模块被按照被传入构造器的顺序添加到nn.Sequential()容器中。

nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2)

padding可以传入两种类型,int或tuple。

如果是int=1,上下左右各补一列0。

如果是tuple=(1, 2),上下各一列0,左右各两列0。

如果想要左1,右2,上1,下2。需要nn.ZeroPad2d((1, 2, 1, 2))。参数的顺序就是左右上下。

e.g.https://pytorch.org/docs/stable/generated/torch.nn.ZeroPad2d.html?highlight=zeropad2d#torch.nn.ZeroPad2d

>>> m = nn.ZeroPad2d(2)

>>> input = torch.randn(1, 1, 3, 3)

>>> input

tensor([[[[-0.1678, -0.4418, 1.9466],

[ 0.9604, -0.4219, -0.5241],

[-0.9162, -0.5436, -0.6446]]]])

>>> m(input)

tensor([[[[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, -0.1678, -0.4418, 1.9466, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.9604, -0.4219, -0.5241, 0.0000, 0.0000],

[ 0.0000, 0.0000, -0.9162, -0.5436, -0.6446, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]])

>>> # using different paddings for different sides

>>> m = nn.ZeroPad2d((1, 1, 2, 0))

>>> m(input)

tensor([[[[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000],

[ 0.0000, -0.1678, -0.4418, 1.9466, 0.0000],

[ 0.0000, 0.9604, -0.4219, -0.5241, 0.0000],

[ 0.0000, -0.9162, -0.5436, -0.6446, 0.0000]]]])

如果根据N = (W - F + 2P) / S + 1算出来有小数,那么pytorch会自动舍去右边和下边的列。

nn.ReLU(inplace=True)

https://blog.csdn.net/HJC256ZY/article/details/106471982

不管是true 还是False 都不会改变Relu后的结果。 inplace选择是否进行覆盖运算。

利用inplace=True用输出的数据覆盖输入的数据;节省空间,此时两者共用内存。可以节省内(显)存,同时还可以省去反复申请和释放内存的时间。

nn.MaxPool2d(kernel_size=3, stride=2)

池化没有池化核个数这一参数,池化操作只影响尺寸,不影响channel。

padding默认0。

权重初始化。

目前版本的pytorch会自动进行。

x = torch.flatten(x, start_dim=1)

start_dim表示从channel开始展平,而不动batch这一维度。

回顾:Pytorch Tensor的通道排序:[batch, channel, height, width]。

train

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224), # 随机裁剪到指定大小

transforms.RandomHorizontalFlip(), # 随机水平翻转

transforms.ToTensor(), # 转化成一个tensor

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]), # 用均值和标准差归一化处理

"val": transforms.Compose([transforms.Resize((224, 224)), # cannot 224, must (224, 224) | 如果size是int,则图像较小的边缘将与该数字匹配,较大的按等比例来

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

训练集:用来学习的样本集,用于分类器参数的拟合。

验证集:用来调整分类器超参数的样本集,如在神经网络中选择隐藏层神经元的数量。

测试集:仅用于对已经训练好的分类器进行性能评估的样本集。

net.train()和net.eval()

只希望dropout在train的时候部分失活。

进入eval模式后就会关闭dropout。(也会对bn等有作用)

predict

output = torch.squeeze(model(img.to(device))).cpu()

torch.squeeze:将tensor中大小为1的维度删除

torch.argmax(x, dim),其中x为张量,dim控制比较的维度,返回最大值的索引。

torch.argmax方法详解https://blog.csdn.net/wuyalan1994/article/details/125920290

代码示例

model.py

import torch.nn as nn

import torch

class AlexNet(nn.Module):

def __init__(self, num_classes=1000, init_weights=False):

super(AlexNet, self).__init__()

# 提取特征

self.features = nn.Sequential(

nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2), # input[3, 224, 224] output[48, 55, 55] 55=(224-11+4)/4+1取整

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[48, 27, 27] 27=(55-3+0)/2+1 padding默认0

nn.Conv2d(48, 128, kernel_size=5, padding=2), # output[128, 27, 27] 27=(27-5+4)/1+1 stride默认1

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 13, 13] 13=(27-3)/2+1

nn.Conv2d(128, 192, kernel_size=3, padding=1), # output[192, 13, 13] 13=(13-3+2)/1+1

nn.ReLU(inplace=True),

nn.Conv2d(192, 192, kernel_size=3, padding=1), # output[192, 13, 13] 13=(13-3+2)/1+1

nn.ReLU(inplace=True),

nn.Conv2d(192, 128, kernel_size=3, padding=1), # output[128, 13, 13] 13=(13-3+2)/1+1

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 6, 6] 6=(13-3)/2+1

)

# 全连接层 分类

self.classifier = nn.Sequential(

nn.Dropout(p=0.5),

nn.Linear(128 * 6 * 6, 2048),

nn.ReLU(inplace=True),

nn.Dropout(p=0.5),

nn.Linear(2048, 2048),

nn.ReLU(inplace=True),

nn.Linear(2048, num_classes),

)

if init_weights:

self._initialize_weights()

def forward(self, x):

x = self.features(x)

x = torch.flatten(x, start_dim=1)

x = self.classifier(x)

return x

def _initialize_weights(self): # 初始化网络权重| 当前版本不需要此操作,pytorch会自动进行这种权重初始化

for m in self.modules(): # Returns an iterator over all modules in the network

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu') # 何凯明初始化

if m.bias is not None:

nn.init.constant_(m.bias, 0) # 将偏置都设置为0

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01) # 正态分布

nn.init.constant_(m.bias, 0)

train.py

import os

import sys

import json

import torch

import torch.nn as nn

from torchvision import transforms, datasets, utils

import matplotlib.pyplot as plt

import numpy as np

import torch.optim as optim

from tqdm import tqdm

from model import AlexNet

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") # 指定训练过程中使用的设备

print("using {} device.".format(device))

# 定义数据预处理函数

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224), # 随机裁剪到指定大小

transforms.RandomHorizontalFlip(), # 随机水平翻转

transforms.ToTensor(), # 转化成一个tensor

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]), # 用均值和标准差归一化处理

"val": transforms.Compose([transforms.Resize((224, 224)), # cannot 224, must (224, 224) | 如果size是int,则图像较小的边缘将与该数字匹配,较大的按等比例来

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

data_root = os.path.abspath(os.path.join(os.getcwd(), "../..")) # get data root path

image_path = os.path.join(data_root, "data_set", "flower_data") # flower data set path

assert os.path.exists(image_path), "{} path does not exist.".format(image_path)

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),

transform=data_transform["train"])

train_num = len(train_dataset)

# {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

flower_list = train_dataset.class_to_idx # 获取分类的名称所对应的索引

cla_dict = dict((val, key) for key, val in flower_list.items())

# write dict into json file

json_str = json.dumps(cla_dict, indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

batch_size = 32

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size, shuffle=True,

num_workers=nw)

validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),

transform=data_transform["val"])

val_num = len(validate_dataset)

validate_loader = torch.utils.data.DataLoader(validate_dataset,

batch_size=4, shuffle=False,

num_workers=nw)

print("using {} images for training, {} images for validation.".format(train_num,

val_num))

# test_data_iter = iter(validate_loader)

# test_image, test_label = test_data_iter.next()

#

# def imshow(img):

# img = img / 2 + 0.5 # unnormalize 反归一化

# npimg = img.numpy()

# plt.imshow(np.transpose(npimg, (1, 2, 0)))

# plt.show()

#

# print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))

# imshow(utils.make_grid(test_image))

net = AlexNet(num_classes=5, init_weights=True)

net.to(device)

loss_function = nn.CrossEntropyLoss()

# pata = list(net.parameters()) # 查看模型的参数

optimizer = optim.Adam(net.parameters(), lr=0.0002)

epochs = 10

save_path = './AlexNet.pth'

best_acc = 0.0

train_steps = len(train_loader)

for epoch in range(epochs):

# train

net.train()

running_loss = 0.0

train_bar = tqdm(train_loader, file=sys.stdout)

for step, data in enumerate(train_bar):

images, labels = data

optimizer.zero_grad()

outputs = net(images.to(device))

loss = loss_function(outputs, labels.to(device))

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.item()

train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,

epochs,

loss)

# validate

net.eval()

acc = 0.0 # accumulate accurate number / epoch

with torch.no_grad():

val_bar = tqdm(validate_loader, file=sys.stdout)

for val_data in val_bar:

val_images, val_labels = val_data

outputs = net(val_images.to(device))

predict_y = torch.max(outputs, dim=1)[1]

acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

val_accurate = acc / val_num

print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %

(epoch + 1, running_loss / train_steps, val_accurate))

if val_accurate > best_acc:

best_acc = val_accurate

torch.save(net.state_dict(), save_path) # 保存模型参数

print('Finished Training')

if __name__ == '__main__':

main()

predict.py

import os

import json

import torch

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

from model import AlexNet

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

data_transform = transforms.Compose(

[transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# load image

img_path = "../tulip.jpg"

assert os.path.exists(img_path), "file: '{}' dose not exist.".format(img_path)

img = Image.open(img_path)

plt.imshow(img)

# [N, C, H, W]

img = data_transform(img)

# expand batch dimension

img = torch.unsqueeze(img, dim=0)

# read class_indict

json_path = './class_indices.json'

assert os.path.exists(json_path), "file: '{}' dose not exist.".format(json_path)

with open(json_path, "r") as f:

class_indict = json.load(f)

# create model

model = AlexNet(num_classes=5).to(device)

# load model weights

weights_path = "./AlexNet.pth"

assert os.path.exists(weights_path), "file: '{}' dose not exist.".format(weights_path)

model.load_state_dict(torch.load(weights_path))

model.eval()

with torch.no_grad():

# predict class

output = torch.squeeze(model(img.to(device))).cpu() # torch.squeeze:将tensor中大小为1的维度删除

predict = torch.softmax(output, dim=0)

predict_cla = torch.argmax(predict).numpy()

print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],

predict[predict_cla].numpy())

plt.title(print_res)

for i in range(len(predict)):

print("class: {:10} prob: {:.3}".format(class_indict[str(i)],

predict[i].numpy()))

plt.show()

if __name__ == '__main__':

main()