GAN (Generative Adversarial Network): a PyTorch implementation

Generative adversarial networks: principles and implementation

- 1. What is a generative adversarial network (GAN)?

- 2. Mathematical description

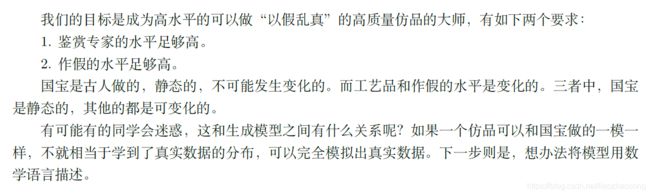

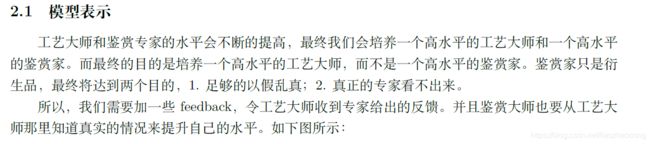

1. What is a generative adversarial network (GAN)?

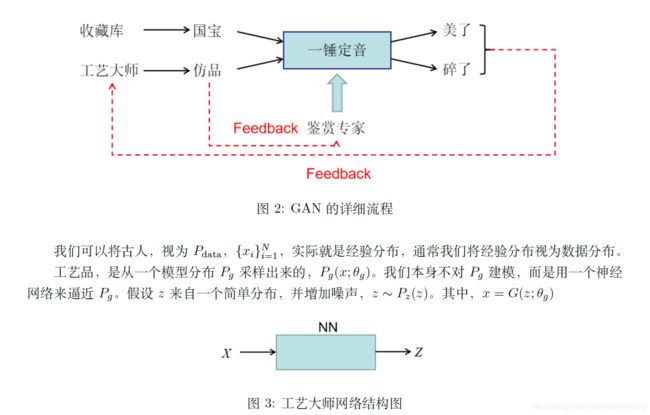

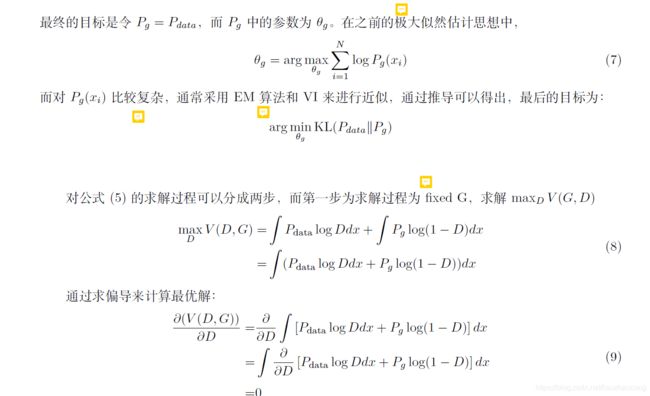

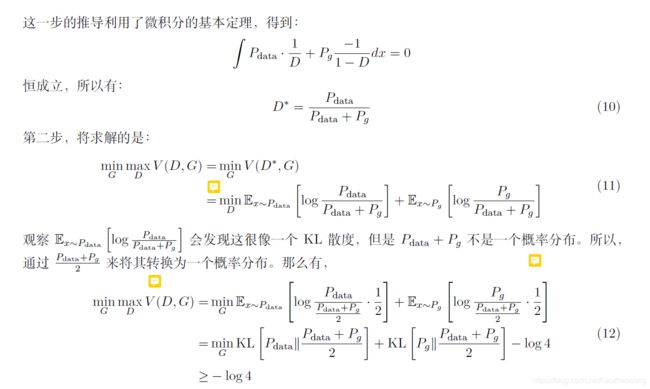

2. Mathematical description
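The formulas for this section appear only as images in the original post. For a text reference, here is the standard two-player minimax objective from Goodfellow et al. (arXiv:1406.2661, the paper cited in the code comments). The symbols are as follows:

- $p_{\text{data}}$ is the data distribution.
- $p_z$ is the noise prior. The script samples it with `torch.randn`, so it is a standard normal there.
- $p_g$ is the distribution of the generator's samples $G(z)$.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator, the paper shows that the optimal discriminator is the following. When $p_g = p_{\text{data}}$, it outputs $\tfrac{1}{2}$ everywhere.

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

In the code, the discriminator step minimizes $-\log D(x) - \log\big(1 - D(G(z))\big)$, which is the sum of the two BCE terms and is equivalent to maximizing $V$ over $D$. The generator step minimizes the non-saturating surrogate $-\log D(G(z))$ instead of $\log\big(1 - D(G(z))\big)$.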

import os
import torch
import torchvision
import torch.nn as nn
from torchvision import transforms
from torchvision.utils import save_image

# Device configuration
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Hyper-parameters
latent_size = 64
hidden_size = 256
image_size = 784    # 28 x 28 MNIST images, flattened
num_epochs = 200
batch_size = 100
sample_dir = 'samples'

# Create the sample directory if it does not exist
if not os.path.exists(sample_dir):
    os.makedirs(sample_dir)

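# Image processing: convert to a tensor in [0, 1], then normalize to [-1, 1]
# to match the range of the generator's Tanh output.
# MNIST images are grayscale (1 channel), so mean and std each hold one value;
# passing 3-channel (RGB) values here raises a shape error in recent torchvision versions.
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=(0.5,), std=(0.5,))])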

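# MNIST dataset
# root='../../data/' is resolved relative to the current working directory;
# with download=True, MNIST is downloaded there if it is not already present.
mnist = torchvision.datasets.MNIST(root='../../data/',
                                   train=True,
                                   transform=transform,
                                   download=True)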

# Data loader
data_loader = torch.utils.data.DataLoader(dataset=mnist,
                                          batch_size=batch_size,
                                          shuffle=True)

# Discriminator
D = nn.Sequential(
    nn.Linear(image_size, hidden_size),
    nn.LeakyReLU(0.2),
    nn.Linear(hidden_size, hidden_size),
    nn.LeakyReLU(0.2),
    nn.Linear(hidden_size, 1),
    nn.Sigmoid())

# Generator: a fully connected network that maps a latent vector z to a
# flattened 28 x 28 image with values in [-1, 1] (Tanh output)
G = nn.Sequential(
    nn.Linear(latent_size, hidden_size),
    nn.ReLU(),
    nn.Linear(hidden_size, hidden_size),
    nn.ReLU(),
    nn.Linear(hidden_size, image_size),
    nn.Tanh())

# Move both networks to the selected device
D = D.to(device)
G = G.to(device)

# Binary cross-entropy loss and one Adam optimizer per network
criterion = nn.BCELoss()
d_optimizer = torch.optim.Adam(D.parameters(), lr=0.0002)
g_optimizer = torch.optim.Adam(G.parameters(), lr=0.0002)

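def denorm(x):
    # Map values from [-1, 1] back to [0, 1] for saving images;
    # clamp restricts the result to the interval [0, 1]
    out = (x + 1) / 2
    return out.clamp(0, 1)

def reset_grad():
    # Clear the gradients of both networks before each backward pass
    d_optimizer.zero_grad()
    g_optimizer.zero_grad()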

# Start training

total_step = len(data_loader)

for epoch in range(num_epochs):

for i, (images, _) in enumerate(data_loader):

images = images.reshape(batch_size, -1).to(device)

# Create the labels which are later 隐式 used as input for the BCE loss

real_labels = torch.ones(batch_size, 1).to(device)

fake_labels = torch.zeros(batch_size, 1).to(device)

# ================================================================== #

# Train the discriminator #

# ================================================================== #

# Compute BCE_Loss using real images计算真实图像损失 where BCE_Loss(x, y): - y * log(D(x)) - (1-y) * log(1 - D(x))

# Second term of the loss is always zero since real_labels == 1

outputs = D(images)

d_loss_real = criterion(outputs, real_labels)

real_score = outputs

# Compute BCELoss using fake images计算假图像损失

# First term of the loss is always zero since fake_labels == 0

z = torch.randn(batch_size, latent_size).to(device)

fake_images = G(z)

outputs = D(fake_images)

d_loss_fake = criterion(outputs, fake_labels)

fake_score = outputs

# Backprop and optimize 判别网络

d_loss = d_loss_real + d_loss_fake

reset_grad()

d_loss.backward()

d_optimizer.step()

# ================================================================== #

# Train the generator #

# ================================================================== #

# Compute loss with fake images

z = torch.randn(batch_size, latent_size).to(device)

fake_images = G(z)

outputs = D(fake_images)

# We train G to maximize log(D(G(z)) 代替instead of minimizing log(1-D(G(z)))

# For the reason, see the last paragraph of section 3. https://arxiv.org/pdf/1406.2661.pdf

g_loss = criterion(outputs, real_labels)

# Backprop and optimize 生成网络

reset_grad()

g_loss.backward()

g_optimizer.step()

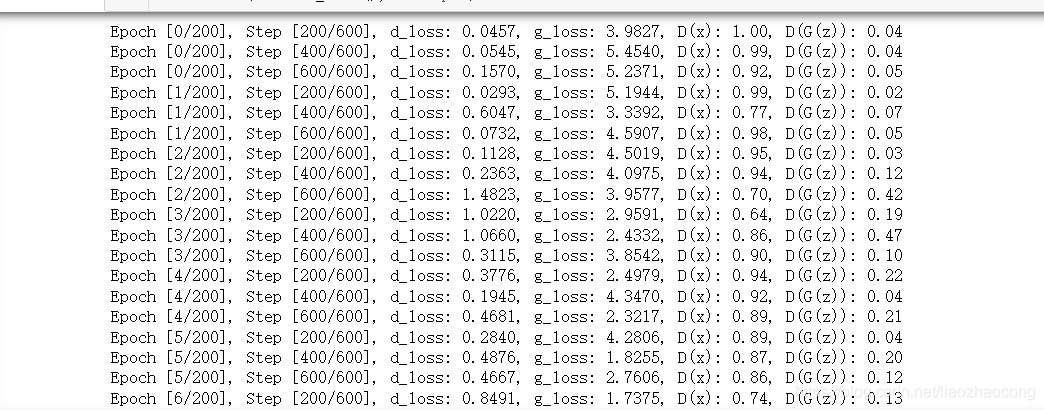

if (i+1) % 200 == 0:

print('Epoch [{}/{}], Step [{}/{}], d_loss: {:.4f}, g_loss: {:.4f}, D(x): {:.2f}, D(G(z)): {:.2f}'

.format(epoch, num_epochs, i+1, total_step, d_loss.item(), g_loss.item(),

real_score.mean().item(), fake_score.mean().item()))

# Save real images

if (epoch+1) == 1:

images = images.reshape(images.size(0), 1, 28, 28)

save_image(denorm(images), os.path.join(sample_dir, 'real_images.png'))

# Save sampled images

fake_images = fake_images.reshape(fake_images.size(0), 1, 28, 28)

save_image(denorm(fake_images), os.path.join(sample_dir, 'fake_images-{}.png'.format(epoch+1)))

# Save the model checkpoints

torch.save(G.state_dict(), 'G.ckpt')

torch.save(D.state_dict(), 'D.ckpt')
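The generator step trains G with `real_labels`. This means it minimizes -log D(G(z)) rather than log(1 - D(G(z))).

The pure-Python sketch below shows why that matters. It uses only the standard library, and its function names are illustrative rather than part of the script. It compares the gradients of the two objectives with respect to the discriminator's pre-sigmoid output `a`, where D(G(z)) = sigmoid(a). It also checks the closed-form gradients against a finite difference.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def saturating_loss(a):
    # Original minimax generator objective: minimize log(1 - D(G(z)))
    return math.log(1.0 - sigmoid(a))

def non_saturating_loss(a):
    # Objective used by the script: minimize -log D(G(z)), i.e. BCE with target 1
    return -math.log(sigmoid(a))

def grad_saturating(a):
    # d/da log(1 - sigmoid(a)) = -sigmoid(a)
    return -sigmoid(a)

def grad_non_saturating(a):
    # d/da [-log sigmoid(a)] = -(1 - sigmoid(a))
    return -(1.0 - sigmoid(a))

def numeric_grad(f, a, h=1e-6):
    # Central finite difference, used to check the closed forms above
    return (f(a + h) - f(a - h)) / (2 * h)

if __name__ == "__main__":
    # Early in training, D easily rejects fakes, so D(G(z)) = sigmoid(a) is near 0
    a = math.log(0.01 / 0.99)  # logit chosen so that sigmoid(a) = 0.01
    print(f"D(G(z)) = {sigmoid(a):.2f}")
    print(f"saturating gradient:     {grad_saturating(a):.4f}")
    print(f"non-saturating gradient: {grad_non_saturating(a):.4f}")
```

With D(G(z)) = 0.01, the saturating objective gives a gradient of magnitude about 0.01, while the non-saturating one gives about 0.99. This is the weak early-training gradient that the paper's Section 3 remark refers to.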

The results are as follows:

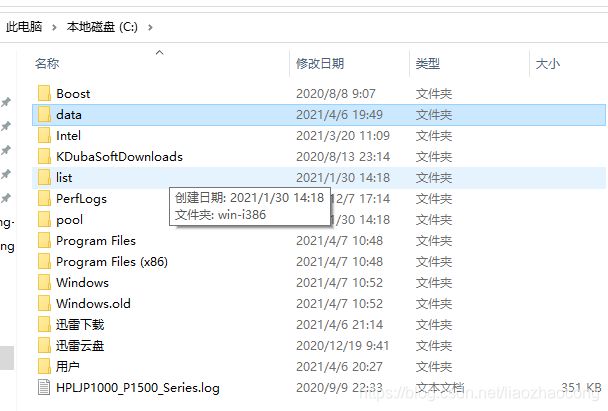
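Each log line reports `d_loss`, `g_loss`, `D(x)` and `D(G(z))`. When reading such logs, it helps to know what these values would be at the theoretical optimum. There, p_g = p_data and the discriminator outputs 1/2 everywhere.

The sketch below computes them with plain Python under a few assumptions:

- The helper names `bce` and `logged_losses` are hypothetical.
- Every sample in a batch is assumed to get the same D output.
- In the actual script, `g_loss` is computed on a fresh batch of fakes after the discriminator update, so the logged numbers will not match these exactly.

```python
import math

def bce(p, y):
    # Binary cross-entropy for one prediction p in (0, 1) and target y in {0, 1},
    # matching the formula nn.BCELoss uses: -(y * log(p) + (1 - y) * log(1 - p))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def logged_losses(d_real, d_fake):
    """Losses the training script would log for the given discriminator outputs.

    d_real is D(x) on a real image and d_fake is D(G(z)) on a generated image.
    Assumes all samples in the batch share these outputs, so the batch mean
    equals the single-sample value.
    """
    d_loss = bce(d_real, 1) + bce(d_fake, 0)  # d_loss_real + d_loss_fake
    g_loss = bce(d_fake, 1)                   # generator is trained with real_labels
    return d_loss, g_loss

if __name__ == "__main__":
    d_loss, g_loss = logged_losses(0.5, 0.5)
    print(f"d_loss = {d_loss:.4f} (2 ln 2), g_loss = {g_loss:.4f} (ln 2)")
```

At D(x) = D(G(z)) = 0.5, this gives d_loss = 2 ln 2 ≈ 1.386 and g_loss = ln 2 ≈ 0.693. In practice, the logged values typically fluctuate rather than settle exactly there.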

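Note: the MNIST data must be available under root='../../data/', which is resolved relative to the current working directory. In the author's setup, this meant placing the data folder on drive C. With download=True, torchvision downloads the dataset there automatically if it is missing.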
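The note hinges on where the relative root ends up. The short standard-library sketch below prints the absolute path that '../../data/' resolves to from the current working directory.

The `MNIST/raw` subfolder is an assumption about how recent torchvision versions lay out the downloaded files. Check it against the version you have installed.

```python
import os

# Same relative root as the training script
root = '../../data/'

# Relative paths are resolved against the current working directory,
# not against the location of the script file
resolved = os.path.abspath(root)
print("MNIST root resolves to:", resolved)

# Assumption: recent torchvision versions keep the raw MNIST files in <root>/MNIST/raw
raw_dir = os.path.join(resolved, 'MNIST', 'raw')
print("Raw files expected in:", raw_dir, "| exists:", os.path.isdir(raw_dir))
```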

6. References

1. 白板推导系列 (Whiteboard Derivation Series), Part 31: Generative Adversarial Networks

2. codingdict