Reproduction Notes 1: MECT: Multi-Metadata Embedding based Cross-Transformer for Chinese Named Entity Recognition

Paper: https://arxiv.org/pdf/2104.07204.pdf

Code: https://github.com/CoderMusou/MECT4CNER

The README on GitHub describes the reproduction steps in some detail. These notes record how the reproduction actually went.

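Dataset downloads:

Chinese character decomposition dictionary: kfcd/chaizi on GitHub (漢語拆字字典)

Weibo and MSRA: OYE93/Chinese-NLP-Corpus on GitHub (Collections of Chinese NLP corpus)

After downloading, put the datasets in a newly created data folder, as the README requires.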

Configuration:

Install the GPU build of PyTorch (see the "Start Locally" page on the PyTorch website).

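First install CUDA; this project requires CUDA 10.1. Then go to the PyTorch website, https://pytorch.org/, and open the "previous version" link further down the page to find the PyTorch build that matches your CUDA version. (I had previously installed CUDA 10.0. For installing Anaconda and CUDA, see the CSDN post "Windows 10 安装 CUDA Toolkit 10.1" by Yongqiang Cheng.)

Setting up CUDA 10.1 in a new virtual environment does not affect the CUDA used by other environments (see the CSDN post "Windows下深度学习环境CUDA10.1和CUDA10.0共存" by cskywit).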

The command I used was: pip install torch==1.5.1+cu101 torchvision==0.6.1+cu101 -f https://download.pytorch.org/whl/torch_stable.html

This produces two download URLs; download the wheels from the URLs given.
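Once the wheels are installed, it helps to confirm that the environment really picked up the CUDA build. The following is a minimal sketch, not part of the MECT4CNER repository; the helper name describe_torch_install is made up for illustration. It only uses torch.__version__, torch.version.cuda, and torch.cuda.is_available().

```python
import importlib.util


def describe_torch_install():
    """Report the installed torch version and whether CUDA is usable (hypothetical helper)."""
    if importlib.util.find_spec("torch") is None:
        # torch is not installed in the active environment
        return {"installed": False, "version": None,
                "cuda_build": None, "cuda_available": False}

    import torch

    return {
        "installed": True,
        "version": torch.__version__,       # version string of the installed wheel
        "cuda_build": torch.version.cuda,   # CUDA version the wheel was built against
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in describe_torch_install().items():
        print(f"{key}: {value}")
```

If cuda_available comes back False while version shows a +cu101 build, the usual suspects are the GPU driver or an active environment other than the one you installed into.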

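Modifications

1. Remember to change the paths in paths.py and in the files under Modules to your own!

2. Set up the environment as closely as possible to the requirements in the README.

Beyond those, one more package needs to be installed (pytz).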

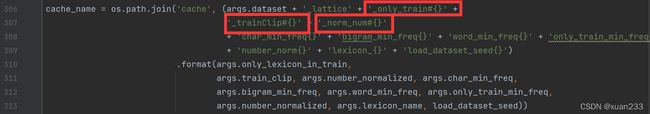

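3. In four places, change : to # (see the CSDN post 【报错】"OSError: [Errno 22] Invalid argument: 'cache\\resume_lattice_only_tra" by 阿芒Aris). The colon ends up in the cache file name, and Windows does not allow : in file names.

Otherwise you get this error: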

    Traceback (most recent call last):
      File "main.py", line 326, in <module>
        only_train_min_freq=args.only_train_min_freq)
      File "D:\Anaconda\envs\MECT4CNER\lib\site-packages\fastNLP\core\utils.py", line 160, in wrapper
        with open(cache_filepath, 'wb') as f:
    OSError: [Errno 22] Invalid argument: 'cache\\weibo_lattice_only_train#False_trainClip#True_norm_num:0char_min_freq1bigram_min_freq1word_min_freq1only_train_min_freqTruenumber_norm0lexicon_yjload_dataset_seed100'
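The cache name in the traceback above still contains a colon (norm_num:0), which is what Windows rejects. The fix in these notes is to edit the source by hand. As a rough sketch of the same idea, the hypothetical helper sanitize_cache_name below replaces every character that Windows forbids in a file name. The helper and the shortened example name are illustrative assumptions, not code from MECT4CNER or fastNLP.

```python
import os
import tempfile

# Characters that Windows does not allow in a file name.
WINDOWS_FORBIDDEN = '<>:"/\\|?*'


def sanitize_cache_name(name, replacement="#"):
    """Replace characters Windows forbids in a file name (hypothetical helper)."""
    return "".join(replacement if ch in WINDOWS_FORBIDDEN else ch for ch in name)


# A shortened version of the cache name from the traceback (file name only, no directory).
raw_name = "weibo_lattice_only_train#False_trainClip#True_norm_num:0char_min_freq1"
safe_name = sanitize_cache_name(raw_name)

with tempfile.TemporaryDirectory() as cache_dir:
    cache_path = os.path.join(cache_dir, safe_name)
    # With the colon replaced, creating the file works on Windows as well.
    with open(cache_path, "wb") as f:
        f.write(b"cached")
    created = os.path.exists(cache_path)

print(safe_name)
print(created)
```

Apply the helper to the file name only, not to a full path, since it also replaces path separators and the colon after a drive letter.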

4. In \MECT4CNER\Modules\CNNRadicalLevelEmbedding.py, inside the char2radical function, change c_info[3] to c_info[0] in the statement "return list(c_info[3])" (line 26).

Otherwise you get "IndexError: list index out of range".

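5. Error: UnicodeDecodeError: 'gbk' codec can't decode byte 0x80 in position 658: illegal multibyte

On the line that raises the error, add , encoding='utf-8' to the file-opening call. On Chinese Windows, Python falls back to gbk when no encoding is given. (See the CSDN post "解决Python报错UnicodeDecodeError: 'gbk' codec can't decode byte 0x80 in position 658: illegal multibyte" by 淡竹云开.)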
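A minimal illustration of that fix: the sketch below writes a small UTF-8 file and reads it back with the encoding passed explicitly. The file name sample.txt and its contents are placeholders, and the assumption that the project's data files are UTF-8 comes from the fix working, not from the repository documentation.

```python
import os
import tempfile

text = "漢語拆字字典\n"

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "sample.txt")  # hypothetical file name

    # Write the sample as UTF-8, which is how the data files are assumed to be encoded.
    with open(path, "w", encoding="utf-8") as f:
        f.write(text)

    # Passing encoding explicitly avoids the locale default (gbk on Chinese Windows).
    with open(path, "r", encoding="utf-8") as f:
        loaded = f.read()

print(loaded == text)
```

Without the encoding argument, the same read depends on the machine's locale, which is why the error shows up on some computers and not on others.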

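6. After lowering batch_size to 1 I hit an error; changing the batch size to 2 resolved it. The value that works may differ from machine to machine.

The cause I found (see "ValueError: batch_size should be a positive integer value, but got batch_size=0", Issue #120 in the cleinc/bts repository on GitHub):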

In bts_main.py you'll notice a line:

args.batch_size = int(args.batch_size / ngpus_per_node)

My guess is that your batch_size is smaller than ngpus_per_node. Since int() rounds to the floor, your batch_size = 0.

For example:

batch_size = 3

ngpus_per_node = 4

int(3/4) = 0

Try increasing your batch_size and maybe using multiples of your ngpus_per_node.
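The arithmetic in that quoted answer can be reproduced in a few lines. This sketch mirrors the quoted line from bts_main.py, which belongs to a different project; whether MECT4CNER performs the same division is not confirmed here. The function validated_per_gpu_batch_size is a hypothetical guard added for illustration.

```python
def per_gpu_batch_size(batch_size, ngpus_per_node):
    """Mirror the quoted line: split the global batch across GPUs, truncating."""
    return int(batch_size / ngpus_per_node)


def validated_per_gpu_batch_size(batch_size, ngpus_per_node):
    """Hypothetical guard that fails early with a clearer message."""
    per_gpu = per_gpu_batch_size(batch_size, ngpus_per_node)
    if per_gpu < 1:
        raise ValueError(
            f"batch_size={batch_size} is smaller than ngpus_per_node={ngpus_per_node}; "
            "each GPU would get a batch of 0"
        )
    return per_gpu


print(per_gpu_batch_size(3, 4))            # 0, the case from the quoted example
print(validated_per_gpu_batch_size(8, 4))  # 2
```

Choosing a batch size that is a multiple of the GPU count, as the quoted answer suggests, keeps the per-GPU value a positive whole number.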

This reproduction drew on the CSDN post "MECT: Multi-Metadata Embedding based Cross-Transformer for Chinese Named Entity Recognition论文实验复现" by 啾星的小猫猫.