机器学习好伙伴之scikit-learn的使用——datasets获得数据集

机器学习好伙伴之scikit-learn的使用——datasets获得数据集

- 载入sklearn中自带的datesets

- 利用sklearn的函数生成数据

- 应用示例

-

- 利用sklearn中自带的datesets进行训练

- 利用sklearn中生成的数据进行训练

在上一节课我们说到如何进行训练集和测试集的划分,在划分之前,还有一点很重要的是载入合适的数据集。

![]()

scikit-learn自带许多实用的数据集,用于测试模型与训练,非常实用

载入sklearn中自带的datesets

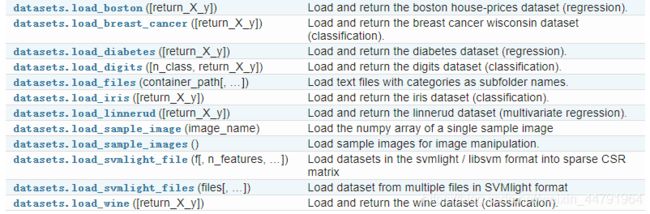

利用如下函数可以载入sklearn中常用的datesets

from sklearn import datasets

在datasets中,常用的数据名称、调用方式、适用算法和数据规模如下:

除去上面的几种数据集外,我们可以前往sklearn的官网查看其它实用的数据集:

https://scikit-learn.org/stable/modules/classes.html#module-sklearn.datasets

在python代码中,可以通过如下的方法获取数据集。

loaded_data = datasets.load_boston()

# 获取特征与对应的目标值

data_X = loaded_data.data

data_Y = loaded_data.target

利用sklearn的函数生成数据

除去sklearn中自带的许多数据集外,sklearn还可以生成数据。

常用的生成方法如下:

本文以生成回归数据为例,讲解如何利用sklearn生成可以用于训练的数据。

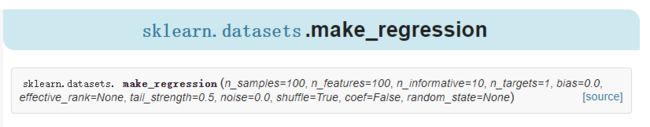

打开datasets.make_regression的页面可以看到datasets.make_regression详细的参数。

常用参数如下:

n_samples:样本数

n_features:每个样本的特征数

n_informative :有效信息,信息特征的数量,即用于构建用于生成输出的线性模型的特征的数量。

n_redundant:冗余信息,informative特征的随机线性组合

n_repeated :重复信息,随机提取n_informative和n_redundant 特征

n_targets:回归目标的输出

bias:基本线性模型中的偏差项

noise:生成结果的噪声

函数调用方式为:

X, y = datasets.make_regression(n_samples=100, n_features=1, n_targets=1, noise=10)

应用示例

利用sklearn中自带的datesets进行训练

实现代码为:

# 载入数据集

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

import numpy as np

# 获取自带的数据库

loaded_data = datasets.load_boston()

data_X = loaded_data.data

data_Y = loaded_data.target

# 对自带的数据库进行划分

X_train, X_test, y_train, y_test = train_test_split(data_X, data_Y, test_size=0.3)

# 进行线性训练

model = LinearRegression()

model.fit(X_train, y_train)

# 预测,比较结果

print(model.predict(X_test)-y_test)

实验结果为,预测结果与实际结果有一定的偏差:

[ -4.32083993 1.21779322 0.92588852 6.63213006 3.64206762

0.53339736 0.58771424 -2.57979413 1.24509333 6.5652264

6.96686417 4.44253819 -0.80383831 -2.65691237 -6.65582548

2.51880244 -20.26150608 -5.07295788 3.14756883 1.79616885

1.00446839 0.95839837 6.98646141 0.67669195 2.27505619

-5.2557447 5.31508681 -1.78250561 1.52031694 0.95947612

0.36195507 -0.9259978 -1.36718779 4.27230508 0.83577059

-2.70704876 2.5626411 -0.96554518 -2.08485853 4.52656586

-0.56106616 -0.82236156 0.32942509 2.87786991 -1.57245813

3.56041759 -0.89990552 -0.70613626 0.56616644 -0.44223704

-2.96971507 1.59152775 -27.33888072 2.85800776 0.62582613

1.64704949 -10.38681447 -0.20579836 -5.12782407 -1.95571152

1.46984184 6.26040771 3.61214832 -5.15636024 3.57058735

0.69584653 0.04508708 2.39937335 -3.4427129 -7.23339761

-13.25181432 5.32245934 4.19778417 -3.38290778 1.17488126

2.88578286 -2.46635697 2.9071728 1.99747009 3.62120858

2.80418927 4.96581854 6.89986873 5.25073363 -2.66358202

6.16654885 4.70551011 -2.50297777 0.29701667 2.30451103

-18.69710415 2.44340239 5.0921586 2.07129662 -9.12512144

-7.23221737 3.06076078 3.2289587 0.57908266 -6.66312125

3.36308189 0.15555137 1.10987299 1.20843313 -3.08905426

4.62481519 -2.97478583 3.44949672 0.62329198 2.05383104

0.87283797 1.89363157 -9.48663782 -0.04938579 1.13795316

-1.93810385 2.16260112 -4.61696882 1.37781137 -1.77504111

0.39900168 2.95068343 -0.05496372 -1.04251617 6.67036798

-11.42967859 -1.03175378 0.95026778 6.29436769 -8.19767754

-1.61315791 -1.19523958 -2.27840324 -2.81928986 -2.64508748

1.28006411 -30.939282 0.9696373 -0.87861839 15.39549095

0.93702141 -0.52928773 0.97060633 -18.11069675 1.94384285

6.0846876 -0.32219858 1.6885332 1.72306516 0.30902564

1.1483849 1.69583331]

利用sklearn中生成的数据进行训练

实现代码为:

# 载入数据集

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

import numpy as np

# 利用函数获取测试内容

data_X, data_Y = datasets.make_regression(n_samples=500, n_features=4, n_targets=1, noise=10)

# 对内容进行划分

X_train, X_test, y_train, y_test = train_test_split(data_X, data_Y, test_size=0.3)

# 进行线性训练

model = LinearRegression()

model.fit(X_train, y_train)

# 预测,比较结果

print(model.predict(X_test)-y_test)

实验结果为,预测结果与实际结果有一定的偏差:

[ 18.02467269 10.65427027 3.6119628 -2.31792705 17.12008342

4.78838342 15.18861015 -26.5113201 1.89947597 7.82148113

-10.10536587 -5.29198345 5.77718464 1.03981516 10.97207192

10.16088225 -4.44089219 -11.55399703 -9.03323276 0.90299202

-7.89203736 6.11155059 -22.18435471 8.71301653 3.36389708

-10.76470998 -24.07692007 -4.46077878 1.03771658 -5.39892106

5.72751355 4.95228045 6.6005534 0.1526285 -7.07137473

8.9840744 5.07088902 -3.62815639 1.86320243 4.42907918

8.04422199 -7.58088343 0.3912087 5.27823853 -8.03661081

-4.57543018 -10.0447772 -1.7485529 9.56039568 2.05514496

0.4758245 -8.4528048 -1.34800374 11.7522642 0.38639048

0.76524824 5.63207193 -10.98782417 27.91112702 -5.80363586

-12.70346101 9.14590667 -1.46200611 9.97969014 9.96405817

1.38061919 -5.35214404 -4.41259465 12.11166841 12.97697531

2.8912008 8.62946742 -0.04821456 -6.50127461 11.24254809

4.68218756 -33.56564161 -9.57347681 -9.17343223 11.77168043

3.35652864 -8.45436188 18.56012243 -3.14858898 9.59542618

2.42877687 -5.48526428 17.01071347 -2.14069015 4.39177413

5.57498895 -2.38403742 -2.43446519 14.36727563 -0.88722033

10.04797528 -9.33955844 -3.68504439 6.69969735 -29.4115413

-1.21425655 1.51859444 6.08471639 14.09008583 16.08143523

13.34213548 -4.66548477 -4.12516003 -2.4253649 -3.39698823

-2.29500693 -0.12027219 -0.23804341 -17.72229705 2.10578861

-3.99810849 -14.97580105 1.05624791 5.37911996 -3.46126258

-0.32871116 -4.99624719 10.59211956 14.24566851 5.71547039

4.52283305 -3.10016563 -15.05354972 -5.74515295 12.51473663

4.63068615 -3.20664498 -5.42049079 9.37493256 2.2296205

1.30180444 6.47528801 -9.26910871 5.36695885 -5.05384241

-15.74364437 -13.61850084 -22.27715077 12.15521687 -1.81656035

-3.71082241 -1.73377178 -8.61096813 6.1261822 4.22906206]