Deep Learning Week P3 ---- Weather Recognition


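- Preface
- Problems Encountered and Solutions
- I. Import the Required Packages
- II. Dataset and Operations
  - 1. Dataset
  - 2. Split the Dataset
  - 3. Dataset Transforms
- III. Build the CNN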

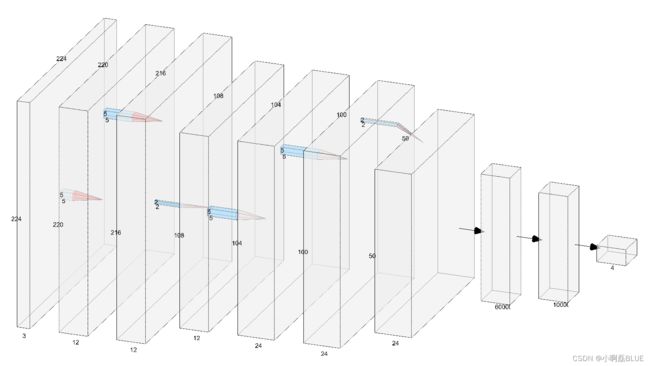

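  - 1. Network Architecture Diagram
  - 2. Network Code
- IV. Training and Test Functions
  - 1. Hyperparameter Settings
  - 2. Training Log Function
  - 3. Training Function
  - 4. Test Function
- V. Training Loop and Result Visualization Functions
  - 1. Training Loop
  - 2. Result Visualization
- VI. Tuning Parameters to Improve Test Accuracy
- Summary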

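Preface

- This post is a study-log entry for the [365-Day Deep Learning Training Camp].

- **Reference article:** [Deep Learning 100 Examples: Convolutional Neural Network (CNN) Weather Recognition | Week 3]

- **Author:** [K同学啊]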

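Problems Encountered and Solutions

- I found that the original author's network diagram and code did not quite match, so I modified the code to match the diagram.

- My laptop's GPU memory was too small for training to run normally, so I used PyCharm's remote connection to develop and train on a server, and worked through several remote-development problems on my own.

- While reading the network diagram, I did not pay enough attention to the forward-propagation process, which led to mistakes when computing the convolution output sizes. I eventually fixed them and also added a fully connected layer to the original structure.

- The way train_dataloader and test_dataloader are created here (an ImageFolder dataset split with random_split) differs from earlier weeks and is worth learning. I have also summarized the method below.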

I. Import the Required Packages

import os
import glob
import random
import pathlib
import datetime

import numpy as np
import pandas as pd
import PIL
import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torchvision import transforms
from torchvision.transforms import ToTensor

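II. Dataset and Operations

1. Dataset

The dataset used here is a weather image dataset with 4 different weather classes. The images are stored with one sub-folder per class, which is the layout that `torchvision.datasets.ImageFolder` expects.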
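ImageFolder assigns class indices from the sub-folder names in sorted order. Below is a minimal sketch of a hypothetical helper (`count_images_per_class` is not part of the original code) that counts the images in each class folder and builds the same name-to-index mapping. The class names `class_a` and `class_b` are placeholders and are not the real weather classes.

```python
import pathlib
import tempfile
from collections import Counter


def count_images_per_class(root):
    """Count image files in each immediate sub-folder of `root` (one folder per class)."""
    root = pathlib.Path(root)
    exts = {".jpg", ".jpeg", ".png"}
    counts = Counter()
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(1 for f in class_dir.iterdir() if f.suffix.lower() in exts)
    return counts


# Build a tiny fake dataset with hypothetical class names to demonstrate the layout.
with tempfile.TemporaryDirectory() as tmp:
    for name, n in [("class_a", 3), ("class_b", 2)]:
        d = pathlib.Path(tmp) / name
        d.mkdir()
        for i in range(n):
            (d / f"{i}.jpg").touch()
    counts = count_images_per_class(tmp)

# ImageFolder-style mapping: sorted folder names -> 0, 1, 2, ...
class_to_idx = {name: i for i, name in enumerate(sorted(counts))}
print(dict(counts))   # {'class_a': 3, 'class_b': 2}
print(class_to_idx)   # {'class_a': 0, 'class_b': 1}
```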

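2. Split the Dataset

# Note: `transform` is defined in step 3 (Dataset Transforms) and must be created before this runs.
total_dir = r'/root/autodl-tmp/weather_photos/'

# ImageFolder treats each sub-folder as one class
total_data = torchvision.datasets.ImageFolder(total_dir, transform=transform)
print(total_data)
print(len(total_data))  # 1125

# 80% train / 20% test
train_size = int(len(total_data) * 0.8)
test_size = len(total_data) - train_size
train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

# Shuffle the training batches every epoch; the test order does not matter
train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)
test_dataloader = DataLoader(test_dataset, batch_size=32)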
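You can try the split above without the real images. This sketch makes two assumptions. First, a `TensorDataset` of 1125 random tensors stands in for `weather_photos`. Second, the seed 42 passed through `generator` is an arbitrary choice added for reproducibility; the original code does not seed the split.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split

# Stand-in dataset: 1125 fake "images" (tiny 3x8x8 tensors) with labels in 0..3
images = torch.randn(1125, 3, 8, 8)
labels = torch.randint(0, 4, (1125,))
total_data = TensorDataset(images, labels)

train_size = int(len(total_data) * 0.8)      # 900
test_size = len(total_data) - train_size     # 225

# A seeded generator makes the random split reproducible across runs
generator = torch.Generator().manual_seed(42)
train_dataset, test_dataset = random_split(total_data, [train_size, test_size], generator=generator)

# Shuffle the training set each epoch; keep the test order fixed
train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)
test_dataloader = DataLoader(test_dataset, batch_size=32)

print(len(train_dataset), len(test_dataset))        # 900 225
print(len(train_dataloader), len(test_dataloader))  # 29 8
```

With a batch size of 32, the 900 training samples give 29 batches, and the last batch holds only 4 samples.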

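3. Dataset Transforms

transform = transforms.Compose([
    transforms.Resize((224, 224)),   # resize every image to 224x224
    transforms.ToTensor(),           # PIL image -> float tensor in [0, 1], shape [C, H, W]
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5]),  # [0, 1] -> [-1, 1]
])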

III. Build the CNN

1. Network Architecture Diagram

2. Network Code

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Block 1: two 5x5 convolutions (3 -> 12 -> 12 channels), then 2x2 max-pooling
        self.conv_1 = nn.Conv2d(in_channels=3, out_channels=12, kernel_size=5, stride=1)
        self.bn_1 = nn.BatchNorm2d(12)
        self.conv_2 = nn.Conv2d(in_channels=12, out_channels=12, kernel_size=5, stride=1)
        self.bn_2 = nn.BatchNorm2d(12)
        self.pool_1 = nn.MaxPool2d(2, 2)
        # Block 2: two 5x5 convolutions (12 -> 24 -> 24 channels), then 2x2 max-pooling
        self.conv_3 = nn.Conv2d(in_channels=12, out_channels=24, kernel_size=5, stride=1)
        self.bn_3 = nn.BatchNorm2d(24)
        self.conv_4 = nn.Conv2d(in_channels=24, out_channels=24, kernel_size=5, stride=1)
        self.bn_4 = nn.BatchNorm2d(24)
        self.pool_2 = nn.MaxPool2d(2, 2)
        # Classifier: 24*50*50 = 60000 features -> 10000 -> 4 weather classes
        self.linear_1 = nn.Linear(60000, 10000)
        self.linear_2 = nn.Linear(10000, 4)

    def forward(self, x):                       # x: [N, 3, 224, 224]
        x = F.relu(self.bn_1(self.conv_1(x)))   # [N, 12, 220, 220]
        x = F.relu(self.bn_2(self.conv_2(x)))   # [N, 12, 216, 216]
        x = self.pool_1(x)                      # [N, 12, 108, 108]
        x = F.relu(self.bn_3(self.conv_3(x)))   # [N, 24, 104, 104]
        x = F.relu(self.bn_4(self.conv_4(x)))   # [N, 24, 100, 100]
        x = self.pool_2(x)                      # [N, 24, 50, 50]
        x = torch.flatten(x, 1)                 # [N, 60000]
        x = F.relu(self.linear_1(x))            # [N, 10000]
        x = self.linear_2(x)                    # [N, 4] class logits
        return x
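The shape comments above follow from the standard output-size formula for convolution and pooling, out = floor((n + 2p - k) / s) + 1. This is the step where the forward-pass calculation can go wrong. The plain-Python sketch below replays the layers of `Net` and also counts the parameters of `linear_1`. The helper `conv_out` is illustrative and does not come from the original post.

```python
def conv_out(n, kernel, stride=1, padding=0):
    """Side length after a Conv2d or MaxPool2d layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


side = 224
layers = [("conv_1", 5, 1), ("conv_2", 5, 1), ("pool_1", 2, 2),
          ("conv_3", 5, 1), ("conv_4", 5, 1), ("pool_2", 2, 2)]
for name, k, s in layers:
    side = conv_out(side, k, s)
    print(f"{name}: {side}x{side}")

flat = 24 * side * side                    # 24 channels leave conv_4 / pool_2
linear_1_params = flat * 10000 + 10000     # weights + biases of nn.Linear(60000, 10000)
print(flat, linear_1_params)               # 60000 600010000
```

By this count, `linear_1` alone holds 600,010,000 parameters. That is about 2.4 GB in float32 before gradients are counted, which plausibly contributes to the GPU-memory problem mentioned at the start.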

IV. Training and Test Functions

1. Hyperparameter Settings

device = 'cuda' if torch.cuda.is_available() else 'cpu'

model = Net().to(device)

optimizer = torch.optim.SGD(model.parameters(), lr=0.0001)

loss_fn = nn.CrossEntropyLoss()
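`nn.CrossEntropyLoss` expects raw logits and integer class labels, and it applies log-softmax internally. That is why `Net.forward` ends without a softmax. Here is a small check with made-up logits:

```python
import torch
import torch.nn.functional as F
from torch import nn

loss_fn = nn.CrossEntropyLoss()

# Made-up raw outputs for a batch of 2 samples over 4 classes
logits = torch.tensor([[2.0, 0.5, -1.0, 0.0],
                       [0.1, 0.2, 3.0, -0.5]])
targets = torch.tensor([0, 2])   # integer class indices, not one-hot

loss = loss_fn(logits, targets)
# Equivalent manual computation: mean negative log-probability of the true class
manual = -F.log_softmax(logits, dim=1)[torch.arange(2), targets].mean()
print(loss.item(), manual.item())
```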

2. Training Log Function

def printlog(info):
    # Print a separator line stamped with the current time, then the message
    nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')
    print("\n" + "==========" * 8 + "%s" % nowtime)
    print(str(info) + "\n")

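3. Training Function

def train(train_dataloader, model, loss_fn, optimizer):
    size = len(train_dataloader.dataset)   # number of training samples
    num_of_batch = len(train_dataloader)   # number of batches
    train_correct, train_loss = 0.0, 0.0

    model.train()  # BatchNorm uses batch statistics and updates its running stats
    for x, y in train_dataloader:
        x, y = x.to(device), y.to(device)

        # Forward pass and loss
        pre = model(x)
        loss = loss_fn(pre, y)

        # Backward pass and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Accumulate metrics without building a graph
        with torch.no_grad():
            train_correct += (pre.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()

    train_correct /= size        # accuracy over all samples
    train_loss /= num_of_batch   # mean of the per-batch losses
    return train_correct, train_loss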
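The two metrics in `train` are normalized differently. The correct count is divided by the number of samples, while the summed loss is divided by the number of batches. The reported loss is therefore a mean of per-batch means, so a smaller final batch gets the same weight as a full one. This sketch uses invented numbers to show both points:

```python
import torch

# Hypothetical logits for 3 samples over 4 classes, and their true labels
pre = torch.tensor([[2.0, 1.0, 0.0, 0.0],
                    [0.0, 3.0, 0.0, 0.0],
                    [0.0, 0.0, 0.0, 1.0]])
y = torch.tensor([0, 2, 3])

# Same expression as in train(): predicted class = index of the largest logit
correct = (pre.argmax(1) == y).type(torch.float).sum().item()
print(correct)  # 2.0  (samples 0 and 2 are right; sample 1 is predicted as class 1)

# Loss normalization with made-up per-batch mean losses for batches of size 3 and 1
batch_sizes = [3, 1]
batch_mean_losses = [0.9, 0.1]
mean_of_batch_means = sum(batch_mean_losses) / len(batch_mean_losses)   # what train() computes
sample_weighted = sum(l * n for l, n in zip(batch_mean_losses, batch_sizes)) / sum(batch_sizes)
print(mean_of_batch_means, sample_weighted)
```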

4. Test Function

def test(test_dataloader, model, loss_fn):
    size = len(test_dataloader.dataset)
    num_of_batch = len(test_dataloader)
    test_correct, test_loss = 0.0, 0.0

    model.eval()  # BatchNorm uses its running stats; no statistics are updated
    with torch.no_grad():
        for x, y in test_dataloader:
            x, y = x.to(device), y.to(device)
            pre = model(x)
            loss = loss_fn(pre, y)
            test_loss += loss.item()
            test_correct += (pre.argmax(1) == y).type(torch.float).sum().item()

    test_correct /= size
    test_loss /= num_of_batch
    return test_correct, test_loss
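`Net` contains `BatchNorm2d` layers, so switching between `model.train()` and `model.eval()` changes the output. In training mode, each batch is normalized with its own statistics and the running statistics are updated. In evaluation mode, the stored running statistics are used instead. Here is a standalone illustration on random data rather than the weather model:

```python
import torch
from torch import nn

torch.manual_seed(0)
bn = nn.BatchNorm2d(3)
x = torch.randn(8, 3, 4, 4) * 5 + 10   # deliberately far from zero mean / unit variance

bn.train()
out_train = bn(x)   # normalized with this batch's mean/var; running stats move 10% toward them

bn.eval()
with torch.no_grad():
    out_eval = bn(x)  # normalized with running_mean / running_var, which have barely moved

print(out_train.mean(dim=(0, 2, 3)))  # close to 0 for every channel
print(out_eval.mean(dim=(0, 2, 3)))   # far from 0, because the running stats lag behind
```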

V. Training Loop and Result Visualization Functions

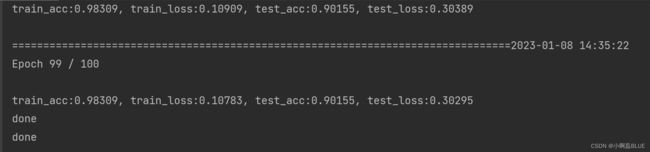

1. Training Loop

epochs = 100
train_acc, train_loss = [], []
test_acc, test_loss = [], []

for epoch in range(epochs):
    printlog("Epoch {0} / {1}".format(epoch + 1, epochs))

    # One pass over the training set, then one evaluation pass over the test set
    epoch_train_acc, epoch_train_loss = train(train_dataloader, model, loss_fn, optimizer)
    epoch_test_acc, epoch_test_loss = test(test_dataloader, model, loss_fn)

    # Record the per-epoch metrics for plotting
    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    template = "train_acc:{:.5f}, train_loss:{:.5f}, test_acc:{:.5f}, test_loss:{:.5f}"
    print(template.format(epoch_train_acc, epoch_train_loss, epoch_test_acc, epoch_test_loss))

print('done')

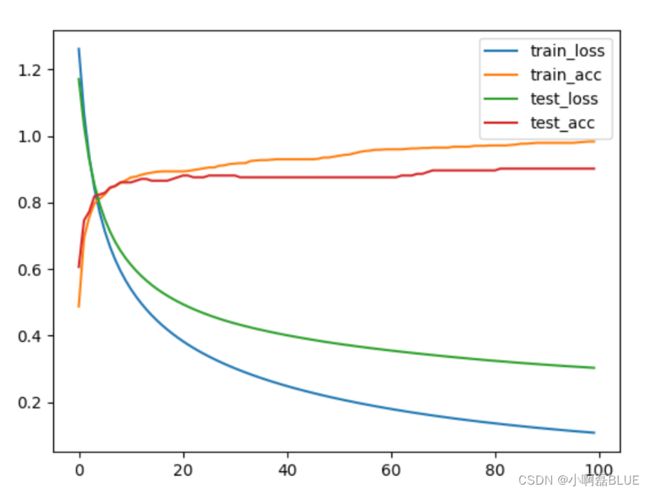

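2. Result Visualization

epochs_range = range(epochs)
plt.figure(figsize=(12, 4))

# Accuracy curves
plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_acc, label='train_acc')
plt.plot(epochs_range, test_acc, label='test_acc')
plt.legend()
plt.title('Accuracy')

# Loss curves (kept on a separate axis because loss and accuracy have different scales)
plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='train_loss')
plt.plot(epochs_range, test_loss, label='test_loss')
plt.legend()
plt.title('Loss')

plt.show()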

VI. Tuning Parameters to Improve Test Accuracy
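The summary reports adjusting the learning rate every 5 epochs but does not say how it was done. One common way is PyTorch's `StepLR` scheduler. In this sketch, `step_size=5` matches the text, while `gamma=0.5` and the tiny `nn.Linear` stand-in model are assumptions for illustration only.

```python
import torch
from torch import nn

model = nn.Linear(8, 4)   # tiny stand-in for Net (hypothetical)
optimizer = torch.optim.SGD(model.parameters(), lr=1e-4)
# step_size=5 matches "every 5 epochs"; gamma=0.5 is a hypothetical decay factor
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.5)

lrs = []
for epoch in range(15):
    lrs.append(optimizer.param_groups[0]["lr"])
    # ... train(...) and test(...) would run here ...
    optimizer.step()   # normally called inside train(); kept here so the call order is correct
    scheduler.step()   # once per epoch, after the optimizer has stepped

print(lrs[0], lrs[5], lrs[10])
```

The learning rate stays at 1e-4 for epochs 0 to 4, is halved for epochs 5 to 9, and is halved again from epoch 10 onward.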

Summary

- Adjusting the learning rate dynamically, changing lr every 5 epochs, raised test_acc from 0.9 to 0.93.