[Image Restoration] MATLAB Simulation of a Deep-Learning-Based Image Restoration Algorithm

1. Software version

MATLAB R2021a

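2. Theory behind the algorithm

Many fields place high demands on image quality, such as medical imaging and satellite remote sensing. With the rapid development of the information age, low-resolution images can no longer meet the needs of specific scenarios. Restoration and reconstruction of low-resolution images has therefore become an active research topic with broad application prospects and practical value. Existing image restoration and reconstruction algorithms can alleviate low resolution to some extent, but for images rich in detail their reconstruction ability is weak and the visual quality is poor. To address this problem, this work builds on the basic principles of deep neural networks and proposes an image restoration and reconstruction algorithm based on a convolutional neural network (CNN). The main contents are as follows:

First, based on an extensive review of the literature, the state of research on image restoration and reconstruction algorithms is summarized, together with the basic principles, strengths, and weaknesses of convolutional neural networks in deep learning.

Second, the basic principles and structure of convolutional neural networks are described in detail, and the forward-propagation and backpropagation formulas are derived. The advantages of convolutional neural networks for image super-resolution reconstruction are argued from a theoretical standpoint.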

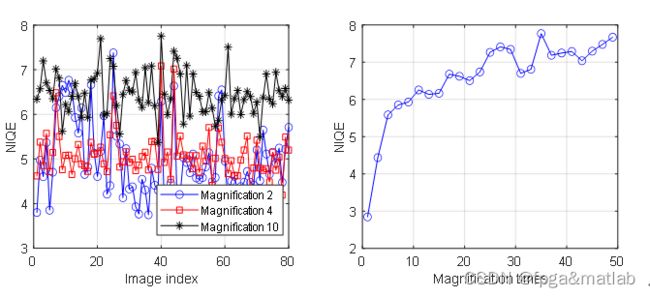

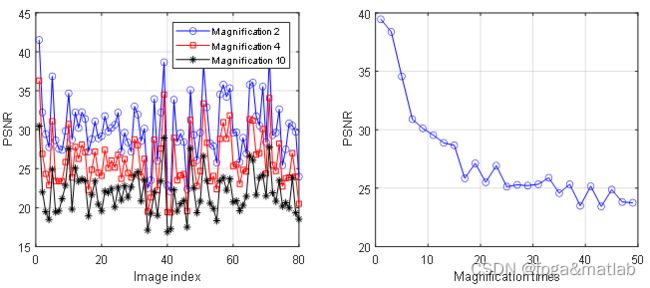

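Third, a convolutional neural network model is designed with the MATLAB Deep Learning Toolbox. During the design, the CNN parameters are tuned in MATLAB, including the number of convolutional layers, the learning rate, the convolution kernel size, and the batch size. According to the MATLAB simulation results, the best CNN parameters are a learning rate of 0.05, 18 convolutional layers, a 3×3 convolution kernel, and a batch size of 32. Finally, the performance of the convolutional neural network is simulated on the IAPR-TC12 benchmark image database and compared with the bicubic and SRCNN methods. The simulation results show that the proposed method performs better than the traditional bicubic method and SRCNN and recovers finer detail.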

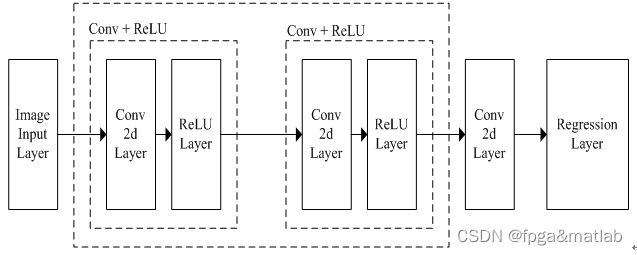

Building on the theory of convolutional neural networks introduced above, we establish the following deep learning network model for image reconstruction.

Fig. 1. Structure of the image reconstruction CNN model

Figure 1 shows that the CNN consists of an image input layer, 2-D convolution layers, ReLU layers, and a regression layer.

Following this structure, we use the Deep Learning Toolbox to build the CNN image reconstruction model. The main functions used are “imageInputLayer”, “convolution2dLayer”, “reluLayer”, “regressionLayer”, and “trainNetwork”.

The “imageInputLayer” function defines the image input. An image input layer feeds 2-D images into the network and can apply data normalization. The function sets the size and type of the input image. In our model, the input is a 64×64 single-channel image with normalization disabled:

    Layers = imageInputLayer([64 64 1], ...
        'Name', 'InputLayer', ...
        'Normalization', 'none');

The “convolution2dLayer” function performs the image convolution and extracts image features. A 2-D convolutional layer applies sliding convolutional filters to the input. The layer convolves the input by moving the filters along the input vertically and horizontally, computing the dot product of the weights and the input, and then adding a bias term. In our model, each layer uses 64 filters of size 3×3 with a padding of 1:

    convLayer = convolution2dLayer(3, 64, ...
        'Padding', 1, ...
        'WeightsInitializer', 'he', ...
        'BiasInitializer', 'zeros', ...
        'Name', 'Conv1');
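To make the sliding-filter description concrete, the following minimal sketch computes one 3×3 filter response by hand in base MATLAB, assuming zero padding of 1 and a stride of 1 as in `Conv1`. The input `X`, the weights `W`, and the bias `b` are made-up illustrative values, not parameters of the trained network.

```matlab
% Minimal sketch of one 3x3 filter with padding 1 and stride 1 (base MATLAB).
% X, W and b are made-up illustrative values, not trained parameters.
X = magic(4);                 % 4x4 single-channel input
W = [0 0 0; 0 1 0; 0 0 0];    % 3x3 filter (identity kernel, easy to check)
b = 0.5;                      % bias term

Xp = zeros(size(X) + 2);      % zero-pad one pixel on every side
Xp(2:end-1, 2:end-1) = X;

Y = zeros(size(X));           % padding 1 with a 3x3 kernel keeps the input size
for r = 1:size(X, 1)
    for c = 1:size(X, 2)
        window = Xp(r:r+2, c:c+2);              % region under the filter
        Y(r, c) = sum(window .* W, 'all') + b;  % dot product plus bias
    end
end
```

With the identity kernel, `Y` equals `X + b` and has the same size as `X`. The same size-preserving behaviour is what lets a 64×64 patch pass through a stack of such layers without shrinking.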

The “reluLayer” function implements the activation layer of the convolutional neural network. A ReLU layer applies a threshold operation to each element of the input, setting any value less than zero to zero.

    relLayer = reluLayer('Name', 'ReLU1');
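The threshold operation can be reproduced in one line of base MATLAB. This is only an illustration of the element-wise rule on a made-up array `x`; it does not use the layer object itself.

```matlab
% Element-wise ReLU on an illustrative array (values are made up).
x = [-2 -0.5 0 1.5; 3 -1 2 -4];
y = max(x, 0);    % negative entries become 0, all others pass through unchanged
```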

The “regressionLayer” function creates the output layer, which computes the half-mean-squared-error loss for regression problems. In our model, we set up the function as follows:

    regressionLayer('Name', 'FinalRegressionLayer')
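As a rough illustration of the half-mean-squared-error loss, the sketch below assumes the loss for one image is half the sum of squared pixel errors, averaged over the images in a mini-batch. The arrays `T` (targets) and `Y` (predictions) are made-up H×W×C×N examples, and the exact normalization should be checked against the `regressionLayer` documentation.

```matlab
% Sketch of a half-mean-squared-error loss for image regression.
% T (targets) and Y (predictions) are illustrative H x W x C x N arrays.
T = zeros(2, 2, 1, 2);
Y = zeros(2, 2, 1, 2);
Y(:, :, 1, 1) = [1 0; 0 1];   % first image: two pixels off by 1
Y(:, :, 1, 2) = [2 0; 0 0];   % second image: one pixel off by 2

sqErr    = (T - Y).^2;
perImage = 0.5 * squeeze(sum(sqErr, [1 2 3]));  % half sum of squares per image
loss     = mean(perImage);                      % average over the mini-batch
```

Here the per-image losses are 1 and 2, so the mini-batch loss is 1.5.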

The “trainNetwork” function trains a convolutional neural network on image data, on either a CPU or a GPU. In our model, the training options and the training call are set as follows:

    options = trainingOptions('sgdm', ...
        'Momentum', 0.9, ...
        'InitialLearnRate', initLearningRate, ...
        'LearnRateSchedule', 'piecewise', ...
        'LearnRateDropPeriod', 10, ...
        'LearnRateDropFactor', learningRateFactor, ...
        'L2Regularization', l2reg, ...
        'MaxEpochs', maxEpochs, ...
        'MiniBatchSize', miniBatchSize, ...
        'GradientThresholdMethod', 'l2norm', ...
        'GradientThreshold', 0.01, ...
        'Plots', 'training-progress', ...
        'Verbose', false);
    net = trainNetwork(dsTrain, layers, options);
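Two of these options are easier to follow with numbers. The sketch below is a base-MATLAB approximation, not the toolbox's internal code. It assumes the piecewise schedule multiplies the learning rate by the drop factor once every `LearnRateDropPeriod` epochs, and that `'l2norm'` clipping rescales a gradient whose L2 norm exceeds the threshold. The values 0.05 and 0.1 are the ones assigned in the core code, `dropPeriod` is an illustrative variable name, and the gradient `g` is made up.

```matlab
% Sketch of the piecewise learning-rate schedule and L2-norm gradient clipping.
% Schedule values mirror the training options; the gradient g is made up.
initLearningRate   = 0.05;
learningRateFactor = 0.1;
dropPeriod         = 10;

epochs = 1:30;
% Assumed rule: multiply by the factor once every dropPeriod epochs.
lr = initLearningRate * learningRateFactor .^ floor((epochs - 1) / dropPeriod);

threshold = 0.01;
g = [0.03 -0.04];                  % L2 norm is 0.05, above the threshold
gNorm = norm(g);
if gNorm > threshold
    g = g * (threshold / gNorm);   % rescale so the norm equals the threshold
end
```

Under these assumptions the rate is 0.05 for epochs 1 to 10, 0.005 for epochs 11 to 20, and 0.0005 for epochs 21 to 30. If `maxEpochs` is 1, the drop never takes effect.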

3. Core code

    clc;
    close all;
    clear;
    warning off;
    addpath('func');   % folder with the helper functions used below

    %% Prepare the training data from the image folder
    Testdir = 'images\';
    [train_up2,train_res2,augmenter] = func_train_data(Testdir);

    %% Build the CNN: 64x64 input, 18 convolutional layers, 3x3 kernels
    SCS = 64;
    [layers,lgraph,dsTrain] = func_myCNN(SCS,train_up2,train_res2,augmenter,18,3);
    figure
    plot(lgraph)

    %% Training parameters
    maxEpochs          = 1;
    epochIntervals     = 1;
    initLearningRate   = 0.05;
    learningRateFactor = 0.1;
    l2reg              = 0.0001;
    miniBatchSize      = 32;

    options = trainingOptions('sgdm', ...
        'Momentum',0.9, ...
        'InitialLearnRate',initLearningRate, ...
        'LearnRateSchedule','piecewise', ...
        'LearnRateDropPeriod',10, ...
        'LearnRateDropFactor',learningRateFactor, ...
        'L2Regularization',l2reg, ...
        'MaxEpochs',maxEpochs, ...
        'MiniBatchSize',miniBatchSize, ...
        'GradientThresholdMethod','l2norm', ...
        'GradientThreshold',0.01, ...
        'Plots','training-progress', ...
        'Verbose',false);

    %% Train the network and save the workspace (including net)
    net = trainNetwork(dsTrain,layers,options);
    save trained_cnn.mat

4. Procedure and simulation conclusions

| Unit | Layer | Parameters |
| --- | --- | --- |
| 1 | InputLayer | Input image size is 64x64x1 |
| 2 | Conv1 | Convolution kernel size is 3x3, number of kernels is 64, stride is [1 1] |
| 3 | ReLU1 | The activation function is ReLU |
| 4 | Conv2 | Convolution kernel size is 3x3, number of kernels is 64, stride is [1 1] |
| 5 | ReLU2 | The activation function is ReLU |
| 6 | Conv3 | Convolution kernel size is 3x3, number of kernels is 64, stride is [1 1] |
| 7 | ReLU3 | The activation function is ReLU |
| ... | ... | ... |
| 32 | Conv16 | Convolution kernel size is 3x3, number of kernels is 64, stride is [1 1] |
| 33 | ReLU16 | The activation function is ReLU |
| 34 | Conv17 | Convolution kernel size is 3x3, number of kernels is 64, stride is [1 1] |
| 35 | ReLU17 | The activation function is ReLU |
| 36 | Conv18 | Convolution kernel size is 3x3x64, number of kernels is 64, stride is [1 1] |
| 37 | FinalRegressionLayer | Regression output; the loss is the half-mean-squared-error |
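The layer listing above can be sketched as a layer array built in a loop. This is a hypothetical reconstruction, not the author's `func_myCNN`, whose source is not shown. The variable names `numConv` and `patchSize` are assumptions. The last convolution here uses a single filter so that the output matches a single-channel image, which is also an assumption and differs from the 64 kernels listed for Conv18. The sketch requires the Deep Learning Toolbox.

```matlab
% Hypothetical sketch of the layer stack (Deep Learning Toolbox required).
% This is NOT the author's func_myCNN; names and the loop are assumptions.
numConv   = 18;    % number of convolutional layers
patchSize = 64;    % input patch size (SCS in the core code)

layers = imageInputLayer([patchSize patchSize 1], ...
    'Name', 'InputLayer', 'Normalization', 'none');

% Conv1..Conv17, each followed by a ReLU layer
for k = 1:numConv-1
    layers = [layers
        convolution2dLayer(3, 64, 'Padding', 1, ...
            'WeightsInitializer', 'he', 'BiasInitializer', 'zeros', ...
            'Name', sprintf('Conv%d', k))
        reluLayer('Name', sprintf('ReLU%d', k))]; %#ok<AGROW>
end

% Assumption: one output filter so the result is a 64x64x1 image.
layers = [layers
    convolution2dLayer(3, 1, 'Padding', 1, ...
        'WeightsInitializer', 'he', 'BiasInitializer', 'zeros', ...
        'Name', sprintf('Conv%d', numConv))
    regressionLayer('Name', 'FinalRegressionLayer')];
```

The resulting array has 37 layers: one input layer, 17 convolution and ReLU pairs, the final convolution, and the regression output layer.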


