二进制安装部署 kubernetes 集群环境

二进制安装部署 kubernetes 环境

- 前期准备

-

- 虚拟机准备:

- 系统环境准备

- 整体架构设计

- 自建DNS服务 --- bind工具

- 证书签发环境 —— 自签证书之 CFSSL

-

-

- 证书签发工具安装:

- 根证书制作

-

- 部署 docker 容器引擎环境

- 搭建一个私有 docker 镜像仓库 —— harbor(离线部署版)

- 部署 etcd 存储服务集群

-

-

- 下载 etcd

- 为 etcd 服务签发证书:

- 启动 etcd 服务

-

- k8s 主控节点

-

- 部署 kube-apiserver 集群

-

-

- 下载资源:

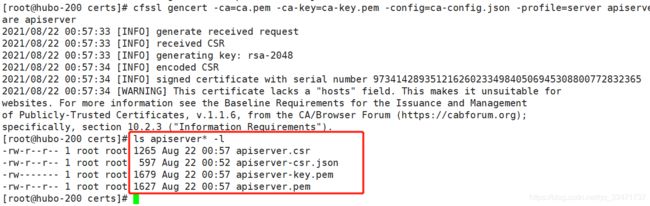

- 签发证书

-

-

- 客户端与服务端通信时需要的证书:client 证书

- 服务端与客户端通信时需要的证书:server 证书

- 证书下发到 hubo-21 和 hubo-22

-

- 配置 apiserver 日志审计

- 配置启动脚本

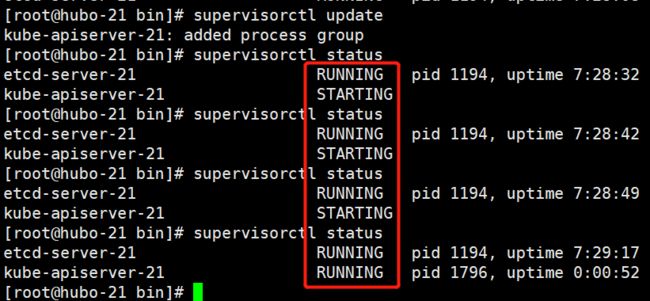

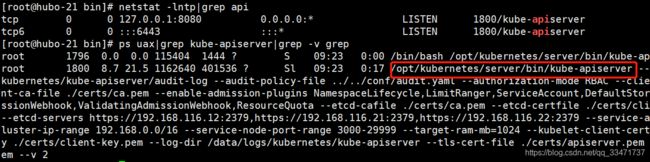

- supervisor 管理 apiserver

- apiserver L4代理

- keepalive 安装部署---实现 vip :192.168.116.10

-

- controller-manager 安装

-

-

- 创建启动脚本

- 配置supervisor启动配置

- 启动

-

- kube-scheduler 调度服务安装

-

-

- 创建启动脚本

- 配置supervisor启动配置

- 启动

-

- 检查主控节点状态

- k8s 运算节点

-

- kubelet 部署

-

-

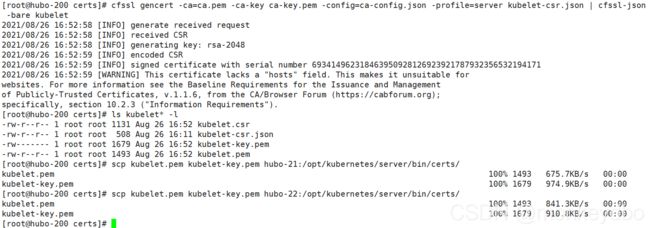

- 签发证书

- 创建 kubelet 配置

- 角色绑定(ClusterRoleBinding —— 是一个集群资源,被存储到了 etcd 中)

- 装备 pause 基础镜像

- 创建启动参数配置文件和启动脚本

- 交给 supervisor 管理

-

- kube-proxy部署

-

-

- 签发证书

- 创建 kube-proxy 配置

- 加载ipvs模块

- 创建启动脚本

- 交给 supervisor 管理

-

- 验证集群

-

-

- 利用 ipvsadm 查看集群网络结构

- 创建 pod 资源

-

-

- Pod 资源配置文件

- 通过刚才的配置文件创建资源

-

-

前期准备

虚拟机准备:

- hubo-11

Vmware:192.168.116.11 - hubo-12

Vmware:192.168.116.12 - hubo-21

Vmware:192.168.116.21 - hubo-22

Vmware:192.168.116.22 - hubo-200

Vmware:192.168.116.200

系统环境准备

-

主机名修改

hostnamectl set-hostname hubo-11.host.com -

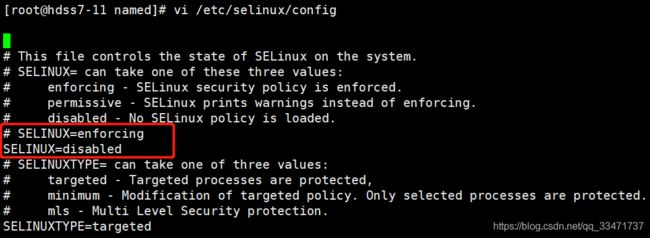

SELinux 状态配置:

说明:SELinux 是 2.6 版本的 Linux 内核中提供的强制访问控制(MAC)系统。【推荐】

# 查看SELinux状态,enforcing 表示开启状态 getenforce# 关闭 SELinux,将 SELINUX 设置为 disabled vi /etc/selinux/config -

防火墙配置

# 查看防火墙状态 firewall-cmd --state # 暂时先关闭防火墙 systemctl stop firewalld # 禁止开机自启动 systemctl disable firewalld.service -

配置安装epel源和base源

yum install epel-release -y -

安装一些基本工具:

yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y工具功能简单说明:

工具 用途 wget 通过 url 获取网络资源 net-tools telnet 远程连接工具 tree nmap sysstat lrzsz dos2unix bind-utils

整体架构设计

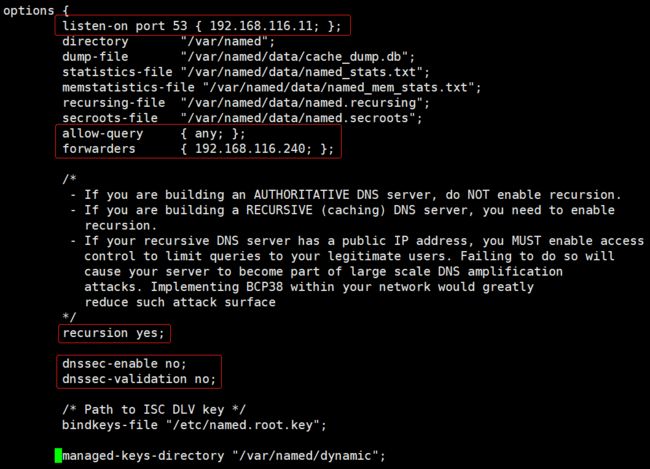

自建DNS服务 — bind工具

注:在虚拟主机 192.168.116.11 上安装 DNS 服务

nslookup www.baidu.com 可以产看域名映射的ip地址,nslookup 是 bind-utils 工具中的一个指令

- 安装 bind

yum install bind -y - 修改配置

-

修改主配置文件 /etc/named.conf

vi /etc/named.conf主要修改内容:

listen-on 监听配置,监听本机网络IP地址 端口53

allow-query 允许查询配置,运行网络内所有主机查询

forwarders 上级DNS服务,使用虚拟机的网关地址

recursion 使用递归解析方式解析域名

dnssec-enable 和 dnssec-validation 实验环境暂时关闭节约性能

修改完成后可以使用:named-checkconf 命令查看配置文件格式是否正确

-

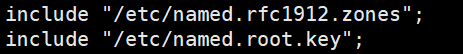

配置区域配置文件 /etc/named.rfc1912.zones

vi /etc/named.rfc1912.zones在配置文件结尾追加两个域,一个主机域,一个业务域名,内容如下:

注意空行空格tab等分隔符,要求严格,配置完成后可以用 named-checkconf filename 检查

zone "host.com" IN { type master; file "host.com.zone"; allow-update { 192.168.116.11; }; }; zone "k8s.com" IN { type master; file "k8s.com.zone"; allow-update { 192.168.116.11; }; }; -

编辑区域数据文件

添加主机域配置文件:

vi /var/named/host.com.zone/var/named/host.com.zone 文件内容:

$ORIGIN host.com. $TTL 600 ;10 minutes @ IN SOA dns.host.com. dnsadmin.host.com. ( 2021081501 ; serial 10800 ; refresh (3 hours) 900 ; retry (15 minutes) 604800 ; expire (1 week) 86400 ; minimum (1 day) ) NS dns.host.com. $TTL 60 ; 1 minute dns A 192.168.116.11 HUBO-11 A 192.168.116.11 HUBO-12 A 192.168.116.12 HUBO-21 A 192.168.116.21 HUBO-22 A 192.168.116.22 HUBO-200 A 192.168.116.200添加业务域配置文件

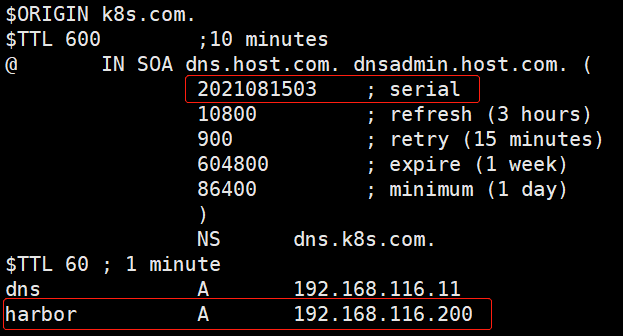

vi /var/named/k8s.com.zone/var/named/k8s.com.zone 文件内容:

$ORIGIN k8s.com. $TTL 600 ;10 minutes @ IN SOA dns.host.com. dnsadmin.host.com. ( 2021081501 ; serial 10800 ; refresh (3 hours) 900 ; retry (15 minutes) 604800 ; expire (1 week) 86400 ; minimum (1 day) ) NS dns.k8s.com. $TTL 60 ; 1 minute dns A 192.168.116.11 -

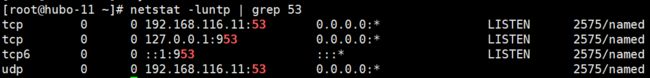

启动 DNS 服务:

systemctl start named ## 查看启动后的监听 netstat -luntp | grep 53

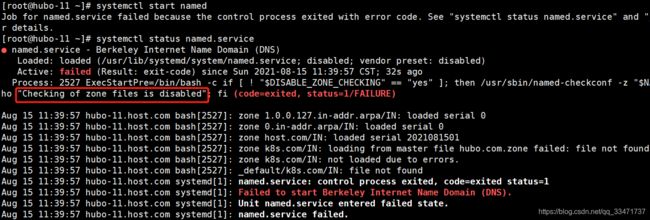

测试写是否可以正确解析:dig -t A hubo-21.host.com @192.168.116.11 +short注:在启动是出错:

配置文件有问题,通过命令检查:named-checkconf -z /etc/named/named.conf

发现之前在区域配置文件中配置的 k8s.com 域对应的数据文件写错了:

解决:该配置或者改文件名 -

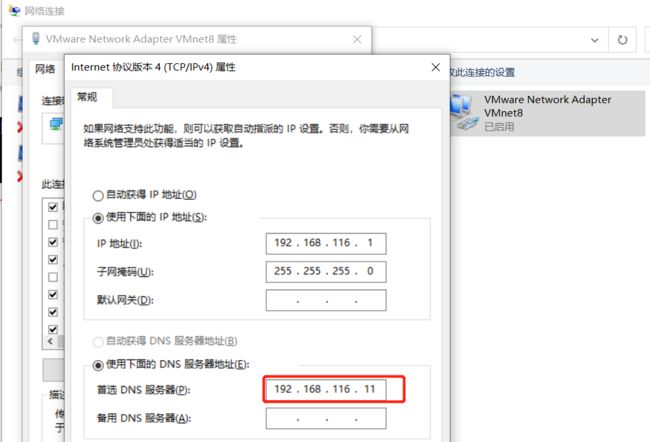

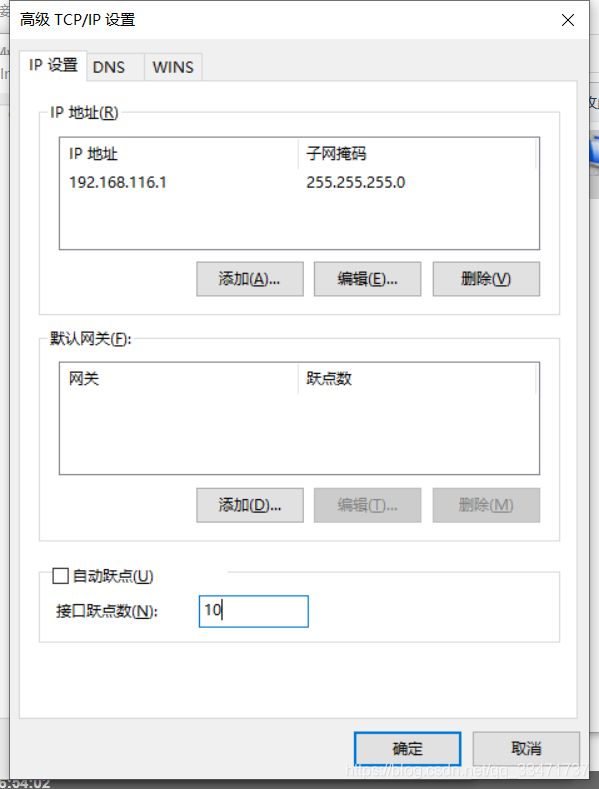

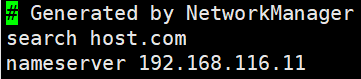

修改网卡配置并重启

每一台机器都要修改DNS服务地址,改成192.168.116.11

vi /etc/sysconfig/network-scripts/ifcfg-ens33

并添加主机域访问时自动搜索,这样访问主机域时不用写后面的主机域(host.com)

vi /etc/resolv.conf添加一行search host.com

重启网卡:

systemctl restart network

通过主机域ping其他主机测试:

ping hubo-200

-

证书签发环境 —— 自签证书之 CFSSL

注:也可以用openssl

证书签发服务安装在:192.168.116.200 虚拟主机上

证书签发工具安装:

版本:1.2

- cfssl

- cfssl-json

- cfssl-certinfo

如果没有互联网环境可以下载二进制文件然后传到虚拟机中

有互联网环境就直接用wget命令从网上获取:

# 将软件包下载到 /usr/bin 目录下

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

# 添加可执行权限

chmod +x /usr/bin/cfssl*

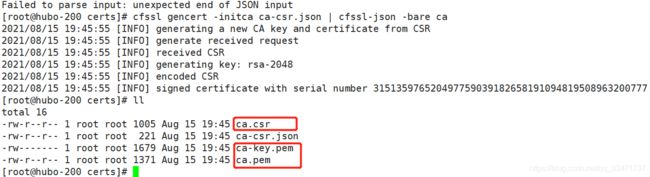

根证书制作

-

创建根证书 json 配置文件: ca-csr.json

mkdir -p /opt/certs vi /opt/certs/ca-csr.jsonca-csr.json文件内容:

{ "CN": "HuBoEdu", "hosts": [ ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "ST": "liaoning", "L": "shenyang", "O": "edu", "OU": "development" } ], "ca": { "expiry": "17520h" } }文件内容说明:

- CN : Common Name,浏览器使用该字段验证网站是否合法,一般用域名

- key:密钥相关配置,algo 表示加密算法

- names:基本名称信息,C-国家,ST-州省,L-地区城市,O-组织机构,OU-组织单位名称或部门

- ca:证书配置,expiry表示过期时间

-

制作根证书

cfssl gencert -initca ca-csr.json | cfssl-json -bare ca

部署 docker 容器引擎环境

k8s依赖于容器引擎

计划在 HUBO-21 ,HUBO-22 ,HUBO-200 三台虚拟主机部署 docker-ce 容器引擎

- 在线安装可参考:

- 离线二进制包安装:

-

下载对应的二进制文件

docker历史版本下载地址

也可以在其他又网络的主机上下载:curl -O https://download.docker.com/linux/static/stable/x86_64/docker-18.06.3-ce.tgz -

编辑安装脚本:install-docker.sh

#!/bin/sh usage(){ echo "Usage: $0 FILE_NAME_DOCKER_CE_TAR_GZ" echo " $0 docker-17.09.0-ce.tgz" echo "Get docker-ce binary from: https://download.docker.com/linux/static/stable/x86_64/" echo "eg: wget https://download.docker.com/linux/static/stable/x86_64/docker-17.09.0-ce.tgz" echo "" } SYSTEMDDIR=/usr/lib/systemd/system SERVICEFILE=docker.service DOCKERDIR=/usr/bin DOCKERBIN=docker SERVICENAME=docker if [ $# -ne 1 ]; then usage exit 1 else FILETARGZ="$1" fi if [ ! -f ${FILETARGZ} ]; then echo "Docker binary tgz files does not exist, please check it" echo "Get docker-ce binary from: https://download.docker.com/linux/static/stable/x86_64/" echo "eg: wget https://download.docker.com/linux/static/stable/x86_64/docker-17.09.0-ce.tgz" exit 1 fi echo "##unzip : tar xvpf ${FILETARGZ}" tar xvpf ${FILETARGZ} echo echo "##binary : ${DOCKERBIN} copy to ${DOCKERDIR}" cp -p ${DOCKERBIN}/* ${DOCKERDIR} >/dev/null 2>&1 which ${DOCKERBIN} echo "##systemd service: ${SERVICEFILE}" echo "##docker.service: create docker systemd file" cat >${SYSTEMDDIR}/${SERVICEFILE} <<EOF [Unit] Description=Docker Application Container Engine Documentation=http://docs.docker.com After=network.target docker.socket [Service] Type=notify EnvironmentFile=-/run/flannel/docker WorkingDirectory=/usr/local/bin ExecStart=/usr/bin/dockerd \ -H tcp://0.0.0.0:4243 \ -H unix:///var/run/docker.sock \ --selinux-enabled=false \ --log-opt max-size=1g ExecReload=/bin/kill -s HUP $MAINPID # Having non-zero Limit*s causes performance problems due to accounting overhead # in the kernel. We recommend using cgroups to do container-local accounting. LimitNOFILE=infinity LimitNPROC=infinity LimitCORE=infinity # Uncomment TasksMax if your systemd version supports it. # Only systemd 226 and above support this version. #TasksMax=infinity TimeoutStartSec=0 # set delegate yes so that systemd does not reset the cgroups of docker containers Delegate=yes # kill only the docker process, not all processes in the cgroup KillMode=process Restart=on-failure [Install] WantedBy=multi-user.target EOF echo "" systemctl daemon-reload echo "##Service status: ${SERVICENAME}" systemctl status ${SERVICENAME} echo "##Service restart: ${SERVICENAME}" systemctl restart ${SERVICENAME} echo "##Service status: ${SERVICENAME}" systemctl status ${SERVICENAME} echo "##Service enabled: ${SERVICENAME}" systemctl enable ${SERVICENAME} echo "## docker version" docker version -

授予可执行权限并执行

chmod +x install-docker.sh

./install-docker.sh docker-18.06.3-ce.tgz 2>&1 |tee install.log -

检验

docker version,docker info,docker images -

配置各 docker 的网络 bip,便于区分

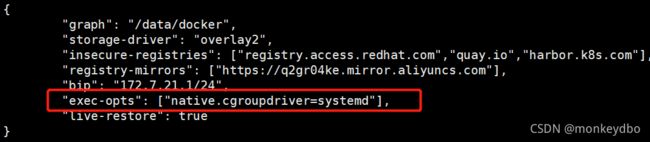

修改配置文件:daemon.json配置文件位置:/etc/docker/daemon.json,如果没有就新建一个

daemon.json内容:

{ "graph": "/data/docker", "storage-driver": "overlay2", "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.hubo.com"], "registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"], "bip": "172.7.21.1/24", "live-restore": true }注:bip 根据各个主机 ip 做响应的配置

注:"exec-opts": ["native.cgroupdriver=systemd"],` 这个选项配置有问题,加了这个之后容器运行不起来,导致后面启动 Pod 出错。先使用默认的 cgroupfs ,在后面 kubelet 配置 cgroupRoot 参数时也要选择 cgroupfs。 -

重启docker :

systemctl restart docker -

查看 docker0 网卡信息:

ip addr

-

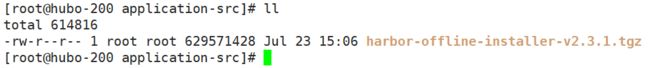

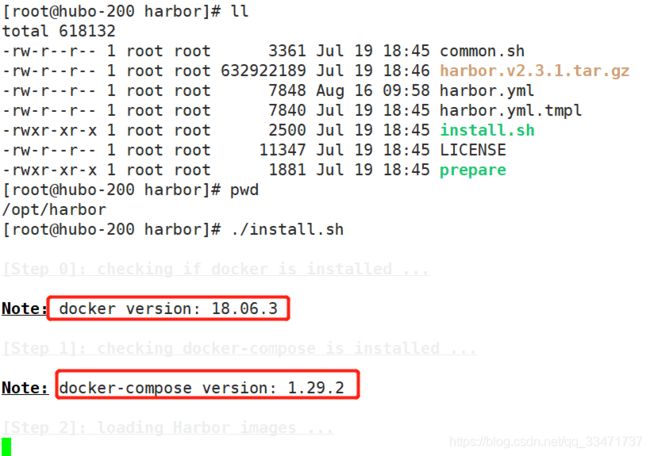

搭建一个私有 docker 镜像仓库 —— harbor(离线部署版)

在 hubo-200 虚拟主机中搭建

官方地址:https://goharbor.io/

下载地址:https://github.com/goharbor/harbor/releases

下载的时候下载 harbor-offline-installer-vx.x.x.tgz 版本(离线安装版本)

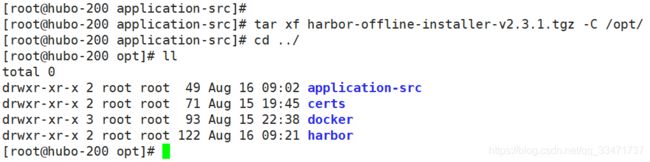

harbor-offline-installer-v2.3.1.tgz

下载后上传到:192.168.116.200 虚拟主机的 /opt/application-src 中

有网可以直接在 192.168.116.200 上用 wget 命令获取:

wget https://github.com/goharbor/harbor/releases/download/v2.3.1/harbor-offline-installer-v2.3.1.tgz

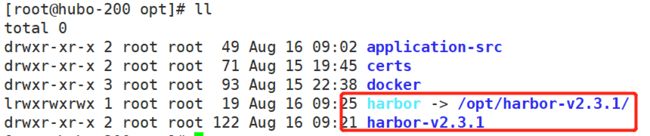

解压到 /opt 目录下:

tar xf harbor-offline-installer-v2.3.1.tgz -C /opt/

将 /etc/harbor 目录加上版本号并建立软连接(便于版本管理):

# /opt 目录下

mv harbor/ harbor-v2.3.1

ln -s /opt/harbor-v2.3.1/ /opt/harbor

# 在 /opt/harbor 目录下

# 拷贝模板配置文件

cp harbor.yml.tmpl harbor.yml

# 编辑配置文件

vi harbor.yml

修改内容:

-

将 hostname 配置到之前设计的业务域上

hostname: harber.k8s.com原本想设置的是:harbor.k8s.com ,但是写出了,导致了后面出现的问题,但是可以通过其他方式解决,详细见后面的内容。

-

修改 htpp 端口,默认是 80,改成 180(防止将来使用 80 时被占用)

http: port: 180 -

修改默认的管理原密码

harbor_admin_password: Harbor12345 -

修改日志相关配置

# Log configurations log: # options are debug, info, warning, error, fatal level: info # configs for logs in local storage local: # Log files are rotated log_rotate_count times before being removed. If count is 0, old versions are removed rather than rotated. rotate_count: 50 # Log files are rotated only if they grow bigger than log_rotate_size bytes. If size is followed by k, the size is assumed to be in kilobytes. # If the M is used, the size is in megabytes, and if G is used, the size is in gigabytes. So size 100, size 100k, size 100M and size 100G # are all valid. rotate_size: 200M # The directory on your host that store log 日志保存地址 location: /data/harbor/logs -

修改默认数据存储目录:

# The default data volume data_volume: /data/harbor -

mkdir -p /data/harbor/logs创建相应的目录 -

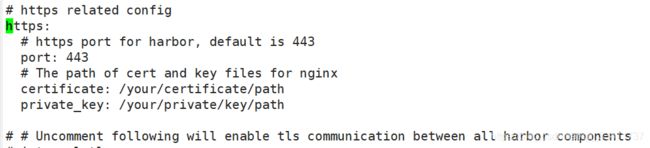

遇到的问题:安装时最后出现 https 相关的错误 没有设置ssl证书

prepare base dir is set to /opt/harbor-v2.3.1

Error happened in config validation…

ERROR:root:Error: The protocol is https but attribute ssl_cert is not set

所以需要先把 https 相关的配置注释掉。

harbor 是一个基于 docker 引擎的单机编排的软件,需要依赖 docker-compose 。

在 hubo-200 上安装 docker-compose :

-

在线安装:

yum install docker-compose -y -

离线安装

下载离线包:https://github.com/docker/compose/releases

docker-compose-Linux-x86_64

放到/opt/application-src/目录下

将刚下载的docker-compose-Linux-x86_64复制到/usr/local/bin并重命名为docker-compose:# 在 /opt/application-src/ 目录下执行 # 复制重命名 cp docker-compose-Linux-x86_64 /usr/local/bin/docker-compose # 可执行权限 chmod +x /usr/local/bin/docker-compose # 检查是否安装成功 docker-compose -version

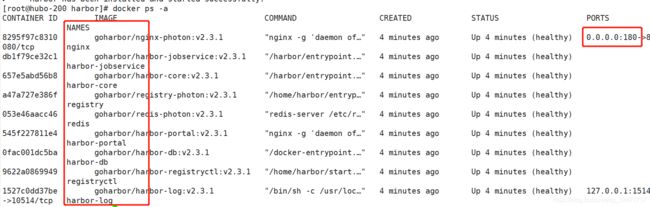

安装 harbor:(到前面的 /opt/harbor/ 目录下执行安装文件)

./install.sh

启动过程中遇到的问题:

- https 问题在本节修改配置中第7条说明

- ERROR: Failed to Setup IP tables: Unable to enable SKIP DNAT rule

原因:之前关闭防火墙没有重启 docker

解决:service docker restart

设置 harbor 开机启动:

[root@hubo-200 harbor]# vi /etc/rc.d/rc.local # 增加以下内容

# start harbor

cd /opt/harbor

/usr/docker-compose stop

/usr/docker-compose start

宿主机web访问:http://hubo-200.host.com:180/

配置业务域访问:

这里访问使用的是自建DNS中设置的主机域,为了使用业务域访问,需要配置 hubo-11 上的DNS:

# 在 hubo-11(192.168.116.11)主机上,修改业务域配置

vi /var/named/k8s.com.zone

在业务域中增加一条:

harbor A 192.168.116.200

另外:序列号需要手动的加一

修改完成后重启服务

systemctl restart named.service

# 测试一下

host harbor.k8s.com

浏览器中可以用:http://harbor.k8s.com:180 访问 harbor 控制台

反向代理 harbor

为了用 80 端口访问 harbor 需要在 hubo-200 上装 Nginx 做一个反向代理:

- 在线安装 Nginx :

yum install nginx -y - 离线安装Nginx:

软件包下载地址:http://nginx.org/packages/centos/7/x86_64/RPMS/

nginx-1.16.1-1.el7.ngx.x86_64.rpm

下载上传到/opt/application-src/目录下

安装命令:yum install -y nginx-1.16.1-1.el7.ngx.x86_64.rpm

添加 Nginx 配置文件:vi /etc/nginx/conf.d/harbor.conf 内容如下:

server {

listen 80;

server_name harbor.k8s.com;

client_max_body_size 1000m;

location / {

proxy_pass http://127.0.0.1:180;

}

}

启动与开机自启动:

## 启动

service nginx start

#或者

systemctl start nginx

## 设置开机自启动

systemctl enable nginx.service

至此,在浏览器中已经可以直接使用 http://harbor.k8s.com/ 访问 harbor 。

在完成整个项目部署后会正对防火墙进行配置,会关闭 hubo-200 主机 180 端口的对外访问。

测试私有仓库:

访问 http://harbor.k8s.com/ 登录,新建一个项目:public

向私有仓库 push 第一个镜像:

- 先给已有镜像打标签 tag

docker tag nginx:1.7.9 harbor.k8s.com/public/nginx:v1.7.9nginx:1.7.9 是从公网拉的:docker pull nginx:1.7.9

- 登录私有仓库:docker login

docker login -u admin harbor.k8s.com - 推送

docker push harbor.od.com/public/nginx:v1.7.9 - 退出

docker logout

镜像来源可以从公网

pull的,如果没有网,那就从别的地方上创导宿主机然后docker load,或者在宿主机上构建。

执行 docker login harbor.k8s.com 时遇到问题:

Error response from daemon: Get http://harbor.k8s.com/v2/: Get http://harbor.k8s.com:180/service/token?account=admin&client_id=docker&offline_token=true&service=habor-registry: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

错误分析:docker使用Http请求并且是Get,访问 http://harbor.k8s.com:180 ,说明 DNS 解析是正常的,但是代理出了问题,正常应该是 180 端口通过 Nginx 交给了 80 端口代理,命令缺省,说明访问的是 80 端口,而错误日志却显示 180,说明 Nginx 应该也没有问题,至少是代理了, 所以需要检查一下 harbor 的配置:发现一个 external_url 的配置,以及其官网:

# Uncomment external_url if you want to enable external proxy

# And when it enabled the hostname will no longer used

# external_url: https://reg.mydomain.com:8433

如果要启用外部代理,请取消 external_url 的注释

当它启用时,主机名将不再使用

如果取消这个注释,那么主机名就不会生效了,于是检查了一下配置的主机名,果然写错了,写的 harber.k8s.com ,但是使用的是 harbor.k8s.com ,harbor认为 harbor.k8s.com 是一个 external_url ,但是配置文件中又没有将这个配置打开,所以 harbor 访问时会出错

解决:

-

把主机名写正确,或者打开 external_url 配置,地址设置为

http://harbor.k8s.com:80 -

删除所有 harbor 相关的容器,停止所有 harbor 服务

## 在 /opt/harbor/ 目录下 docker-compose down -

删除之前安装 harbor 的配置目录

## 在 /opt/harbor/ 目录下 rm -rf ./common/config -

重新安装执行命令

## 在 /opt/harbor/ 目录下 ./install.sh

部署 etcd 存储服务集群

etcd 目标是构建一个高可用的分布式键值(key-value)数据库。etcd内部采用 raft 协议作为一致性算法,基于Go语言实现。

优势:

- 简单:安装配置简单,而且提供了HTTP API进行交互,使用也很简单

- 安全:支持SSL证书验证

- 快速:根据官方提供的benchmark数据,单实例支持每秒2k+读操作

- 可靠:采用raft算法,实现分布式系统数据的可用性和一致性

etcd 详细介绍

目标,在 hubo-12,hubo-21,hubo-22 上部署 etcd 集群

| 主机名 | 角色 | IP |

|---|---|---|

| hubo-12 | etcd lead | 192.168.116.12 |

| hubo-21 | etcd follow | 192.168.116.21 |

| hubo-22 | etcd follow | 192.168.116.22 |

注: 以 hubo-12 主 etcd节点部署为例,另外两个节点类似。

下载 etcd

etcd 地址:https://github.com/etcd-io/etcd/releases

本文使用版本: etcd-v3.5.0-linux-amd64.tar.gz

下载后放到/opt/application-src/目录下

解压:tar -xf etcd-v3.5.0-linux-amd64.tar.gz

移动到/opt并重命名:mv etcd-v3.5.0-linux-amd64 /opt/etcd-v3.5.0

创建软连接:ln -s /opt/etcd-v3.5.0 /opt/etcd

查看结果:ll /opt/etcd

创建 etcd 用户

useradd -s /sbin/nologin -M etcd

创建一些目录:

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

至此,三台机器都完成了下载解压创建用户和目录的步骤。(目录和用户是后面需要使用的)

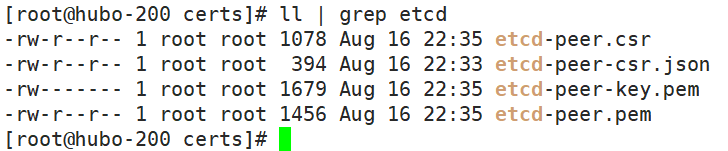

为 etcd 服务签发证书:

使用 hubo-200 虚拟主机上的 cfssl 证书签发服务制作 etcd 需要的证书,并分发到三台 etcd 虚拟主机。

注:在hubo-200主机上操作

创建根证书配置文件 /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

profiles 说明:

server 表示服务端连接客户端时携带的证书,用于客户端验证服务端身份

client 表示客户端连接服务端时携带的证书,用于服务端验证客户端身份

peer 表示相互之间连接时使用的证书,如etcd节点之间验证

expiry: 过期时间,有效时长

创建 etcd 证书配置:/opt/certs/etcd-peer-csr.json

{

"CN": "k8s-etcd",

"hosts": [

"192.168.116.11",

"192.168.116.12",

"192.168.116.21",

"192.168.116.22"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "liaoning",

"L": "shenyang",

"O": "edu",

"OU": "development"

}

]

}

host: 所有可能的etcd服务器添加到host列表

签发 ectd-peer 证书:

## 在 /opt/certs/ 目录下

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

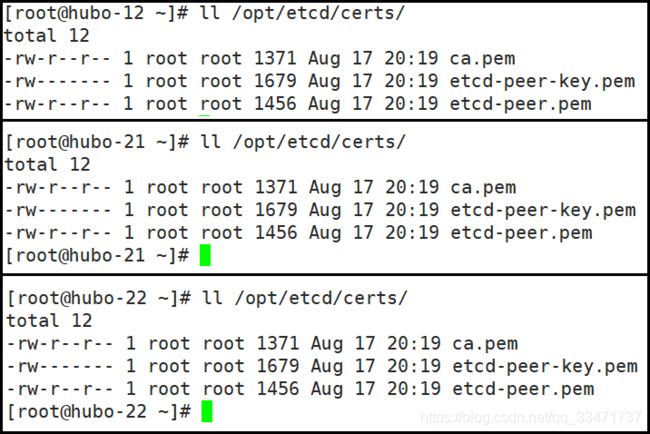

hubo-200 证书签发服务向 etcd 服务分发证书:

##

for i in 12 21 22;do scp ca.pem etcd-peer.pem etcd-peer-key.pem hubo-${i}:/opt/etcd/certs/ ;done

启动 etcd 服务

创建 etcd 的启动脚本:

在三台待安装 etcd 服务的虚拟主机中分别创建

/opt/etcd/etcd-server-startup.sh启动脚本

vi /opt/etcd/etcd-server-startup.sh

etcd-server-startup.sh(以192.168.116.12 主机为例)

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/etcd/etcd --name etcd-server-12 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.116.12:2380 \

--listen-client-urls https://192.168.116.12:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.116.12:2380 \

--advertise-client-urls https://192.168.116.12:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-12=https://192.168.116.12:2380,etcd-server-21=https://192.168.116.21:2380,etcd-server-22=https://192.168.116.22:2380 \

--trusted-ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth=true \

--peer-trusted-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth=true \

--log-outputs stdout

三台 etcd 主机中的启动脚本略有差异,需要修改的参数:name,listen-peer-urls,listen-client-urls,initial-advertise-peer-urls

修改 etcd 目录及文件的所属和权限

## /opt

chmod u+x /opt/etcd/etcd-server-startup.sh

chown -R etcd.etcd /opt/etcd/ /data/etcd /data/logs/etcd-server

supervisor 管理启动 etcd 服务

至此已经可以使用

./etcd-server-startup.sh启动 etcd 服务。

注意:如若直接使用 root 用户通过./etcd-server-startup.sh指令启动 etcd 服务,etcd 服务会在 /data/etcd 目录下创建 root 权限的一些文件夹 etcd-server ,这样以后用 etcd 用户启动时会出现权限问题,需要该权限

因为这些进程都是要启动为后台进程,要么手动启动,要么采用后台进程管理工具,此处使用后台管理工具 supervisor 来管理 etcd 服务。

## 安装 supervisor 工具

yum install -y supervisor

## 启动 supervisor

systemctl start supervisord

## 允许 supervisor 开机启动

systemctl enable supervisord

## 向 supervisor 中增加一个启动 etcd 的配置

vi /etc/supervisord.d/etcd-server.ini

/etc/supervisord.d/etcd-server.ini 内容:

[program:etcd-server-12]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; retstart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=5 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

更新 supervisor

supervisorctl update

### 输出 etcd-server-12: added process group

supervisor的使用命令:

## 以下命令 [servername] 换成 all 时针对 supervisor 管理的所有后台服务,(也可以使用分组)

# 启动服务命令 supervisorctl start [servername]

supervisorctl start etcd-server-12

# 停止服务命令 supervisorctl start [servername]

supervisorctl stop etcd-server-12

# 重启服务命令 supervisorctl start [servername]

supervisorctl restart etcd-server-12

# 查看指定服务状态命令 supervisorctl start [servername]

supervisorctl status etcd-server-12

相同方式启动另外两台主机的 etcd

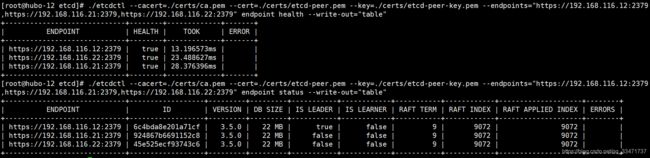

检测 etcd :

### 在 /opt/etcd 目录下

cd /opt/etcd

## 查看各 etcd 服务是否正常

./etcdctl --cacert=./certs/ca.pem --cert=./certs/etcd-peer.pem --key=./certs/etcd-peer-key.pem --endpoints="https://192.168.116.12:2379,https://192.168.116.21:2379,https://192.168.116.22:2379" endpoint health --write-out="table"

## 查看各 etcd 服务状态

./etcdctl --cacert=./certs/ca.pem --cert=./certs/etcd-peer.pem --key=./certs/etcd-peer-key.pem --endpoints="https://192.168.116.12:2379,https://192.168.116.21:2379,https://192.168.116.22:2379" endpoint status --write-out="table"

使用客户端(如:etcdctl工具)连接 etcd 服务时,需要带上证书,需要验证。其他 etcd 数据操作命令可以参考 官方文件

![]()

k8s 主控节点

部署 kube-apiserver 集群

部署计划:在 hubo-21 和 hubo-22 上部署

下载资源:

- kubernetes的github页面: https://github.com/kubernetes/kubernetes

- 选择一个 release 版本:https://github.com/kubernetes/kubernetes/releases/tag/v1.19.11

- 下载已经编译好的组件包(二进制文件)地址:https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.19.md#downloads-for-v11914

- 下载对应的二进制文件:kubernetes-server-linux-amd64.tar.gz

下载下来放到/opt/application-src目录下:

## 在先获取, /opt/application-src 下执行

wget https://dl.k8s.io/v1.19.14/kubernetes-server-linux-amd64.tar.gz

## 解压

tar -xf kubernetes-server-linux-amd64.tar.gz

## 移动并重命名,加上版本号便于区分

mv kubernetes /opt/kubernetes-1.19.14

## 到/opt 目录 创建软连接

cd /opt/

ln -s /opt/kubernetes /opt/kubernetes-1.19.14

删除一些没有用的资源

## 到 /opt/kubernetes 目录下 删除源码包 kubernetes-src.tar.gz

cd /opt/kubernetes

rm -f kubernetes-src.tar.gz

## 到 /opt/kubernetes/server/bin 路径下删除不需要的 docker 镜像文件

cd /opt/kubernetes/server/bin

rm -f *.tar *_tag

签发证书

hubo-200 主机制作证书,并下发。所以在 hubo-200 的 /opt/certs 目录下完成。

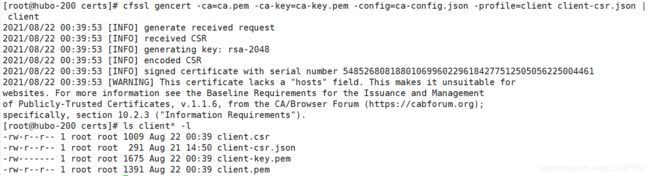

客户端与服务端通信时需要的证书:client 证书

例如 apiserver 与 etcd 服务通信时,需要携带 client 证书

客户端证书配置文件:client-csr.json

## hubo-200 主机 /opt/certs/ 目录下

vi /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "liaoning",

"L": "shenyang",

"O": "edu",

"OU": "development"

}

]

}

制作证书:

## /opt/certs 目录下

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json | cfssl-json -bare client

服务端与客户端通信时需要的证书:server 证书

例如 apiserver 和其它 k8s 组件通信使用

编辑配置文件:

vi /opt/certs/apiserver-csr.json

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"192.168.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"192.168.116.10",

"192.168.116.21",

"192.168.116.22",

"192.168.116.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "liaoning",

"L": "shenyang",

"O": "edu",

"OU": "development"

}

]

}

签发证书

## /opt/certs 目录下

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json | cfssl-json -bare apiserver

证书下发到 hubo-21 和 hubo-22

for i in 21 22;do echo hubo-$i;ssh hubo-$i "mkdir /opt/kubernetes/server/bin/certs";scp apiserver-key.pem apiserver.pem ca-key.pem ca.pem client-key.pem client.pem hubo-$i:/opt/kubernetes/server/bin/certs/;done

配置 apiserver 日志审计

涉及此配置的主机: hubo-21 、hubo-22

mkdir /opt/kubernetes/conf

vi /opt/kubernetes/conf/audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.

kind: Policy

# Don't generate audit events for all requests in RequestReceived stage.

omitStages:

- "RequestReceived"

rules:

# Log pod changes at RequestResponse level

- level: RequestResponse

resources:

- group: ""

# Resource "pods" doesn't match requests to any subresource of pods,

# which is consistent with the RBAC policy.

resources: ["pods"]

# Log "pods/log", "pods/status" at Metadata level

- level: Metadata

resources:

- group: ""

resources: ["pods/log", "pods/status"]

# Don't log requests to a configmap called "controller-leader"

- level: None

resources:

- group: ""

resources: ["configmaps"]

resourceNames: ["controller-leader"]

# Don't log watch requests by the "system:kube-proxy" on endpoints or services

- level: None

users: ["system:kube-proxy"]

verbs: ["watch"]

resources:

- group: "" # core API group

resources: ["endpoints", "services"]

# Don't log authenticated requests to certain non-resource URL paths.

- level: None

userGroups: ["system:authenticated"]

nonResourceURLs:

- "/api*" # Wildcard matching.

- "/version"

# Log the request body of configmap changes in kube-system.

- level: Request

resources:

- group: "" # core API group

resources: ["configmaps"]

# This rule only applies to resources in the "kube-system" namespace.

# The empty string "" can be used to select non-namespaced resources.

namespaces: ["kube-system"]

# Log configmap and secret changes in all other namespaces at the Metadata level.

- level: Metadata

resources:

- group: "" # core API group

resources: ["secrets", "configmaps"]

# Log all other resources in core and extensions at the Request level.

- level: Request

resources:

- group: "" # core API group

- group: "extensions" # Version of group should NOT be included.

# A catch-all rule to log all other requests at the Metadata level.

- level: Metadata

# Long-running requests like watches that fall under this rule will not

# generate an audit event in RequestReceived.

omitStages:

- "RequestReceived"

配置启动脚本

涉及此配置的主机: hubo-21 、hubo-22

vi /opt/kubernetes/server/bin/kube-apiserver-startup.sh

#!/bin/bash

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-apiserver \

--apiserver-count 2 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file ../../conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./certs/ca.pem \

--requestheader-client-ca-file ./certs/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./certs/ca.pem \

--etcd-certfile ./certs/client.pem \

--etcd-keyfile ./certs/client-key.pem \

--etcd-servers https://192.168.116.12:2379,https://192.168.116.21:2379,https://192.168.116.22:2379 \

--service-account-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 192.100.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./certs/client.pem \

--kubelet-client-key ./certs/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./certs/apiserver.pem \

--tls-private-key-file ./certs/apiserver-key.pem \

--v 2

这里需要修改 --etcd-servers https://192.168.116.12:2379,https://192.168.116.21:2379,https://192.168.116.22:2379 分别三台etcd的地址

添加可执行权限:

chmod +x /opt/kubernetes/server/bin/kube-apiserver-startup.sh

supervisor 管理 apiserver

涉及此配置的主机: hubo-21 、hubo-22

添加 supervisor 启动配置:

vi /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver-21]

command=/opt/kubernetes/server/bin/kube-apiserver-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

program:kube-apiserver-21 须有根据不同的主机设置

创建需要的目录:

mkdir -p /data/logs/kubernetes/kube-apiserver/

更新 supervisor 的配置,查看状态

supervisorctl state

supervisorctl status

启动停止命令:

supervisorctl start kube-apiserver-21

supervisorctl stop kube-apiserver-21

supervisorctl restart kube-apiserver-21

supervisorctl status kube-apiserver-21

通过进程查看启动状态:

netstat -lntp|grep api

ps uax|grep kube-apiserver|grep -v grep

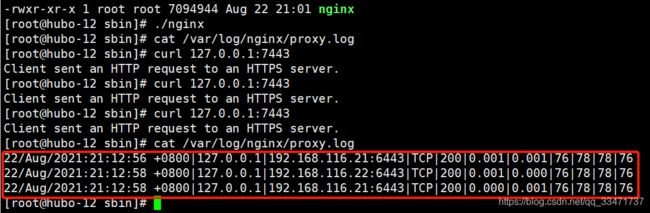

apiserver L4代理

在 hubo-11 和 hubo-12 主机为 apiserver 做代理

源码编译安装:

## 安装必要的编译环境

yum -y install gcc gcc-c++ make automake autoconf pcre pcre-devel zlib zlib-devel openssl openssl-devel libtool

## /opt/application-src 目录下

wget http://nginx.org/download/nginx-1.20.1.tar.gz

tar -xf nginx-1.20.1.tar.gz

mv nginx-1.20.1 /opt/nginx-1.20.1

cd /opt

ln -s nginx-1.20.1 nginx

cd nginx

### 配置编译目标文件(根据个人需求可对配置命令做出修改) 具体选型可以 ./configure --help 查看

./configure --prefix=/usr/local/nginx --sbin-path=/usr/local/nginx/sbin/nginx --conf-path=/usr/local/nginx/conf/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid --lock-path=/var/lock/nginx.lock --user=nginx --group=nginx --with-http_ssl_module --with-http_stub_status_module --with-http_gzip_static_module --http-client-body-temp-path=/var/tmp/nginx/client/ --http-proxy-temp-path=/var/tmp/nginx/proxy/ --http-fastcgi-temp-path=/var/tmp/nginx/fcgi/ --http-uwsgi-temp-path=/var/tmp/nginx/uwsgi --http-scgi-temp-path=/var/tmp/nginx/scgi --with-stream --with-pcre

## 创建需要的目录

mkdir -p /var/tmp/nginx/client/

## 防止启动时保持 nginx: [emerg] open() "/var/run/nginx/nginx.pid" failed (2: No such file or directory)

mkdir /var/run/nginx

## 安装

make && make install

## 检测配置

/usr/local/nginx/sbin/nginx -t

## 修改配置

vi /usr/local/nginx/conf/nginx.conf

## 不属于 http 块,在文件最后追加

stream {

log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'

'$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

upstream kube-apiserver {

server 192.168.116.21:6443 max_fails=3 fail_timeout=30s;

server 192.168.116.22:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

access_log /var/log/nginx/proxy.log proxy;

}

}

启动 Nginx 服务:

## 启动

/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf

/usr/local/nginx/sbin/nginx -s stop #停止Nginx

/usr/local/nginx/sbin/nginx -s reload #不停止服务,重新加载配置文件

测试:

ps aux|grep nginx

curl 127.0.0.1:7443

cat /var/log/nginx/proxy.log

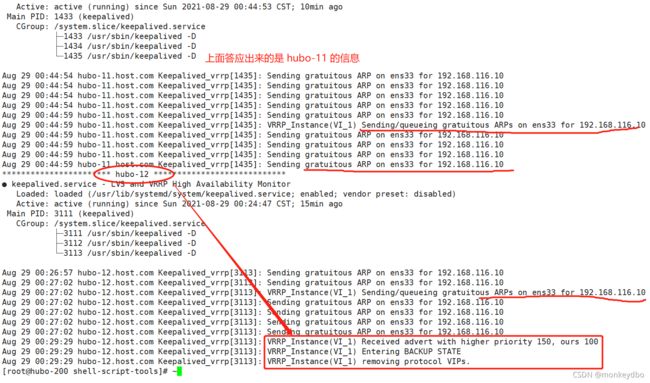

keepalive 安装部署—实现 vip :192.168.116.10

yum install keepalived -y

vi /etc/keepalived/check_port.sh

#!/bin/bash

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`

if [ $PORT_PROCESS -eq 0 ];then

echo "Port $CHK_PORT Is Not Used,End."

exit 1

fi

else

echo "Check Port Can Not Be Empty!"

fi

配置主备 keepalived :

hubo-11 这只为主节点:配置如下:

[root@hubo-11 ~]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 192.168.116.11

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 50

mcast_src_ip 192.168.116.11

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.116.10/24 label ens33:0

}

}

hubo-12 设置为备用:配置如下

[root@hubo-12 ~]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 192.168.116.12

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 50

mcast_src_ip 192.168.116.12

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.116.10/24

}

}

启动keepalived 并设置开机启动,测试方式很简单,查看 ip addr 主节点上会有 vip 192.168.116.10,如果主节点宕机,vip 会出现在备用节点。

curl 192.168.116.10:7443 也可以在对应的 nginx 日志中看到访问记录。

状态查看:systemctl status keepalived

keepalive 详细内容参考: 参考1 、参考2

controller-manager 安装

部署计划:在 hubo-21 和 hubo-22 上部署

controller-manager 设置为只调用当前主机的 apiserver 服务,走 127.0.0.1 ,因此不配制SSL证书。

创建启动脚本

vi /opt/kubernetes/server/bin/kube-controller-manager-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-controller-manager \

--cluster-cidr=172.7.0.0/16 \

--leader-elect=true \

--log-dir=/data/logs/kubernetes/kube-controller-manager \

--master=http://127.0.0.1:8080 \

--service-account-private-key-file=./certs/ca-key.pem \

--service-cluster-ip-range=192.100.0.0/16 \

--root-ca-file=./certs/ca.pem \

--v=2

常规操作,添加可执行权限:chmod u+x /opt/kubernetes/server/bin/kube-controller-manager-startup.sh

配置supervisor启动配置

vi /etc/supervisord.d/kube-controller-manager.ini

[program:kube-controller-manager-21]

command=/opt/kubernetes/server/bin/kube-controller-manager-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; retstart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stderr log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

## 创建日志目录

mkdir -p /data/logs/kubernetes/kube-controller-manager/

启动

## supervisor 更新配置

supervisorctl update

## 查看状态

supervisorctl status

kube-scheduler 调度服务安装

部署计划:在 hubo-21 和 hubo-22 上部署

kube-scheduler 设置为只调用当前机器的 apiserver,走127.0.0.1网卡,因此不配制SSL证书

创建启动脚本

vi /opt/kubernetes/server/bin/kube-scheduler-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

## 添加可执行权限

chmod u+x /opt/kubernetes/server/bin/kube-scheduler-startup.sh

## 创建调度日志目录

mkdir -p /data/logs/kubernetes/kube-scheduler

配置supervisor启动配置

vi /etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-21]

command=/opt/kubernetes/server/bin/kube-scheduler-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

启动

supervisorctl update

supervisorctl status

检查主控节点状态

## 创建一个软连接导 /usr/local/bin/ 方便使用命令

ln -s /opt/kubernetes/server/bin/kubectl /usr/local/bin/

kubectl get cs

k8s 运算节点

主控节点和运算节点何以在同一台机器上,逻辑上把他们看成分开的,所以这里将运算节点也部署在 hubo-21 和 hubo-22 上。

kubelet 部署

签发证书

配置:

由 hubo-200 主机签发 kubelet 服务使用的证书

## 在 hubo-200 主机

cd /opt/certs/

证书配置 json 文件:kubelet-csr.json

{

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"192.168.116.10",

"192.168.116.21",

"192.168.116.22",

"192.168.116.23",

"192.168.116.24",

"192.168.116.25",

"192.168.116.26",

"192.168.116.27",

"192.168.116.28"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "liaoning",

"L": "shenyang",

"O": "edu",

"OU": "development"

}

]

}

hosts 中填写所有可能的 kubelet 机器IP。

签发:

## /opt/certs/ 目录下制作证书

cfssl gencert -ca=ca.pem -ca-key ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

## 查看

ls kubelet* -l

## 分发

scp kubelet.pem kubelet-key.pem hubo-21:/opt/kubernetes/server/bin/certs/

scp kubelet.pem kubelet-key.pem hubo-22:/opt/kubernetes/server/bin/certs/

创建 kubelet 配置

在 hubo-21 和 hubo-22 主机上操作,在

/opt/kubernetes/conf/目录下创建配置

-

set-cluster

Sets a cluster entry in kubeconfigkubectl config set-cluster myk8s \ --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \ --embed-certs=true \ --server=https://192.168.116.10:7443 \ --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfigmyk8s 是给集群起的一个名称

server 参数指向的是 apiserver,但是为了高可用做了代理,并且使用了 vip ,所有指向了192.168.116.10:7443

kubeconfig 表示配置文件的位置,将生成/opt/kubernetes/conf/kubelet.kubeconfig文件

embed-certs 表示是否下给你配置文件中嵌入证书,true 时会把正式通过base64编码后写道配置文件中命令的详细信息可以使用 -h 产看:

kubectl config set-cluster -h -

set-credentials

Sets a user entry in kubeconfigkubectl config set-credentials k8s-node \ --client-certificate=/opt/kubernetes/server/bin/certs/client.pem \ --client-key=/opt/kubernetes/server/bin/certs/client-key.pem \ --embed-certs=true \ --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig -

set-context

Sets a context entry in kubeconfigkubectl config set-context myk8s-context \ --cluster=myk8s \ --user=k8s-node \ --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig这里

user=k8s-node是用户名,这个会对应到 client 证书中设置的CN值。也就是说,之前创建的 client 证书在 k8s 集群中只能给用户名为user=k8s-node的用户使用。 -

use-context

Sets the current-context in a kubeconfig filekubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

在 hubo-21 主机上指向完成以上四个步骤后,会在 /opt/kubernetes/conf/ 目录下生成一个 kubelet 的配置文件 kubelet.kubeconfig,其中存储了以上四个步骤的配置,把改文件传到 hubo-22 主机上对应的位置即可,不需要在 hubo-22 上再重发这个步骤。

## 在 hubo-21 上执行

scp /opt/kubernetes/conf/kubelet.kubeconfig hubo-22:/opt/kubernetes/conf/

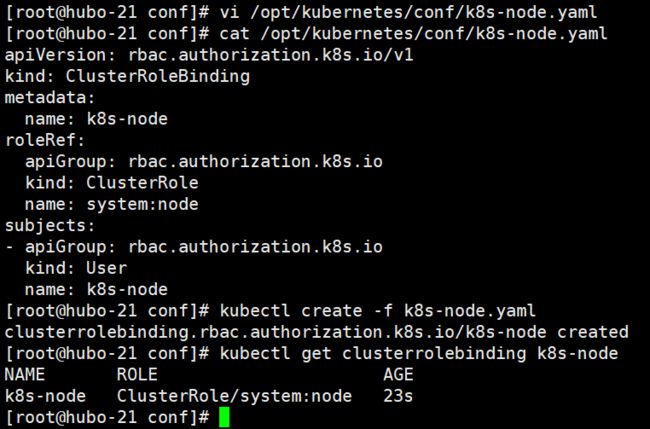

角色绑定(ClusterRoleBinding —— 是一个集群资源,被存储到了 etcd 中)

授权 k8s-node 用户,在配置 kubelet 时设置了一个k8s集群用户 k8s-node,现在需要授予它一个集群权限,这个权限使它在集群中具备成为运算节点的权限。【RBAC】

创建配置文件:vi /opt/kubernetes/conf/k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: k8s-node

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: k8s-node

### 创建

kubectl create -f k8s-node.yaml

### 查看

kubectl get clusterrolebinding k8s-node

kubectl get clusterrolebinding k8s-node -o yaml

这个集群用户角色在 hubo-21 上创建即可,创建成功后,在 hubo-22 也可以查到。

删除指令kubectl delete -f k8s-node.yaml

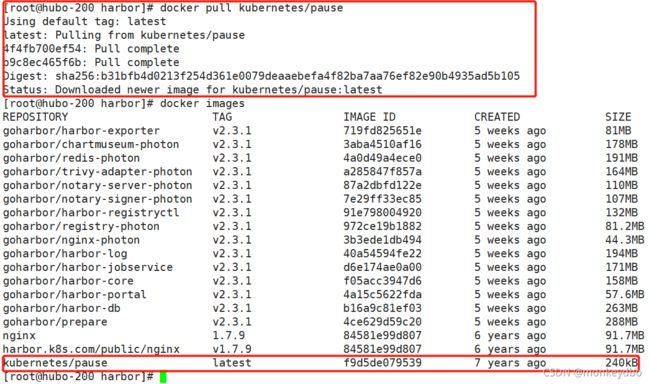

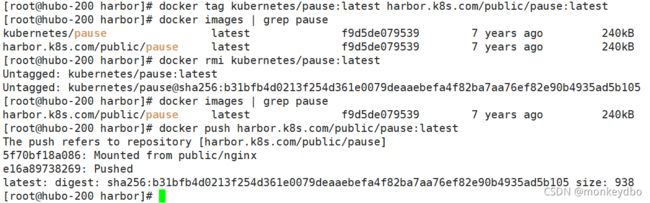

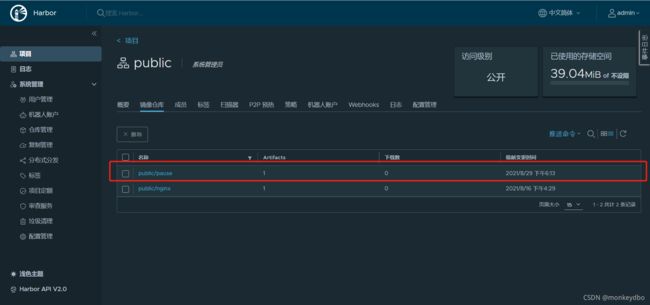

装备 pause 基础镜像

在hubo-200 主机上操作,从共有镜像仓库拉取 pause 镜像(或者上传镜像文件,docker load 加载到 hubo-200 主机的docker 环境中),然后上传到私有仓库。

pause 基础镜像与 Pod 一一对应的,在初始话 Pod 时又它来完成一些共享资源的定义,然后 Pod 中的其他资源都会共享这些资源(如网络资源)。【详细参考1,详细参考2】

## hubo-200 上执行

docker pull kubernetes/pause

docker tag kubernetes/pause:latest harbor.k8s.com/public/pause:latest

docker push harbor.k8s.com/public/pause:latest

关于 docker 的基础操作参考连接: docker 学习笔记专栏

如果传不上去检查是否登录,如果没有:docker login -u admin harbor.k8s.com详细内容在本文 harbor 仓库搭建章节又介绍。

创建启动参数配置文件和启动脚本

在 hubo-21 、 hubo-22 (即前期设计的 node 节点)创建脚本。

启动参数配置文件

官网参考文档 KubeletConfiguration.yml

vi /opt/kubernetes/conf/kubelet-configuration.yaml

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

clusterDNS:

- 192.100.0.2

clusterDomain: cluster.local

#cgroupRoot: /systemd/system.slice

kubeletCgroups: /systemd/system.slice

failSwapOn: false

authentication:

x509:

clientCAFile: /opt/kubernetes/server/bin/certs/ca.pem

anonymous:

enabled: false

tlsCertFile: /opt/kubernetes/server/bin/certs/kubelet.pem

tlsPrivateKeyFile: /opt/kubernetes/server/bin/certs/kubelet-key.pem

imageGCHighThresholdPercent: 80

imageGCLowThresholdPercent: 20

踩坑:老版本中这些参数都可以在启动命令中指定,但是在较新的版本中有些参数必须在这个配置文件中指定,然后再启动时通过 --config [file-path] 来指定参数配置文件

详细内容:官网:Kubelet Configuration (v1beta1)

启动脚本

vi /opt/kubernetes/server/bin/kubelet-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kubelet \

--config /opt/kubernetes/conf/kubelet-configuration.yaml \

--runtime-cgroups=/systemd/system.slice \

--hostname-override hubo-21.host.com \

--kubeconfig ../../conf/kubelet.kubeconfig \

--log-dir /data/logs/kubernetes/kube-kubelet \

--pod-infra-container-image harbor.k8s.com/public/pause:latest \

--root-dir /data/kubelet

chmod u+x /opt/kubernetes/server/bin/kubelet-startup.sh

mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

启动时踩的坑:【misconfiguration : kubelet cgroup driver: “systemd” is different from docker cgroup driver: "cgro】- - - - 分析原因:这是由于 docker cgroup driver 和kubelet cgroup driver 配置不一致导致

解决办法:修改 docker 的daemon.json文件

重启:systemctl restart docker

交给 supervisor 管理

vi /etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-21]

command=/opt/kubernetes/server/bin/kubelet-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

supervisorctl update

supervisorctl status

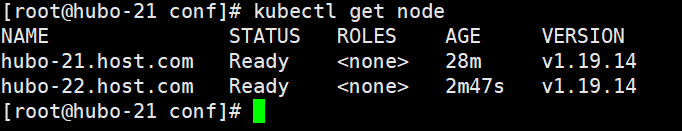

## 查看集群中的节点资源

kubectl get node

## 加 node 标签

kubectl label node hubo-21.host.com node-role.kubernetes.io/node=

kubectl label node hubo-22.host.com node-role.kubernetes.io/node=

## 加 master标签

kubectl label node hubo-21.host.com node-role.kubernetes.io/master=

kubectl label node hubo-22.host.com node-role.kubernetes.io/master=

kubectl get node

## 标记后的结果(这个只是标记作用,应用:例如污点处理)

NAME STATUS ROLES AGE VERSION

hubo-21.host.com Ready master,node 31m v1.19.14

hubo-22.host.com Ready master,node 5m40s v1.19.14

kube-proxy部署

签发证书

由 hubo-200 主机签发 kube-proxy服务使用的证书

配置:

vi /opt/certs/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "liaoning",

"L": "shenyang",

"O": "edu",

"OU": "development"

}

]

}

CN可以对应 k8s 集群中的角色,这里设置为system:kube-proxy对应的就是集群中的system:kube-proxy角色。

在上一节(kubelet 部署)中通过kubectl create -f k8s-node.yaml创建了一个角色绑定资源,创建的角色是system:node,并绑定了k8s-node用户。

在之前创建 client 证书时,使用的 CN 是k8s-node,意思是对应集群中的k8s-node用户,也就是说,这个证书在 k8s 集群中只能给k8s-node用户使用。所以kube-proxy用户(该用户在后面的配置中设置)需要额外定制自己的证书。【当然,kube-proxy也可以叫k8s-node,者应就可以用一套client证书了】

签发证书

## /opt/certs/ 目录下执行

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssl-json -bare kube-proxy-client

## 查看

ls kube-proxy-c* -l

分发证书

## /opt/certs/ 目录下执行

scp kube-proxy-client-key.pem kube-proxy-client.pem hubo-21:/opt/kubernetes/server/bin/certs/

scp kube-proxy-client-key.pem kube-proxy-client.pem hubo-22:/opt/kubernetes/server/bin/certs/

执行结果:

[root@hubo-200 certs]# scp kube-proxy-client-key.pem kube-proxy-client.pem hubo-21:/opt/kubernetes/server/bin/certs/

kube-proxy-client-key.pem 100% 1679 1.7MB/s 00:00

kube-proxy-client.pem 100% 1403 776.0KB/s 00:00

[root@hubo-200 certs]# scp kube-proxy-client-key.pem kube-proxy-client.pem hubo-22:/opt/kubernetes/server/bin/certs/

kube-proxy-client-key.pem 100% 1679 1.4MB/s 00:00

kube-proxy-client.pem 100% 1403 845.2KB/s 00:00

创建 kube-proxy 配置

同 kubelet 创建配置类似(过程相同,内容有细微区别),在 hubo-21 上完成,然后把生成的文件复制到 hubo-22上即可。

在/opt/kubernetes/conf/目录下操作

-

set-cluster

kubectl config set-cluster myk8s \ --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \ --embed-certs=true \ --server=https://192.168.116.10:7443 \ --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig -

set-credentials

kubectl config set-credentials kube-proxy \ --client-certificate=/opt/kubernetes/server/bin/certs/kube-proxy-client.pem \ --client-key=/opt/kubernetes/server/bin/certs/kube-proxy-client-key.pem \ --embed-certs=true \ --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig创建一个

kube-proxy用户记录。 -

set-context

Sets a context entry in kubeconfigkubectl config set-context myk8s-context \ --cluster=myk8s \ --user=kube-proxy \ --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig这里

user=kube-proxy是选用的用户. -

use-context

Sets the current-context in a kubeconfig filekubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig

将 kube-proxy.kubeconfig 配置文件同步到 hubo-22 主机:

## 在 hubo-21 上执行

scp /opt/kubernetes/conf/kube-proxy.kubeconfig hubo-22:/opt/kubernetes/conf/

加载ipvs模块

三种流量调度模式(代理模式): namespace,iptables,ipvs

详细参考【kube-proxy的三种代理模式】

## 加载 ipvs 模块

for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

## 查看ipvs模块

lsmod | grep ip_vs

创建启动脚本

启动参数配置文件

官网参考文档 KubeProxyConfiguration.yml

官网参考文档

KubeProxyConfiguration.yml中的数据多了一层,KubeProxyConfiguration:,添加了这个启动会失败,层次不对,导致读不到响应的配置参数。

参数配置文件以 hubo-21 为例,hubo-22上需要改一下--hostnameOverride的值。

vi /opt/kubernetes/conf/kube-proxy-configuration.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1

clientConnection:

kubeconfig: /opt/kubernetes/conf/kube-proxy.kubeconfig

clusterCIDR: "172.7.0.0/16"

hostnameOverride: "hubo-21.host.com"

ipvs:

scheduler: "nq"

kind: KubeProxyConfiguration

mode: "ipvs"

参数详细说明:官网:kube-proxy Configuration (v1alpha1)

启动脚本

vi /opt/kubernetes/server/bin/kube-proxy-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-proxy --config /opt/kubernetes/conf/kube-proxy-configuration.yaml

授与可执行权限:

chmod u+x /opt/kubernetes/server/bin/kube-proxy-startup.sh

创建用到的目录:

mkdir -p /data/logs/kubernetes/kube-proxy

交给 supervisor 管理

以 hubo-21 为例,hubo-22上需要改一下

program名称

vi /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-21]

command=/opt/kubernetes/server/bin/kube-proxy-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

## 更新 supervisor 配置

supervisorctl update

## 检查是否启动成功

supervisorctl status

查看结果:所有组件均已启动

[root@hubo-21 bin]# supervisorctl status

etcd-server-21 RUNNING pid 1102, uptime 3:00:30

kube-apiserver-21 RUNNING pid 1080, uptime 3:00:30

kube-controller-manager-21 RUNNING pid 1800, uptime 2:57:32

kube-kubelet-21 RUNNING pid 1087, uptime 3:00:30

kube-proxy-21 RUNNING pid 2659, uptime 0:00:47

kube-scheduler-21 RUNNING pid 1791, uptime 2:57:32

说明:这里主控节点的组件和运算节点的组件都部署在了同一台机器上,主控节点和运算节点可以分开部署,原理是一样的。同意,如果主控节点不要求高可用,也可以之部署一台主控节点,其他的运算节点都与它交互。

验证集群

利用 ipvsadm 查看集群网络结构

yum install -y ipvsadm

ipvsadm -Ln

[root@hubo-21 bin]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 192.168.116.21:6443 Masq 1 0 0

-> 192.168.116.22:6443 Masq 1 0 0

[root@hubo-22 bin]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 192.168.116.21:6443 Masq 1 0 0

-> 192.168.116.22:6443 Masq 1 0 0

创建 pod 资源

Pod 资源配置文件

官方参考文档 demo-pod.yml

## 把一些业务资源的配置文件放到 /opt/kubernetes/conf/resource/ 里面,方便查找

mkdir /opt/kubernetes/conf/resource

cd /opt/kubernetes/conf/resource/

## 创建一个 nginx 的 pod 资源配置文件

vi nginx-ds.yml

apiVersion: v1

kind: Pod

metadata:

name: nginx-ds

labels:

app: nginx-ds

spec:

containers:

- name: my-nginx

image: harbor.k8s.com/public/nginx:v1.7.9

ports:

- containerPort: 80

name: "http-server"

通过刚才的配置文件创建资源

## 在 /opt/kubernetes/conf/resource/ 目录下执行

kubectl create -f nginx-ds.yml

结果:

[root@hubo-22 resource]# kubectl create -f nginx-ds.yml

pod/nginx-ds created

查看集群中的 pod 资源(在另一台机器上查看,检查集群是否正确运行)

## 在 hubo-21 执行

kubectl get pods

结果:

[root@hubo-21 bin]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-ds 0/1 ContainerCreating 0 26s

[root@hubo-21 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-ds 1/1 Running 0 3m33s

[root@hubo-21 ~]#

## 查看 pods 信息

kubectl get pods -owide

[root@hubo-21 ~]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds 1/1 Running 0 5m13s 172.7.21.2 hubo-21.host.com

可以看出,这个 pod 被放到了 hubo-21 宿主机(虽然是在hubo-22上创建的资源)。此时测试是否可以访问,因为这是一个 pod 资源,还没有接 service 资源,现在只能内部访问,在 hubo-21 上 curl 一下:

[root@hubo-21 ~]# curl 172.7.21.2

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

但是在 hubo-22 上curl 这个地址会超时,因为此时他们直接的完了还没有通,需要借助 flannel 完成 Pod 网络的互联。

![]()