Training a language model from scratch with Transformers and Tokenizers

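This is a demo of training a "small" model (84 M parameters = 6 layers, 768 hidden size, 12 attention heads), which has the same number of layers and heads as DistilBERT, on Esperanto rather than English. The model can then be fine-tuned on a downstream sequence-labeling task.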

Download the dataset

Esperanto text corpora: the OSCAR corpus and the Leipzig Corpora Collection.

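The full training corpus is 3 GB, which is still small for a language model; the more data you can gather for pretraining, the better the results will be.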

```
# For this demo we only download the first part of the corpus (the OSCAR file)
!wget -c https://cdn-datasets.huggingface.co/EsperBERTo/data/oscar.eo.txt
```

Train a tokenizer

We train a byte-level Byte-Pair Encoding (BPE) tokenizer (the same kind as GPT-2), with the same special tokens as RoBERTa. We arbitrarily set its vocabulary size to 52,000.

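We recommend training a byte-level BPE (rather than, say, a WordPiece tokenizer like BERT's) because it starts building its vocabulary from an alphabet of single bytes, so every word can be decomposed into tokens (no more <unk> tokens!).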
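To see why a byte-level vocabulary never needs <unk>, here is a minimal sketch of the byte-to-character table used by GPT-2-style byte-level BPE. It is written from our understanding of GPT-2's reference encoder; the tokenizers library does this internally, and the helper names below are our own. Every UTF-8 byte gets a printable stand-in character, so any string, including Esperanto letters like ĉ and ŝ, maps onto a fixed 256-symbol base alphabet.

```python
from typing import Dict


def bytes_to_unicode() -> Dict[int, str]:
    """Map each of the 256 byte values to a printable Unicode character."""
    # Bytes that are already printable Latin-1 characters keep their code point.
    keep = (
        list(range(ord("!"), ord("~") + 1))
        + list(range(ord("¡"), ord("¬") + 1))
        + list(range(ord("®"), ord("ÿ") + 1))
    )
    byte_values = keep[:]
    code_points = keep[:]
    # All other bytes (control characters, space, ...) are shifted to code points >= 256.
    shift = 0
    for b in range(256):
        if b not in byte_values:
            byte_values.append(b)
            code_points.append(256 + shift)
            shift += 1
    return {b: chr(c) for b, c in zip(byte_values, code_points)}


BYTE_TO_CHAR = bytes_to_unicode()
CHAR_TO_BYTE = {c: b for b, c in BYTE_TO_CHAR.items()}


def to_byte_level(text: str) -> str:
    """Rewrite text as one base symbol per UTF-8 byte."""
    return "".join(BYTE_TO_CHAR[b] for b in text.encode("utf-8"))


def from_byte_level(symbols: str) -> str:
    """Invert to_byte_level, recovering the original text."""
    return bytes(CHAR_TO_BYTE[c] for c in symbols).decode("utf-8")


if __name__ == "__main__":
    for word in [" la", " ĉu", " ŝtono"]:
        encoded = to_byte_level(word)
        print(repr(word), "->", encoded, "->", repr(from_byte_level(encoded)))
```

A leading space becomes Ġ in this mapping, which is why entries such as "Ġ k" show up in merges.txt, and a two-byte letter like ĉ becomes two base symbols until a merge joins them.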

```
# We don't need TensorFlow here
#!pip uninstall -y tensorflow

# Install `transformers` from GitHub
!pip install git+https://github.com/huggingface/transformers

!pip list | grep -E 'transformers|tokenizers'
# transformers version at notebook update --- 2.11.0
# tokenizers version at notebook update --- 0.8.0rc1
```

```python
%%time
from pathlib import Path

from tokenizers import ByteLevelBPETokenizer

paths = [str(x) for x in Path(".").glob("**/*.txt")]

# Initialize a tokenizer
tokenizer = ByteLevelBPETokenizer()

# Customize training
tokenizer.train(files=paths, vocab_size=52_000, min_frequency=2, special_tokens=[
    "<s>",
    "<pad>",
    "</s>",
    "<unk>",
    "<mask>",
])
```

Output:

```
CPU times: user 4min, sys: 3min 7s, total: 7min 7s
Wall time: 2min 25s
```

```python
# Create a folder to save the trained tokenizer
!mkdir EsperBERTo
tokenizer.save_model("EsperBERTo")
```

We now have both a vocab.json, which lists the most frequent tokens ranked by frequency, and a merges.txt list of merges.

```
# vocab.json
{
    "<s>": 0,
    "<pad>": 1,
    "</s>": 2,
    "<unk>": 3,
    "<mask>": 4,
    "!": 5,
    "\"": 6,
    "#": 7,
    "$": 8,
    "%": 9,
    "&": 10,
    "'": 11,
    "(": 12,
    ")": 13,
    # ...
}
```

```
# merges.txt
l a
Ġ k
o n
Ġ la
t a
Ġ e
Ġ d
Ġ p
# ...
```
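Each line of merges.txt is one learned merge, listed in the order the trainer learned them. The toy sketch below shows the core idea of BPE training on a hypothetical word-frequency table: repeatedly count adjacent symbol pairs and merge the most frequent one. It is a simplification (the tokenizers trainer is heavily optimized, and its tie-breaking may differ). Here min_frequency plays roughly the role of the argument passed to tokenizer.train above: training stops once the best pair is rarer than that.

```python
from collections import Counter
from typing import Dict, List, Tuple


def merge_pair(symbols: List[str], pair: Tuple[str, str]) -> List[str]:
    """Replace every non-overlapping occurrence of `pair` with its concatenation."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def learn_bpe(word_freqs: Dict[str, int], num_merges: int,
              min_frequency: int = 2) -> List[Tuple[str, str]]:
    """Learn up to `num_merges` merges from a {word: count} table."""
    splits = {word: list(word) for word in word_freqs}  # start from single symbols
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        pair_counts: Counter = Counter()
        for word, freq in word_freqs.items():
            symbols = splits[word]
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.__getitem__)  # first-seen pair wins ties
        if pair_counts[best] < min_frequency:
            break
        merges.append(best)
        splits = {word: merge_pair(symbols, best) for word, symbols in splits.items()}
    return merges


if __name__ == "__main__":
    # Hypothetical counts; "Ġ" marks a leading space, as in the byte-level vocabulary.
    toy_counts = {"Ġla": 10, "Ġlaboro": 3, "Ġkato": 2}
    for a, b in learn_bpe(toy_counts, num_merges=4):
        print(a, b)  # same "left right" layout as merges.txt
```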

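Our tokenizer is optimized for Esperanto: compared with a tokenizer trained on English, more native words are represented by a single, unsplit token. Diacritics, i.e. the accented characters used in Esperanto (ĉ, ĝ, ĥ, ĵ, ŝ and ŭ), are encoded natively. On this corpus, encoded sequences are on average about 30% shorter than with the pretrained GPT-2 tokenizer.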
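Applying merges at encoding time is what lets a frequent Esperanto word end up as a single token. Below is a minimal sketch, assuming merges are applied greedily by rank (earliest-learned first) to a word that has already been converted into byte-level symbols. bpe_encode is a hypothetical helper, and the merge list contains only the first five lines of the merges.txt excerpt, not the full file.

```python
from typing import List, Tuple


def bpe_encode(word: str, merges: List[Tuple[str, str]]) -> List[str]:
    """Split `word` into BPE tokens by repeatedly applying the best-ranked merge."""
    ranks = {pair: rank for rank, pair in enumerate(merges)}  # lower rank = learned earlier
    symbols = list(word)
    while len(symbols) > 1:
        candidates = [
            (ranks[pair], i)
            for i, pair in enumerate(zip(symbols, symbols[1:]))
            if pair in ranks
        ]
        if not candidates:
            break  # no applicable merge left
        _, i = min(candidates)  # best rank; leftmost position on ties
        symbols[i : i + 2] = [symbols[i] + symbols[i + 1]]
    return symbols


if __name__ == "__main__":
    # First five merges from the merges.txt excerpt.
    merges = [("l", "a"), ("Ġ", "k"), ("o", "n"), ("Ġ", "la"), ("t", "a")]
    print(bpe_encode("Ġla", merges))    # ['Ġla']
    print(bpe_encode("Ġkato", merges))  # ['Ġk', 'a', 't', 'o']
```

With the full merge list, many more common words collapse to one token in the same way, which is where the shorter average sequence length comes from.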

Here's how to use it in tokenizers, including handling the RoBERTa special tokens. Of course, you can also use it directly from transformers.

```python
from tokenizers.implementations import ByteLevelBPETokenizer
from tokenizers.processors import BertProcessing

tokenizer = ByteLevelBPETokenizer(
    "./EsperBERTo/vocab.json",
    "./EsperBERTo/merges.txt",
)
tokenizer._tokenizer.post_processor = BertProcessing(
    ("</s>", tokenizer.token_to_id("</s>")),
    ("<s>", tokenizer.token_to_id("<s>")),
)
tokenizer.enable_truncation(max_length=512)

tokenizer.encode("Mi estas Julien.")
# Encoding(num_tokens=7, attributes=[ids, type_ids, tokens, offsets, attention_mask, special_tokens_mask, overflowing])

tokenizer.encode("Mi estas Julien.").tokens
# ['<s>', 'Mi', 'Ġestas', 'ĠJuli', 'en', '.', '</s>']
```
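Conceptually, the BertProcessing post-processor wraps each encoded sequence as <s> ... </s>, which is why the 5 tokens of "Mi estas Julien." become 7. Here is a minimal pure-Python sketch, assuming <s> has id 0 and </s> has id 2 (the order of the special_tokens list passed to train). wrap_roberta is a hypothetical helper. It reserves two positions so the result stays within max_length.

```python
from typing import List, Optional


def wrap_roberta(ids: List[int], bos_id: int = 0, eos_id: int = 2,
                 max_length: Optional[int] = 512) -> List[int]:
    """Add <s>/</s> around `ids`, truncating so the total fits in max_length."""
    if max_length is not None:
        ids = ids[: max_length - 2]  # keep room for the two special tokens
    return [bos_id] + list(ids) + [eos_id]


if __name__ == "__main__":
    print(wrap_roberta([5, 6, 7]))               # [0, 5, 6, 7, 2]
    print(len(wrap_roberta(list(range(1000)))))  # 512
```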

Train a language model from scratch

Reference: the run_language_modeling.py script and the Trainer.

We'll now train a RoBERTa-like model, which is a BERT-like model with a couple of changes (check the documentation for more details).

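Because the model is BERT-like, we train it on a masked language modeling task, i.e. predicting how to fill in arbitrary tokens that we randomly mask in the dataset. The example script takes care of this.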

```
# Check that we have a GPU
!nvidia-smi
```

```
Fri May 15 21:17:12 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.82       Driver Version: 418.67       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla P100-PCIE...  Off  | 00000000:00:04.0 Off |                    0 |
| N/A   38C    P0    26W / 250W |      0MiB / 16280MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

```python
# Check that PyTorch sees the GPU
import torch
torch.cuda.is_available()
```

Define the following config for the model:

```python
from transformers import RobertaConfig

config = RobertaConfig(
    vocab_size=52_000,
    max_position_embeddings=514,
    num_attention_heads=12,
    num_hidden_layers=6,
    type_vocab_size=1,
)
```

Recreate the tokenizer in transformers:

```python
from transformers import RobertaTokenizerFast

tokenizer = RobertaTokenizerFast.from_pretrained("./EsperBERTo", max_len=512)
```

Finally, initialize the model.

Note: when training from scratch, we initialize the model from a config only, not from an existing pretrained model or checkpoint.

```python
from transformers import RobertaForMaskedLM

model = RobertaForMaskedLM(config=config)

model.num_parameters()
# => 84 million parameters
# 84095008
```
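The 84,095,008 figure can be reproduced by hand from the config. This back-of-the-envelope sketch makes several assumptions. It uses the RobertaConfig defaults that are not set above (hidden_size=768, intermediate_size=3072). It treats the output projection as tied to the word embeddings, so that weight is not counted twice. It also assumes RobertaForMaskedLM includes a pooler layer, as in the transformers version used here; newer versions may omit it, which would give 590,592 fewer. roberta_mlm_params is our own helper.

```python
def roberta_mlm_params(vocab_size: int = 52_000, hidden: int = 768, layers: int = 6,
                       intermediate: int = 3072, max_positions: int = 514,
                       type_vocab: int = 1, include_pooler: bool = True) -> int:
    """Count parameters of a RoBERTa masked-LM with the given dimensions."""

    def linear(n_in: int, n_out: int) -> int:
        return n_in * n_out + n_out  # weight + bias

    layer_norm = 2 * hidden  # scale + shift

    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    per_layer = (
        3 * linear(hidden, hidden)        # query, key, value projections
        + linear(hidden, hidden)          # attention output projection
        + layer_norm
        + linear(hidden, intermediate)    # feed-forward up-projection
        + linear(intermediate, hidden)    # feed-forward down-projection
        + layer_norm
    )
    pooler = linear(hidden, hidden) if include_pooler else 0
    # LM head: dense + LayerNorm + per-token output bias; the decoder weight
    # is tied to the word embeddings, so it adds no new parameters.
    lm_head = linear(hidden, hidden) + layer_norm + vocab_size
    return embeddings + layers * per_layer + pooler + lm_head


if __name__ == "__main__":
    print(roberta_mlm_params())  # 84095008
    print(52_000 * 768)          # word embeddings alone: 39936000
```

The word-embedding matrix alone accounts for almost half of the total, so vocab_size is a large lever on the size of a small model like this one.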

Build the training Dataset

We build the dataset by applying our tokenizer to the text file.

Here, since we only have one text file, we don't even need a custom dataset; we'll use LineByLineTextDataset directly.

```python
%%time
from transformers import LineByLineTextDataset

dataset = LineByLineTextDataset(
    tokenizer=tokenizer,
    file_path="./oscar.eo.txt",
    block_size=128,
)
```

Output:

```
CPU times: user 4min 54s, sys: 2.98 s, total: 4min 57s
Wall time: 1min 37s
```
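As far as we understand it, LineByLineTextDataset treats every non-empty line of the file as one example, tokenizes it with special tokens, and truncates it to block_size tokens. Here is a pure-Python sketch of that behaviour. It uses a hypothetical character-level toy_encode in place of the real tokenizer and assumes <s>=0 and </s>=2.

```python
from typing import Callable, List


def line_by_line_examples(text: str, encode: Callable[[str], List[int]],
                          block_size: int = 128, bos_id: int = 0,
                          eos_id: int = 2) -> List[List[int]]:
    """One example per non-blank line, wrapped in <s>/</s> and capped at block_size."""
    examples = []
    for line in text.splitlines():
        if not line or line.isspace():
            continue  # blank lines produce no example
        ids = encode(line)[: block_size - 2]  # leave room for <s> and </s>
        examples.append([bos_id] + ids + [eos_id])
    return examples


def toy_encode(line: str) -> List[int]:
    """Hypothetical stand-in tokenizer: one id per character."""
    return [ord(c) for c in line]


if __name__ == "__main__":
    corpus = "Mi estas Julien.\n\n   \nLa suno brilas."
    for ex in line_by_line_examples(corpus, toy_encode, block_size=8):
        print(ex)
```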

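As in the run_language_modeling.py script, we need to define a data_collator. It batches different samples of the dataset together into an object that PyTorch knows how to perform backprop on.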

```python
from transformers import DataCollatorForLanguageModeling

data_collator = DataCollatorForLanguageModeling(
    tokenizer=tokenizer, mlm=True, mlm_probability=0.15
)
```
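With mlm=True and mlm_probability=0.15, the collator picks about 15% of the non-special tokens as prediction targets. Following the BERT recipe, 80% of those are replaced by <mask>, 10% by a random token, and 10% are left unchanged. Every other position gets the label -100 so the loss ignores it. Below is a stdlib-only sketch of that rule on a single sequence (the real collator works on whole tensors). mask_tokens here is our own function, and the ids assume <mask>=4, with <s>, <pad> and </s> as 0, 1 and 2.

```python
import random
from typing import List, Optional, Set, Tuple

IGNORE_INDEX = -100  # positions with this label are skipped by the loss


def mask_tokens(ids: List[int], mask_id: int, vocab_size: int, special_ids: Set[int],
                mlm_probability: float = 0.15,
                rng: Optional[random.Random] = None) -> Tuple[List[int], List[int]]:
    """Return (inputs, labels) for masked language modeling on one sequence."""
    rng = rng if rng is not None else random.Random()
    inputs = list(ids)
    labels = [IGNORE_INDEX] * len(ids)
    for i, token in enumerate(ids):
        if token in special_ids or rng.random() >= mlm_probability:
            continue  # not selected: keep the token, no loss here
        labels[i] = token  # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_id                    # 80%: replace with <mask>
        elif roll < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: leave the token unchanged
    return inputs, labels


if __name__ == "__main__":
    sequence = [0] + list(range(100, 120)) + [2]  # <s> ... </s>
    inputs, labels = mask_tokens(sequence, mask_id=4, vocab_size=52_000,
                                 special_ids={0, 1, 2}, rng=random.Random(42))
    print(inputs)
    print(labels)
```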

A simple version of a custom EsperantoDataset:

```python
from pathlib import Path

import torch
from torch.utils.data import Dataset
from tokenizers.implementations import ByteLevelBPETokenizer
from tokenizers.processors import BertProcessing


class EsperantoDataset(Dataset):
    def __init__(self, evaluate: bool = False):
        tokenizer = ByteLevelBPETokenizer(
            "./models/EsperBERTo-small/vocab.json",
            "./models/EsperBERTo-small/merges.txt",
        )
        tokenizer._tokenizer.post_processor = BertProcessing(
            ("</s>", tokenizer.token_to_id("</s>")),
            ("<s>", tokenizer.token_to_id("<s>")),
        )
        tokenizer.enable_truncation(max_length=512)
        # or use the RobertaTokenizer from `transformers` directly.

        self.examples = []

        src_files = Path("./data/").glob("*-eval.txt") if evaluate else Path("./data/").glob("*-train.txt")
        for src_file in src_files:
            print(src_file)
            lines = src_file.read_text(encoding="utf-8").splitlines()
            self.examples += [x.ids for x in tokenizer.encode_batch(lines)]

    def __len__(self):
        return len(self.examples)

    def __getitem__(self, i):
        # We'll pad at the batch level.
        return torch.tensor(self.examples[i])
```
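Because __getitem__ returns sequences of different lengths, padding has to happen when a batch is assembled. Here is a minimal sketch of batch-level padding, assuming <pad> has id 1 (the second entry of the special_tokens list). pad_batch is a hypothetical helper that also builds an attention mask marking the real tokens. With tensors, torch.nn.utils.rnn.pad_sequence(sequences, batch_first=True, padding_value=1) performs the padding step.

```python
from typing import Dict, List


def pad_batch(examples: List[List[int]], pad_id: int = 1) -> Dict[str, List[List[int]]]:
    """Right-pad every example to the longest one in the batch."""
    max_len = max(len(e) for e in examples)
    input_ids = [e + [pad_id] * (max_len - len(e)) for e in examples]
    # 1 for real tokens, 0 for padding.
    attention_mask = [[1] * len(e) + [0] * (max_len - len(e)) for e in examples]
    return {"input_ids": input_ids, "attention_mask": attention_mask}


if __name__ == "__main__":
    batch = pad_batch([[0, 5, 6, 2], [0, 7, 2]])
    print(batch["input_ids"])       # [[0, 5, 6, 2], [0, 7, 2, 1]]
    print(batch["attention_mask"])  # [[1, 1, 1, 1], [1, 1, 1, 0]]
```

Padding per batch rather than to a global maximum keeps batches of short lines cheap.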

Initialize the Trainer

Suggested hyperparameters and arguments for the run_language_modeling.py script:

```
--output_dir ./models/EsperBERTo-small-v1
--model_type roberta
--mlm
--config_name ./models/EsperBERTo-small
--tokenizer_name ./models/EsperBERTo-small
--do_train
--do_eval
--learning_rate 1e-4
--num_train_epochs 5
--save_total_limit 2
--save_steps 2000
--per_gpu_train_batch_size 16
--evaluate_during_training
--seed 42
```

Define the trainer:

```python
from transformers import Trainer, TrainingArguments

training_args = TrainingArguments(
    output_dir="./EsperBERTo",
    overwrite_output_dir=True,
    num_train_epochs=1,
    per_gpu_train_batch_size=64,
    save_steps=10_000,
    save_total_limit=2,
    prediction_loss_only=True,
)

trainer = Trainer(
    model=model,
    args=training_args,
    data_collator=data_collator,
    train_dataset=dataset,
)
```

Start training:

```python
%%time
trainer.train()
```

Output:

```
Epoch: 100% 1/1 [2:46:46<00:00, 10006.17s/it]
Iteration: 100% 15228/15228 [2:46:46<00:00, 1.52it/s]
{"loss": 7.152712148666382, "learning_rate": 4.8358287365379566e-05, "epoch": 0.03283425269240872, "step": 500}
{"loss": 6.928811420440674, "learning_rate": 4.671657473075913e-05, "epoch": 0.06566850538481744, "step": 1000}
{"loss": 6.789419063568115, "learning_rate": 4.5074862096138694e-05, "epoch": 0.09850275807722617, "step": 1500}
{"loss": 6.688932447433472, "learning_rate": 4.343314946151826e-05, "epoch": 0.1313370107696349, "step": 2000}
{"loss": 6.595982004165649, "learning_rate": 4.179143682689782e-05, "epoch": 0.1641712634620436, "step": 2500}
{"loss": 6.545944199562073, "learning_rate": 4.0149724192277385e-05, "epoch": 0.19700551615445233, "step": 3000}
{"loss": 6.4864857263565066, "learning_rate": 3.850801155765695e-05, "epoch": 0.22983976884686105, "step": 3500}
{"loss": 6.412427802085876, "learning_rate": 3.686629892303651e-05, "epoch": 0.2626740215392698, "step": 4000}
{"loss": 6.363630670547486, "learning_rate": 3.522458628841608e-05, "epoch": 0.29550827423167847, "step": 4500}
{"loss": 6.273832890510559, "learning_rate": 3.358287365379564e-05, "epoch": 0.3283425269240872, "step": 5000}
{"loss": 6.197585330963134, "learning_rate": 3.1941161019175205e-05, "epoch": 0.3611767796164959, "step": 5500}
{"loss": 6.097779376983643, "learning_rate": 3.029944838455477e-05, "epoch": 0.39401103230890466, "step": 6000}
{"loss": 5.985456382751464, "learning_rate": 2.8657735749934332e-05, "epoch": 0.42684528500131336, "step": 6500}
{"loss": 5.8448616371154785, "learning_rate": 2.70160231153139e-05, "epoch": 0.4596795376937221, "step": 7000}
{"loss": 5.692522863388062, "learning_rate": 2.5374310480693457e-05, "epoch": 0.4925137903861308, "step": 7500}
{"loss": 5.562082152366639, "learning_rate": 2.3732597846073024e-05, "epoch": 0.5253480430785396, "step": 8000}
{"loss": 5.457240365982056, "learning_rate": 2.2090885211452588e-05, "epoch": 0.5581822957709482, "step": 8500}
{"loss": 5.376953645706177, "learning_rate": 2.0449172576832152e-05, "epoch": 0.5910165484633569, "step": 9000}
{"loss": 5.298609251022339, "learning_rate": 1.8807459942211716e-05, "epoch": 0.6238508011557657, "step": 9500}
{"loss": 5.225468152046203, "learning_rate": 1.716574730759128e-05, "epoch": 0.6566850538481744, "step": 10000}
{"loss": 5.174519973754883, "learning_rate": 1.5524034672970843e-05, "epoch": 0.6895193065405831, "step": 10500}
{"loss": 5.113943946838379, "learning_rate": 1.3882322038350407e-05, "epoch": 0.7223535592329918, "step": 11000}
{"loss": 5.08140989112854, "learning_rate": 1.2240609403729971e-05, "epoch": 0.7551878119254006, "step": 11500}
{"loss": 5.072491912841797, "learning_rate": 1.0598896769109535e-05, "epoch": 0.7880220646178093, "step": 12000}
{"loss": 5.012459496498108, "learning_rate": 8.957184134489099e-06, "epoch": 0.820856317310218, "step": 12500}
{"loss": 4.999591351509094, "learning_rate": 7.315471499868663e-06, "epoch": 0.8536905700026267, "step": 13000}
{"loss": 4.994838352203369, "learning_rate": 5.673758865248227e-06, "epoch": 0.8865248226950354, "step": 13500}
{"loss": 4.955870885848999, "learning_rate": 4.032046230627791e-06, "epoch": 0.9193590753874442, "step": 14000}
{"loss": 4.941655583381653, "learning_rate": 2.390333596007355e-06, "epoch": 0.9521933280798529, "step": 14500}
{"loss": 4.931783639907837, "learning_rate": 7.486209613869189e-07, "epoch": 0.9850275807722616, "step": 15000}
CPU times: user 1h 43min 36s, sys: 1h 3min 28s, total: 2h 47min 4s
Wall time: 2h 46min 46s
TrainOutput(global_step=15228, training_loss=5.762423221226405)
```
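The learning_rate values in the log are consistent with the Trainer's default setup: a base learning rate of 5e-5 (the notebook does not set learning_rate) that decays linearly to 0 over the 15,228 steps, with no warmup. The sketch below mirrors the shape of a linear warmup/decay schedule and reproduces the logged values. linear_lr and epoch_at are our own helpers.

```python
def linear_lr(step: int, total_steps: int = 15_228, base_lr: float = 5e-5,
              warmup_steps: int = 0) -> float:
    """Linear warmup to base_lr, then linear decay to 0 at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def epoch_at(step: int, steps_per_epoch: int = 15_228) -> float:
    """Fractional epoch reported alongside each log line."""
    return step / steps_per_epoch


if __name__ == "__main__":
    for step in (500, 7_500, 15_000):
        print(step, linear_lr(step), epoch_at(step))
```

If you use the CLI suggestion --learning_rate 1e-4 instead, the same line simply starts from a higher base_lr.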

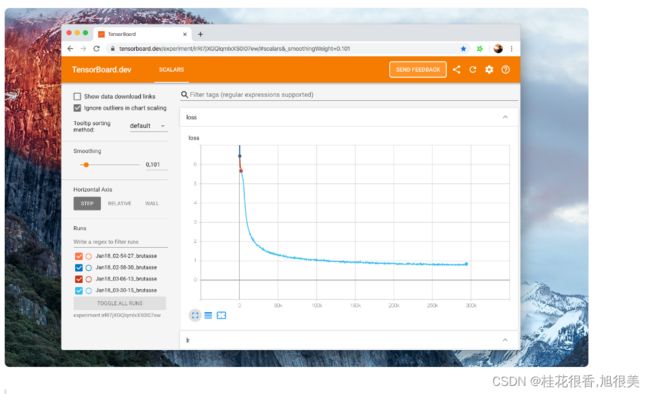

By default, the example scripts log in TensorBoard format under runs/. To see how the training metrics evolve, upload the logs to TensorBoard.dev:

```
tensorboard dev upload --logdir runs
```

Save the model (+ config) to disk. The tokenizer files are already in the same folder from the earlier tokenizer.save_model call:

```python
trainer.save_model("./EsperBERTo")
```

Check that the LM actually trained

```python
from transformers import pipeline

fill_mask = pipeline(
    "fill-mask",
    model="./EsperBERTo",
    tokenizer="./EsperBERTo"
)

# The sun <mask>.
# =>
result = fill_mask("La suno <mask>.")
# {'score': 0.2526160776615143, 'sequence': '<s> La suno brilis.</s>', 'token': 10820}
# {'score': 0.0999930202960968, 'sequence': '<s> La suno lumis.</s>', 'token': 23833}
# {'score': 0.04382849484682083, 'sequence': '<s> La suno brilas.</s>', 'token': 15006}
# {'score': 0.026011141017079353, 'sequence': '<s> La suno falas.</s>', 'token': 7392}
# {'score': 0.016859788447618484, 'sequence': '<s> La suno pasis.</s>', 'token': 4552}
```
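The score next to each candidate is a probability. The model outputs one logit per vocabulary entry at the <mask> position, a softmax is taken over the whole vocabulary, and the top-k tokens are substituted back into the input. Here is a toy sketch of that last step, with a hypothetical four-word vocabulary and made-up logits (not the model's):

```python
import math
from typing import Dict, List


def top_k_fill(template: str, logits: List[float], vocab: List[str],
               k: int = 5) -> List[Dict[str, object]]:
    """Softmax the logits at the mask position and fill in the k best tokens."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [
        {"score": probs[i], "sequence": template.replace("<mask>", vocab[i]), "token": i}
        for i in best
    ]


if __name__ == "__main__":
    vocab = [" brilis", " lumis", " falas", " pasis"]  # hypothetical vocabulary
    for row in top_k_fill("La suno<mask>.", [2.0, 1.0, 0.0, -1.0], vocab, k=3):
        print(row)
```

Because the softmax runs over all 52,000 entries, even the top candidate's score can look small while still being a confident choice.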

OK, simple syntax/grammar works. Let's try a slightly more interesting prompt:

```python
fill_mask("Jen la komenco de bela <mask>.")
# This is the beginning of a beautiful <mask>.
# =>
# {'score': 0.06502299010753632, 'sequence': '<s> Jen la komenco de bela vivo.</s>', 'token': 1099}
# {'score': 0.0421181358397007, 'sequence': '<s> Jen la komenco de bela vespero.</s>', 'token': 5100}
# {'score': 0.024884626269340515, 'sequence': '<s> Jen la komenco de bela laboro.</s>', 'token': 1570}
# {'score': 0.02324388362467289, 'sequence': '<s> Jen la komenco de bela tago.</s>', 'token': 1688}
# {'score': 0.020378097891807556, 'sequence': '<s> Jen la komenco de bela festo.</s>', 'token': 4580}
```

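With more complex prompts, you can probe whether your language model has captured more semantic knowledge, or even some sort of (statistical) common-sense reasoning.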

Fine-tune the LM on a downstream task

We can now fine-tune our new Esperanto language model on a downstream part-of-speech tagging task.

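Esperanto is a highly regular language in which word endings typically determine the grammatical part of speech. Using a dataset of annotated Esperanto POS tags in the CoNLL-2003 format, we can use the run_ner.py script from transformers.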

POS tagging, like NER, is a token classification task, so we can use exactly the same script.
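BPE may split one word into several tokens, while the dataset has one tag per word, so the labels must be aligned to tokens. As far as we know, the run_ner.py utilities of this era give the tag to the first sub-token of each word and label the remaining sub-tokens -100 so the loss ignores them. Here is a sketch of that alignment, using a hypothetical split_word tokenizer and a hypothetical label map:

```python
from typing import Callable, Dict, List, Tuple

IGNORE_INDEX = -100


def align_labels(words: List[str], tags: List[str],
                 split_word: Callable[[str], List[str]],
                 tag_to_id: Dict[str, int]) -> Tuple[List[str], List[int]]:
    """Tokenize word by word; only the first piece of each word carries its tag."""
    tokens: List[str] = []
    label_ids: List[int] = []
    for word, tag in zip(words, tags):
        pieces = split_word(word)
        if not pieces:
            continue  # skip words the tokenizer drops entirely
        tokens.extend(pieces)
        label_ids.extend([tag_to_id[tag]] + [IGNORE_INDEX] * (len(pieces) - 1))
    return tokens, label_ids


def split_word(word: str) -> List[str]:
    """Hypothetical tokenizer: chunks of at most 4 characters."""
    return [word[i:i + 4] for i in range(0, len(word), 4)]


if __name__ == "__main__":
    tokens, labels = align_labels(["Mi", "estas", "viro"], ["PRON", "VERB", "NOUN"],
                                  split_word, {"PRON": 0, "VERB": 1, "NOUN": 2})
    print(tokens)  # ['Mi', 'esta', 's', 'viro']
    print(labels)  # [0, 1, -100, 2]
```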

Data format

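Each line of a data file contains one word, and empty lines mark sentence boundaries. At the end of each line is a tag. In the NER setting, the tag indicates whether the current word is inside a named entity and also encodes the entity type; for POS tagging, it is the word's part-of-speech label.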
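Here is a minimal reader for this layout. It assumes whitespace-separated columns with the word first and the tag last. The two-sentence sample is illustrative only and is not taken from the actual dataset.

```python
from typing import List, Tuple


def read_conll(text: str) -> List[List[Tuple[str, str]]]:
    """Parse 'word ... tag' lines into sentences split on blank lines."""
    sentences: List[List[Tuple[str, str]]] = []
    current: List[Tuple[str, str]] = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            if current:  # a blank line closes the current sentence
                sentences.append(current)
                current = []
            continue
        columns = line.split()
        current.append((columns[0], columns[-1]))  # word, tag
    if current:  # the file may not end with a blank line
        sentences.append(current)
    return sentences


SAMPLE = """Mi PRON
estas VERB
viro NOUN
. PUNCT

La DET
suno NOUN
brilas VERB
. PUNCT
"""

if __name__ == "__main__":
    for sentence in read_conll(SAMPLE):
        print(sentence)
```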

We train for 3 epochs with a batch size of 64. Training and evaluation losses converge to small values, and the model can be trained end to end.

This time, let's use a TokenClassificationPipeline:

```python
from transformers import TokenClassificationPipeline, pipeline

MODEL_PATH = "./models/EsperBERTo-small-pos/"

nlp = pipeline(
    "ner",
    model=MODEL_PATH,
    tokenizer=MODEL_PATH,
)
# or instantiate a TokenClassificationPipeline directly.

nlp("Mi estas viro kej estas tago varma.")
# {'entity': 'PRON', 'score': 0.9979867339134216, 'word': ' Mi'}
# {'entity': 'VERB', 'score': 0.9683094620704651, 'word': ' estas'}
# {'entity': 'VERB', 'score': 0.9797462821006775, 'word': ' estas'}
# {'entity': 'NOUN', 'score': 0.8509314060211182, 'word': ' tago'}
# {'entity': 'ADJ', 'score': 0.9996201395988464, 'word': ' varma'}
```
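Each entry in the output comes from a per-token decision: a softmax over the label logits, with the argmax label reported as the entity and its probability as the score. We believe the ner pipeline also drops tokens whose predicted label is in its ignore_labels list (["O"] by default). Here is a sketch of that decision rule, using a hypothetical label set and made-up logits:

```python
import math
from typing import Dict, List, Sequence


def classify_tokens(tokens: List[str], logits: List[List[float]], labels: List[str],
                    ignore_labels: Sequence[str] = ("O",)) -> List[Dict[str, object]]:
    """Pick the most probable label per token and drop ignored labels."""
    results = []
    for token, row in zip(tokens, logits):
        m = max(row)  # subtract the max for numerical stability
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        probs = [e / total for e in exps]
        best = max(range(len(probs)), key=probs.__getitem__)
        if labels[best] in ignore_labels:
            continue
        results.append({"entity": labels[best], "score": probs[best], "word": token})
    return results


if __name__ == "__main__":
    labels = ["PRON", "VERB", "O"]  # hypothetical label set
    tokens = [" Mi", " estas"]
    logits = [[4.0, 0.0, 0.0], [0.0, 3.0, 0.0]]  # made-up values
    for row in classify_tokens(tokens, logits, labels):
        print(row)
```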

Share your model

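- Upload your model using the CLI: transformers-cli upload
- Write a README.md model card and add it to the repository under model_cards/. Ideally, your model card should include:
  - a model description,
  - training params (dataset, preprocessing, hyperparameters),
  - evaluation results,
  - intended uses and limitations,
  - anything else that is useful.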

Your model then gets a page on https://huggingface.co/models, and everyone can load it with:

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("username/model_name")
```