Installing KubeEdge and Sedna, with a Lifelong Learning Example

Table of Contents

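- I. Installing KubeEdge
  - 1 Steps for both the master and the edge node
    - 1.1 My IP addresses and changing the hostname
    - 1.2 Docker
    - 1.3 Downloading the KubeEdge files
  - 2 Master node
    - 2.1 kubelet, kubeadm, kubectl
    - 2.2 Network plugin: flannel
      - 2.2.1 Method 1
      - 2.2.2 Method 2
      - 2.2.3 Reconfiguring
    - 2.3 Checking that Kubernetes is up
    - 2.4 Setting up KubeEdge
      - 2.4.1 Copying the downloaded files into this directory and extracting them
      - 2.4.2 Putting keadm on the PATH
      - 2.4.3 Initialization
      - 2.4.4 Checking that cloudcore is running
      - 2.4.5 Viewing the logs
      - 2.4.6 Checking the ports (these two ports must be present)
      - 2.4.7 Checking the service status
      - 2.4.8 Starting on boot
      - 2.4.9 Getting the token for the edge node to join
  - 3 Edge node
    - 3.1 Copying the downloaded files into this directory and extracting them
    - 3.2 Joining
    - 3.3 Checking
    - 3.4 Checking from the master
  - 4 KubeEdge done
    - 4.1 High-quality KubeEdge blog posts
  - 5 Debug
    - 5.1 The connection to the server....:6443 was refused - did you specify the right host or port?
- II. Installing EdgeMesh
- III. Installing Sedna
  - 1 Official repository
  - 2 Deploy
    - 2.1 Following the official guide
    - 2.2 Network timeouts
    - 2.3 Starting over
  - 3 Debug
- IV. Sedna lifelong learning: thermal comfort example
  - 1 Official links
  - 2 Preparing the dataset
  - 3 Create Job
    - 3.1 Create Dataset
    - 3.2 Start The Lifelong Learning Job
  - 4 Check
    - 4.1 Checking the service status
    - 4.2 Viewing the results
- V. Overview of Sedna lifelong learning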

I. Installing KubeEdge

1 Steps for both the master and the edge node

1.1 My IP addresses and changing the hostname

|Node|IP|
|---|---|
|Master|192.168.120.99|
|Edge|192.168.120.100|

# Set the hostname on each machine

# on the master:

sudo hostnamectl set-hostname master

# on the edge node:

sudo hostnamectl set-hostname edge
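Setting the hostname does not by itself let `master` and `edge` resolve each other by name. A minimal sketch, assuming you also want matching entries in /etc/hosts (a step the original does not include): the hypothetical helper `add_host_entries` appends the two IP/name pairs from the table above to a hosts file and skips any line that is already present, so running it twice is harmless.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of KubeEdge): append "IP hostname" lines
# for the two nodes to a hosts file, skipping entries already present.
# IPs and names are taken from the table above; adjust them to your setup.
add_host_entries() {
  local hosts_file="${1:-/etc/hosts}"
  local entries=(
    "192.168.120.99 master"
    "192.168.120.100 edge"
  )
  local entry
  for entry in "${entries[@]}"; do
    # -x: match the whole line, -F: fixed string (dots are literal)
    if ! grep -qxF "$entry" "$hosts_file"; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}

# Usage on each node (writing /etc/hosts needs root):
#   add_host_entries /etc/hosts
```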

1.2 Docker

sudo apt-get -y update

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Install the GPG key; without it, apt will not trust (and will refuse to install) packages from the Docker repository

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Add the repository information (Docker is downloaded from this repository):

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

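# Install Docker. Note: docker.io is Ubuntu's own package; the Aliyun repository added above provides docker-ce instead, so install docker-ce if you want Docker from that repository

sudo apt-get install -y docker.io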

docker --version

sudo gedit /etc/docker/daemon.json

# On the master, add:

{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://reg-mirror.qiniu.com",
    "https://quay-mirror.qiniu.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

# On the edge node, add:

# The edge uses cgroupfs because the kubelet cgroup driver there is set to cgroupfs; otherwise an error is reported.

{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://reg-mirror.qiniu.com",
    "https://quay-mirror.qiniu.com"
  ],
  "exec-opts": ["native.cgroupdriver=cgroupfs"]
}

sudo systemctl daemon-reload

sudo systemctl restart docker

# Edge node only, and only once edgecore has been installed (section 3):

sudo systemctl restart edgecore

# Check Docker's cgroup settings after the change

docker info | grep Cgroup
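The only difference between the two daemon.json files above is the cgroup driver. A minimal sketch with two hypothetical helper functions: `render_daemon_json` prints the file for a given driver (systemd on the master, cgroupfs on the edge, as in this post), and `parse_cgroup_driver` reads the driver back out of `docker info` output so you can confirm Docker picked up the intended value after the restart.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Docker or KubeEdge).

# Print daemon.json for the given cgroup driver:
#   master: systemd, edge: cgroupfs (the values used in this post)
render_daemon_json() {
  local driver="$1"
  case "$driver" in
    systemd|cgroupfs) ;;
    *) echo "unknown cgroup driver: $driver" >&2; return 1 ;;
  esac
  cat <<EOF
{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://reg-mirror.qiniu.com",
    "https://quay-mirror.qiniu.com"
  ],
  "exec-opts": ["native.cgroupdriver=${driver}"]
}
EOF
}

# Read `docker info` output on stdin and print the cgroup driver,
# e.g. the line " Cgroup Driver: systemd" yields "systemd".
parse_cgroup_driver() {
  awk -F': ' '/Cgroup Driver/ {print $2; exit}'
}

# Usage on the master (as root):
#   render_daemon_json systemd > /etc/docker/daemon.json
#   systemctl daemon-reload && systemctl restart docker
#   docker info 2>/dev/null | parse_cgroup_driver    # expect: systemd
```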

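1.3 Downloading the KubeEdge files

# First download keadm-v1.10.0-linux-amd64.tar.gz and kubeedge-v1.10.0-linux-amd64.tar.gz to the host machine

# My version is 1.10.0

https://github.com/kubeedge/kubeedge/releases

# then transfer them to the virtual machines with Xftp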
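If the VMs can reach GitHub directly, the two archives can also be fetched without going through the host. A sketch assuming the assets follow the file names used above and GitHub's usual `releases/download/v<version>/` layout; `kubeedge_release_urls` is a hypothetical helper that only prints the URLs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the keadm and kubeedge archive URLs for one
# version/arch. Assumes GitHub's standard release-asset URL layout.
kubeedge_release_urls() {
  local version="${1:-1.10.0}" arch="${2:-amd64}"
  local base="https://github.com/kubeedge/kubeedge/releases/download/v${version}"
  local name
  for name in keadm kubeedge; do
    printf '%s/%s-v%s-linux-%s.tar.gz\n' "$base" "$name" "$version" "$arch"
  done
}

# Usage (downloads both archives into the current directory):
#   kubeedge_release_urls 1.10.0 amd64 | xargs -n1 wget
```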

2 Master node

2.1 kubelet, kubeadm, kubectl

apt-get update && apt-get install -y apt-transport-https

# Download the repository signing key

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

# Add the Kubernetes package repository

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

apt-get update

apt-get install -y kubelet=1.21.5-00 kubeadm=1.21.5-00 kubectl=1.21.5-00

# Initialize the cluster

# Only --apiserver-advertise-address needs changing: set it to the master's IP address

kubeadm init \
  --apiserver-advertise-address=192.168.120.99 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.21.5 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

# Run what kubeadm init prints at the end (as a regular user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Or, as kubeadm init also suggests, if you are the root user:

export KUBECONFIG=/etc/kubernetes/admin.conf
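A common reason for `kubeadm init` to stop during its preflight checks is that swap is still enabled. A minimal sketch, not part of the original steps: the hypothetical helper `swap_is_off` reads /proc/swaps (one header line, then one line per active swap area) and succeeds only when no swap is active, so it can be run before `kubeadm init`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 if no swap area is active, 1 otherwise.
# /proc/swaps has one header line followed by one line per active swap.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  local active
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps
  # that from aborting scripts that run with `set -e`.
  active=$(tail -n +2 "$swaps_file" | grep -c . || true)
  [ "$active" -eq 0 ]
}

# Usage before kubeadm init:
#   swap_is_off || sudo swapoff -a
# swapoff only lasts until reboot; comment out the swap line in /etc/fstab
# to keep it off permanently.
```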

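2.2 Network plugin: flannel

2.2.1 Method 1

# Ideally go through a proxy: raw.githubusercontent.com is often slow or unreachable from mainland China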

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

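2.2.2 Method 2

Create a file named flannel.yaml, paste the manifest below into it, and apply it:

kubectl apply -f flannel.yaml

Compared with the upstream manifest, the DaemonSet here (kube-flannel-cloud-ds) has an extra node-affinity rule (node-role.kubernetes.io/agent with operator DoesNotExist), which keeps it off the KubeEdge edge nodes, since those carry the node-role.kubernetes.io/agent label.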

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-cloud-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: node-role.kubernetes.io/agent # mind the indentation
                operator: DoesNotExist
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
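The `Network` value in `net-conf.json` has to match the `--pod-network-cidr` given to `kubeadm init` (both are 10.244.0.0/16 in this post). A minimal sketch with hypothetical helpers that pull that value out of the saved flannel.yaml and compare it before applying; it assumes the `"Network": "..."` pair sits on one line, as in the manifest above, and is a line-based grep/sed scan rather than a YAML parser.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of flannel or kubeadm).

# Print the first "Network" CIDR found in a flannel manifest.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n 1 | sed 's/.*: *"\([^"]*\)"/\1/'
}

# Return 0 if the manifest's Network equals the expected pod CIDR.
check_pod_cidr() {
  local manifest="$1" expected="${2:-10.244.0.0/16}"
  local actual
  actual="$(flannel_network "$manifest")"
  if [ "$actual" != "$expected" ]; then
    echo "mismatch: flannel uses '$actual', kubeadm uses '$expected'" >&2
    return 1
  fi
}

# Usage:
#   check_pod_cidr flannel.yaml 10.244.0.0/16 && kubectl apply -f flannel.yaml
```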

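2.2.3 Reconfiguring

# Delete flannel before applying it again (if you used method 2, run kubectl delete -f flannel.yaml instead)

kubectl delete -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml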

2.3 Checking that Kubernetes is up

kubectl get pods -n kube-system

kubectl get nodes
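Right after `kubeadm init` the master typically shows `NotReady` until the flannel pods are running. A minimal sketch: the hypothetical helper `not_ready_nodes` reads the default `kubectl get nodes` table on stdin and prints every node whose STATUS column is not exactly `Ready`, which makes a simple wait loop possible.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print names of nodes whose STATUS (2nd column)
# is not exactly "Ready". Input: `kubectl get nodes` output (header skipped).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" {print $1}'
}

# Usage: poll until every node reports Ready (Ctrl-C to abort).
#   while [ -n "$(kubectl get nodes | not_ready_nodes)" ]; do sleep 5; done
```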

2.4 Setting up KubeEdge

2.4.1 Copying the downloaded files into this directory and extracting them

# Create the directory

mkdir /etc/kubeedge/

# Enter it

cd /etc/kubeedge/

# Permissions

chmod 777 /etc/kubeedge/

# Extract

tar -zxvf keadm-v1.10.0-linux-amd64.tar.gz

2.4.2 Putting keadm on the PATH

cd /etc/kubeedge/keadm-v1.10.0-linux-amd64/keadm

# Copy it into a directory on the PATH for convenience

cp keadm /usr/sbin/

2.4.3 Initialization

cd /etc/kubeedge/keadm-v1.10.3-linux-amd64/keadm

sudo keadm init --advertise-address=192.168.120.99 --kubeedge-version=1.10.0

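Pitfall I hit: a configuration file problem.

[Screenshot: the keadm init error]

The screenshot above shows the error I kept getting.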

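The initialization command that finally worked for me (it uses 192.168.120.101 and 1.10.3 rather than the 192.168.120.99 and 1.10.0 used elsewhere in this post; substitute your own master IP and KubeEdge version):

sudo keadm init --advertise-address="192.168.120.101" --kubeedge-version=1.10.3 --kube-config=/home/levent/.kube/config

Read the cause given in the error message carefully! The key difference is the explicit --kube-config: run with sudo, keadm falls back to its default kubeconfig path (/root/.kube/config), whereas kubectl had been set up in the normal user's home directory.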

[Screenshot: a successful keadm init run]

2.4.4 Checking that cloudcore is running

ps -ef|grep cloudcore

2.4.5 Viewing the logs

journalctl -u cloudcore.service -xe

# or

vim /var/log/kubeedge/cloudcore.log

2.4.6 Checking the ports (these two ports must be present)

netstat -tpnl

[Screenshot: netstat -tpnl output on the master]
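The two ports were only visible in the screenshot. A sketch assuming they are CloudHub's defaults: 10000 (the websocket port edge nodes join on, as in `--cloudcore-ipport` later) and 10002 (the HTTPS endpoint edge nodes use to obtain certificates). The hypothetical helper `check_listening_ports` reads `netstat -tpnl` output on stdin, takes the port from the Local Address column of every LISTEN line, and reports any expected port that is missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that every given TCP port is in LISTEN state.
# Input on stdin: `netstat -tpnl` output. Column 4 is the local address
# (e.g. ":::10000" or "0.0.0.0:10002"), column 6 the state.
# Prints each missing port and returns 1 if any are absent.
check_listening_ports() {
  local listening missing=0 port
  # Keep only the text after the last colon of the local address.
  listening="$(awk '$6 == "LISTEN" {n = split($4, a, ":"); print a[n]}')"
  for port in "$@"; do
    if ! grep -qx "$port" <<< "$listening"; then
      echo "port $port is not listening" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the master (ports assumed to be CloudHub's defaults):
#   sudo netstat -tpnl | check_listening_ports 10000 10002
```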

2.4.7 Checking the service status

systemctl status cloudcore

[Screenshot: systemctl status cloudcore output]

2.4.8 Starting on boot

# Start cloudcore

systemctl start cloudcore

# Enable start on boot

systemctl enable cloudcore.service

# Check whether cloudcore starts on boot (enabled: on, disabled: off)

systemctl is-enabled cloudcore.service

2.4.9 Getting the token for the edge node to join

sudo keadm gettoken
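The token printed here is what the edge passes to `keadm join --token=`. A minimal sketch, assuming `keadm gettoken` prints just the token: the hypothetical helper `print_join_cmd` assembles the join command from the values used in this post (master IP, port 10000, node name `node`, version 1.10.0) so it can be copied to the edge in one line. It only prints the command; it does not run it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the keadm join command for the edge node.
# Arguments: token, cloudcore IP, edge node name, KubeEdge version.
print_join_cmd() {
  local token="$1"
  local cloud_ip="${2:-192.168.120.99}"
  local node_name="${3:-node}"
  local version="${4:-1.10.0}"
  if [ -z "$token" ]; then
    echo "usage: print_join_cmd TOKEN [CLOUD_IP] [NODE_NAME] [VERSION]" >&2
    return 1
  fi
  printf 'sudo keadm join --cloudcore-ipport=%s:10000 --edgenode-name=%s --kubeedge-version=%s --token=%s\n' \
    "$cloud_ip" "$node_name" "$version" "$token"
}

# Usage on the master:
#   print_join_cmd "$(sudo keadm gettoken)"
```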

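3 Edge node

3.1 Copying the downloaded files into this directory and extracting them

sudo mkdir /etc/kubeedge/

Put kubeedge-v1.10.0-linux-amd64.tar.gz and keadm-v1.10.0-linux-amd64.tar.gz into this directory.

# Extract (from inside /etc/kubeedge/)

cd /etc/kubeedge/

sudo tar -zxvf keadm-v1.10.0-linux-amd64.tar.gz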

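3.2 Joining

cd keadm-v1.10.0-linux-amd64/keadm/

# --token= takes the token generated by keadm gettoken on the master

# Leave the port in --cloudcore-ipport unchanged; only the IP is the master's

sudo keadm join --cloudcore-ipport=192.168.120.99:10000 --edgenode-name=node --kubeedge-version=1.10.0 --token=

# So that flannel can reach http://127.0.0.1:10550, you may need to enable EdgeCore's metaServer feature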

sudo gedit /etc/kubeedge/config/edgecore.yaml
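In edgecore.yaml the switch sits under `modules.metaManager.metaServer.enable`, next to the `server: 127.0.0.1:10550` address. A rough sketch to confirm the edit before restarting edgecore: the hypothetical helper `metaserver_enable_value` is a line-based awk scan (not a real YAML parser) that prints the first `enable:` value inside the `metaServer:` block and stops at the first line indented no deeper than `metaServer:` itself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the "enable:" value inside the metaServer block
# of edgecore.yaml (empty output if the block has no enable key).
# Line-based scan assuming the usual layout:
#   metaManager:
#     metaServer:
#       enable: true
metaserver_enable_value() {
  awk '
    /^[ \t]*metaServer:/ {
      in_block = 1
      match($0, /^[ \t]*/); indent = RLENGTH
      next
    }
    in_block {
      match($0, /^[ \t]*/)
      # A non-blank line indented no deeper than metaServer ends the block.
      if (RLENGTH <= indent && $0 ~ /[^ \t]/) exit
      if ($0 ~ /^[ \t]*enable:/) {
        sub(/^[ \t]*enable:[ \t]*/, "")
        print
        exit
      }
    }
  ' "${1:-/etc/kubeedge/config/edgecore.yaml}"
}

# Usage on the edge node:
#   [ "$(metaserver_enable_value)" = "true" ] || echo "set metaServer enable: true"
#   sudo systemctl restart edgecore
```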

3.3 Checking

# Start edgecore

systemctl start edgecore

# Enable start on boot

systemctl enable edgecore.service

# Check whether edgecore starts on boot (enabled: on, disabled: off)

systemctl is-enabled edgecore

# Check the status

systemctl status edgecore

# View the logs

journalctl -u edgecore.service -b

[Screenshot: edgecore status and logs]

3.4 Checking from the master

# On the master

kubectl get nodes

kubectl get pod -n kube-system

[Screenshot: node and pod lists on the master]

4 KubeEdge done

4.1 High-quality KubeEdge blog posts

https://blog.csdn.net/qq_43475285/article/details/126760865

5 Debug

5.1 The connection to the server…:6443 was refused - did you specify the right host or port?

https://blog.csdn.net/sinat_28371057/article/details/109895159

II. Installing EdgeMesh

Just follow the official guide; it works without problems. I recommend the manual installation rather than Helm.

https://edgemesh.netlify.app/zh/guide

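III. Installing Sedna

Following the official instructions is mostly enough. My laptop has only 8 GB of RAM and ran very slowly; many of the problems I hit were caused purely by the hardware, so I took quite a few detours early on.

1 Official repository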

https://github.com/kubeedge/sedna

2 Deploy

2.1 Following the official guide

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=create bash -

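2.2 Network timeouts

See the blog post by a senior PhD student in my lab (screenshots of a correct installation are also in his post):

https://zhuanlan.zhihu.com/p/516314772

# If install.sh fails because of Windows (CRLF) versus Linux (LF) line endings, convert it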

sudo apt install dos2unix

dos2unix install.sh
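Converting install.sh implies downloading it first rather than piping it straight into bash. A minimal sketch of that flow: the hypothetical helper `has_crlf` reports whether a file contains carriage returns, so `dos2unix` only runs when it is needed, and the usage comments show the download, convert, run sequence with the same `SEDNA_ACTION` variable as above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 if the file contains at least one carriage
# return, i.e. it has Windows (CRLF) line endings somewhere.
has_crlf() {
  grep -q $'\r' "$1"
}

# Usage: download the script, fix line endings if needed, then run it.
#   curl -fsSLO https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh
#   has_crlf install.sh && dos2unix install.sh
#   SEDNA_ACTION=create bash install.sh
```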

2.3 Starting over (uninstall)

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=delete bash -

3 Debug

kubectl get deploy -n sedna gm

kubectl get ds lc -n sedna

kubectl get pod -n sedna

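For my part, the cluster is still one master plus one worker; it also contains nodes belonging to other people.

My master node is master and my worker node is cloud-node.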

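IV. Sedna lifelong learning: thermal comfort example

1 Official links

https://github.com/kubeedge/sedna/blob/main/examples/lifelong_learning/atcii/README.md

# Official documentation for the example (lifelong learning proposal)

https://sedna.readthedocs.io/en/latest/proposals/lifelong-learning.html

2 Preparing the dataset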

cd /data

wget https://kubeedge.obs.cn-north-1.myhuaweicloud.com/examples/atcii-classifier/dataset.tar.gz

tar -zxvf dataset.tar.gz
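The job below reads /data/trainData.csv (through the Dataset) and /data/testData.csv (as inference samples), so both should exist after extraction on the node the job runs on. A minimal sketch, assuming the archive unpacks these two files directly into /data (which is what the paths in the job imply) and that each CSV has one header line: the hypothetical helper `check_atcii_data` verifies the files and prints their data-row counts, which gives a rough feel for the `num_of_samples > 500` training trigger.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check the CSV files used by the lifelong learning job
# and print "<file> <rows>" for each, not counting an assumed header line.
# Returns 1 if either file is missing.
check_atcii_data() {
  local dir="${1:-/data}" f rows status=0
  for f in trainData.csv testData.csv; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      status=1
      continue
    fi
    rows=$(( $(wc -l < "$dir/$f") - 1 ))
    if [ "$rows" -lt 0 ]; then rows=0; fi
    echo "$f $rows"
  done
  return "$status"
}

# Usage: check_atcii_data /data
```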

3 Create Job

Things to change compared with the official example:

- nodeName
- the image version
- dnsPolicy: ClusterFirstWithHostNet
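Of the three items, `nodeName` appears in four places in the manifests below (once in the Dataset, and in the train, eval and deploy templates of the job), always as `iai-master`. A minimal sketch, assuming you save each manifest to a file instead of using the inline heredoc: the hypothetical helper `set_node_name` rewrites every `nodeName:` value in place with GNU sed, keeping the indentation intact.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace the value of every "nodeName:" line in a
# manifest file, in place (GNU sed -i). Indentation is preserved and the
# new value is written in double quotes.
set_node_name() {
  local manifest="$1" node="$2"
  if [ -z "$node" ]; then
    echo "usage: set_node_name FILE NODE_NAME" >&2
    return 1
  fi
  sed -i "s/^\([[:space:]]*nodeName:\).*/\1 \"${node}\"/" "$manifest"
}

# Usage, assuming the job manifest was saved as lifelong-job.yaml:
#   set_node_name lifelong-job.yaml master
#   kubectl create -f lifelong-job.yaml
```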

3.1 Create Dataset

kubectl create -f - <<EOF
apiVersion: sedna.io/v1alpha1
kind: Dataset
metadata:
  name: lifelong-dataset8
spec:
  url: "/data/trainData.csv"
  format: "csv"
  nodeName: "iai-master"
EOF

[Screenshot: Dataset created]

3.2 Start The Lifelong Learning Job

kubectl create -f - <<EOF
apiVersion: sedna.io/v1alpha1
kind: LifelongLearningJob
metadata:
  name: atcii-classifier-demo8
spec:
  dataset:
    name: "lifelong-dataset8"
    trainProb: 0.8
  trainSpec:
    template:
      spec:
        nodeName: "iai-master"
        dnsPolicy: ClusterFirstWithHostNet
        containers:
          - image: kubeedge/sedna-example-lifelong-learning-atcii-classifier:v0.5.0
            name: train-worker
            imagePullPolicy: IfNotPresent
            args: ["train.py"]  # training script
            env:  # Hyperparameters required for training
              - name: "early_stopping_rounds"
                value: "100"
              - name: "metric_name"
                value: "mlogloss"
    trigger:
      checkPeriodSeconds: 60
      timer:
        start: 00:01
        end: 24:00
      condition:
        operator: ">"
        threshold: 500
        metric: num_of_samples
  evalSpec:
    template:
      spec:
        nodeName: "iai-master"
        dnsPolicy: ClusterFirstWithHostNet
        containers:
          - image: kubeedge/sedna-example-lifelong-learning-atcii-classifier:v0.5.0
            name: eval-worker
            imagePullPolicy: IfNotPresent
            args: ["eval.py"]
            env:
              - name: "metrics"
                value: "precision_score"
              - name: "metric_param"
                value: "{'average': 'micro'}"
              - name: "model_threshold"  # Threshold for filtering deploy models
                value: "0.5"
  deploySpec:
    template:
      spec:
        nodeName: "iai-master"
        dnsPolicy: ClusterFirstWithHostNet
        containers:
          - image: kubeedge/sedna-example-lifelong-learning-atcii-classifier:v0.5.0
            name: infer-worker
            imagePullPolicy: IfNotPresent
            args: ["inference.py"]
            env:
              - name: "UT_SAVED_URL"  # unseen tasks save path
                value: "/ut_saved_url"
              - name: "infer_dataset_url"  # simulation of the inference samples
                value: "/data/testData.csv"
            volumeMounts:
              - name: utdir
                mountPath: /ut_saved_url
              - name: inferdata
                mountPath: /data/
            resources:  # user defined resources
              limits:
                memory: 2Gi
        volumes:  # user defined volumes
          - name: utdir
            hostPath:
              path: /lifelong/unseen_task/
              type: DirectoryOrCreate
          - name: inferdata
            hostPath:
              path: /data/
              type: DirectoryOrCreate
  outputDir: "/output"
EOF

[Screenshot: LifelongLearningJob created]
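The `trigger` block in the job asks Sedna to check every 60 seconds (`checkPeriodSeconds`) and start retraining when, inside the 00:01 to 24:00 window, `num_of_samples` is greater than 500. A simplified model of that rule, for intuition only: this is not Sedna's implementation, and the function name `should_retrain` and the zero-padded HH:MM string comparison are assumptions of the sketch.

```bash
#!/usr/bin/env bash
# Simplified model of the job's trigger (NOT Sedna's code): retrain when the
# current time is within [start, end] and num_of_samples > threshold.
should_retrain() {
  local num_samples="$1" now="$2"          # now as zero-padded HH:MM
  local start="00:01" end="24:00" threshold=500
  # Zero-padded HH:MM strings compare in the same order as the times.
  if [[ "$now" < "$start" || "$now" > "$end" ]]; then
    return 1
  fi
  [ "$num_samples" -gt "$threshold" ]
}

# Usage: should_retrain 620 "$(date +%H:%M)" && echo "retraining would start"
```

With `operator: ">"`, exactly 500 samples does not fire; the 501st sample does.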

4 Check

4.1 Checking the service status

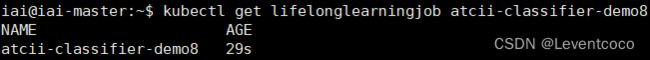

kubectl get lifelonglearningjob atcii-classifier-demo8

kubectl get pods -o wide -A

kubectl get pod

# Three pods belonging to the job are shown, one each for train, eval and infer

4.2 Viewing the results

V. Overview of Sedna lifelong learning

For details, see my other article: https://blog.csdn.net/Leventcoco/article/details/128668873