Usage notes for torch.Tensor.requires_grad_(requires_grad=True)

Reference link: requires_grad_(requires_grad=True) → Tensor

Original documentation text, with notes:

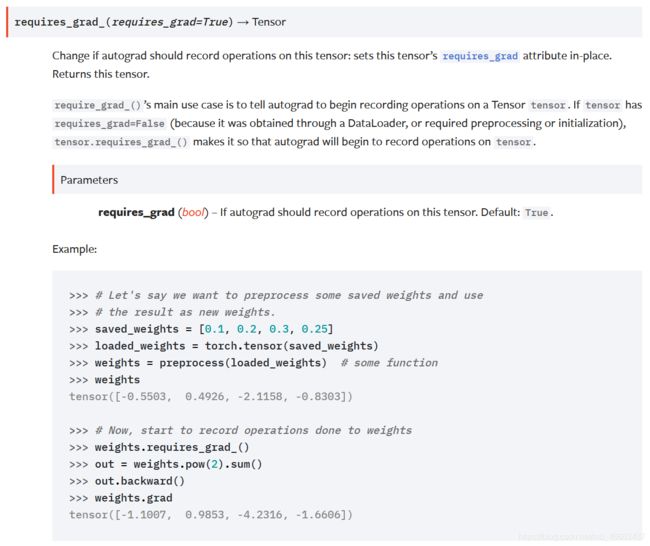

requires_grad_(requires_grad=True) → Tensor

Method: requires_grad_(requires_grad=True); the return type is Tensor.

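Change if autograd should record operations on this tensor: sets this tensor’s requires_grad attribute in-place. Returns this tensor.

In other words, this method controls whether autograd records the operations performed on the current tensor. It sets the tensor's requires_grad attribute in place and returns that same tensor.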
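The following is a minimal sketch of the two properties just described. It assumes a reasonably recent PyTorch release. The flag is changed on the tensor itself, and the call returns the very same tensor object, so it can be chained directly after a constructor.

```python
import torch

t = torch.zeros(3)
assert t.requires_grad is False   # freshly created tensors do not track history

returned = t.requires_grad_()     # flips the flag on t itself (in place)
same_object = returned is t       # the method hands back the tensor it was called on
flag_after = t.requires_grad

# Because the tensor is returned, the call can be chained after a constructor.
chained = torch.ones(2, 2).requires_grad_()
print(flag_after, chained.requires_grad)
```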

requires_grad_()’s main use case is to tell autograd to begin recording operations on a Tensor tensor. If tensor has requires_grad=False (because it was obtained through a DataLoader, or required preprocessing or initialization), tensor.requires_grad_() makes it so that autograd will begin to record operations on tensor.

In other words, requires_grad_() is mainly used to tell autograd to start tracking the operations on a tensor. If a tensor has requires_grad=False (for example because it came from a DataLoader, or because it needed preprocessing or initialization first), calling tensor.requires_grad_() makes autograd start recording the operations performed on it.
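The next sketch shows why switching tracking on *after* initialization matters. It is an illustration that does not come from the original documentation: the shapes, the 0.01 scale factor, and variable names such as w_leaf are arbitrary choices.

The sketch compares two ways of building a scaled random weight:

- Passing requires_grad=True to the constructor records the scaling itself. The result is then a non-leaf tensor, and its .grad stays None after backward().
- Scaling first and calling requires_grad_() afterwards yields a leaf tensor, which does accumulate .grad.

```python
import warnings

import torch

# Variant 1: tracking starts before the scaling, so the scaled tensor is a non-leaf.
w_nonleaf = torch.randn(3, 2, requires_grad=True) * 0.01
# Variant 2: initialize without tracking, then switch tracking on.
w_leaf = (torch.randn(3, 2) * 0.01).requires_grad_()

x = torch.ones(4, 3)
for w in (w_nonleaf, w_leaf):
    loss = (x @ w).sum()
    loss.backward()

with warnings.catch_warnings():
    warnings.simplefilter("ignore")  # newer PyTorch versions warn when reading .grad of a non-leaf
    nonleaf_grad = w_nonleaf.grad

# d(sum(x @ w))/dw[k, j] = sum_i x[i, k] = 4 for every entry, since x is all ones.
leaf_grad = w_leaf.grad
print(nonleaf_grad, leaf_grad, sep="\n")
```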

Parameters

requires_grad (bool) – If autograd should record operations on this tensor. Default: True.

In other words, requires_grad tells autograd whether to record operations on this tensor; when it is omitted, it defaults to True.
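This short sketch exercises the requires_grad argument and assumes a recent PyTorch release. It shows three behaviors:

- Calling the method with no argument is the same as passing True.
- Passing False switches recording off again for a leaf tensor.
- Only floating-point (and complex) tensors can require gradients, so an integer tensor raises RuntimeError.

```python
import torch

t = torch.tensor([1.0, 2.0, 3.0])

t.requires_grad_()                        # no argument: same as requires_grad=True
recorded = (t * 2).grad_fn is not None    # the multiplication is recorded

t.requires_grad_(False)                   # switch recording off again (t is a leaf)
not_recorded = (t * 2).grad_fn is None    # nothing is recorded now

# Integer tensors cannot require gradients.
try:
    torch.tensor([1, 2, 3]).requires_grad_()
    int_error = False
except RuntimeError:
    int_error = True

print(recorded, not_recorded, int_error)
```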

Example:

>>> # Let's say we want to preprocess some saved weights and use
>>> # the result as new weights.
>>> saved_weights = [0.1, 0.2, 0.3, 0.25]
>>> loaded_weights = torch.tensor(saved_weights)
>>> weights = preprocess(loaded_weights)  # some function
>>> weights
tensor([-0.5503,  0.4926, -2.1158, -0.8303])
>>> # Now, start to record operations done to weights
>>> weights.requires_grad_()
>>> out = weights.pow(2).sum()
>>> out.backward()
>>> weights.grad
tensor([-1.1007,  0.9853, -4.2316, -1.6606])
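The documentation example leaves `preprocess` unspecified. The runnable sketch below substitutes a hypothetical `preprocess` that standardizes the weights: it subtracts the mean and divides by the standard deviation. Because that choice is an assumption, the printed values will not match the ones above.

What the sketch does reproduce is the relationship in the example. Since `out` is a sum of squares, `weights.grad` equals `2 * weights`.

```python
import torch

def preprocess(w: torch.Tensor) -> torch.Tensor:
    """Hypothetical stand-in for the unspecified preprocessing step: standardize w."""
    return (w - w.mean()) / w.std()

saved_weights = [0.1, 0.2, 0.3, 0.25]
loaded_weights = torch.tensor(saved_weights)
weights = preprocess(loaded_weights)   # no input requires grad, so nothing is recorded yet
assert weights.is_leaf and not weights.requires_grad

# Now start recording operations done to weights.
weights.requires_grad_()
out = weights.pow(2).sum()
out.backward()

# d(sum(w_i ** 2)) / dw_i = 2 * w_i
expected = 2 * weights.detach()
print(weights.grad)
```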

Experiment code:

Microsoft Windows [Version 10.0.18363.1316]
(c) 2019 Microsoft Corporation. All rights reserved.

C:\Users\chenxuqi>conda activate ssd4pytorch1_2_0

(ssd4pytorch1_2_0) C:\Users\chenxuqi>python
Python 3.7.7 (default, May 6 2020, 11:45:54) [MSC v.1916 64 bit (AMD64)] :: Anaconda, Inc. on win32
Type "help", "copyright", "credits" or "license" for more information.
>>> import torch
>>> torch.manual_seed(seed=20200910)
<torch._C.Generator object at 0x000002BF3761D330>
>>>
>>> a = torch.randn(3,5)
>>> a
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]])
>>> a.requires_grad
False
>>> a.requires_grad()
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
TypeError: 'bool' object is not callable
>>> a.requires_grad_()
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]], requires_grad=True)
>>> a
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]], requires_grad=True)
>>> a.requires_grad
True
>>> a.requires_grad_()
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]], requires_grad=True)
>>> a.requires_grad_(False)
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]])
>>> a.requires_grad_()
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]], requires_grad=True)
>>> a.requires_grad_(requires_grad=False)
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]])
>>> a
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]])
>>> a.requires_grad_(requires_grad=False)
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]])
>>> a
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]])
>>> a.requires_grad
False
>>>
>>> b = torch.randn(3,5,requires_grad=True)
>>> b
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>> b.requires_grad
True
>>> b.requires_grad_()
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>> b
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>> b.requires_grad
True
>>> b.requires_grad_(False)
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]])
>>> b
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]])
>>> b.requires_grad_(False)
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]])
>>> b.requires_grad_(False)
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]])
>>> b.requires_grad_()
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>> b
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>> b.requires_grad_()
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>> b
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>>
>>> b
tensor([[-0.5556,  0.9571,  0.7435, -0.2974, -2.2825],
        [-0.6627, -1.1902, -0.1748,  1.2125,  0.6630],
        [-0.5813, -0.1549, -0.4551, -0.4570,  0.4547]], requires_grad=True)
>>>
>>> c = 2.0*b
>>> c
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]], grad_fn=<MulBackward0>)
>>> c.requires_grad
True
>>> c.requires_grad_()
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]], grad_fn=<MulBackward0>)
>>> c.requires_grad_(False)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
RuntimeError: you can only change requires_grad flags of leaf variables. If you want to use a computed variable in a subgraph that doesn't require differentiation use var_no_grad = var.detach().
>>>
>>> c
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]], grad_fn=<MulBackward0>)
>>> d = c.detach()
>>> d
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]])
>>> c
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]], grad_fn=<MulBackward0>)
>>> d.requires_grad
False
>>> d.is_leaf
True
>>> d.requires_grad_(False)
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]])
>>> d.requires_grad_()
tensor([[-1.1113,  1.9141,  1.4871, -0.5948, -4.5650],
        [-1.3254, -2.3805, -0.3497,  2.4250,  1.3261],
        [-1.1625, -0.3099, -0.9102, -0.9141,  0.9093]], requires_grad=True)
>>>
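The transcript above shows three behaviors worth isolating. The sketch below restates them as assertions. It assumes a recent PyTorch release; the original session appears to use an environment named ssd4pytorch1_2_0, so messages may differ slightly across versions.

1. requires_grad is a plain bool attribute, so calling it like a method raises TypeError.
2. A non-leaf tensor such as c = 2.0 * b accepts requires_grad_() (True), but rejects requires_grad_(False) with RuntimeError.
3. detach() returns a new leaf tensor that shares c's data, and its flag can then be set freely.

```python
import torch

b = torch.randn(3, 5, requires_grad=True)  # leaf tensor created by the user
c = 2.0 * b                                # non-leaf: produced by a recorded operation

# requires_grad is an attribute (a bool), not a method.
try:
    b.requires_grad()
    attr_call_failed = False
except TypeError:
    attr_call_failed = True

# On a non-leaf, requesting True is accepted, but switching it off is not.
c.requires_grad_()
try:
    c.requires_grad_(False)
    nonleaf_off_failed = False
except RuntimeError:
    nonleaf_off_failed = True

# detach() gives a leaf that does not require grad and shares c's storage.
d = c.detach()
d_was_leaf = d.is_leaf
d_initial_flag = d.requires_grad
shares_storage = d.data_ptr() == c.data_ptr()
d.requires_grad_()  # allowed, because d is a leaf
print(attr_call_failed, nonleaf_off_failed, d_was_leaf, d.requires_grad)
```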

Experiment code: retaining the gradient of an intermediate (non-leaf) tensor instead of discarding it.

Microsoft Windows [Version 10.0.18363.1316]
(c) 2019 Microsoft Corporation. All rights reserved.

C:\Users\chenxuqi>conda activate ssd4pytorch1_2_0

(ssd4pytorch1_2_0) C:\Users\chenxuqi>python
Python 3.7.7 (default, May 6 2020, 11:45:54) [MSC v.1916 64 bit (AMD64)] :: Anaconda, Inc. on win32
Type "help", "copyright", "credits" or "license" for more information.
>>> import torch
>>> torch.manual_seed(seed=20200910)
<torch._C.Generator object at 0x000001CDB4A5D330>
>>> data_in = torch.randn(3,5,requires_grad=True)
>>> data_in
tensor([[ 0.2824, -0.3715,  0.9088, -1.7601, -0.1806],
        [ 2.0937,  1.0406, -1.7651,  1.1216,  0.8440],
        [ 0.1783,  0.6859, -1.5942, -0.2006, -0.4050]], requires_grad=True)
>>> data_mean = data_in.mean()
>>> data_mean
tensor(0.0585, grad_fn=<MeanBackward0>)
>>> data_in.requires_grad
True
>>> data_mean.requires_grad
True
>>> data_1 = data_mean * 20200910.0
>>> data_1
tensor(1182591., grad_fn=<MulBackward0>)
>>> data_2 = data_1 * 15.0
>>> data_2
tensor(17738864., grad_fn=<MulBackward0>)
>>> data_2.retain_grad()
>>> data_3 = 2 * (data_2 + 55.0)
>>> loss = data_3 / 2.0 +89.2
>>> loss
tensor(17739010., grad_fn=<AddBackward0>)
>>>
>>> data_in.grad
>>> data_mean.grad
>>> data_1.grad
>>> data_2.grad
>>> data_3.grad
>>> loss.grad
>>> print(data_in.grad, data_mean.grad, data_1.grad, data_2.grad, data_3.grad, loss.grad)
None None None None None None
>>>
>>> loss.backward()
>>> data_in.grad
tensor([[20200910., 20200910., 20200910., 20200910., 20200910.],
        [20200910., 20200910., 20200910., 20200910., 20200910.],
        [20200910., 20200910., 20200910., 20200910., 20200910.]])
>>> data_mean.grad
>>> data_mean.grad
>>> data_1.grad
>>> data_2.grad
tensor(1.)
>>> data_3.grad
>>> loss.grad
>>>
>>> print(data_in.grad, data_mean.grad, data_1.grad, data_2.grad, data_3.grad, loss.grad)
tensor([[20200910., 20200910., 20200910., 20200910., 20200910.],
        [20200910., 20200910., 20200910., 20200910., 20200910.],
        [20200910., 20200910., 20200910., 20200910., 20200910.]]) None None tensor(1.) None None
>>>
>>> print(data_in.is_leaf, data_mean.is_leaf, data_1.is_leaf, data_2.is_leaf, data_3.is_leaf, loss.is_leaf)
True False False False False False
>>>
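Here is a smaller version of the same experiment; the values are arbitrary, chosen so the gradients are easy to check by hand. After backward(), only leaf tensors keep .grad by default. An intermediate tensor keeps its gradient only if retain_grad() was called on it before the backward pass.

In the transcript, this is why data_2.grad is tensor(1.) while data_mean.grad, data_1.grad and data_3.grad stay None. The value is 1 because loss simplifies to data_2 + 144.2.

```python
import warnings

import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)  # leaf
y = x * 3                                              # intermediate, gradient retained
y.retain_grad()                                        # must be called before backward()
z = y + 1                                              # intermediate, gradient not retained
loss = z.sum()
loss.backward()

# d(loss)/dx = 3 for every element; d(loss)/dy = 1 for every element.
x_grad = x.grad
y_grad = y.grad
with warnings.catch_warnings():
    warnings.simplefilter("ignore")  # newer PyTorch versions warn when reading .grad of a non-leaf
    z_grad = z.grad

leaf_flags = (x.is_leaf, y.is_leaf, z.is_leaf, loss.is_leaf)
print(x_grad, y_grad, z_grad, leaf_flags)
```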