What is SKS?

SKS, short for SMTX Kubernetes Service, is a container IaaS platform launched by SmartX. On a platform based on SMTX OS, you can use SKS to create and manage Kubernetes clusters.

Installing and managing SKS depends on the management tool CloudTower.

SKS installation

Companion video: https://www.bilibili.com/video/BV1pd4y1L77D/?vd_source=33b17c80f501bc5db46f7dcb22fc96cb

Installing CloudTower

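At present, the CloudTower release that supports SKS is still a beta. The package consists of the CloudTower installer, the SKS deployment file, the image registry deployment file, and the node-related files. The node-related files are the VM Kubernetes node installation templates, similar to Tanzu's [photon-ova](https://wp-content.vmware.com/v2/latest/ob-20611023-photon-3-amd64-vmi-k8s-v1.23.8---vmware.2-tkg.1-zshippable/photon-ova.ovf), and are likewise built per Kubernetes version.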

- System requirements

| Tier | CloudTower management capacity | Resources used by the CloudTower VM |
|---|---|---|
| Low | 10 clusters / 100 hosts / 1,000 VMs | 4 vCPUs / 8 GiB memory / 100 GiB storage |
| High | 100 clusters / 1,000 hosts / 10,000 VMs | 8 vCPUs / 16 GiB memory / 400 GiB storage |
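As a quick illustration of how to read the sizing table, the following Go sketch picks the smallest tier whose management capacity covers a planned environment. `Tier` and `pickTier` are hypothetical helpers written for this example, not part of CloudTower; the limits are simply copied from the table.

```go
package main

import "fmt"

// Tier is one CloudTower sizing tier (hypothetical type; values from the table).
type Tier struct {
	Name     string
	Clusters int // maximum managed clusters
	Hosts    int // maximum managed hosts
	VMs      int // maximum managed VMs
	VCPUs    int // vCPUs for the CloudTower VM
	MemGiB   int // memory for the CloudTower VM
	DiskGiB  int // storage for the CloudTower VM
}

// tiers is ordered from smallest to largest so the first match is the cheapest.
var tiers = []Tier{
	{"low", 10, 100, 1000, 4, 8, 100},
	{"high", 100, 1000, 10000, 8, 16, 400},
}

// pickTier returns the smallest tier that can manage the planned environment,
// or false if even the largest tier is too small.
func pickTier(clusters, hosts, vms int) (Tier, bool) {
	for _, t := range tiers {
		if clusters <= t.Clusters && hosts <= t.Hosts && vms <= t.VMs {
			return t, true
		}
	}
	return Tier{}, false
}

func main() {
	if t, ok := pickTier(3, 12, 300); ok {
		fmt.Printf("%s: %d vCPU / %d GiB RAM / %d GiB disk\n", t.Name, t.VCPUs, t.MemGiB, t.DiskGiB)
	}
}
```

Choosing a larger tier than the sketch suggests, as this article does, simply leaves headroom for growth.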

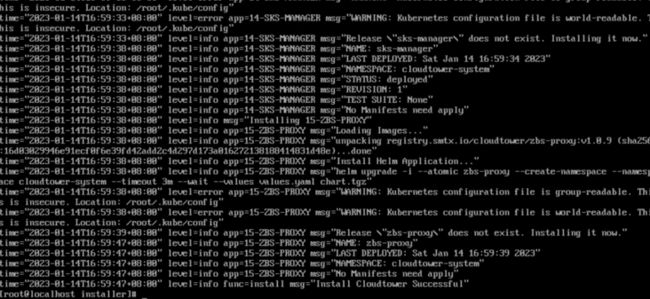

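Here the high tier is used. CloudTower is based on CentOS and installs much like CentOS does, except that once you mount the installation ISO and boot, the VM installs itself automatically.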

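The default username of the VM's guest OS is smartx and the initial password is HC!r0cks. Make sure the IP address is configured, then log in and run the following commands to complete the installation: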

```shell
sudo bash
cd /usr/share/smartx/tower
sh ./preinstall.sh
cd ./installer
./binary/installer deploy
```

Once it finishes, open the CloudTower IP address in a browser to reach the management UI.

SKS initialization

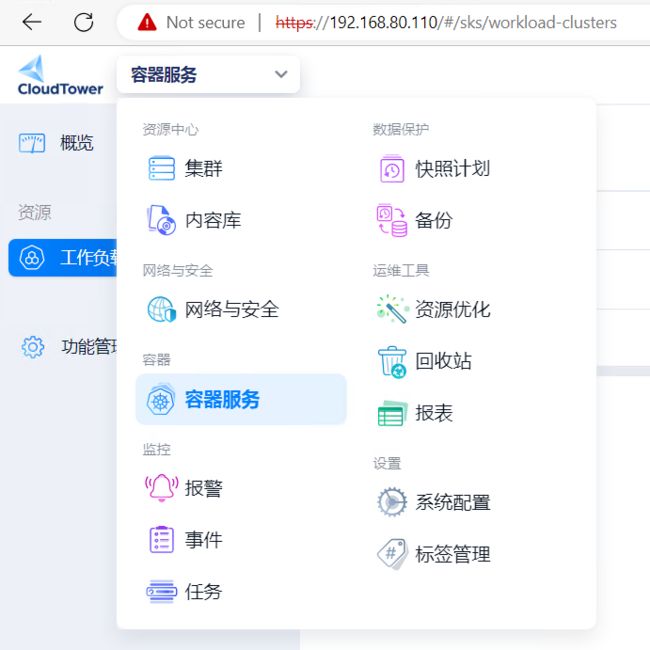

After associating the SMTX OS cluster, open Container Service from the top-left corner.

- Create the image registry

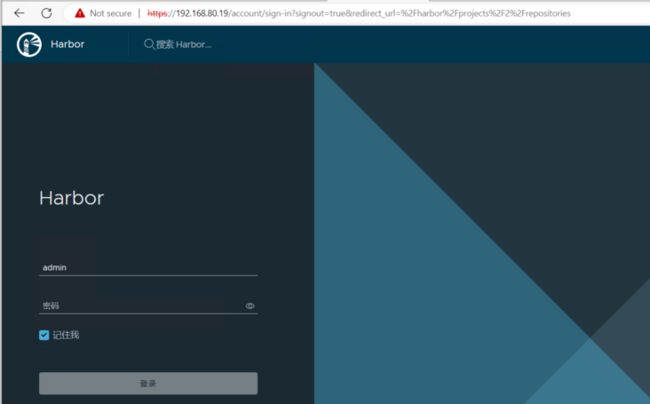

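Upload the image registry file and enter the IP address and other parameters; the system then creates the registry automatically. Once it is created, you can log in to it from a browser and will see that it is Harbor. The initial username and password are admin/HC!r0cks.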

Create the workload management cluster

- The cluster's control plane can only have 1, 3, or 5 nodes; the number of worker nodes is up to you;

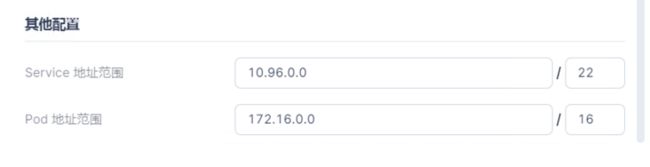

- Specify the node IP addresses, and configure the IP pool and the API Server address;

- Provide the MD5 checksum of the uploaded file. On Windows, you can compute it with:

```
C:\Users\Administrator\Downloads>certutil -hashfile sks-installer-all-bundle-v0.8.1-20230104152731.tar MD5
MD5 hash of sks-installer-all-bundle-v0.8.1-20230104152731.tar:
5510635fcf878f048b674a6427f681b5
CertUtil: -hashfile command completed successfully.
```

After deployment, the management cluster runs Kubernetes 1.24.8.
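If you are not on Windows, or want a portable check, the same MD5 digest can be computed with Go's standard library (on Linux, `md5sum <file>` also works). This is a minimal sketch: `md5OfReader` streams the file so a multi-gigabyte bundle is never loaded into memory, and `md5OfBytes` is a convenience helper for small inputs; both names are hypothetical.

```go
package main

import (
	"crypto/md5"
	"encoding/hex"
	"fmt"
	"io"
	"os"
)

// md5OfReader streams r through MD5 and returns the lowercase hex digest,
// the same format certutil prints.
func md5OfReader(r io.Reader) (string, error) {
	h := md5.New()
	if _, err := io.Copy(h, r); err != nil {
		return "", err
	}
	return hex.EncodeToString(h.Sum(nil)), nil
}

// md5OfBytes is a convenience wrapper for small in-memory inputs.
func md5OfBytes(b []byte) string {
	sum := md5.Sum(b)
	return hex.EncodeToString(sum[:])
}

func main() {
	if len(os.Args) != 2 {
		fmt.Fprintln(os.Stderr, "usage: md5 <file>")
		os.Exit(2)
	}
	f, err := os.Open(os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	defer f.Close()
	sum, err := md5OfReader(f)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(sum)
}
```

Pass the bundle path as the only argument and compare the printed digest with the value entered in the UI.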

Deploying a workload cluster

In Container Service → Resources → Workload Clusters, click + Create Workload Cluster.

Note that:

- Multiple Kubernetes versions can be offered by uploading the corresponding node files;

- The only CNI available for now is Calico;

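- For CSI, the cluster's own distributed storage is exposed to Pods through a CSI driver by default (as the default StorageClass); if other ZBS iSCSI storage is configured in the system, you can choose SMTX ZBS iSCSI instead;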

- The number of control-plane and worker nodes in the workload cluster can be set as needed;

- Nodes can be accessed with a password or a key; the username is smartx;

- Configure the API Server endpoint and the node IP addresses.
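When choosing the control-plane count here, the odd counts that the management cluster is limited to (1, 3, 5) are the usual choice. A likely reason is etcd's majority quorum, assuming each control-plane node runs a stacked etcd member as in the common kubeadm layout (the article does not say how SKS places etcd). This Go sketch computes quorum and tolerated failures; `quorum` and `faultTolerance` are illustrative helpers.

```go
package main

import "fmt"

// quorum is the number of etcd members that must agree for a write:
// a strict majority of n members.
func quorum(n int) int { return n/2 + 1 }

// faultTolerance is how many members can fail while a majority survives.
func faultTolerance(n int) int { return n - quorum(n) }

func main() {
	for n := 1; n <= 6; n++ {
		fmt.Printf("control-plane nodes=%d quorum=%d tolerated failures=%d\n",
			n, quorum(n), faultTolerance(n))
	}
}
```

The output shows that going from 3 to 4 nodes (or 5 to 6) raises the quorum without tolerating any extra failure, which is why even counts are rarely worth it.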

After you click Create, it takes 10 to 30 minutes (depending on the number of nodes) to create the workload cluster.

Using the SKS cluster

Once the workload cluster has been created, it is ready to use.

Accessing the workload cluster

Click the newly created cluster to open its dedicated page.

Next to the + Create button in the upper-right corner is the cluster's kubeconfig file, which you can use to configure access to the cluster.
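Where you save the downloaded kubeconfig determines whether kubectl finds it. This Go sketch mirrors, in simplified form, kubectl's lookup order: an explicit `--kubeconfig` flag wins, otherwise the `KUBECONFIG` environment variable (a list of paths) is used, otherwise `~/.kube/config`. `kubeconfigPaths` is a hypothetical helper; unlike kubectl, it only lists the candidate files and does not merge them.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// kubeconfigPaths returns the kubeconfig file(s) kubectl would consult.
// envValue uses the OS path-list separator (":" on Linux, ";" on Windows).
func kubeconfigPaths(flagValue, envValue, home string) []string {
	if flagValue != "" {
		return []string{flagValue}
	}
	var paths []string
	for _, p := range filepath.SplitList(envValue) {
		if p != "" {
			paths = append(paths, p)
		}
	}
	if len(paths) > 0 {
		return paths
	}
	return []string{filepath.Join(home, ".kube", "config")}
}

func main() {
	home, err := os.UserHomeDir()
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	for _, p := range kubeconfigPaths("", os.Getenv("KUBECONFIG"), home) {
		fmt.Println(p)
	}
}
```

In practice, either save the file as `~/.kube/config` or run `export KUBECONFIG=/path/to/downloaded-file` before using kubectl.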

root@client:/home/zyi# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01-controlplane-v1-25-4-bmtjms-45b52 Ready control-plane 31h v1.25.4

k8s-01-controlplane-v1-25-4-bmtjms-58h4s Ready control-plane 31h v1.25.4

k8s-01-controlplane-v1-25-4-bmtjms-sspc2 Ready control-plane 31h v1.25.4

k8s-01-workergroup1-v1-25-4-r3izcm-bcb89 Ready 31h v1.25.4

k8s-01-workergroup1-v1-25-4-r3izcm-ggblg Ready 31h v1.25.4

k8s-01-workergroup1-v1-25-4-r3izcm-jlfxd Ready 31h v1.25.4

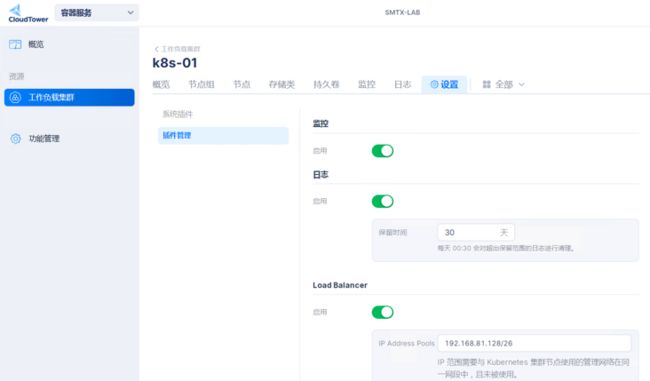

k8s-01-workergroup1-v1-25-4-r3izcm-p8c6x Ready 31h v1.25.4 - 插件设置

Plugins can be installed per cluster as needed. SKS offers monitoring, logging, LoadBalancer, Ingress, and CSI (installed by default) plugins.

After you save, the system installs them automatically.

Using LoadBalancer

The load balancer in SKS is MetalLB in L2 mode; you need to configure an IP pool from which externally exposed Services get their addresses.

MetalLB website: https://metallb.org/concepts/
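Conceptually, each LoadBalancer Service receives an address from the configured pool; in this article the three LoadBalancer Services end up with 192.168.81.128, .129, and .130. The Go sketch below is a toy model of such a pool that hands out the lowest free address. It is not MetalLB's allocator, and the range 192.168.81.128 to 192.168.81.140 is a hypothetical example because the article does not show the configured pool.

```go
package main

import (
	"errors"
	"fmt"
	"net/netip"
)

// pool hands out addresses from an inclusive range, lowest free first.
// Toy model only; MetalLB's real allocation logic is more involved.
type pool struct {
	first, last netip.Addr
	used        map[netip.Addr]string // address -> owning Service
}

func newPool(first, last string) (*pool, error) {
	f, err := netip.ParseAddr(first)
	if err != nil {
		return nil, err
	}
	l, err := netip.ParseAddr(last)
	if err != nil {
		return nil, err
	}
	if f.BitLen() != l.BitLen() {
		return nil, errors.New("mixed address families")
	}
	if l.Less(f) {
		return nil, errors.New("range end is before range start")
	}
	return &pool{first: f, last: l, used: map[netip.Addr]string{}}, nil
}

// allocate assigns the lowest free address in the range to the named Service.
func (p *pool) allocate(service string) (netip.Addr, error) {
	for a := p.first; ; a = a.Next() {
		if _, taken := p.used[a]; !taken {
			p.used[a] = service
			return a, nil
		}
		if a == p.last {
			return netip.Addr{}, errors.New("pool exhausted")
		}
	}
}

func main() {
	p, err := newPool("192.168.81.128", "192.168.81.140") // hypothetical range
	if err != nil {
		panic(err)
	}
	for _, svc := range []string{"default/hello-kubernetes", "default/wordpress", "sks-system-contour/contour-envoy"} {
		ip, err := p.allocate(svc)
		if err != nil {
			panic(err)
		}
		fmt.Println(svc, ip)
	}
}
```

Whatever the allocator, the practical rule is the same: the pool must be large enough for every LoadBalancer Service, or new Services stay in Pending without an external IP.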

```
root@client:/home/zyi# kubectl get po -n sks-system-metallb
NAME                                  READY   STATUS    RESTARTS   AGE
metallb-controller-8546c76b7b-8gxzd   1/1     Running   0          31h
metallb-speaker-28tb4                 1/1     Running   0          31h
metallb-speaker-4lj7b                 1/1     Running   0          31h
metallb-speaker-6jrmz                 1/1     Running   0          31h
metallb-speaker-82gjl                 1/1     Running   0          31h
metallb-speaker-jc56z                 1/1     Running   0          31h
metallb-speaker-tz59m                 1/1     Running   0          31h
metallb-speaker-xf48h                 1/1     Running   0          31h
```

We test the load balancer with the following YAML:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-kubernetes
spec:
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: hello-kubernetes
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-kubernetes
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-kubernetes
  template:
    metadata:
      labels:
        app: hello-kubernetes
    spec:
      containers:
      - name: hello-kubernetes
        image: paulbouwer/hello-kubernetes:1.5
        ports:
        - containerPort: 8080
        env:
        - name: MESSAGE
          value: I just deployed a PodVM on the SKS Cluster!!
```

The result:

```
root@client:/home/zyi# kubectl get all |grep hello
pod/hello-kubernetes-74898bf87-5k8g4          1/1     Running        0             31h
pod/hello-kubernetes-74898bf87-kqwpb          1/1     Running        0             31h
pod/hello-kubernetes-74898bf87-w6tjq          1/1     Running        0             31h
service/hello-kubernetes                      LoadBalancer   10.96.3.145   192.168.81.128   80:30437/TCP   31h
deployment.apps/hello-kubernetes              3/3     3              3             31h
replicaset.apps/hello-kubernetes-74898bf87    3       3              3             31h
```

Access it through the web:

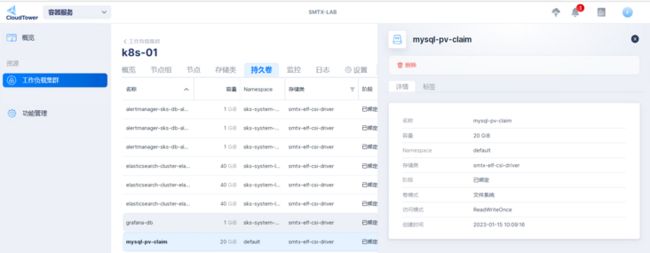
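In the Service line above, `80:30437/TCP` means the Service listens on port 80 and Kubernetes also allocated node port 30437 (LoadBalancer Services get a NodePort by default); the `targetPort` 8080 from the YAML does not appear in this column. The following Go sketch parses that PORT(S) column to make the format explicit; `servicePort` and `parsePorts` are hypothetical names for illustration.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// servicePort is one entry of the PORT(S) column printed by kubectl get svc.
type servicePort struct {
	Port     int    // spec.ports[].port
	NodePort int    // node port, 0 when the Service has none
	Protocol string // TCP, UDP, or SCTP
}

// parsePorts parses values such as "80:30437/TCP" or "53/UDP,53/TCP,9153/TCP".
func parsePorts(col string) ([]servicePort, error) {
	var out []servicePort
	for _, item := range strings.Split(col, ",") {
		ports, proto, ok := strings.Cut(item, "/")
		if !ok {
			return nil, fmt.Errorf("missing protocol in %q", item)
		}
		portStr, nodePortStr, hasNodePort := strings.Cut(ports, ":")
		p, err := strconv.Atoi(portStr)
		if err != nil {
			return nil, err
		}
		sp := servicePort{Port: p, Protocol: proto}
		if hasNodePort {
			if sp.NodePort, err = strconv.Atoi(nodePortStr); err != nil {
				return nil, err
			}
		}
		out = append(out, sp)
	}
	return out, nil
}

func main() {
	ports, err := parsePorts("80:30437/TCP")
	if err != nil {
		panic(err)
	}
	for _, p := range ports {
		fmt.Printf("port=%d nodePort=%d protocol=%s\n", p.Port, p.NodePort, p.Protocol)
	}
}
```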

CSI test

This test uses the WordPress example from the Kubernetes documentation.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  ports:
    - port: 3306
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    app: wordpress
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: smtx-elf-csi-driver # added: the StorageClass of this environment
  resources:
    requests:
      storage: 20Gi
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pass
              key: password
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pv-claim
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  ports:
    - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  labels:
    app: wordpress
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: smtx-elf-csi-driver # added: the StorageClass of this environment
  resources:
    requests:
      storage: 20Gi
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
      - image: wordpress:4.8-apache
        name: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: wordpress-mysql
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pass
              key: password
        ports:
        - containerPort: 80
          name: wordpress
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wp-pv-claim
```

The StorageClass used in the YAML above can be checked with:

```
root@client:/home/zyi# kubectl get sc
NAME                            PROVISIONER                 RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
smtx-elf-csi-driver (default)   com.smartx.elf-csi-driver   Delete          Immediate           false                  32h
```

Before applying the YAML, create the Secret it needs, mysql-pass:

```shell
kubectl create secret generic mysql-pass --from-literal password='HC!r0cks'
```

After deployment completes:

```
root@client:/home/zyi# kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
mysql-pv-claim   Bound    pvc-b5690b99-bf61-432b-99d1-e5f1924b6acd   20Gi       RWO            smtx-elf-csi-driver   31h
wp-pv-claim      Bound    pvc-67040f10-89e1-4a86-8b5e-082786b67507   20Gi       RWO            smtx-elf-csi-driver   31h
root@client:/home/zyi# kubectl get svc |grep word
wordpress         LoadBalancer   10.96.1.17   192.168.81.129   80:30090/TCP   31h
wordpress-mysql   ClusterIP      None         <none>           3306/TCP       31h
```

The generated PVCs are listed above, and they can also be seen in the system:

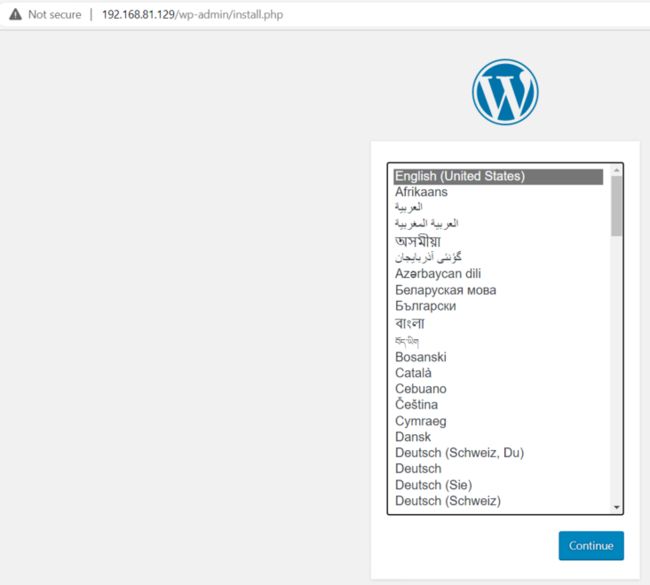
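Both PVCs request `storage: 20Gi`, which Kubernetes reads as 20 × 2^30 bytes (`Gi` is a binary suffix, unlike the decimal `G`). The Go sketch below shows that conversion for binary suffixes only. `parseBinaryQuantity` is a hypothetical helper; the real parser (`resource.ParseQuantity` in `k8s.io/apimachinery/pkg/api/resource`) also accepts decimal suffixes, exponents, and fractions.

```go
package main

import (
	"fmt"
	"math"
	"strconv"
	"strings"
)

// binarySuffixes lists the power-of-two suffixes of the Kubernetes quantity format.
var binarySuffixes = []struct {
	suffix string
	factor int64
}{
	{"Ki", 1 << 10},
	{"Mi", 1 << 20},
	{"Gi", 1 << 30},
	{"Ti", 1 << 40},
	{"Pi", 1 << 50},
	{"Ei", 1 << 60},
}

// parseBinaryQuantity converts a value such as "20Gi" into bytes.
func parseBinaryQuantity(q string) (int64, error) {
	for _, s := range binarySuffixes {
		if strings.HasSuffix(q, s.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(q, s.suffix), 10, 64)
			if err != nil {
				return 0, err
			}
			if n < 0 || n > math.MaxInt64/s.factor {
				return 0, fmt.Errorf("quantity %q out of range", q)
			}
			return n * s.factor, nil
		}
	}
	// Without a binary suffix, accept only a plain integer number of bytes.
	return strconv.ParseInt(q, 10, 64)
}

func main() {
	b, err := parseBinaryQuantity("20Gi")
	if err != nil {
		panic(err)
	}
	fmt.Println(b)
}
```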

Checking WordPress availability

WordPress is up and ready to use.

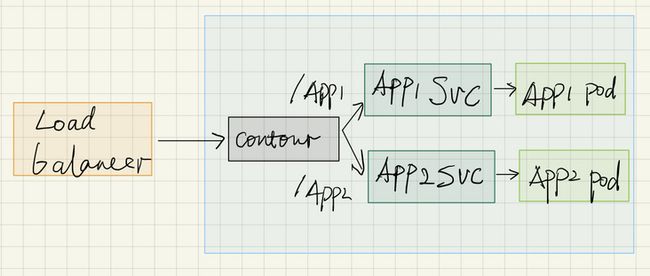
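Note that `kubectl create secret generic` does not encrypt the password: in the Secret object the value is only base64-encoded under `.data`, so anyone allowed to read the Secret (for example with `kubectl get secret mysql-pass -o jsonpath='{.data.password}'`) can recover it. This Go sketch reproduces that encoding; `secretData` and `decodeSecretData` are hypothetical helpers.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// secretData returns the value as stored under .data in a Secret:
// standard base64 (with padding) of the raw bytes.
func secretData(value string) string {
	return base64.StdEncoding.EncodeToString([]byte(value))
}

// decodeSecretData reverses secretData.
func decodeSecretData(encoded string) (string, error) {
	b, err := base64.StdEncoding.DecodeString(encoded)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	enc := secretData("HC!r0cks")
	fmt.Println(enc) // SEMhcjBja3M=
	dec, err := decodeSecretData(enc)
	if err != nil {
		panic(err)
	}
	fmt.Println(dec) // HC!r0cks
}
```

Restricting read access to Secrets with RBAC is therefore what actually protects the password.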

Contour test

Contour project: github.com/heptio/contour (the repository now lives at github.com/projectcontour/contour)

Implementation: Go

License: Apache 2.0

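Contour was originally developed by Heptio and uses Envoy as its data plane. Its most distinctive feature is managing ingress through a CRD (originally IngressRoute, since replaced by HTTPProxy, which this test uses). For organizations where several teams share one cluster, this helps protect traffic in neighboring environments from being affected by changes to Ingress resources.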

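It also provides an extended set of load-balancing and traffic features (mirroring, automatic retries, request rate limiting, and so on), as well as detailed traffic and failure monitoring.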

The test example comes from this blog post:

Kubernetes Ingress with Contour

Environment:

SKS: Kubernetes cluster with 3 control-plane nodes and 4 workers

Registry: 192.168.80.19

Go file

Install Go and initialize the module:

```shell
apt install golang-go -y
go env -w GO111MODULE=auto
go mod init basic-web-server
```

Go source file xx.go:

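```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

func main() {
	fmt.Println("Starting web server...")

	// The response text comes from the MESSAGE environment variable,
	// which each Deployment below sets differently.
	message := os.Getenv("MESSAGE")
	fmt.Printf("Using message: %s\n", message)

	http.HandleFunc("/", func(res http.ResponseWriter, req *http.Request) {
		fmt.Println("Request received")
		fmt.Fprintf(res, "Message: %s", message)
	})

	// Listen on port 8000, matching containerPort/targetPort in the manifests.
	log.Fatal(http.ListenAndServe(":8000", nil))
}
```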

Dockerfile:

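```dockerfile
# Build stage: compile the Go binary
FROM golang:1.17 AS builder
COPY . /var/app
WORKDIR /var/app
RUN ["go", "build", "-o", "app", "."]

# Runtime stage: copy only the compiled binary into a Debian image
FROM debian:bullseye
WORKDIR /var/app
COPY --from=builder /var/app/app .
CMD ["./app"]
```

Build: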

```shell
docker build -t 192.168.80.19/sks/basic-web-server:v1 .
```

```
root@client:/home/zyi/contour-test# docker images
REPOSITORY                           TAG   IMAGE ID       CREATED          SIZE
192.168.80.19/sks/basic-web-server   v1    eafc463bc7e4   49 minutes ago   130MB
```

Push to Harbor

- Download the ca.crt file

- Create the directory and copy the CA certificate into it:

```shell
mkdir -p /etc/docker/certs.d/192.168.80.19
cp ca.crt /etc/docker/certs.d/192.168.80.19/
```

- Log in and push:

```shell
docker login 192.168.80.19
docker push 192.168.80.19/sks/basic-web-server:v1
```
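Copying `ca.crt` into `/etc/docker/certs.d/192.168.80.19/` tells Docker to trust Harbor's self-signed CA for that registry host. A Go program talking to the same registry needs the equivalent step; this sketch builds an HTTP client that trusts the CA in addition to the system roots. `clientTrustingCA` is a hypothetical helper, and the `/v2/` probe assumes Harbor exposes the standard Docker Registry HTTP API v2 base endpoint at that address.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
	"net/http"
	"os"
)

// clientTrustingCA returns an HTTP client that trusts caPEM plus the system roots.
func clientTrustingCA(caPEM []byte) (*http.Client, error) {
	pool, err := x509.SystemCertPool()
	if err != nil || pool == nil {
		pool = x509.NewCertPool() // fall back to an empty pool
	}
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no valid PEM certificate found")
	}
	return &http.Client{
		Transport: &http.Transport{
			TLSClientConfig: &tls.Config{RootCAs: pool, MinVersion: tls.VersionTLS12},
		},
	}, nil
}

func main() {
	caPEM, err := os.ReadFile("ca.crt")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	client, err := clientTrustingCA(caPEM)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// An unauthenticated GET /v2/ typically returns 401; any HTTP status at
	// all means the TLS handshake with the registry's certificate succeeded.
	resp, err := client.Get("https://192.168.80.19/v2/")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	defer resp.Body.Close()
	fmt.Println("TLS OK, status:", resp.Status)
}
```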

Create the applications in the Kubernetes cluster

App1

```yaml
kind: Namespace
apiVersion: v1
metadata:
  name: app1
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: app1
  namespace: app1
spec:
  replicas: 2
  selector:
    matchLabels:
      app: app1
  template:
    metadata:
      labels:
        app: app1
    spec:
      containers:
        - name: app1
          image: 192.168.80.19/sks/basic-web-server:v1
          imagePullPolicy: Always
          ports:
            - containerPort: 8000
          env:
            - name: MESSAGE
              value: hello from app1
---
kind: Service
apiVersion: v1
metadata:
  name: app1
  namespace: app1
spec:
  selector:
    app: app1
  ports:
    - port: 80
      targetPort: 8000
```

App2:

```yaml
kind: Namespace
apiVersion: v1
metadata:
  name: app2
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: app2
  namespace: app2
spec:
  replicas: 2
  selector:
    matchLabels:
      app: app2
  template:
    metadata:
      labels:
        app: app2
    spec:
      containers:
        - name: app2
          image: 192.168.80.19/sks/basic-web-server:v1
          imagePullPolicy: Always
          ports:
            - containerPort: 8000
          env:
            - name: MESSAGE
              value: hello from app2
---
kind: Service
apiVersion: v1
metadata:
  name: app2
  namespace: app2
spec:
  selector:
    app: app2
  ports:
    - port: 80
      targetPort: 8000
```

Proxy

Set up the routing so that the Contour ingress controller knows where to send traffic: create a single root proxy that includes two other proxies, each directing traffic to its own application.

App proxies:

```yaml
kind: HTTPProxy
apiVersion: projectcontour.io/v1
metadata:
  name: app1
  namespace: app1
spec:
  routes:
    - services:
        - name: app1
          port: 80
---
kind: HTTPProxy
apiVersion: projectcontour.io/v1
metadata:
  name: app2
  namespace: app2
spec:
  routes:
    - services:
        - name: app2
          port: 80
```

There is not much to these: they are essentially target proxies that the root proxy can reference, and they route all traffic to their Service and port. The real routing happens in the root proxy:

```yaml
kind: HTTPProxy
apiVersion: projectcontour.io/v1
metadata:
  name: main
spec:
  virtualhost:
    fqdn: myapps
  includes:
    - name: app1
      namespace: app1
      conditions:
        - prefix: /app1
    - name: app2
      namespace: app2
      conditions:
        - prefix: /app2
```

Check the proxies:

```
root@client:/home/zyi/contour-test# kubectl get httpproxies.projectcontour.io -A
NAMESPACE   NAME   FQDN     TLS SECRET   STATUS   STATUS DESCRIPTION
app1        app1                         valid    Valid HTTPProxy
app2        app2                         valid    Valid HTTPProxy
default     main   myapps                valid    Valid HTTPProxy
```

Check the results:

```
root@client:/home/zyi/contour-test# kubectl get svc -A
NAMESPACE            NAME                              TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)                        AGE
app1                 app1                              ClusterIP      10.96.2.206   <none>           80/TCP                         58m
app2                 app2                              ClusterIP      10.96.1.37    <none>           80/TCP                         58m
calico-apiserver     calico-api                        ClusterIP      10.96.1.71    <none>           443/TCP                        6h53m
calico-system        calico-kube-controllers-metrics   ClusterIP      10.96.0.251   <none>           9094/TCP                       6h53m
calico-system        calico-typha                      ClusterIP      10.96.2.255   <none>           5473/TCP                       6h53m
default              hello-kubernetes                  LoadBalancer   10.96.3.145   192.168.81.128   80:30437/TCP                   6h42m
default              kubernetes                        ClusterIP      10.96.0.1     <none>           443/TCP                        6h54m
default              wordpress                         LoadBalancer   10.96.1.17    192.168.81.129   80:30090/TCP                   6h32m
default              wordpress-mysql                   ClusterIP      None          <none>           3306/TCP                       6h32m
kapp-controller      packaging-api                     ClusterIP      10.96.2.99    <none>           443/TCP                        6h54m
kube-system          kube-controller-manager           ClusterIP      10.96.2.92    <none>           10257/TCP                      145m
kube-system          kube-dns                          ClusterIP      10.96.0.10    <none>           53/UDP,53/TCP,9153/TCP         6h54m
kube-system          kube-scheduler                    ClusterIP      10.96.3.58    <none>           10259/TCP                      145m
kube-system          kubelet                           ClusterIP      None          <none>           10250/TCP,10255/TCP,4194/TCP   145m
sks-system-contour   contour                           ClusterIP      10.96.3.100   <none>           8001/TCP                       5h59m
sks-system-contour   contour-default-backend           ClusterIP      10.96.0.83    <none>           80/TCP                         5h59m
sks-system-contour   contour-envoy                     LoadBalancer   10.96.1.236   192.168.81.130   80:31054/TCP,443:32273/TCP     5h59m
root@client:/home/zyi/contour-test# INGRESS_IP=$(kubectl get svc -n sks-system-contour contour-envoy -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
root@client:/home/zyi/contour-test# echo $INGRESS_IP
192.168.81.130
```

Curl the apps:

```
root@client:/home/zyi/contour-test# curl -H "host:myapps" $INGRESS_IP/app1
Message: hello from app1
root@client:/home/zyi/contour-test# curl -H "host:myapps" $INGRESS_IP/app2
Message: hello from app2
```
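The curl results follow from two checks in the root proxy: the Host header must match the virtual host `myapps`, and the path prefix selects which included proxy (and therefore which Service) handles the request. The Go sketch below models that decision with plain string-prefix matching and longest-prefix-wins. It is a simplified illustration under those assumptions, not Contour's implementation; `rootProxy`, `include`, and `route` are hypothetical names, and Contour's exact matching semantics may differ in edge cases.

```go
package main

import (
	"fmt"
	"strings"
)

// include models one entry of the root HTTPProxy's spec.includes.
type include struct {
	Prefix    string // conditions[].prefix
	Namespace string // namespace of the included HTTPProxy
	Service   string // Service the included proxy routes to
	Port      int
}

// rootProxy models the "main" HTTPProxy: one virtual host plus includes.
type rootProxy struct {
	FQDN     string
	Includes []include
}

// route returns "namespace/service:port" for a request, or false if no route
// matches: the Host must equal the FQDN, then the longest matching prefix wins.
func (r rootProxy) route(host, path string) (string, bool) {
	if !strings.EqualFold(host, r.FQDN) {
		return "", false
	}
	best := -1
	for i, inc := range r.Includes {
		if strings.HasPrefix(path, inc.Prefix) &&
			(best < 0 || len(inc.Prefix) > len(r.Includes[best].Prefix)) {
			best = i
		}
	}
	if best < 0 {
		return "", false
	}
	inc := r.Includes[best]
	return fmt.Sprintf("%s/%s:%d", inc.Namespace, inc.Service, inc.Port), true
}

func main() {
	root := rootProxy{
		FQDN: "myapps",
		Includes: []include{
			{Prefix: "/app1", Namespace: "app1", Service: "app1", Port: 80},
			{Prefix: "/app2", Namespace: "app2", Service: "app2", Port: 80},
		},
	}
	for _, p := range []string{"/app1", "/app2", "/other"} {
		if backend, ok := root.route("myapps", p); ok {
			fmt.Println(p, "->", backend)
		} else {
			fmt.Println(p, "-> no route")
		}
	}
}
```

This is also why the curl commands set `-H "host:myapps"`: requesting the bare IP without that header would not match the virtual host.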

Appendix: VMware example

The Ingress controller VMware recommends on its website is also Contour, but the example there has not been updated. Link:

Using Ingress with Contour

The original example fails with an error:

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-nihao
spec:
  rules:
  - http:
      paths:
      - path: /nihao
        backend:
          serviceName: nihao
          servicePort: 80
```

```
root@client:/home/zyi/contour-test# kubectl apply -f nihao.yaml
```

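```
Error from server (BadRequest): error when creating "nihao.yaml": Ingress in version "v1" cannot be handled as a Ingress: strict decoding error: unknown field "spec.rules[0].http.paths[0].backend.serviceName", unknown field "spec.rules[0].http.paths[0].backend.servicePort"
```

The networking.k8s.io/v1beta1 Ingress API was removed in Kubernetes 1.22, and networking.k8s.io/v1 no longer accepts the serviceName/servicePort backend fields. Change the manifest to the new format: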

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nihao
spec:
  rules:
  - http:
      paths:
      - path: /nihao
        pathType: Prefix
        backend:
          service:
            name: nihao
            port:
              number: 80
```
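The field mapping between the old and new backend can be written down as a small Go sketch. The struct types below are hypothetical, hand-written stand-ins that only mirror the JSON field names of the Ingress API (the real types live in `k8s.io/api/networking/v1`). `migratePath` assumes a numeric `servicePort`; v1beta1 also allowed a named port, which in v1 would go into `port.name` instead of `port.number`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// legacyBackend mirrors the removed v1beta1 backend fields.
type legacyBackend struct {
	ServiceName string `json:"serviceName"`
	ServicePort int32  `json:"servicePort"` // assumption: numeric port only
}

// The v1 backend nests the Service name and port.
type serviceBackendPort struct {
	Number int32 `json:"number"`
}

type ingressServiceBackend struct {
	Name string             `json:"name"`
	Port serviceBackendPort `json:"port"`
}

type ingressBackend struct {
	Service ingressServiceBackend `json:"service"`
}

// httpIngressPath is one v1 path entry; pathType is required in v1.
type httpIngressPath struct {
	Path     string         `json:"path"`
	PathType string         `json:"pathType"`
	Backend  ingressBackend `json:"backend"`
}

// migratePath converts a v1beta1 path and backend into the v1 shape,
// choosing pathType Prefix as the corrected manifest does.
func migratePath(path string, old legacyBackend) httpIngressPath {
	return httpIngressPath{
		Path:     path,
		PathType: "Prefix",
		Backend: ingressBackend{Service: ingressServiceBackend{
			Name: old.ServiceName,
			Port: serviceBackendPort{Number: old.ServicePort},
		}},
	}
}

func main() {
	p := migratePath("/nihao", legacyBackend{ServiceName: "nihao", ServicePort: 80})
	out, err := json.Marshal(p)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
	// {"path":"/nihao","pathType":"Prefix","backend":{"service":{"name":"nihao","port":{"number":80}}}}
}
```

`Prefix` is a reasonable default for paths like `/nihao`; use `Exact` if only that precise path should match.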