读写文本格式的数据

import pandas as pd

import numpy as np

from pandas import Series,DataFrame

!type "E:\python_study_files\python\pydata-book-2nd-edition\examples\ex1.csv"

a,b,c,d,message

1,2,3,4,hello

5,6,7,8,world

9,10,11,12,foo

df = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex1.csv")

df = pd.read_table("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex1.csv",sep=',')

pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex2.csv",header=None)

|

0 |

1 |

2 |

3 |

4 |

| 0 |

1 |

2 |

3 |

4 |

hello |

| 1 |

5 |

6 |

7 |

8 |

world |

| 2 |

9 |

10 |

11 |

12 |

foo |

pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex2.csv",names=['a','b','c','d','message'])

|

a |

b |

c |

d |

message |

| 0 |

1 |

2 |

3 |

4 |

hello |

| 1 |

5 |

6 |

7 |

8 |

world |

| 2 |

9 |

10 |

11 |

12 |

foo |

names=['a','b','c','d','message']

pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex2.csv",names=names,index_col='message')

|

a |

b |

c |

d |

| message |

|

|

|

|

| hello |

1 |

2 |

3 |

4 |

| world |

5 |

6 |

7 |

8 |

| foo |

9 |

10 |

11 |

12 |

parsed = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\csv_mindex.csv",index_col=['key1','key2'])

parsed

|

|

value1 |

value2 |

| key1 |

key2 |

|

|

| one |

a |

1 |

2 |

| b |

3 |

4 |

| c |

5 |

6 |

| d |

7 |

8 |

| two |

a |

9 |

10 |

| b |

11 |

12 |

| c |

13 |

14 |

| d |

15 |

16 |

list(open("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex3.txt"))

[' A B C\n',

'aaa -0.264438 -1.026059 -0.619500\n',

'bbb 0.927272 0.302904 -0.032399\n',

'ccc -0.264273 -0.386314 -0.217601\n',

'ddd -0.871858 -0.348382 1.100491\n']

result = pd.read_table("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex3.txt",sep='\s+')

result

|

A |

B |

C |

| aaa |

-0.264438 |

-1.026059 |

-0.619500 |

| bbb |

0.927272 |

0.302904 |

-0.032399 |

| ccc |

-0.264273 |

-0.386314 |

-0.217601 |

| ddd |

-0.871858 |

-0.348382 |

1.100491 |

pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex4.csv",skiprows=[0,2,3])

|

a |

b |

c |

d |

message |

| 0 |

1 |

2 |

3 |

4 |

hello |

| 1 |

5 |

6 |

7 |

8 |

world |

| 2 |

9 |

10 |

11 |

12 |

foo |

result = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex5.csv")

result

|

something |

a |

b |

c |

d |

message |

| 0 |

one |

1 |

2 |

3.0 |

4 |

NaN |

| 1 |

two |

5 |

6 |

NaN |

8 |

world |

| 2 |

three |

9 |

10 |

11.0 |

12 |

foo |

pd.isnull(result)

|

something |

a |

b |

c |

d |

message |

| 0 |

False |

False |

False |

False |

False |

True |

| 1 |

False |

False |

False |

True |

False |

False |

| 2 |

False |

False |

False |

False |

False |

False |

pandas会用一组经常出现的标记值进行识别。如NA、-1.#IND以及NULL和空字符串等

result = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex5.csv",na_values=['NULL'])

pd.isnull(result)

|

something |

a |

b |

c |

d |

message |

| 0 |

False |

False |

False |

False |

False |

True |

| 1 |

False |

False |

False |

True |

False |

True |

| 2 |

False |

False |

False |

False |

False |

False |

sentinels = {'message':['foo','NA'],'something':['two']}

pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex5.csv",na_values=sentinels)

|

something |

a |

b |

c |

d |

message |

| 0 |

one |

1 |

2 |

3.0 |

4 |

NaN |

| 1 |

NaN |

5 |

6 |

NaN |

8 |

world |

| 2 |

three |

9 |

10 |

11.0 |

12 |

NaN |

import matplotlib.pyplot as plt

from pylab import *

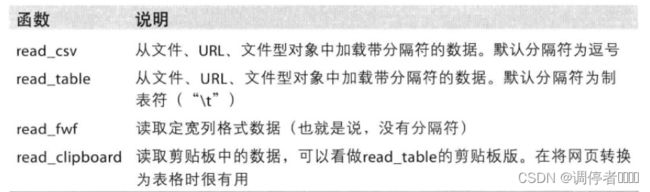

img = plt.imread('read_csv或read_table函数的参数.png')

imshow(img)

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-etaY4igA-1645969826326)(output_17_1.png)]

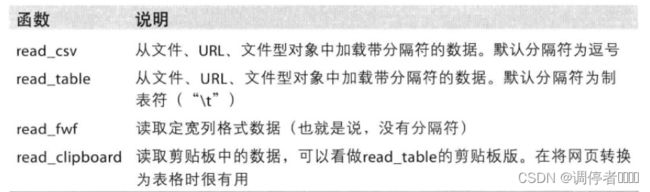

img = plt.imread('read_csv或read_table函数的参数2.png')

imshow(img)

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-WniQIXjq-1645969826327)(output_18_1.png)]

逐块读取文本文件

result = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex6.csv")

pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex6.csv",nrows=5)

|

one |

two |

three |

four |

key |

| 0 |

0.467976 |

-0.038649 |

-0.295344 |

-1.824726 |

L |

| 1 |

-0.358893 |

1.404453 |

0.704965 |

-0.200638 |

B |

| 2 |

-0.501840 |

0.659254 |

-0.421691 |

-0.057688 |

G |

| 3 |

0.204886 |

1.074134 |

1.388361 |

-0.982404 |

R |

| 4 |

0.354628 |

-0.133116 |

0.283763 |

-0.837063 |

Q |

chunker = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex6.csv",chunksize=1000)

chunker

tot = Series([])

for piece in chunker:

tot = tot.add(piece['key'].value_counts(),fill_value=0)

tot = tot.sort_values(ascending=False)

tot[:10]

E 368.0

X 364.0

L 346.0

O 343.0

Q 340.0

M 338.0

J 337.0

F 335.0

K 334.0

H 330.0

dtype: float64

get_chunk方法可以读取任意大小的块

将数据写出到文本格式

data = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex5.csv")

data.to_csv("E:\python_study_files\ipython_data_analysis\out.csv")

import sys

data.to_csv(sys.stdout,sep='|')

|something|a|b|c|d|message

0|one|1|2|3.0|4|

1|two|5|6||8|world

2|three|9|10|11.0|12|foo

data.to_csv(sys.stdout,na_rep='NULL')

,something,a,b,c,d,message

0,one,1,2,3.0,4,NULL

1,two,5,6,NULL,8,world

2,three,9,10,11.0,12,foo

data.to_csv(sys.stdout,index=False,header=False)

one,1,2,3.0,4,

two,5,6,,8,world

three,9,10,11.0,12,foo

data.to_csv(sys.stdout,index=False,columns=['a','b','c'])

a,b,c

1,2,3.0

5,6,

9,10,11.0

9,10,11.0

dates = pd.date_range('1/1/2000',periods=7)

ts = Series(np.arange(7),index=dates)

ts.to_csv("E:\\python_study_files\\ipython_data_analysis\\tseries.csv")

!type "E:\python_study_files\ipython_data_analysis\tseries.csv"

,0

2000-01-01,0

2000-01-02,1

2000-01-03,2

2000-01-04,3

2000-01-05,4

2000-01-06,5

2000-01-07,6

dates

DatetimeIndex(['2000-01-01', '2000-01-02', '2000-01-03', '2000-01-04',

'2000-01-05', '2000-01-06', '2000-01-07'],

dtype='datetime64[ns]', freq='D')

pd.read_csv("E:\\python_study_files\\ipython_data_analysis\\tseries.csv",parse_dates=True)

|

Unnamed: 0 |

0 |

| 0 |

2000-01-01 |

0 |

| 1 |

2000-01-02 |

1 |

| 2 |

2000-01-03 |

2 |

| 3 |

2000-01-04 |

3 |

| 4 |

2000-01-05 |

4 |

| 5 |

2000-01-06 |

5 |

| 6 |

2000-01-07 |

6 |

手工处理分隔符格式

!type "E:\python_study_files\python\pydata-book-2nd-edition\examples\ex7.csv"

"a","b","c"

"1","2","3"

"1","2","3"

import csv

f = open("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex7.csv")

reader = csv.reader(f)

reader

<_csv.reader at 0x1c28677b160>

for line in reader:

print(line)

['a', 'b', 'c']

['1', '2', '3']

['1', '2', '3']

lines = list(csv.reader(open("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex7.csv")))

header,values = lines[0],lines[1:]

data_dict = {h: v for h, v in zip(header,zip(*values))}

data_dict

{'a': ('1', '1'), 'b': ('2', '2'), 'c': ('3', '3')}

zip(*values)

with open('mydata.csv','w') as f:

writer = csv.writer(f,dialect=my_dialect)

writer.writerow(('one','two','three'))

writer.writerow(('1','2','3'))

---------------------------------------------------

NameError: name 'my_dialect' is not defined

JSON数据

obj = """

{"name":"Wes",

"places_lived":["United States","Spain","Germany"],

"pet":null,

"siblings":[{"name":"Scott","age":25,"pet":"Zuko"},

{"name":"Katie","age":33,"pet":"Cisco"}]

}

"""

import json

result = json.loads(obj)

result

{'name': 'Wes',

'places_lived': ['United States', 'Spain', 'Germany'],

'pet': None,

'siblings': [{'name': 'Scott', 'age': 25, 'pet': 'Zuko'},

{'name': 'Katie', 'age': 33, 'pet': 'Cisco'}]}

asjson = json.dumps(result)

siblings = DataFrame(result['siblings'],columns=['name','age'])

siblings

|

name |

age |

| 0 |

Scott |

25 |

| 1 |

Katie |

33 |

XML和HTML:Web信息收集

from lxml.html import parse

import urllib.request

parsed = parse(urllib.request.urlopen('http://www.stats.gov.cn/tjsj/ndsj/2021/indexch.htm'))

doc = parsed.getroot()

links = doc.findall('.//a')

links

[]

lnk = links[28]

lnk.get('href')

lnk.text_content()

urls = [lnk.get('href') for lnk in doc.findall('.//a')]

from pandas.io.parsers import TextParser

from lxml import objectify

path = 'Performance_MNR.xml'

parsed = objectify.parse(open(path))

root = parsed.getroot()

data = []

skip_fields = ['PARENT_SEQ','INDICATOR_SEQ','DESIRED_CHANGE','DECIMAL_PLACES']

for elt in root.INDICATOR:

el_data = {}

for child in elt.getchildren():

if child.tag in skip_fields:

continue

el_data[child.tag] = child.pyval

data.append(el_data)

perf = DataFrame(data)

perf

|

AGENCY_NAME |

INDICATOR_NAME |

DESCRIPTION |

PERIOD_YEAR |

PERIOD_MONTH |

CATEGORY |

FREQUENCY |

INDICATOR_UNIT |

YTD_TARGET |

YTD_ACTUAL |

MONTHLY_TARGET |

MONTHLY_ACTUAL |

| 0 |

Metro-North Railroad |

On-Time Performance (West of Hudson) |

Percent of commuter trains that arrive at thei... |

2008 |

1 |

Service Indicators |

M |

% |

95.0 |

96.9 |

95.0 |

96.9 |

| 1 |

Metro-North Railroad |

On-Time Performance (West of Hudson) |

Percent of commuter trains that arrive at thei... |

2008 |

2 |

Service Indicators |

M |

% |

95.0 |

96.0 |

95.0 |

95.0 |

| 2 |

Metro-North Railroad |

On-Time Performance (West of Hudson) |

Percent of commuter trains that arrive at thei... |

2008 |

3 |

Service Indicators |

M |

% |

95.0 |

96.3 |

95.0 |

96.9 |

| 3 |

Metro-North Railroad |

On-Time Performance (West of Hudson) |

Percent of commuter trains that arrive at thei... |

2008 |

4 |

Service Indicators |

M |

% |

95.0 |

96.8 |

95.0 |

98.3 |

| 4 |

Metro-North Railroad |

On-Time Performance (West of Hudson) |

Percent of commuter trains that arrive at thei... |

2008 |

5 |

Service Indicators |

M |

% |

95.0 |

96.6 |

95.0 |

95.8 |

| ... |

... |

... |

... |

... |

... |

... |

... |

... |

... |

... |

... |

... |

| 643 |

Metro-North Railroad |

Escalator Availability |

Percent of the time that escalators are operat... |

2011 |

8 |

Service Indicators |

M |

% |

97.0 |

|

97.0 |

|

| 644 |

Metro-North Railroad |

Escalator Availability |

Percent of the time that escalators are operat... |

2011 |

9 |

Service Indicators |

M |

% |

97.0 |

|

97.0 |

|

| 645 |

Metro-North Railroad |

Escalator Availability |

Percent of the time that escalators are operat... |

2011 |

10 |

Service Indicators |

M |

% |

97.0 |

|

97.0 |

|

| 646 |

Metro-North Railroad |

Escalator Availability |

Percent of the time that escalators are operat... |

2011 |

11 |

Service Indicators |

M |

% |

97.0 |

|

97.0 |

|

| 647 |

Metro-North Railroad |

Escalator Availability |

Percent of the time that escalators are operat... |

2011 |

12 |

Service Indicators |

M |

% |

97.0 |

|

97.0 |

|

648 rows × 12 columns

二进制数据格式

pickle最好只用于短期存储,难以保证该格式永远稳定。

frame = pd.read_csv("E:\python_study_files\python\pydata-book-2nd-edition\examples\ex1.csv")

frame

|

a |

b |

c |

d |

message |

| 0 |

1 |

2 |

3 |

4 |

hello |

| 1 |

5 |

6 |

7 |

8 |

world |

| 2 |

9 |

10 |

11 |

12 |

foo |

frame.to_pickle('frame_pickle')

pd.read_pickle('frame_pickle')

|

a |

b |

c |

d |

message |

| 0 |

1 |

2 |

3 |

4 |

hello |

| 1 |

5 |

6 |

7 |

8 |

world |

| 2 |

9 |

10 |

11 |

12 |

foo |

使用HDF5格式

如果需要处理海量数据,最好看看PyTables和h5py,因为很多数据分析问题都是IO密集型(不是CPU密集型)。但HDF5适合用作“一次写多次读”的数据集。

store = pd.HDFStore('mydata.h5')

store['obj1'] = frame

store['obj1_col'] = frame['a']

store

---------------------------------------------------------------------------

ImportError: Missing optional dependency 'tables'. Use pip or conda to install tables.

读取Microsoft Excel文件

import openpyxl

xls_file = pd.ExcelFile('data.xlsx')

table = xls_file.parse('Sheet1')

使用HTML和Web API

import requests

url = 'http://www.json.org.cn/resource/json-in-javascript.htm'

resp = requests.get(url)

resp

import json

data = json.loads(resp.text)

data.keys()

---------------------------------------------------------------------------

JSONDecodeError: Expecting value: line 1 column 1 (char 0)

使用数据库

import sqlite3

query = """

CREATE TABLE test

(a VARCHAR(20),b VARCHAR(20),

c REAL, d INTEGER

);"""

con = sqlite3.connect(':memory:')

con.execute(query)

con.commit()

data = [('Atlanta','Georgia',1.25,6),

('Tallahassee','Florida',2.6,3),

('Sacramento','California',1.7,5)]

stmt = "INSERT INTO test VALUES(?,?,?,?)"

con.executemany(stmt,data)

con.commit()

cursor = con.execute('select * from test')

rows = cursor.fetchall()

rows

[('Atlanta', 'Georgia', 1.25, 6),

('Tallahassee', 'Florida', 2.6, 3),

('Sacramento', 'California', 1.7, 5)]

cursor.description

(('a', None, None, None, None, None, None),

('b', None, None, None, None, None, None),

('c', None, None, None, None, None, None),

('d', None, None, None, None, None, None))

DataFrame(rows,columns=zip(*cursor.description)[0])

---------------------------------------------------------------------------

TypeError: 'zip' object is not subscriptable

存取MongoDB中的数据

from pymongo import MongoClient

con = MongoClient('localhost',port=27017)