tensorrt deeplabv3+ 部署踩坑

deeplabv3+模型转onnx模型

一开始我用的paddlepaddle的实现,转换成onnx模型再转trt模型推理需要2s左右

后面我用得pytorch的deeplabv3+实现

https://github.com/yassouali/pytorch-segmentation

转换成onnx模型再转trt模型推理也在2s左右

后面参考tensorrtx项目和一个识别指针的项目使用c++手写deeplabv3+网络,然后再用gen_wrt.py把权重导出来再手动塞到c++手写的模型中,发现也是2s左右。

最后使用trt的打印每层执行时间发现在下采样部分特别慢,后面我把python里的和c++里的上采样使用近邻上采样替换掉双线性下采样后就快了,大概在20ms左右。

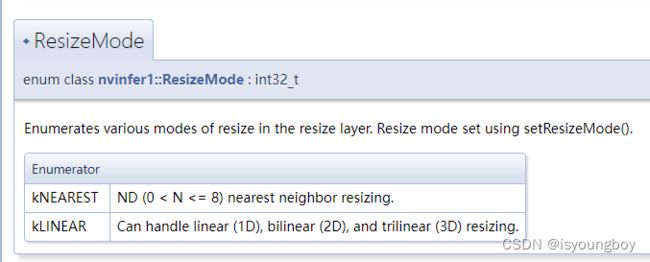

一开始这个应该是trt不支持双线性下采样,转过来的onnx模型使用卷积操作代替上采样所以很慢,网上百度,trt实现双线性下采样需要自己写插件,后面查看文档发现kLINEAR有写支持bilinear,但是我没找到api指定bilinear。

trt文档 https://docs.nvidia.com/deeplearning/tensorrt/api/c_api/classnvinfer1_1_1_i_profiler.html

双线性上采样插件代码参考

/*

* Copyright (c) 2018, NVIDIA CORPORATION. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person obtaining a

* copy of this software and associated documentation files (the "Software"),

* to deal in the Software without restriction, including without limitation

* the rights to use, copy, modify, merge, publish, distribute, sublicense,

* and/or sell copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following conditions:

*

* The above copyright notice and this permission notice shall be included in

* all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

* IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

* FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL

* THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

* LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

* DEALINGS IN THE SOFTWARE.

*/

#include "ResizeBilinear.hpp"

#include

#include

// TODO: Move this to a common header

inline bool is_CHW(nvinfer1::Dims const& dims) {

return (dims.nbDims == 3 &&

dims.type[0] == nvinfer1::DimensionType::kCHANNEL &&

dims.type[1] == nvinfer1::DimensionType::kSPATIAL &&

dims.type[2] == nvinfer1::DimensionType::kSPATIAL);

}

nvinfer1::Dims ResizeBilinearPlugin::getOutputDimensions(int index,

const nvinfer1::Dims *inputDims,

int nbInputs) {

assert(nbInputs == 1);

nvinfer1::Dims const& input = inputDims[0];

assert(is_CHW(input));

assert(_ndims == 2);

assert(index == 0);

nvinfer1::Dims output;

output.nbDims = input.nbDims;

int s = 0;

for( int d=0; dgetOutputDimensions(0, &this->getInputDims(0), 1);

assert(is_CHW(this->getInputDims(0)));

assert(is_CHW(_output_dims));

assert(_ndims == 2);

return 0;

}

template

__global__

void resize_bilinear_kernel_2d(int nbatch,

float2 scale,

int2 isize,

int2 osize,

Data const* idata, int istride, int ibatchstride,

Data* odata, int ostride, int obatchstride) {

int x0 = threadIdx.x + blockIdx.x * blockDim.x;

int y0 = threadIdx.y + blockIdx.y * blockDim.y;

int z0 = blockIdx.z;

for( int batch=z0; batchgetInputDims(0);

int nchan = input_dims.d[0];

switch( _ndims ) {

case 2: {

float2 scale = {_scale[1], _scale[0]};

int2 isize = {input_dims.d[2], input_dims.d[1]};

int2 osize = {_output_dims.d[2], _output_dims.d[1]};

int istride = input_dims.d[2];

int ostride = _output_dims.d[2];

int ibatchstride = input_dims.d[1] * istride;

int obatchstride = _output_dims.d[1] * ostride;

dim3 block(16, 16);

dim3 grid((osize.x - 1) / block.x + 1,

(osize.y - 1) / block.y + 1,

std::min(batchSize * nchan, 65535));

if (getDataType()==nvinfer1::DataType::kFLOAT) {

resize_bilinear_kernel_2d<<>>

(batchSize * nchan, scale, isize, osize,

static_cast( inputs[0]), istride, ibatchstride,

static_cast(outputs[0]), ostride, obatchstride);

} else {

resize_bilinear_kernel_2d<<>>

(batchSize * nchan, scale, isize, osize,

static_cast<__half const*>( inputs[0]), istride, ibatchstride,

static_cast<__half* >(outputs[0]), ostride, obatchstride);

}

return cudaGetLastError() != cudaSuccess;

}

default: return -1;

}

}

https://github.com/chenjun2hao/onnx-tensorrt/commit/b5f9e9219cbc21ab1262018f42f12d8319217500