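SLAM Lecture 11 Practice: [Loop Closure Detection] Installing DBoW3, building a vocabulary from ORB features, checking loop-closure similarity, and repeating the detection with a larger vocabulary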

2022.10.19 bionic

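Contents

- 1 Installing DBoW3
- 2 Building a vocabulary from ORB features
  - 2.1 Modify CMakeLists.txt
  - 2.2 Modify the dataset path in feature_training.cpp
  - 2.3 Output
- 3 Computing similarity
  - 3.1 Modify CMakeLists.txt
  - 3.2 Modify the dataset path in loop_closure.cpp
  - 3.3 Output
- 4 Increasing the vocabulary size
  - 4.1 Modify CMakeLists.txt
  - 4.2 Get the extended dataset
  - 4.3 Modify gen_vocab_large.cpp
  - 4.4 Output
  - 4.5 Use the larger vocabulary for loop closure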

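1 Installing DBoW3

First, get the installation package. In my experience, only the package shipped with the first edition of Gao Xiang's gaoxiang12/slambook works.

Link: link

Extraction code: 8ct5

After extracting the archive, the usual sequence of commands completes the installation: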

cd DBow3/

mkdir build

cd build/

cmake ..

make -j4

sudo make install

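2 Building a vocabulary from ORB features

Back in slambook2, build a vocabulary from the data that is already provided.

The chapter folder contains a dataset of ten images, and this step uses feature_training.cpp.

2.1 Modify CMakeLists.txt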

cmake_minimum_required( VERSION 2.8 )

project( loop_closure )

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++14 -O3" )

# opencv

find_package( OpenCV 3.1 REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

# dbow3

# dbow3 is a simple lib so I assume you installed it in default directory

set( DBoW3_INCLUDE_DIRS "/usr/local/include" )

set( DBoW3_LIBS "/usr/local/lib/libDBoW3.a" )

add_executable( feature_training src/feature_training.cpp )

target_link_libraries( feature_training ${OpenCV_LIBS} ${DBoW3_LIBS} )

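2.2 Modify the dataset path in feature_training.cpp

Be sure to change the path here. Otherwise the images are not read, the vocabulary cannot be built, and the reported number of words is 0.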

int main( int argc, char** argv ) {

// read the image

cout<<"reading images... "<<endl;

vector<Mat> images;

for ( int i=0; i<10; i++ )

{

// change this path to where the dataset lives on your machine

string path = "/home/zhangyuanbo/Slam14_2/ch11/data/"+to_string(i+1)+".png";

images.push_back( imread(path) );

}

// detect ORB features

cout<<"detecting ORB features ... "<<endl;

Ptr< Feature2D > detector = ORB::create();

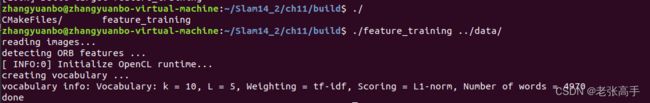
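Since a wrong path silently produces an empty vocabulary, it can help to check the image files before training. The following is a minimal sketch using only the C++ standard library; `imagePath` and `findMissingImages` are hypothetical helpers I introduce for illustration, not part of slambook2 or DBoW3, and they assume the `<dir>/<i>.png` naming used in the code above.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Build the path of the i-th image (1-based), following the "<dir>/<i>.png"
// naming used by the ten-image dataset. Hypothetical helper.
std::string imagePath(const std::string& dir, int index) {
    return dir + "/" + std::to_string(index) + ".png";
}

// Return every expected image path that cannot be opened for reading.
// An empty result means all `count` images were found.
std::vector<std::string> findMissingImages(const std::string& dir, int count) {
    std::vector<std::string> missing;
    for (int i = 1; i <= count; ++i) {
        const std::string path = imagePath(dir, i);
        std::ifstream file(path, std::ios::binary);
        if (!file.is_open()) {
            missing.push_back(path);
        }
    }
    return missing;
}
```

Calling `findMissingImages` with your data directory and 10 at the start of `main`, and printing anything it returns, makes a bad path obvious before any features are extracted.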

2.3 输出

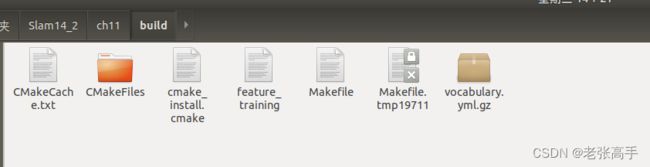

组合拳后

会生成一个vocabulary.yml.gz文件,后面会有用,如下图所示

使用如下代码生成字典

./feature_training ../data/

可以看到:分支数量k为10,深度L为5,单词数量为4970,没有达到最大容量。Weighting是权重,Scoring是评分
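To make the "maximum capacity" concrete: a k-ary tree of depth L has at most k^L leaves, and each leaf is one word. Below is a small sketch of that arithmetic in standard C++; the function names are my own and are not DBoW3 calls.

```cpp
#include <cstdint>

// Maximum number of words (leaf nodes) in a k-ary vocabulary tree of the
// given depth: k multiplied by itself `depth` times.
std::uint64_t vocabularyCapacity(std::uint64_t k, unsigned depth) {
    std::uint64_t capacity = 1;
    for (unsigned i = 0; i < depth; ++i) {
        capacity *= k;
    }
    return capacity;
}

// Fraction of the capacity that a vocabulary with `words` words actually uses.
double fillRatio(std::uint64_t words, std::uint64_t k, unsigned depth) {
    return static_cast<double>(words) /
           static_cast<double>(vocabularyCapacity(k, depth));
}
```

With k = 10 and L = 5 the capacity is 100,000, so the 4970 words trained from ten images fill only about 5% of the tree.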

3 Computing similarity

3.1 Modify CMakeLists.txt

cmake_minimum_required( VERSION 2.8 )

project( loop_closure )

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++14 -O3" )

# opencv

find_package( OpenCV 3.1 REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

# dbow3

# dbow3 is a simple lib so I assume you installed it in default directory

set( DBoW3_INCLUDE_DIRS "/usr/local/include" )

set( DBoW3_LIBS "/usr/local/lib/libDBoW3.a" )

add_executable( loop_closure loop_closure.cpp )

target_link_libraries( loop_closure ${OpenCV_LIBS} ${DBoW3_LIBS} )

3.2 Modify the dataset path in loop_closure.cpp

Change the path the same way as before. Otherwise the dataset cannot be read.

int main(int argc, char **argv) {

// read the images and database

cout << "reading database" << endl;

DBoW3::Vocabulary vocab("./vocabulary.yml.gz");

// DBoW3::Vocabulary vocab("./vocab_larger.yml.gz"); // use large vocab if you want:

if (vocab.empty()) {

cerr << "Vocabulary does not exist." << endl;

return 1;

}

cout << "reading images... " << endl;

vector<Mat> images;

for (int i = 0; i < 10; i++) {

string path = "/home/zhangyuanbo/Slam14_2/ch11/data/" + to_string(i + 1) + ".png";

images.push_back(imread(path));

}

3.3 Output

./loop_closure

zhangyuanbo@zhangyuanbo-virtual-machine:~/Slam14_2/ch11/build$ ./loop_closure

reading database

reading images...

detecting ORB features ...

[ INFO:0] Initialize OpenCL runtime...

comparing images with images

image 0 vs image 0 : 1

image 0 vs image 1 : 0.0238961

image 0 vs image 2 : 0.0198893

image 0 vs image 3 : 0.0339413

image 0 vs image 4 : 0.0160477

image 0 vs image 5 : 0.0300543

image 0 vs image 6 : 0.0222911

image 0 vs image 7 : 0.0197256

image 0 vs image 8 : 0.0228385

image 0 vs image 9 : 0.0583173

image 1 vs image 1 : 1

image 1 vs image 2 : 0.0422068

image 1 vs image 3 : 0.0282324

image 1 vs image 4 : 0.0225322

image 1 vs image 5 : 0.025146

image 1 vs image 6 : 0.0161122

image 1 vs image 7 : 0.0166048

image 1 vs image 8 : 0.0350047

image 1 vs image 9 : 0.0326933

image 2 vs image 2 : 1

image 2 vs image 3 : 0.0361374

image 2 vs image 4 : 0.0330401

image 2 vs image 5 : 0.0189786

image 2 vs image 6 : 0.0182909

image 2 vs image 7 : 0.0137075

image 2 vs image 8 : 0.0270761

image 2 vs image 9 : 0.0219518

image 3 vs image 3 : 1

image 3 vs image 4 : 0.0304209

image 3 vs image 5 : 0.03611

image 3 vs image 6 : 0.0205856

image 3 vs image 7 : 0.0208058

image 3 vs image 8 : 0.0312382

image 3 vs image 9 : 0.0329747

image 4 vs image 4 : 1

image 4 vs image 5 : 0.0498645

image 4 vs image 6 : 0.0345081

image 4 vs image 7 : 0.0227451

image 4 vs image 8 : 0.0208472

image 4 vs image 9 : 0.0266803

image 5 vs image 5 : 1

image 5 vs image 6 : 0.0198106

image 5 vs image 7 : 0.0162269

image 5 vs image 8 : 0.0259153

image 5 vs image 9 : 0.0220667

image 6 vs image 6 : 1

image 6 vs image 7 : 0.0212959

image 6 vs image 8 : 0.0188494

image 6 vs image 9 : 0.0208606

image 7 vs image 7 : 1

image 7 vs image 8 : 0.012798

image 7 vs image 9 : 0.0205016

image 8 vs image 8 : 1

image 8 vs image 9 : 0.0228186

image 9 vs image 9 : 1

comparing images with database

database info: Database: Entries = 10, Using direct index = no. Vocabulary: k = 10, L = 5, Weighting = tf-idf, Scoring = L1-norm, Number of words = 4970

searching for image 0 returns 4 results:

<EntryId: 0, Score: 1>

<EntryId: 9, Score: 0.0583173>

<EntryId: 3, Score: 0.0339413>

<EntryId: 5, Score: 0.0300543>

searching for image 1 returns 4 results:

<EntryId: 1, Score: 1>

<EntryId: 2, Score: 0.0422068>

<EntryId: 8, Score: 0.0350047>

<EntryId: 9, Score: 0.0326933>

searching for image 2 returns 4 results:

<EntryId: 2, Score: 1>

<EntryId: 1, Score: 0.0422068>

<EntryId: 3, Score: 0.0361374>

<EntryId: 4, Score: 0.0330401>

searching for image 3 returns 4 results:

<EntryId: 3, Score: 1>

<EntryId: 2, Score: 0.0361374>

<EntryId: 5, Score: 0.03611>

<EntryId: 0, Score: 0.0339413>

searching for image 4 returns 4 results:

<EntryId: 4, Score: 1>

<EntryId: 5, Score: 0.0498645>

<EntryId: 6, Score: 0.0345081>

<EntryId: 2, Score: 0.0330401>

searching for image 5 returns 4 results:

<EntryId: 5, Score: 1>

<EntryId: 4, Score: 0.0498645>

<EntryId: 3, Score: 0.03611>

<EntryId: 0, Score: 0.0300543>

searching for image 6 returns 4 results:

<EntryId: 6, Score: 1>

<EntryId: 4, Score: 0.0345081>

<EntryId: 0, Score: 0.0222911>

<EntryId: 7, Score: 0.0212959>

searching for image 7 returns 4 results:

<EntryId: 7, Score: 1>

<EntryId: 4, Score: 0.0227451>

<EntryId: 6, Score: 0.0212959>

<EntryId: 3, Score: 0.0208058>

searching for image 8 returns 4 results:

<EntryId: 8, Score: 1>

<EntryId: 1, Score: 0.0350047>

<EntryId: 3, Score: 0.0312382>

<EntryId: 2, Score: 0.0270761>

searching for image 9 returns 4 results:

<EntryId: 9, Score: 1>

<EntryId: 0, Score: 0.0583173>

<EntryId: 3, Score: 0.0329747>

<EntryId: 1, Score: 0.0326933>

done.
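The database query above returns the four best-scoring entries for each image. The ranking step can be sketched in plain C++ as follows; `topResults` is a hypothetical helper written for illustration, not DBoW3's own query code, and the scores in the usage note are the "image 0 vs image i" values from the log.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// Given one similarity score per database entry, return up to `maxResults`
// (entry id, score) pairs ordered from highest to lowest score.
std::vector<std::pair<int, double>> topResults(const std::vector<double>& scores,
                                               std::size_t maxResults) {
    std::vector<std::pair<int, double>> ranked;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        ranked.emplace_back(static_cast<int>(i), scores[i]);
    }
    const std::size_t n = std::min(maxResults, ranked.size());
    // Only the first n positions need to be sorted.
    std::partial_sort(ranked.begin(),
                      ranked.begin() + static_cast<std::ptrdiff_t>(n),
                      ranked.end(),
                      [](const std::pair<int, double>& a,
                         const std::pair<int, double>& b) {
                          return a.second > b.second;
                      });
    ranked.resize(n);
    return ranked;
}
```

Feeding it the ten scores of image 0 with `maxResults = 4` gives entries 0, 9, 3 and 5, the same order as the "searching for image 0" block in the log.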

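The output shows how much the score of a similar pair differs from that of dissimilar pairs. Images 1 and 10 (indices 0 and 9 in C++), which are clearly similar, score about 0.058, while most other pairs score around 0.02 to 0.03; the next highest pair, images 4 and 5, scores about 0.050.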
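The database info line reports "Scoring = L1-norm". As far as I understand it, this kind of score compares two L1-normalized bag-of-words vectors as s = 1 - 0.5 * |v/|v| - w/|w||, which yields 1 for identical images and 0 for images sharing no words. Here is a sketch of that formula in standard C++; the `BowVector` alias and both functions are my own illustration and should not be read as the library's implementation.

```cpp
#include <cmath>
#include <map>

// Sparse bag-of-words vector: word id -> weight (for example a tf-idf weight).
using BowVector = std::map<int, double>;

// Sum of absolute weights.
double l1Norm(const BowVector& v) {
    double sum = 0.0;
    for (const auto& kv : v) {
        sum += std::fabs(kv.second);
    }
    return sum;
}

// L1 similarity score in [0, 1] between two bag-of-words vectors.
double l1Score(const BowVector& a, const BowVector& b) {
    const double na = l1Norm(a);
    const double nb = l1Norm(b);
    if (na == 0.0 || nb == 0.0) {
        return 0.0;  // an empty vector shares nothing with anything
    }
    double distance = 0.0;
    // Words present in a (and possibly in b).
    for (const auto& kv : a) {
        const auto it = b.find(kv.first);
        const double other = (it == b.end()) ? 0.0 : it->second / nb;
        distance += std::fabs(kv.second / na - other);
    }
    // Words present only in b.
    for (const auto& kv : b) {
        if (a.find(kv.first) == a.end()) {
            distance += std::fabs(kv.second / nb);
        }
    }
    return 1.0 - 0.5 * distance;
}
```

This explains why every "image i vs image i" line prints exactly 1, and why scores between different images are small when only a few words overlap.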

4 Increasing the vocabulary size

4.1 Modify CMakeLists.txt

cmake_minimum_required( VERSION 2.8 )

project( loop_closure )

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++14 -O3" )

# opencv

find_package( OpenCV 3.1 REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

# dbow3

# dbow3 is a simple lib so I assume you installed it in default directory

set( DBoW3_INCLUDE_DIRS "/usr/local/include" )

set( DBoW3_LIBS "/usr/local/lib/libDBoW3.a" )

add_executable( gen_vocab gen_vocab_large.cpp )

target_link_libraries( gen_vocab ${OpenCV_LIBS} ${DBoW3_LIBS} )

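4.2 Get the extended dataset

rgbd_dataset_freiburg1_desk2

Here is my Baidu Netdisk link:

Link: link

Extraction code: fdje

4.3 Modify gen_vocab_large.cpp

Remember to change the path to the newly added dataset.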

#include

// return 1;

// }

// vector<string> rgb_files, depth_files;

// vector<double> rgb_times, depth_times;

// while ( !fin.eof() )

// {

// string rgb_time, rgb_file, depth_time, depth_file;

// fin>>rgb_time>>rgb_file>>depth_time>>depth_file;

// rgb_times.push_back ( atof ( rgb_time.c_str() ) );

// depth_times.push_back ( atof ( depth_time.c_str() ) );

// rgb_files.push_back ( dataset_dir+"/"+rgb_file );

// depth_files.push_back ( dataset_dir+"/"+depth_file );

// if ( fin.good() == false )

// break;

// }

// fin.close();

cout<<"generating features ... "<<endl; // announce that ORB feature extraction is starting

vector<Mat> descriptors; // one descriptor matrix per image

Ptr< Feature2D > detector = ORB::create();

int index = 1;

for ( String path : imagesPath )

{

Mat image = imread(path);

vector<KeyPoint> keypoints; // keypoints of this image

Mat descriptor; // descriptors of this image

detector->detectAndCompute( image, Mat(), keypoints, descriptor );

descriptors.push_back( descriptor );

cout<<"extracting features from image " << index++ <<endl; // report progress for each image

}

cout<<"extract total "<<descriptors.size()*500<<" features."<<endl;

// create vocabulary

cout<<"creating vocabulary, please wait ... "<<endl; // vocabulary training can take a while

DBoW3::Vocabulary vocab;

vocab.create( descriptors );

cout<<"vocabulary info: "<<vocab<<endl;

vocab.save( "vocab_larger.yml.gz" ); // save the vocabulary

cout<<"done"<<endl;

return 0;

}

4.4 Output

./gen_vocab

extract total 320000 features.

creating vocabulary, please wait ...

vocabulary info: Vocabulary: k = 10, L = 5, Weighting = tf-idf, Scoring = L1-norm, Number of words = 89849

done

This also generates the file vocab_larger.yml.gz, a vocabulary trained on 640 images, which can be used in loop_closure.
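Note that the "320000 features" figure is an estimate: the program multiplies the number of images by 500, which as far as I know is the default feature count of OpenCV's `ORB::create()`, so 640 × 500 = 320,000. An image with little texture may yield fewer descriptors. The sketch below contrasts the estimate with an exact count; both functions are hypothetical, and `rowsPerImage` stands in for the `descriptor.rows` value of each image.

```cpp
#include <cstddef>
#include <vector>

// Estimate used by the program: every image is assumed to yield the same
// number of features (500 by default).
std::size_t estimatedFeatureCount(std::size_t imageCount,
                                  std::size_t featuresPerImage = 500) {
    return imageCount * featuresPerImage;
}

// Exact count: sum the number of descriptor rows actually extracted per image.
// Non-positive entries (images with no descriptors) contribute nothing.
std::size_t exactFeatureCount(const std::vector<int>& rowsPerImage) {
    std::size_t total = 0;
    for (int rows : rowsPerImage) {
        if (rows > 0) {
            total += static_cast<std::size_t>(rows);
        }
    }
    return total;
}
```

In gen_vocab_large.cpp the exact variant would amount to accumulating `descriptor.rows` inside the extraction loop instead of multiplying by 500 afterwards.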

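4.5 Use the larger vocabulary for loop closure

In loop_closure.cpp, find this line:

DBoW3::Vocabulary vocab("./vocab_larger.yml.gz");

Uncomment it, and comment out the line that loads vocabulary.yml.gz: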

using namespace cv;

using namespace std;

/***************************************************

* This section demonstrates how to compute similarity scores with the vocabulary trained earlier

* ************************************************/

int main(int argc, char **argv) {

// read the images and database

cout << "reading database" << endl;

//DBoW3::Vocabulary vocab("./vocabulary.yml.gz");

DBoW3::Vocabulary vocab("./vocab_larger.yml.gz"); // use large vocab if you want:

if (vocab.empty()) {

cerr << "Vocabulary does not exist." << endl;

return 1;

}

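Then rebuild with make as in Section 3 and run the loop-closure detection. The dataset is large, so I only ran it on the first 10 images.

That is roughly the whole experiment.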