Selected Research Frontiers and a Literature Review of Deepfakes in AI

- I. Current Research

- II. Representative Algorithms

- III. Open Problems

- IV. Future Research Hotspots

- References

I. Current Research

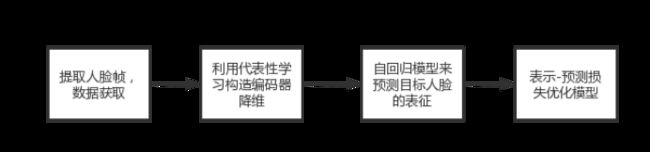

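Because of their potential security threats, deepfakes have attracted research interest from both academia and industry, and many countermeasures have been proposed to mitigate the risk. Existing detection methods perform well on low-visual-quality deepfake media, which can be distinguished by obvious visual artifacts. With the development of deep generative models, however, the realism of deepfake media has improved significantly, which poses a serious challenge to existing detectors. To address the detection of high-visual-quality deepfakes, Hu et al. (2022) propose a frame inference-based detection framework (FInfer). The framework first learns referenced representations of the faces in the current and future frames. It then uses an autoregressive model to predict the facial representations of future frames from those of the current frames. Finally, a representation-prediction loss is designed to maximize the discriminability between real and fake videos. The authors support the framework with an information-theoretic analysis: entropy and mutual information analyses indicate that the correlation between the predicted and referenced representations is higher in real videos than in high-visual-quality deepfake videos. Extensive experiments show that the method is promising in terms of in-dataset detection performance, detection efficiency, and cross-dataset detection performance on high-visual-quality deepfake videos.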

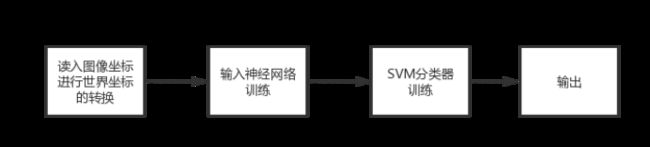

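Thanks to recent advances in machine learning, techniques for manipulating and fabricating images and videos have reached a new level of sophistication. At the forefront of this trend are so-called Deep Fakes, which are produced by inserting faces synthesized with deep neural networks into original images or videos. Together with other forms of misinformation shared through digital social networks, Deep Fakes have become a serious problem with a negative impact on society, so effective methods for exposing them are urgently needed. To date, detection methods have relied on artifacts or inconsistencies intrinsic to the synthesis algorithms, such as the lack of realistic eye blinking and mismatched color profiles. Neural-network-based classifiers have also been used to distinguish real imagery from Deep Fakes directly. Yang, Li, and Lyu (2019) propose a new detection approach based on an intrinsic limitation of the deep neural network face synthesis model, which is the core component of the Deep Fake production pipeline. Specifically, these algorithms create the face of a different person while keeping the facial expression of the original person. However, the two faces have mismatched facial landmarks, that is, the locations on a human face that correspond to important structures such as the tips of the eyes and mouth, because the synthesis algorithm does not guarantee that the original and synthesized faces have consistent landmarks.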

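II. Representative Algorithms

Three algorithms are introduced next: exposing deepfakes using inconsistent head poses, a two-branch recurrent network for isolating deepfakes in videos, and frame inference-based detection of high-visual-quality deepfake videos. We refer to them as algorithms ①, ②, and ③, respectively, and describe each in turn.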

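A deepfake replaces part of the original image with high-quality synthesized content and smooths the boundary region to hide the splice. The head-pose algorithm exploits the fact that this replacement changes the apparent pose of the head: the synthesized face region no longer agrees with the rest of the head, and the resulting inconsistency serves as the detection cue. Experiments on a large number of samples and scenes confirm that the approach is effective [1].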
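The head-pose cue can be illustrated with a minimal sketch. It assumes that two head poses have already been estimated for the same frame as 3x3 rotation matrices, for example one from all facial landmarks and one from the central face region only; the pose estimation itself is outside the sketch. The function names and the 5-degree threshold are hypothetical and chosen only for illustration, and a fixed threshold stands in for whatever classifier a real detector would train.

```python
import math

def matmul_t(a, b):
    """Return a^T @ b for 3x3 matrices given as nested lists."""
    return [[sum(a[k][i] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation_z(deg):
    """Rotation matrix for a rotation of `deg` degrees about the z axis."""
    t = math.radians(deg)
    return [[math.cos(t), -math.sin(t), 0.0],
            [math.sin(t), math.cos(t), 0.0],
            [0.0, 0.0, 1.0]]

def pose_gap_deg(r_whole, r_central):
    """Geodesic angle in degrees between two head-pose rotation matrices."""
    rel = matmul_t(r_whole, r_central)
    trace = rel[0][0] + rel[1][1] + rel[2][2]
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))

def looks_inconsistent(r_whole, r_central, threshold_deg=5.0):
    """Flag a frame whose two pose estimates disagree by more than the threshold."""
    return pose_gap_deg(r_whole, r_central) > threshold_deg

# Toy poses: the two estimates differ by a rotation about the z axis.
whole = rotation_z(12.0)
central = rotation_z(2.0)
print(round(pose_gap_deg(whole, central), 3), looks_inconsistent(whole, central))
```

In a real video the two estimates of a genuine face should agree closely, so a large gap is evidence of splicing rather than proof of it.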

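The two-branch recurrent network for video deepfake detection works as follows. One branch propagates the original information, while the other suppresses the facial content and instead amplifies multi-band frequencies, using a Laplacian of Gaussian (LoG) as a bottleneck layer. To better isolate manipulated faces, the authors derive a new cost function that, unlike regular classification, compresses the variability of natural faces and pushes unrealistic face samples away in feature space [2].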
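Two ingredients of this design can be sketched in a few lines. The first function builds a standard Laplacian-of-Gaussian kernel, the kind of band-pass filter that suppresses smooth content and emphasizes fine detail. The second is only a hinge-style stand-in for the idea of pulling natural faces toward a center and pushing manipulated ones away; it is not the cost function from the paper, and the names `isolation_loss`, `r_real`, and `r_fake` are assumptions made for this illustration.

```python
import math

def log_kernel(size=5, sigma=1.0):
    """Zero-mean Laplacian-of-Gaussian kernel as a size x size nested list."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            r2 = (x * x + y * y) / (2.0 * sigma ** 2)
            # LoG(x, y) = -(1 / (pi * sigma^4)) * (1 - r2) * exp(-r2)
            row.append(-(1.0 - r2) * math.exp(-r2) / (math.pi * sigma ** 4))
        kernel.append(row)
    # Subtract the mean so that flat image regions give a zero response.
    mean = sum(map(sum, kernel)) / (size * size)
    return [[v - mean for v in row] for row in kernel]

def isolation_loss(embedding, center, is_real, r_real=1.0, r_fake=2.0):
    """Hypothetical hinge loss around a center in feature space.

    Real faces are penalized for lying farther than r_real from the center;
    fake faces are penalized for lying closer than r_fake.
    """
    dist = math.dist(embedding, center)
    if is_real:
        return max(0.0, dist - r_real)
    return max(0.0, r_fake - dist)

kernel = log_kernel()
print(len(kernel), len(kernel[0]))
print(isolation_loss([3.0, 0.0], [0.0, 0.0], is_real=True))
print(isolation_loss([3.0, 0.0], [0.0, 0.0], is_real=False))
```

Leaving a gap between the two radii gives a margin between the classes, which is one simple way to express "compress the real samples, push the fake ones away".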

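FInfer, short for frame inference-based detection of high-visual-quality deepfake videos, consists of four parts: face preprocessing, face representation learning, face prediction learning, and correlation-based learning. First, frames are extracted from the video, faces are detected in each frame, and the face data are transformed with a Gaussian-Laplacian pyramid block. Second, because video frames are very high-dimensional, representation learning is used to build an encoder that maps the source faces and the reference target faces into a low-dimensional space. The encoder compresses the spatial features of a face into a compact latent embedding space, which keeps the prediction effective. Third, an autoregressive model integrates the source-face information and predicts the representation of the target face. Fourth, a correlation-based learning module optimizes the model with the designed representation-prediction loss. This loss allows the whole model to be trained end to end: it is propagated back to both the representation learning module and the prediction learning module, which helps the model encode face representations, predict target representations, and classify the video [3].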
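The predict-then-compare idea behind the last two steps can be shown with a toy sketch. It assumes the encoder has already turned each face into a short vector. A fixed linear autoregressive rule stands in for the learned prediction model, and a cosine-similarity loss stands in for the representation-prediction loss; both are simplifications for illustration, not the formulation used in FInfer, and all function names are hypothetical.

```python
import math

def predict_next(history, coeffs):
    """Linear autoregressive guess: weighted sum of the most recent representations."""
    recent = history[-len(coeffs):]
    dim = len(recent[0])
    return [sum(c * rep[i] for c, rep in zip(coeffs, recent)) for i in range(dim)]

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def representation_prediction_loss(predicted, reference, is_real):
    """Reward agreement for real videos and penalize it for fake ones."""
    sim = cosine(predicted, reference)
    return 1.0 - sim if is_real else max(0.0, sim)

# Toy source-frame representations and two candidate target representations.
source_reps = [[1.0, 0.0], [1.0, 0.0]]
predicted = predict_next(source_reps, coeffs=[0.5, 0.5])
print(representation_prediction_loss(predicted, [1.0, 0.0], is_real=True))
print(representation_prediction_loss(predicted, [0.0, 1.0], is_real=True))
```

At test time the same similarity can act as the detection score: a target face that is hard to predict from the source faces is more likely to be fake.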

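Comparing the three methods: Method ① is a novel, unconventional idea that works well, but because it was proposed only recently it has not yet been widely applied and still needs to be tested over time and in practice. Method ② proposes improvements within the deep learning framework and makes notable contributions to both the cost function and the network design. Method ③ approaches video detection from a different angle, combining its idea with the way video compression predicts and reconstructs frames; it is effective and merits wider application. Taken together, the three algorithms roughly represent three lines of deepfake detection research: methods that start from common-sense observations or unconventional cues, methods built on conventional deep neural networks, and methods that draw on practical image and video processing.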

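III. Open Problems

Recent deepfake video detection methods fall roughly into three categories: clue-inspired methods, data-driven methods, and multi-domain fusion methods. Clue-inspired methods (Li, Chang, and Lyu 2018; Ciftci, Demir, and Yin 2020; Yang, Li, and Lyu 2019; Koopman, Rodriguez, and Geradts 2018; Li and Lyu 2019) expose observable features such as inconsistent eye blinking, biological signals, and unrealistic details to detect deepfake videos. However, these detectors can be bypassed by purposeful training during the generation of fake videos. Data-driven methods (Afchar et al. 2018; Nguyen, Yamagishi, and Echizen 2019; Nguyen et al. 2019; Tan and Le 2019; Rossler et al. 2019; Zhao et al. 2021; Liu et al. 2021; Xu et al. 2021) extract invisible features to detect forgeries effectively. These methods do not combine spatial information with information from other domains and may miss key features of the video. Multi-domain fusion methods (Güera and Delp 2018; Zhao, Wang, and Lu 2020; Qian et al. 2020; Masi et al. 2020; Hu et al. 2021; Sun et al. 2021) therefore train detection models across multiple domains, such as the spatial, temporal, and frequency domains.

Although the above methods perform well on early datasets, they still need improvement on recently developed high-visual-quality deepfake videos. Earlier methods (Li, Chang, and Lyu 2018; Afchar et al. 2018; Yang, Li, and Lyu 2019; Hu et al. 2021) focus on specific features that are easy to track in low-visual-quality videos. These features may be severely weakened in high-visual-quality videos, which degrades detection performance. A more general way of amplifying the tampering traces in fake videos is therefore needed.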

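In addition, the artifact dependence of the above methods (Rossler et al. 2019; Zhao et al. 2021) can cause severe overfitting in cross-dataset detection. Augmenting the training data is an effective remedy for overfitting. However, existing methods focus only on performance and neglect computational efficiency, which incurs unnecessary time cost. Moreover, most existing detectors benefit from the power of CNNs, but CNN-based methods lack theoretical explanation, which hinders understanding of the detection techniques. In summary, detecting high-visual-quality deepfake videos faces three major challenges: 1) how to amplify the tampering traces in high-visual-quality deepfake videos for better performance; 2) how to improve the robustness of cross-dataset detection while also improving detection efficiency; and 3) how to provide an interpretable theoretical analysis.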

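IV. Future Research Hotspots

Face forensics datasets and evaluation. In contrast to the proliferation of face recognition datasets, the community has long lacked large-scale face forensics datasets for training and evaluation. Although face swapping can be regarded as a form of image splicing forgery, and some generic forensics sets contain face splicing and copy-move forgeries, early tools for detecting face manipulation specifically were evaluated mainly on still images. The small-scale benchmarks released for deepfake detection were generated in controlled environments. Only recently did Rossler et al. propose several versions of FaceForensics++, a medium-scale collection of manipulated videos with a total of 1.8 million frames manipulated using four methods: FaceSwap, DeepFakes, Face2Face, and NeuralTextures. Google Research augmented that dataset with another set of deepfake videos, Google DeepFake Detection (DFD). Meanwhile, Facebook and other companies jointly created a competition for detecting fakes on the web and released a preview dataset, the Deepfake Detection Challenge (DFDC), together with new evaluation metrics. Besides the dataset itself, the interesting novelty is that performance is measured at the video level rather than the frame level, with metrics that evaluate a model effectively at low false alarm rates. Previously, with few exceptions, accuracy was the only metric used to measure fake-detection performance. Despite these contributions, the perceptual quality of the synthesized videos in these sets still appears low compared with videos circulating on the web, so Li et al. recently released Celeb-DF, which contains hyper-realistic deepfakes, and adopted frame-level AUC as the metric. This benchmark is remarkable for offering 5,639 high-quality videos with a total of 2.1 million frames.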
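The difference between frame-level and video-level evaluation, and the reason a low false alarm rate matters, can be made concrete with a small sketch. It assumes each frame already carries a fake probability from some detector. Averaging frames into a video score is one simple aggregation choice rather than a rule prescribed by any of the benchmarks above, and the function names are hypothetical.

```python
def video_score(frame_scores):
    """Average per-frame fake probabilities into one video-level score."""
    return sum(frame_scores) / len(frame_scores)

def auc(scores, labels):
    """Rank-based AUC: chance that a fake (label 1) outscores a real (label 0) sample."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def tpr_at_fpr(scores, labels, max_fpr=0.1):
    """Best true-positive rate reachable while the false alarm rate stays <= max_fpr."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    best = 0.0
    for thr in sorted(set(scores)):
        fpr = sum(n >= thr for n in neg) / len(neg)
        if fpr <= max_fpr:
            best = max(best, sum(p >= thr for p in pos) / len(pos))
    return best

# Toy data: per-frame scores for four videos, with label 1 meaning fake.
videos = [([0.9, 0.9], 1), ([0.25, 0.15], 1), ([0.35, 0.25], 0), ([0.05, 0.15], 0)]
scores = [video_score(frames) for frames, _ in videos]
labels = [label for _, label in videos]
print(auc(scores, labels), tpr_at_fpr(scores, labels, max_fpr=0.0))
```

A detector can have a respectable AUC and still miss many fakes once the threshold is raised far enough to avoid false alarms, which is why the two numbers are reported separately.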

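Detecting face manipulation. Although image forensics has been studied extensively for a long time, deepfakes are a recent technology, and several orthogonal works have therefore been proposed recently to detect face manipulation. Deepfake detection methods can be divided roughly into two macro-groups: (i) discriminative classifiers that use various semantic inconsistencies of the head and face; and (ii) data-driven methods that learn a discriminative function directly from the data. In the first group, Agarwal et al. built person-specific classifiers using a one-class support vector machine (SVM) and features computed from action units (AUs) and 3D head pose motion. Similarly, Li et al. used the observation that early versions of deepfakes did not blink. They later extended this work to examine inconsistencies in 3D head pose. They also trained a DCNN, although they used artifacts produced by warping as negative samples to simulate the deepfake splicing process.

GAN synthesis detection. Finally, a parallel line of related research detects face images synthesized entirely by GANs, for example with StyleGAN.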

References

[1] Yang, X., Li, Y., & Lyu, S. (2019). Exposing Deep Fakes Using Inconsistent Head Poses. In: ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8261-8265. IEEE.

[2] Masi, I., Killekar, A., Mascarenhas, R.M., Gurudatt, S.P., & AbdAlmageed, W. (2020). Two-Branch Recurrent Network for Isolating Deepfakes in Videos. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M. (eds) Computer Vision – ECCV 2020. Lecture Notes in Computer Science, vol. 12352. Springer, Cham. https://doi.org/10.1007/978-3-030-58571-6_39

[3] Hu, J., Liao, X., Liang, J., Zhou, W., & Qin, Z. (2022). FInfer: Frame Inference-Based Deepfake Detection for High-Visual-Quality Videos. Proceedings of the AAAI Conference on Artificial Intelligence, 36(1), 951-959. https://doi.org/10.1609/aaai.v36i1.19978

- CNN Business: When seeing is no longer believing: inside the Pentagon's race against deepfake videos. https://www.cnn.com/interactive/2019/01/business/pentagons-race-against-deepfakes/
- DeepFaceLab. https://github.com/iperov/DeepFaceLab
- DeepTrace - the antivirus of deepfakes - the state of deepfakes. https://deeptracelabs.com
- ZAO app. https://apps.apple.com/cn/app/zao/
- MSR Image Recognition Challenge (IRC) at ACM Multimedia 2016 (July 2016)
- Afchar, D., Nozick, V., Yamagishi, J., Echizen, I.: Mesonet: a compact facial video forgery detection network. In: WIFS. pp. 1–7. IEEE (2018)
- Agarwal, S., Farid, H., Gu, Y., He, M., Nagano, K., Li, H.: Protecting world leaders against deep fakes. In: CVPR Workshops (June 2019)
- Baltrusaitis, T., Zadeh, A., Lim, Y.C., Morency, L.P.: Openface 2.0: Facial behavior analysis toolkit. In: 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018). pp. 59–66. IEEE (2018)
- Bayar, B., Stamm, M.C.: A deep learning approach to universal image manipulation detection using a new convolutional layer. In: ACM Workshop on Information Hiding and Multimedia Security. pp. 5–10 (2016)
- Bradski, G.: The OpenCV Library. Dr. Dobb's Journal of Software Tools (2000)
- Bulat, A., Tzimiropoulos, G.: How far are we from solving the 2d & 3d face alignment problem? (and a dataset of 230,000 3d facial landmarks). In: ICCV (2017)
- Burt, P., Adelson, E.: The laplacian pyramid as a compact image code. IEEE Transactions on Communications 31(4), 532–540 (1983)
- Chesney, R., Citron, D.K.: Deep fakes: A looming challenge for privacy, democracy, and national security. 107 California Law Review (2019, forthcoming); U of Texas Law, Public Law Research Paper No. 692; U of Maryland Legal Studies Research Paper No. 2018-21
- Chollet, F.: Xception: Deep learning with depthwise separable convolutions. In: CVPR. pp. 1251–1258 (2017), http://openaccess.thecvf.com/content_cvpr_2017/html/Chollet_Xception_Deep_Learning_CVPR_2017_paper.html
- Cozzolino, D., Poggi, G., Verdoliva, L.: Extracting camera-based fingerprints for video forensics (2019)
- Cozzolino, D., Poggi, G., Verdoliva, L.: Recasting residual-based local descriptors as convolutional neural networks: an application to image forgery detection. In: ACM Workshop on Information Hiding and Multimedia Security. pp. 159–164 (2017)
- Cozzolino, D., Thies, J., Rössler, A., Riess, C., Nießner, M., Verdoliva, L.: Forensictransfer: Weakly-supervised domain adaptation for forgery detection. arXiv preprint arXiv:1812.02510 (2018)
- Dolhansky, B., Howes, R., Pflaum, B., Baram, N., Ferrer, C.C.: The Deepfake Detection Challenge (DFDC) Preview Dataset. arXiv:1910.08854 [cs] (Oct 2019), http://arxiv.org/abs/1910.08854
- Domingos, P.: A unified bias-variance decomposition and its applications. In: 17th International Conference on Machine Learning. pp. 231–238 (2000)
- Domingos, P.M.: A few useful things to know about machine learning. Commun. ACM 55(10), 78–87 (2012)
- Dufour, N., Gully, A., Karlsson, P., Vorbyov, A.V., Leung, T., Childs, J., Bregler, C.: Deepfakes detection dataset by Google and Jigsaw (2019)
- Farid, H.: Photo forensics. MIT Press (2016)
- Fridrich, J., Kodovsky, J.: Rich models for steganalysis of digital images. TIFS 7(3), 868–882 (2012)
- Gellately, R.: Lenin, Stalin, and Hitler: The age of social catastrophe. Alfred A. Knopf Incorporated (2007)
- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: NIPS (2014)
- Güera, D., Delp, E.J.: Deepfake video detection using recurrent neural networks. In: AVSS. pp. 1–6. IEEE (2018)
- Guo, Y., Zhang, L., Hu, Y., He, X., Gao, J.: Ms-celeb-1m: A dataset and benchmark for large-scale face recognition. In: ECCV (2016)
- Heller, S., Rossetto, L., Schuldt, H.: The PS-Battles Dataset – an Image Collection for Image Manipulation Detection. CoRR abs/1804.04866 (2018), http://arxiv.org/abs/1804.04866
- Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: CVPR. pp. 4700–4708 (2017)
- Huang, G.B., Ramesh, M., Berg, T., Learned-Miller, E.: Labeled faces in the wild: A database for studying face recognition in unconstrained environments. Tech. Rep. 07-49, UMass, Amherst (October 2007)
- Ioannou, Y., Robertson, D., Cipolla, R., Criminisi, A.: Deep roots: Improving cnn efficiency with hierarchical filter groups. In: CVPR. pp. 1231–1240 (2017)
- Karras, T., Laine, S., Aila, T.: A style-based generator architecture for generative adversarial networks. In: CVPR. pp. 4401–4410 (2019)
- Kemelmacher-Shlizerman, I., Seitz, S.M., Miller, D., Brossard, E.: The MegaFace benchmark: 1 million faces for recognition at scale. In: CVPR (2016)
- King, D.E.: Dlib-ml: A machine learning toolkit. JMLR 10, 1755–1758 (2009)
- Kingma, D.P., Welling, M.: Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114 (2013)
- Klare, B.F., Klein, B., Taborsky, E., Blanton, A., Cheney, J., Allen, K., Grother, P., Mah, A., Burge, M., Jain, A.K.: Pushing the frontiers of unconstrained face detection and recognition: IARPA Janus Benchmark A. In: CVPR. pp. 1931–1939 (2015)
- Korshunov, P., Marcel, S.: Deepfakes: a new threat to face recognition? assessment and detection. arXiv preprint arXiv:1812.08685 (2018)
- Korshunov, P., Marcel, S.: Vulnerability assessment and detection of deepfake videos. In: ICB. Crete, Greece (Jun 2019)
- Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: NIPS. pp. 1097–1105 (2012)
- Li, H., Li, B., Tan, S., Huang, J.: Detection of deep network generated images using disparities in color components. arXiv preprint arXiv:1808.07276 (2018)
- Li, Y., Chang, M.C., Lyu, S.: In ictu oculi: Exposing ai created fake videos by detecting eye blinking. In: WIFS. pp. 1–7 (2018)
- Li, Y., Lyu, S.: Exposing deepfake videos by detecting face warping artifacts. In: CVPR Workshops (June 2019)
- Li, Y., Yang, X., Sun, P., Qi, H., Lyu, S.: Celeb-df: A large-scale challenging dataset for deepfake forensics. In: CVPR (June 2020)
- Maaten, L.v.d., Hinton, G.: Visualizing data using t-sne. Journal of Machine Learning Research 9(Nov), 2579–2605 (2008)
- Marra, F., Gragnaniello, D., Verdoliva, L., Poggi, G.: Do gans leave artificial fingerprints? In: Conference on Multimedia Information Processing and Retrieval (MIPR). pp. 506–511 (2019)
- Matern, F., Riess, C., Stamminger, M.: Exploiting visual artifacts to expose deepfakes and face manipulations. In: WACV Workshops. pp. 83–92. IEEE (2019)
- McClish, D.K.: Analyzing a portion of the roc curve. Medical Decision Making 9(3), 190–195 (1989)
- Nagrani, A., Chung, J.S., Xie, W., Zisserman, A.: Voxceleb: Large-scale speaker verification in the wild. Computer Speech & Language 60, 101027 (2020)
- Nguyen, H.H., Fang, F., Yamagishi, J., Echizen, I.: Multi-task learning for detecting and segmenting manipulated facial images and videos. In: BTAS (2019)
- Nguyen, H.H., Yamagishi, J., Echizen, I.: Capsule-forensics: Using capsule networks to detect forged images and videos. In: ICASSP. pp. 2307–2311. IEEE (2019)
- Nguyen, T.T., Nguyen, C.M., Nguyen, D.T., Nguyen, D.T., Nahavandi, S.: Deep learning for deepfakes creation and detection. arXiv preprint arXiv:1909.11573 (2019)
- Nirkin, Y., Masi, I., Tran, A., Hassner, T., Medioni, G.: On face segmentation, face swapping, and face perception. In: AFGR (2018)
- Rahmouni, N., Nozick, V., Yamagishi, J., Echizen, I.: Distinguishing computer graphics from natural images using convolution neural networks. In: WIFS. pp. 1–6 (2017)
- Rössler, A., Cozzolino, D., Verdoliva, L., Riess, C., Thies, J., Nießner, M.: Faceforensics++: Learning to detect manipulated facial images. In: ICCV (2019)
- Ruff, L., Vandermeulen, R., Goernitz, N., Deecke, L., Siddiqui, S.A., Binder, A., Müller, E., Kloft, M.: Deep one-class classification. In: ICML. pp. 4393–4402 (2018)
- Sabir, E., Cheng, J., Jaiswal, A., AbdAlmageed, W., Masi, I., Natarajan, P.: Recurrent convolutional strategies for face manipulation detection in videos. In: CVPR Workshops. pp. 80–87 (2019)
- Sanderson, C., Lovell, B.C.: Multi-region probabilistic histograms for robust and scalable identity inference. In: ICB. pp. 199–208. Springer (2009)
- Schroff, F., Kalenichenko, D., Philbin, J.: Facenet: A unified embedding for face recognition and clustering. In: CVPR (2015)
- Stehouwer, J., Dang, H., Liu, F., Liu, X., Jain, A.: On the detection of digital face manipulation. arXiv preprint arXiv:1910.01717 (2019)
- Thies, J., Zollhöfer, M., Nießner, M.: Deferred neural rendering: Image synthesis using neural textures. ACM Transactions on Graphics (TOG) (2019)
- Thies, J., Zollhöfer, M., Stamminger, M., Theobalt, C., Nießner, M.: Face2face: Real-time face capture and reenactment of rgb videos. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 2387–2395 (2016)
- Tolosana, R., Vera-Rodriguez, R., Fierrez, J., Morales, A., Ortega-Garcia, J.: Deepfakes and beyond: A survey of face manipulation and fake detection. arXiv preprint arXiv:2001.00179 (2020)
- Valentini, G., Dietterich, T.G.: Bias-variance analysis of support vector machines for the development of svm-based ensemble methods. JMLR 5(Jul), 725–775 (2004)
- Verdoliva, L.: Media forensics and deepfakes: an overview. IEEE Journal of Selected Topics in Signal Processing (2020)
- Wang, F., Cheng, J., Liu, W., Liu, H.: Additive margin softmax for face verification. IEEE Signal Processing Letters 25(7), 926–930 (2018)
- Weisstein, E.W.: Hypersphere (2002)
- Wen, Y., Zhang, K., Li, Z., Qiao, Y.: A discriminative feature learning approach for deep face recognition. In: ECCV (2016)
- Wu, C.Y., Manmatha, R., Smola, A.J., Krahenbuhl, P.: Sampling matters in deep embedding learning. In: ICCV (Oct 2017)
- Xie, C., Wu, Y., Maaten, L.v.d., Yuille, A.L., He, K.: Feature denoising for improving adversarial robustness. In: CVPR. pp. 501–509 (2019)
- Yu, N., Davis, L.S., Fritz, M.: Attributing fake images to GANs: Learning and analyzing GAN fingerprints. In: ICCV. pp. 7556–7566 (2019)
- Zhang, X., Karaman, S., Chang, S.F.: Detecting and simulating artifacts in gan fake images. In: WIFS (2019)
- Zhou, P., Han, X., Morariu, V.I., Davis, L.S.: Two-stream neural networks for tampered face detection. In: CVPR Workshops. pp. 19–27 (2017)