Converting an .mp4 file to a .bag file and displaying it in rviz


I. Converting between .mp4 and .bag with Python

1. .mp4 to .bag

```python
# -*- coding: utf-8 -*-
##import logging
##logging.basicConfig()
import time, sys, os

import rosbag
import roslib, rospy
from cv_bridge import CvBridge
import cv2
from sensor_msgs.msg import Image

TOPIC = 'camera/image_raw'


def my_resize(my_img, x, y):
    # Scale width by x and height by y; cv2.resize takes the size as (width, height)
    resized = cv2.resize(my_img, (int(my_img.shape[1] * x), int(my_img.shape[0] * y)))
    return resized


def CreateVideoBag(videopath, bagname):
    '''Creates a bag file with a video file'''
    print(videopath)
    print(bagname)
    bag = rosbag.Bag(bagname, 'w')
    cap = cv2.VideoCapture(videopath)
    cb = CvBridge()
    # prop_fps = cap.get(cv2.cv.CV_CAP_PROP_FPS)  # the original code used this; it does not run (cv2.cv was removed in OpenCV 3)
    prop_fps = cap.get(cv2.CAP_PROP_FPS)  # frame rate
    if prop_fps != prop_fps or prop_fps <= 1e-2:  # NaN or ~0: FPS not available
        print("Warning: can't get FPS. Assuming 24.")
        prop_fps = 24
    print(prop_fps)
    ret = True
    frame_id = 0
    while ret:
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv2.resize(frame, (960, 540))  # fixed output size (width, height)
        # The timestamp is derived from the frame index, not from wall-clock time
        stamp = rospy.rostime.Time.from_sec(float(frame_id) / prop_fps)
        frame_id += 1
        image = cb.cv2_to_imgmsg(frame, encoding='bgr8')
        image.header.stamp = stamp
        image.header.frame_id = "camera"
        bag.write(TOPIC, image, stamp)
    cap.release()
    bag.close()


if __name__ == "__main__":
    CreateVideoBag('./123.mp4', './123.bag')

##if __name__ == "__main__":
##    if len(sys.argv) == 3:
##        CreateVideoBag(*sys.argv[1:])
##    else:
##        print("Usage: video2bag videofilename bagfilename")
```
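In the script above, `cv2.resize` expects its target size as `(width, height)`, while an image's NumPy `shape` is `(height, width, channels)`; that is why `my_resize` uses `shape[1]` for the width. The fixed `cv2.resize(frame, (960, 540))` also ignores the source aspect ratio, so footage that is not 16:9 gets stretched. A minimal pure-Python sketch of computing a size that keeps the aspect ratio; the helper name `fit_dsize` and the 960-pixel default width are illustrative assumptions, not part of the original script:

```python
def fit_dsize(shape, target_width=960):
    """Return a (width, height) tuple for cv2.resize that scales an image of
    NumPy shape (height, width[, channels]) to target_width, keeping the
    aspect ratio. Hypothetical helper for illustration."""
    height, width = shape[0], shape[1]
    scale = target_width / float(width)
    # cv2.resize wants (width, height): the reverse of NumPy's shape order
    return (target_width, int(round(height * scale)))


if __name__ == "__main__":
    print(fit_dsize((1080, 1920, 3)))  # 16:9 source -> (960, 540)
    print(fit_dsize((1080, 1440, 3)))  # 4:3 source -> (960, 720)
```

Inside the loop this would be used as `frame = cv2.resize(frame, fit_dsize(frame.shape))`.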

How to run: change the topic name TOPIC, videopath, and bagname as needed, then run the script directly (for example with F5 in your IDE).
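Instead of editing the paths in the source before every run, the commented-out `sys.argv` block could be replaced with the standard-library `argparse` module. A minimal sketch; the `parse_args` helper and the `--topic` option are hypothetical additions for illustration, and `CreateVideoBag` would need an extra parameter to use the topic (it currently reads the global `TOPIC`):

```python
import argparse


def parse_args(argv=None):
    """Parse command-line options for a video-to-bag converter (hypothetical CLI).
    argv=None means argparse reads sys.argv[1:]."""
    parser = argparse.ArgumentParser(
        description="Write the frames of a video file into a ROS bag.")
    parser.add_argument("videopath", help="input video, e.g. ./123.mp4")
    parser.add_argument("bagname", help="output bag, e.g. ./123.bag")
    parser.add_argument("--topic", default="camera/image_raw",
                        help="topic the images are written to")
    return parser.parse_args(argv)
```

In the script's main block this would become `args = parse_args()` followed by `CreateVideoBag(args.videopath, args.bagname)`; running without both positional arguments makes argparse print a usage message and exit, which replaces the manual `len(sys.argv) == 3` check.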

Alternatively, use the following version, adapted from a Stack Overflow answer:

Reference: https://stackoverflow.com/questions/31432870/how-do-i-convert-a-video-or-a-sequence-of-images-to-a-bag-file

```python
import time, sys, os

# The original used "from ros import rosbag" and roslib.load_manifest('sensor_msgs'),
# which belong to the old rosbuild system; in catkin-based ROS, import rosbag directly.
import rosbag
import roslib, rospy
from sensor_msgs.msg import Image
from cv_bridge import CvBridge
import cv2

TOPIC = 'camera/image_raw'


def CreateVideoBag(videopath, bagname):
    '''Creates a bag file with a video file'''
    print(videopath)
    print(bagname)
    bag = rosbag.Bag(bagname, 'w')
    cap = cv2.VideoCapture(videopath)
    cb = CvBridge()
    # prop_fps = cap.get(cv2.cv.CV_CAP_PROP_FPS)  # the original code used this; it does not run
    prop_fps = cap.get(cv2.CAP_PROP_FPS)  # I changed it to this
    if prop_fps != prop_fps or prop_fps <= 1e-2:  # NaN or ~0: FPS not available
        print("Warning: can't get FPS. Assuming 24.")
        prop_fps = 24
    # My phone recorded at 29.78 fps, but I still convert at 24.
    # This line overrides the detected rate; remove it to keep the source rate.
    prop_fps = 24
    print(prop_fps)
    ret = True
    frame_id = 0
    while ret:
        ret, frame = cap.read()
        if not ret:
            break
        stamp = rospy.rostime.Time.from_sec(float(frame_id) / prop_fps)
        frame_id += 1
        image = cb.cv2_to_imgmsg(frame, encoding='bgr8')
        image.header.stamp = stamp
        image.header.frame_id = "camera"
        bag.write(TOPIC, image, stamp)
    cap.release()
    bag.close()


if __name__ == "__main__":
    if len(sys.argv) == 3:
        CreateVideoBag(*sys.argv[1:])
    else:
        print("Usage: video2bag videofilename bagfilename")
```

How to run: python Video2ROSbag.py TLout.mp4 TLout.bag
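Both scripts stamp frame `i` at `i / prop_fps` seconds and fall back to 24 fps when OpenCV reports NaN (`prop_fps != prop_fps` is a NaN test) or a near-zero rate; the second script then forces 24 fps regardless. Because the stamps are computed rather than measured, forcing 24 fps on 29.78 fps footage stretches playback by a factor of about 1.24. A pure-Python sketch of that arithmetic; `effective_fps`, `frame_stamps`, and `playback_stretch` are hypothetical helper names, and the `(secs, nsecs)` split mirrors how a ROS time value is stored:

```python
import math


def effective_fps(reported_fps, fallback=24.0):
    """Mirror the scripts' fallback: use `fallback` when the reported
    rate is NaN or effectively zero."""
    if math.isnan(reported_fps) or reported_fps <= 1e-2:
        return fallback
    return reported_fps


def frame_stamps(num_frames, fps):
    """Return (secs, nsecs) pairs for frames 0..num_frames-1 stamped at i / fps."""
    stamps = []
    for i in range(num_frames):
        total_ns = int(round(i * 1e9 / fps))     # integer nanoseconds avoids nsecs == 1e9
        stamps.append(divmod(total_ns, 10 ** 9))  # (secs, nsecs)
    return stamps


def playback_stretch(true_fps, stamped_fps):
    """Factor by which playback of the bag is longer than the original video."""
    return true_fps / stamped_fps
```

For example, 300 frames shot at 29.78 fps (about 10.1 s of video) end up spread over about 12.5 s of bag time when stamped at 24 fps.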

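Verifying the conversion with rosbag play:

```bash
rosbag info TLout.bag  # show information about the bag
rosbag play -l TLout.bag camera/image_raw:=image_raw0  # play the images in a loop, remapping the topic to the name you need
rqt_image_view  # display the played images; if they show up correctly, the bag was created successfully
```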

2. .bag to .mp4
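Images in a bag carry their own timestamps and need not be evenly spaced, so writing a video at a fixed `--fps` means deciding which recorded frame to show at each output tick. One simple approach is sample-and-hold: show the most recent frame, duplicating or dropping frames as needed. A minimal sketch of that idea; it illustrates the concept only and is not taken from the rosbag2video.py source, and `resample_indices` is a hypothetical name:

```python
import bisect


def resample_indices(stamps, fps):
    """For a sorted list of frame timestamps in seconds, return the index of
    the frame to show at each tick of a fixed-rate output video
    (the most recent frame at or before each tick)."""
    if not stamps:
        return []
    start, end = stamps[0], stamps[-1]
    num_ticks = int((end - start) * fps) + 1
    indices = []
    for k in range(num_ticks):
        t = start + k / float(fps)
        # bisect_right lands after any equal stamps, so -1 gives the
        # last frame whose stamp is <= t
        indices.append(bisect.bisect_right(stamps, t) - 1)
    return indices
```

With stamps `[0.0, 0.1, 0.35, 0.4]` at 10 fps this selects frames `[0, 1, 1, 1, 3]`: frame 1 is repeated to fill the gap and frame 2 is dropped.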

```python
#!/usr/bin/env python2
# -*- coding: utf-8 -*-
from __future__ import print_function  # makes print(a, b) and print() behave the same in Python 2

import roslib
#roslib.load_manifest('rosbag')
import rospy
import rosbag
import sys, getopt
import os
from sensor_msgs.msg import CompressedImage  # compressed images
from sensor_msgs.msg import Image
import cv2
import numpy as np
import shlex, subprocess  # used to run the external video converter

# subprocess is a Python standard library module for spawning processes that run
# system commands; it can connect to their input, output, and error pipes and
# collect their return codes, and is preferable to os.system. Here it launches
# the video converter.
# shlex is a Python standard library module for lexical analysis of shell-like
# syntax; it splits a command string into an argument list for subprocess.

MJPEG_VIDEO = 1
RAWIMAGE_VIDEO = 2
VIDEO_CONVERTER_TO_USE = "ffmpeg"  # video converter; or you may want to use "avconv"


def print_help():
    print('rosbag2video.py [--fps 25] [--rate 1] [-o outputfile] [-v] [-s] [-t topic] bagfile1 [bagfile2] ...')
    print()
    print('Converts image sequence(s) in ros bag file(s) to video file(s) with fixed frame rate using', VIDEO_CONVERTER_TO_USE)
    print(VIDEO_CONVERTER_TO_USE, 'needs to be installed!')
    print()
    print('--fps Sets FPS value that is passed to', VIDEO_CONVERTER_TO_USE)
    print(' Default is 25.')
    print('-h Displays this help.')
    print('--ofile (-o) sets output file name.')
    print(' If no output file name (-o) is given the filename \'.mp4\' is used and default output codec is h264.')
    # ... (remainder of rosbag2video.py not shown)
```

Usage:

```bash
$ python rosbag2video.py [-h] [-s] [-v] [-r] [-o outputfile] [-t topic] [-p prefix_name] [--fps 25] [--rate 1.0] [--ofile output_file_name] [--start start_time] [--end end_time] bagfile1 [bagfile2] ...
```
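The script imports `shlex` and `subprocess` to drive ffmpeg. A common pattern is to start ffmpeg reading raw frames from stdin and pipe each decoded BGR image into it. A minimal Python 3 sketch that only builds the argument list and does not run ffmpeg; `build_ffmpeg_cmd` is a hypothetical helper, the options used (`-f rawvideo`, `-pix_fmt`, `-s`, `-r`, `-i -`, `-c:v libx264`) are standard ffmpeg options, and this is not necessarily how rosbag2video.py itself invokes the converter:

```python
import shlex


def build_ffmpeg_cmd(width, height, fps, outfile):
    """Build an argument list that makes ffmpeg read raw BGR frames from stdin
    and encode them as H.264 into `outfile`."""
    cmd = ("ffmpeg -y -f rawvideo -pix_fmt bgr24 -s {w}x{h} -r {fps} -i - "
           "-c:v libx264 -pix_fmt yuv420p {out}").format(
               w=width, h=height, fps=fps, out=shlex.quote(outfile))
    # shlex.split tokenizes like a POSIX shell, so the quoted file name stays
    # one argument and the list can go to subprocess without shell=True
    return shlex.split(cmd)


if __name__ == "__main__":
    print(build_ffmpeg_cmd(960, 540, 25, "my video.mp4"))
    # To actually encode (requires ffmpeg on PATH):
    #   proc = subprocess.Popen(args, stdin=subprocess.PIPE)
    #   proc.stdin.write(frame.tobytes())   # once per BGR frame
    #   proc.stdin.close(); proc.wait()
```

Building a string and splitting it with `shlex` keeps the command readable as one line; building the list directly would work equally well.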

II. Reading a *.bag file in rviz and displaying it

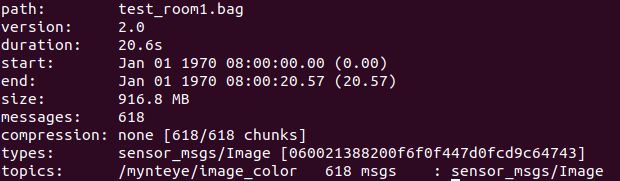

1. Inspecting basic information about the bag

Existing data: test_room1.bag

Inspect the bag: rosbag info test_room1.bag

The output shows the topic /mynteye/image_color.

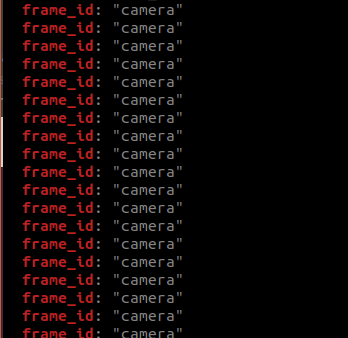

Checking the frame_id

```bash
# Terminal 1
roscore

# New terminal: play the data
rosbag play test_room1.bag

# New terminal: show the frame_id of /mynteye/image_color
rostopic echo /mynteye/image_color | grep frame_id
```

To change the frame_id, see the article "Changing the frame_id and topic name of messages in a ROS bag".

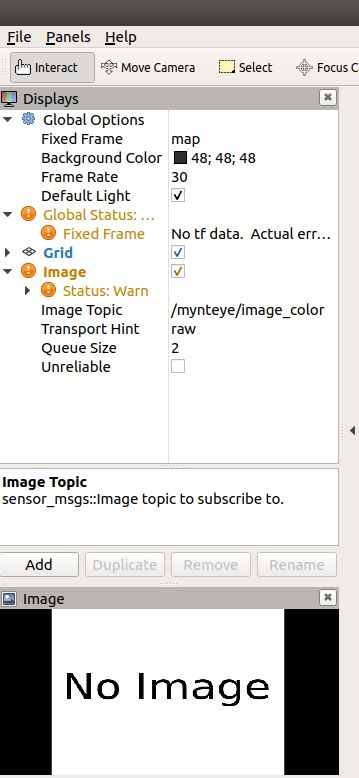

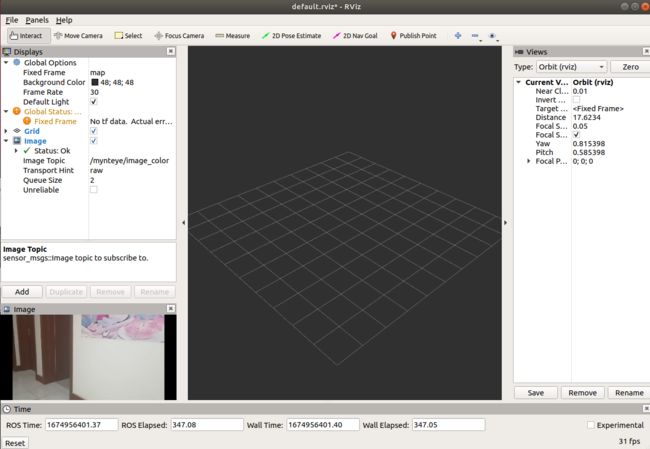

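2. Displaying the data in rviz

Run the following commands:

```bash
roscore

# New terminal: start rviz
rosrun rviz rviz
```

rviz setup: Add -> Image

Image display settings: set Image Topic to /mynteye/image_color (the topic reported by rosbag info above).

Play the bag: rosbag play nsh_indoor_outdoor.bag

Result: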

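To display a point cloud instead, add a PointCloud2 display.

rviz setup: Add -> PointCloud2

Settings:

Fixed Frame = camera

Topic: a sensor_msgs/PointCloud2 topic from the bag (/mynteye/image_color is an image topic, which a PointCloud2 display cannot subscribe to)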