Fixing a GraphFrames error in a pyspark environment

Background

Hands-on graph computation with Spark: using the GraphFrames library in a pyspark environment.

Environment

- macOS

- conda, python=3.8

- jupyter notebook

- pyspark=3.3.0

- graphframes=0.6

Code

from pyspark import SparkConf, SparkContext

from pyspark.sql import SparkSession

from graphframes import GraphFrame

sc = SparkContext()

spark = SparkSession(sc)

# Vertices DataFrame

vertics = spark.createDataFrame([

("a", "Alice", 34),

("b", "Bob", 36),

("c", "Charlie", 37),

("d", "David", 29),

("e", "Esther", 32),

("f", "Fanny", 38),

("g", "Gabby", 60)

], ["id", "name", "age"])

vertics.show()

# Edges DataFrame

edges = spark.createDataFrame([

("a", "b", "friend"),

("b", "c", "follow"),

("c", "b", "follow"),

("f", "c", "follow"),

("e", "f", "follow"),

("e", "d", "friend"),

("d", "a", "friend"),

("a", "e", "friend"),

("g", "e", "follow")

], ["src", "dst", "relationship"])

edges.show()

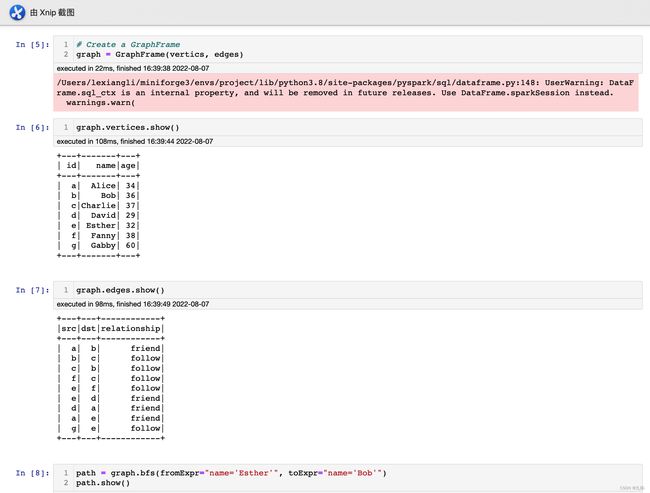

# Create a GraphFrame

graph = GraphFrame(vertics, edges)

Error message

Executing the graph initialization statement raises the following error:

pyspark: Py4JJavaError: An error occurred while calling o138.loadClass.: java.lang.ClassNotFoundException: org.graphframes.GraphFramePythonAPI

Troubleshooting

References

graphframes environment errors:

- Reference 1

- Reference 2

Run the following in a terminal:

pyspark --packages graphframes:graphframes:0.8.0-spark3.0-s_2.12 --jars graphframes-0.8.0-spark3.0-s_2.12.jar

This shows that the error is caused by the missing graphframes-0.8.0-spark3.0-s_2.12.jar, so the jar has to be downloaded from the official site.

Official site: graphframes
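Before wiring the downloaded jar into Spark, it can help to confirm that the file really contains the class named in the error. The sketch below is an illustration, not part of the original fix: `expected_jar_name` and `jar_contains_class` are hypothetical helper names, and the file-name pattern is only inferred from the jar name used in this post, not from an official naming rule. A jar is a zip archive, so the standard-library `zipfile` module is enough.

```python
import zipfile


def expected_jar_name(graphframes_version, spark_version, scala_version):
    """Build a jar file name following the pattern seen in this post.

    The pattern is inferred from 'graphframes-0.8.0-spark3.0-s_2.12.jar';
    treat it as an assumption rather than an official rule.
    """
    return "graphframes-{}-spark{}-s_{}.jar".format(
        graphframes_version, spark_version, scala_version
    )


def jar_contains_class(jar_path, class_name):
    """Return True if the jar has a .class entry for the given Java class."""
    # A class such as a.b.C is stored in the archive as a/b/C.class.
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return entry in jar.namelist()
```

For an intact download, `jar_contains_class("graphframes-0.8.0-spark3.0-s_2.12.jar", "org.graphframes.GraphFramePythonAPI")` should return `True`. The file name also makes the targeted Spark and Scala versions visible, which is worth comparing against the installed pyspark version.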

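After downloading: because pyspark launched from the terminal starts in the user directory, the jar must be placed in the path shown in the screenshot if you want to launch pyspark from the terminal.

For Jupyter Notebook, it is enough to create the SparkContext and then add the path of the jar; the jar itself can be located anywhere.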
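An alternative worth knowing is to hand the jar to Spark through the `spark.jars` setting before the session is created, which asks Spark to include the jar on the driver and executor classpaths. `addPyFile` is documented as adding a Python dependency, so if the jar lives outside a directory Spark already loads jars from, `spark.jars` may be the more reliable route. The sketch below assumes the jar has already been downloaded; `jar_settings`, `build_session`, and the app name are hypothetical names introduced for illustration, and this is not the approach used in the revised code of this post.

```python
import os


def jar_settings(jar_path):
    """Return Spark settings that reference a local jar by absolute path."""
    return {"spark.jars": os.path.abspath(jar_path)}


def build_session(jar_path, app_name="graphframes-demo"):
    """Create a SparkSession with the jar configured up front.

    The setting only takes effect if no SparkContext is running yet,
    so call this before any other Spark code in the notebook.
    """
    # Imported lazily so jar_settings() can be used without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in jar_settings(jar_path).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

In a notebook this would be used as `spark = build_session("graphframes-0.8.0-spark3.0-s_2.12.jar")`, followed by the DataFrame and `GraphFrame` code unchanged.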

Revised code

from pyspark import SparkConf, SparkContext

from pyspark.sql import SparkSession

from graphframes import GraphFrame

sc = SparkContext()

spark = SparkSession(sc)

# Add the jar path to fix the error

sc.addPyFile("../envs/project/lib/python3.8/site-packages/pyspark/jars/graphframes-0.8.0-spark3.0-s_2.12.jar")

# Vertices DataFrame

vertics = spark.createDataFrame([

("a", "Alice", 34),

("b", "Bob", 36),

("c", "Charlie", 37),

("d", "David", 29),

("e", "Esther", 32),

("f", "Fanny", 38),

("g", "Gabby", 60)

], ["id", "name", "age"])

vertics.show()

# Edges DataFrame

edges = spark.createDataFrame([

("a", "b", "friend"),

("b", "c", "follow"),

("c", "b", "follow"),

("f", "c", "follow"),

("e", "f", "follow"),

("e", "d", "friend"),

("d", "a", "friend"),

("a", "e", "friend"),

("g", "e", "follow")

], ["src", "dst", "relationship"])

edges.show()

# Create a GraphFrame

graph = GraphFrame(vertics, edges)

The code now runs normally.
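A quick way to confirm that the graph is actually usable is to compare one of its built-in views against a hand computation. The sketch below counts incoming edges per vertex in plain Python from the same edge list used in this post; `in_degrees` and `EDGES` are hypothetical names introduced for illustration. With a working `graph`, `graph.inDegrees.show()` should list the same counts, though the row order may differ.

```python
from collections import Counter

# Same (src, dst, relationship) tuples as the edges DataFrame in this post.
EDGES = [
    ("a", "b", "friend"),
    ("b", "c", "follow"),
    ("c", "b", "follow"),
    ("f", "c", "follow"),
    ("e", "f", "follow"),
    ("e", "d", "friend"),
    ("d", "a", "friend"),
    ("a", "e", "friend"),
    ("g", "e", "follow"),
]


def in_degrees(edges):
    """Count incoming edges per destination vertex."""
    return dict(Counter(dst for _src, dst, _rel in edges))


if __name__ == "__main__":
    # Vertices with no incoming edge (here "g") do not appear in the result.
    for vertex, count in sorted(in_degrees(EDGES).items()):
        print(vertex, count)
```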

Some references on Spark graph computation

Spark GraphFrames graph computation API: https://blog.csdn.net/weixin_45839604/article/details/117751806

Algorithm examples for graph computation with pyspark:

https://blog.csdn.net/weixin_39198406/article/details/104940179

Hands-on social relationship graph based on SparkGraph:

https://blog.51cto.com/u_11200224/5275069

graphframes environment errors:

https://blog.csdn.net/m0_37754282/article/details/110086095

https://blog.csdn.net/qq_42166929/article/details/105983616