pytorch学习笔记13-利用GPU训练

目录

- GPU训练方式1

-

- 方式1如何操作

- 完整代码

- 结果

- 如果电脑上没有GPU,可以使用Google的colab

- GPU训练方式2

-

- 方式2如何操作

- 完整代码

- 用Google colab的输出

GPU训练方式1

方式1如何操作

找到神经网络模型、数据(包括输入、标签等)和损失函数,调用他们的.cuda(),然后再返回就可以了。

对网络模型用cuda():

# ...

model = Model()

if torch.cuda.is_available():

model = model.cuda()

# ...

对损失函数:

# ...

loss_fn = nn.CrossEntropyLoss()

if torch.cuda.is_available():

loss_fn = loss_fn.cuda()

# ...

对数据:

训练集

# ...

for data in train_dataloader:

imgs, targets = data

if torch.cuda.is_available():

imgs = imgs.cuda()

targets = targets.cuda()

outputs = model(imgs)

# ...

测试集

# ...

with torch.no_grad():

for data in test_dataloader:

imgs, tartgets = data

if torch.cuda.is_available():

imgs = imgs.cuda()

targets = targets.cuda()

outputs = model(imgs)

# ...

设定时间,比较用两种方式的时间差:

import time

start_time = time.time()

# ......

end_time = time.time()

print(end_time - start_time)

完整代码

from torch.utils.tensorboard import SummaryWriter

import time

import torch

# from model import *

import torchvision.datasets

from torch import nn

from torch.utils.data import DataLoader

# 准备数据集

train_data = torchvision.datasets.CIFAR10(root="P20_dataset", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

test_data = torchvision.datasets.CIFAR10(root="P20_dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

writer = SummaryWriter("P27_train")

# 查看数据集大小

train_data_size = len(train_data)

test_data_size = len(test_data)

print("训练集的长度为:{}".format(train_data_size))

print("测试集的长度为:{}".format(test_data_size))

# 用dataloader加载数据集

train_dataloader = DataLoader(train_data, batch_size=64)

test_dataloader = DataLoader(test_data, batch_size=64)

# 创建网络模型

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.model = nn.Sequential(

nn.Conv2d(3, 32, 5, 1, 2), # 输入通道3,输出通道32,卷积核大小5*5,步长为1,填充2(根据公式计算)

nn.MaxPool2d(2),

nn.Conv2d(32, 32, 5, 1, 2),

nn.MaxPool2d(2),

nn.Conv2d(32, 64, 5, 1, 2),

nn.MaxPool2d(2),

nn.Flatten(), # 展平后变成64*64*4了

nn.Linear(64*4*4, 64),

nn.Linear(64, 10)

)

def forward(self, x):

x = self.model(x)

return x

model = Model()

if torch.cuda.is_available():

model = model.cuda() # 把模型转移到GPU上

# 创建损失函数

loss_fn = nn.CrossEntropyLoss() # 交叉熵,fn是function缩写

if torch.cuda.is_available():

loss_fn = loss_fn.cuda()

# 优化器

learning_rate = 1e-2

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate) # 随机梯度下降优化器

# 设置训练网络的参数

# 记录训练的次数

total_train_step = 0

# 记录测试的次数

total_test_step = 0

# 设置训练的轮数

epoch = 10

start_time = time.time()

for i in range(epoch):

print("--------------第 {} 轮训练开始---------------".format(i+1))

# 训练步骤

model.train()

for data in train_dataloader:

imgs, targets = data

if torch.cuda.is_available():

imgs = imgs.cuda()

targets = targets.cuda()

outputs = model(imgs)

loss = loss_fn(outputs, targets) # 计算实际输出与目标输出的差距

# 优化器优化模型

optimizer.zero_grad() # 梯度清零

loss.backward() # 反向传播,计算参数对损失函数的梯度

optimizer.step() # 根据梯度,对网络的参数进行调优

total_train_step += 1

# 使用item()函数取出的元素值的精度更高,所以在求损失函数时一般用item()

if total_train_step % 100 == 0:

end_time = time.time()

print(end_time - start_time)

print("训练次数: {}, Loss: {} ".format(total_train_step, loss.item()))

writer.add_scalar("train_loss", loss.item(), total_train_step)

# 测试步骤开始,每轮训练后都查看在测试集上的Loss情况

model.eval()

# 以测试集上的损失或者正确率来判断模型是否训练的好

# 验证集与测试集不一样的,验证集是在训练中用的,反正模型过拟合,测试集是在模型完全训练好后使用的

# 验证集用来调整超参数,相当于真题,测试集是考试

total_test_loss = 0

total_accuracy = 0

with torch.no_grad():

for data in test_dataloader:

imgs, tartgets = data

if torch.cuda.is_available():

imgs = imgs.cuda()

targets = targets.cuda()

outputs = model(imgs)

loss = loss_fn(outputs, targets)

total_test_loss = total_test_loss + loss.item()

accuracy = (outputs.argmax(1) == targets).sum() # 横向设为1

total_accuracy = total_accuracy + accuracy

print("整体测试集上的Loss: {}".format(total_test_loss))

print("整体测试集上的准确率:{}".format(total_accuracy/test_data_size))

writer.add_scalar("test_loss", total_test_loss, total_test_step)

writer.add_scalar("test_accuracy", total_accuracy/test_data_size, total_test_step)

total_test_step += 1

torch.save(model, "model_{}.pth".format(i)) # 保存每轮训练后的模型结构和参数

print("模型已保存")

writer.close()

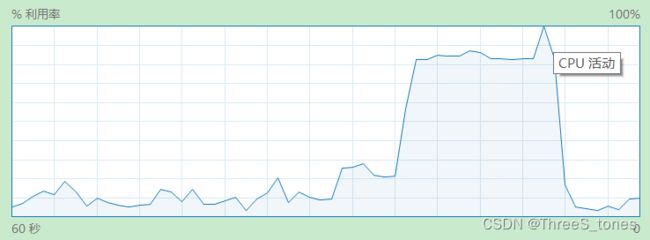

结果

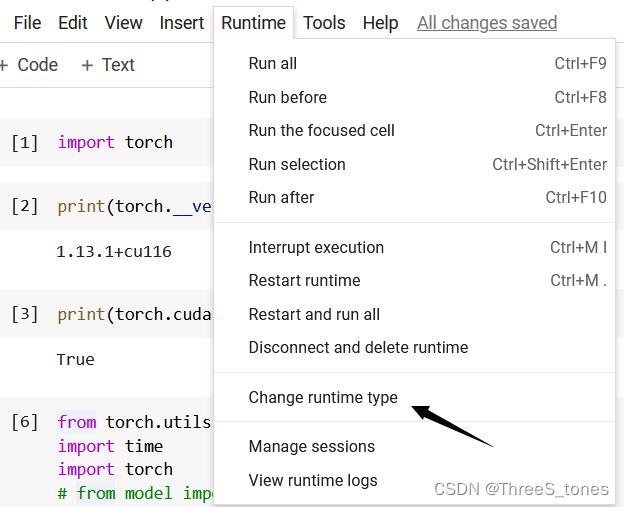

如果电脑上没有GPU,可以使用Google的colab

可以使用Google colab上的notebook。

设置使用GPU。

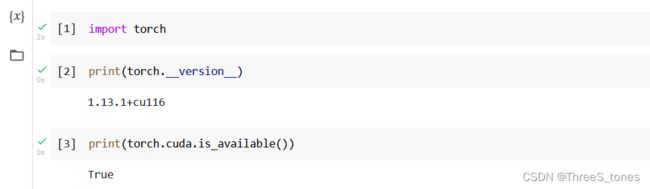

查看cuda可用:

下面就可以将上面的完整代码复制过来执行。

可以看到比我们的电脑要快很多。

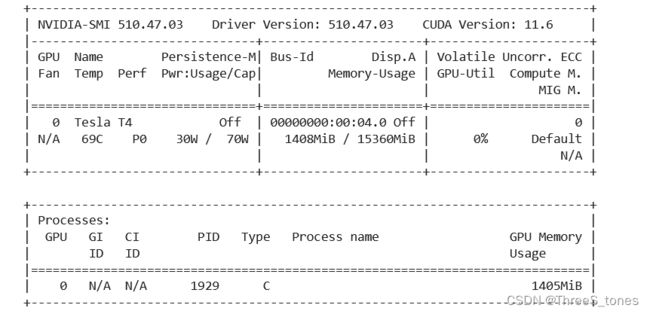

使用命令行查看显卡信息(加一个感叹号)。

!nvidia-smi

15G显卡,Tesla T4,比大多数人的显卡都好了。

据说每周可免费使用30小时。

GPU训练方式2

方式2如何操作

方式2是更加常用的训练方式。

找到神经网络模型、数据(包括输入、标签等)和损失函数,调用他们的.to(device),然后再返回就可以了(网络模型和损失函数不需要返回,返不返回都可以)。

训练之前定义训练的设备:

# 定义训练的设备

device = torch.device("cpu")

print(device)

对网络模型调用.to():

model = Model()

model = model.to(device)

对损失函数:

loss_fn = nn.CrossEntropyLoss()

loss_fn = loss_fn.to(device)

对训练集:

for data in train_dataloader:

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

outputs = model(imgs)

loss = loss_fn(outputs, targets)

对测试集:

with torch.no_grad():

for data in test_dataloader:

imgs, tartgets = data

imgs = imgs.to(device)

targets = targets.to(device)

outputs = model(imgs)

有单个显卡的话device = torch.device("cuda:0")与device = torch.device("cuda")没有差别。

有多个显卡可以指定显卡1和显卡2:device = torch.device("cuda:1")或device = torch.device("cuda:2")

为了适应各种环境,定义训练设备时更常见的写法是:

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

完整代码

from torch.utils.tensorboard import SummaryWriter

import time

import torch

# from model import *

import torchvision.datasets

from torch import nn

from torch.utils.data import DataLoader

# 准备数据集

train_data = torchvision.datasets.CIFAR10(root="P20_dataset", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

test_data = torchvision.datasets.CIFAR10(root="P20_dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

writer = SummaryWriter("P27_train")

# 定义训练的设备

device = torch.device("cpu")

print(device)

# 查看数据集大小

train_data_size = len(train_data)

test_data_size = len(test_data)

print("训练集的长度为:{}".format(train_data_size))

print("测试集的长度为:{}".format(test_data_size))

# 用dataloader加载数据集

train_dataloader = DataLoader(train_data, batch_size=64, drop_last=True)

test_dataloader = DataLoader(test_data, batch_size=64, drop_last=True)

# 创建网络模型

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.model = nn.Sequential(

nn.Conv2d(3, 32, 5, 1, 2),

nn.MaxPool2d(2),

nn.Conv2d(32, 32, 5, 1, 2),

nn.MaxPool2d(2),

nn.Conv2d(32, 64, 5, 1, 2),

nn.MaxPool2d(2),

nn.Flatten(),

nn.Linear(64*4*4, 64),

nn.Linear(64, 10)

)

def forward(self, x):

x = self.model(x)

return x

model = Model()

model = model.to(device)

# 创建损失函数

loss_fn = nn.CrossEntropyLoss()

loss_fn = loss_fn.to(device)

# 优化器

learning_rate = 1e-2

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# 设置训练网络的参数

# 记录训练的次数

total_train_step = 0

# 记录测试的次数

total_test_step = 0

# 设置训练的轮数

epoch = 10

start_time = time.time()

for i in range(epoch):

print("--------------第 {} 轮训练开始---------------".format(i+1))

# 训练步骤

model.train()

for data in train_dataloader:

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

outputs = model(imgs)

loss = loss_fn(outputs, targets)

# 优化器优化模型

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_train_step += 1

# 使用item()函数取出的元素值的精度更高,所以在求损失函数时一般用item()

if total_train_step % 100 == 0:

end_time = time.time()

print(end_time - start_time)

print("训练次数: {}, Loss: {} ".format(total_train_step, loss.item()))

writer.add_scalar("train_loss", loss.item(), total_train_step)

# 测试步骤开始

model.eval()

# 以测试集上的损失或者正确率来判断模型是否训练的好

# 验证集与测试集不一样的,验证集是在训练中用的,反正模型过拟合,测试集是在模型完全训练好后使用的

# 验证集用来调整超参数,相当于真题,测试集是考试

total_test_loss = 0

total_accuracy = 0

with torch.no_grad():

for data in test_dataloader:

imgs, tartgets = data

imgs = imgs.to(device)

targets = targets.to(device)

outputs = model(imgs)

loss = loss_fn(outputs, targets)

total_test_loss += loss.item()

accuracy = (outputs.argmax(1) == targets).sum() # 横向设为1

total_accuracy += accuracy

print("整体测试集上的Loss: {}".format(total_test_loss))

print("整体测试集上的准确率:{}".format(total_accuracy/test_data_size))

writer.add_scalar("test_loss", total_test_loss, total_test_step)

writer.add_scalar("test_accuracy", total_accuracy/test_data_size, total_test_step)

total_test_step += 1

torch.save(model, "model_{}.pth".format(i))

print("模型已保存")

writer.close()