Python GPU Programming Example (Simplest, Beginner Version)

1. First, install numba (a JIT compiler for Python that includes CUDA support)

conda install numba cudatoolkit
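After installing, it can be worth checking whether Numba actually sees a CUDA device before timing anything. The snippet below is a minimal sketch: `cuda_status` is a hypothetical helper name introduced here for illustration, and it relies only on `numba.cuda.is_available()`.

```python
def cuda_status():
    """Return a short description of whether Numba can see a CUDA GPU."""
    try:
        # Imported lazily so the check still runs when Numba is missing.
        from numba import cuda
    except ImportError:
        return "numba is not installed"
    if cuda.is_available():
        return "CUDA GPU available"
    return "numba is installed, but no usable CUDA GPU was found"


print(cuda_status())
```

If the last message appears, the @jit examples in this post still run, because they do not need a GPU.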

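2. Import numba

from numba import jit, cuda

3. Using one of my machine learning homework assignments as an example, compare the running time with and without the @jit decorator. The only change needed is to add @jit before the function definition. Note that plain @jit compiles the function to native code for the CPU; it does not move the computation to the GPU, which requires kernels written with @cuda.jit. The "_gpu" names and "with GPU" labels below therefore refer to the @jit-compiled versions.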

#%% define functions
import numpy as np
from numba import jit, cuda
from timeit import default_timer as timer

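def computeCost(X, y, theta):
    # X has shape (47, 3), theta has shape (3, 1)
    ### START CODE HERE ###
    m = X.shape[0]                          # number of training examples
    diff = np.dot(X, theta) - y             # residuals, shape (m, 1)
    cost = np.power(diff, 2).sum() / 2 / m  # half the mean squared error
    return cost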

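@jit  # compiled to native CPU code on the first call
def computeCost_gpu(X, y, theta):
    # X has shape (47, 3), theta has shape (3, 1)
    ### START CODE HERE ###
    m = X.shape[0]                          # number of training examples
    diff = np.dot(X, theta) - y             # residuals, shape (m, 1)
    cost = np.power(diff, 2).sum() / 2 / m  # half the mean squared error
    return cost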

def gradient(X, y, theta):
    ### START CODE HERE ###
    m = X.shape[0]
    # Summed (not averaged) gradient: this is m times the gradient of computeCost
    j = np.dot(X.T, (np.dot(X, theta) - y))
    return j
    ### END CODE HERE ###

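@jit  # compiled to native CPU code on the first call
def gradient_gpu(X, y, theta):
    ### START CODE HERE ###
    m = X.shape[0]
    # Summed (not averaged) gradient: this is m times the gradient of computeCost_gpu
    j = np.dot(X.T, (np.dot(X, theta) - y))
    return j
    ### END CODE HERE ###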

def batch_gradient_descent(X, y, theta, epoch = 10, lr=0.02):
    ### START CODE HERE ###
    for k in range(epoch):
        cost = computeCost(X, y, theta)  # cost before this update
        j = gradient(X, y, theta)
        theta = theta - lr*j             # gradient step
    cost = computeCost(X, y, theta)      # cost after the final update
    return theta, cost

@jit  # compiled to native CPU code on the first call
def batch_gradient_descent_gpu(X, y, theta, epoch = 10, lr=0.02):
    ### START CODE HERE ###
    for k in range(epoch):
        cost = computeCost_gpu(X, y, theta)  # cost before this update
        j = gradient_gpu(X, y, theta)
        theta = theta - lr*j                 # gradient step
    cost = computeCost_gpu(X, y, theta)      # cost after the final update
    return theta, cost
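The cost used in this post is sum((X·theta − y)²)/(2m), whose exact gradient is Xᵀ(X·theta − y)/m. The gradient functions here omit the 1/m factor, which amounts to scaling the learning rate by m. The sketch below checks the 1/m form against a central finite difference; the names `cost_fn`, `grad_fn`, `numerical_grad` and the random data are assumptions made for illustration and are not the assignment's dataset.

```python
import numpy as np


def cost_fn(X, y, theta):
    """Half the mean squared error, same formula as computeCost."""
    m = X.shape[0]
    diff = X @ theta - y
    return float((diff ** 2).sum() / (2 * m))


def grad_fn(X, y, theta):
    """Analytic gradient of cost_fn, including the 1/m factor."""
    m = X.shape[0]
    return X.T @ (X @ theta - y) / m


def numerical_grad(X, y, theta, eps=1e-6):
    """Central finite-difference approximation of the gradient."""
    g = np.zeros_like(theta)
    for i in range(theta.shape[0]):
        step = np.zeros_like(theta)
        step[i, 0] = eps
        g[i, 0] = (cost_fn(X, y, theta + step) - cost_fn(X, y, theta - step)) / (2 * eps)
    return g


# Synthetic stand-in data with the shapes used in the post: X is (47, 3).
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(47, 3))
y_demo = rng.normal(size=(47, 1))
theta_demo = rng.normal(size=(3, 1))

max_err = np.abs(grad_fn(X_demo, y_demo, theta_demo)
                 - numerical_grad(X_demo, y_demo, theta_demo)).max()
print("largest difference between analytic and numerical gradient:", max_err)
```

A very small difference indicates that the analytic formula matches the cost function.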

#%% test gpu speed
def gpu_speed(epoch):
    # X and y are the training data, assumed to be loaded earlier in the script
    lr = 0.02
    theta = np.zeros((3, 1))
    start = timer()
    theta_pred, final_cost = batch_gradient_descent(X, y, theta, epoch, lr=lr)
    #print("without GPU:", timer()-start)
    without_gpu = timer() - start  # plain NumPy version
    start = timer()
    theta_pred, final_cost = batch_gradient_descent_gpu(X, y, theta, epoch, lr=lr)
    #print("with GPU:", timer()-start)
    with_gpu = timer() - start     # @jit version; its first call also includes compile time
    return with_gpu, without_gpu

epochs = np.arange(1, 5000)
with_gpu_time = np.zeros((epochs.shape[0]))
without_gpu_time = np.zeros((epochs.shape[0]))
for kk, epoch in enumerate(epochs):
    with_gpu_time[kk], without_gpu_time[kk] = gpu_speed(epoch)
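A function decorated with @jit is compiled the first time it is called with a given set of argument types, so that first call is much slower than the ones that follow. When benchmarking, it may help to time the first call separately. The helper below is a minimal sketch using only the standard library; `time_first_and_later` and the stand-in workload `sum_of_squares` are hypothetical names, and any callable (including a @jit function) could be passed instead.

```python
from timeit import default_timer as timer


def time_first_and_later(fn, *args, repeats=5):
    """Return (time of first call, mean time of the following calls)."""
    start = timer()
    fn(*args)                      # for a @jit function this includes compilation
    first = timer() - start

    start = timer()
    for _ in range(repeats):
        fn(*args)                  # later calls reuse the compiled code
    later = (timer() - start) / repeats
    return first, later


def sum_of_squares(n):
    """Stand-in workload used only to demonstrate the helper."""
    return sum(i * i for i in range(n))


first, later = time_first_and_later(sum_of_squares, 10_000)
print(f"first call: {first:.6f}s, later calls (mean): {later:.6f}s")
```

For a plain Python function the two numbers are similar; for a @jit function the first is usually far larger.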

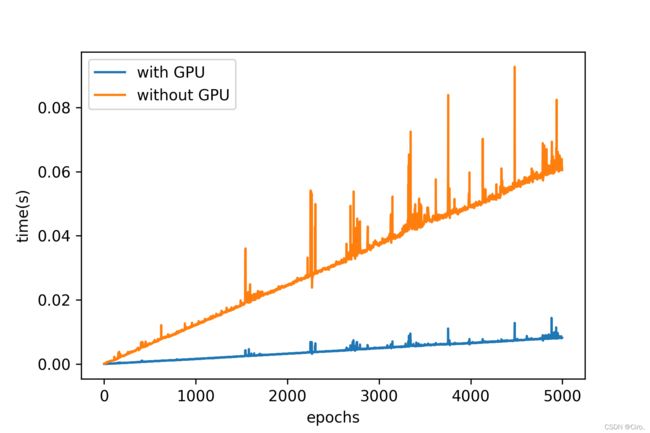

#%% gpu difference plot
import matplotlib.pyplot as plt
# Plot against the actual epoch counts so the x-axis matches its label
plt.plot(epochs, with_gpu_time, label = 'with GPU')
plt.plot(epochs, without_gpu_time, label = 'without GPU')
plt.xlabel('epochs')
plt.ylabel('time(s)')
plt.legend()
plt.savefig('gpu_difference', dpi = 300)