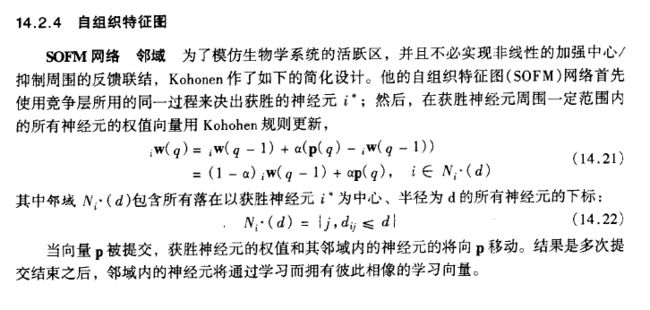

自组织特征图SOFM网络的实现

这里直接给出Qt代码段和演示结果。

理论部分请移步另一位博主的 自组织特征图(SOFM)详解_datamonday的博客-CSDN博客_sofm算法

算法基于 Martin H. Hagan 的《神经网络设计》 第十四章竞争网络实现。其中按照原书,使用相关性(Wp)而不是距离(||W-P||)作为获胜判定。以后将在学习向量量化(LVQ)网络中使用距离

算法改进包括:

引入逐渐变小的学习步长

引入墨西哥草帽函数实现“加强中心/抑制周围”

参考代码 AANN_sofm::initNeighborMatrix_MexicoStrawHat(int N)

引入良心变数(conscience),并限制良心变数使用的频率

使用胜出训练参数和需要调整的W(i)之间的距离来决定学习步长a,否则在实践中会发现向量很难收敛在训练数据集形成的曲面上。

(auto a = (P.transpose() - m_layer.W.row(maxIndex)).squaredNorm()/(0.01+m_layer.W.row(maxIndex).squaredNorm());)

AANN_sofm.h 文件

#ifndef AANN_SOFM_H

#define AANN_SOFM_H

#include

#include

#include

#include "QEigenExt.h"

using namespace Eigen;

#include "AANN_instar.h"

/**

*

* 自联想神经网络(Auto-Associative Neural Network , 缩写为AANN)

* 是1987年Ballard提出的,其网络原型是一种具有对称拓扑结构的五层前馈传递网络,

* AANN 应用到数据检验问题时具有比较明显的物理意义。是BP神经网络的一种特殊情形。

* 其特点是有对称拓扑结构,即输出量等于输入量。

*/

class AANN_sofm

{

public:

AANN_sofm();

public:

// int GetLayerNum() const;

// bool AppendLayer(AANN_LAYER_PARAM_F layer);

virtual bool train( const Eigen::VectorXf &P, int N );

virtual bool calc( const Eigen::VectorXf &P, Eigen::VectorXf *A = NULL );

float getAlpha( ) const;

float getGamma( ) const;

long getRound( ) const;

void setAlpha( const float alpha);

void setGamma( const float gamma);

bool setNeighborMatrix( const MatrixXf neighbors );

bool initNeighborMatrix( int N, int type );

bool initNeighborMatrix_MexicoStrawHat( int N );

bool initNeighborMatrix_Sombrero( int N );

const MatrixXf getWMatrix() const;

private:

void init();

private:

// AANN_LAYER_LIST_F m_layerList;

AANN_LAYER_PARAM_F m_layer;

float m_alpha;

float m_gamma;

long m_round;

};

#endif // AANN_SOFM_H

AANN_sofm.cpp文件

#include "AANN_sofm.h"

#include "activation.h"

#include

#include

#include

AANN_sofm::AANN_sofm()

{

m_alpha = 0.5f;

m_gamma = 0.5f;

init();

}

void AANN_sofm::init()

{

m_layer.maxB = -1;

m_layer.func = hardlim;

m_round = 0;

}

float AANN_sofm::getAlpha( ) const

{

return m_alpha;

}

float AANN_sofm::getGamma( ) const

{

return m_gamma;

}

long AANN_sofm::getRound( ) const

{

return m_round;

}

void AANN_sofm::setAlpha( const float alpha)

{

m_alpha = alpha;

}

void AANN_sofm::setGamma( const float gamma)

{

m_gamma = gamma;

}

bool AANN_sofm::setNeighborMatrix( const MatrixXf neighbors )

{

if( !neighbors.size() || neighbors.rows() != neighbors.cols())

{

return false;

}

m_layer.Neighbors = neighbors;

qDebug() << QString( " --------------- neighbors %1 x %2 ---------------" )

.arg(neighbors.rows()).arg(neighbors.cols());

for(int row=0; row 8 9

* v

* 10 11 12 13 14

* 15 16 17 18 19

* 20 21 22 23 24

*

* Then, neighbor Matrix will be

* 0 1 2 3 4 5 6 7 8 9 10 11 12 ..... 20 21 22 23 24

* +----+----+----+----+----+----+----+----+----+----+----+----+----+----+----+----+----+----+----+----+

* 0 | 1 1 0 0 0 1 0 ..... 0 0 0 0 0

* 1 | 1 1 1 0 0 0 1 0 ..... 0 0 0 0 0

* 2 | 0 1 1 1 0 0 0 1 0 ..... 0 0 0 0 0

* 3 | 0 0 1 1 1 0 0 0 1 0 ..... 0 0 0 0 0

* 4 | 0 0 0 1 1 0 0 0 0 1 0 ..... 0 0 0 0 0

* 5 | 1 0 0 0 0 1 1 0 0 0 1 0 ..... 0 0 0 0 0

* 6 | 0 1 0 0 0 1 1 1 0 0 0 1 0 ..... 0 0 0 0 0

* 7 | 0 1 0 0 0 1

* 8 | 0 1 0 0 0

* 9 | 0 1 0 0

* 10 | 0 1 0

* . | 0 1

* . |

* . |

* . |

* . |

* 22 | 0 0 0 0 0 0 0 0 0 0 0 0 0 ... 0 1 1 1 0

* 23 | 0 0 0 0 0 0 0 0 0 0 0 0 0 ... 0 0 1 1 1

* 24 | 0 0 0 0 0 0 0 0 0 0 0 0 0 ... 0 0 0 1 1

*/

bool AANN_sofm::initNeighborMatrix( int N, int type = 0 )

{

Q_UNUSED( type );

if( N <= 0 || N > 10000 )

{

return false;

}

MatrixXf neighbor = MatrixXf::Identity( N*N, N*N ) - MatrixXf::Ones( N*N, N*N )/((N+4)*(N+4));

for( int row = 0; row < N*N; row++)

{

int x = row%N;

int y = row/N;

if( (y+N-1)%N < y )

{

neighbor( ((y+N-1)%N)*N+x, row ) = 1.f/((N+1)*(N+1));

}

if( (y+1)%N >y )

{

neighbor( ((y+1)%N)*N+x, row ) = 1.f/((N+1)*(N+1));

}

if( (x+N-1)%N < x )

{

neighbor( row, y*N+(x+N-1)%N ) = 1.f/((N+1)*(N+1));

}

if( (x+1)%N > x ) {

neighbor( row, y*N+(x+1)%N ) = 1.f/((N+1)*(N+1));

}

}

return setNeighborMatrix( neighbor );

}

bool AANN_sofm::initNeighborMatrix_MexicoStrawHat( int N )

{

if( N <= 0 || N > 10000 )

{

return false;

}

MatrixXf neighbor = MatrixXf::Identity( N*N, N*N );

for( int row = 0; row < N*N; row++)

for( int col = row+1; col < N*N; col++)

{

int dx = row%N - col%N;

int dy = row/N - col/N;

float d1 = 1+dx*dx+dy*dy;

float d2 = (dx*dx+dy*dy)*d1*d1;

neighbor( row, col ) = neighbor( col, row ) = (1.0f/d2 - 0.07f)/d1;

}

return setNeighborMatrix( neighbor );

}

/**

* @brief AANN_sofm::initNeighborMatrix_Sombrero

* refer to https://baike.baidu.com/item/Sombrero%20function/16550687

* @return

*/

bool AANN_sofm::initNeighborMatrix_Sombrero( int N )

{

if( N <= 0 || N > 10000 )

{

return false;

}

MatrixXf neighbor = 0.98*MatrixXf::Identity( N*N, N*N );

for( int row = 0; row < N*N; row++)

for( int col = row+1; col < N*N; col++)

{

int dx = row%N - col%N;

int dy = row/N - col/N;

float d2 = dx*dx+dy*dy;

float d1 = sqrt( d2 );

float d4 = exp(2*d2);

// float cc = cos( (3.14f/2-0.1)*d1 );

// float dd = cc/d4;

neighbor( row, col ) = neighbor( col, row ) = cos( (3.14159/2-0.01)*d1 )/d4;

}

return setNeighborMatrix( neighbor );

}

const MatrixXf AANN_sofm::getWMatrix() const

{

return m_layer.W;

}

/**

* @brief AANN_sofm::train

* @param P measured vector : 可度量特征信息:如形状,味道,温度,颜色,重量等

* @param T 感知的结果,或期望的结果

* @return

*/

bool AANN_sofm::train( const Eigen::VectorXf &P, int S )

{

const int R = P.rows();

/* return false if input Dim is different */

if( S <= 0 )

{

return false;

}

/* return false if input Dim is different */

if( ( m_layer.W.cols() > 0 ) && ( m_layer.W.cols() != R ) )

{

return false;

}

/* return false if output Dim is different */

if( ( m_layer.W.rows() > 0 ) && ( m_layer.W.rows() != S ) )

{

return false;

}

if( m_layer.Neighbors.size() && (m_layer.Neighbors.rows() != S || m_layer.Neighbors.cols() != S ))

{

return false;

}

if( S != m_layer.W.rows() || R != m_layer.W.cols() )

{

srand( time(NULL) );

m_layer.W = MatrixXf::Random( S, R );

m_layer.Conscience = VectorXf::Ones( S );

}

int maxIndex;

m_layer.N = (m_layer.W * P).array() + R;

/**

* @brief round_mask

* 随着训练次数增长,应用良心的间隔越来越大,但总是有机会使用

* M = 3 时

* m_round : 0~7 8~15 16~31 32~63 ... 2^(n+3) ~ 2^(n+3)-1

* N : 0 1 2 4 ... 2^n

* round_mask : 0x...FFFFF 1 3 7 ... (2^(n+1))-1

* apply conscience : 8 x 8 x 8 x 8 x ... 8 x

* M = 6 时

* m_round : 0~63 64~127 128~255 256~511 ... 2^(n+6) ~ 2^(n+6)-1

* N : 0 1 2 4 ... 2^n

* round_mask : 0x...FFFFF 1 3 7 ... (2^(n+1))-1

* apply conscience : 64 x 64 x 64 x 64 x ... 64 x

*

* 应用良心的频率随 M 的增加而呈指数增加,测试中 M=3 能得到比较好的性能

*/

static int round_mask = 1;

int N = m_round >> 3;

if( 0 == ( N&(N-1) ) )

{

round_mask = (N<<1)-1; // 0,1,2,4,8,16,...

}

if( 0 == ( m_round & round_mask ) )

{

(m_layer.N.array() * m_layer.Conscience.array()).maxCoeff(&maxIndex);

} else {

m_layer.N.maxCoeff(&maxIndex);

}

m_layer.A = VectorXf::Zero(S);

m_layer.A[maxIndex] = 1;

m_layer.Conscience = m_layer.Conscience.array()/(1+m_layer.A.array()).array();

m_layer.Conscience.normalize();

VectorXf vecA;

if( m_layer.Neighbors.rows() == S )

{

// vecA = ( m_layer.Neighbors * m_layer.A ).unaryExpr(std::ref(satlin));

vecA = (m_layer.Neighbors * m_layer.A).normalized();

} else {

vecA = m_layer.A;

}

/*

* delta W = W(q) - W(q-1) = alpha * A(q) * P(q) - gamma * A(q).diagonal * W(q-1)

*/

auto a = (P.transpose() - m_layer.W.row(maxIndex)).squaredNorm()/(0.01+m_layer.W.row(maxIndex).squaredNorm());

a = fmin( 1.0, fmax( a, m_alpha ));

m_layer.deltaW = a * vecA * P.transpose() - a * vecA.asDiagonal() * m_layer.W;

m_layer.W = (m_layer.W + m_layer.deltaW);//.rowwise().normalized();

m_round++;

return true;

}

/**

* @brief AANN_sofm::calc

* @param P measured vector : 可度量特征信息:如形状,味道,温度,颜色,重量等

* @return

*/

bool AANN_sofm::calc( const Eigen::VectorXf &P, Eigen::VectorXf *A )

{

AANN_LAYER_PARAM_F layer = m_layer;

Eigen::VectorXf e;

const int R = P.rows();

/* return false if input Dim is different */

if( ( m_layer.W.cols() <= 0 ) || ( m_layer.W.cols() != R ) )

{

qWarning() << QString( __FUNCTION__ " : Error! input dim is different : expect %1, but input data is %2 " )

.arg(m_layer.W.cols()).arg( R );

return false;

}

qDebug() << __FUNCTION__ " --- CALC -----------------------";

qDebug() << __FUNCTION__ " P = " << P.eval() ;

m_layer.A = (m_layer.W * P).unaryExpr(std::ref(m_layer.func));

qDebug() << __FUNCTION__ " A = " << m_layer.A;

if( A ) { *A = m_layer.A; }

return true;

}

线程执行代码段:

void DataWorker::on_start()

{

for(int k = 0; !m_bUserQuit && (k < 100000); k++ ) // while( false == m_bUserQuit )

{

updateNnData();

QThread::msleep(10);

}

}

#define N 3

void DataWorker::reset()

{

m_sofm.initNeighborMatrix_Sombrero(N);

}

void DataWorker::updateNnData( )

{

QMutexLocker locker( &m_nnLocker );

float r = sqrt(m_sofm.getRound()) ;

float alpha = (r+500.0f)/( 100*r+1000.0f);

m_sofm.setAlpha( alpha );

m_sofm.setGamma( alpha );

#define PI 3.14159265358979

{

float a = - acos(sqrt( (rand() % 257) / 256.0001)); // -3.14 * (rand() % 256) / 512.0;

float b = PI * (rand() % 12345) / 1000.0;

VectorXf P(3);

P << cos(a)*sin(b), sin(a), cos(a)*cos(b);

if(m_trainSetModel && ( m_sofm.getRound() < 10000) )

{

// WData d(cos(a)*sin(b), cos(a)*cos(b), sin(a));

m_trainSetModel->append( WData(P[0],P[1],P[2]));

m_trainSetModel->dataChanged( m_trainSetModel->index(0,0), m_trainSetModel->index(-1,-1));

}

m_sofm.train( P, N*N );

}

m_dataModel->update(m_sofm.getWMatrix());

}以下是36点在半球形中的演示结果

以下是9点在半球形中的演示结果。看得出,用距离来决定学习步长在这时造成了结果的发散

演示代码