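Number-guessing game code: import random def pythonit(): a = random.randint(1, 100) n = int(input("Enter your guess: ")) while n != a: if n > a: print("Sorry, too high") n = int(input("Enter another guess: ")) elif n … a: If the player's guess n is greater than the random number a, print "Sorry, too high" and prompt the player to guess again. elif n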
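The snippet above is cut off, so here is a minimal sketch of the same guess-and-hint loop, not the author's full code. The names `judge`, `play`, `prompt_guesses`, and `main`, and the "too low" and "Correct!" messages, are assumptions added for illustration.

```python
import random
from typing import Iterable, Iterator


def judge(guess: int, secret: int) -> str:
    """Return the hint for a single guess (wording is illustrative)."""
    if guess > secret:
        return "Sorry, too high"
    if guess < secret:
        return "Sorry, too low"
    return "Correct!"


def play(secret: int, guesses: Iterable[int]) -> Iterator[str]:
    """Yield one hint per guess and stop once the secret is guessed."""
    for guess in guesses:
        yield judge(guess, secret)
        if guess == secret:
            return


def prompt_guesses() -> Iterator[int]:
    """Yield integers typed by the player, one per prompt."""
    while True:
        yield int(input("Enter your guess: "))


def main() -> None:
    """Interactive entry point: pick 1..100 and print a hint per guess."""
    secret = random.randint(1, 100)
    for hint in play(secret, prompt_guesses()):
        print(hint)
```

Calling `main()` starts an interactive round. Keeping the comparison in `judge` lets the loop be exercised with a fixed list of guesses instead of keyboard input.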

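Detailed Approach to Writing a Computer Trojan

小熊同学哦

php, programming languages, trojan, trojan approach

Introduction: A computer Trojan is a type of malware that tricks users in order to gain control of a system, giving attackers a backdoor into it. Although Trojans pose serious security risks to users and systems, understanding how they work and how they are written helps raise awareness and build more robust network security. This post examines in depth how computer Trojans are written, and how to make the challenge more complex, in order to improve readers' understanding of Trojans and their ability to counter them. Basic principles of computer Trojans: A computer Tro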

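Batch-converting TIFF to PNG

诺有缸的高飞鸟

opencv, image processing, python

Contents: Preface, Code, End. Preface: 1. What this post covers: batch-converting TIFF to PNG. 2. Platform/environment: opencv, python. 3. When reposting, please credit the source: https://blog.csdn.net/qq_41102371/article/details/132975023 Code: import numpy as np import cv2 import os def findAllFile(base): file_list = [] for root, ds, fs in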
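The snippet stops inside `findAllFile`, so the following is only a sketch of how such a batch conversion might look, not the author's code. The helper names `find_tiff_files`, `png_path_for`, and `convert_all` are hypothetical, as is the choice to write each PNG next to its source file. `cv2` is imported inside `convert_all` so the path helpers run without OpenCV installed.

```python
import os
from typing import Iterator


def find_tiff_files(base: str) -> Iterator[str]:
    """Walk `base` recursively and yield paths ending in .tif or .tiff."""
    for root, _dirs, files in os.walk(base):
        for name in sorted(files):
            if name.lower().endswith((".tif", ".tiff")):
                yield os.path.join(root, name)


def png_path_for(tiff_path: str) -> str:
    """Map 'dir/scan.tiff' to 'dir/scan.png' (same folder, new extension)."""
    stem, _ext = os.path.splitext(tiff_path)
    return stem + ".png"


def convert_all(base: str) -> int:
    """Convert every TIFF under `base`; return how many PNGs were written."""
    import cv2  # lazy import: only needed for the actual conversion

    converted = 0
    for tiff_path in find_tiff_files(base):
        # IMREAD_UNCHANGED keeps bit depth and alpha channel as stored.
        image = cv2.imread(tiff_path, cv2.IMREAD_UNCHANGED)
        if image is None:  # unreadable or unsupported file
            continue
        if cv2.imwrite(png_path_for(tiff_path), image):
            converted += 1
    return converted
```

`convert_all("some/folder")` would process a directory tree; the folder name here is a placeholder.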

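vue + el-table: switching between input boxes in an editable table with the up/down arrow keys

以对_

vue study notes, vue.js, javascript, front end

Use the up/down keys to move within the completed-quantity column --> // keyboard event handler show(ev, index) { let newIndex; let inputAll = document.querySelectorAll('.table_input input'); // up = 38 if (ev.keyCode == 38) { if (index == 0) { // on the first row, wrap around to the last one newIndex = inputAll.length - 1 } else i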

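Introduction to Linux CTF Reverse Engineering

蚁景网络安全

linux, operations, CTF

1. The ELF format. Let's start with the ELF file header; for full details, see the ELF man page. More on ELF: e_shoff: the file offset, in bytes, of the section header table. If the file has no section header table, this member is zero. sh_offset: the distance of the section from the start of the file +-------------------+ | ELF header |---+ +--------->+-------------------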
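To make the `e_shoff` field concrete, here is a small sketch that unpacks the fixed 64-byte header of a 64-bit little-endian ELF file with the standard `struct` module. The function name `read_elf64_header` and the decision to reject other ELF classes are assumptions for illustration; the field order follows the ELF64 header layout.

```python
import struct

# e_ident[16], e_type, e_machine, e_version, e_entry, e_phoff, e_shoff,
# e_flags, e_ehsize, e_phentsize, e_phnum, e_shentsize, e_shnum, e_shstrndx
ELF64_HEADER = struct.Struct("<16sHHIQQQIHHHHHH")


def read_elf64_header(data: bytes) -> dict:
    """Parse the ELF64 little-endian file header from the start of `data`."""
    if len(data) < ELF64_HEADER.size:
        raise ValueError("too short to contain an ELF64 header")
    fields = ELF64_HEADER.unpack_from(data)
    ident = fields[0]
    if ident[:4] != b"\x7fELF":
        raise ValueError("missing ELF magic")
    if ident[4] != 2 or ident[5] != 1:
        raise ValueError("this sketch handles only 64-bit little-endian ELF")
    return {
        "e_entry": fields[4],
        "e_phoff": fields[5],
        "e_shoff": fields[6],      # 0 means there is no section header table
        "e_shentsize": fields[11],
        "e_shnum": fields[12],
        "e_shstrndx": fields[13],
    }
```

Passing the first 64 bytes of a binary opened in `"rb"` mode gives a dictionary comparable to what `readelf -h` reports for those fields.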

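A Brief Look at MapReduce

Android路上的人

Hadoop, distributed computing, mapreduce, distributed frameworks, hadoop

Starting today, I will be learning another technology: Hadoop distributed computing, which is very popular right now. Many companies at home and abroad have adopted the platform as their businesses have grown: BAT in China, for example, while companies abroad are even further ahead, so I won't list them one by one. Hadoop is an Apache open-source project with a great many subprojects, such as HDFS, HBase, Hive, and Pig; learning all of Hadoop thoroughly is far more than one person can manage alone.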

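[2023] Cloud Computing BRICS Warm-up Exercise 6

geekgold

cloud computing, servers, networking, kubernetes, containers

Set 1 [Task 1] Private cloud service setup [10 points] [Question 1] Basic environment configuration [0.5 points] Using the provided username and password, log in to the provided OpenStack private cloud platform. Under the current tenant, use the CentOS 7.9 image to create two cloud hosts with the 4vCPU/12G/100G_50G flavor. The current tenant has one network interface by default; create a second one yourself and attach it to the controller and compute nodes (the second interface's subnet is 10.10.X.0/24, where X is the workstation number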

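HarmonyOS Development in Practice (Beta 5.0): Auto-rotating Trending Search Terms in a Search Box

让开,我要吃人了

OpenHarmony, HarmonyOS, HarmonyOS development, Huawei, mobile development, front end, programming languages

Introduction: This example shows how to use the TextInput and Swiper components to auto-rotate trending search terms inside a search box. Preview. Usage: the trending terms in the search box at the top of the page rotate automatically and are hidden while the search box is being edited. Implementation approach: use TextInput to implement the search box TextInput({te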

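Implementing string trim

JamesSawyer

if (typeof String.prototype.trim !== 'function') { String.prototype.trim = function () { // What this regex means: // '^' and '$' mark the start and the end // '^\s*' matches any leading whitespace // '\s*$' matches any trailing whitespace // '\S*' matches any run of non-whitespace characters // '$1' refers to '(\S*(\s*\S*)*)' return this.replace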
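The same idea can be sketched in Python. Note that this version swaps the capture-group regex described above for a simpler alternation that deletes leading and trailing whitespace directly. The function name `trim` mirrors the JavaScript method; everything else is an assumption for illustration.

```python
import re

# Either a run of whitespace at the very start, or one at the very end.
_EDGE_WHITESPACE = re.compile(r"^\s+|\s+$")


def trim(text: str) -> str:
    """Remove leading and trailing whitespace, leaving inner spaces alone."""
    return _EDGE_WHITESPACE.sub("", text)
```

In everyday Python code `str.strip()` does the same job; the regex form is shown only because it parallels the polyfill.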

uniapp: using the built-in map picker plugin to choose an address and mark it on the map

神夜大侠

Uniapp, vue.js, uniapp

The code is as follows: page { background: #F4F5F6; } ::-webkit-scrollbar { width: 0; height: 0; color: transparent; } page { height: 100%; width: 100%; font-size: 24rpx; } image, view, input, textarea, label, text, na

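MySQL Tutorial, from Beginner to Expert: TOP and the MySQL LIMIT Clause (15)

知识分享小能手

big data, databases, MySQL, mysql study, oracle, programming languages, adb

1. TOP and the MySQL LIMIT clause. In SQL, different database systems limit the number of rows a query returns in different ways. The TOP keyword is used mainly in SQL Server and Access, while the LIMIT clause is used mainly in MySQL, PostgreSQL (via LIMIT/OFFSET syntax), SQLite, and similar databases. The syntax, statements, and examples for each are described below. 1.1 The TOP clause (SQL Server and Access) 1.1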
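As a quick illustration of LIMIT/OFFSET, here is a sketch that runs against an in-memory SQLite database through Python's `sqlite3` module. The `scores` table, its rows, and the function name `top_scores` are made up for the example. In SQL Server the first query would be written with `SELECT TOP 2 ...` instead.

```python
import sqlite3


def top_scores(limit: int, offset: int = 0) -> list:
    """Return `limit` rows of the hypothetical `scores` table, best first."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE scores (name TEXT, score INTEGER)")
        conn.executemany(
            "INSERT INTO scores VALUES (?, ?)",
            [("ann", 90), ("bob", 75), ("cat", 82), ("dan", 60)],
        )
        # ORDER BY makes the cut deterministic; LIMIT alone gives no order.
        rows = conn.execute(
            "SELECT name, score FROM scores "
            "ORDER BY score DESC LIMIT ? OFFSET ?",
            (limit, offset),
        ).fetchall()
    finally:
        conn.close()
    return rows
```

`top_scores(2)` returns the two highest scores, and `top_scores(2, 2)` returns the next two, which is the usual pagination pattern.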

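metaRTC/webRTC QoS: Approaches and Practice

metaRTC

metaRTC, solutions, webrtc, qos

Overview: Quality of service (QoS) covers the techniques used to improve network communication quality, which has to address two problems. Network problems: packet loss, delay, reordering, and jitter over UDP, unstable networks, and weak networks. Data-volume problems: sending more data than the bandwidth can carry, and sending smoothly. Congestion control supplies the data that the other techniques build on; loss recovery addresses packet loss; reordering and jitter resistance addresses network reordering and jitter; flow control addresses smooth sending, data exceeding the bandwidth, and delay. Congestion control (Congest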

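metaRTC 5.0 API Programming Guide (1)

metaRTC

metaRTC, c++, C, webrtc

Overview: The API was refactored in metaRTC 5.0; this article introduces the webrtc transport call flow with examples. metaRTC 5.0 provides two interfaces, C++ and pure C. Pure C interface YangPeerConnection, header file: include/yangrtc/YangPeerConnection.h typedef struct { void* conn; YangAVInfo* avinfo; YangStreamConfig streamco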

LeetCode 673. Number of Longest Increasing Subsequence (Java version; Medium)

littlehaes

strings, dynamic programming, algorithms, leetcode, data structures

welcome to my blog LeetCode 673. Number of Longest Increasing Subsequence (Java version; Medium) Problem description: Given an unsorted array of integers, find the number of longest increasing subsequence. Example 1: Input: [1,3,5,4,7] Output: 2 Explanatio
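The post's Java solution is not included in the snippet. As a sketch of one standard approach, here is an O(n²) dynamic program in Python that tracks, for each index, the length of the longest increasing subsequence ending there and how many such subsequences exist. The function name `find_number_of_lis` is an assumption.

```python
from typing import List


def find_number_of_lis(nums: List[int]) -> int:
    """Count the longest strictly increasing subsequences of `nums`."""
    n = len(nums)
    if n == 0:
        return 0
    length = [1] * n  # length[i]: longest increasing subsequence ending at i
    count = [1] * n   # count[i]: how many subsequences reach length[i]
    for i in range(n):
        for j in range(i):
            if nums[j] < nums[i]:
                if length[j] + 1 > length[i]:
                    # Found a strictly longer chain through j.
                    length[i] = length[j] + 1
                    count[i] = count[j]
                elif length[j] + 1 == length[i]:
                    # Another way to reach the same best length.
                    count[i] += count[j]
    longest = max(length)
    return sum(c for l, c in zip(length, count) if l == longest)
```

For the example input `[1, 3, 5, 4, 7]` the two longest subsequences are `[1, 3, 4, 7]` and `[1, 3, 5, 7]`, so the function returns 2.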

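Installing Sublime Text 3 on Linux

hhyiyuanyu

Python study, linux, sublimetext

Method/steps: 1. Open the official site http://www.sublimetext.com/3 and choose the 64-bit download. 2. Run wget https://download.sublimetext.com/sublime_text_3_build_3126_x64.tar.bz2 to download it. 3. Once the download finishes, extract it by running tar -xvvf sublime_text_3_build_3126_x64.tar.bz2. 4. After extraction, move it to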

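[LLM Application Development: Building an AI Agent Hands-on] Round One Action: Tool-executed Search

AI大模型应用之禅

computational science, neural computing, deep learning, neural networks, big data, artificial intelligence, large language models, AI, AGI, LLM, Java, Python, architecture design, Agent, RPA

Author: 禅与计算机程序设计艺术 / Zen and the Art of Computer Programming 1. Background 1.1 Origin of the problem. With the rapid development of artificial intelligence, large-model application development has become a popular research direction. AI Agents, an important branch of artificial intelligence, aim to simulate intelligent human behavior and to make decisions and act autonomously. In building an AI Agent, tool-executed search is crucial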

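bat + ffmpeg: batch-processing and batch-transcoding images

张雨zy

audio/video, ffmpeg

Type directly into cmd: // batch-transcode files for %a in ("*.png") do ffmpeg -i "%a" -fs 1024k "%~na.webp" // delete all png files del *.png. @echo off turns off echoing for every command that follows, including the command itself, whereas echo off turns off echoing for all other commands but not for itself; the @ suppresses the echo of the single command that immediately follows it. Full script: when the command goes into a script, an extra % is needed, for example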

2021-06-07 Do What You Are Meant To Do

春生阁

Don't give up on trying to find balance in your life. Stick to your priorities. Remember what's most important to you and do everything you can to put yourself in a position where you can focus on those priorities, rather than being pulled by t

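Building KVM High Availability with KVM + GFS Distributed Storage

henan程序媛

distributed systems, GFS, high availability, KVM

I. Case analysis 1.1 Case overview. This chapter's case combines the KVM and GlusterFS techniques from earlier chapters to achieve KVM high availability. A GlusterFS distributed replicated volume is used to distribute and replicate the KVM virtual machine files. Distributed replicated volumes are meant for situations that need redundancy: a file is stored on two or more nodes, so that when the data on one node is lost or damaged, KVM can still find the virtual machine files stored on another node through the volume and keep the virtual machine running. Once the node is repaired, Glu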

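Deploying MySQL on Kubernetes with Persistent Data

沫殇-MS

Kubernetes, MySQL, databases, containers

I. Install and configure the NFS server 1. Install nfs-kernel-server: sudo apt -y install nfs-kernel-server 2. Create the shared directory on the server # list information about all available block devices lsblk # format the disk sudo mkfs -t ext4 /dev/sdb # create a directory: sudo mkdir -p /data/nfs/mysql # change the directory's ownership: sudo chown -R nobody:nogroup /data/nfs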

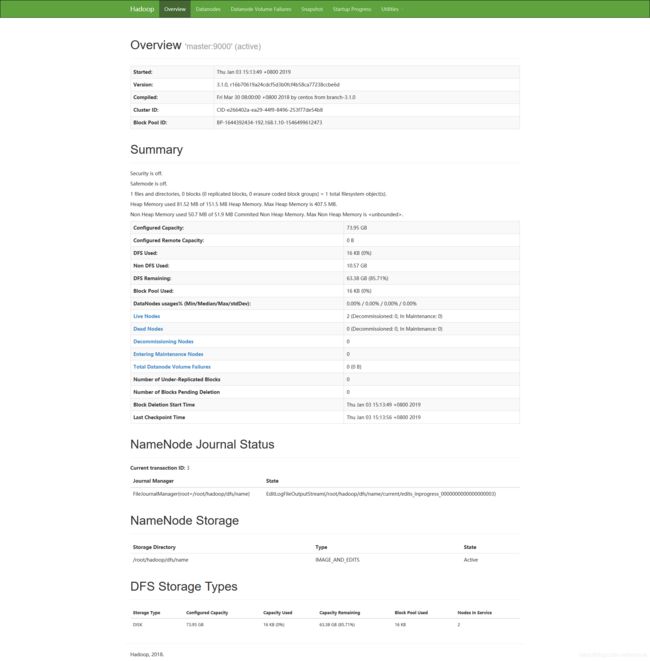

Hadoop

傲雪凌霜,松柏长青

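back end, big data, hadoop, distributed systems

Apache Hadoop is an open-source distributed computing framework used mainly to process massive datasets. It offers high scalability, fault tolerance, and efficient distributed storage and computation. The Hadoop core consists of four main modules: HDFS (distributed file system), MapReduce (distributed computing framework), YARN (resource management), and Hadoop Common (shared utilities and libraries). 1. HDFS (Hadoop Distributed File System) HDFS is the Hadoop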

TCP concurrency with threads and processes

@莫福瑞

algorithms

TCP multithreaded concurrency #include #define SERPORT 8888 #define SERIP "192.168.0.118" #define BACKLOG 20 typedef struct { int newfd; struct sockaddr_in cin; } BMH; void* fun1(void* sss) { int newfd = accept((BMH*)sss)->newfd; struct sockaddr_in cin

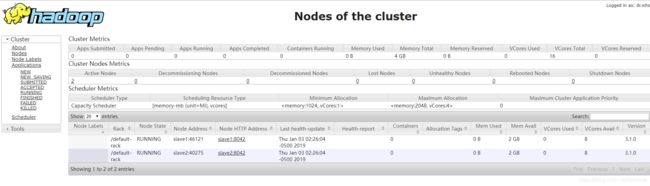

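Hadoop Architecture

henan程序媛

hadoop, big data, distributed systems

I. Case analysis 1.1 Case overview. We have entered the era of big data: internet services with tens of thousands of users generate huge numbers of interactions at every moment, and the volume of data to process is simply too large for traditional database technology and similar means to meet the requirements for timely, effective processing. HDFS emerged in response, and it has many advantages for big data storage and computation. 1.2 Prerequisite knowledge 1. What is big data? Big data refers to large collections of data that cannot be captured, managed, and processed with conventional software tools within a given time frame,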

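The Various SQL Join Queries

xieke90

UNION ALL, UNION, outer join, inner join, JOIN

I. Inner join

Concept: an inner join uses a comparison operator to match rows in two tables based on the values of the columns the tables share.

Inner join (join or inner join)

SQL syntax:

select * from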
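The SQL statement above is cut off, so as a small worked example of the concept, here is an inner join run against an in-memory SQLite database from Python. The tables `students` and `grades`, their rows, and the function name `inner_join_demo` are invented for illustration.

```python
import sqlite3


def inner_join_demo() -> list:
    """Join two hypothetical tables on their shared student id."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(
            """
            CREATE TABLE students (id INTEGER, name TEXT);
            CREATE TABLE grades (student_id INTEGER, grade INTEGER);
            INSERT INTO students VALUES (1, 'ann'), (2, 'bob'), (3, 'cat');
            INSERT INTO grades VALUES (1, 90), (2, 75), (4, 60);
            """
        )
        # Only ids present in BOTH tables survive an inner join.
        return conn.execute(
            "SELECT s.name, g.grade "
            "FROM students AS s "
            "INNER JOIN grades AS g ON s.id = g.student_id "
            "ORDER BY s.id"
        ).fetchall()
    finally:
        conn.close()
```

Student 3 has no grade and grade row 4 has no student, so neither appears in the result; an outer join would keep one side or the other.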

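Thinking in Java: Reusing Classes

百合不是茶

java, inheritance, delegation, composition, final, classes

Reusing classes: from the title alone I had no idea what this meant, and the translation of Thinking in Java is rather hard to follow, so it is only now that I am reading this remarkable book of the software world

1. Composition syntax: simply place a reference to an object inside the new class

Code: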

package com.wj.reuse;

/**

*

* @author Administrator 组

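[Open Source and Ecosystems] The Ecosystem for Domestic CPUs

comsci

cpu

Computing has to start with the kids... and what kids love most is playing games....

For domestically made CPUs to form their own ecosystem and supply chain in the domestic market, the state and companies cannot forget games, a very critical link....

Invest some funding and resources, manpower and policy, and let gam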

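JVM Memory Regions Explained: Eden Space, Survivor Space, Tenured Gen, Perm Gen

商人shang

jvm, memory

The JVM's memory regions fall into two broad categories: heap and non-heap. The heap is divided into Eden Space, Survivor Space, and Tenured Gen (the old generation). The non-heap area is divided into Code Cache, Perm Gen (the permanent generation), JVM Stack (the Java virtual machine stack), and Local Method Stack (the native method stack).

The HotSpot VM's GC algorithms use generational collec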

Launching QQ from a Web Page

oloz

qq

<A href="tencent://message/?uin=707321921&Site=有事Q我&Menu=yes">

<img style="border:0px;" src=http://wpa.qq.com/pa?p=1:707321921:1></a>

Some Problems

文强chu

problems

1. Exporting docs from Eclipse fails with "The Javadoc command does not exist." For the javadoc command, choose jdk/bin/javadoc.exe. 2. Configuring a web project in Tomcat .....

SQL: 3. In mysql, * must come first, otherwise select

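No Sense of Security in Life

小桔子

life, loneliness, sense of security

My circle is small; I have few friends around me, and even fewer I can confide in. In Shenzhen, apart from my boyfriend, there is hardly anyone I am close to. Without my noticing, my boyfriend has become my only support and, without exaggeration, my entire life outside work. Our relationship is good right now, and I am happy. But you never know, people's hearts can change; as long as nothing happens everyone is content, but if something does happen it is hard to deal with. What I mean is that if I were unlucky enough to be broken up with (whatever the reason), my life would inevitably change a great deal, and in Shenzhen I have no close friends.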

PHP Basic Syntax

aichenglong

php, basic syntax

1.1 PHP variables must begin with $

<?php

$a=" b";

echo

?>

1.2 PHP basic data types: Integer, float/double, Boolean, string

1.3 Compound data types: array and object

1.4 Special data types: null, resource $co

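mybatis tools: Configuration Explained in Detail

AILIKES

mybatis

MyBatis Generator Chinese Documentation

URL of the MyBatis Generator Chinese documentation:

http://generator.sturgeon.mopaas.com/

Because the Chinese documentation stays as close as possible to the original, some parts can still be hard to follow if you are unfamiliar with them, so this chapter draws on that documentation and on practical use to explain the configuration in detail in plain language.

This article was written in Markdown, but the way the blog displays it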

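A Discussion of Inheritance and Polymorphism

百合不是茶

JAVA, object-oriented, inheritance, objects

Inheritance, extends, polymorphism

Inheritance is one of the most frequently used features of object orientation: the inheritance syntax gives a class the fields and methods of its base class. // Inheritance means generating a new class from an existing class; the new class has everything the existing class has. extends is the keyword for inheritance:

Define a field and a method in class A;

class A{

// define a field

int age;

// define a method

public void go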

Examples of undefined and null in JS

bijian1013

JavaScript

<form name="theform" id="theform">

</form>

<script language="javascript">

var a

alert(typeof(b)); // this alerts undefined

if(theform.datas

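TDD in Practice (1)

bijian1013

java, agile, TDD

I. TDD overview

TDD: test-driven development. Its basic idea is to write the test code before writing the functional code. That is, once you know which feature you are going to develop, you first think about how to test it and write the test code, and then write the code that makes those test cases pass. You then repeat the cycle to add other features until all of them have been developed.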
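One cycle of that loop can be sketched with Python's built-in `unittest`. The feature here, a leap-year check, is invented for illustration: in TDD the `LeapYearTest` cases would be written first and would fail until `is_leap_year` is filled in. The helper `run_tests` is likewise an assumption, added so the suite can be run programmatically.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Functional code, written only after the tests below existed."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class LeapYearTest(unittest.TestCase):
    """Test code: in TDD this is written first and drives the design."""

    def test_divisible_by_four(self):
        self.assertTrue(is_leap_year(2024))

    def test_century_is_not_leap(self):
        self.assertFalse(is_leap_year(1900))

    def test_fourth_century_is_leap(self):
        self.assertTrue(is_leap_year(2000))


def run_tests() -> bool:
    """Run the suite and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LeapYearTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Adding the next feature would start the same way: a new failing test first, then just enough code to make it pass.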

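[Maven Study Notes 10] Maven Profiles and Resource File Filters

bit1129

maven

What is a Maven profile

A Maven profile is configuration customized for a particular build-and-package environment and purpose, so that the appropriate configuration can be selected in each environment. For example, the correct DB settings can be chosen dynamically depending on whether you are building and packaging for the development environment or for production.

How profiles are activated

1. A profile can be activated manually; for example, in IntelliJ IDEA's Maven Project view you can select a P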

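[Hive 8] Hive User-Defined Table-Generating Functions (UDTF)

bit1129

hive

1. What is a UDTF

UDTF stands for User Defined Table-Generating Functions. At first glance the name suggests a user-defined function that generates a table, but "generates a table" should not be read as creating an HQL table; it is better understood as producing a two-dimensional row set that resembles a relational table.

2. How to implement a UDTF

Extend org.apache.hadoop.hive.ql.udf.generic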

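Adding OAuth 2.0 Authentication to the tfs RESTful API

ronin47

I am currently thinking about how to make tfs's ngx-tfs API more secure. There are two options:

The first is to restrict access by client IP. This is fairly easy to implement.

The second is OAuth 2.0 authentication. This requires Lua and is somewhat harder to implement than the first.

The rest of this post focuses on how to implement the second approach.

Preface: We built an OAuth2 authentication and authorization layer using Nginx's Lua middleware. If you are planning to do the same, read the document below to automate it and reap the benefits. SeatGe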

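Configuring JDK Environment Variables

byalias

java, jdk

To do Java development you first need to install the JDK, and after installing it you also need to configure environment variables:

1. Download the JDK (http://java.sun.com/javase/downloads/index.jsp); the version I downloaded is jdk-7u79-windows-x64.exe

2. Install jdk-7u79-windows-x64.exe

3. Configure the environment variables: right-click "Computer" -->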

Code Complete: Table-Driven Methods (Table Driven Approach), Part 2

bylijinnan

java

package com.ljn.base;

import java.io.BufferedReader;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.Collections;

import java.uti

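SQL: Rounding a Number to 2 Decimal Places

chicony

rounding

1. The round() function performs rounding: the first argument is the value to round, and the second sets how many digits are kept after the decimal point.

2. The numeric type takes two parameters: the first is the total number of digits, and the second is the number of digits after the decimal point.

For example: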

select cast(round(12.5,2) as numeric(5,2))
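To see what "round, then fix the scale at two digits" produces, here is a sketch using Python's `decimal` module rather than a database. The function name `round_to_scale` is an assumption, and `ROUND_HALF_UP` is chosen to match the usual meaning of 四舍五入 (round half up); a given database's exact rounding rule may differ.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_to_scale(value: str, scale: int = 2) -> Decimal:
    """Round a decimal string half-up and keep exactly `scale` digits."""
    quantum = Decimal(10) ** -scale  # scale=2 gives Decimal("0.01")
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)
```

`round_to_scale("12.5")` yields `Decimal("12.50")`: the value is unchanged by rounding, but the fixed scale pads it to two decimal places, which is the effect the cast to `numeric(5,2)` is after.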

C++ Operator Overloading

CrazyMizzz

C++

I. Overloading the +, -, *, and / operators

Rational operator*(const Rational &x) const{

return Rational(x.a * this->a);

}

Only multiplication is shown here; addition, subtraction, and division are written in the same way
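For comparison, the same idea in Python is expressed through the `__mul__` special method. This is a sketch under assumptions, not a port of the post's class: the snippet only shows a single member `a`, so the numerator/denominator fields `num` and `den` and the reduction to lowest terms are invented here.

```python
from math import gcd


class Rational:
    """A hypothetical rational number kept in lowest terms."""

    def __init__(self, num: int, den: int = 1):
        if den == 0:
            raise ZeroDivisionError("denominator must not be zero")
        if den < 0:  # keep the sign on the numerator
            num, den = -num, -den
        common = gcd(num, den)
        self.num = num // common
        self.den = den // common

    def __mul__(self, other: "Rational") -> "Rational":
        """Overload `*`: multiply numerators and denominators."""
        if not isinstance(other, Rational):
            return NotImplemented
        return Rational(self.num * other.num, self.den * other.den)

    def __eq__(self, other: object) -> bool:
        if not isinstance(other, Rational):
            return NotImplemented
        return (self.num, self.den) == (other.num, other.den)

    def __repr__(self) -> str:
        return f"Rational({self.num}, {self.den})"
```

`Rational(1, 2) * Rational(2, 3)` evaluates to `Rational(1, 3)`. `__add__`, `__sub__`, and `__truediv__` would follow the same pattern.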

II. Overloading << for output and >> for input

Hive DDL Syntax Summary

daizj

hive, altering columns, DDL, altering tables

Hive DDL Syntax Summary

1. Renaming a table

hive> ALTER TABLE table_name RENAME TO new_table_name;

2. Changing a table's comment

hive> ALTER TABLE table_name SET TBLPROPERTIES ('comment' = new_comm

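jBox Usage Guide

dcj3sjt126com

Web

Reference URL: http://www.kudystudio.com/jbox/jbox-demo.html jBox v2.3 beta [

Click to download]

Technical discussion QQ groups: 172543951 100521167

[2011-11-11] jBox v2.3 final release

- [Adjusted & fixed] Under IE6, when there is an iframe or the page has active or applet controls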

UISegmentedControl Development Notes

dcj3sjt126com

// typedef NS_ENUM(NSInteger, UISegmentedControlStyle) {

// UISegmentedControlStylePlain, // large plain


Generating Table Mapping Files with Slick

ekian

scala

To use Slick for database access in Scala, add the slick-codegen package to the sbt file

"com.typesafe.slick" %% "slick-codegen" % slickVersion

Because I connect to a SQL Server database, the slick-extensions and jtds packages also need to be added

"com.typesa

ES-TEST

gengzg

test

package com.MarkNum;

import java.io.IOException;

import java.util.Date;

import java.util.HashMap;

import java.util.Map;

import javax.servlet.ServletException;

import javax.servlet.annotation

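Why Foreign Keys Are No Longer Recommended

hugh.wang

mysql, DB

A relationship between tables is a logical relationship and does not need a physical "hard link". The association you want is really just a connection that exists in the data, and that connection is inherent logic defined at design time.

When implementing this in business code, you only need to process the data according to that relationship logic from the design; no "hard link" at the database level is needed, because hard-linking with foreign keys at the database level costs a lot of extra resources for consistency and integrity checks, even though much of the time we do not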

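Domain-Driven Design

julyflame

VO, DAO, design patterns, DTO, po

Concepts:

VO (View Object): a view object used in the presentation layer; its role is to encapsulate all the data for a given page (or component).

DTO (Data Transfer Object): this concept comes from the J2EE design patterns. Its original purpose was to provide coarse-grained data entities for distributed EJB applications, reducing the number of distributed calls and thereby improving their performance and lowering network load. Here, however, I use it broadly to mean the data transfer obj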

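The Singleton Design Pattern

hm4123660

java, Singleton, singleton design pattern, lazy initialization, eager initialization

The singleton pattern is a commonly used software design pattern. Its core structure contains only one special class, called the singleton class. The pattern guarantees that a class has only one instance in the system and that the instance is easy to access from outside, which makes it easy to control the number of instances and saves system resources. If you want only one object of a given class to exist in the system, the singleton pattern is the usual solution.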
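A lazily initialized, thread-safe variant can be sketched in Python as follows. The class name `Settings`, its `values` attribute, and the `instance()` accessor are hypothetical; the post's own Java examples are not part of the snippet.

```python
import threading


class Settings:
    """A hypothetical singleton holding shared configuration values."""

    _instance = None
    _lock = threading.Lock()

    def __init__(self):
        self.values = {}

    @classmethod
    def instance(cls) -> "Settings":
        """Create the single instance on first use (lazy initialization)."""
        if cls._instance is None:          # fast path, no locking
            with cls._lock:
                if cls._instance is None:  # re-check inside the lock
                    cls._instance = cls()
        return cls._instance
```

Every call to `Settings.instance()` returns the same object. Note that this sketch does not stop callers from constructing `Settings()` directly; it relies on convention, whereas the Java versions typically use a private constructor.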


logback

zhb8015

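log, logback

I. Introduction to logback

Logback is another open-source logging component designed by the founder of log4j. logback currently consists of three modules: logback-core, logback-classic, and logback-access. logback-core is the foundation for the other two modules. logback-classic is an improved version of log4j. In addition, logback-class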

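Integrating Kafka into Spark Streaming: Code Examples and Challenges

Stark_Summer

spark, storm, zookeeper, PARALLELISM, processing

The author, Michael G. Noll, is an engineer and researcher in Switzerland who works at Verisign, where he is the technical lead for Verisign Labs' large-scale data analytics infrastructure (based on Hadoop). In this article, Michael demonstrates in detail how to integrate Kafka into Spark Streaming. Along the way he also covers the current state of that integration, which makes the article well worth reading, although some of the information in Spark 1.2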

spring-master-slave-commondao

王新春

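DAO, spring, dataSource, slave, master

Internet web projects share one characteristic: request concurrency is high, and database operations, the most time-consuming part of a request, are the top priority for system optimization.

For this reason, the database is often set up as one master with multiple slaves. The web application's DAO layer must ensure that writes go to the master and reads go to the slaves. In some requests, of course, to avoid inconsistent data caused by replication lag, some reads must also go to the master. (This kind of requirement is usually handled by splitting the business vertically; for example, on the machines where the order-placement code is deployed, reads should also go to the master to fetch da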
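The routing rule described above can be sketched independently of Spring. In this Python illustration the class name `RoutingDao`, the `pick` method, the `force_master` flag, and the round-robin choice among slaves are all assumptions; data sources are represented by plain strings.

```python
import itertools
from typing import Optional, Sequence


class RoutingDao:
    """Pick a data source: writes to the master, reads to the slaves."""

    def __init__(self, master: str, slaves: Optional[Sequence[str]] = None):
        self._master = master
        # Round-robin over the slaves; None when there are none configured.
        self._slaves = itertools.cycle(list(slaves)) if slaves else None

    def pick(self, is_write: bool, force_master: bool = False) -> str:
        """Return the data source name for one operation.

        `force_master` covers reads that cannot tolerate replication lag.
        """
        if is_write or force_master or self._slaves is None:
            return self._master
        return next(self._slaves)
```

With `RoutingDao("master", ["slave1", "slave2"])`, successive reads alternate between the two slaves, while writes and forced reads always return `"master"`.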