CCF大数据与计算智能大赛中个贷违约预测比赛的特征处理

比赛地址传送门:

CCF大数据与计算智能大赛

首先先读取数据

import matplotlib.pyplot as plt

import seaborn as sns

import gc

import re

import pandas as pd

import lightgbm as lgb

import numpy as np

from sklearn.metrics import roc_auc_score, precision_recall_curve, roc_curve, average_precision_score

from sklearn.model_selection import KFold

from lightgbm import LGBMClassifier

import matplotlib.pyplot as plt

import seaborn as sns

import gc

from sklearn.model_selection import StratifiedKFold

from dateutil.relativedelta import relativedelta

from sklearn.preprocessing import OneHotEncoder

train_data = pd.read_csv(r'data/data117603/train_public.csv')

test_public = pd.read_csv(r'data/data117603/test_public.csv')

df_features = train_data.append(test_public)

先试着不对数据进行处理直接投入模型的分数是多少

先对文本特征进行LabelEncoder

cat_cols = ['class', 'employer_type', 'industry', 'work_year', 'issue_date', 'earlies_credit_mon']

from sklearn.preprocessing import LabelEncoder

for feat in cat_cols:

lbl = LabelEncoder()

df_features[feat] = lbl.fit_transform(df_features[feat])

使用lgb分类模型进行预测

df_train = df_features[~df_features['isDefault'].isnull()]

df_train = df_train.reset_index(drop=True)

df_test = df_features[df_features['isDefault'].isnull()]

no_features = ['user_id', 'loan_id', 'isDefault']

# 输入特征列

features = [col for col in df_train.columns if col not in no_features]

X = df_train[features] # 训练集输入

y = df_train['isDefault'] # 训练集标签

X_test = df_test[features] # 测试集输入

folds = KFold(n_splits=5, shuffle=True, random_state=2019)

oof_preds = np.zeros(X.shape[0])

sub_preds = np.zeros(X_test.shape[0])

for n_fold, (trn_idx, val_idx) in enumerate(folds.split(X)):

trn_x, trn_y = X[features].iloc[trn_idx], y.iloc[trn_idx]

val_x, val_y = X[features].iloc[val_idx], y.iloc[val_idx]

clf = LGBMClassifier()

clf.fit(trn_x, trn_y,

eval_set= [(trn_x, trn_y), (val_x, val_y)],

eval_metric='auc', verbose=100, early_stopping_rounds=40 #30

)

oof_preds[val_idx] = clf.predict_proba(val_x, num_iteration=clf.best_iteration_)[:, 1]

sub_preds += clf.predict_proba(X_test[features], num_iteration=clf.best_iteration_)[:, 1] / folds.n_splits

print('Fold %2d AUC : %.6f' % (n_fold + 1, roc_auc_score(val_y, oof_preds[val_idx])))

del clf, trn_x, trn_y, val_x, val_y

print('Full AUC score %.6f' % roc_auc_score(y, oof_preds))

这里可以看到,当我仅仅对文本特征进行LabelEncoder的话AUC分数只有0.878533

接下来试着对数据进行一些处理

因为在特征’work_year’中存在着一些< 1已经10+的特征,所以我在这里先对这些不符合要求的特征进行处理

def workYearDIc(x):

if str(x)=='nan':

return -1

try:

x = x.replace('< 1','0').replace('10+ ','10')

except:

pass

return int(re.search('(\d+)', x).group())

df_features['work_year'] = df_features['work_year'].map(workYearDIc)

在下面我将根据特征本身进行有关于时间的特征进行处理

a = []

for i in range(15000):

try:

a.append(pd.to_datetime(df_features['earlies_credit_mon'].values[i]))

except:

try:

a.append(pd.to_datetime('9' + df_features['earlies_credit_mon'].values[i]))

except:

a.append(pd.to_datetime('20' + df_features['earlies_credit_mon'].values[i]))

df_features['earlies_credit_mon'] = a

df_features['earlies_credit_mon'] = pd.to_datetime(df_features['earlies_credit_mon'])

df_features['issue_date'] = pd.to_datetime(df_features['issue_date'])

df_features['issue_date_month'] = df_features['issue_date'].dt.month

df_features['issue_date_dayofweek'] = df_features['issue_date'].dt.dayofweek

df_features['earliesCreditMon'] = df_features['earlies_credit_mon'].dt.month

df_features['earliesCreditYear'] = df_features['earlies_credit_mon'].dt.year

对类别特征做标识处理

df_features['class'] = df_features['class'].map({'A': 0, 'B': 1, 'C': 2, 'D': 3, 'E': 4, 'F': 5, 'G': 6})

对两个特征进行LabelEncoder处理

cat_cols = ['employer_type', 'industry']

from sklearn.preprocessing import LabelEncoder

for col in cat_cols:

lbl = LabelEncoder()

df_features[col] = lbl.fit_transform(df_features[col])

然后删掉前面做过处理的两个特征,而特征’policy_code’由于它的值都是零,所以也删除掉

col_to_drop = ['issue_date', 'earlies_credit_mon', 'policy_code']

df_features = df_features.drop(col_to_drop, axis=1)

再对一些有缺失值的特征进行填补**-1**

df_features['pub_dero_bankrup'].fillna(-1, inplace=True)

df_features['f0'].fillna(-1, inplace=True)

df_features['f1'].fillna(-1, inplace=True)

df_features['f2'].fillna(-1, inplace=True)

df_features['f3'].fillna(-1, inplace=True)

df_features['f4'].fillna(-1, inplace=True)

然后再划分训练和测试集

df_train = df_features[~df_features['isDefault'].isnull()]

df_train = df_train.reset_index(drop=True)

df_test = df_features[df_features['isDefault'].isnull()]

no_features = ['user_id', 'loan_id', 'isDefault']

# 输入特征列

features = [col for col in df_train.columns if col not in no_features]

X = df_train[features] # 训练集输入

y = df_train['isDefault'] # 训练集标签

X_test = df_test[features] # 测试集输入

之后对训练集和测试集进行标准化处理,在这里我尝试过最值归一化处理与均值方差归一化处理,发现均值方差归一化处理效果更好所以我采用了均值方差归一化处理

class StandardScaler:

def __init__(self):

self.mean_ = None

self.scale_ = None

def fit(self,X):

'''根据训练数据集X获得数据的均值和方差'''

self.mean_ = np.array([np.mean(X[:,i]) for i in range(X.shape[1])])

self.scale_ = np.array([np.std(X[:,i]) for i in range(X.shape[1])])

return self

def transform(self,X):

'''将X根据Standardcaler进行均值方差归一化处理'''

resX = np.empty(shape=X.shape,dtype=float)

for col in range(X.shape[1]):

resX[:,col] = (X[:,col]-self.mean_[col]) / (self.scale_[col])

return resX

X_col = X.columns

X_test_col = X_test.columns

StandardScaler = StandardScaler()

StandardScaler.fit(X.values)

X = StandardScaler.transform(X.values)

X_test = StandardScaler.transform(X_test.values)

X = pd.DataFrame(X, columns=X_col)

X_test = pd.DataFrame(X_test, columns=X_test_col)

由于这个训练集存在着样本不均衡的问题,在这里我是用SMOTE函数进行过采样处理以解决不平衡问题,在这里可能使用下采样或者其他处理样本不均衡的方法会更好,但是我没有尝试其它的,大家可以去试试其它的

from imblearn.over_sampling import SMOTE

X, y = SMOTE(random_state=42).fit_resample(X, y)

接下来投入模型训练样本看看效果

folds = KFold(n_splits=5, shuffle=True, random_state=2019)

oof_preds = np.zeros(X.shape[0])

sub_preds = np.zeros(X_test.shape[0])

for n_fold, (trn_idx, val_idx) in enumerate(folds.split(X)):

trn_x, trn_y = X[features].iloc[trn_idx], y.iloc[trn_idx]

val_x, val_y = X[features].iloc[val_idx], y.iloc[val_idx]

clf = LGBMClassifier()

clf.fit(trn_x, trn_y,

eval_set= [(trn_x, trn_y), (val_x, val_y)],

eval_metric='auc', verbose=100, early_stopping_rounds=40 #30

)

oof_preds[val_idx] = clf.predict_proba(val_x, num_iteration=clf.best_iteration_)[:, 1]

sub_preds += clf.predict_proba(X_test[features], num_iteration=clf.best_iteration_)[:, 1] / folds.n_splits

print('Fold %2d AUC : %.6f' % (n_fold + 1, roc_auc_score(val_y, oof_preds[val_idx])))

del clf, trn_x, trn_y, val_x, val_y

print('Full AUC score %.6f' % roc_auc_score(y, oof_preds))

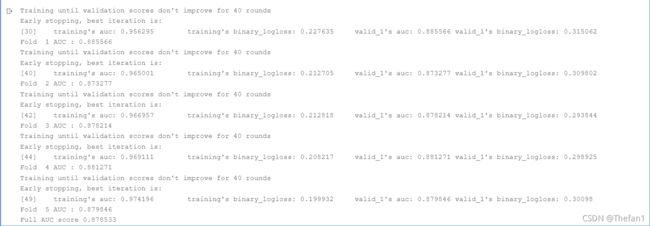

在最后的结果中可以看出做了特征处理后的AUC分数提升了挺大的

然后我们再看看特征之间的相关性来根据这些相关性来构造一些新特征

对特征之间进行相关性计算并且用热力图展示出来

from pylab import mpl

mpl.rcParams['font.sans-serif'] = ['FangSong'] # 指定默认字体

mpl.rcParams['axes.unicode_minus'] = False # 解决保存图像是负

plt.figure(figsize=(30, 30))

ax = sns.heatmap(df_features.corr(),linewidths=5,vmax=1.0, square=True,linecolor='white', annot=True)

ax.tick_params(labelsize=10)

plt.show()

看这个热力图是我们不难找到一些特征之间的相关性较大

我通过这些特征之间相关性大的特征进行了融合,我尝试过很多次,发现了以下几个是对分数提升最大的

但是我之前是一个一个试的,这几个新特征对AUC提升都比较明显,但是当我把这几个新特征一起加入到模型中训练的时候反而发现对AUC提升不是很大,不过我还是都列出来,并且一起加进去训练

df_features['new_1'] = df_features['total_loan'] / df_features['monthly_payment']

df_features['new_3'] = df_features['known_dero'] - df_features['pub_dero_bankrup']

df_features['new_6'] = df_features['known_outstanding_loan'] * df_features['pub_dero_bankrup']

df_features['new_11'] = df_features['f0'] / df_features['f3']

df_features['new_12'] = df_features['f0'] + df_features['f4']

df_features['new_18'] = df_features['f3'] * df_features['f4']

最后我们再对数据进行伪标签处理来进一步提升我们的AUC

在这里有关于伪标签的介绍

在这里我将预测值小于0.05的数据全部将标签设置为0作为伪标签加入到训练集中,因为预测结果小于等于0.05的一般可以看做结果就是0

test_public['isDefault'] = sub_preds

test_public.loc[test_public['isDefault']<0.05,'isDefault'] = 0

InteId = test_public.loc[test_public.isDefault<0.05, 'loan_id'].tolist()

use_te = test_public[test_public.loan_id.isin( InteId )].copy()

然后重新读取训练集和预测集的数据,之后将这两个数据与之前我们要作为伪标签的数据一起合并成为一个新数据

test = pd.read_csv(r'data/data117603/test_public.csv')

train = pd.read_csv(r'data/data117603/train_public.csv')

df_features = pd.concat([train,test,use_te]).reset_index(drop=True)

之后的特征处理和上文一模一样然后再投入到模型进行训练

训练出来的结果如下:

从结果上可以看出提升还是挺大的,

在这里其实可以进行多次迭代来加入更多的伪标签,但是这里我就不弄这么多次了

而且在特征处理上依托于业务背景来做是效果会更好,不过因为我不了解这个业务的背景所以并没有做到依托于业务背景做数据处理