k8s笔记

目录

k8s介绍

一、kubernetes 部署

二、harbor仓库搭建

三、k8s流量入口Ingress

3.1 helm3安装 ingress-nginx

四、k8sHPA 自动水平伸缩pod

五、k8s存储

5.1 k8s持久化存储02pv pvc

5.2 StorageClass

5.ubuntu20.04系统

六、k8s架构师课程之有状态服务StatefulSet

七、k8s一次性和定时任务

八、k8sRBAC角色访问控制

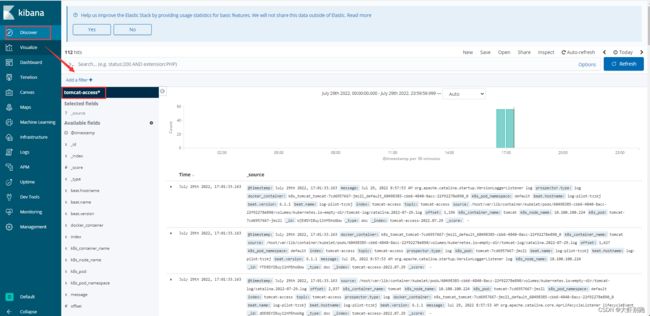

九、k8s业务日志收集上节介绍、下节实战

十、k8s的Prometheus监控实战

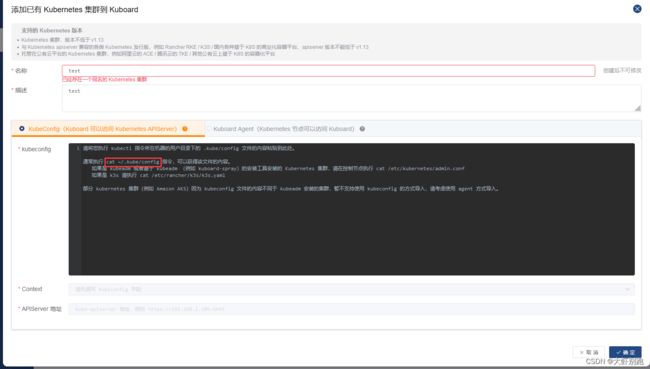

十一、k8s安装kuboard图形化界面

11.离线安装下载:

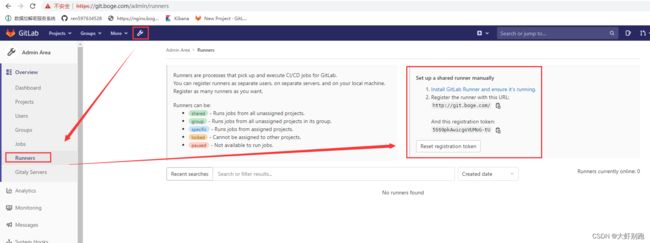

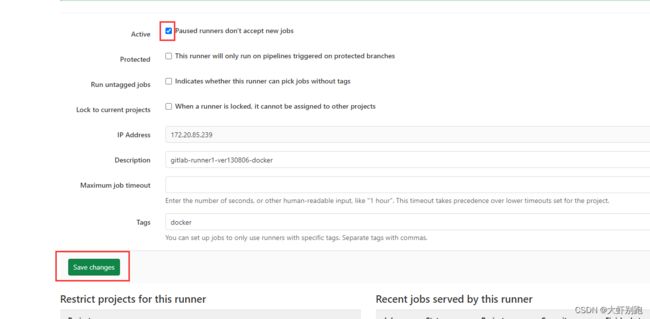

十二、 k8s架构师课程基于gitlab的CICD自动化

十三、k8s安装kubesphere3.3

目录

k8s介绍

一、kubernetes 部署

二、harbor仓库搭建

三、k8s流量入口Ingress

3.1 helm3安装 ingress-nginx

四、k8sHPA 自动水平伸缩pod

五、k8s存储

5.1 k8s持久化存储02pv pvc

5.2 StorageClass

5.ubuntu20.04系统

六、k8s架构师课程之有状态服务StatefulSet

七、k8s一次性和定时任务

八、k8sRBAC角色访问控制

九、k8s业务日志收集上节介绍、下节实战

十、k8s的Prometheus监控实战

十一、k8s安装kuboard图形化界面

11.离线安装下载:

十二、 k8s架构师课程基于gitlab的CICD自动化

十三、k8s安装kubesphere3.3

k8s介绍

传统部署时代:

早期,各个组织是在物理服务器上运行应用程序。 由于无法限制在物理服务器中运行的应用程序资源使用,因此会导致资源分配问题。 例如,如果在同一台物理服务器上运行多个应用程序, 则可能会出现一个应用程序占用大部分资源的情况,而导致其他应用程序的性能下降。 一种解决方案是将每个应用程序都运行在不同的物理服务器上, 但是当某个应用程式资源利用率不高时,剩余资源无法被分配给其他应用程式, 而且维护许多物理服务器的成本很高。

虚拟化部署时代:

因此,虚拟化技术被引入了。虚拟化技术允许你在单个物理服务器的 CPU 上运行多台虚拟机(VM)。 虚拟化能使应用程序在不同 VM 之间被彼此隔离,且能提供一定程度的安全性, 因为一个应用程序的信息不能被另一应用程序随意访问。

虚拟化技术能够更好地利用物理服务器的资源,并且因为可轻松地添加或更新应用程序, 而因此可以具有更高的可扩缩性,以及降低硬件成本等等的好处。 通过虚拟化,你可以将一组物理资源呈现为可丢弃的虚拟机集群。

每个 VM 是一台完整的计算机,在虚拟化硬件之上运行所有组件,包括其自己的操作系统。

容器部署时代:

容器类似于 VM,但是更宽松的隔离特性,使容器之间可以共享操作系统(OS)。 因此,容器比起 VM 被认为是更轻量级的。且与 VM 类似,每个容器都具有自己的文件系统、CPU、内存、进程空间等。 由于它们与基础架构分离,因此可以跨云和 OS 发行版本进行移植。

容器因具有许多优势而变得流行起来,例如:

-

敏捷应用程序的创建和部署:与使用 VM 镜像相比,提高了容器镜像创建的简便性和效率。

-

持续开发、集成和部署:通过快速简单的回滚(由于镜像不可变性), 提供可靠且频繁的容器镜像构建和部署。

-

关注开发与运维的分离:在构建、发布时创建应用程序容器镜像,而不是在部署时, 从而将应用程序与基础架构分离。

-

可观察性:不仅可以显示 OS 级别的信息和指标,还可以显示应用程序的运行状况和其他指标信号。

-

跨开发、测试和生产的环境一致性:在笔记本计算机上也可以和在云中运行一样的应用程序。

-

跨云和操作系统发行版本的可移植性:可在 Ubuntu、RHEL、CoreOS、本地、 Google Kubernetes Engine 和其他任何地方运行。

-

以应用程序为中心的管理:提高抽象级别,从在虚拟硬件上运行 OS 到使用逻辑资源在 OS 上运行应用程序。

-

松散耦合、分布式、弹性、解放的微服务:应用程序被分解成较小的独立部分, 并且可以动态部署和管理 - 而不是在一台大型单机上整体运行。

-

资源隔离:可预测的应用程序性能。

-

资源利用:高效率和高密度。

为什么需要 Kubernetes,它能做什么?

容器是打包和运行应用程序的好方式。在生产环境中, 你需要管理运行着应用程序的容器,并确保服务不会下线。 例如,如果一个容器发生故障,则你需要启动另一个容器。 如果此行为交由给系统处理,是不是会更容易一些?

这就是 Kubernetes 要来做的事情! Kubernetes 为你提供了一个可弹性运行分布式系统的框架。 Kubernetes 会满足你的扩展要求、故障转移你的应用、提供部署模式等。 例如,Kubernetes 可以轻松管理系统的 Canary 部署。

Kubernetes 为你提供:

-

服务发现和负载均衡

Kubernetes 可以使用 DNS 名称或自己的 IP 地址来曝露容器。 如果进入容器的流量很大, Kubernetes 可以负载均衡并分配网络流量,从而使部署稳定。

-

存储编排

Kubernetes 允许你自动挂载你选择的存储系统,例如本地存储、公共云提供商等。

-

自动部署和回滚

你可以使用 Kubernetes 描述已部署容器的所需状态, 它可以以受控的速率将实际状态更改为期望状态。 例如,你可以自动化 Kubernetes 来为你的部署创建新容器, 删除现有容器并将它们的所有资源用于新容器。

-

自动完成装箱计算

你为 Kubernetes 提供许多节点组成的集群,在这个集群上运行容器化的任务。 你告诉 Kubernetes 每个容器需要多少 CPU 和内存 (RAM)。 Kubernetes 可以将这些容器按实际情况调度到你的节点上,以最佳方式利用你的资源。

-

自我修复

Kubernetes 将重新启动失败的容器、替换容器、杀死不响应用户定义的运行状况检查的容器, 并且在准备好服务之前不将其通告给客户端。

-

密钥与配置管理

Kubernetes 允许你存储和管理敏感信息,例如密码、OAuth 令牌和 ssh 密钥。 你可以在不重建容器镜像的情况下部署和更新密钥和应用程序配置,也无需在堆栈配置中暴露密钥。

Kubernetes 不是什么

Kubernetes 不是传统的、包罗万象的 PaaS(平台即服务)系统。 由于 Kubernetes 是在容器级别运行,而非在硬件级别,它提供了 PaaS 产品共有的一些普遍适用的功能, 例如部署、扩展、负载均衡,允许用户集成他们的日志记录、监控和警报方案。 但是,Kubernetes 不是单体式(monolithic)系统,那些默认解决方案都是可选、可插拔的。 Kubernetes 为构建开发人员平台提供了基础,但是在重要的地方保留了用户选择权,能有更高的灵活性。

Kubernetes:

-

不限制支持的应用程序类型。 Kubernetes 旨在支持极其多种多样的工作负载,包括无状态、有状态和数据处理工作负载。 如果应用程序可以在容器中运行,那么它应该可以在 Kubernetes 上很好地运行。

-

不部署源代码,也不构建你的应用程序。 持续集成(CI)、交付和部署(CI/CD)工作流取决于组织的文化和偏好以及技术要求。

-

不提供应用程序级别的服务作为内置服务,例如中间件(例如消息中间件)、 数据处理框架(例如 Spark)、数据库(例如 MySQL)、缓存、集群存储系统 (例如 Ceph)。这样的组件可以在 Kubernetes 上运行,并且/或者可以由运行在 Kubernetes 上的应用程序通过可移植机制 (例如开放服务代理)来访问。

-

不是日志记录、监视或警报的解决方案。 它集成了一些功能作为概念证明,并提供了收集和导出指标的机制。

-

不提供也不要求配置用的语言、系统(例如 jsonnet),它提供了声明性 API, 该声明性 API 可以由任意形式的声明性规范所构成。

-

不提供也不采用任何全面的机器配置、维护、管理或自我修复系统。

-

此外,Kubernetes 不仅仅是一个编排系统,实际上它消除了编排的需要。 编排的技术定义是执行已定义的工作流程:首先执行 A,然后执行 B,再执行 C。 而 Kubernetes 包含了一组独立可组合的控制过程,可以连续地将当前状态驱动到所提供的预期状态。 你不需要在乎如何从 A 移动到 C,也不需要集中控制,这使得系统更易于使用 且功能更强大、系统更健壮,更为弹性和可扩展

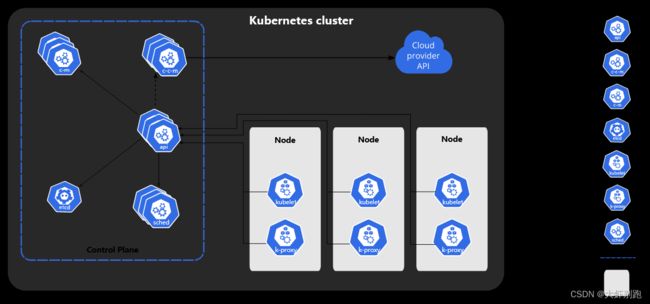

k8s架构图

一、kubernetes 部署

1.环境规划

系统版本 CentOS 7.9.2009

服务器配置2核4g

k8s版本1.20.2

docker版本docker-ce-19.03.14 docker-ce-cli-19.03.14

IP规划-3主2子节点

20.6.100.220 k8s-m01

20.6.100.221 k8s-m02

20.6.100.222 k8s-m03

20.6.100.223 k8s-node01

20.6.100.224 k8s-node02

20.6.100.225 k8s-ck

2.系统配置yum

#添加访问互联路由

cat > /etc/resolv.conf <3.二进制安装包下载

链接:https://pan.baidu.com/s/1OBT9pxcZiuHx0hLxS2Fd1g?pwd=fhbg

提取码:fhbg

4.安装脚本来着-博哥爱运维

cat k8s_install_new .sh

#!/bin/bash

# auther: boge

# descriptions: the shell scripts will use ansible to deploy K8S at binary for siample

# 传参检测

[ $# -ne 6 ] && echo -e "Usage: $0 rootpasswd netnum nethosts cri cni k8s-cluster-name\nExample: bash $0 newpasswd 20.6.100 220\ 221\ 222\ 223\ 224 [containerd|docker] [calico|flannel] test\n" && exit 11

# 变量定义

export release=3.0.0

export k8s_ver=v1.20.2 # v1.20.2, v1.19.7, v1.18.15, v1.17.17

rootpasswd=$1

netnum=$2

nethosts=$3

cri=$4

cni=$5

clustername=$6

if ls -1v ./kubeasz*.tar.gz &>/dev/null;then software_packet="$(ls -1v ./kubeasz*.tar.gz )";else software_packet="";fi

pwd="/etc/kubeasz"

# deploy机器升级软件库

if cat /etc/redhat-release &>/dev/null;then

yum update -y

else

apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y

[ $? -ne 0 ] && apt-get -yf install

fi

# deploy机器检测python环境

python2 -V &>/dev/null

if [ $? -ne 0 ];then

if cat /etc/redhat-release &>/dev/null;then

yum install gcc openssl-devel bzip2-devel wget -y

wget https://www.python.org/ftp/python/2.7.16/Python-2.7.16.tgz

tar xzf Python-2.7.16.tgz

cd Python-2.7.16

./configure --enable-optimizations

make altinstall

ln -s /usr/bin/python2.7 /usr/bin/python

cd -

else

apt-get install -y python2.7 && ln -s /usr/bin/python2.7 /usr/bin/python

fi

fi

# deploy机器设置pip安装加速源

if [[ $clustername != 'aws' ]]; then

mkdir ~/.pip

cat > ~/.pip/pip.conf </dev/null;then

yum install git python-pip sshpass wget -y

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

else

apt-get install git python-pip sshpass -y

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

fi

python -m pip install --upgrade "pip < 21.0"

pip -V

pip install --no-cache-dir ansible netaddr

# 在deploy机器做其他node的ssh免密操作

for host in `echo "${nethosts}"`

do

echo "============ ${netnum}.${host} ===========";

if [[ ${USER} == 'root' ]];then

[ ! -f /${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /${USER}/.ssh/id_rsa

else

[ ! -f /home/${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /home/${USER}/.ssh/id_rsa

fi

sshpass -p ${rootpasswd} ssh-copy-id -o StrictHostKeyChecking=no ${USER}@${netnum}.${host}

if cat /etc/redhat-release &>/dev/null;then

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "yum update -y"

else

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y"

[ $? -ne 0 ] && ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get -yf install"

fi

done

# deploy机器下载k8s二进制安装脚本

if [[ ${software_packet} == '' ]];then

curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

sed -ri "s+^(K8S_BIN_VER=).*$+\1${k8s_ver}+g" ezdown

chmod +x ./ezdown

# 使用工具脚本下载

./ezdown -D && ./ezdown -P

else

tar xvf ${software_packet} -C /etc/

chmod +x ${pwd}/{ezctl,ezdown}

fi

# 初始化一个名为my的k8s集群配置

CLUSTER_NAME="$clustername"

${pwd}/ezctl new ${CLUSTER_NAME}

if [[ $? -ne 0 ]];then

echo "cluster name [${CLUSTER_NAME}] was exist in ${pwd}/clusters/${CLUSTER_NAME}."

exit 1

fi

if [[ ${software_packet} != '' ]];then

# 设置参数,启用离线安装

sed -i 's/^INSTALL_SOURCE.*$/INSTALL_SOURCE: "offline"/g' ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# to check ansible service

ansible all -m ping

#---------------------------------------------------------------------------------------------------

#修改二进制安装脚本配置 config.yml

sed -ri "s+^(CLUSTER_NAME:).*$+\1 \"${CLUSTER_NAME}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

## k8s上日志及容器数据存独立磁盘步骤(参考阿里云的)

[ ! -d /var/lib/container ] && mkdir -p /var/lib/container/{kubelet,docker}

## cat /etc/fstab

# UUID=105fa8ff-bacd-491f-a6d0-f99865afc3d6 / ext4 defaults 1 1

# /dev/vdb /var/lib/container/ ext4 defaults 0 0

# /var/lib/container/kubelet /var/lib/kubelet none defaults,bind 0 0

# /var/lib/container/docker /var/lib/docker none defaults,bind 0 0

## tree -L 1 /var/lib/container

# /var/lib/container

# ├── docker

# ├── kubelet

# └── lost+found

# docker data dir

DOCKER_STORAGE_DIR="/var/lib/container/docker"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${DOCKER_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# containerd data dir

CONTAINERD_STORAGE_DIR="/var/lib/container/containerd"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${CONTAINERD_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# kubelet logs dir

KUBELET_ROOT_DIR="/var/lib/container/kubelet"

sed -ri "s+^(KUBELET_ROOT_DIR:).*$+KUBELET_ROOT_DIR: \"${KUBELET_ROOT_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

if [[ $clustername != 'aws' ]]; then

# docker aliyun repo

REG_MIRRORS="https://pqbap4ya.mirror.aliyuncs.com"

sed -ri "s+^REG_MIRRORS:.*$+REG_MIRRORS: \'[\"${REG_MIRRORS}\"]\'+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# [docker]信任的HTTP仓库

sed -ri "s+127.0.0.1/8+${netnum}.0/24+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# disable dashboard auto install

sed -ri "s+^(dashboard_install:).*$+\1 \"no\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# 融合配置准备

CLUSEER_WEBSITE="${CLUSTER_NAME}k8s.gtapp.xyz"

lb_num=$(grep -wn '^MASTER_CERT_HOSTS:' ${pwd}/clusters/${CLUSTER_NAME}/config.yml |awk -F: '{print $1}')

lb_num1=$(expr ${lb_num} + 1)

lb_num2=$(expr ${lb_num} + 2)

sed -ri "${lb_num1}s+.*$+ - "${CLUSEER_WEBSITE}"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

sed -ri "${lb_num2}s+(.*)$+#\1+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# node节点最大pod 数

MAX_PODS="120"

sed -ri "s+^(MAX_PODS:).*$+\1 ${MAX_PODS}+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# 修改二进制安装脚本配置 hosts

# clean old ip

sed -ri '/192.168.1.1/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.2/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.3/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.4/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

# 输入准备创建ETCD集群的主机位

echo "enter etcd hosts here (example: 222 221 220) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[etcd/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# 输入准备创建KUBE-MASTER集群的主机位

echo "enter kube-master hosts here (example: 222 221 220) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_master/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# 输入准备创建KUBE-NODE集群的主机位

echo "enter kube-node hosts here (example: 224 223) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_node/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# 配置容器运行时CNI

case ${cni} in

flannel)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

calico)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

*)

echo "cni need be flannel or calico."

exit 11

esac

# 配置K8S的ETCD数据备份的定时任务

if cat /etc/redhat-release &>/dev/null;then

if ! grep -w '94.backup.yml' /var/spool/cron/root &>/dev/null;then echo "00 00 * * * `which ansible-playbook` ${pwd}/playbooks/94.backup.yml &> /dev/null" >> /var/spool/cron/root;else echo exists ;fi

chown root.crontab /var/spool/cron/root

chmod 600 /var/spool/cron/root

else

if ! grep -w '94.backup.yml' /var/spool/cron/crontabs/root &>/dev/null;then echo "00 00 * * * `which ansible-playbook` ${pwd}/playbooks/94.backup.yml &> /dev/null" >> /var/spool/cron/crontabs/root;else echo exists ;fi

chown root.crontab /var/spool/cron/crontabs/root

chmod 600 /var/spool/cron/crontabs/root

fi

rm /var/run/cron.reboot

service crond restart

#---------------------------------------------------------------------------------------------------

# 准备开始安装了

rm -rf ${pwd}/{dockerfiles,docs,.gitignore,pics,dockerfiles} &&\

find ${pwd}/ -name '*.md'|xargs rm -f

read -p "Enter to continue deploy k8s to all nodes >>>" YesNobbb

# now start deploy k8s cluster

cd ${pwd}/

# to prepare CA/certs & kubeconfig & other system settings

${pwd}/ezctl setup ${CLUSTER_NAME} 01

sleep 1

# to setup the etcd cluster

${pwd}/ezctl setup ${CLUSTER_NAME} 02

sleep 1

# to setup the container runtime(docker or containerd)

case ${cri} in

containerd)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

docker)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

*)

echo "cri need be containerd or docker."

exit 11

esac

sleep 1

# to setup the master nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 04

sleep 1

# to setup the worker nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 05

sleep 1

# to setup the network plugin(flannel、calico...)

${pwd}/ezctl setup ${CLUSTER_NAME} 06

sleep 1

# to setup other useful plugins(metrics-server、coredns...)

${pwd}/ezctl setup ${CLUSTER_NAME} 07

sleep 1

# [可选]对集群所有节点进行操作系统层面的安全加固 https://github.com/dev-sec/ansible-os-hardening

#ansible-playbook roles/os-harden/os-harden.yml

#sleep 1

cd `dirname ${software_packet:-/tmp}`

k8s_bin_path='/opt/kube/bin'

echo "------------------------- k8s version list ---------------------------"

${k8s_bin_path}/kubectl version

echo

echo "------------------------- All Healthy status check -------------------"

${k8s_bin_path}/kubectl get componentstatus

echo

echo "------------------------- k8s cluster info list ----------------------"

${k8s_bin_path}/kubectl cluster-info

echo

echo "------------------------- k8s all nodes list -------------------------"

${k8s_bin_path}/kubectl get node -o wide

echo

echo "------------------------- k8s all-namespaces's pods list ------------"

${k8s_bin_path}/kubectl get pod --all-namespaces

echo

echo "------------------------- k8s all-namespaces's service network ------"

${k8s_bin_path}/kubectl get svc --all-namespaces

echo

echo "------------------------- k8s welcome for you -----------------------"

echo

# you can use k alias kubectl to siample

echo "alias k=kubectl && complete -F __start_kubectl k" >> ~/.bashrc

# get dashboard url

${k8s_bin_path}/kubectl cluster-info|grep dashboard|awk '{print $NF}'|tee -a /root/k8s_results

# get login token

${k8s_bin_path}/kubectl -n kube-system describe secret $(${k8s_bin_path}/kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')|grep 'token:'|awk '{print $NF}'|tee -a /root/k8s_results

echo

echo "you can look again dashboard and token info at >>> /root/k8s_results <<<"

#echo ">>>>>>>>>>>>>>>>> You can excute command [ source ~/.bashrc ] <<<<<<<<<<<<<<<<<<<<"

echo ">>>>>>>>>>>>>>>>> You need to excute command [ reboot ] to restart all nodes <<<<<<<<<<<<<<<<<<<<"

rm -f $0

[ -f ${software_packet} ] && rm -f ${software_packet}

#rm -f ${pwd}/roles/deploy/templates/${USER_NAME}-csr.json.j2

#sed -ri "s+${USER_NAME}+admin+g" ${pwd}/roles/prepare/tasks/main.yml

5.运行脚本 并加入参数, newpasswd是服务器密码

bash k8s_install_new .sh newpasswd 20.6.100 220\ 221\ 222\ 223\ 224 docker calico test

#脚本运行中途需要输入如下参数

二、harbor仓库搭建

1.安装

#目录/root上传文件docker-compose和harbor-offline-installer-v1.2.0.tgz

mv /root/docker-compose /usr/local/bin/

chmod a+x /usr/local/bin/docker-compose

ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

tar -zxvf harbor-offline-installer-v2.4.1.tgz

mv harbor /usr/local/

cd /usr/local/harbor/

cp harbor.yml.tmpl harbor.yml

sed -i 's/hostname: reg.mydomain.com/hostname: 20.6.100.225/g' harbor.yml

sed -i 's/https/#https/g' harbor.yml

sed -i 's/certificate/#certificate/g' harbor.yml

sed -i 's/private_key/#private_key/g' harbor.yml

cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://nr240upq.mirror.aliyuncs.com", "https://registry.docker-cn.com", "https://docker.mirrors.ustc.edu.cn", "https://dockerhub.azk8s.cn", "http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"insecure-registries": ["20.6.100.225:80"]

}

systemctl daemon-reload && systemctl restart docker

#安装

./install.sh

## 重启harbor

cd /usr/local/harbor/

docker-compose down -v

docker-compose up -d

docker ps|grep harbor

2.需要访问仓库的其他节点的 daemon.json添加如下内容

##-------------------

vim /etc/docker/daemon.json

"registry-mirrors": ["https://nr240upq.mirror.aliyuncs.com", "https://registry.docker-cn.com", "https://docker.mirrors.ustc.edu.cn", "https://dockerhub.azk8s.cn"],

"insecure-registries": ["20.6.100.225:80"],

##-------------------

systemctl daemon-reload

systemctl restart docker

3.节点使用仓库

#登入仓库网站

docker login -u admin -p Harbor12345 20.6.100.225:80

#下载镜像

docker pull daocloud.io/library/nginx:1.9.1

#给镜像打上标签

docker tag daocloud.io/library/nginx:1.9.1 20.6.100.225:5000/library/nginx:1.9.1

#镜像上传

docker push 20.6.100.225:80/library/nginx:1.9.1

#删除镜像

docker rmi 20.6.100.225:80/library/nginx:1.9.1

#打包

docker save daocloud.io/library/nginx:1.9.1 > /root/nginx-1.9.1.tar

#加载包

docker load -i /root/nginx-1.9.1.tar三、k8s流量入口Ingress

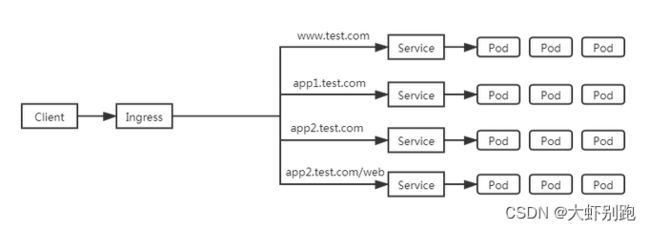

1.逻辑图

Ingress是允许入站连接到达集群服务的一组规则。即介于物理网络和群集svc之间的一组转发规则。

其实就是实现L4 L7的负载均衡:

注意:这里的Ingress并非将外部流量通过Service来转发到服务pod上,而只是通过Service来找到对应的Endpoint来发现pod进行转发

internet

|

[ Ingress ] ---> [ Services ] ---> [ Endpoint ]

--|-----|-- |

[ Pod,pod,...... ]<-------------------------|

aliyun-ingress-controller有一个很重要的修改,就是它支持路由配置的动态更新,

大家用过Nginx的可以知道,在修改完Nginx的配置,我们是需要进行nginx -s reload来重加载配置才能生效的,

在K8s上,这个行为也是一样的,但由于K8s运行的服务会非常多,所以它的配置更新是非常频繁的,

因此,如果不支持配置动态更新,对于在高频率变化的场景下,Nginx频繁Reload会带来较明显的请求访问问题:1.造成一定的QPS抖动和访问失败情况

2.对于长连接服务会被频繁断掉

3.造成大量的处于shutting down的Nginx Worker进程,进而引起内存膨胀

详细原理分析见这篇文章: https://developer.aliyun.com/article/692732

2.准备来部署aliyun-ingress-controller,下面直接是生产中在用的yaml配置,我们保存了aliyun-ingress-nginx.yaml准备开始部署:

cat > /data/k8s/aliyun-ingress-nginx.yaml <3.#开始部署

kubectl apply -f aliyun-ingress-nginx.yaml

4.我们查看下pod,会发现空空如也,为什么会这样呢?

kubectl -n ingress-nginx get pod

注意上面的yaml配置里面,我使用了节点选择配置,只有打了我指定lable标签的node节点,也会被允许调度pod上去运行

nodeSelector:

boge/ingress-controller-ready: “true”

5.给mast 打标签,想运行什么节点上就给什么节点打标签

kubectl label node 20.6.100.220 boge/ingress-controller-ready=true

kubectl label node 20.6.100.221 boge/ingress-controller-ready=true

kubectl label node 20.6.100.222 boge/ingress-controller-ready=true

6.接着可以看到pod就被调试到这两台node上启动了

kubectl -n ingress-nginx get pod -o wide

7 子节点修改haproxy.cfg

vi /etc/haproxy/haproxy.cfg

listen ingress-http

bind 0.0.0.0:80

mode tcp

option tcplog

option dontlognull

option dontlog-normal

balance roundrobin

server 20.6.100.220 20.6.100.220:80 check inter 2000 fall 2 rise 2 weight 1

server 20.6.100.221 20.6.100.221:80 check inter 2000 fall 2 rise 2 weight 1

server 20.6.100.222 20.6.100.222:80 check inter 2000 fall 2 rise 2 weight 1

listen ingress-https

bind 0.0.0.0:443

mode tcp

option tcplog

option dontlognull

option dontlog-normal

balance roundrobin

server 20.6.100.220 20.6.100.220:443 check inter 2000 fall 2 rise 2 weight 1

server 20.6.100.221 20.6.100.221:443 check inter 2000 fall 2 rise 2 weight 1

server 20.6.100.222 20.6.100.222:443 check inter 2000 fall 2 rise 2 weight 1

8.子节点安装keepalived

yum install -y keepalived

9.编辑配置修改为如下:

这里是node 20.6.100.223

cat > /etc/keepalived/keepalived.conf <10.这里是node 20.6.100.224

cat > /etc/keepalived/keepalived.conf <11.启动服务器

# 重启服务

systemctl restart haproxy.service

systemctl restart keepalived.service

# 查看运行状态

systemctl status haproxy.service

systemctl status keepalived.service

# 添加开机自启动(haproxy默认安装好就添加了自启动)

systemctl enable keepalived.service

# 查看是否添加成功

systemctl is-enabled keepalived.service

#enabled就代表添加成功了

# 同时我可查看下VIP是否已经生成

ip a|grep 226

12.然后准备nginx的ingress配置,保留为nginx-ingress.yaml,并执行它

apiVersion: v1

kind: Service

metadata:

namespace: test

name: nginx

labels:

app: nginx

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: nginx

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: test

name: nginx

labels:

app: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx

ports:

- containerPort: 80

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

namespace: test

name: nginx-ingress

spec:

rules:

- host: nginx.boge.com

http:

paths:

- backend:

serviceName: nginx

servicePort: 80

path: /

13.运行

kubectl apply -f nginx-ingress.yaml

#查看创建的ingress资源

# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress nginx.boge.com 80 13s

# 我们在其它节点上,加下本地hosts,来测试下效果

20.6.1.226 nginx.boge.com

#测试

curl nginx.boge.com

14.生产环境正常情况下大部分是一个Ingress对应一个Service服务,但在一些特殊情况,需要复用一个Ingress来访问多个服务的,下面我们来实践下

再创建一个nginx的deployment和service,注意名称修改下不要冲突了

# kubectl create deployment web --image=nginx

deployment.apps/web created

# kubectl expose deployment web --port=80 --target-port=80

service/web exposed

# 确认下创建结果

# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 16h

web 1/1 1 1 45s

# kubectl get pod

# kubectl get svc

# 接着来修改Ingress

# 注意:这里可以通过两种方式来修改K8s正在运行的资源

# 第一种:直接通过edit修改在线服务的资源来生效,这个通常用在测试环境,在实际生产中不建议这么用

kubectl edit ingress nginx-ingress

# 第二种: 通过之前创建ingress的yaml配置,在上面进行修改,再apply更新进K8s,在生产中是建议这么用的,我们这里也用这种方式来修改

# vim nginx-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

nginx.ingress.kubernetes.io/rewrite-target: / # 注意这里需要把进来到服务的请求重定向到/,这个和传统的nginx配置是一样的,不配会404

name: nginx-ingress

spec:

rules:

- host: nginx.boge.com

http:

paths:

- backend:

serviceName: nginx

servicePort: 80

path: /nginx # 注意这里的路由名称要是唯一的

- backend: # 从这里开始是新增加的

serviceName: web

servicePort: 80

path: /web # 注意这里的路由名称要是唯一的

# 开始创建

[root@node-1 ~]# kubectl apply -f nginx-ingress.yaml

ingress.extensions/nginx-ingress configured

# 同时为了更直观的看到效果,我们按前面讲到的方法来修改下nginx默认的展示页面

# kubectl exec -it nginx-f89759699-6vgr8 -- bash

echo "i am nginx" > /usr/share/nginx/html/index.html

# kubectl exec -it web-5dcb957ccc-nr2m7 -- bash

echo "i am web" > /usr/share/nginx/html/index.html

15.因为http属于是明文传输数据不安全,在生产中我们通常会配置https加密通信,现在实战下Ingress的tls配置

# 这里我先自签一个https的证书

#1. 先生成私钥key

openssl genrsa -out tls.key 2048

#2.再基于key生成tls证书(注意:这里我用的*.boge.com,这是生成泛域名的证书,后面所有新增加的三级域名都是可以用这个证书的)

openssl req -new -x509 -key tls.key -out tls.cert -days 360 -subj /CN=*.boge.com

# 看下创建结果

# ll

-rw-r--r-- 1 root root 1099 Nov 27 11:44 tls.cert

-rw-r--r-- 1 root root 1679 Nov 27 11:43 tls.key

# 在K8s上创建tls的secret(注意默认ns是default)

kubectl create secret tls mytls --cert=tls.cert --key=tls.key

# 然后修改先的ingress的yaml配置

# cat nginx-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

nginx.ingress.kubernetes.io/rewrite-target: / # 注意这里需要把进来到服务的请求重定向到/,这个和传统的nginx配置是一样的,不配会404

name: nginx-ingress

spec:

rules:

- host: nginx.boge.com

http:

paths:

- backend:

serviceName: nginx

servicePort: 80

path: /nginx # 注意这里的路由名称要是唯一的

- backend: # 从这里开始是新增加的

serviceName: web

servicePort: 80

path: /web # 注意这里的路由名称要是唯一的

tls: # 增加下面这段,注意缩进格式

- hosts:

- nginx.boge.com # 这里域名和上面的对应

secretName: mytls # 这是我先生成的secret

# 进行更新

kubectl apply -f nginx-ingress.yaml

16.测试现在再来看看https访问的效果:

https://nginx.boge.com/nginx

https://nginx.boge.com/web

注意:这里因为是我自签的证书,所以浏览器地访问时会提示您的连接不是私密连接 ,我这里用的谷歌浏览器,直接点高级,再点击继续前往nginx.boge.com(不安全)

3.1 helm3安装 ingress-nginx

1.下载 ingress-nginx-4.2.5.tgz

helm fetch ingress-nginx/ingress-nginx --version 4.2.5

#或者curl -LO https://github.com/kubernetes/ingress-nginx/releases/download/helm-chart-4.2.5/ingress-nginx-4.2.5.tgz

#或者curl -LO https://storage.corpintra.plus/kubernetes/charts/ingress-nginx-4.2.5.tgz2.解压,修改文件

sudo tar -xvf ingress-nginx-4.2.5.tgz && sudo cd ingress-nginx

#下面是已经修改完毕的,可以直接使用

vim values.yaml

## nginx configuration

## Ref: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/index.md

commonLabels: {}

# scmhash: abc123

# myLabel: aakkmd

controller:

name: controller

image:

chroot: false

registry: registry.cn-hangzhou.aliyuncs.com

image: google_containers/nginx-ingress-controller

## repository:

tag: "v1.3.1"

#digest: sha256:54f7fe2c6c5a9db9a0ebf1131797109bb7a4d91f56b9b362bde2abd237dd1974

#digestChroot: sha256:a8466b19c621bd550b1645e27a004a5cc85009c858a9ab19490216735ac432b1

pullPolicy: IfNotPresent

# www-data -> uid 101

runAsUser: 101

allowPrivilegeEscalation: true

# -- Use an existing PSP instead of creating one

existingPsp: ""

# -- Configures the controller container name

containerName: controller

# -- Configures the ports that the nginx-controller listens on

containerPort:

http: 80

https: 443

# -- Will add custom configuration options to Nginx https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/

config: {}

# -- Annotations to be added to the controller config configuration configmap.

configAnnotations: {}

# -- Will add custom headers before sending traffic to backends according to https://github.com/kubernetes/ingress-nginx/tree/main/docs/examples/customization/custom-headers

proxySetHeaders: {}

# -- Will add custom headers before sending response traffic to the client according to: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/#add-headers

addHeaders: {}

# -- Optionally customize the pod dnsConfig.

dnsConfig: {}

# -- Optionally customize the pod hostname.

hostname: {}

# -- Optionally change this to ClusterFirstWithHostNet in case you have 'hostNetwork: true'.

# By default, while using host network, name resolution uses the host's DNS. If you wish nginx-controller

# to keep resolving names inside the k8s network, use ClusterFirstWithHostNet.

dnsPolicy: ClusterFirstWithHostNet

# -- Bare-metal considerations via the host network https://kubernetes.github.io/ingress-nginx/deploy/baremetal/#via-the-host-network

# Ingress status was blank because there is no Service exposing the NGINX Ingress controller in a configuration using the host network, the default --publish-service flag used in standard cloud setups does not apply

reportNodeInternalIp: false

# -- Process Ingress objects without ingressClass annotation/ingressClassName field

# Overrides value for --watch-ingress-without-class flag of the controller binary

# Defaults to false

watchIngressWithoutClass: false

# -- Process IngressClass per name (additionally as per spec.controller).

ingressClassByName: false

# -- This configuration defines if Ingress Controller should allow users to set

# their own *-snippet annotations, otherwise this is forbidden / dropped

# when users add those annotations.

# Global snippets in ConfigMap are still respected

allowSnippetAnnotations: true

# -- Required for use with CNI based kubernetes installations (such as ones set up by kubeadm),

# since CNI and hostport don't mix yet. Can be deprecated once https://github.com/kubernetes/kubernetes/issues/23920

# is merged

hostNetwork: true

## Use host ports 80 and 443

## Disabled by default

hostPort:

# -- Enable 'hostPort' or not

enabled: false

ports:

# -- 'hostPort' http port

http: 80

# -- 'hostPort' https port

https: 443

# -- Election ID to use for status update

electionID: ingress-controller-leader

## This section refers to the creation of the IngressClass resource

## IngressClass resources are supported since k8s >= 1.18 and required since k8s >= 1.19

ingressClassResource:

# -- Name of the ingressClass

name: nginx

# -- Is this ingressClass enabled or not

enabled: true

# -- Is this the default ingressClass for the cluster

default: false

# -- Controller-value of the controller that is processing this ingressClass

controllerValue: "k8s.io/ingress-nginx"

# -- Parameters is a link to a custom resource containing additional

# configuration for the controller. This is optional if the controller

# does not require extra parameters.

parameters: {}

# -- For backwards compatibility with ingress.class annotation, use ingressClass.

# Algorithm is as follows, first ingressClassName is considered, if not present, controller looks for ingress.class annotation

ingressClass: nginx

# -- Labels to add to the pod container metadata

podLabels: {}

# key: value

# -- Security Context policies for controller pods

podSecurityContext: {}

# -- See https://kubernetes.io/docs/tasks/administer-cluster/sysctl-cluster/ for notes on enabling and using sysctls

sysctls: {}

# sysctls:

# "net.core.somaxconn": "8192"

# -- Allows customization of the source of the IP address or FQDN to report

# in the ingress status field. By default, it reads the information provided

# by the service. If disable, the status field reports the IP address of the

# node or nodes where an ingress controller pod is running.

publishService:

# -- Enable 'publishService' or not

enabled: true

# -- Allows overriding of the publish service to bind to

# Must be /

pathOverride: ""

# Limit the scope of the controller to a specific namespace

scope:

# -- Enable 'scope' or not

enabled: false

# -- Namespace to limit the controller to; defaults to $(POD_NAMESPACE)

namespace: ""

# -- When scope.enabled == false, instead of watching all namespaces, we watching namespaces whose labels

# only match with namespaceSelector. Format like foo=bar. Defaults to empty, means watching all namespaces.

namespaceSelector: ""

# -- Allows customization of the configmap / nginx-configmap namespace; defaults to $(POD_NAMESPACE)

configMapNamespace: ""

tcp:

# -- Allows customization of the tcp-services-configmap; defaults to $(POD_NAMESPACE)

configMapNamespace: ""

# -- Annotations to be added to the tcp config configmap

annotations: {}

udp:

# -- Allows customization of the udp-services-configmap; defaults to $(POD_NAMESPACE)

configMapNamespace: ""

# -- Annotations to be added to the udp config configmap

annotations: {}

# -- Maxmind license key to download GeoLite2 Databases.

## https://blog.maxmind.com/2019/12/18/significant-changes-to-accessing-and-using-geolite2-databases

maxmindLicenseKey: ""

# -- Additional command line arguments to pass to nginx-ingress-controller

# E.g. to specify the default SSL certificate you can use

extraArgs: {}

## extraArgs:

## default-ssl-certificate: "/"

# -- Additional environment variables to set

extraEnvs: []

# extraEnvs:

# - name: FOO

# valueFrom:

# secretKeyRef:

# key: FOO

# name: secret-resource

# -- Use a `DaemonSet` or `Deployment`

kind: DaemonSet

# -- Annotations to be added to the controller Deployment or DaemonSet

##

annotations: {}

# keel.sh/pollSchedule: "@every 60m"

# -- Labels to be added to the controller Deployment or DaemonSet and other resources that do not have option to specify labels

##

labels: {}

# keel.sh/policy: patch

# keel.sh/trigger: poll

# -- The update strategy to apply to the Deployment or DaemonSet

##

updateStrategy: {}

# rollingUpdate:

# maxUnavailable: 1

# type: RollingUpdate

# -- `minReadySeconds` to avoid killing pods before we are ready

##

minReadySeconds: 0

# -- Node tolerations for server scheduling to nodes with taints

## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/

##

tolerations: []

# - key: "key"

# operator: "Equal|Exists"

# value: "value"

# effect: "NoSchedule|PreferNoSchedule|NoExecute(1.6 only)"

# -- Affinity and anti-affinity rules for server scheduling to nodes

## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity

##

affinity: {}

# # An example of preferred pod anti-affinity, weight is in the range 1-100

# podAntiAffinity:

# preferredDuringSchedulingIgnoredDuringExecution:

# - weight: 100

# podAffinityTerm:

# labelSelector:

# matchExpressions:

# - key: app.kubernetes.io/name

# operator: In

# values:

# - ingress-nginx

# - key: app.kubernetes.io/instance

# operator: In

# values:

# - ingress-nginx

# - key: app.kubernetes.io/component

# operator: In

# values:

# - controller

# topologyKey: kubernetes.io/hostname

# # An example of required pod anti-affinity

# podAntiAffinity:

# requiredDuringSchedulingIgnoredDuringExecution:

# - labelSelector:

# matchExpressions:

# - key: app.kubernetes.io/name

# operator: In

# values:

# - ingress-nginx

# - key: app.kubernetes.io/instance

# operator: In

# values:

# - ingress-nginx

# - key: app.kubernetes.io/component

# operator: In

# values:

# - controller

# topologyKey: "kubernetes.io/hostname"

# -- Topology spread constraints rely on node labels to identify the topology domain(s) that each Node is in.

## Ref: https://kubernetes.io/docs/concepts/workloads/pods/pod-topology-spread-constraints/

##

topologySpreadConstraints: []

# - maxSkew: 1

# topologyKey: topology.kubernetes.io/zone

# whenUnsatisfiable: DoNotSchedule

# labelSelector:

# matchLabels:

# app.kubernetes.io/instance: ingress-nginx-internal

# -- `terminationGracePeriodSeconds` to avoid killing pods before we are ready

## wait up to five minutes for the drain of connections

##

terminationGracePeriodSeconds: 300

# -- Node labels for controller pod assignment

## Ref: https://kubernetes.io/docs/user-guide/node-selection/

##

nodeSelector:

kubernetes.io/os: linux

ingress: "true"

## Liveness and readiness probe values

## Ref: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes

##

## startupProbe:

## httpGet:

## # should match container.healthCheckPath

## path: "/healthz"

## port: 10254

## scheme: HTTP

## initialDelaySeconds: 5

## periodSeconds: 5

## timeoutSeconds: 2

## successThreshold: 1

## failureThreshold: 5

livenessProbe:

httpGet:

# should match container.healthCheckPath

path: "/healthz"

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 1

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

# should match container.healthCheckPath

path: "/healthz"

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 1

successThreshold: 1

failureThreshold: 3

# -- Path of the health check endpoint. All requests received on the port defined by

# the healthz-port parameter are forwarded internally to this path.

healthCheckPath: "/healthz"

# -- Address to bind the health check endpoint.

# It is better to set this option to the internal node address

# if the ingress nginx controller is running in the `hostNetwork: true` mode.

healthCheckHost: ""

# -- Annotations to be added to controller pods

##

podAnnotations: {}

replicaCount: 1

minAvailable: 1

## Define requests resources to avoid probe issues due to CPU utilization in busy nodes

## ref: https://github.com/kubernetes/ingress-nginx/issues/4735#issuecomment-551204903

## Ideally, there should be no limits.

## https://engineering.indeedblog.com/blog/2019/12/cpu-throttling-regression-fix/

resources:

## limits:

## cpu: 100m

## memory: 90Mi

requests:

cpu: 100m

memory: 90Mi

# Mutually exclusive with keda autoscaling

autoscaling:

enabled: false

minReplicas: 1

maxReplicas: 11

targetCPUUtilizationPercentage: 50

targetMemoryUtilizationPercentage: 50

behavior: {}

# scaleDown:

# stabilizationWindowSeconds: 300

# policies:

# - type: Pods

# value: 1

# periodSeconds: 180

# scaleUp:

# stabilizationWindowSeconds: 300

# policies:

# - type: Pods

# value: 2

# periodSeconds: 60

autoscalingTemplate: []

# Custom or additional autoscaling metrics

# ref: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-custom-metrics

# - type: Pods

# pods:

# metric:

# name: nginx_ingress_controller_nginx_process_requests_total

# target:

# type: AverageValue

# averageValue: 10000m

# Mutually exclusive with hpa autoscaling

keda:

apiVersion: "keda.sh/v1alpha1"

## apiVersion changes with keda 1.x vs 2.x

## 2.x = keda.sh/v1alpha1

## 1.x = keda.k8s.io/v1alpha1

enabled: false

minReplicas: 1

maxReplicas: 11

pollingInterval: 30

cooldownPeriod: 300

restoreToOriginalReplicaCount: false

scaledObject:

annotations: {}

# Custom annotations for ScaledObject resource

# annotations:

# key: value

triggers: []

# - type: prometheus

# metadata:

# serverAddress: http://:9090

# metricName: http_requests_total

# threshold: '100'

# query: sum(rate(http_requests_total{deployment="my-deployment"}[2m]))

behavior: {}

# scaleDown:

# stabilizationWindowSeconds: 300

# policies:

# - type: Pods

# value: 1

# periodSeconds: 180

# scaleUp:

# stabilizationWindowSeconds: 300

# policies:

# - type: Pods

# value: 2

# periodSeconds: 60

# -- Enable mimalloc as a drop-in replacement for malloc.

## ref: https://github.com/microsoft/mimalloc

##

enableMimalloc: true

## Override NGINX template

customTemplate:

configMapName: ""

configMapKey: ""

service:

enabled: true

# -- If enabled is adding an appProtocol option for Kubernetes service. An appProtocol field replacing annotations that were

# using for setting a backend protocol. Here is an example for AWS: service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http

# It allows choosing the protocol for each backend specified in the Kubernetes service.

# See the following GitHub issue for more details about the purpose: https://github.com/kubernetes/kubernetes/issues/40244

# Will be ignored for Kubernetes versions older than 1.20

##

appProtocol: true

annotations: {}

labels: {}

# clusterIP: ""

# -- List of IP addresses at which the controller services are available

## Ref: https://kubernetes.io/docs/user-guide/services/#external-ips

##

externalIPs: []

# -- Used by cloud providers to connect the resulting `LoadBalancer` to a pre-existing static IP according to https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer

loadBalancerIP: ""

loadBalancerSourceRanges: []

enableHttp: true

enableHttps: true

## Set external traffic policy to: "Local" to preserve source IP on providers supporting it.

## Ref: https://kubernetes.io/docs/tutorials/services/source-ip/#source-ip-for-services-with-typeloadbalancer

# externalTrafficPolicy: ""

## Must be either "None" or "ClientIP" if set. Kubernetes will default to "None".

## Ref: https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies

# sessionAffinity: ""

## Specifies the health check node port (numeric port number) for the service. If healthCheckNodePort isn’t specified,

## the service controller allocates a port from your cluster’s NodePort range.

## Ref: https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip

# healthCheckNodePort: 0

# -- Represents the dual-stack-ness requested or required by this Service. Possible values are

# SingleStack, PreferDualStack or RequireDualStack.

# The ipFamilies and clusterIPs fields depend on the value of this field.

## Ref: https://kubernetes.io/docs/concepts/services-networking/dual-stack/

ipFamilyPolicy: "SingleStack"

# -- List of IP families (e.g. IPv4, IPv6) assigned to the service. This field is usually assigned automatically

# based on cluster configuration and the ipFamilyPolicy field.

## Ref: https://kubernetes.io/docs/concepts/services-networking/dual-stack/

ipFamilies:

- IPv4

ports:

http: 80

https: 443

targetPorts:

http: http

https: https

type: LoadBalancer

## type: NodePort

## nodePorts:

## http: 32080

## https: 32443

## tcp:

## 8080: 32808

nodePorts:

http: ""

https: ""

tcp: {}

udp: {}

external:

enabled: true

internal:

# -- Enables an additional internal load balancer (besides the external one).

enabled: false

# -- Annotations are mandatory for the load balancer to come up. Varies with the cloud service.

annotations: {}

# loadBalancerIP: ""

# -- Restrict access For LoadBalancer service. Defaults to 0.0.0.0/0.

loadBalancerSourceRanges: []

## Set external traffic policy to: "Local" to preserve source IP on

## providers supporting it

## Ref: https://kubernetes.io/docs/tutorials/services/source-ip/#source-ip-for-services-with-typeloadbalancer

# externalTrafficPolicy: ""

# shareProcessNamespace enables process namespace sharing within the pod.

# This can be used for example to signal log rotation using `kill -USR1` from a sidecar.

shareProcessNamespace: false

# -- Additional containers to be added to the controller pod.

# See https://github.com/lemonldap-ng-controller/lemonldap-ng-controller as example.

extraContainers: []

# - name: my-sidecar

# - name: POD_NAME

# valueFrom:

# fieldRef:

# fieldPath: metadata.name

# - name: POD_NAMESPACE

# valueFrom:

# fieldRef:

# fieldPath: metadata.namespace

# volumeMounts:

# - name: copy-portal-skins

# mountPath: /srv/var/lib/lemonldap-ng/portal/skins

# -- Additional volumeMounts to the controller main container.

extraVolumeMounts: []

# - name: copy-portal-skins

# mountPath: /var/lib/lemonldap-ng/portal/skins

# -- Additional volumes to the controller pod.

extraVolumes: []

# - name: copy-portal-skins

# emptyDir: {}

# -- Containers, which are run before the app containers are started.

extraInitContainers: []

# - name: init-myservice

# command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']

extraModules: []

## Modules, which are mounted into the core nginx image

# - name: opentelemetry

#

# The image must contain a `/usr/local/bin/init_module.sh` executable, which

# will be executed as initContainers, to move its config files within the

# mounted volume.

admissionWebhooks:

annotations: {}

# ignore-check.kube-linter.io/no-read-only-rootfs: "This deployment needs write access to root filesystem".

## Additional annotations to the admission webhooks.

## These annotations will be added to the ValidatingWebhookConfiguration and

## the Jobs Spec of the admission webhooks.

enabled: true

# -- Additional environment variables to set

extraEnvs: []

# extraEnvs:

# - name: FOO

# valueFrom:

# secretKeyRef:

# key: FOO

# name: secret-resource

# -- Admission Webhook failure policy to use

failurePolicy: Fail

# timeoutSeconds: 10

port: 8443

certificate: "/usr/local/certificates/cert"

key: "/usr/local/certificates/key"

namespaceSelector: {}

objectSelector: {}

# -- Labels to be added to admission webhooks

labels: {}

# -- Use an existing PSP instead of creating one

existingPsp: ""

networkPolicyEnabled: false

service:

annotations: {}

# clusterIP: ""

externalIPs: []

# loadBalancerIP: ""

loadBalancerSourceRanges: []

servicePort: 443

type: ClusterIP

createSecretJob:

resources: {}

# limits:

# cpu: 10m

# memory: 20Mi

# requests:

# cpu: 10m

# memory: 20Mi

patchWebhookJob:

resources: {}

patch:

enabled: true

image:

registry: registry.cn-hangzhou.aliyuncs.com

image: google_containers/kube-webhook-certgen

## for backwards compatibility consider setting the full image url via the repository value below

## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail

## repository:

tag: v1.3.0

# digest: sha256:549e71a6ca248c5abd51cdb73dbc3083df62cf92ed5e6147c780e30f7e007a47

pullPolicy: IfNotPresent

# -- Provide a priority class name to the webhook patching job

##

priorityClassName: ""

podAnnotations: {}

nodeSelector:

kubernetes.io/os: linux

tolerations: []

# -- Labels to be added to patch job resources

labels: {}

securityContext:

runAsNonRoot: true

runAsUser: 2000

fsGroup: 2000

metrics:

port: 10254

# if this port is changed, change healthz-port: in extraArgs: accordingly

enabled: false

service:

annotations: {}

# prometheus.io/scrape: "true"

# prometheus.io/port: "10254"

# clusterIP: ""

# -- List of IP addresses at which the stats-exporter service is available

## Ref: https://kubernetes.io/docs/user-guide/services/#external-ips

##

externalIPs: []

# loadBalancerIP: ""

loadBalancerSourceRanges: []

servicePort: 10254

type: ClusterIP

# externalTrafficPolicy: ""

# nodePort: ""

serviceMonitor:

enabled: false

additionalLabels: {}

## The label to use to retrieve the job name from.

## jobLabel: "app.kubernetes.io/name"

namespace: ""

namespaceSelector: {}

## Default: scrape .Release.Namespace only

## To scrape all, use the following:

## namespaceSelector:

## any: true

scrapeInterval: 30s

# honorLabels: true

targetLabels: []

relabelings: []

metricRelabelings: []

prometheusRule:

enabled: false

additionalLabels: {}

# namespace: ""

rules: []

# # These are just examples rules, please adapt them to your needs

# - alert: NGINXConfigFailed

# expr: count(nginx_ingress_controller_config_last_reload_successful == 0) > 0

# for: 1s

# labels:

# severity: critical

# annotations:

# description: bad ingress config - nginx config test failed

# summary: uninstall the latest ingress changes to allow config reloads to resume

# - alert: NGINXCertificateExpiry

# expr: (avg(nginx_ingress_controller_ssl_expire_time_seconds) by (host) - time()) < 604800

# for: 1s

# labels:

# severity: critical

# annotations:

# description: ssl certificate(s) will expire in less then a week

# summary: renew expiring certificates to avoid downtime

# - alert: NGINXTooMany500s

# expr: 100 * ( sum( nginx_ingress_controller_requests{status=~"5.+"} ) / sum(nginx_ingress_controller_requests) ) > 5

# for: 1m

# labels:

# severity: warning

# annotations:

# description: Too many 5XXs

# summary: More than 5% of all requests returned 5XX, this requires your attention

# - alert: NGINXTooMany400s

# expr: 100 * ( sum( nginx_ingress_controller_requests{status=~"4.+"} ) / sum(nginx_ingress_controller_requests) ) > 5

# for: 1m

# labels:

# severity: warning

# annotations:

# description: Too many 4XXs

# summary: More than 5% of all requests returned 4XX, this requires your attention

# -- Improve connection draining when ingress controller pod is deleted using a lifecycle hook:

# With this new hook, we increased the default terminationGracePeriodSeconds from 30 seconds

# to 300, allowing the draining of connections up to five minutes.

# If the active connections end before that, the pod will terminate gracefully at that time.

# To effectively take advantage of this feature, the Configmap feature

# worker-shutdown-timeout new value is 240s instead of 10s.

##

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

priorityClassName: ""

# -- Rollback limit

##

revisionHistoryLimit: 10

## Default 404 backend

##

defaultBackend:

##

enabled: false

name: defaultbackend

image:

registry: k8s.gcr.io

image: defaultbackend-amd64

## for backwards compatibility consider setting the full image url via the repository value below

## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail

## repository:

tag: "1.5"

pullPolicy: IfNotPresent

# nobody user -> uid 65534

runAsUser: 65534

runAsNonRoot: true

readOnlyRootFilesystem: true

allowPrivilegeEscalation: false

# -- Use an existing PSP instead of creating one

existingPsp: ""

extraArgs: {}

serviceAccount:

create: true

name: ""

automountServiceAccountToken: true

# -- Additional environment variables to set for defaultBackend pods

extraEnvs: []

port: 8080

## Readiness and liveness probes for default backend

## Ref: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-probes/

##

livenessProbe:

failureThreshold: 3

initialDelaySeconds: 30

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

readinessProbe:

failureThreshold: 6

initialDelaySeconds: 0

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 5

# -- Node tolerations for server scheduling to nodes with taints

## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/

##

tolerations: []

# - key: "key"

# operator: "Equal|Exists"

# value: "value"

# effect: "NoSchedule|PreferNoSchedule|NoExecute(1.6 only)"

affinity: {}

# -- Security Context policies for controller pods

# See https://kubernetes.io/docs/tasks/administer-cluster/sysctl-cluster/ for

# notes on enabling and using sysctls

##

podSecurityContext: {}

# -- Security Context policies for controller main container.

# See https://kubernetes.io/docs/tasks/administer-cluster/sysctl-cluster/ for

# notes on enabling and using sysctls

##

containerSecurityContext: {}

# -- Labels to add to the pod container metadata

podLabels: {}

# key: value

# -- Node labels for default backend pod assignment

## Ref: https://kubernetes.io/docs/user-guide/node-selection/

##

nodeSelector:

kubernetes.io/os: linux

# -- Annotations to be added to default backend pods

##

podAnnotations: {}

replicaCount: 1

minAvailable: 1

resources: {}

# limits:

# cpu: 10m

# memory: 20Mi

# requests:

# cpu: 10m

# memory: 20Mi

extraVolumeMounts: []

## Additional volumeMounts to the default backend container.

# - name: copy-portal-skins

# mountPath: /var/lib/lemonldap-ng/portal/skins

extraVolumes: []

## Additional volumes to the default backend pod.

# - name: copy-portal-skins

# emptyDir: {}

autoscaling:

annotations: {}

enabled: false

minReplicas: 1

maxReplicas: 2

targetCPUUtilizationPercentage: 50

targetMemoryUtilizationPercentage: 50

service:

annotations: {}

# clusterIP: ""

# -- List of IP addresses at which the default backend service is available

## Ref: https://kubernetes.io/docs/user-guide/services/#external-ips

##

externalIPs: []

# loadBalancerIP: ""

loadBalancerSourceRanges: []

servicePort: 80

type: ClusterIP

priorityClassName: ""

# -- Labels to be added to the default backend resources

labels: {}

## Enable RBAC as per https://github.com/kubernetes/ingress-nginx/blob/main/docs/deploy/rbac.md and https://github.com/kubernetes/ingress-nginx/issues/266

rbac:

create: true

scope: false

## If true, create & use Pod Security Policy resources

## https://kubernetes.io/docs/concepts/policy/pod-security-policy/

podSecurityPolicy:

enabled: false

serviceAccount:

create: true

name: ""

automountServiceAccountToken: true

# -- Annotations for the controller service account

annotations: {}

# -- Optional array of imagePullSecrets containing private registry credentials

## Ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

imagePullSecrets: []

# - name: secretName

# -- TCP service key-value pairs

## Ref: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/exposing-tcp-udp-services.md

##

tcp: {}

# 8080: "default/example-tcp-svc:9000"

# -- UDP service key-value pairs

## Ref: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/exposing-tcp-udp-services.md

##

udp: {}

# 53: "kube-system/kube-dns:53"

# -- Prefix for TCP and UDP ports names in ingress controller service

## Some cloud providers, like Yandex Cloud may have a requirements for a port name regex to support cloud load balancer integration

portNamePrefix: ""

# -- (string) A base64-encoded Diffie-Hellman parameter.

# This can be generated with: `openssl dhparam 4096 2> /dev/null | base64`

## Ref: https://github.com/kubernetes/ingress-nginx/tree/main/docs/examples/customization/ssl-dh-param

dhParam: 3.安装ingress

# 选择节点打label

kubectl label node k8s-node01 ingress=true # k8s-node01是自己自定义的node节点名称

kubectl get node --show-labels

#创建命名空间

kubectl create ns ingress-nginx

# 使用helm进行安装

helm install ingress-nginx -f values.yaml -n ingress-nginx .

helm list -n ingress-nginx

kubectl -n ingress-nginx get pods -o wide

kubectl -n ingress-nginx get svc -o wide

# 删除ingress-nginx

helm delete ingress-nginx -n ingress-nginx

# 更新ingress-nginx

helm upgrade ingress-nginx -n -f values.yaml -n ingress-nginx .4.测试网页

vim test-nginx.yaml

metadata:

name: web #命名空间名称

labels:

name: label-web #pod标签

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-deploy-nginx

namespace: web

spec:

replicas: 2

selector:

matchLabels:

app: mynginx

template:

metadata:

labels:

app: mynginx

spec:

containers:

- name: mynginx

image: nginx

ports:

- containerPort: 80

---

kind: Service

apiVersion: v1

metadata:

name: myservice

namespace: web

spec:

ports:

- protocol: TCP

port: 80

targetPort: 80

selector:

app: mynginx

type: ClusterIP

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: mynginx

namespace: web # 指定ingress的命名空间,害怕与其它Pod IP冲突

spec:

ingressClassName: "nginx" #在部署ingress-nginx时,valume.yaml文件中定义的

rules:

- host: nginx.rw.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: myservice

port:

number: 80

kubectl apply -f test-nginx.yaml

kubectl -n web get all

kubectl -n web get ingress

四、k8sHPA 自动水平伸缩pod

1.pod内资源分配的配置格式如下:

默认可以只配置requests,但根据生产中的经验,建议把limits资源限制也加上,因为对K8s来说,只有这两个都配置了且配置的值都要一样,这个pod资源的优先级才是最高的,在node资源不够的情况下,首先是把没有任何资源分配配置的pod资源给干掉,其次是只配置了requests的,最后才是两个都配置的情况,仔细品品

resources:

limits: # 限制单个pod最多能使用1核(1000m 毫核)cpu以及2G内存

cpu: "1"

memory: 2Gi

requests: # 保证这个pod初始就能分配这么多资源

cpu: "1"

memory: 2Gi

2.我们现在以上面创建的deployment资源web来实践下hpa的效果,首先用我们学到的方法导出web的yaml配置,并增加资源分配配置增加

apiVersion: v1

kind: Service

metadata:

labels:

app: web

name: web

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: web

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: web

name: web

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- image: nginx

name: web

resources:

limits: # 因为我这里是测试环境,所以这里CPU只分配50毫核(0.05核CPU)和20M的内存

cpu: "50m"

memory: 20Mi

requests: # 保证这个pod初始就能分配这么多资源

cpu: "50m"

memory: 20Mi

3.运行

kubectl apply -f web.yaml

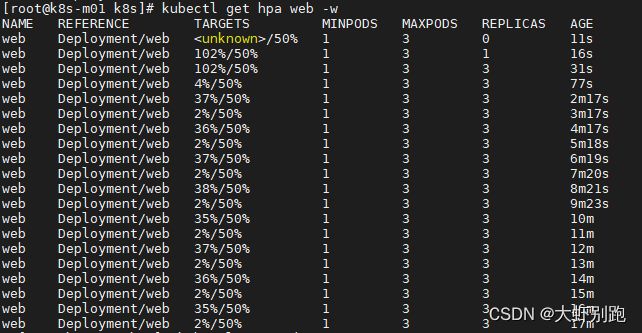

4.第一种:为deployment资源web创建hpa,pod数量上限3个,最低1个,在pod平均CPU达到50%后开始扩容

kubectl autoscale deployment web --max=3 --min=1 --cpu-percent=50

5.第二种创建hpa

cat hpa-web.yaml

apiVersion: autoscaling/v2beta1 # v2beta1版本

#apiVersion: apps/v1

kind: HorizontalPodAutoscaler

metadata:

name: web

spec:

maxReplicas: 10

minReplicas: 1 # 1-10个pod范围内扩容与裁剪

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: web

metrics:

- type: Resource

resource:

name: memory

targetAverageUtilization: 50 # 50%内存利用

6.执行

kubectl apply -f hpa-web.yaml7.我们启动一个临时pod,来模拟大量请求

kubectl run -it --rm busybox --image=busybox -- sh

/ # while :;do wget -q -O- http://web;done

#等待2 ~ 3分钟,注意k8s为了避免频繁增删pod,对副本的增加速度有限制

kubectl get hpa web -w

五、k8s存储

5.1 k8s持久化存储02pv pvc

开始部署NFS-SERVER

# 我们这里在10.0.1.201上安装(在生产中,大家要提供作好NFS-SERVER环境的规划)

yum -y install nfs-utils

# 创建NFS挂载目录

mkdir /nfs_dir

chown nobody.nobody /nfs_dir

# 修改NFS-SERVER配置

echo '/nfs_dir *(rw,sync,no_root_squash)' > /etc/exports

# 重启服务

systemctl restart rpcbind.service

systemctl restart nfs-utils.service

systemctl restart nfs-server.service

# 增加NFS-SERVER开机自启动

systemctl enable rpcbind.service

systemctl enable nfs-utils.service

systemctl enable nfs-server.service

# 验证NFS-SERVER是否能正常访问

showmount -e 10.0.1.201

#需要挂载的服务器执行

yum install nfs-utils -y

接着准备好pv的yaml配置,保存为pv1.yaml

# cat pv1.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv1

labels:

type: test-claim # 这里建议打上一个独有的标签,方便在多个pv的时候方便提供pvc选择挂载

spec:

capacity:

storage: 1Gi # <---------- 1

accessModes:

- ReadWriteOnce # <---------- 2

persistentVolumeReclaimPolicy: Recycle # <---------- 3

storageClassName: nfs # <---------- 4

nfs:

path: /nfs_dir/pv1 # <---------- 5

server: 10.0.1.201

- capacity 指定 PV 的容量为 1G。

- accessModes 指定访问模式为 ReadWriteOnce,支持的访问模式有: ReadWriteOnce – PV 能以 read-write 模式 mount 到单个节点。 ReadOnlyMany – PV 能以 read-only 模式 mount 到多个节点。 ReadWriteMany – PV 能以 read-write 模式 mount 到多个节点。

- persistentVolumeReclaimPolicy 指定当 PV 的回收策略为 Recycle,支持的策略有: Retain – 需要管理员手工回收。 Recycle – 清除 PV 中的数据,效果相当于执行 rm -rf /thevolume/*。 Delete – 删除 Storage Provider 上的对应存储资源,例如 AWS EBS、GCE PD、Azure Disk、OpenStack Cinder Volume 等。

- storageClassName 指定 PV 的 class 为 nfs。相当于为 PV 设置了一个分类,PVC 可以指定 class 申请相应 class 的 PV。

- 指定 PV 在 NFS 服务器上对应的目录,这里注意,我测试的时候,需要手动先创建好这个目录并授权好,不然后面挂载会提示目录不存在 mkdir /nfsdata/pv1 && chown -R nobody.nogroup /nfsdata 。

创建这个pv

# kubectl apply -f pv1.yaml

persistentvolume/pv1 created

# STATUS 为 Available,表示 pv1 就绪,可以被 PVC 申请

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 1Gi RWO Recycle Available nfs 4m45s

接着准备PVC的yaml,保存为pvc1.yaml

cat pvc1.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: pvc1

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

storageClassName: nfs

selector:

matchLabels:

type: test-claim

创建这个pvc

# kubectl apply -f pvc1.yaml

persistentvolumeclaim/pvc1 created

# 看下pvc的STATUS为Bound代表成功挂载到pv了

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Bound pv1 1Gi RWO nfs 2s

# 这个时候再看下pv,STATUS也是Bound了,同时CLAIM提示被default/pvc1消费

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 1Gi RWO Recycle Bound default/pvc1 nfs

下面讲下如何回收PVC以及PV

# 这里删除时会一直卡着,我们按ctrl+c看看怎么回事

# kubectl delete pvc pvc1

persistentvolumeclaim "pvc1" deleted

^C

# 看下pvc发现STATUS是Terminating删除中的状态,我分析是因为服务pod还在占用这个pvc使用中

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Terminating pv1 1Gi RWO nfs 21m

# 先删除这个pod

# kubectl delete pod nginx-569546db98-99qpq

pod "nginx-569546db98-99qpq" deleted

# 再看先删除的pvc已经没有了

# kubectl get pvc

No resources found in default namespace.

# 根据先前创建pv时的数据回收策略为Recycle – 清除 PV 中的数据,这时果然先创建的index.html已经被删除了,在生产中要尤其注意这里的模式,注意及时备份数据,注意及时备份数据,注意及时备份数据

# ll /nfs_dir/pv1/

total 0

# 虽然此时pv是可以再次被pvc来消费的,但根据生产的经验,建议在删除pvc时,也同时把它消费的pv一并删除,然后再重启创建都是可以的

5.2 StorageClass

1.k8s持久化存储的第三节,给大家带来 StorageClass动态存储的讲解。

我们上节课提到了K8s对于存储解耦的设计是,pv交给存储管理员来管理,我们只管用pvc来消费就好,但这里我们实际还是得一起管理pv和pvc,在实际工作中,我们(存储管理员)可以提前配置好pv的动态供给StorageClass,来根据pvc的消费动态生成pv。

StorageClass

我这是直接拿生产中用的实例来作演示,利用nfs-client-provisioner来生成一个基于nfs的StorageClass,部署配置yaml配置如下,保持为nfs-sc.yaml:

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

namespace: kube-system

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["list", "watch", "create", "update", "patch"]

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

namespace: kube-system

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Deployment

apiVersion: apps/v1

metadata:

name: nfs-provisioner-01

namespace: kube-system

spec:

replicas: 1

strategy:

type: Recreate

selector:

matchLabels:

app: nfs-provisioner-01

template:

metadata:

labels:

app: nfs-provisioner-01

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

#老版本插件使用jmgao1983/nfs-client-provisioner:latest

# image: jmgao1983/nfs-client-provisioner:latest

image: vbouchaud/nfs-client-provisioner:latest

imagePullPolicy: IfNotPresent

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: nfs-provisioner-01 # 此处供应者名字供storageclass调用

- name: NFS_SERVER

value: 10.0.1.201 # 填入NFS的地址

- name: NFS_PATH

value: /nfs_dir # 填入NFS挂载的目录

volumes:

- name: nfs-client-root

nfs:

server: 10.0.1.201 # 填入NFS的地址

path: /nfs_dir # 填入NFS挂载的目录

---

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-boge

provisioner: nfs-provisioner-01

# Supported policies: Delete、 Retain , default is Delete

reclaimPolicy: Retain

2.开始创建这个StorageClass

# kubectl apply -f nfs-sc.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

deployment.apps/nfs-provisioner-01 created

orageclass.storage.k8s.io/nfs-boge created

# 注意这个是在放kube-system的namespace下面,这里面放置一些偏系统类的服务

# kubectl -n kube-system get pod -w

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7fdc86d8ff-dpdm5 1/1 Running 1 24h

calico-node-8jcp5 1/1 Running 1 24h

calico-node-m92rn 1/1 Running 1 24h

calico-node-xg5n4 1/1 Running 1 24h

calico-node-xrfqq 1/1 Running 1 24h

coredns-d9b6857b5-5zwgf 1/1 Running 1 24h

metrics-server-869ffc99cd-wfj44 1/1 Running 2 24h

nfs-provisioner-01-5db96d9cc9-qxlgk 0/1 ContainerCreating 0 9s

nfs-provisioner-01-5db96d9cc9-qxlgk 1/1 Running 0 21s

# StorageClass已经创建好了

# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-boge nfs-provisioner-01 Retain Immediate false 37s

3.我们来基于StorageClass创建一个pvc,看看动态生成的pv是什么效果

# vim pvc-sc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: pvc-sc

spec:

storageClassName: nfs-boge

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1Mi

# kubectl apply -f pvc-sc.yaml

persistentvolumeclaim/pvc-sc created

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-sc Bound pvc-63eee4c7-90fd-4c7e-abf9-d803c3204623 1Mi RWX nfs-boge 3s

pvc1 Bound pv1 1Gi RWO nfs 24m

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 1Gi RWO Recycle Bound default/pvc1 nfs 49m

pvc-63eee4c7-90fd-4c7e-abf9-d803c3204623 1Mi RWX Retain Bound default/pvc-sc nfs-boge 7s

4.我们修改下nginx的yaml配置,将pvc的名称换成上面的pvc-sc:

# vim nginx.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx

name: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- image: nginx

name: nginx

volumeMounts: # 我们这里将nginx容器默认的页面目录挂载

- name: html-files

mountPath: "/usr/share/nginx/html"

volumes:

- name: html-files

persistentVolumeClaim:

claimName: pvc-sc

# kubectl apply -f nginx.yaml

service/nginx unchanged

deployment.apps/nginx configured

# 这里注意下,因为是动态生成的pv,所以它的目录基于是一串随机字符串生成的,这时我们直接进到pod内来创建访问页面

# kubectl exec -it nginx-57cdc6d9b4-n497g -- bash

root@nginx-57cdc6d9b4-n497g:/# echo 'storageClass used' > /usr/share/nginx/html/index.html

root@nginx-57cdc6d9b4-n497g:/# exit

# curl 10.68.238.54

storageClass used

# 我们看下NFS挂载的目录

# ll /nfs_dir/

total 0

drwxrwxrwx 2 root root 24 Nov 27 17:52 default-pvc-sc-pvc-63eee4c7-90fd-4c7e-abf9-d803c3204623

drwxr-xr-x 2 root root 6 Nov 27 17:25 pv1

5.ubuntu20.04系统

#1安装nfs服务端

sudo apt install nfs-kernel-server -y

#2. 创建目录

sudo mkdir -p /nfs_dir/

#3. 使任何客户端均可访问

sudo chown nobody:nogroup /data/k8s/

#sudo chmod 755 /nfs_dir/

sudo chmod 777 /nfs_dir/

#4. 配置/etc/exports文件, 使任何ip均可访问(加入以下语句)

vi /etc/exports

/nfs_dir/ *(rw,sync,no_subtree_check)

#5. 检查nfs服务的目录

# (重新加载配置)

sudo exportfs -ra

#(查看共享的目录和允许访问的ip段)

sudo showmount -e

#6. 重启nfs服务使以上配置生效

sudo systemctl restart nfs-kernel-server

#sudo /etc/init.d/nfs-kernel-server restart

#查看nfs服务的状态是否为active状态:active(exited)或active(runing)

systemctl status nfs-kernel-server

#7. 测试nfs服务是否成功启动

#安装nfs 客户端

sudo apt-get install nfs-common

#创建挂载目录

sudo mkdir /nfs_dir/

#7.4 在主机上的Linux中测试是否正常

sudo mount -t nfs -o nolock -o tcp 192.168.100.11:/nfs_dir/ /nfs_dir/

#错误 mount.nfs: access denied by server while mounting

六、k8s架构师课程之有状态服务StatefulSet

1.StatefulSet

前面我们讲到了Deployment、DaemonSet都只适合用来跑无状态的服务pod,那么这里的StatefulSet(简写sts)就是用来跑有状态服务pod的。

那怎么理解有状态服务和无状态服务呢?简单快速地理解为:无状态服务最典型的是WEB服务器的每次http请求,它的每次请求都是全新的,和之前的没有关系;那么有状态服务用网游服务器来举例比较恰当了,每个用户的登陆请求,服务端都是先根据这个用户之前注册过的帐号密码等信息来判断这次登陆请求是否正常。

无状态服务因为相互之前都是独立的,很适合用横向扩充来增加服务的资源量

还有一个很形象的比喻,在K8s的无状态服务的pod有点类似于农村圈养的牲畜,饲养它们的人不会给它们每个都单独取个名字(pod都是随机名称,IP每次发生重启也是变化的),当其中一只病了或被卖了,带来的感观只是数量上的减少,这时再买些相应数量的牲畜回来就可以回到之前的状态了(当一个pod因为某些原来被删除掉的时候,K8s会启动一个新的pod来代替它);而有状态服务的pod就像养的一只只宠物,主人对待自己喜欢的宠物都会给它们取一个比较有特色的名字(在K8s上运行的有状态服务的pod,都会被给予一个独立的固定名称),并且每只宠物都有它独特的外貌和性格,如果万一这只宠物丢失了,那么需要到宠物店再买一只同样品种同样毛色的宠物来代替了(当有状态服务的pod删除时,K8s会启动一个和先前一模一样名称的pod来代替它)。

有状态服务sts比较常见的mongo复制集 ,redis cluster,rabbitmq cluster等等,这些服务基本都会用StatefulSet模式来运行,当然除了这个,它们内部集群的关系还需要一系列脚本或controller来维系它们间的状态,这些会在后面进阶课程专门来讲,现在为了让大家先更好的明白StatefulSet,我这里直接还是用nginx服务来实战演示

1、创建pv

-------------------------------------------

root@node1:~# cat web-pv.yaml

# mkdir -p /nfs_dir/{web-pv0,web-pv1}

apiVersion: v1

kind: PersistentVolume

metadata:

name: web-pv0

labels:

type: web-pv0

spec:

capacity:

storage: 1Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

storageClassName: my-storage-class

nfs:

path: /nfs_dir/web-pv0

server: 10.0.1.201

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: web-pv1

labels:

type: web-pv1

spec:

capacity:

storage: 1Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

storageClassName: my-storage-class

nfs:

path: /nfs_dir/web-pv1

server: 10.0.1.201

2、创建pvc(这一步可以省去让其自动创建,这里手动创建是为了让大家能更清楚在sts里面pvc的创建过程)

-------------------------------------------

这一步非常非常的关键,因为如果创建的PVC的名称和StatefulSet中的名称没有对应上,

那么StatefulSet中的Pod就肯定创建不成功.

我们在这里创建了一个叫做www-web-0和www-web-1的PVC,这个名字是不是很奇怪,

而且在这个yaml里并没有提到PV的名字,所以PV和PVC是怎么bound起来的呢?

是通过labels标签下的key:value键值对来进行匹配的,

我们在创建PV时指定了label的键值对,在PVC里通过selector可以指定label。

然后再回到这个PVC的名称定义:www-web-0,为什么叫这样一个看似有规律的名字呢,

这里需要看看下面创建StatefulSet中的yaml,

首先我们看到StatefulSet的name叫web,设置的replicas为2个,

volumeMounts和volumeClaimTemplates的name必须相同,为www,

所以StatefulSet创建的第一个Pod的name应该为web-0,第二个为web-1。

这里StatefulSet中的Pod与PVC之间的绑定关系是通过名称来匹配的,即:

PVC_name = volumeClaimTemplates_name + "-" + pod_name

www-web-0 = www + "-" + web-0

www-web-1 = www + "-" + web-1

root@node1:~# cat web-pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: www-web-0

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

storageClassName: my-storage-class

selector:

matchLabels:

type: web-pv0

---

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: www-web-1

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

storageClassName: my-storage-class

selector:

matchLabels:

type: web-pv1

3、创建Service 和 StatefulSet

-------------------------------------------

在上一步中我们已经创建了名为www-web-0的PVC了,接下来创建一个service和statefulset,

service的名称可以随意取,但是statefulset的名称已经定死了,为web,

并且statefulset中的volumeClaimTemplates_name必须为www,volumeMounts_name也必须为www。

只有这样,statefulset中的pod才能通过命名来匹配到PVC,否则会创建失败。

root@node1:~# cat web.yaml

apiVersion: v1

kind: Service

metadata:

name: web-headless

labels:

app: nginx

spec:

ports:

- port: 80

name: web

clusterIP: None

selector:

app: nginx

---

apiVersion: v1

kind: Service

metadata:

name: web

labels:

app: nginx

spec:

ports:

- port: 80

name: web

selector:

app: nginx

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: web

spec:

selector:

matchLabels:

app: nginx # has to match .spec.template.metadata.labels

serviceName: "web-headless" #需要第4行的name一致

replicas: 2 # by default is 1

template:

metadata:

labels:

app: nginx # has to match .spec.selector.matchLabels

spec:

terminationGracePeriodSeconds: 10

containers:

- name: nginx

image: nginx

imagePullPolicy: IfNotPresent

ports:

- containerPort: 80

name: web

volumeMounts:

- name: www

mountPath: /usr/share/nginx/html

volumeClaimTemplates:

- metadata:

name: www

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: "my-storage-class"

resources:

requests:

storage: 1Gi

2.动态存储创建sts-web.yaml

cat sts-web.yaml

apiVersion: v1

kind: Service

metadata:

name: web-headless

labels:

app: nginx

spec:

ports:

- port: 80

name: web

clusterIP: None

selector:

app: nginx

---

apiVersion: v1

kind: Service

metadata:

name: web

labels: