将Hugging Face模型转换成LibTorch模型

Hugging Face的模型

以waifu-diffusion模型为例,给出的实现一般是基于diffuser库,示例代码如下:

import torch

from torch import autocast

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(

'hakurei/waifu-diffusion',

torch_dtype=torch.float32

).to('cuda')

prompt = "1girl, aqua eyes, baseball cap, blonde hair, closed mouth, earrings, green background, hat, hoop earrings, jewelry, looking at viewer, shirt, short hair, simple background, solo, upper body, yellow shirt"

with autocast("cuda"):

image = pipe(prompt, guidance_scale=6)["sample"][0]

image.save("test.png")

通过网络下载预训练模型,预训练模型直接加载,但其实这个模型是下载到了本地的,只不过看起来不是很轻松:

因为模型太大,分成了一些小的文件进行了下载,而且后面可以看出来模型实际上是由一些子模型组成的,所以这里面有几个比较大的文件应该是对应了unet、vae这种,看大小也差不多。

下载好了可以直接print(pipe),发现:

StableDiffusionPipeline {

"_class_name": "StableDiffusionPipeline",

"_diffusers_version": "0.11.0",

"feature_extractor": [

"transformers",

"CLIPImageProcessor"

],

"requires_safety_checker": true,

"safety_checker": [

"stable_diffusion",

"StableDiffusionSafetyChecker"

],

"scheduler": [

"diffusers",

"PNDMScheduler"

],

"text_encoder": [

"transformers",

"CLIPTextModel"

],

"tokenizer": [

"transformers",

"CLIPTokenizer"

],

"unet": [

"diffusers",

"UNet2DConditionModel"

],

"vae": [

"diffusers",

"AutoencoderKL"

]

}

果然是一系列的小模型以及一些不重要的参数,这个模型可以直接保存为.pth文件,同样也可以使用torch.load(pipe.pth)读入,但是在实例化模型的时候,会出现

Traceback (most recent call last):

File "/home/gaoyi/example-app/test.py", line 59, in <module>

traced_script_module = torch.jit.trace(model, example)

File "/home/gaoyi/anaconda3/lib/python3.9/site-packages/torch/jit/_trace.py", line 803, in trace

name = _qualified_name(func)

File "/home/gaoyi/anaconda3/lib/python3.9/site-packages/torch/_jit_internal.py", line 1125, in _qualified_name

raise RuntimeError("Could not get name of python class object")

RuntimeError: Could not get name of python class object

这是因为这个大家伙不能作为一个模型类加载,故也不能直接通过torch.jit.trace进行转化,我们换个方式,将子模型进行转化

模型转化

通过打印print(pipe.unet),可以看出这个unet是一个普通的网络,拥有一堆熟悉的网络层:

UNet2DConditionModel(

(conv_in): Conv2d(4, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(time_proj): Timesteps()

(time_embedding): TimestepEmbedding(

(linear_1): Linear(in_features=320, out_features=1280, bias=True)

(act): SiLU()

(linear_2): Linear(in_features=1280, out_features=1280, bias=True)

)

(down_blocks): ModuleList(

(0): CrossAttnDownBlock2D(

(attentions): ModuleList(

(0): Transformer2DModel(

(norm): GroupNorm(32, 320, eps=1e-06, affine=True)

(proj_in): Linear(in_features=320, out_features=320, bias=True)

(transformer_blocks): ModuleList(

(0): BasicTransformerBlock(

(attn1): CrossAttention(

(to_q): Linear(in_features=320, out_features=320, bias=False)

(to_k): Linear(in_features=320, out_features=320, bias=False)

(to_v): Linear(in_features=320, out_features=320, bias=False)

(to_out): ModuleList(

(0): Linear(in_features=320, out_features=320, bias=True)

(1): Dropout(p=0.0, inplace=False)

)

)

(ff): FeedForward(

(net): ModuleList(

(0): GEGLU(

(proj): Linear(in_features=320, out_features=2560, bias=True)

)

(1): Dropout(p=0.0, inplace=False)

(2): Linear(in_features=1280, out_features=320, bias=True)

)

)

(attn2): CrossAttention(

(to_q): Linear(in_features=320, out_features=320, bias=False)

(to_k): Linear(in_features=1024, out_features=320, bias=False)

(to_v): Linear(in_features=1024, out_features=320, bias=False)

(to_out): ModuleList(

(0): Linear(in_features=320, out_features=320, bias=True)

(1): Dropout(p=0.0, inplace=False)

)

)

(norm1): LayerNorm((320,), eps=1e-05, elementwise_affine=True)

(norm2): LayerNorm((320,), eps=1e-05, elementwise_affine=True)

(norm3): LayerNorm((320,), eps=1e-05, elementwise_affine=True)

)

)

(proj_out): Linear(in_features=320, out_features=320, bias=True)

)

(1): Transformer2DModel(

...

...略

...

(conv_norm_out): GroupNorm(32, 320, eps=1e-05, affine=True)

(conv_act): SiLU()

(conv_out): Conv2d(320, 4, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

好,那我们就可以将这个子模型进行转化,变成需要的LibTorch模型,但是我们不知道这个模型需要的输入,通过打印的信息我们知道了这个模型的名字是UNet2DConditionModel,所以我们可以从Hugging Face的官方文档进行查询:UNet2DConditionModel

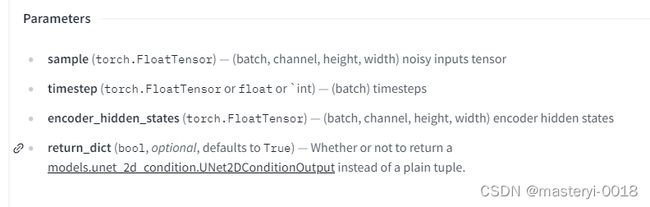

查询发现模型的输入为:

但是具体的数值依旧不知道,这时候可以通过print(model.config)进行查看:

FrozenDict([('sample_size', 64), ('in_channels', 4), ('out_channels', 4), ('center_input_sample', False),

('flip_sin_to_cos', True), ('freq_shift', 0), ('down_block_types', ['CrossAttnDownBlock2D',

'CrossAttnDownBlock2D', 'CrossAttnDownBlock2D', 'DownBlock2D']), ('mid_block_type',

'UNetMidBlock2DCrossAttn'), ('up_block_types', ['UpBlock2D', 'CrossAttnUpBlock2D',

'CrossAttnUpBlock2D', 'CrossAttnUpBlock2D']), ('only_cross_attention', False),

('block_out_channels', [320, 640, 1280, 1280]), ('layers_per_block', 2), ('downsample_padding', 1),

('mid_block_scale_factor', 1), ('act_fn', 'silu'), ('norm_num_groups', 32), ('norm_eps', 1e-05),

('cross_attention_dim', 1024), ('attention_head_dim', [5, 10, 20, 20]), ('dual_cross_attention', False),

('use_linear_projection', True), ('class_embed_type', None), ('num_class_embeds', None),

('upcast_attention', False), ('resnet_time_scale_shift', 'default'), ('_class_name', 'UNet2DConditionModel'),

('_diffusers_version', '0.10.2'), ('_name_or_path',

'/home/gaoyi/.cache/huggingface/diffusers/models--hakurei--waifu-diffusion/snapshots/55fd50bfae0dd8bcc4bd3a6f25cb167580b972a0/unet')])

一个大字典,找到我们所需要的('sample_size', 64), ('in_channels', 4), ('out_channels', 4),作为用于实例化的输入,此时我们的.py文件如下:

model = torch.load("pipe-unet.pth")

# print(model.config)

# print(model)

example = torch.rand(1, 4, 64, 64)

timestep = torch.rand(1)

encoder_hidden_states = torch.rand(1, 4, 64, 64)

traced_script_module = torch.jit.trace(model, (example, timestep, encoder_hidden_states))

traced_script_module.save("pipe-unet.pt")

但是报错:mat1 can not be multiplied with mat2, shape 256x64 and 1024x320,大概是这么个问题,具体的信息就不粘贴了,既然是矩阵形状不对,那就改形状,之前理解的encoder_hidden_states形状与example应该是一样的,但看起来不对,可是改了1024x1024之后又遇到了新的问题,计算注意力的时候数据太多,接受的参数只有三个,所以干脆将encoder_hidden_states = torch.rand(1, 4, 1024),实测通过

之后的新问题,好像是实例化的时候输入元组的问题,具体如下:

RuntimeError: Encountering a dict at the output of the tracer might cause the trace to be incorrect,

this is only valid if the container structure does not change based on the module's inputs.

Consider using a constant container instead (e.g. for `list`, use a `tuple` instead. for `dict`,

use a `NamedTuple` instead). If you absolutely need this and know the side effects,

pass strict=False to trace() to allow this behavior.

应该是需要在转换的时候传一个参数strict=False,调整完之后的代码如下:

model = torch.load("pipe-unet.pth")

# print(model.config)

# print(model)

example = torch.rand(1, 4, 64, 64)

timestep = torch.rand(1)

encoder_hidden_states = torch.rand(1, 4, 1024)

traced_script_module = torch.jit.trace(model, (example, timestep, encoder_hidden_states), strict=False)

traced_script_module.save("pipe-unet.pt")

成功保存!

模型测试

根据PyTorch官网的测试教程,编写相应的C++文件,然后使用CMake进行编译,最终生成example-app的可执行文件,运行:

./example-app ../pipe-unet.pt

输出ok,成功转化!