[Flink Study Notes] [3] Cluster Mode: Environment Deployment

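Contents

- I. Environment setup
- II. Installing Flink
- III. Submitting a job to the cluster
  - Troubleshooting: finishConnect(..) failed: No route to host
- IV. Submitting a job from the terminal
- V. Deployment modes
  - 5.1 Standalone mode
  - 5.2 YARN mode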

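I. Environment setup

- CentOS 7.5

- Java 8

- Hadoop

- SSH access between the nodes (start-cluster.sh logs in to each worker over SSH); firewall disabled

- Three hosts: node00, node01, node02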

II. Installing Flink

Download Flink 1.13.6 (Scala 2.12 build):

https://www.apache.org/dyn/closer.lua/flink/flink-1.13.6/flink-1.13.6-bin-scala_2.12.tgz

1. Extract the archive

[root@node00 servers]# tar -zxvf flink-1.13.6-bin-scala_2.12.tgz -C /export/servers/

2. Edit conf/flink-conf.yaml

3. Set the hosts in the conf/masters and conf/workers files

masters: node00

workers: node01 and node02, one host per line

4. Other parameters

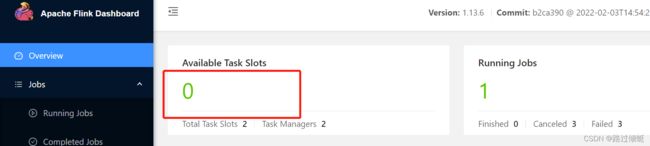

Task slots per TaskManager: taskmanager.numberOfTaskSlots: 1

Default parallelism: parallelism.default: 1
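The configuration steps above can be sketched as a small script. This is only an illustration under stated assumptions: CONF_DIR defaults to a scratch directory so nothing real is overwritten, the helper set_conf is hypothetical, and setting jobmanager.rpc.address to node00 is an assumption about what the flink-conf.yaml edit in step 2 contains (the notes do not say).

```shell
#!/usr/bin/env bash
# Sketch: write the cluster host files and adjust flink-conf.yaml.
# CONF_DIR defaults to a scratch directory so the script is safe to try;
# point it at /export/servers/flink-1.13.6/conf on a real installation.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# masters: the JobManager host. workers: one TaskManager host per line.
printf '%s\n' node00 > "$CONF_DIR/masters"
printf '%s\n' node01 node02 > "$CONF_DIR/workers"

# set_conf KEY VALUE (hypothetical helper):
# replace an existing "KEY: ..." line or append a new one.
set_conf() {
  local key="$1" value="$2" file="$CONF_DIR/flink-conf.yaml"
  touch "$file"
  if grep -q "^${key}:" "$file"; then
    sed -i "s|^${key}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

set_conf jobmanager.rpc.address node00   # assumption: the JobManager runs on node00
set_conf taskmanager.numberOfTaskSlots 1
set_conf parallelism.default 1

cat "$CONF_DIR/flink-conf.yaml"
```

With one slot on each of the two TaskManagers, the cluster offers two slots in total, so a job can run with a parallelism of at most 2.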

5. Distribute the Flink directory to the workers

scp -r flink-1.13.6/ node01:$PWD

scp -r flink-1.13.6/ node02:$PWD
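With more than two workers, the same copy can be driven from the workers file instead of typing one scp per host. A minimal sketch, assuming it is run from /export/servers; the function name distribute and the DRY_RUN switch are hypothetical, and DRY_RUN defaults to 1 so the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: copy the Flink directory to every host listed in a workers file.
# DRY_RUN=1 (the default here) only prints the scp commands.
DRY_RUN="${DRY_RUN:-1}"
FLINK_DIR="${FLINK_DIR:-flink-1.13.6}"

# distribute WORKERS_FILE (hypothetical helper)
distribute() {
  local workers_file="$1" host
  # "|| [ -n "$host" ]" also handles a last line without a trailing newline
  while read -r host || [ -n "$host" ]; do
    [ -z "$host" ] && continue          # skip blank lines
    if [ "$DRY_RUN" = 1 ]; then
      echo "scp -r $FLINK_DIR/ $host:$PWD"
    else
      scp -r "$FLINK_DIR/" "$host:$PWD" < /dev/null
    fi
  done < "$workers_file"
}

# Usage: distribute "$FLINK_DIR/conf/workers"
```

Set DRY_RUN=0 once the printed commands look right.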

6. Start the cluster

[root@node00 servers]# cd flink-1.13.6

[root@node00 flink-1.13.6]# bin/start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host node00.

Starting taskexecutor daemon on host node01.

Starting taskexecutor daemon on host node02.

7. Check the Flink processes

[root@node00 flink-1.13.6]# jps

1861 QuorumPeerMain

2901 NodeManager

2119 NameNode

3384 JobHistoryServer

17545 StandaloneSessionClusterEntrypoint

2250 DataNode

2443 SecondaryNameNode

17614 Jps

2767 ResourceManager
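The jps listing above only covers node00, where the JobManager shows up as StandaloneSessionClusterEntrypoint. On the workers the TaskManager process is expected to appear as TaskManagerRunner. The check can be scripted roughly as follows; has_process and check_node are hypothetical helpers, and the sketch assumes jps is on the PATH of non-interactive SSH sessions.

```shell
#!/usr/bin/env bash
# has_process NAME: succeed if the jps listing on stdin contains
# a JVM whose main class (second column) is NAME.
has_process() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# check_node HOST NAME: run jps on HOST over SSH and report the result.
check_node() {
  if ssh "$1" jps | has_process "$2"; then
    echo "$1: $2 is running"
  else
    echo "$1: $2 is NOT running"
  fi
}

# Usage on the real cluster:
#   check_node node00 StandaloneSessionClusterEntrypoint
#   check_node node01 TaskManagerRunner
#   check_node node02 TaskManagerRunner
```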

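III. Submitting a job to the cluster

Upload the jar on the Submit New Job page of the Flink web UI (port 8081 on node00):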

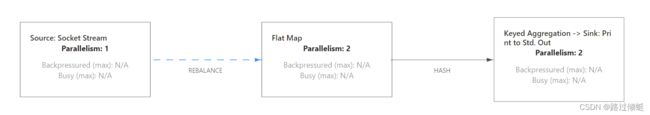

Click Show Plan to preview the job graph:

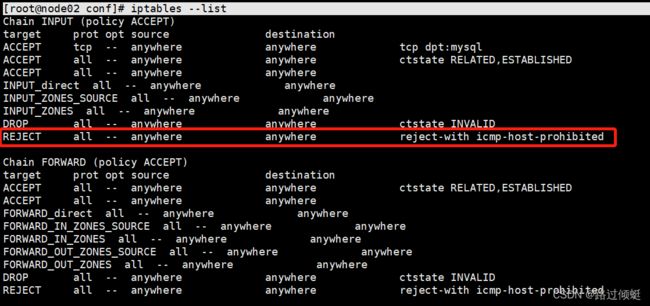

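Troubleshooting: finishConnect(..) failed: No route to host

Symptom: with parallelism 1 the job runs normally on node01; with parallelism 2 it fails with an error saying node02 cannot be reached.

Inspect the iptables rules, then flush them: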

iptables --list

iptables -F

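Alternatively, keep the firewall on and open the required port:

# Add a rule (-I inserts at the top of the chain, -A appends to the end; rules are evaluated from top to bottom)

[root@data ~]# iptables -I INPUT -p tcp --dport 8081 -j ACCEPT

# Save the rules to the default file /etc/sysconfig/iptables

[root@data ~]# service iptables save

# Restart the service

[root@data ~]# service iptables restart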
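To confirm that the firewall change took effect, connectivity can be probed from each node. A minimal sketch using bash's built-in /dev/tcp redirection; port_open and probe are hypothetical helper names. Port 8081 is the web UI port used in these notes and 6123 is Flink's default JobManager RPC port. The TaskManagers also exchange data with each other on ports that are picked at random unless they are fixed in flink-conf.yaml, which may be why opening 8081 alone is not always enough.

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection can be opened within 3 seconds.
# Uses bash's /dev/tcp redirection, so no extra tools are required.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# probe HOST PORT...: print one status line per port.
probe() {
  local host="$1" port
  shift
  for port in "$@"; do
    if port_open "$host" "$port"; then
      echo "$host:$port reachable"
    else
      echo "$host:$port NOT reachable"
    fi
  done
}

# Example, run from node01 or node02:
#   probe node00 8081 6123
```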

Caused by: java.util.concurrent.CompletionException:

org.apache.flink.runtime.jobmanager.scheduler.NoResourceAvailableException:

Could not acquire the minimum required resources.

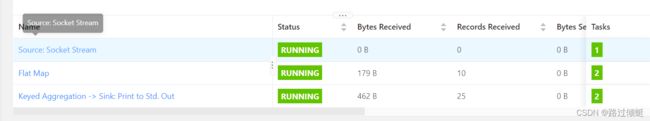

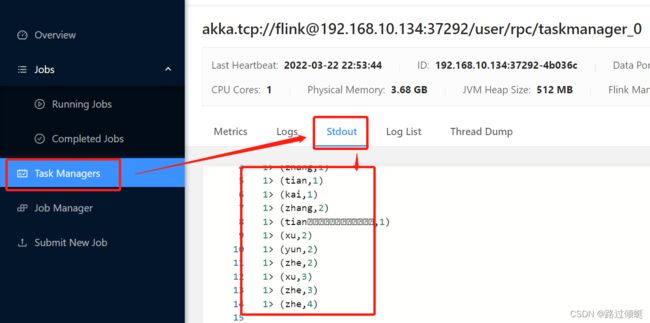

IV. Submitting a job from the terminal

Submit the job:

bin/flink run -m node00:8081 -c com.shinho.wc.NoBoundryWordCount -p 2 ./original-FlinkStudy-1.0-SNAPSHOT.jar

Cancel the job:

[root@node02 flink-1.13.6]# ./bin/flink cancel ec1e5c6f1a92782cfc4d37893cc57978

Cancelling job ec1e5c6f1a92782cfc4d37893cc57978.

Cancelled job ec1e5c6f1a92782cfc4d37893cc57978.
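The job ID passed to cancel does not have to be copied from the web UI: flink list prints it. The sketch below pulls the 32-character hexadecimal IDs out of that output and cancels every running job. The helpers job_ids and cancel_all are hypothetical, and matching IDs by pattern is an assumption chosen so the script does not depend on the exact column layout of the listing.

```shell
#!/usr/bin/env bash
# job_ids: extract 32-character hexadecimal job IDs from `flink list` output on stdin.
job_ids() {
  grep -oE '[0-9a-f]{32}'
}

# cancel_all [FLINK_BIN]: cancel every job currently reported as running.
# FLINK_BIN defaults to bin/flink, i.e. run it from the Flink directory.
cancel_all() {
  local flink="${1:-bin/flink}" id
  "$flink" list -r | job_ids | while read -r id; do
    "$flink" cancel "$id" < /dev/null
  done
}

# Usage:
#   bin/flink list -r     # show running jobs and their IDs
#   cancel_all            # cancel all of them
```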

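V. Deployment modes

- Session mode

The cluster is started first and jobs are submitted to it afterwards. Resources are fixed and the cluster does not change to fit a job; if resources run out, the job fails. Suited to small, short-running jobs.

- Per-job mode

Resources are isolated per job: a Flink cluster is created after the job is submitted and shut down when the job ends. Per-job mode is the preferred choice, but it always requires an external resource manager (YARN, Kubernetes).

- Application mode

Similar to per-job mode, but the cluster is tied one-to-one to an application jar.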
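As a rough illustration of how the three modes differ on the command line, the sketch below prints the Flink 1.13 submit command for each mode on YARN. The function submit_cmd is a hypothetical helper that only prints the command; session mode additionally assumes a YARN session has already been started with yarn-session.sh.

```shell
#!/usr/bin/env bash
# submit_cmd MODE JAR MAIN_CLASS: print the submit command for a deployment mode on YARN.
submit_cmd() {
  local mode="$1" jar="$2" main="$3"
  case "$mode" in
    # session: the job goes to an already running cluster
    session)     echo "bin/flink run -c $main $jar" ;;
    # per-job: a dedicated cluster is created for this one job
    per-job)     echo "bin/flink run -t yarn-per-job -c $main $jar" ;;
    # application: a dedicated cluster is created for this application jar
    application) echo "bin/flink run-application -t yarn-application -c $main $jar" ;;
    *)           echo "unknown mode: $mode" >&2; return 1 ;;
  esac
}

submit_cmd per-job ./original-FlinkStudy-1.0-SNAPSHOT.jar com.shinho.wc.NoBoundryWordCount
```

Printing rather than executing keeps the sketch safe to run on a machine without a cluster; paste the printed line into a shell in the Flink directory to submit for real.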

5.1 Standalone mode

Per-job mode is not supported. All required Flink components run as plain JVM processes on the operating system.

5.2 YARN mode

Required configuration:

Hadoop environment variables
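The notes do not list the variables, so the following is only a sketch of a typical setup, for example in /etc/profile. The install path /export/servers/hadoop is an assumption modelled on the Flink path used earlier; adjust it to the real Hadoop location.

```shell
#!/usr/bin/env bash
# Assumption: Hadoop is installed under /export/servers/hadoop.
export HADOOP_HOME="${HADOOP_HOME:-/export/servers/hadoop}"
export HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-$HADOOP_HOME/etc/hadoop}"
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"

# Flink reads the Hadoop jars from HADOOP_CLASSPATH.
if command -v hadoop >/dev/null 2>&1; then
  export HADOOP_CLASSPATH="$(hadoop classpath)"
else
  echo "hadoop not found on PATH; HADOOP_CLASSPATH left unset" >&2
fi
```

After sourcing the file, running hadoop classpath should print a list of jar directories; if it does not, Flink's YARN client will most likely fail to find the Hadoop classes.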

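Flow: the client submits the Flink application to YARN's ResourceManager, which requests containers from the NodeManagers.

Static allocation wastes resources; YARN allocates them dynamically.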

YARN session mode

Place these two jar dependencies in flink/lib:

①https://mvnrepository.com/artifact/org.apache.flink/flink-shaded-hadoop-3-uber/3.1.1.7.2.9.0-173-9.0

②https://mvnrepository.com/artifact/commons-cli/commons-cli

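Start the YARN session. The -n option (a fixed number of TaskManagers) found in older guides is left out here: newer Flink releases ignore or reject it, because YARN allocates TaskManagers on demand.

bin/yarn-session.sh -jm 1024m -tm 4096m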

Submit Flink jobs to the session (one with the default parallelism of 1, one with parallelism 2):

./bin/flink run -c com.shinho.wc.NoBoundryWordCount ./original-FlinkStudy-1.0-SNAPSHOT.jar

./bin/flink run -c com.shinho.wc.NoBoundryWordCount -p 2 ./original-FlinkStudy-1.0-SNAPSHOT.jar