Implementing tumbling and sliding windows with Flink SQL

Implementing a tumbling window

package cn._51doit.flink.day11;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

import static org.apache.flink.table.api.Expressions.$;

/**

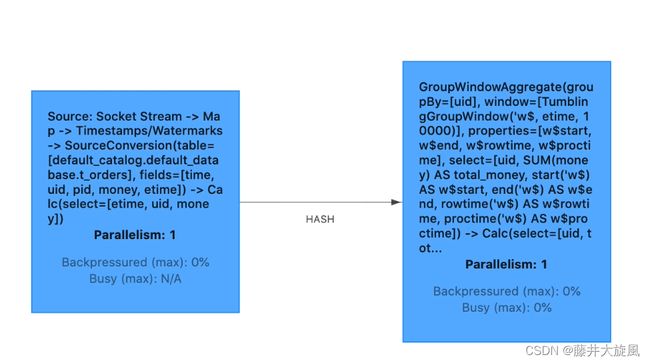

* 使用SQL的方式,实现滚动窗口聚合

*/

public class SqlTumblingEventTimeWindow {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Set the parallelism to 1 so that windows are easy to trigger while testing

env.setParallelism(1);

// Enable checkpointing if fault tolerance is needed

env.enableCheckpointing(10000);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

//1000,u1,p1,5

//2000,u1,p1,5

//2000,u2,p1,3

//2000,u2,p2,8

//9999,u1,p1,5

//18888,u2,p1,3

//19999,u2,p1,3

DataStreamSource<String> socketTextStream = env.socketTextStream("localhost", 8888);

SingleOutputStreamOperator<Row> rowDataStream = socketTextStream.map(

new MapFunction<String, Row>() {

@Override

public Row map(String line) throws Exception {

String[] fields = line.split(",");

Long time = Long.parseLong(fields[0]);

String uid = fields[1];

String pid = fields[2];

Double money = Double.parseDouble(fields[3]);

return Row.of(time, uid, pid, money);

}

}).returns(Types.ROW(Types.LONG, Types.STRING, Types.STRING, Types.DOUBLE));

// Extract the event time from each record and generate watermarks

DataStream<Row> waterMarksRow = rowDataStream.assignTimestampsAndWatermarks(

new BoundedOutOfOrdernessTimestampExtractor<Row>(Time.seconds(0)) {

@Override

public long extractTimestamp(Row row) {

return (long) row.getField(0);

}

});

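// Register the DataStream as a view and declare its schema

// $("etime").rowtime() exposes the stream's event time as a column named etime

// The name of this last column is arbitrary: rowtime() takes the record's event time, while proctime() would give the processing time instead

// The first column, time, holds the same event time as a plain number and could be left out

tableEnv.createTemporaryView("t_orders", waterMarksRow, $("time"), $("uid"), $("pid"), $("money"), $("etime").rowtime());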

// Sum money per user id within each tumbling window (this variant does not select the window start and end times)

//String sql = "SELECT uid, SUM(money) total_money FROM t_orders GROUP BY TUMBLE(etime, INTERVAL '10' SECOND), uid";

// Tumbling-window aggregation on event time, implemented in SQL

// Grouping by both the window and uid means money is summed only across rows of the same user in the same window

String sql = "SELECT uid, SUM(money) total_money, TUMBLE_START(etime, INTERVAL '10' SECOND) as win_start, " +

"TUMBLE_END(etime, INTERVAL '10' SECOND) as win_end " +

"FROM t_orders GROUP BY TUMBLE(etime, INTERVAL '10' SECOND), uid";

//waterMarksRow.keyBy(t -> t.getField(1)).window(TumblingEventTimeWindows.of(Time.seconds(10))).sum(3)

Table table = tableEnv.sqlQuery(sql);

// Use the table environment to convert the table into an append stream

DataStream<Row> result = tableEnv.toAppendStream(table, Row.class);

result.print();

env.execute();

}

}

//in:

//1000,u1,p1,5

//2000,u1,p1,5

//2000,u2,p1,3

//2000,u2,p2,8

//9999,u1,p1,5

//out

//+I[u1,15.0,1970-01-01T00:00,1970-01-01T00:00:10]

//+I[u2,11.0,1970-01-01T00:00,1970-01-01T00:00:10]

//in

//18888,u2,p1,3

//19999,u2,p1,3

//out

//+I[u2,6.0,1970-01-01T00:00:10,1970-01-01T00:00:20]
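The results above can be checked by hand. The following is a minimal plain-Java sketch, not Flink code: the class `TumblingWindowSketch` and its methods are hypothetical names introduced only for illustration. It assumes windows are aligned to epoch 0 with no offset, so the window start of a timestamp is the timestamp rounded down to a multiple of the window size, and it sums `money` per (uid, window start) the way `GROUP BY TUMBLE(...), uid` groups rows.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of Flink: mimics tumbling-window grouping on the sample input.
class TumblingWindowSketch {

    // Start of the tumbling window [start, start + size) that contains the timestamp
    // (milliseconds, windows aligned to 0, no offset: an assumption of this sketch).
    static long windowStart(long timestamp, long size) {
        return timestamp - Math.floorMod(timestamp, size);
    }

    // Sums the money field per "uid@windowStart", similar to GROUP BY TUMBLE(...), uid.
    static Map<String, Double> aggregate(String[] lines, long size) {
        Map<String, Double> totals = new LinkedHashMap<>();
        for (String line : lines) {
            String[] fields = line.split(",");
            long start = windowStart(Long.parseLong(fields[0]), size);
            String key = fields[1] + "@" + start;
            totals.merge(key, Double.parseDouble(fields[3]), Double::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        String[] lines = {
            "1000,u1,p1,5", "2000,u1,p1,5", "2000,u2,p1,3", "2000,u2,p2,8",
            "9999,u1,p1,5", "18888,u2,p1,3", "19999,u2,p1,3"
        };
        // 10-second windows, expressed in milliseconds
        aggregate(lines, 10_000L).forEach((key, total) -> System.out.println(key + " -> " + total));
    }
}
```

For the sample input this gives 15.0 for u1 and 11.0 for u2 in the window starting at 0, and 6.0 for u2 in the window starting at 10000, which agrees with the job output listed above.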

Implementing a sliding window

package cn._51doit.flink.day11;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

import java.time.Duration;

import static org.apache.flink.table.api.Expressions.$;

public class SqlSlidingEventTimeWindows {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

//1000,u1,p1,5

//2000,u1,p1,5

//2000,u2,p1,3

//3000,u1,p1,5

//4000,u1,p1,5

//9999,u2,p1,3

DataStreamSource<String> lines = env.socketTextStream("localhost", 8888);

DataStream<Row> rowDataStream = lines.map(new MapFunction<String, Row>() {

@Override

public Row map(String line) throws Exception {

String[] fields = line.split(",");

Long time = Long.parseLong(fields[0]);

String uid = fields[1];

String pid = fields[2];

Double money = Double.parseDouble(fields[3]);

return Row.of(time, uid, pid, money);

}

}).returns(Types.ROW(Types.LONG, Types.STRING, Types.STRING, Types.DOUBLE));

// Extract the event time from each record and generate watermarks

DataStream<Row> waterMarksRow = rowDataStream.assignTimestampsAndWatermarks(WatermarkStrategy.<Row>forBoundedOutOfOrderness(Duration.ofMillis(0)).withTimestampAssigner((r, t) -> (long) r.getField(0)));

// Register the DataStream as a view and declare its schema

//tableEnv.registerDataStream("t_orders", waterMarksRow, "time, uid, pid, money, rowtime.rowtime");

tableEnv.createTemporaryView("t_orders", waterMarksRow, $("time"), $("uid"), $("pid"), $("money"), $("rowtime").rowtime());

// Sliding-window aggregation on event time, implemented in SQL: the window is 10s long and slides every 2s

String sql = "SELECT uid, SUM(money) total_money, HOP_START(rowtime, INTERVAL '2' SECOND, INTERVAL '10' SECOND) as winStart, HOP_END(rowtime, INTERVAL '2' SECOND, INTERVAL '10' SECOND) as winEnd" +

" FROM t_orders GROUP BY HOP(rowtime, INTERVAL '2' SECOND, INTERVAL '10' SECOND), uid";

Table table = tableEnv.sqlQuery(sql);

// Use the table environment to convert the table into an append stream

DataStream<Row> result = tableEnv.toAppendStream(table, Row.class);

result.print();

env.execute();

}

}
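The job above generates watermarks with `WatermarkStrategy.forBoundedOutOfOrderness`. To the best of my understanding, that strategy emits a watermark equal to the largest timestamp seen so far, minus the allowed out-of-orderness, minus 1 ms, and an event-time window [start, end) fires once the watermark reaches end - 1. The plain-Java sketch below only illustrates that arithmetic; `WatermarkFiringSketch` and its methods are hypothetical names, not Flink API.

```java
// Hypothetical helper, not part of Flink: illustrates when event-time windows fire.
class WatermarkFiringSketch {

    // Watermark after seeing maxTimestamp, assuming the "max - outOfOrderness - 1" rule.
    static long watermark(long maxTimestamp, long outOfOrdernessMillis) {
        return maxTimestamp - outOfOrdernessMillis - 1;
    }

    // A window [start, end) fires once the watermark has reached its last timestamp, end - 1.
    static boolean fires(long windowEnd, long watermark) {
        return watermark >= windowEnd - 1;
    }

    public static void main(String[] args) {
        // Largest timestamp in the sample input is 9999, with zero allowed out-of-orderness
        long wm = watermark(9_999L, 0L);
        for (long end = 2_000L; end <= 10_000L; end += 2_000L) {
            System.out.println("window ending at " + end + " fires: " + fires(end, wm));
        }
    }
}
```

Under these assumptions a record with timestamp 9999 moves the watermark to 9998, so the windows ending at 2000, 4000, 6000 and 8000 fire, while the one ending at 10000 still waits for a later record.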

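//out (these results appear to come from the tumbling-window sample input, 1000,u1,p1,5 / 2000,u1,p1,5 / 2000,u2,p1,3 / 2000,u2,p2,8 / 9999,u1,p1,5, rather than from the sample lines in the code comments above)

//+I[u1,5.0,1969-12-31T23:59:52,1970-01-01T00:00:02]

//+I[u1,10.0,1969-12-31T23:59:54,1970-01-01T00:00:04]

//+I[u2,11.0,1969-12-31T23:59:54,1970-01-01T00:00:04]

//+I[u2,11.0,1969-12-31T23:59:56,1970-01-01T00:00:06]

//+I[u1,10.0,1969-12-31T23:59:56,1970-01-01T00:00:06]

//+I[u2,11.0,1969-12-31T23:59:58,1970-01-01T00:00:08]

//+I[u1,10.0,1969-12-31T23:59:58,1970-01-01T00:00:08]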
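The negative window starts in the output (for example 1969-12-31T23:59:52) follow from how a sliding window assigns one record to several overlapping windows. Here is a minimal plain-Java sketch of that assignment, assuming windows aligned to epoch 0 with no offset; `SlidingWindowSketch` and `windowStarts` are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Flink: lists the sliding windows a timestamp falls into.
class SlidingWindowSketch {

    // Start timestamps of every window [start, start + size) that contains the timestamp,
    // latest window first. Windows begin at multiples of the slide (an assumption of this sketch).
    static List<Long> windowStarts(long timestamp, long size, long slide) {
        List<Long> starts = new ArrayList<>();
        long lastStart = timestamp - Math.floorMod(timestamp, slide);
        for (long start = lastStart; start > timestamp - size; start -= slide) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        // 10-second windows sliding every 2 seconds, record at timestamp 1000
        System.out.println(windowStarts(1_000L, 10_000L, 2_000L));
        // prints [0, -2000, -4000, -6000, -8000]
    }
}
```

A record at timestamp 1000 therefore belongs to five windows (size divided by slide), the earliest being [-8000, 2000), which is the window shown in the first output line. A record at timestamp 2000 is no longer in that window, so its earliest window is [-6000, 4000).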