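Integrating Spring Boot and MyBatis with Spark SQL and SequoiaDB (SDB)

Integrating Spring Boot and MyBatis with Spark SQL

This walkthrough assumes an environment in which Spark, Hive, and SequoiaDB are already deployed. It integrates the Spring Boot and MyBatis frameworks with Spark SQL, uses Hive SQL to provide authentication and access control for Spark, and finally queries data stored in SequoiaDB from Spring Boot and MyBatis by way of Spark. The environment is as follows:

JDK 1.8, Spark 2.1.1, Hadoop 2.9.2, Hive 1.2.2

The SequoiaDB cluster used here has a three-shard, single-replica topology, consisting of:

- 1 SequoiaSQL-MySQL database instance node

- 1 engine coordinator node

- 1 catalog node

- 3 data nodes

The walkthrough consists of the following steps:

- Set up the Hive metastore database in MySQL

- Configure Hive: set the JDBC connection to MySQL in hive-site.xml and enable authentication

- Initialize the metastore database

- Configure Spark to connect to the metastore and enable authentication

- Add test data

- Configure the IDEA project

- Call the methods to test

Setting up the Hive metastore database in MySQL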

Switch to the sdbadmin user and open the MySQL shell:

su sdbadmin

/opt/sequoiasql/mysql/bin/mysql -h 127.0.0.1 -P 3306 -u root

Create the metauser user, which Hive and Spark will later use to connect to the metastore database:

CREATE USER 'metauser'@'%' IDENTIFIED BY 'metauser';

Grant privileges to metauser:

GRANT ALL ON *.* TO 'metauser'@'%';

Create the metastore database:

CREATE DATABASE metastore CHARACTER SET 'latin1' COLLATE 'latin1_bin';

Flush privileges:

FLUSH PRIVILEGES;

Exit the MySQL shell:

quit;

Hive configuration

Run the following in a terminal to write hive-site.xml, which holds the connection settings for the Hive metastore database, into ${HIVE_HOME}/conf:

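cat > /opt/apache-hive-1.2.2-bin/conf/hive-site.xml << EOF
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost/metastore?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>metauser</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>metauser</value>
  </property>
  <property>
    <name>hive.test.authz.sstd.hs2.mode</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.enable.doAs</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.users.in.admin.role</name>
    <value>root</value>
  </property>
  <property>
    <name>hive.server2.thrift.port</name>
    <value>9073</value>
  </property>
  <property>
    <name>hive.server2.authentication</name>
    <value>CUSTOM</value>
  </property>
  <property>
    <name>hive.server2.custom.authentication.class</name>
    <value>com.sequoiadb.spark.sql.hive.SequoiadbAuth</value>
  </property>
  <property>
    <name>hive.security.authorization.manager</name>
    <value>org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory</value>
  </property>
</configuration>
EOF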

拷贝依赖jar到${HIVE_HOME}/auxlib(第三方jar包目录)目录下,如果auxlib目录不存在,则自行创建:

cp spark-authorizer-2.1.1.jar /opt/apache-hive-1.2.2-bin/auxlib

cp mysql-connector-java-5.1.7-bin.jar /opt/apache-hive-1.2.2-bin/auxlib

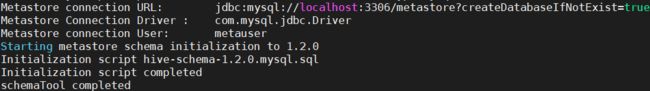

元数据库metastore初始化配置

修改${HIVE_HOME}/conf/hive-env.sh,添加以下代码(本次实验hadoop安装在/opt下,可根据用户情况自行修改),本次实验hive-env位置为:/opt/apache-hive-1.2.2-bin/conf/hive-env.sh

export HADOOP_HOME=/opt/hadoop-2.9.2

使用hive1.2.2软件上提供的schematool工具初始化metastore,${HIVE_HOME}/bin/schematool -dbType mysql -initSchema

apache-hive-1.2.2-bin/bin/schematool -dbType mysql -initSchema

使用sdbadmin用户进入mysql-shell,在metastore database中,创建一个USER表,表名称为DBUSER:

su sdbadmin

/opt/sequoiasql/mysql/bin/mysql -h 127.0.0.1 -P 3306 -u root

use metastore;

create table DBUSER (dbuser varchar(100), passwd char(50), primary key (dbuser));

insert into DBUSER(dbuser, passwd) values ('root', md5('admin'));

This pre-creates a user named root with the password 'admin' for the Thrift server. To add more users later, use similar INSERT statements.
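The passwd column holds the hex digest produced by MySQL's md5() function. As a minimal sketch, assuming you want to compute the same digest on the Java side (for example, to prepare INSERT statements for new users), the standard MessageDigest API produces the same 32-character lowercase hex string. The class and method names below are illustrative and are not part of the original project.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper: computes the same hex digest as MySQL's md5(). */
final class Md5PasswordSketch {

    private Md5PasswordSketch() {}

    /** Returns the lowercase hex MD5 digest of the UTF-8 bytes of the input. */
    static String md5Hex(String plain) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] digest = md.digest(plain.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                // mask to an unsigned value so negative bytes format as two hex digits
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // MD5 is one of the algorithms every Java platform must provide
            throw new IllegalStateException(e);
        }
    }
}
```

For instance, Md5PasswordSketch.md5Hex("admin") yields the value that md5('admin') stores in the DBUSER table.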

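Create a trigger on the DBS table in the metastore database. Its purpose is to let users run CREATE TABLE AS across databases: whenever a user runs CREATE DATABASE in Spark SQL, the trigger sets the OWNER_NAME and OWNER_TYPE columns of the new DBS row: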

delimiter ||

create trigger dbs_trigger

before insert on DBS

for each row

begin

set new.OWNER_NAME="public";

set new.OWNER_TYPE="ROLE";

end ||

delimiter ;

Spark configuration:

To add authentication and access control to Spark SQL, copy the dependency JARs into ${SPARK_HOME}/jars (Spark is installed under /opt/spark/ in this setup):

cp spark-authorizer-2.1.1.jar /opt/spark/jars

cp mysql-connector-java-5.1.7-bin.jar /opt/spark/jars

Run the following in a terminal to write hive-site.xml, which holds the connection settings for the Hive metastore database, into ${SPARK_HOME}/conf:

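cat > /opt/spark/conf/hive-site.xml << EOF
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost/metastore?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>metauser</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>metauser</value>
  </property>
  <property>
    <name>hive.security.authorization.createtable.owner.grants</name>
    <value>INSERT,SELECT</value>
  </property>
  <property>
    <name>hive.security.authorization.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.security.authorization.manager</name>
    <value>org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory</value>
  </property>
  <property>
    <name>hive.test.authz.sstd.hs2.mode</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.authentication</name>
    <value>CUSTOM</value>
  </property>
  <property>
    <name>hive.server2.custom.authentication.class</name>
    <value>com.sequoiadb.spark.sql.hive.SequoiadbAuth</value>
  </property>
</configuration>
EOF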

Add the following line to ${SPARK_HOME}/conf/spark-defaults.conf:

spark.sql.extensions=org.apache.ranger.authorization.spark.authorizer.SequoiadbSparkSQLExtension

Start Spark:

/opt/spark/sbin/start-all.sh

Start the Thrift server:

/opt/spark/sbin/start-thriftserver.sh

Check that the port is listening:

netstat -anp|grep 10000

From ${SPARK_HOME}, use the beeline client bundled with Spark to connect to the Thrift server (default port 10000):

./bin/beeline -u jdbc:hive2://localhost:10000 -n root -p admin
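The same connection that beeline opens can be made from plain Java over JDBC, which is also what the Spring Boot data source does later. The following is a minimal sketch, assuming the hive-jdbc driver is on the classpath and the Thrift server is reachable; the class and method names are hypothetical, and the query helper simply joins each row's columns with tabs.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for talking to the Spark Thrift server over JDBC. */
final class ThriftJdbcSketch {

    private ThriftJdbcSketch() {}

    /** Builds a HiveServer2-style JDBC URL such as jdbc:hive2://localhost:10000/default. */
    static String jdbcUrl(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    /**
     * Runs a query and returns each row as one tab-separated line.
     * Needs the hive-jdbc driver on the classpath and a running Thrift server.
     */
    static List<String> query(String url, String user, String password, String sql)
            throws SQLException {
        List<String> lines = new ArrayList<>();
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            ResultSetMetaData meta = rs.getMetaData();
            while (rs.next()) {
                StringBuilder line = new StringBuilder();
                // JDBC column indexes start at 1
                for (int i = 1; i <= meta.getColumnCount(); i++) {
                    if (i > 1) {
                        line.append('\t');
                    }
                    line.append(rs.getString(i));
                }
                lines.add(line.toString());
            }
        }
        return lines;
    }
}
```

With the user created earlier, a call might look like query(jdbcUrl("localhost", 10000, "default"), "root", "admin", "show tables").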

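When a table is created in Spark SQL, the user who created it holds the INSERT and SELECT privileges on it. For any other user to access the table, run GRANT in Hive's Thrift server to give that user the required privileges.

Procedure:

Start Hive's Thrift service:

${HIVE_HOME}/bin/hiveserver2 >${HIVE_HOME}/hive_thriftserver.log 2>&1 &

Use Hive's own beeline to connect to the port configured as hive.server2.thrift.port in hive-site.xml, logging in as root with the password admin (the user inserted into the DBUSER table in MySQL earlier).

From ${HIVE_HOME}, run:

./bin/beeline -u jdbc:hive2://localhost:9073 -n root -p admin

Access control: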

set role admin;

grant SELECT on table test to user USERNAME;

grant INSERT on table test to user USERNAME;

Adding test data

Create a domain and a collection space in SequoiaDB:

var db=new Sdb("localhost",11810);

db.createDomain("scottdomain",["datagroup1","datagroup2","datagroup3"],{AutoSplit:true});

db.createCS("scott",{Domain:"scottdomain"});

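Create the test tables and data in MySQL:

As the sdbadmin user, open the MySQL shell:

/opt/sequoiasql/mysql/bin/mysql -h 127.0.0.1 -P 3306 -u root

Create the database:

create database scott;

use scott;

Create the emp table (the other three tables are created in the same way):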

create table emp(
  empno int unsigned auto_increment primary key COMMENT 'employee number',
  ename varchar(15) COMMENT 'employee name',
  job varchar(10) COMMENT 'job title',
  mgr int unsigned COMMENT 'employee number of the manager',
  hiredate date COMMENT 'hire date',
  sal decimal(7,2) COMMENT 'base salary',
  comm decimal(7,2) COMMENT 'commission',
  deptno int unsigned COMMENT 'department number'
)ENGINE = sequoiadb COMMENT = "Employee table, sequoiadb: { table_options: { ShardingKey: { 'empno': 1 }, ShardingType: 'hash', 'Compressed': true, 'CompressionType': 'lzw', 'AutoSplit': true, 'EnsureShardingIndex': false } }";

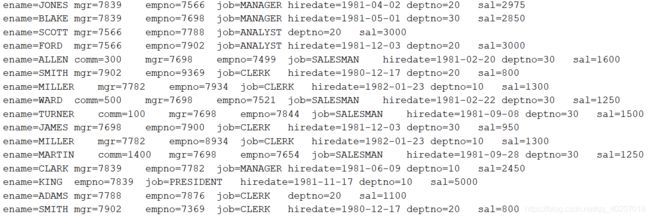

Insert the test data into the emp table:

INSERT INTO emp VALUES (7369,'SMITH','CLERK',7902,'1980-12-17',800,NULL,20);

INSERT INTO emp VALUES (7499,'ALLEN','SALESMAN',7698,'1981-2-20',1600,300,30);

INSERT INTO emp VALUES (7521,'WARD','SALESMAN',7698,'1981-2-22',1250,500,30);

INSERT INTO emp VALUES (7566,'JONES','MANAGER',7839,'1981-4-2',2975,NULL,20);

INSERT INTO emp VALUES (7654,'MARTIN','SALESMAN',7698,'1981-9-28',1250,1400,30);

INSERT INTO emp VALUES (7698,'BLAKE','MANAGER',7839,'1981-5-1',2850,NULL,30);

INSERT INTO emp VALUES (7782,'CLARK','MANAGER',7839,'1981-6-9',2450,NULL,10);

INSERT INTO emp VALUES (7788,'SCOTT','ANALYST',7566,'87-7-13',3000,NULL,20);

INSERT INTO emp VALUES (7839,'KING','PRESIDENT',NULL,'1981-11-17',5000,NULL,10);

INSERT INTO emp VALUES (7844,'TURNER','SALESMAN',7698,'1981-9-8',1500,100,30);

INSERT INTO emp VALUES (7876,'ADAMS','CLERK',7788,'87-7-13',1100,NULL,20);

INSERT INTO emp VALUES (7900,'JAMES','CLERK',7698,'1981-12-3',950,NULL,30);

INSERT INTO emp VALUES (7902,'FORD','ANALYST',7566,'1981-12-3',3000,NULL,20);

INSERT INTO emp VALUES (7934,'MILLER','CLERK',7782,'1982-1-23',1300,NULL,10);

Configuring the IDEA project

Integrating Spring Boot and MyBatis with Spark SQL:

Project structure:

Add the following dependencies to the Maven project's pom.xml:

<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.58</version>
</dependency>
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <version>1.1.18</version>
</dependency>
<dependency>
    <groupId>com.baomidou</groupId>
    <artifactId>mybatis-plus-core</artifactId>
    <version>${mybatis-plus.version}</version>
</dependency>
<dependency>
    <groupId>com.baomidou</groupId>
    <artifactId>mybatis-plus-extension</artifactId>
    <version>${mybatis-plus.version}</version>
</dependency>
<dependency>
    <groupId>commons-logging</groupId>
    <artifactId>commons-logging</artifactId>
    <version>1.1.3</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>1.2.1</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-metastore</artifactId>
    <version>1.2.1</version>
</dependency>
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.2</version>
</dependency>
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpcore</artifactId>
    <version>4.4.4</version>
</dependency>
<dependency>
    <groupId>org.apache.thrift</groupId>
    <artifactId>libthrift</artifactId>
    <version>0.9.2</version>
</dependency>
<dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.17</version>
</dependency>
<dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-api</artifactId>
    <version>1.7.10</version>
</dependency>
<dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-log4j12</artifactId>
    <version>1.7.10</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive-thriftserver_2.11</artifactId>
    <version>2.0.1</version>
    <scope>provided</scope>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-network-common_2.11</artifactId>
    <version>2.0.1</version>
</dependency>
<dependency>
    <groupId>com.sequoiadb</groupId>
    <artifactId>sequoiadb-driver</artifactId>
    <version>3.2.1</version>
</dependency>
<dependency>
    <groupId>com.sequoiadb</groupId>
    <artifactId>spark-sequoiadb_2.11</artifactId>
    <version>2.8.0</version>
</dependency>
<dependency>
    <groupId>com.sequoiadb</groupId>
    <artifactId>spark-sequoiadb-scala_2.11.2</artifactId>
    <version>1.12</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.11</artifactId>
    <version>2.2.2</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-jdbc</artifactId>
    <version>1.2.1</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>3.2.0</version>
</dependency>

Add the following settings to application.properties:

server.port=8090

# datasource config

# Connection pool type
spring.datasource.type=com.alibaba.druid.pool.DruidDataSource

# JDBC driver
spring.datasource.driver-class-name=org.apache.hive.jdbc.HiveDriver

# Connection URL, username, and password
spring.datasource.url=jdbc:hive2://192.168.80.132:10000/default
spring.datasource.username=root
spring.datasource.password=admin

# Number of connections created at startup
spring.datasource.druid.initialSize=1

# Minimum number of idle connections
spring.datasource.druid.minIdle=5

# Maximum number of active connections
spring.datasource.druid.maxActive=20

# Maximum time to wait for a connection, in milliseconds
spring.datasource.druid.maxWait=60000

# Interval between checks for idle connections that should be closed, in milliseconds
spring.datasource.druid.timeBetweenEvictionRunsMillis=60000

# Minimum time a connection stays idle in the pool before it may be evicted, in milliseconds
spring.datasource.druid.minEvictableIdleTimeMillis=300000

# SQL used to check that a connection is still valid; must be a query
spring.datasource.druid.validation-query=SELECT 1

# mybatis
#mybatis-plus.mapper-locations=classpath:mapper/*.xml
#mybatis-plus.configuration.cache-enabled=false

# Location of the mapper XML files
mybatis.mapper-locations=classpath:mapper/*.xml

# Package scanned for entity classes (type aliases)
mybatis.type-aliases-package=com.sdb.spark.demo.entity

# Spring MVC static resource path pattern
spring.mvc.static-path-pattern=/static/**

# Hot reload (devtools)
spring.devtools.restart.enabled=true
spring.devtools.restart.additional-paths=src/main/java
spring.devtools.restart.exclude=WEB-INF/**
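A misspelled key under spring.datasource.druid is easy to miss, because Spring Boot's property binding usually ignores keys it does not recognize, so the pool silently falls back to its defaults. The following is a rough sketch of a sanity check, assuming you only want to flag pool keys outside the handful used in this article; the class name and the expected-key set are illustrative, not an exhaustive list of Druid options.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical check that flags unexpected keys under spring.datasource.druid. */
final class DruidKeyCheckSketch {

    static final String PREFIX = "spring.datasource.druid.";

    // Assumption: only the pool keys that appear in this article are expected.
    private static final Set<String> EXPECTED = new HashSet<>(Arrays.asList(
            "initialSize", "minIdle", "maxActive", "maxWait",
            "timeBetweenEvictionRunsMillis", "minEvictableIdleTimeMillis",
            "validation-query", "validationQuery"));

    private DruidKeyCheckSketch() {}

    /** Returns, in sorted order, every key with the Druid prefix that is not in EXPECTED. */
    static List<String> unexpectedKeys(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> unexpected = new ArrayList<>();
        for (String key : new TreeSet<>(props.stringPropertyNames())) {
            if (key.startsWith(PREFIX)
                    && !EXPECTED.contains(key.substring(PREFIX.length()))) {
                unexpected.add(key);
            }
        }
        return unexpected;
    }
}
```

Passing the contents of application.properties to unexpectedKeys returns an empty list when every pool key is one of the expected names.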

Create the Emp entity class for the emp table in the com.sdb.spark.demo.entity package:

/**
 * Employee table
 *
 * @author yousongxian
 * @date 2020-07-29
 */
public class Emp {

    private Integer empno;   // employee number
    private String ename;    // employee name
    private String job;      // job title
    private Integer mgr;     // employee number of the manager
    private String hiredate; // hire date
    private Double sal;      // base salary
    private Double comm;     // commission
    private Integer deptno;  // department number

    // getters and setters omitted

    @Override
    public String toString() {
        return "Emp{" +
                "empno=" + empno +
                ", ename='" + ename + '\'' +
                ", job='" + job + '\'' +
                ", mgr=" + mgr +
                ", hiredate='" + hiredate + '\'' +
                ", sal=" + sal +
                ", comm=" + comm +
                ", deptno=" + deptno +
                '}';
    }
}

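Create a new folder named mapper under the resources directory on the classpath, and in it create the MyBatis mapping file EmpMapper.xml (the mapping for Emp).

Add a general-purpose result map to EmpMapper.xml (column is the column name defined when the table was created; property is the field name in the entity class):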

<resultMap id="BaseResultMap" type="com.sdb.spark.demo.entity.Emp">

<id column="empno" property="empno"/>

<result column="ename" property="ename" />

<result column="job" property="job" />

<result column="mgr" property="mgr" />

<result column="hiredate" property="hiredate" />

<result column="sal" property="sal" />

<result column="comm" property="comm" />

<result column="deptno" property="deptno" />

</resultMap>

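Add a select-all statement to EmpMapper.xml whose result type is map. Note that ${tablename} is substituted into the SQL text as-is, so it should only ever receive trusted table names:

<select id="selectAll" resultType="map" parameterType="string">

select * from ${tablename}

</select>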
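In MyBatis, ${...} performs plain string substitution into the SQL text, whereas #{...} becomes a prepared-statement parameter. A table name can only be passed with ${...}, so validating it before the call is a sensible precaution. The following is a minimal sketch, assuming table names are simple identifiers with an optional database prefix; the class and method names are hypothetical.

```java
import java.util.regex.Pattern;

/** Hypothetical guard for table names that end up in a ${...} substitution. */
final class TableNameGuardSketch {

    // Assumption: names look like "emp" or "scott.emp" (letters, digits, underscores).
    private static final Pattern SAFE = Pattern.compile(
            "[A-Za-z_][A-Za-z0-9_]*(\\.[A-Za-z_][A-Za-z0-9_]*)?");

    private TableNameGuardSketch() {}

    /** True if the name is a plain identifier, optionally qualified by a database name. */
    static boolean isSafeTableName(String name) {
        return name != null && SAFE.matcher(name).matches();
    }

    /** Returns the name unchanged, or throws if it could alter the SQL statement. */
    static String requireSafeTableName(String name) {
        if (!isSafeTableName(name)) {
            throw new IllegalArgumentException("Unsafe table name: " + name);
        }
        return name;
    }
}
```

A service method could then call empMapper.selectAll(TableNameGuardSketch.requireSafeTableName(tablename)) instead of passing the raw value through.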

Add a statement to EmpMapper.xml that creates the emp table (method 1: explicit schema):

<update id="createTableEmp">

CREATE TABLE emp

(

empno INT,

ename STRING,

job STRING,

mgr INT,

hiredate date,

sal decimal(7,2),

comm decimal(7,2),

deptno INT

)

USING com.sequoiadb.spark OPTIONS

(

host 'localhost:11810',

collectionspace 'scott',

collection 'emp'

)

</update>

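Add a statement to EmpMapper.xml that creates the emp_schema table (method 2: let the connector infer the schema automatically). This requires that the associated SequoiaDB collection already contains records when the table is created: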

<update id="createTableEmpSchema">

CREATE TABLE emp_schema USING com.sequoiadb.spark OPTIONS

(

host 'localhost:11810',

collectionspace 'scott',

collection 'emp'

)

</update>

Add a statement to EmpMapper.xml that creates the emp_as_select table, taking a map as its input parameter (method 3: create the table from a SQL result set):

<update id="createTableAsSelect" parameterType="map">
    CREATE TABLE ${tablename} USING com.sequoiadb.spark OPTIONS
    (
        host 'localhost:11810',
        domain 'scottdomain',
        collectionspace 'scott',
        collection #{tablename},
        shardingkey '{"_id":1}',
        shardingtype 'hash',
        autosplit true
    )AS ${condition}
</update>

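Add the namespace attribute to the top-level mapper tag of EmpMapper.xml. Setting namespace="com.sdb.spark.demo.mapper.EmpMapper" binds the statements defined in EmpMapper.xml to the EmpMapper interface in the com.sdb.spark.demo.mapper package:

<mapper namespace="com.sdb.spark.demo.mapper.EmpMapper">

Create the EmpMapper interface in com.sdb.spark.demo.mapper and declare one method for each statement id in EmpMapper.xml, matching its input and output types (statements of type update return the number of affected rows as an int by default):

Note: each method name must match the corresponding statement id in the XML mapping file.

// select all rows; if MyBatis cannot resolve ${tablename}, annotating the parameter with @Param("tablename") may help
List<Map<String,Object>> selectAll(String tablename);

int createTableEmp();// create the emp table

int createTableEmpSchema();// create the emp_schema table with an automatically inferred schema

int createTableAsSelect(Map<String,String> map);// create the emp_as_select table from a query result

int insertEmp(Emp emp);// insert a record into emp (no matching statement is shown in EmpMapper.xml above)

Create the EmpService interface in com.sdb.spark.demo.service and declare the following methods:

List<Map<String,Object>> selectAll(String tablename);

int createTableEmp();

int createTableEmpSchema();// create the emp_schema table with an automatically inferred schema

int createTableAsSelect(Map<String,String> map);// create the emp_as_select table from a query result

int insertEmp(Emp emp);// insert a record into emp

Create the EmpServiceImpl class in com.sdb.spark.demo.service.Impl, have it implement EmpService, and annotate the class with @Service: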

@Service

public class EmpServiceImpl implements EmpService {

@Autowired

private EmpMapper empMapper;

@Override

public List<Map<String,Object>> selectAll(String tablename) {

return empMapper.selectAll(tablename);

}

@Override

public int createTableEmp() {

return empMapper.createTableEmp();

}

@Override

public int createTableEmpSchema() {

return empMapper.createTableEmpSchema();

}

@Override

public int createTableAsSelect(Map<String,String>map) {

return empMapper.createTableAsSelect(map);

}

@Override

public int insertEmp(Emp emp) {

return empMapper.insertEmp(emp);

}

}

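Calling the methods from the test class:

In the test class src/test/java/com/sdb/spark/demo/DemoApplicationTests, add the following code to call the methods of the EmpService implementation.

Declare a field of type EmpService and annotate it with @Autowired so that it is injected automatically:

@Autowired

private EmpService empService;

Define a selectAll method that queries all rows, and annotate it with JUnit's @Test: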

@Test
public void selectAll() {
    String tablename = "emp";
    List<Map<String,Object>> resultlist = empService.selectAll(tablename);
    // print every row as tab-separated column=value pairs
    for (Map<String,Object> map : resultlist) {
        for (Map.Entry<String,Object> m : map.entrySet()) {
            System.out.print(m.getKey() + "=" + m.getValue() + "\t");
        }
        System.out.println();
    }
}
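Because selectAll returns each row as a Map<String,Object>, the value types depend on what the JDBC driver hands back; a decimal column, for example, may arrive as a BigDecimal rather than a Double. The following is a small sketch of null-tolerant accessors, assuming numeric columns come back as some subclass of Number and that the map keys are the plain column names (both are assumptions to verify against your driver). The class and method names are hypothetical.

```java
import java.util.Map;

/** Hypothetical accessors for reading typed values out of a result row. */
final class RowValueSketch {

    private RowValueSketch() {}

    /** Reads a numeric column as Integer; returns null for SQL NULL or a missing key. */
    static Integer asInteger(Map<String, Object> row, String column) {
        Object v = row.get(column);
        return v == null ? null : Integer.valueOf(((Number) v).intValue());
    }

    /** Reads a numeric column (Integer, Long, BigDecimal, ...) as Double. */
    static Double asDouble(Map<String, Object> row, String column) {
        Object v = row.get(column);
        return v == null ? null : Double.valueOf(((Number) v).doubleValue());
    }

    /** Reads any column as its string form, for example a date. */
    static String asString(Map<String, Object> row, String column) {
        Object v = row.get(column);
        return v == null ? null : v.toString();
    }
}
```

Inside the loop over the result list, RowValueSketch.asDouble(map, "sal") would then give a value that fits the Double sal field of Emp.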

Define one method for each of the three ways to create a table, and annotate each with JUnit's @Test:

@Test

public void createTable(){

empService.createTableEmp();

}

@Test

public void createTableEmpSchema(){

empService.createTableEmpSchema();

}

@Test
public void createTableAsSelect() {
    Map<String,String> map = new HashMap<String, String>();
    String tablename = "emp_as_select";
    String condition = "select empno,ename from emp";
    map.put("tablename", tablename);
    map.put("condition", condition);
    // check for null before calling equals to avoid a NullPointerException
    if (map.get("tablename") == null || map.get("tablename").equals("")
            || map.get("condition") == null || map.get("condition").equals("")) {
        System.out.println("Please provide a valid table name and condition");
    } else {
        empService.createTableAsSelect(map);
    }
}
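Calling equals on a value returned by map.get throws a NullPointerException when the key is absent, so the null check has to come first. As a minimal sketch, assuming the parameter map uses the keys tablename and condition as in the mapper above, the check can be moved into a small helper; the class and method names are hypothetical.

```java
import java.util.Map;

/** Hypothetical validation for the createTableAsSelect parameter map. */
final class CtasParamsSketch {

    private CtasParamsSketch() {}

    /** True if the string is null, empty, or only whitespace. */
    static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }

    /** True only if both "tablename" and "condition" are present and non-blank. */
    static boolean hasRequiredParams(Map<String, String> params) {
        return params != null
                && !isBlank(params.get("tablename"))
                && !isBlank(params.get("condition"));
    }
}
```

The test method could then call empService.createTableAsSelect(map) only when CtasParamsSketch.hasRequiredParams(map) returns true.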

Add the Spring scanning annotations above the class declaration of the Spring Boot launcher class DemoApplication. The full class looks like this:

@SpringBootApplication(scanBasePackages = {"com.sdb.spark.demo.service.Impl"})

@MapperScan(basePackages = {"com.sdb.spark.demo.mapper"})

public class DemoApplication {

public static void main(String[] args) {

SpringApplication.run(DemoApplication.class, args);

}

}