Spark Shell简单介绍

初始化Spark

编写一个Spark程序第一步要做的事情就是创建一个SparkContext对象,SparkContext对象告诉Spark如何连接到集群。在创建一个SparkContext对象之前,必须先创建一个SparkConf对象,SparkConf对象包含了Spark应用程序的相关信息。

每个JVM只能运行一个SparkContext,在创建另一个新的SparkContext对象前,必须将旧的对象stop()。下面语句就初始化了一个SparkContext:

val conf = new SparkConf().setAppName(appName).setMaster(master)

new SparkContext(conf)

appName参数是你Spark应用程序的名称,这个名称会显示在集群UI界面上;master参数可以是Spark,Mesos或Yarn集群的URL,还可以是代表单机模式运行的“local”字符串。在实际生产中,不建议在程序代码中指定master,而是在用spark-submit命令提交应用时才指定master,这样做的好处是不用修改代码就能将应用部署到任何一个集群模式中运行。对于本地测试或者单元测试,可通过“local”将Spark应用程序运行在本地的一个进程中。

使用Shell

当启动一个Spark shell时,Spark shell已经预先创建好一个SparkContext对象,其变量名为“sc”。如果你再新建一个SparkContext对象,那么它将不会运行下去。我们可以使用–master标记来指定以何种方式连接集群,也可以使用–jars标记添加JAR包到classpath中,多个JAR包之间以逗号分隔;还可以使用–packages标记添加Maven依赖到shell会话中,多个依赖间用逗号隔开。另外通过–repositories标记添加外部的repository。下面语句在本地模式下,使用四核运行spark-shell:

./bin/spark-shell --master local[4]

或者在启动时添加code.jar包到classpath:

./bin/spark-shell --master local[4] --jars code.jar

启动时使用Maven坐标(groupId,artifactId,version)添加依赖包:

./bin/spark-shell --master local[4] --packages "org.example:example:0.1"

对于spark-shell的其他标记,通过执行spark-shell --help获取

[root@master bin]# ./spark-shell --help

Usage: ./bin/spark-shell [options]

Options:

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or

on one of the worker machines inside the cluster ("cluster")

(Default: client).

--class CLASS_NAME Your application's main class (for Java / Scala apps).

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver

and executor classpaths.

--packages Comma-separated list of maven coordinates of jars to include

on the driver and executor classpaths. Will search the local

maven repo, then maven central and any additional remote

repositories given by --repositories. The format for the

coordinates should be groupId:artifactId:version.

--exclude-packages Comma-separated list of groupId:artifactId, to exclude while

resolving the dependencies provided in --packages to avoid

dependency conflicts.

--repositories Comma-separated list of additional remote repositories to

search for the maven coordinates given with --packages.

--py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place

on the PYTHONPATH for Python apps.

--files FILES Comma-separated list of files to be placed in the working

directory of each executor.

--conf PROP=VALUE Arbitrary Spark configuration property.

--properties-file FILE Path to a file from which to load extra properties. If not

specified, this will look for conf/spark-defaults.conf.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M).

--driver-java-options Extra Java options to pass to the driver.

--driver-library-path Extra library path entries to pass to the driver.

--driver-class-path Extra class path entries to pass to the driver. Note that

jars added with --jars are automatically included in the

classpath.

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

--proxy-user NAME User to impersonate when submitting the application.

This argument does not work with --principal / --keytab.

--help, -h Show this help message and exit.

--verbose, -v Print additional debug output.

--version, Print the version of current Spark.

Spark standalone with cluster deploy mode only:

--driver-cores NUM Cores for driver (Default: 1).

Spark standalone or Mesos with cluster deploy mode only:

--supervise If given, restarts the driver on failure.

--kill SUBMISSION_ID If given, kills the driver specified.

--status SUBMISSION_ID If given, requests the status of the driver specified.

Spark standalone and Mesos only:

--total-executor-cores NUM Total cores for all executors.

Spark standalone and YARN only:

--executor-cores NUM Number of cores per executor. (Default: 1 in YARN mode,

or all available cores on the worker in standalone mode)

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode

(Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2).

If dynamic allocation is enabled, the initial number of

executors will be at least NUM.

--archives ARCHIVES Comma separated list of archives to be extracted into the

working directory of each executor.

--principal PRINCIPAL Principal to be used to login to KDC, while running on

secure HDFS.

--keytab KEYTAB The full path to the file that contains the keytab for the

principal specified above. This keytab will be copied to

the node running the Application Master via the Secure

Distributed Cache, for renewing the login tickets and the

delegation tokens periodically.

启动Spark shell

Spark shell启动界面如下:

[root@master bin]# ./spark-shell --master local[2]

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel).

19/05/17 14:54:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/05/17 14:54:41 WARN spark.SparkContext: Use an existing SparkContext, some configuration may not take effect.

Spark context Web UI available at http://192.168.230.10:4040

Spark context available as 'sc' (master = local[2], app id = local-1558076081004).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.0.2

/_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_172)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

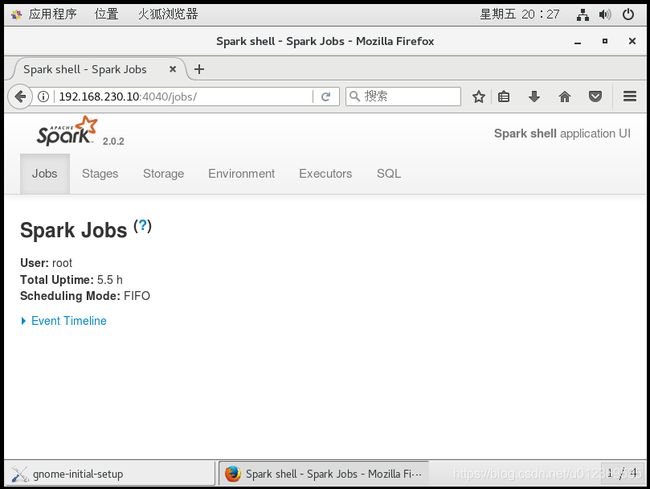

Spark Shell Web UI

从启动报的信息可知,Spark context的Web UI入口是 http://192.168.230.10:4040,在浏览器输入入口地址后的界面如下:

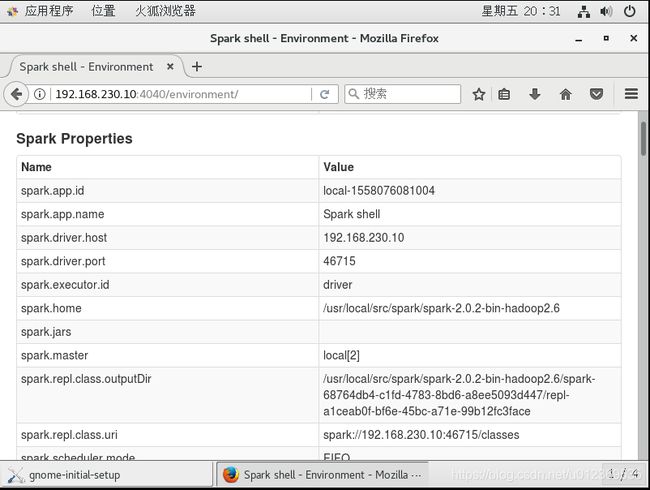

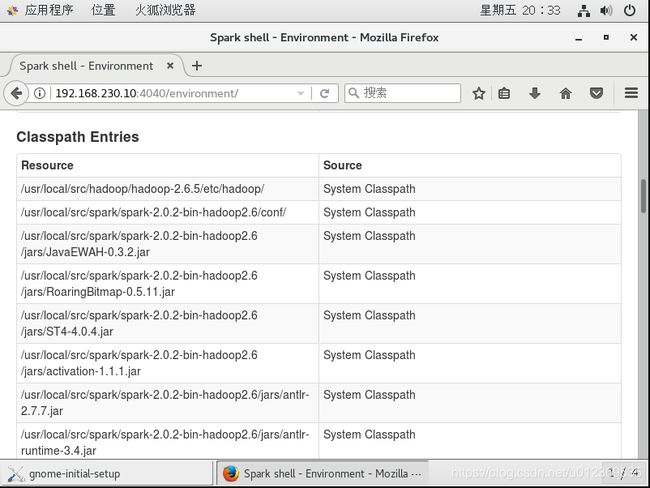

在界面的右上角可看到“Spark shell application UI”,说明启动Spark shell其实是运行一个Spark应用程序,其应用的名称叫“Spark shell”,进一步可以看到配置信息如下:

在界面的右上角可看到“Spark shell application UI”,说明启动Spark shell其实是运行一个Spark应用程序,其应用的名称叫“Spark shell”,进一步可以看到配置信息如下:

Spark Shell本质

另外,我们查看一下{SPARK_HOME}/bin/saprk-shell脚本,发现脚本里有执行${SPARK_HOME}/bin/spark-submit的命令,并且–name标记的值是“spark shell”,这说明spark-shell的本质是在后台调用了spark-submit脚本来启动应用程序的,在spark-shell中已经创建了一个名为sc的SparkContext对象。执行./bin/spark-shell其实是提交运行一个名为“spark shell”的Spark Application,以交互式的命令行形式展现给用户,也相当于是Spark应用的Driver程序,执行用户输入的代码指令并展示结果。

function main() {

if $cygwin; then

# Workaround for issue involving JLine and Cygwin

# (see http://sourceforge.net/p/jline/bugs/40/).

# If you're using the Mintty terminal emulator in Cygwin, may need to set the

# "Backspace sends ^H" setting in "Keys" section of the Mintty options

# (see https://github.com/sbt/sbt/issues/562).

stty -icanon min 1 -echo > /dev/null 2>&1

export SPARK_SUBMIT_OPTS="$SPARK_SUBMIT_OPTS -Djline.terminal=unix"

"${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"

stty icanon echo > /dev/null 2>&1

else

export SPARK_SUBMIT_OPTS

"${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"

fi

}

启动spark shell后,查看本机的进程,可以看到SparkSubmit进程:

[root@master bin]# jps

2275 SparkSubmit

5007 Jps

退出Spark Shell

退出Spark Shell的正确操作是:quit,而不是我们常用的ctrl+c

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.0.2

/_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_172)

Type in expressions to have them evaluated.

Type :help for more information.

scala> :quit

[root@master bin]# jps

8431 Jps