【RocketMQ】Automatic Failover Cluster in Practice on RocketMQ 5.1.0 (Controller Embedded in the Nameserver)

Contents

- Requirements
- Preparation
- nameserver
- dashboard
- Broker
- exporter
- Problems encountered
- Final thoughts

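Requirements

- RocketMQ version 5.1.0;
- A 3-master, 3-slave cluster with cross deployment (no two machines are master and slave of each other), to save machines;
- 3 nameservers, 1 exporter and 1 dashboard;
- Automatic failover, with the controller embedded in the nameserver;
- Asynchronous disk flushing;
- No message loss during a master/slave switchover.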

Preparation

Machines:

- 172.24.30.192
- 172.24.30.193
- 172.24.30.194

Deployment plan:

| Service | IP | Port |
|---|---|---|
| nameserver (controller-n0) | 172.24.30.192 | 19876 (controller: 19878) |
| nameserver (controller-n1) | 172.24.30.193 | 19876 (controller: 19878) |
| nameserver (controller-n2) | 172.24.30.194 | 19876 (controller: 19878) |
| broker-a | 172.24.30.192 | 13210 |
| broker-a-s | 172.24.30.193 | 13210 |
| broker-b | 172.24.30.193 | 13220 |
| broker-b-s | 172.24.30.194 | 13220 |
| broker-c | 172.24.30.194 | 13230 |
| broker-c-s | 172.24.30.192 | 13230 |
| dashboard | 172.24.30.193 | 18281 |
| exporter | 172.24.30.192 | 18282 |

Download the binary package: https://rocketmq.apache.org/download/
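Every service in this setup runs from its own copy of the distribution (for example rocketmq-nameserver, broker-a and broker-a-s1 under /neworiental/rocketmq-5.1.0). A minimal sketch of preparing that layout on one host, assuming the binary zip has already been unzipped to a local directory (typically named rocketmq-all-5.1.0-bin-release); prepare_layout is a hypothetical helper, not part of RocketMQ.

```bash
#!/bin/bash
# Hypothetical helper: copy an unpacked RocketMQ distribution into one
# directory per service, e.g. /neworiental/rocketmq-5.1.0/broker-a.
# Assumes SRC_DIR holds the unpacked bin/, conf/ and lib/ directories.
# Usage: prepare_layout <src_dir> <base_dir> <service> [<service> ...]
prepare_layout() {
  local src_dir="$1" base_dir="$2"
  shift 2
  local svc
  for svc in "$@"; do
    if [[ -e "$base_dir/$svc" ]]; then
      # never overwrite an existing service directory (it may hold a store)
      echo "skip $svc: $base_dir/$svc already exists" >&2
      continue
    fi
    mkdir -p "$base_dir/$svc"
    # cp -a keeps file modes, so the scripts in bin/ stay executable
    cp -a "$src_dir/." "$base_dir/$svc/"
    echo "created $base_dir/$svc"
  done
}
```

For example, on 172.24.30.193 (a nameserver, the broker-a slave and broker-b in the plan): prepare_layout ./rocketmq-all-5.1.0-bin-release /neworiental/rocketmq-5.1.0 rocketmq-nameserver broker-a-s1 broker-b. Existing directories are skipped, so rerunning it is harmless.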

nameserver

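Configuration file:

Since this only demonstrates the configuration, only one nameserver config is shown. For the other two, change controllerDLegerSelfId to the id of the corresponding node (n1, n2). Note: this value must be unique across nodes.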

```properties
# nameserver settings
listenPort = 19876
rocketmqHome = /neworiental/rocketmq-5.1.0/rocketmq-nameserver
useEpollNativeSelector = true
orderMessageEnable = true
serverPooledByteBufAllocatorEnable = true
kvConfigPath = /neworiental/rocketmq-5.1.0/rocketmq-nameserver/store/namesrv/kvConfig.json
configStorePath = /neworiental/rocketmq-5.1.0/rocketmq-nameserver/conf/nameserver.conf

# controller settings
enableControllerInNamesrv = true
controllerDLegerGroup = littleCat-Controller
controllerDLegerPeers = n0-172.24.30.192:19878;n1-172.24.30.193:19878;n2-172.24.30.194:19878
# must be unique per node: n0, n1 or n2
controllerDLegerSelfId = n0
controllerStorePath = /neworiental/rocketmq-5.1.0/rocketmq-controller/store
enableElectUncleanMaster = false
notifyBrokerRoleChanged = true
```
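Because the three nameserver configs differ only in controllerDLegerSelfId, the other two files can be derived from the one above. A sketch assuming a sed that supports -E (GNU or BSD); render_namesrv_conf and check_self_ids are hypothetical helpers. The check also verifies that each id appears as a prefix such as n1- in that file's controllerDLegerPeers, matching the peers format used above.

```bash
#!/bin/bash
# Hypothetical helper: copy TEMPLATE to OUT, replacing only the value of
# controllerDLegerSelfId with SELF_ID; every other line is kept unchanged.
render_namesrv_conf() {
  local template="$1" self_id="$2" out="$3"
  sed -E "s/^(controllerDLegerSelfId[[:space:]]*=[[:space:]]*).*/\1${self_id}/" "$template" > "$out"
}

# Hypothetical check: every file must use a different selfId, and each
# selfId must appear as "<id>-" in that file's controllerDLegerPeers.
check_self_ids() {
  local f id peers seen=" "
  for f in "$@"; do
    id=$(sed -nE 's/^controllerDLegerSelfId[[:space:]]*=[[:space:]]*([^[:space:]]+).*/\1/p' "$f")
    peers=$(sed -nE 's/^controllerDLegerPeers[[:space:]]*=[[:space:]]*//p' "$f")
    if [[ -z "$id" ]]; then
      echo "$f: controllerDLegerSelfId missing" >&2; return 1
    fi
    if [[ "$seen" == *" $id "* ]]; then
      echo "$f: duplicate selfId $id" >&2; return 1
    fi
    if [[ ";$peers" != *";$id-"* ]]; then
      echo "$f: $id not found in controllerDLegerPeers" >&2; return 1
    fi
    seen+="$id "
  done
  echo "ok: selfIds$seen"
}
```

Typical use: render_namesrv_conf nameserver.conf n1 nameserver-n1.conf, the same for n2, then check_self_ids on all three files before copying each one to its host as conf/nameserver.conf (the intermediate file names are only examples).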

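- enableElectUncleanMaster: whether a node outside the SyncStateSet (the set of replicas in sync with the master) may be elected master; setting it to true can lose messages.
- Edit rmq.namesrv.logback.xml in the conf directory, mainly to change the log paths in bulk; skip this if the default paths are acceptable.
  - Tip: the log directory can be a relative path, which settles the matter once and for all, as long as each service uses a different directory.
- Edit runserver.sh in the bin directory, mainly the GC log path and the JVM heap flags (-Xmx, -Xms); skip this if the defaults are acceptable. The defaults are large (4g for the nameserver in runserver.sh, 8g for the broker in runbroker.sh), so on a test pseudo-cluster on one machine, make sure the machine can handle it.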
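On a small test machine the heap flags can be lowered with sed instead of by hand. A sketch assuming GNU sed (for -i without a suffix argument) and a start script that sets the heap through -Xms/-Xmx/-Xmn flags with g/m/k suffixes; shrink_heap is a hypothetical helper and the 1g/512m values are only an example. The same function can be pointed at bin/runbroker.sh for the brokers.

```bash
#!/bin/bash
# Hypothetical helper: rewrite every -Xms/-Xmx/-Xmn value in a start script,
# keeping the original as <script>.bak, then print the flags now in effect.
# Usage: shrink_heap <script> <xms/xmx value> <xmn value>   e.g. 1g 512m
shrink_heap() {
  local script="$1" heap="$2" young="$3"
  cp "$script" "$script.bak"
  sed -E -i \
    -e "s/-Xms[0-9]+[gGmMkK]/-Xms${heap}/g" \
    -e "s/-Xmx[0-9]+[gGmMkK]/-Xmx${heap}/g" \
    -e "s/-Xmn[0-9]+[gGmMkK]/-Xmn${young}/g" \
    "$script"
  # show the resulting heap flags so the change can be reviewed
  grep -oE -- '-Xm[sxn][0-9]+[gGmMkK]' "$script" | sort -u
}
```

Only the three heap flags are touched; flags such as -XX:MetaspaceSize do not match the -Xm[sxn] pattern.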

Start script:

```bash
#!/bin/sh
. /etc/profile

# start this nameserver in the background with its own config file
nohup sh /neworiental/rocketmq-5.1.0/rocketmq-nameserver/bin/mqnamesrv -c /neworiental/rocketmq-5.1.0/rocketmq-nameserver/conf/nameserver.conf >/dev/null 2>&1 &

echo "startup nameserver..."
```

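Stop script:

If several nameservers run on the same machine, do not stop them with sh /neworiental/rocketmq-5.1.0/rocketmq-nameserver/bin/mqshutdown namesrv: that command stops every nameserver on the machine. Match the process by its install path instead:

```bash
#!/bin/bash
. /etc/profile

# PIDs of processes whose command line contains this nameserver's install path
PID=$(ps -ef | grep '/neworiental/rocketmq-5.1.0/rocketmq-nameserver' | grep -v grep | awk '{print $2}')

if [[ "" != "$PID" ]]; then
  echo "killing rocketmq-nameserver : $PID"
  # plain SIGTERM, so the JVM can run its shutdown hooks
  kill $PID
fi
```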
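The stop scripts send SIGTERM and return immediately, so a start issued right afterwards can race with a JVM that is still shutting down. A sketch of a stop helper that waits, assuming pgrep from procps is installed; stop_by_pattern is hypothetical, and as in the script above the pattern (an extended regex matched against the full command line) should be specific enough, such as the install path, to match only one service.

```bash
#!/bin/bash
# Hypothetical helper: SIGTERM every process whose command line matches
# PATTERN, then wait up to TIMEOUT seconds (default 30) for all of them to exit.
# Usage: stop_by_pattern <pattern> [timeout_seconds]
stop_by_pattern() {
  local pattern="$1" timeout="${2:-30}" pids pid waited=0
  pids=$(pgrep -f -- "$pattern") || { echo "no process matches: $pattern"; return 0; }
  echo "sending SIGTERM to: $(echo $pids)"
  kill $pids 2>/dev/null || true
  for pid in $pids; do
    # kill -0 only tests whether the PID still exists
    while kill -0 "$pid" 2>/dev/null; do
      if (( waited >= timeout )); then
        echo "still running after ${timeout}s: $pid" >&2
        return 1
      fi
      sleep 1
      waited=$((waited + 1))
    done
  done
  echo "stopped: $pattern"
}
```

For example, stop_by_pattern /neworiental/rocketmq-5.1.0/rocketmq-nameserver 60 before restarting that nameserver. A non-zero return means the process outlived the timeout and needs a closer look rather than an immediate restart.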

Make sure all nameservers have started successfully before starting any broker.
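A sketch of that check, blocking until every nameserver port (19876) and embedded controller port (19878) from the deployment plan accepts TCP connections. It assumes bash's /dev/tcp pseudo-device and the coreutils timeout command; port_open and wait_for_ports are hypothetical helpers. An open port only shows that the process is listening, not that the controllers have already elected a leader.

```bash
#!/bin/bash
# Return 0 if HOST:PORT accepts a TCP connection within 2 seconds.
# Uses bash's /dev/tcp pseudo-device inside a child bash bounded by `timeout`.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Wait until every HOST:PORT argument is reachable; give up after MAX_WAIT
# seconds in total.
# Usage: wait_for_ports <max_wait_seconds> <host:port> [<host:port> ...]
wait_for_ports() {
  local max_wait="$1" waited=0 addr
  shift
  for addr in "$@"; do
    until port_open "${addr%:*}" "${addr##*:}"; do
      if (( waited >= max_wait )); then
        echo "timed out waiting for $addr" >&2
        return 1
      fi
      sleep 1
      waited=$((waited + 1))
    done
    echo "reachable: $addr"
  done
}
```

For example: wait_for_ports 60 172.24.30.192:19876 172.24.30.193:19876 172.24.30.194:19876 172.24.30.192:19878 172.24.30.193:19878 172.24.30.194:19878, and run the broker start scripts only if it returns 0.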

dashboard

Omitted here; see my other article: https://blog.csdn.net/sinat_14840559/article/details/129737390?spm=1001.2014.3001.5501

Broker

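The official documentation says that in this mode brokerId and brokerRole do not need to be specified: brokerRole can be set to SLAVE on every node and brokerId to -1 (roles switch back and forth, so a manually configured 0 would have no effect anyway). Whichever broker registers successfully first therefore becomes the master.

To verify this, it is best to start one broker group first, confirm that it behaves as expected, and only then start all the other brokers.

Note: in this mode there is no asynchronous master (ASYNC_MASTER) role any more. Raft (DLedger) is what keeps the three controllers consistent with each other; replication between a master and its slaves is done by the broker's auto-switch HA service, which tracks the SyncStateSet of in-sync replicas, not by Raft.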

broker-a master node:

```properties
brokerClusterName = littleCat
brokerName = broker-a
brokerId = -1
listenPort = 13210
namesrvAddr = 172.24.30.192:19876;172.24.30.193:19876;172.24.30.194:19876;

# enable controller mode
enableControllerMode = true
controllerAddr = 172.24.30.192:19878;172.24.30.193:19878;172.24.30.194:19878;

deleteWhen = 04
fileReservedTime = 48
brokerRole = SLAVE
flushDiskType = ASYNC_FLUSH
autoCreateTopicEnable = false
autoCreateSubscriptionGroup = false
maxTransferBytesOnMessageInDisk = 65536
rocketmqHome = /neworiental/rocketmq-5.1.0/broker-a
storePathConsumerQueue = /neworiental/rocketmq-5.1.0/broker-a/store/consumequeue
brokerIP2 = 172.24.30.192
brokerIP1 = 172.24.30.192
aclEnable = false
storePathRootDir = /neworiental/rocketmq-5.1.0/broker-a/store
storePathCommitLog = /neworiental/rocketmq-5.1.0/broker-a/store/commitlog

# 3000 days: 3600*24*3000 seconds
timerMaxDelaySec = 259200000
traceTopicEnable = true
timerPrecisionMs = 1000
timerEnableDisruptor = true
```

Start script:

```bash
#!/bin/bash
. /etc/profile

# start broker-a in the background with its own config file
nohup sh /neworiental/rocketmq-5.1.0/broker-a/bin/mqbroker -c /neworiental/rocketmq-5.1.0/broker-a/conf/broker.conf >/dev/null 2>&1 &

echo "deploying broker-a..."
```
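The start scripts send all output to /dev/null, so a broker that exits right after launch, as in the first problem described later, goes unnoticed. A sketch that keeps the output in a log file and checks that the launched process survives a short grace period; start_and_check and the startup.out file name in the usage line are hypothetical, and the check assumes the sh wrapper started for mqbroker stays alive as long as the JVM does.

```bash
#!/bin/bash
# Hypothetical helper: start a command in the background with stdout/stderr
# appended to LOG_FILE, then confirm it is still running after GRACE seconds.
# Usage: start_and_check <log_file> <grace_seconds> <command> [args...]
start_and_check() {
  local log_file="$1" grace="$2"
  shift 2
  nohup "$@" >>"$log_file" 2>&1 &
  local pid=$!
  sleep "$grace"
  if kill -0 "$pid" 2>/dev/null; then
    echo "running: pid $pid"
  else
    # the process is gone: show the tail of its output to explain why
    echo "exited within ${grace}s, last lines of $log_file:" >&2
    tail -n 20 "$log_file" >&2
    return 1
  fi
}
```

For example: start_and_check /neworiental/rocketmq-5.1.0/broker-a/startup.out 10 sh /neworiental/rocketmq-5.1.0/broker-a/bin/mqbroker -c /neworiental/rocketmq-5.1.0/broker-a/conf/broker.conf. Surviving the grace period does not prove the broker registered; it only catches the immediate-exit case.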

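Stop script:

```bash
#!/bin/bash
. /etc/profile

# match broker-a's install path (the one used by the start script);
# the trailing slash keeps it from also matching broker-a-s1
PID=$(ps -ef | grep '/neworiental/rocketmq-5.1.0/broker-a/' | grep -v grep | awk '{print $2}')

if [[ "" != "$PID" ]]; then
  echo "killing rocketmq-5-broker-a : $PID"
  kill $PID
fi
```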

broker-a slave node:

```properties
brokerClusterName = littleCat
brokerName = broker-a
brokerId = -1
listenPort = 13210
namesrvAddr = 172.24.30.192:19876;172.24.30.193:19876;172.24.30.194:19876;

# enable controller mode
enableControllerMode = true
controllerAddr = 172.24.30.192:19878;172.24.30.193:19878;172.24.30.194:19878;

deleteWhen = 04
fileReservedTime = 48
brokerRole = SLAVE
flushDiskType = ASYNC_FLUSH
autoCreateTopicEnable = false
autoCreateSubscriptionGroup = false
maxTransferBytesOnMessageInDisk = 65536
rocketmqHome = /neworiental/rocketmq-5.1.0/broker-a-s1
storePathConsumerQueue = /neworiental/rocketmq-5.1.0/broker-a-s1/store/consumequeue
brokerIP2 = 172.24.30.193
brokerIP1 = 172.24.30.193
aclEnable = false
storePathRootDir = /neworiental/rocketmq-5.1.0/broker-a-s1/store
storePathCommitLog = /neworiental/rocketmq-5.1.0/broker-a-s1/store/commitlog

# 3000 days: 3600*24*3000 seconds
timerMaxDelaySec = 259200000
traceTopicEnable = true
timerPrecisionMs = 1000
timerEnableDisruptor = true
```
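Apart from brokerName, listenPort, the IP and the directory, the master and slave configs are identical, so all six files can be rendered from one template. A sketch that prints a broker.conf with the settings shown above; render_broker_conf is a hypothetical helper, and writing the result to conf/broker.conf follows the path used by the start script.

```bash
#!/bin/bash
# Hypothetical helper: print the broker.conf for one node of this cluster.
# All shared settings are copied from the broker-a configs above.
# Usage: render_broker_conf <brokerName> <listenPort> <ip> <homeDir>
render_broker_conf() {
  local name="$1" port="$2" ip="$3" home="$4"
  cat <<EOF
brokerClusterName = littleCat
brokerName = ${name}
brokerId = -1
listenPort = ${port}
namesrvAddr = 172.24.30.192:19876;172.24.30.193:19876;172.24.30.194:19876;
enableControllerMode = true
controllerAddr = 172.24.30.192:19878;172.24.30.193:19878;172.24.30.194:19878;
deleteWhen = 04
fileReservedTime = 48
brokerRole = SLAVE
flushDiskType = ASYNC_FLUSH
autoCreateTopicEnable = false
autoCreateSubscriptionGroup = false
maxTransferBytesOnMessageInDisk = 65536
rocketmqHome = ${home}
storePathRootDir = ${home}/store
storePathCommitLog = ${home}/store/commitlog
storePathConsumerQueue = ${home}/store/consumequeue
brokerIP1 = ${ip}
brokerIP2 = ${ip}
aclEnable = false
timerMaxDelaySec = 259200000
traceTopicEnable = true
timerPrecisionMs = 1000
timerEnableDisruptor = true
EOF
}
```

For example, on 172.24.30.193: render_broker_conf broker-a 13210 172.24.30.193 /neworiental/rocketmq-5.1.0/broker-a-s1 > /neworiental/rocketmq-5.1.0/broker-a-s1/conf/broker.conf. Keeping a single template means a setting changed once reaches every node, which avoids the kind of small config drift mentioned at the end of the article.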

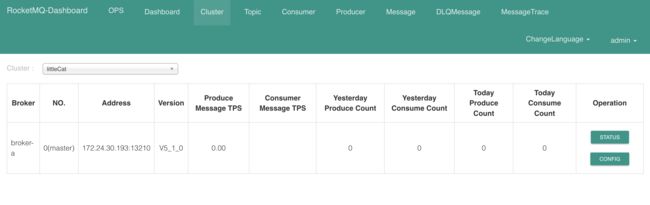

After starting the two brokers, the dashboard already recognizes the master and slave nodes of broker-a:

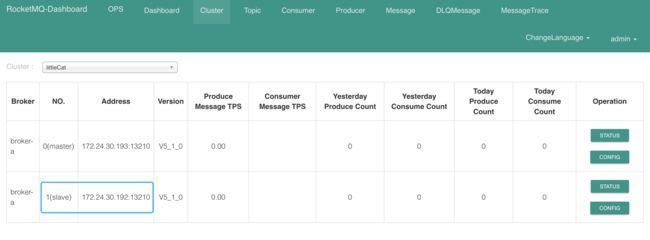

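After the master node was killed, the slave was successfully promoted to master. When the killed node was restarted, it rejoined as a slave of the current master, as expected. Next, start broker-b, broker-b-s, broker-c and broker-c-s in the same way to complete the cluster.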
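Besides the dashboard, the switch can be checked from the command line. A sketch wrapping mqadmin clusterList (brokers per cluster; the current master should be the entry with BID 0) and getSyncStateSet, a controller-mode subcommand that, as far as I know, takes the controller address with -a and the broker name with -b; confirm the options with mqadmin help getSyncStateSet on your build. The mqadmin path and the choice of controller address are assumptions.

```bash
#!/bin/bash
# Assumed location of mqadmin; any copy of the 5.1.0 distribution has one in bin/.
MQADMIN="${MQADMIN:-/neworiental/rocketmq-5.1.0/rocketmq-nameserver/bin/mqadmin}"
NAMESRV="172.24.30.192:19876;172.24.30.193:19876;172.24.30.194:19876"
# any controller should do; requests are expected to reach the leader
CONTROLLER="172.24.30.192:19878"

# List every broker of each cluster as registered in the nameservers.
show_cluster() {
  sh "$MQADMIN" clusterList -n "$NAMESRV"
}

# Show the controller's view of one broker group (master and SyncStateSet).
# Usage: show_sync_state <brokerName>
show_sync_state() {
  sh "$MQADMIN" getSyncStateSet -a "$CONTROLLER" -b "$1"
}
```

Running show_sync_state broker-a before and after killing the master should show the master address move to the former slave, and the restarted node should reappear in the SyncStateSet once it has caught up.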

exporter

Omitted here; see my other article: https://blog.csdn.net/sinat_14840559/article/details/119782996

For a cluster on the latest version, it is better to use the newer exporter: https://github.com/apache/rocketmq-exporter

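Problems encountered

- When several brokers were deployed on the same machine, the broker started later failed to start and exited immediately:

Cause: the ports of the two brokers on that machine were too close together. Besides listenPort, a broker opens extra ports for internal communication, by default listenPort - 2 (the fast/VIP channel) and listenPort + 1 (HA replication), and newer versions may use more, so leave a generous gap between the ports of co-located brokers. This cost me a lot of time, because the log gave no hint of a port already being in use, which is not very friendly.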
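The ports a co-located broker pair would bind can be checked before anything is started. A sketch assuming the broker's default extra ports, listenPort - 2 for the fast (VIP) channel and listenPort + 1 for HA replication, and no explicit haListenPort in broker.conf; broker_ports and check_port_overlap are hypothetical helpers. It needs bash 4 for the associative array, and it does not check whether some other process already holds a port (ss -ltn shows that).

```bash
#!/bin/bash
# Ports a broker binds by default for a given listenPort (assumption based on
# the default settings): fast/VIP channel, main port, HA replication port.
broker_ports() {
  local p="$1"
  echo "$((p - 2)) $p $((p + 1))"
}

# Fail if any two brokers on the same host would bind the same port.
# Usage: check_port_overlap <listenPort> [<listenPort> ...]
check_port_overlap() {
  local -A owner=()
  local lp port
  for lp in "$@"; do
    for port in $(broker_ports "$lp"); do
      if [[ -n "${owner[$port]:-}" ]]; then
        echo "conflict: port $port used by listenPort ${owner[$port]} and $lp" >&2
        return 1
      fi
      owner[$port]=$lp
    done
  done
  echo "no overlap: $*"
}
```

For example, on 172.24.30.192 (broker-a and broker-c-s): check_port_overlap 13210 13230 passes, whereas 10911 and 10912 would collide because 10912 is the HA port of 10911.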

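- In one broker group, two slaves appeared at the same time; one had normal logs, while the other reported: Error happen when change sync state set

Cause: repeated master/slave switching corrupted the internally maintained SyncStateSet. Stop both brokers, start the one whose logs were normal first, then start the one that reported the error. If the faulty broker is started first, master election fails with: CODE: 2012 DESC: The broker has not master, and this new registered broker can’t not be elected as master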
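To decide the start order, the two brokers' logs can be checked for the error text. A sketch assuming local copies of both logs (by default broker.log under ~/logs/rocketmqlogs unless the logback config was changed); order_by_error is a hypothetical helper that prints the log paths in the order their brokers should be started. It only inspects the files it is given, so pass the current log rather than old rotated ones.

```bash
#!/bin/bash
# Error text reported by the faulty broker, as quoted above.
ERROR_TEXT='Error happen when change sync state set'

# Hypothetical helper: print the given log files in start order, logs without
# the error first, logs containing it last.
# Usage: order_by_error <log> [<log> ...]
order_by_error() {
  local log clean=() faulty=()
  for log in "$@"; do
    # -F: match the text literally, -q: only the exit status matters
    if grep -qF "$ERROR_TEXT" "$log"; then
      faulty+=("$log")
    else
      clean+=("$log")
    fi
  done
  printf '%s\n' "${clean[@]}" "${faulty[@]}"
}
```

The first path printed belongs to the broker to start first; start the last one only after the first has come up as master.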

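Final thoughts

I have been managing clusters with K8S for two years. This time the Operator I wrote earlier does not support the new version, so I had to set up a cluster by hand temporarily. Although I have done this many times before, I have to say K8S is far more convenient than a manual setup: however careful you are, something slips through, and you still have to write systemd units, coordinate resources, and so on...

Setting up a middleware cluster by hand is meticulous work. One small configuration mistake can drag you into a bottomless pit that takes a long time to track down. Preparation is therefore essential: prepare and double-check all files and configs first, then deploy everything in one go.

This article only walks through the main flow; copy and adapt the detailed configuration files yourself. Its main purpose is to verify the Controller in the new version. For the complete setup process, see: https://blog.csdn.net/sinat_14840559/article/details/108391651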