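ZooKeeper Distributed Locks from Scratch: Practice and Source Code Analysis

Most services today are deployed as clusters, so operating-system locks and the AQS-based JUC concurrency utilities cannot synchronize threads `across JVMs`. Starting from middleware installation and using a flash sale as the example, this article implements a `zk` distributed lock.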

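## 1. Comparing the Implementation Options

Distributed locks are commonly implemented in one of three ways:

- A database, based on a unique index

- `redis`, based on atomic commands and `lua` scripts

- `zookeeper`, based on ephemeral sequential nodes

We chose the zk implementation for the following reasons:

- A `mysql`-based lock depends on disk I/O, so its performance is too low

- A `redis` master-replica deployment has data synchronization problems: a lock written to the master may not have reached a replica when a failover happens

- `redLock` consumes more resources and performs worse

- `zk` provides distributed consistency out of the box; see the references for the `ZAB` protocol

- The `zk` implementation is fairly concise

- The production environment already has a `zk` cluster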

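## 2. Business Scenario

Suppose we want to buy a newly released phone in a flash sale, under these constraints:

- Total stock is `10` units

- Concurrency is roughly `10000` requests

- Overselling is not allowed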
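To make the constraints concrete, here is a minimal single-JVM sketch of the scenario: many buyers, 10 units, and a lock around the check-then-decrement step. The names `LocalFlashSale`, `tryBuy`, and `simulate` are hypothetical and not part of the project in this article. A `ReentrantLock` only protects threads inside one JVM, which is exactly the limitation a distributed lock removes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical single-JVM model of the flash sale (illustration only).
class LocalFlashSale {
    private final ReentrantLock lock = new ReentrantLock();
    private int stock;

    LocalFlashSale(int stock) {
        this.stock = stock;
    }

    // Check and decrement under the lock so the two steps cannot interleave.
    boolean tryBuy() {
        lock.lock();
        try {
            if (stock > 0) {
                stock--;
                return true;
            }
            return false;
        } finally {
            lock.unlock();
        }
    }

    // Runs `buyers` concurrent purchase attempts and returns how many succeeded.
    static int simulate(int stock, int buyers) {
        LocalFlashSale sale = new LocalFlashSale(stock);
        ExecutorService pool = Executors.newFixedThreadPool(8);
        try {
            Callable<Boolean> attempt = sale::tryBuy;
            List<Future<Boolean>> futures = new ArrayList<>();
            for (int i = 0; i < buyers; i++) {
                futures.add(pool.submit(attempt));
            }
            int sold = 0;
            for (Future<Boolean> future : futures) {
                if (future.get()) {
                    sold++;
                }
            }
            return sold;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("sold = " + simulate(10, 10000));
    }
}
```

Once the same handler runs in several JVMs, each process would have its own `ReentrantLock`, so the guarantee no longer holds across the cluster.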

## 3. Installing the Middleware

- Installing `docker`:

See: [Container runtime docker and service orchestration](https://juejin.cn/post/7083742011576025118)

- Installing `zookeeper`

```

docker run --privileged=true -d --name zookeeper -p 8001:2181 -p 8002:8080 zookeeper:latest

```

The host ports in this mapping (`8001` and `8002`) can be chosen freely.

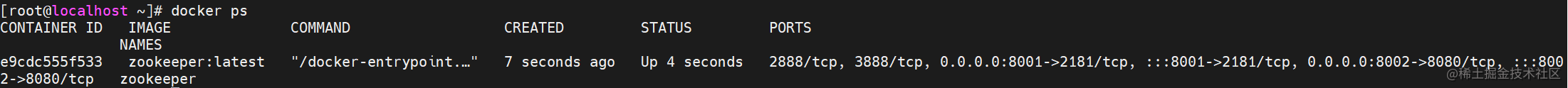

- Run `docker ps` to check that the container is running

- Open the host-side ports in the firewall (`8001` and `8002` in the mapping above)

```
firewall-cmd --zone=public --add-port=8001/tcp --permanent
firewall-cmd --zone=public --add-port=8002/tcp --permanent
firewall-cmd --reload
```

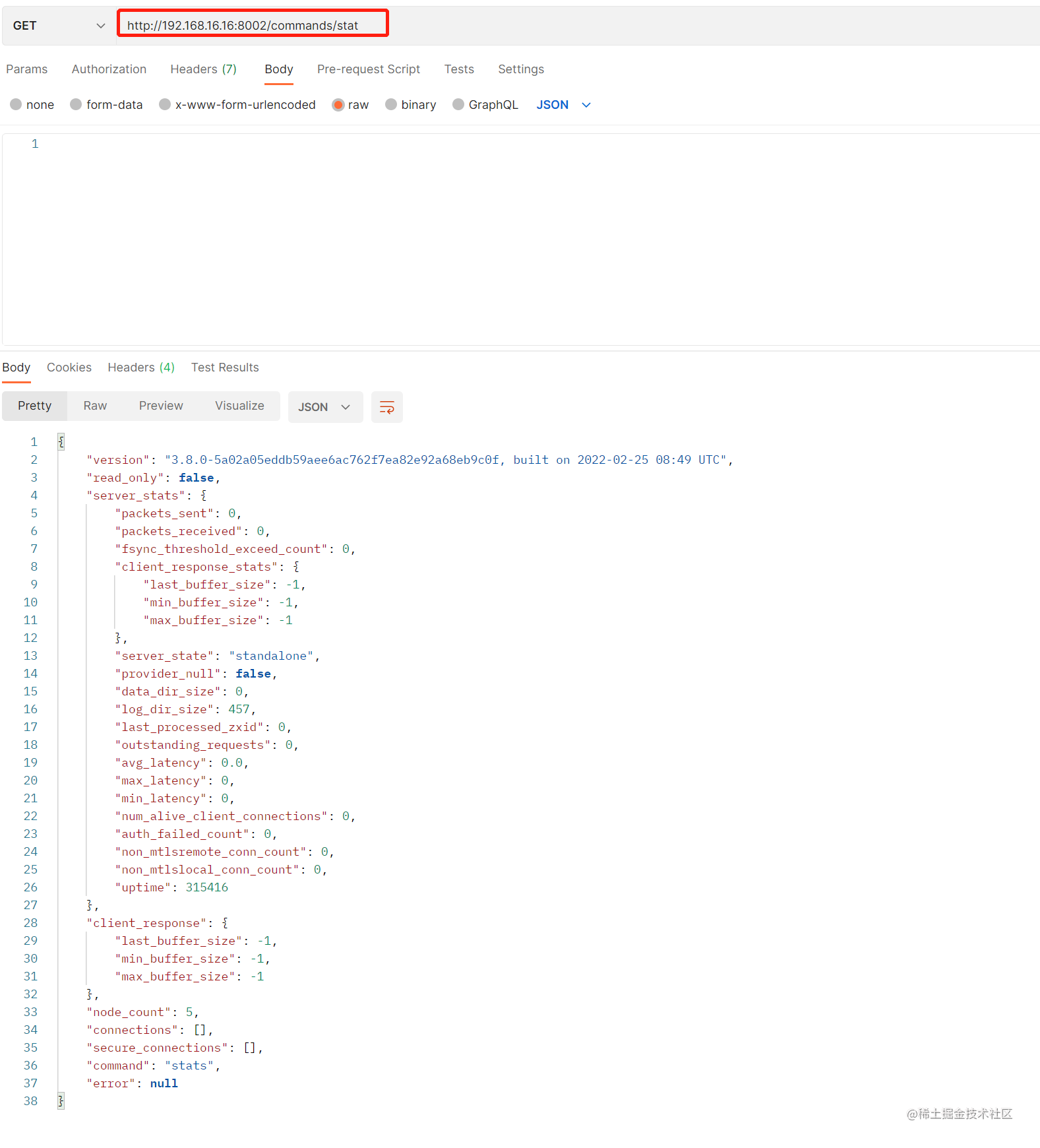

- Verify zookeeper

Any returned status means the server is up.

## 4. Implementation

Here we use the `curator` client.

- Add the dependency

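```
<!-- Assumed reconstruction: the original snippet is missing. Set curator.version to the Curator release that matches your zookeeper server. -->
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-recipes</artifactId>
    <version>${curator.version}</version>
</dependency>
```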

- Core implementation

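```
import java.util.concurrent.atomic.AtomicInteger;

import org.apache.curator.RetryPolicy;
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.framework.recipes.locks.InterProcessMutex;
import org.apache.curator.retry.ExponentialBackoffRetry;
import org.springframework.stereotype.Component;

@Component("zk")
public class FlashSaleHandler {

    // Total stock: 10 phones
    public static AtomicInteger storageCount = new AtomicInteger(10);

    public static final String SERVER_ADDR = "192.168.16.16:8001";

    // One shared client. All callers must share one namespace and lock path:
    // a per-client random namespace would give each caller its own lock node
    // and therefore no mutual exclusion.
    private final CuratorFramework client;

    public FlashSaleHandler() {
        // Retry policy: base sleep time of 2000 ms, at most 3 retries
        RetryPolicy policy = new ExponentialBackoffRetry(2000, 3);
        // Create the client
        client = CuratorFrameworkFactory.builder()
                .connectString(SERVER_ADDR)
                .retryPolicy(policy)
                .build();
        // Connect to the zk server
        client.start();
    }

    public Boolean flashSale() {
        // Build the lock object
        final InterProcessMutex mutex = new InterProcessMutex(client, "/locks/iphone13");
        boolean acquired = false;
        // Flash sale logic
        try {
            // Acquire the lock
            mutex.acquire();
            acquired = true;
            // Decrement the stock
            if (storageCount.get() > 0) {
                System.out.println(Thread.currentThread().getName()
                        + " purchase succeeded, remaining stock " + storageCount.decrementAndGet());
                return true;
            } else {
                // Out of stock
                System.out.println("sold out...");
                return false;
            }
        } catch (Exception exception) {
            // Ignored here for brevity
            System.out.println("just ignore");
        } finally {
            // Release the lock only if this thread actually acquired it
            if (acquired) {
                try {
                    mutex.release();
                } catch (Exception exception) {
                    // Ignored here for brevity
                    System.out.println("just ignore");
                }
            }
        }
        return false;
    }
}
```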
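The retry policy above is configured with a base sleep time of 2000 ms and at most 3 retries. The following is a rough sketch of the general idea behind exponential backoff with jitter, not Curator's own code: the class name `BackoffSketch` and the exact jitter formula are assumptions made for illustration.

```java
import java.util.Random;

// Hypothetical sketch of exponential backoff with random jitter (illustration only).
class BackoffSketch {
    private final Random random = new Random();
    private final long baseSleepMs;
    private final int maxRetries;

    BackoffSketch(long baseSleepMs, int maxRetries) {
        this.baseSleepMs = baseSleepMs;
        this.maxRetries = maxRetries;
    }

    // Retries are allowed until the configured maximum is reached.
    boolean allowRetry(int retryCount) {
        return retryCount < maxRetries;
    }

    // The upper bound doubles with every retry; the random factor spreads clients out
    // so that they do not all reconnect at the same moment.
    long sleepMs(int retryCount) {
        int bound = 1 << (retryCount + 1);
        return baseSleepMs * Math.max(1, random.nextInt(bound));
    }

    public static void main(String[] args) {
        BackoffSketch policy = new BackoffSketch(2000, 3);
        for (int retry = 0; policy.allowRetry(retry); retry++) {
            System.out.println("retry " + retry + " sleeps " + policy.sleepMs(retry) + " ms");
        }
    }
}
```

With these settings the first retry always waits the base time, and later retries wait a random multiple of it, so a burst of failing clients does not hit the server in lockstep.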

## 5. Testing

Thread pool configuration for the test:

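```
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

import com.google.common.util.concurrent.ThreadFactoryBuilder;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ThreadPoolCreator {

    /** Core pool size */
    private static int corePoolSize = Runtime.getRuntime().availableProcessors() * 3;

    /** Maximum pool size; set equal to the core pool size to avoid memory swapping */
    private static int maximumPoolSize = corePoolSize;

    /** Maximum idle time */
    private static long keepAliveTime = 3;

    /** Unit of the maximum idle time */
    private static TimeUnit unit = TimeUnit.MINUTES;

    /**
     * Bounded queue, to avoid running out of memory.
     * The original declaration is truncated: the queue type and the capacity of 10000
     * (large enough for every task in the test below) are assumptions.
     */
    private static BlockingQueue<Runnable> workQueue = new LinkedBlockingQueue<>(10000);

    /** Thread factory; named threads make business code and production issues easier to trace */
    private static ThreadFactory threadFactory = new ThreadFactoryBuilder()
            .setNameFormat("flashSale-%d").build();

    /** Rejection policy; choose or customize one according to the business */
    private static RejectedExecutionHandler handler = new ThreadPoolExecutor.AbortPolicy();

    @Bean
    public ThreadPoolExecutor threadPoolExecutor() {
        return new ThreadPoolExecutor(
                corePoolSize,
                maximumPoolSize,
                keepAliveTime, unit,
                workQueue,
                threadFactory,
                handler);
    }
}
```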

Test logic:

```
...

@Resource
private FlashSaleHandler zk;

// threadPoolExecutor is assumed to be injected in the elided part above

@Test
public void testFlashSale() {
    List<Boolean> results = new ArrayList<>();
    List<CompletableFuture<Boolean>> futures = new ArrayList<>();
    for (int i = 0; i < 10000; i++) {
        CompletableFuture<Boolean> future = CompletableFuture.supplyAsync(() -> zk.flashSale(),
                threadPoolExecutor);
        futures.add(future);
    }
    CompletableFuture
            .allOf(futures.toArray(new CompletableFuture[futures.size()]))
            .whenComplete((v, t) -> futures.forEach(eachFuture -> {
                results.add(eachFuture.getNow(null));
            })).join();
    // Exactly 10 purchases should succeed
    Assertions.assertEquals(10L, results.stream()
            .filter(Boolean.TRUE::equals).count());
}
```

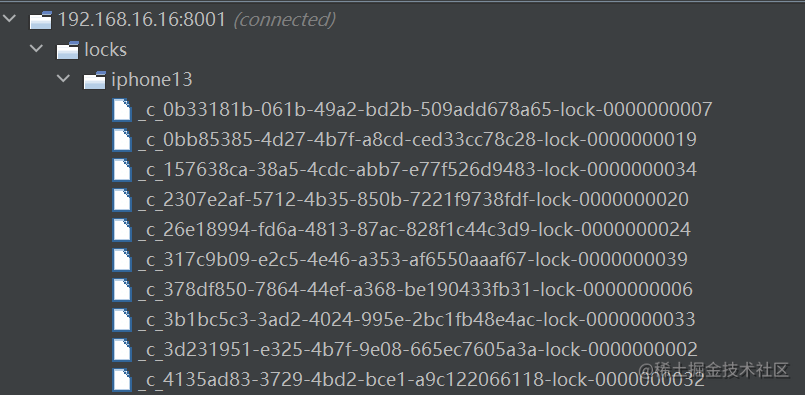

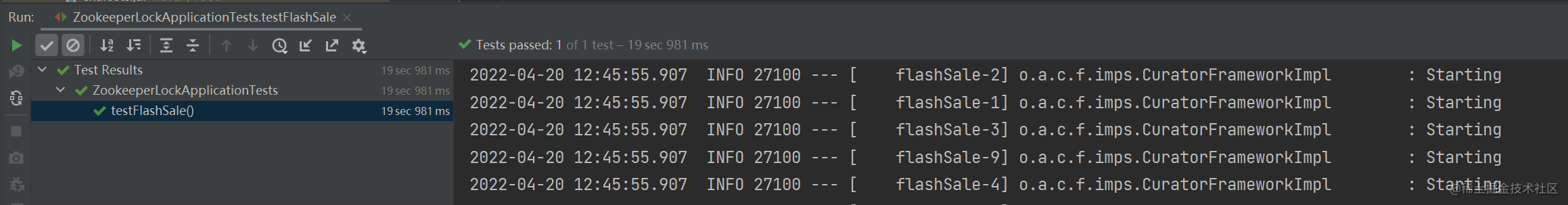

运行单元测试,查看zookeeper节点情况与执行结果:

## 6. Source Code Analysis

We start from the lock acquisition method `mutex.acquire()`.

```
public void acquire() throws Exception {
    if (!this.internalLock(-1L, (TimeUnit)null)) {
        throw new IOException("Lost connection while trying to acquire lock: " + this.basePath);
    }
}
```

Next we step into the `internalLock(...)` method:

```
private boolean internalLock(long time, TimeUnit unit) throws Exception {
    // Get the current thread
    Thread currentThread = Thread.currentThread();
    // Look up the lockData of the current thread
    InterProcessMutex.LockData lockData = (InterProcessMutex.LockData)this.threadData.get(currentThread);
    if (lockData != null) {
        // lockData is not null: this thread already holds the lock, so it simply re-enters
        lockData.lockCount.incrementAndGet();
        return true;
    } else {
        // lockData is null: try to acquire the lock. This returns the path of an
        // ephemeral sequential node, here /locks/iphone13/...
        String lockPath = this.internals.attemptLock(time, unit, this.getLockNodeBytes());
        if (lockPath != null) {
            // Lock acquired: build the lockData object
            InterProcessMutex.LockData newLockData = new InterProcessMutex.LockData(currentThread, lockPath);
            this.threadData.put(currentThread, newLockData);
            return true;
        } else {
            return false;
        }
    }
}
```

The method above keeps working with a `lockData` object, so let's look at what `lockData` contains:

```
private static class LockData {
    final Thread owningThread;
    final String lockPath;
    final AtomicInteger lockCount;
    ...
}
```

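The class has three main fields:

- `owningThread` is the thread that owns the lock

- `lockPath` is the path of the ephemeral sequential node

- `lockCount` is the counter used for re-entry and release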
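The interplay of these three fields can be modeled without zookeeper at all. The sketch below is a hypothetical, simplified model (`ReentrancySketch` is not a Curator class): the "remote" node creation and deletion are replaced by counters, so it only illustrates that a re-entry touches the local counter and that the node is removed when the count returns to zero.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model of per-thread reentrancy bookkeeping (illustration only).
class ReentrancySketch {
    private static final class LockData {
        final String lockPath;
        final AtomicInteger lockCount = new AtomicInteger(1);

        LockData(String lockPath) {
            this.lockPath = lockPath;
        }
    }

    private final ConcurrentMap<Thread, LockData> threadData = new ConcurrentHashMap<>();
    // Stand-ins for creating and deleting the ephemeral sequential node.
    private final AtomicInteger remoteAcquires = new AtomicInteger();
    private final AtomicInteger remoteReleases = new AtomicInteger();

    void acquire() {
        Thread current = Thread.currentThread();
        LockData data = threadData.get(current);
        if (data != null) {
            // Re-entry: only the local counter changes, no new node is created.
            data.lockCount.incrementAndGet();
            return;
        }
        int sequence = remoteAcquires.incrementAndGet();
        threadData.put(current, new LockData("/locks/iphone13/lock-" + sequence));
    }

    void release() {
        Thread current = Thread.currentThread();
        LockData data = threadData.get(current);
        if (data == null) {
            throw new IllegalMonitorStateException("You do not own the lock");
        }
        if (data.lockCount.decrementAndGet() == 0) {
            // Last release: the node would be deleted here.
            remoteReleases.incrementAndGet();
            threadData.remove(current);
        }
    }

    int holdCount() {
        LockData data = threadData.get(Thread.currentThread());
        return data == null ? 0 : data.lockCount.get();
    }

    int remoteAcquires() {
        return remoteAcquires.get();
    }

    int remoteReleases() {
        return remoteReleases.get();
    }
}
```

Acquiring twice and releasing twice on one thread therefore results in a single simulated node creation and a single deletion.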

Next comes the locking method `attemptLock(...)`:

```
String attemptLock(long time, TimeUnit unit, byte[] lockNodeBytes) throws Exception {
    ...
    while(!isDone) {
        isDone = true;
        try {
            // Create the lock node
            ourPath = this.driver.createsTheLock(this.client, this.path, localLockNodeBytes);
            // Competing threads block here until it is their turn
            hasTheLock = this.internalLockLoop(startMillis, millisToWait, ourPath);
        } catch (NoNodeException var14) {
            if (!this.client.getZookeeperClient().getRetryPolicy().allowRetry(retryCount++, System.currentTimeMillis() - startMillis, RetryLoop.getDefaultRetrySleeper())) {
                throw var14;
            }
            isDone = false;
        }
    }
    return hasTheLock ? ourPath : null;
}
```

First, the method that creates the lock node, `createsTheLock(...)`:

```
public String createsTheLock(CuratorFramework client, String path, byte[] lockNodeBytes) throws Exception {
    String ourPath;
    // lockNodeBytes is null by default, so this branch is skipped here
    if (lockNodeBytes != null) {
        ourPath = (String)((ACLBackgroundPathAndBytesable)client.create().creatingParentContainersIfNeeded().withProtection().withMode(CreateMode.EPHEMERAL_SEQUENTIAL)).forPath(path, lockNodeBytes);
    } else {
        // Main path: create the node
        ourPath = (String)((ACLBackgroundPathAndBytesable)client.create().creatingParentContainersIfNeeded().withProtection().withMode(CreateMode.EPHEMERAL_SEQUENTIAL)).forPath(path);
    }
    return ourPath;
}
```

The node type comes from this enum:

```
PERSISTENT(0, false, false, false, false),
PERSISTENT_SEQUENTIAL(2, false, true, false, false),
EPHEMERAL(1, true, false, false, false),
EPHEMERAL_SEQUENTIAL(3, true, true, false, false),
CONTAINER(4, false, false, true, false),
PERSISTENT_WITH_TTL(5, false, false, false, true),
PERSISTENT_SEQUENTIAL_WITH_TTL(6, false, true, false, true);
```

What we need is an `EPHEMERAL_SEQUENTIAL` node, that is, an ephemeral sequential node.

Next, the method that competes for the lock, `internalLockLoop(...)`:

```
private boolean internalLockLoop(long startMillis, Long millisToWait, String ourPath) throws Exception {
    boolean haveTheLock = false;
    boolean doDelete = false;
    try {
        ...
        while(this.client.getState() == CuratorFrameworkState.STARTED && !haveTheLock) {
            // Fetch the ephemeral sequential nodes under /locks/iphone13, sorted by sequence number in ascending order
            List<String> children = this.getSortedChildren();
            // Name of the ephemeral sequential node created by the current thread
            String sequenceNodeName = ourPath.substring(this.basePath.length() + 1);
            // Try to get the lock: if our node is first in the sorted list, we own the lock
            PredicateResults predicateResults = this.driver.getsTheLock(this.client, children, sequenceNodeName, this.maxLeases);
            // This client holds the lock, so the loop ends and true is returned
            if (predicateResults.getsTheLock()) {
                haveTheLock = true;
            } else {
                // Lock not acquired: watch the node just before ours in the sorted order
                String previousSequencePath = this.basePath + "/" + predicateResults.getPathToWatch();
                synchronized(this) {
                    try {
                        // Register the watcher on the previous node
                        ((BackgroundPathable)this.client.getData()
                                .usingWatcher(this.watcher)).forPath(previousSequencePath);
                        if (millisToWait == null) {
                            // Block and wait
                            this.wait();
                        } else {
                            ...
                        }
                        ...
    return haveTheLock;
}
```
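The decision made inside the loop ("lowest sequence number wins, everyone else watches the node directly in front") can be sketched with plain collections. This is a hypothetical model rather than Curator's driver code: `LockOrderSketch`, `sequenceOf`, and `nodeToWatch` are illustrative names, the node names are made up, and it assumes a single lease and that the sequence number is the text after the last `-` in the node name.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch of the "lowest sequence wins, others watch their predecessor" rule.
class LockOrderSketch {
    // Extracts the numeric suffix that zookeeper appends to sequential nodes.
    static long sequenceOf(String nodeName) {
        return Long.parseLong(nodeName.substring(nodeName.lastIndexOf('-') + 1));
    }

    // Returns a copy of the children sorted by sequence number, ascending.
    static List<String> sortBySequence(List<String> children) {
        List<String> sorted = new ArrayList<>(children);
        sorted.sort(Comparator.comparingLong(LockOrderSketch::sequenceOf));
        return sorted;
    }

    // Returns null when `ourNode` holds the lock, otherwise the name of the node to watch.
    static String nodeToWatch(List<String> children, String ourNode) {
        List<String> sorted = sortBySequence(children);
        int ourIndex = sorted.indexOf(ourNode);
        if (ourIndex < 0) {
            throw new IllegalArgumentException("node not found: " + ourNode);
        }
        return ourIndex == 0 ? null : sorted.get(ourIndex - 1);
    }

    public static void main(String[] args) {
        List<String> children = new ArrayList<>();
        children.add("_c_b-lock-0000000002");
        children.add("_c_a-lock-0000000003");
        children.add("_c_c-lock-0000000001");
        System.out.println(nodeToWatch(children, "_c_a-lock-0000000003"));
    }
}
```

Watching only the predecessor means that a release wakes a single waiter instead of every client queued on the lock, which avoids a thundering herd.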

Here is how the watcher `this.watcher` is initialized:

```
private final Watcher watcher = new Watcher() {
    public void process(WatchedEvent event) {
        // Wake up all threads waiting on this LockInternals instance
        LockInternals.this.client.postSafeNotify(LockInternals.this);
    }
};
```
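The handoff between the blocked lock loop and the watcher callback is ordinary monitor signalling. The sketch below is a hypothetical stand-alone model (`WatchNotifySketch` and its methods are illustrative names): one thread waits on the monitor and a simulated watcher callback wakes it. Unlike the code above, which re-checks the children after waking, this sketch uses a boolean flag to cope with spurious wakeups and with a notification that arrives before the wait starts.

```java
// Hypothetical sketch of the wait/notifyAll handoff between a waiter and a watcher callback.
class WatchNotifySketch {
    private boolean predecessorGone = false;

    // Called by the thread that did not get the lock; blocks until the watcher fires.
    synchronized void awaitPredecessor() throws InterruptedException {
        while (!predecessorGone) {
            wait();
        }
    }

    // Called from the (simulated) watcher callback when the watched node is deleted.
    synchronized void onNodeDeleted() {
        predecessorGone = true;
        notifyAll();
    }

    // Starts a waiter, fires the callback, and reports whether the waiter was released.
    static boolean demo() {
        WatchNotifySketch sketch = new WatchNotifySketch();
        Thread waiter = new Thread(() -> {
            try {
                sketch.awaitPredecessor();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        waiter.start();
        sketch.onNodeDeleted();
        try {
            waiter.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !waiter.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("waiter released: " + demo());
    }
}
```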

With the locking flow covered, here is how the lock is released:

```
public void release() throws Exception {
    Thread currentThread = Thread.currentThread();
    // Fetch the lockData object created during acquisition
    InterProcessMutex.LockData lockData = (InterProcessMutex.LockData)this.threadData.get(currentThread);
    if (lockData == null) {
        // The current thread does not own the lock
        throw new IllegalMonitorStateException("You do not own the lock: " + this.basePath);
    } else {
        // Re-entrant or not, decrement lockCount by 1
        int newLockCount = lockData.lockCount.decrementAndGet();
        if (newLockCount <= 0) {
            if (newLockCount < 0) {
                throw new IllegalMonitorStateException("Lock count has gone negative for lock: " + this.basePath);
            } else {
                try {
                    // Re-entry count is 0: release the lock, which triggers the unlock event
                    this.internals.releaseLock(lockData.lockPath);
                } finally {
                    // Remove the entry from the ConcurrentHashMap
                    this.threadData.remove(currentThread);
                }
            }
        }
    }
}
```

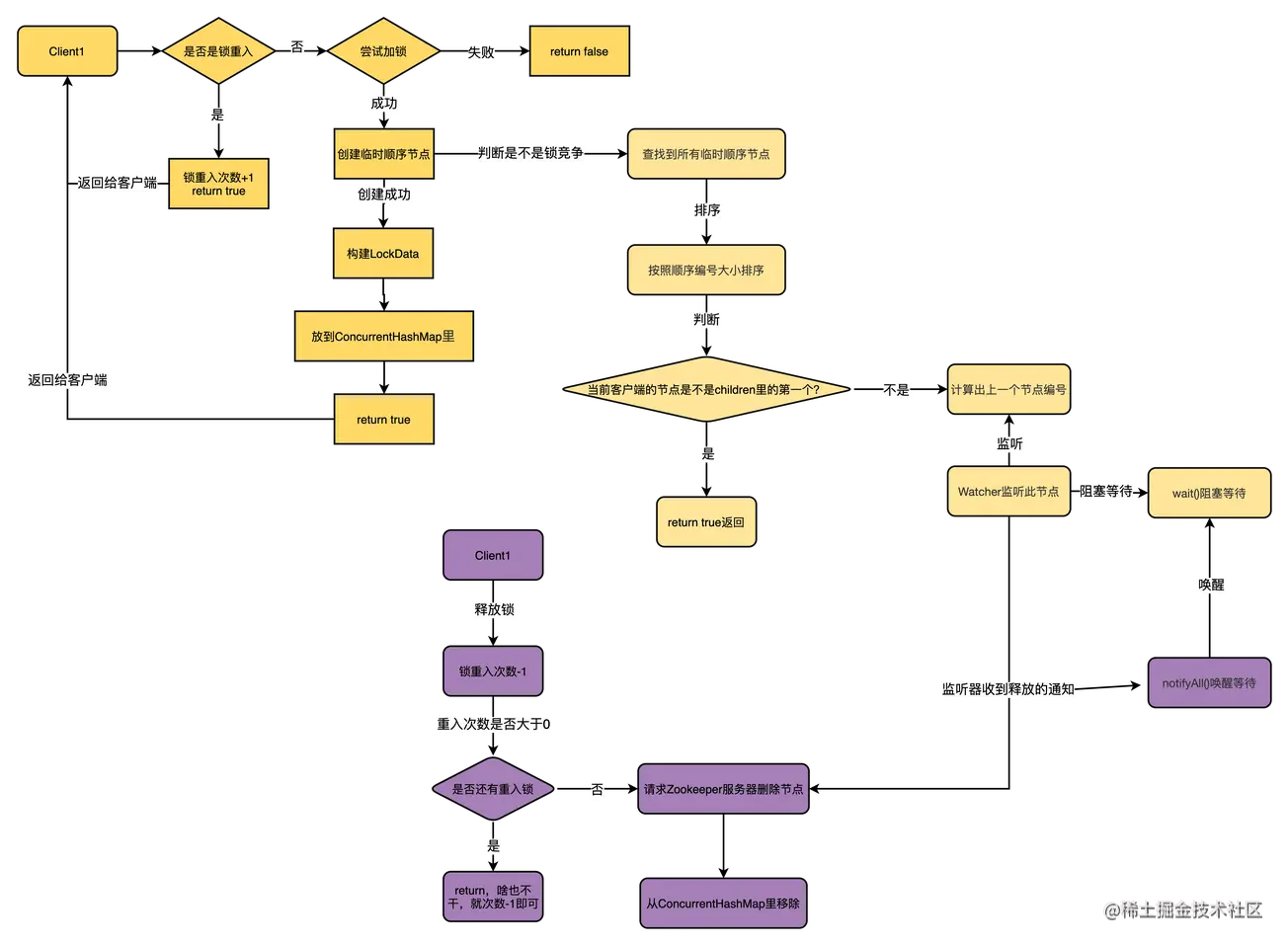

总体流程图如下:

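## 7. Summary

zookeeper is not a silver bullet for distributed consistency either. Sessions are kept alive by heartbeats, so under network jitter a client that fails to send a heartbeat before the timeout can be treated as dead even though it is still running (a false death), and its ephemeral lock node is removed. In production, choose the distributed lock scheme that fits your own business requirements.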

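## 8. References

- [The past and present of Redis and ZK distributed locks](https://juejin.cn/post/7038473714970656775)

- [The distributed consensus algorithm ZAB](https://zhuanlan.zhihu.com/p/443717026)

- [Container runtime docker and service orchestration](https://juejin.cn/post/7083742011576025118)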